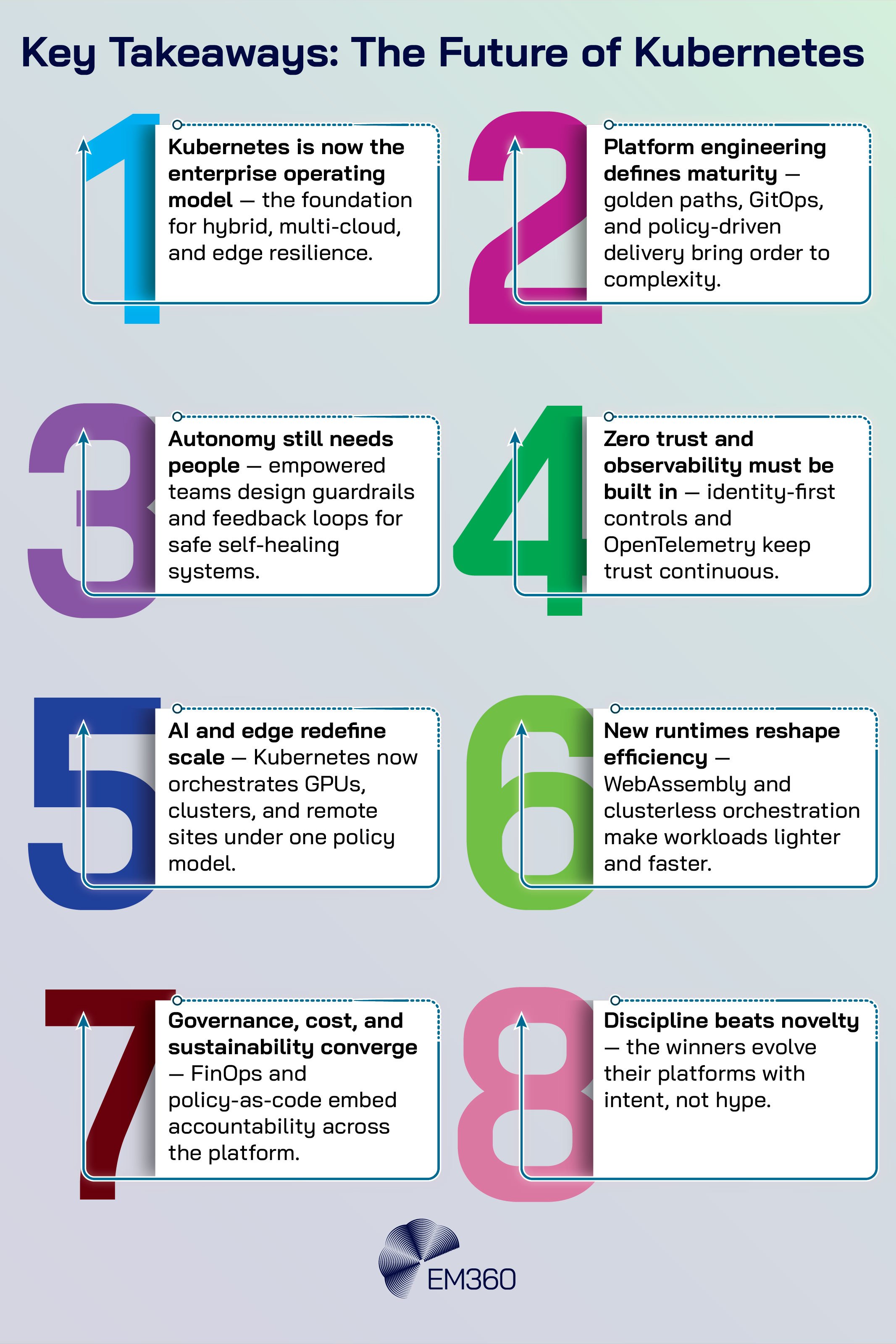

Kubernetes is no longer the shiny new tool in the stack. It has become the operational heartbeat of modern digital businesses, quietly running behind the scenes while teams ship software, scale services, and keep costs and risks in check. What comes next is not another wave of hype or a wholesale replacement.

The future is about discipline. It is the work of translating cloud native promises into dependable outcomes across AI, edge, and multi-cloud environments. For infrastructure leaders, that means building an operating model that is predictable, auditable, and ready for autonomy at scale.

From Container Orchestration to Enterprise Operating Model

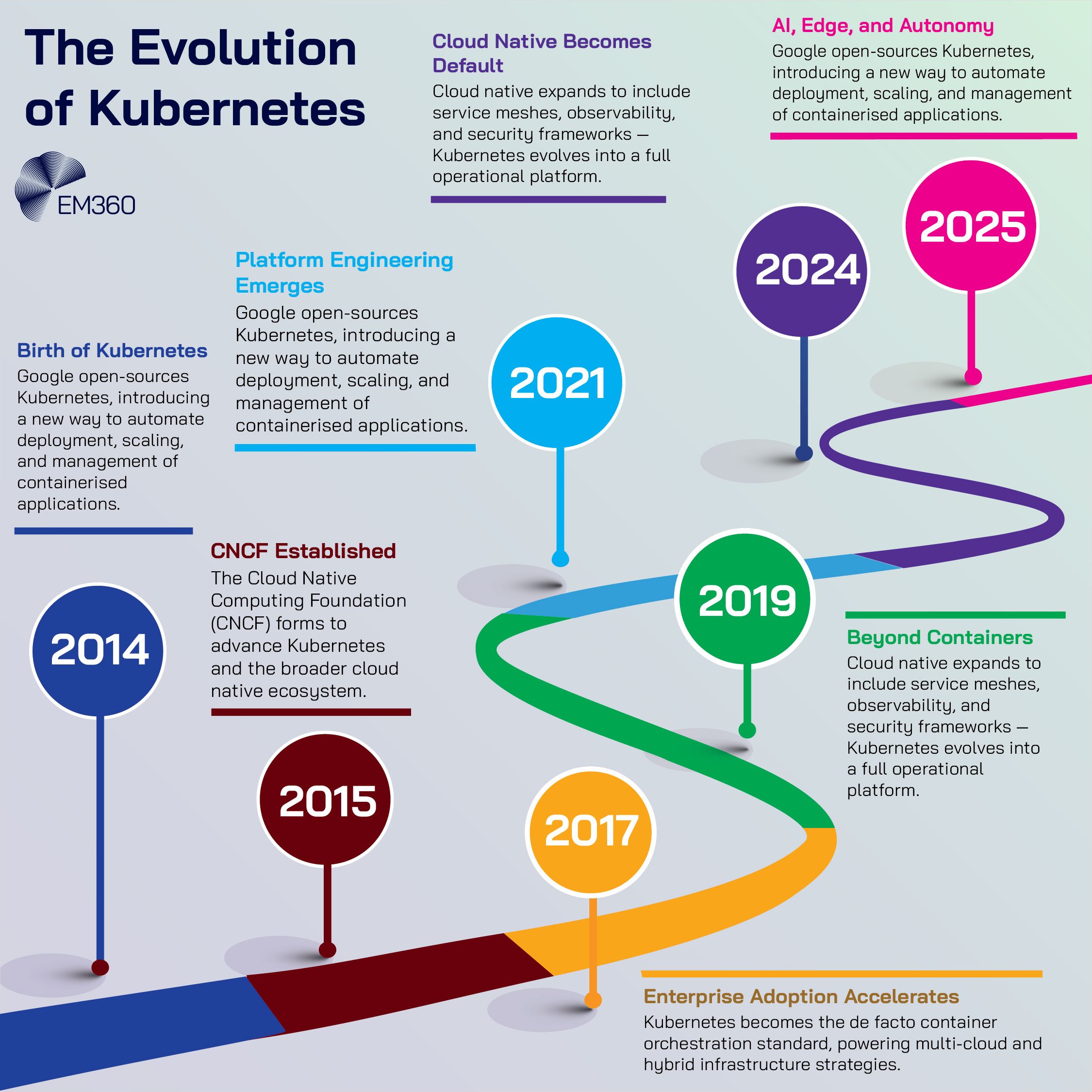

Kubernetes has become more than the scaffolding beneath cloud native applications. Its evolution reflects how infrastructure itself has changed — from isolated systems and manual intervention to dynamic environments that can reconfigure in real time.

As businesses lean harder on digital reliability, Kubernetes has quietly moved from a tool teams adopt to a foundation enterprises depend on.

Kubernetes as the backbone of modern infrastructure

Kubernetes started out as an answer to one problem: how to reliably schedule and run containers. It quickly solved that challenge and then kept going. Today it sits at the centre of hybrid and multi-cloud ecosystems, standardising how applications are packaged, deployed, scaled, and observed.

Instead of bespoke scripts that vary by team or platform, organisations converge on a shared orchestration layer that handles failure, resource allocation, service discovery, and policy.

That standardisation has reshaped the operating model. Rather than treating each environment as a special case, teams treat the platform as the constant and push variation to configuration and policy.

It is a quiet shift with strategic consequences. You can run the same application estate across on-premises data centres, public cloud, edge sites, and everything in between without rewriting your playbook for each location.

Adoption reflects this reality. The Cloud Native Computing Foundation’s most recent annual survey reports that the overwhelming majority of organisations now build and deploy software using cloud native techniques.

It is no longer a niche. When nearly everyone works this way, the competitive advantage moves upstream into the quality of the platform itself: how it is designed, governed, secured, and evolved.

The maturity curve: from deployment to discipline

Early Kubernetes initiatives focused on getting workloads live. Success was measured by the number of clusters created, how many services were containerised, and whether deployments became faster. Mature teams now set different targets.

They measure mean time to recovery, policy compliance, cost allocation accuracy, and the percentage of changes executed through repeatable pipelines. These are markers of discipline, and this is where platform engineering enters the picture: it gives application teams paved roads rather than blank canvases.

It defines clear contracts between developers and operations: the cluster provides capabilities, developers consume them through self-service, and changes are captured declaratively so they can be reviewed, audited, and rolled back. The outcome is not just speed. It is speed that is sustainable and secure.
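To make the maturity metrics above concrete, here is a minimal Python sketch that computes two of them: mean time to recovery and the share of changes executed through pipelines. The `Incident` and `Change` records are hypothetical; in practice the data would come from your incident tracker and CI/CD history.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Incident:
    detected: datetime
    resolved: datetime


@dataclass
class Change:
    change_id: str
    via_pipeline: bool  # True if applied through a reviewed, automated pipeline


def mean_time_to_recovery(incidents: List[Incident]) -> timedelta:
    """Average time from detection to resolution across incidents."""
    if not incidents:
        return timedelta(0)
    total = sum((i.resolved - i.detected for i in incidents), timedelta(0))
    return total / len(incidents)


def pipeline_change_ratio(changes: List[Change]) -> float:
    """Share of changes (0.0 to 1.0) that went through a repeatable pipeline."""
    if not changes:
        return 0.0
    return sum(c.via_pipeline for c in changes) / len(changes)


t0 = datetime(2024, 1, 1, 9, 0)
incidents = [
    Incident(t0, t0 + timedelta(minutes=30)),
    Incident(t0, t0 + timedelta(minutes=90)),
]
changes = [Change("c1", True), Change("c2", True), Change("c3", False), Change("c4", True)]
print(mean_time_to_recovery(incidents))  # 1:00:00
print(pipeline_change_ratio(changes))    # 0.75
```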

The Rise of Platform Engineering and the GitOps Standard

As Kubernetes environments scale, complexity tends to creep in. Teams start building their own workflows, scripts, and tooling layers to keep delivery moving — and before long, the platform itself becomes fragmented.

Platform engineering emerged as the antidote: a way to bring order, consistency, and predictability back into how cloud native systems are built and run.

Standardising Kubernetes with golden paths

A golden path is a reusable blueprint that makes the right way the easy way. Instead of telling teams what to do and hoping they remember, you give them a templated path that bakes in best practice.

For Kubernetes this often means a standard application scaffold, a base Helm chart or Kustomize overlay, built-in policies for network and identity, and a prescribed observability stack. When a developer needs a new service, they start from the golden path and fill in the specifics.

This approach reduces variance without throttling innovation. It avoids one-off exceptions that later turn into incidents. It also improves onboarding. New engineers do not need to learn every cluster’s quirks. They follow the path and get a compliant, observable service by default.

Industry research and community case studies show the same pattern. Organisations that adopt internal developer platforms with pre-approved templates see fewer deployment mistakes, faster lead times, and clearer ownership. Most important, they make changes more reversible. If something goes wrong, they know which template and which commit introduced it.
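A golden path is easiest to picture as a base template plus a small set of service-specific values. The Python sketch below merges the two into a Deployment-shaped dictionary. The defaults in `GOLDEN_PATH_BASE` and the `scaffold_service` helper are hypothetical; real platforms usually express the same idea with a Helm chart or Kustomize overlay. Note that this simple merge replaces lists wholesale rather than applying Kustomize-style strategic merges.

```python
import copy
from typing import Optional

# Hypothetical defaults a platform team bakes into every new service.
GOLDEN_PATH_BASE = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "spec": {
        "replicas": 2,
        "template": {
            "spec": {
                "securityContext": {"runAsNonRoot": True},
                "containers": [],
            }
        },
    },
}


def deep_merge(base: dict, override: dict) -> dict:
    """Recursively merge override into a copy of base; override wins on conflicts."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)  # scalars and lists replace outright
    return result


def scaffold_service(name: str, team: str, image: str,
                     overrides: Optional[dict] = None) -> dict:
    """Start from the golden path and fill in only the service-specific details."""
    labels = {"app": name, "team": team}
    specifics = {
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "resources": {"requests": {"cpu": "100m", "memory": "128Mi"}},
                    }]
                },
            },
        },
    }
    manifest = deep_merge(GOLDEN_PATH_BASE, specifics)
    return deep_merge(manifest, overrides or {})


svc = scaffold_service("checkout", "payments", "registry.example.com/checkout:1.4.2")
print(svc["spec"]["template"]["spec"]["securityContext"])  # {'runAsNonRoot': True}
```

The developer supplies a name, owner, and image; the security context and replica baseline come from the path by default, and any deliberate deviation is an explicit, reviewable override.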

GitOps and continuous delivery discipline

GitOps takes that discipline a step further. Instead of clicking through consoles or running imperative scripts, teams manage cluster state declaratively through version control. Tools such as Argo CD watch the repository and reconcile the live cluster to match what has been defined.

The repository becomes the source of truth, and the cluster becomes an implementation detail. This helps compliance as much as it helps developers. Every change has an author, a timestamp, an approval, and a diff. Incident reviews can reconstruct what happened without guesswork. Audit becomes part of the workflow rather than an extra task after the fact.

When you carry this through to infrastructure definitions, network policies, and identity rules, you get a platform that is observable and reviewable end to end. The practical payoff is predictability. When everything is declared, environments converge towards the intended state, even after failures. When everything is versioned, you can roll back with confidence. When everything is automated, you reduce the human error that still drives a significant share of outages.
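The reconcile loop at the heart of GitOps can be reduced to a diff between declared and live state. This Python sketch is a simplified model, not how Argo CD is implemented: resources are keyed by a hypothetical `kind/namespace/name` string, and deleting resources that are no longer declared mirrors the optional pruning behaviour that GitOps tools offer. Rolling back is then just reconciling against an earlier commit's desired state.

```python
from typing import Dict, List, Tuple

# Simplified state: "kind/namespace/name" -> spec dictionary.
State = Dict[str, dict]
Action = Tuple[str, str]


def plan_reconcile(desired: State, live: State) -> List[Action]:
    """List the actions needed to converge live state onto desired state."""
    actions: List[Action] = []
    for key in sorted(desired):
        if key not in live:
            actions.append(("create", key))
        elif live[key] != desired[key]:
            actions.append(("update", key))  # drift or a new commit
    for key in sorted(live):
        if key not in desired:
            actions.append(("delete", key))  # prune resources no longer declared
    return actions


def apply_actions(actions: List[Action], desired: State, live: State) -> State:
    """Return the new live state after applying the planned actions."""
    new_live = dict(live)
    for verb, key in actions:
        if verb == "delete":
            del new_live[key]
        else:
            new_live[key] = desired[key]
    return new_live


# Each commit in the repository declares a full desired state.
history = [
    {"Deployment/shop/web": {"replicas": 2, "image": "web:1.0"}},
    {"Deployment/shop/web": {"replicas": 3, "image": "web:1.1"}},
]
live = {"Deployment/shop/web": {"replicas": 2, "image": "web:1.0"},
        "ConfigMap/shop/legacy": {}}

live = apply_actions(plan_reconcile(history[-1], live), history[-1], live)
print(plan_reconcile(history[-1], live))  # [] -> converged

# Rollback: reconcile against the previous commit instead of the latest one.
live = apply_actions(plan_reconcile(history[-2], live), history[-2], live)
```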

The human factor in autonomous operations

Autonomy does not remove people. It changes what people do. In mature teams, site reliability engineers and platform owners focus on defining the rules and feedback loops that keep systems healthy. They design guardrails, not one-off fixes. They monitor for drift. They invest in runbooks that the platform can execute automatically.

When an incident occurs, they expect the platform to perform first-line actions and escalate with context. For infrastructure leaders, this is an organisational design question. Platform teams must be empowered to say no to unsafe patterns and to maintain the golden paths as living products.

Application teams need clear contracts on what the platform guarantees and what they own. When the incentives are aligned, autonomy increases reliability rather than hiding risk.
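The expectation that the platform performs first-line actions and escalates with context can be sketched as a small dispatcher. Everything here is hypothetical: the alert names, the runbook functions (stubbed to succeed or fail), and the shape of the escalation payload. The point is that humans receive what was already tried and what changed recently, rather than a bare alert.

```python
from typing import Callable, Dict, List

Runbook = Callable[[dict], bool]  # returns True if the action fixed the problem


def handle_alert(alert: dict, runbooks: Dict[str, Runbook],
                 recent_changes: List[str]) -> dict:
    """Run the automated first-line action, or escalate with context if it fails."""
    attempted: List[str] = []
    runbook = runbooks.get(alert["name"])
    if runbook is not None:
        attempted.append(runbook.__name__)
        if runbook(alert):
            return {"status": "auto-resolved", "actions": attempted}
    return {
        "status": "escalated",
        "actions": attempted,
        "context": {"alert": alert, "recent_changes": recent_changes[-3:]},
    }


# Hypothetical runbooks, stubbed for illustration.
def restart_crashlooping_pod(alert: dict) -> bool:
    return True


def expand_volume(alert: dict) -> bool:
    return False  # e.g. the storage class does not allow expansion


runbooks = {"PodCrashLooping": restart_crashlooping_pod, "DiskNearlyFull": expand_volume}
changes = ["commit 1a2b", "commit 3c4d", "commit 5e6f", "commit 7a8b"]

print(handle_alert({"name": "PodCrashLooping", "service": "checkout"}, runbooks, changes)["status"])
# auto-resolved
print(handle_alert({"name": "DiskNearlyFull", "service": "search"}, runbooks, changes)["context"]["recent_changes"])
# ['commit 3c4d', 'commit 5e6f', 'commit 7a8b']
```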

Cloud Native Security and Observability Reach New Maturity

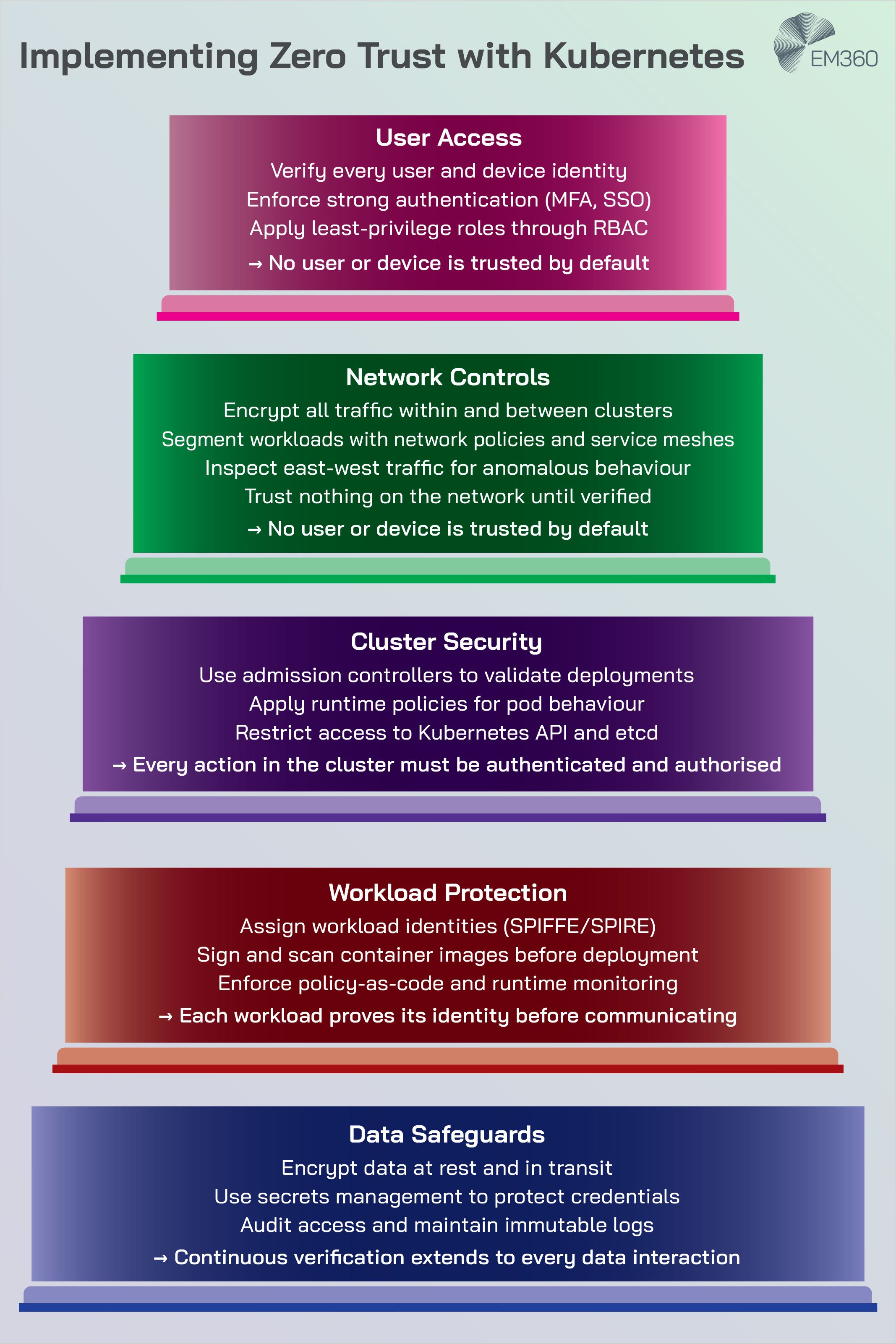

With Kubernetes now central to enterprise infrastructure, its security and visibility layers have become just as critical as its orchestration logic. The ecosystem has matured from reactive monitoring and patchwork defences to proactive, policy-driven protection — where identity, context, and observability converge to keep environments trustworthy at scale.

Identity-first security for hybrid workloads

As environments scale, machine identities start to outnumber human ones. Service accounts, machine identities, automation tokens, and ephemeral credentials multiply with each new microservice and each new cluster. Recent industry reports highlight this shift and encourage tighter controls on credential lifespan, scope, and rotation.

An identity-first approach starts with discovery. You cannot secure what you cannot see, so teams map identities across clusters and clouds, classify them by risk, and apply shortest-lived, least-privileged patterns wherever possible. Policies enforce token lifetimes and mandate workload identity where the platform can assert and validate identity without static secrets.
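A policy that enforces token lifetimes and least privilege can start as a simple check over an identity inventory. In this Python sketch the `Credential` record, the one-hour `MAX_TOKEN_TTL`, and the rule wording are hypothetical choices. In Kubernetes, projected service account tokens with a bounded `expirationSeconds` are one way to obtain short-lived workload credentials in the first place.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import List, Optional

MAX_TOKEN_TTL = timedelta(hours=1)  # hypothetical policy limit


@dataclass
class Credential:
    owner: str                # e.g. a service account name
    kind: str                 # "workload-identity" or "static-secret"
    ttl: Optional[timedelta]  # None means the credential never expires
    scopes: List[str]


def violations(cred: Credential) -> List[str]:
    """Return the policy rules this credential breaks (empty list means compliant)."""
    problems = []
    if cred.kind == "static-secret":
        problems.append("static secret: prefer workload identity")
    if cred.ttl is None:
        problems.append("credential never expires")
    elif cred.ttl > MAX_TOKEN_TTL:
        problems.append(f"lifetime {cred.ttl} exceeds {MAX_TOKEN_TTL}")
    if "*" in cred.scopes:
        problems.append("wildcard scope violates least privilege")
    return problems


def rank_by_risk(creds: List[Credential]) -> List[Credential]:
    """Most problematic credentials first, so remediation starts where risk is highest."""
    return sorted(creds, key=lambda c: len(violations(c)), reverse=True)


inventory = [
    Credential("orders-api", "workload-identity", timedelta(minutes=15), ["orders:read"]),
    Credential("legacy-batch", "static-secret", None, ["*"]),
]
for cred in rank_by_risk(inventory):
    print(cred.owner, violations(cred))
```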

When credentials are unavoidable, checks ensure they are never written to logs or images and that any compromise can be contained.

Supply chain concerns sit alongside identity. Images must be built from trusted sources, signed, and scanned. Dependencies need to be tracked. Build pipelines must be locked down so attackers cannot slip malicious code into production. The goal is to reduce trust in any single step and to make tampering detectable.
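One concrete supply chain control is to admit only images referenced by an immutable digest whose signature has already been verified. The Python sketch below assumes a hypothetical set `verified_digests` produced by an earlier signing and verification step (for example, with a tool such as cosign); it only checks image references and performs no cryptography itself.

```python
import re
from typing import List, Set

# Matches "<repository>@sha256:<64 hex chars>"; a tag such as ":1.2" alone is mutable.
DIGEST_REF = re.compile(r"^(?P<repo>[^@\s]+)@sha256:(?P<digest>[0-9a-f]{64})$")


def check_image(ref: str, verified_digests: Set[str]) -> List[str]:
    """Return reasons to reject an image reference (empty list means admit)."""
    match = DIGEST_REF.match(ref)
    if match is None:
        return ["not pinned by digest: tags can be moved to different content"]
    if match.group("digest") not in verified_digests:
        return ["digest has no verified signature"]
    return []


good = "a" * 64
verified = {good}
print(check_image(f"registry.example.com/web@sha256:{good}", verified))  # []
print(check_image("registry.example.com/web:1.2", verified))
print(check_image("registry.example.com/web@sha256:" + "b" * 64, verified))
```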

eBPF and Cilium redefine runtime protection

Traditional agents often struggle to keep up with container density and kernel behaviour. eBPF changes that. It lets teams run lightweight programs safely in the kernel, so you can observe network flows, system calls, and application behaviour without heavy hooks or constant polling. The network becomes a rich source of truth rather than a black box.

Projects such as Cilium use eBPF to unify networking, policy, and observability. Network policies can be expressed at the service level rather than by IP. Encryption can be applied transparently between pods. Flow logs can be correlated with application context. The result is better performance with deeper visibility, especially in clusters that stretch across nodes, regions, or clouds.
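Expressing policy at the service level means matching on workload identity (labels) instead of IP addresses, which change as pods come and go. This Python sketch models that evaluation conceptually; it is not Cilium's API. It follows the Kubernetes NetworkPolicy convention that a workload selected by no policy is unrestricted, while a selected workload accepts only flows that some rule allows.

```python
from dataclasses import dataclass
from typing import Dict, List

Labels = Dict[str, str]


@dataclass
class Rule:
    to_selector: Labels    # which workloads this rule protects
    from_selector: Labels  # which workloads may connect to them
    port: int


def matches(selector: Labels, labels: Labels) -> bool:
    """A selector matches when every key/value pair appears in the labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def is_allowed(src: Labels, dst: Labels, port: int, rules: List[Rule]) -> bool:
    """Decide a flow purely from workload labels; no IP addresses involved."""
    relevant = [r for r in rules if matches(r.to_selector, dst)]
    if not relevant:
        return True  # destination not isolated by any policy
    return any(matches(r.from_selector, src) and r.port == port for r in relevant)


rules = [Rule({"app": "orders"}, {"app": "frontend"}, 8080)]
print(is_allowed({"app": "frontend"}, {"app": "orders"}, 8080, rules))  # True
print(is_allowed({"app": "batch"}, {"app": "orders"}, 8080, rules))     # False
```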

Runtime protection benefits as well. Because eBPF programs can capture granular events with low overhead, teams can detect anomalous activity quickly and respond without imposing heavy instrumentation. Combined with identity-aware policies, this gives defenders the context needed to act with precision.

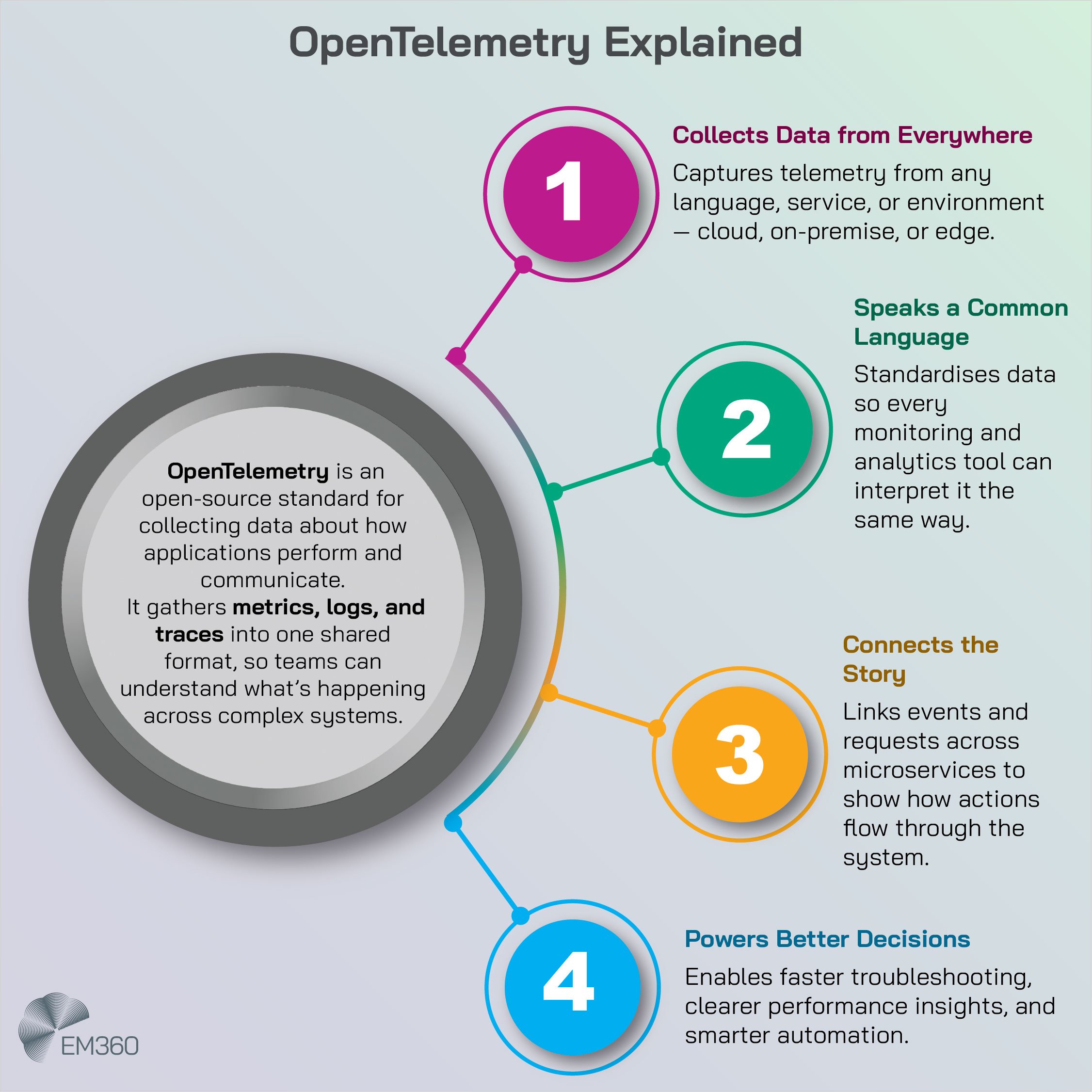

Observability standardisation with OpenTelemetry

You cannot run a modern platform blind. What has changed is how teams collect and correlate signals. OpenTelemetry has emerged as the standard for capturing metrics, traces, and logs consistently across languages and services.

Rather than assembling a patchwork of agents and formats, organisations instrument once and route data to the backends that suit their needs. This standardisation pays off in two ways.

First, it accelerates debugging. Traces link service calls across boundaries and show where latency or errors begin. Metrics reveal patterns and regressions. Logs provide detail at the moment you need it.

Second, it reduces tool sprawl. With a common telemetry layer, you can change or combine analysis tools without re-instrumenting your estate. In practice, teams pair OpenTelemetry with service meshes, eBPF flow data, and GitOps change logs to create a complete picture of the system.

When an incident occurs, the platform can surface not just the symptoms but the change that most likely caused them. That correlation is how teams cut mean time to recovery from hours towards minutes.
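Surfacing the change behind an incident can begin with a simple heuristic: find the most recent change to the affected service shortly before symptoms started. This Python sketch assumes hypothetical `ChangeEvent` records taken from GitOps history and a two-hour lookback window. Real systems would weigh trace and metric evidence as well, so treat the result as a lead, not a verdict.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class ChangeEvent:
    commit: str
    service: str
    applied_at: datetime


def likely_cause(service: str, onset: datetime, changes: List[ChangeEvent],
                 lookback: timedelta = timedelta(hours=2)) -> Optional[ChangeEvent]:
    """Most recent change to `service` within `lookback` before the incident began."""
    candidates = [
        c for c in changes
        if c.service == service and onset - lookback <= c.applied_at <= onset
    ]
    return max(candidates, key=lambda c: c.applied_at, default=None)


onset = datetime(2024, 5, 2, 14, 0)
changes = [
    ChangeEvent("9f1c", "checkout", onset - timedelta(hours=5)),
    ChangeEvent("a7d2", "checkout", onset - timedelta(minutes=40)),
    ChangeEvent("c3e8", "search", onset - timedelta(minutes=10)),
]
suspect = likely_cause("checkout", onset, changes)
print(suspect.commit if suspect else "no recent change")  # a7d2
```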

AI-Native and Edge Kubernetes Redefine Scale

The boundaries of Kubernetes are expanding fast. What once lived inside data centres now stretches across edge locations, AI clusters, and specialised compute environments built for speed and intelligence. This shift is redefining what scale means — and how infrastructure must evolve to support it.

AI-native workloads reshape cluster design

AI is not a single workload pattern. Training, fine-tuning, retrieval, and inference each place different demands on compute, memory, networking, and storage. Kubernetes adapts by treating accelerators as first-class resources and by letting teams segment clusters according to need.

On the ground, that means GPU-aware scheduling, orchestration for mixed CPU and GPU nodes, and clear quotas so teams cannot starve each other of resources. It means batch workloads for training and data processing living comfortably alongside low-latency inference services. It also means thinking about data gravity.

Moving large datasets across regions is expensive and slow, so architectures shift toward bringing compute to data rather than moving data to compute. Autoscaling strategies evolve as well. Inference services benefit from predictive scaling that anticipates traffic, while training jobs can flex with spot capacity when workloads allow.
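Kubernetes' Horizontal Pod Autoscaler is reactive: it sizes a workload from the metric it currently observes, using desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). Predictive scaling feeds a forecast into a similar calculation instead. The Python sketch below uses a deliberately naive linear extrapolation as the forecast; the function names, bounds, and requests-per-second target are hypothetical.

```python
import math
from typing import List


def forecast_next(history: List[float]) -> float:
    """Naive forecast: last observation plus the average step across the window."""
    if not history:
        return 0.0
    if len(history) == 1:
        return history[0]
    avg_step = (history[-1] - history[0]) / (len(history) - 1)
    return max(0.0, history[-1] + avg_step)


def predictive_replicas(history: List[float], target_per_replica: float,
                        min_replicas: int = 1, max_replicas: int = 50) -> int:
    """Replicas needed for the forecast load, clamped to the allowed range."""
    wanted = math.ceil(forecast_next(history) / target_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


# Requests per second over the last four intervals; each replica handles ~100 rps.
print(predictive_replicas([100, 200, 300, 400], target_per_replica=100))  # 5
```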

The winning pattern is not one configuration, but a playbook that chooses the right pattern at the right time without complex manual work.
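GPU-aware scheduling with team quotas can be illustrated in two steps: an admission check against a per-team GPU quota, then best-fit placement onto a node with enough free accelerators. In Kubernetes the accelerator usually appears as an extended resource such as `nvidia.com/gpu`, and quotas are enforced with ResourceQuota; the Python below is only a simplified model with hypothetical team and node names.

```python
from typing import Dict, Optional


def within_quota(team: str, requested: int, quotas: Dict[str, int],
                 in_use: Dict[str, int]) -> bool:
    """Admit only if the team's GPUs in use plus this request stay within quota."""
    if requested <= 0:
        return True  # CPU-only work is not constrained by the GPU quota
    return in_use.get(team, 0) + requested <= quotas.get(team, 0)


def pick_gpu_node(requested: int, free_gpus: Dict[str, int]) -> Optional[str]:
    """Best fit: the node with the fewest free GPUs that still fits the request.

    Packing tightly keeps larger blocks of GPUs free for bigger training jobs.
    """
    fitting = [(free, node) for node, free in free_gpus.items() if free >= requested]
    return min(fitting)[1] if fitting else None


quotas = {"research": 8, "inference": 4}
in_use = {"research": 6}
free = {"gpu-node-a": 8, "gpu-node-b": 2, "gpu-node-c": 4}

if within_quota("research", 2, quotas, in_use):
    print(pick_gpu_node(2, free))  # gpu-node-b
```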

Edge Kubernetes moves from pilot to production

The edge is no longer a proof-of-concept playground. With the CNCF graduating KubeEdge, the ecosystem has a recognised path for running Kubernetes in places with constrained resources and intermittent connectivity. Factories, transport hubs, shops, and renewable energy sites are all candidates when local processing reduces cost or latency.

Edge orchestration changes assumptions. Nodes may be small and numerous. Power and network conditions vary. Updates must be resilient to interruptions. Observability needs to work even when central systems are temporarily unreachable.

Teams respond by designing for autonomy. They treat edge clusters as independent sites that can operate for a period without central control and then resynchronise when connectivity returns.
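Designing edge clusters to keep working offline and resynchronise later often comes down to a store-and-forward pattern. This Python sketch buffers telemetry locally while the central endpoint is unreachable and drains it in order when connectivity returns. The `send` callable and the buffer size are hypothetical; because the buffer is bounded, the oldest events are dropped first if an outage outlasts local capacity.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer events locally and forward them when the uplink is available."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 1000):
        self._send = send  # returns True if the central endpoint accepted the event
        self._buffer: Deque[dict] = deque(maxlen=max_items)  # drops oldest when full

    def record(self, event: dict) -> None:
        self._buffer.append(event)
        self.flush()

    def flush(self) -> int:
        """Forward buffered events in order; stop at the first failure."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # still offline: keep the event and retry later
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)


link = {"up": False}
delivered = []


def send(event: dict) -> bool:
    if link["up"]:
        delivered.append(event)
        return True
    return False


edge = StoreAndForward(send, max_items=2)
for seq in range(3):
    edge.record({"seq": seq})  # offline: only the newest two are kept
link["up"] = True
edge.flush()
print([e["seq"] for e in delivered])  # [1, 2]
```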

Security at the edge means starting from zero trust. Identities must be verifiable, secrets must be short-lived, and local access must be locked down. Physical risk increases as well. Devices might be tampered with or stolen, so teams plan for rapid revocation and rebuild.

Balancing energy, latency, and data gravity

Performance is not the only constraint. Energy usage and sustainability targets now influence architectural choices. Deploying inference closer to the user can cut network traffic and reduce latency, but it may increase the number of devices under management.

Centralising training in energy-efficient regions can lower costs and emissions, but may add complexity to data pipelines. The right balance is context-specific and should be revisited regularly.

Governance helps here. When teams model the energy and cost implications of architectural choices alongside performance and risk, trade-offs become visible. The platform can then enforce limits on resource use, choose greener regions by default, and schedule batch workloads to align with energy pricing or renewable availability.
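Scheduling batch work around energy pricing or renewable availability reduces to picking the cheapest contiguous window before a deadline. In this Python sketch `hourly_cost` is a hypothetical forecast that could hold either price or grid carbon intensity; the sliding-window search is exhaustive, which is adequate for a day's worth of hourly slots.

```python
from typing import List


def best_start_hour(hourly_cost: List[float], duration: int, deadline: int) -> int:
    """Start hour minimising total cost for a job that must finish by `deadline`.

    hourly_cost[h] is the forecast cost (price or carbon intensity) of hour h.
    """
    if duration <= 0 or duration > deadline or deadline > len(hourly_cost):
        raise ValueError("job does not fit in the forecast window")
    best_start, best_total = 0, float("inf")
    for start in range(deadline - duration + 1):
        total = sum(hourly_cost[start:start + duration])
        if total < best_total:  # strict comparison: earliest start wins ties
            best_start, best_total = start, total
    return best_start


# Hypothetical forecast with cheap, low-carbon hours in the middle of the window.
forecast = [5.0, 5.0, 4.0, 1.0, 1.0, 2.0, 5.0, 5.0]
print(best_start_hour(forecast, duration=2, deadline=8))  # 3
```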

Emerging Technologies Reshaping the Cloud Native Future

Kubernetes continues to evolve through the innovation of its surrounding ecosystem. New tools, runtimes, and frameworks are emerging that challenge long-held assumptions about how workloads are built, deployed, and scaled. Together, they point to a future where efficiency, portability, and automation define the next stage of cloud native maturity.

WebAssembly joins the Kubernetes ecosystem

WebAssembly (Wasm) promises fast startup times, strong isolation, and portable binaries. It is not a container replacement, but it is a strong complement where density and latency matter. Recent community moves, including the SpinKube project entering the CNCF ecosystem, give teams a concrete path to run Wasm workloads alongside containers on Kubernetes.

Where does it fit? Edge environments with limited resources, event-driven services that must start instantly, and per-request compute patterns that benefit from very small footprints. The operational model looks familiar. You still build, deploy, observe, and control through the platform. The difference is the runtime, which is lighter and often safer by default.

Clusterless orchestration and automation

Kubernetes works best when the cluster becomes invisible. Teams define desired state, and the platform finds capacity, schedules workloads, and maintains health without manual placement decisions. The trend toward “clusterless” experiences pushes this further by abstracting away cluster boundaries and letting policies drive placement.

Think of it as a spectrum. On one end you have highly managed, opinionated platforms that hide most cluster details. On the other, you have bare Kubernetes with full control. Many enterprises land in the middle.

They keep cluster access for platform teams but provide a clusterless experience to developers and data scientists. This hybrid approach preserves governance while optimising for velocity.
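Policy-driven placement is what lets developers have a "clusterless" experience: they state requirements and the platform chooses a cluster. This Python sketch filters hypothetical clusters by allowed region (for example, a data residency rule), required labels, and free CPU, then prefers the cluster with the most headroom. Real multi-cluster schedulers weigh far more signals; the names and fields here are illustrative.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Cluster:
    name: str
    region: str
    free_cpu: float
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class Requirements:
    cpu: float
    allowed_regions: Set[str]
    required_labels: Dict[str, str] = field(default_factory=dict)


def place(req: Requirements, clusters: List[Cluster]) -> Optional[str]:
    """Pick a cluster that satisfies every policy; prefer the most free CPU."""
    feasible = [
        c for c in clusters
        if c.region in req.allowed_regions
        and c.free_cpu >= req.cpu
        and all(c.labels.get(k) == v for k, v in req.required_labels.items())
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda c: (c.free_cpu, c.name)).name


clusters = [
    Cluster("eu-prod-1", "eu-west", 10, {"tier": "prod"}),
    Cluster("eu-dev-1", "eu-west", 40, {"tier": "dev"}),
    Cluster("us-prod-1", "us-east", 80, {"tier": "prod"}),
]
req = Requirements(cpu=4, allowed_regions={"eu-west"}, required_labels={"tier": "prod"})
print(place(req, clusters))  # eu-prod-1
```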

The convergence of DevOps, FinOps, and SecOps

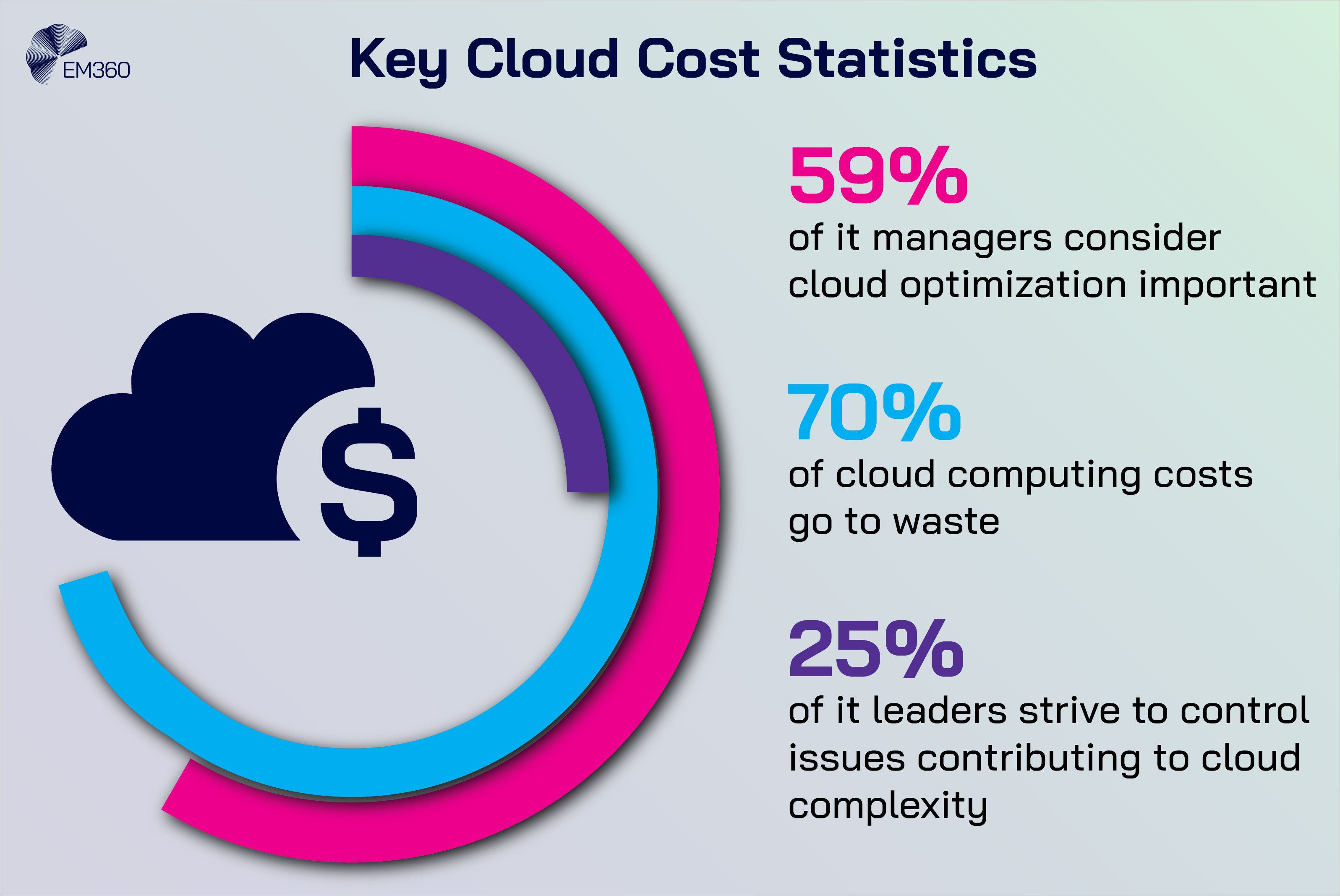

The days of separate playbooks are fading. Enterprises now align DevOps, FinOps, and SecOps under platform engineering so that performance, cost, and risk are managed together. The result is governance by design. Budget constraints surface in the same pipelines that enforce security policy. Cost allocation and showback are visible in the same dashboards as SLOs. Security controls live next to deployment manifests.

This convergence is not a nice-to-have. It is a response to real pressure. Independent research continues to place cloud cost control among the top challenges for enterprises.

When costs are managed inside the platform, rather than through side spreadsheets or late audits, teams avoid surprise bills and reduce the temptation to bypass controls.
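Surfacing budget constraints in the same pipeline that enforces security policy can be as simple as a gate that estimates the monthly cost of a change and compares it with the team's budget. The unit prices, the 730-hour month, and the 80% warning threshold in this Python sketch are hypothetical placeholders; real figures would come from your provider's billing data or a cost-allocation tool.

```python
# Hypothetical unit prices; substitute figures from your own billing data.
PRICE_PER_CPU_HOUR = 0.04
PRICE_PER_GIB_HOUR = 0.005
HOURS_PER_MONTH = 730


def monthly_cost(replicas: int, cpu: float, memory_gib: float) -> float:
    """Approximate monthly cost of a workload from its resource requests."""
    hourly = cpu * PRICE_PER_CPU_HOUR + memory_gib * PRICE_PER_GIB_HOUR
    return replicas * hourly * HOURS_PER_MONTH


def budget_gate(current_monthly: float, delta_monthly: float, budget: float,
                warn_ratio: float = 0.8) -> str:
    """Return 'pass', 'warn', or 'block' for a proposed change."""
    projected = current_monthly + delta_monthly
    if projected > budget:
        return "block"
    if projected > warn_ratio * budget:
        return "warn"
    return "pass"


# A change that adds two replicas with 1 CPU and 2 GiB each.
delta = monthly_cost(replicas=2, cpu=1, memory_gib=2)
print(round(delta, 2))                       # 73.0
print(budget_gate(900, delta, budget=1000))  # warn
```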

Governance, Cost, and Sustainability in the Next Decade

As Kubernetes matures, the focus is shifting from expansion to accountability. Enterprises are no longer asking how to scale faster but how to scale responsibly — balancing performance, spend, and environmental impact. Governance, cost management, and sustainability are becoming inseparable pillars of the same operational strategy.

Building cost-aware infrastructure

A cost-aware platform shows teams the economic impact of their design decisions. It tags resources automatically, maps spend back to teams and services, and enforces sensible quotas. It right-sizes workloads and scales them proactively. It identifies unused capacity and removes it. Importantly, it does these things without turning developers into accountants.

To make this work, the cost model must be trusted. That means accurate tagging from day one, consistent labels across clusters and clouds, and a shared taxonomy for applications and environments.

With those foundations, teams can compare options transparently. Do we use GPUs or CPUs for this workload? Do we keep this dataset in one region or replicate across two? The platform should bring the numbers to the table so decisions are taken with full context.

Industry surveys have repeatedly shown that most organisations still struggle with cloud cost control. The opportunity is to embed FinOps into daily operations rather than treating it as a quarterly exercise. When automation applies the rules and pipelines carry the checks, financial governance becomes an outcome of good engineering practice.
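Mapping spend back to teams depends on consistent labels, and the share of spend that cannot be attributed is a useful measure of how trustworthy the cost model is. This Python sketch aggregates hypothetical cost line items by a `team` label and reports allocation coverage; the input format is an assumption standing in for an export from a billing or cost-monitoring tool.

```python
from collections import defaultdict
from typing import Dict, List


def allocate(line_items: List[dict], key: str = "team") -> Dict[str, float]:
    """Sum cost per owner label; anything unlabelled lands in 'unallocated'."""
    totals: Dict[str, float] = defaultdict(float)
    for item in line_items:
        owner = item.get("labels", {}).get(key, "unallocated")
        totals[owner] += item["cost"]
    return dict(totals)


def allocation_coverage(totals: Dict[str, float]) -> float:
    """Fraction of total spend attributed to a named owner."""
    total = sum(totals.values())
    if total == 0:
        return 1.0
    return 1 - totals.get("unallocated", 0.0) / total


items = [
    {"labels": {"team": "payments", "env": "prod"}, "cost": 120.0},
    {"labels": {"team": "search", "env": "prod"}, "cost": 80.0},
    {"labels": {"env": "dev"}, "cost": 50.0},  # missing team label
]
totals = allocate(items)
print(totals)  # {'payments': 120.0, 'search': 80.0, 'unallocated': 50.0}
print(allocation_coverage(totals))
```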

Sustainability as a performance metric

Sustainability is now a measurable requirement, not a generic aspiration. Infrastructure leaders treat energy usage and carbon impact as constraints alongside latency and reliability. They choose regions with lower carbon intensity for training jobs. They adopt efficient runtimes where possible. They schedule batch work to align with renewable availability or lower-cost time windows.

Kubernetes helps by making scheduling and placement policy-driven. Autoscalers can be tuned to avoid waste. Resource requests and limits can be set with discipline. Idle services can be hibernated.

When measured and reported, these practices translate into provable improvements that matter to customers, regulators, and boards.
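Setting resource requests with discipline usually means deriving them from observed usage rather than guesses. This Python sketch recommends a CPU request from a high percentile of usage samples plus headroom. The nearest-rank percentile, the p95 default, and the 1.2 headroom factor are hypothetical choices a team would tune; the Vertical Pod Autoscaler applies a more elaborate version of the same idea.

```python
import math
from typing import List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile, for pct in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def recommend_cpu_request(usage_millicores: List[float], pct: float = 95,
                          headroom: float = 1.2) -> int:
    """CPU request in millicores: high-percentile usage plus a safety margin."""
    return math.ceil(percentile(usage_millicores, pct) * headroom)


# Mostly ~100m with one brief spike: the request tracks typical load, not the outlier.
usage = [100.0] * 19 + [400.0]
print(recommend_cpu_request(usage, headroom=1.5))  # 150
```

Short spikes above the request are then a matter for limits and autoscaling rather than permanently reserved capacity.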

Governance-as-code for resilient operations

The most dependable platforms express policy as code. That includes security baselines, network rules, data handling requirements, and cost controls. Policies live in version control, are reviewed like any other change, and are enforced through admission controllers and pipeline gates.

This is how you achieve consistency across clusters and clouds without slowing teams down. Resilience comes from this repeatability. You can recreate an environment with the same controls anywhere. You can test policy changes safely. You can show auditors the exact rule set that was in place on a given day and the approvals associated with it.

That level of transparency de-risks growth. It lets you run more clusters in more places with fewer surprises.
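Policy as code means rules are ordinary, reviewable artefacts that an admission step evaluates against every manifest. Engines such as OPA Gatekeeper or Kyverno do this inside the cluster with their own policy languages; the Python sketch below only models the idea, with three hypothetical rules (required ownership labels, resource limits, no privileged containers) applied to a Pod-shaped dictionary.

```python
from typing import Callable, List, Tuple

Policy = Callable[[dict], List[str]]  # returns a list of violation messages


def require_labels(*keys: str) -> Policy:
    def check(manifest: dict) -> List[str]:
        labels = manifest.get("metadata", {}).get("labels", {})
        return [f"missing label '{k}'" for k in keys if k not in labels]
    return check


def require_limits(manifest: dict) -> List[str]:
    return [
        f"container '{c.get('name')}' has no resource limits"
        for c in manifest.get("spec", {}).get("containers", [])
        if "limits" not in c.get("resources", {})
    ]


def forbid_privileged(manifest: dict) -> List[str]:
    return [
        f"container '{c.get('name')}' runs privileged"
        for c in manifest.get("spec", {}).get("containers", [])
        if c.get("securityContext", {}).get("privileged", False)
    ]


POLICIES: List[Policy] = [require_labels("team", "cost-centre"), require_limits, forbid_privileged]


def admit(manifest: dict, policies: List[Policy] = POLICIES) -> Tuple[bool, List[str]]:
    """Evaluate every policy; admit only when there are no violations."""
    problems = [msg for policy in policies for msg in policy(manifest)]
    return (not problems, problems)


pod = {
    "metadata": {"name": "web", "labels": {"team": "payments"}},
    "spec": {"containers": [{
        "name": "web",
        "image": "web:1.1",
        "securityContext": {"privileged": True},
    }]},
}
allowed, reasons = admit(pod)
print(allowed)  # False
for reason in reasons:
    print(reason)
```

Because the rules are plain code in version control, a policy change goes through the same review, history, and rollback as any other change.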

Final Thoughts: Kubernetes Maturity Is the Measure of Cloud-Native Success

Kubernetes has grown into the orchestration layer that connects AI ambitions, edge autonomy, and sustainable operations. The shift is not about chasing the next tool. It is about choosing a disciplined operating model where golden paths reduce risk, GitOps captures truth, OpenTelemetry reveals what is happening, and identity-first security keeps that truth intact.

The organisations that win will be the ones that pair automation with accountability and treat the platform as a product that improves every quarter. The future of Kubernetes is not about building faster. It is about building smarter systems that learn from their own telemetry, enforce their own guardrails, and scale with care.

That is how cloud native moves from promise to practice. If you are shaping a roadmap and want a clear view of what great looks like, EM360Tech curates practical playbooks, platform patterns, and evidence-led analysis that help teams raise their maturity with confidence. When your platform becomes your strongest habit, resilience follows.
