Modern enterprises generate data everywhere – on factory floors, in hospital wards, across smart cities. Consider a remote oil rig or a busy retail store: sending every sensor reading to a distant cloud introduces latency and risk. Instead, organisations are pushing computing power closer to the source.

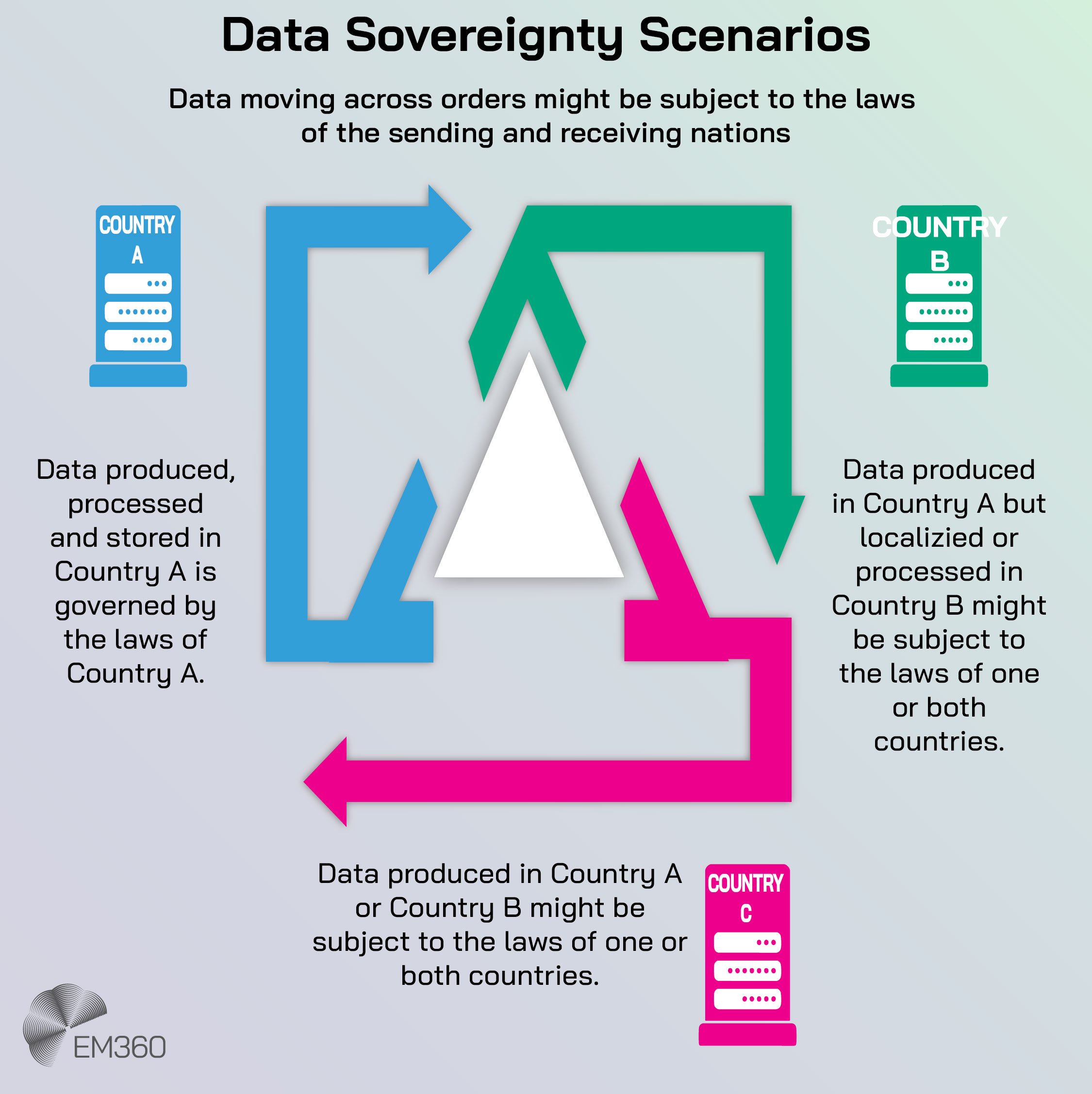

This shift to edge data processing and near-data processing is driven by a simple truth: when it comes to real-time insights, data locality matters. By processing information on-site or in nearby micro data centres, businesses can minimise delays, maintain data sovereignty, and make decisions in the moment.

From AI at the edge guiding autonomous vehicles, to distributed processing in smart factories, working with data where it’s produced unlocks a world of new possibilities. And with regulatory compliance becoming stricter, keeping sensitive data local isn’t just smart – it’s often necessary.

Crucially, this isn’t about abandoning the cloud; it’s about extending it. We’re seeing a hybrid infrastructure model emerge, blending centralised and distributed resources. Data that needs global aggregation still flows to core clouds, but real-time analytics and AI inference increasingly run on the edge nodes right next to users or machines.

It’s a confident evolution in enterprise architecture, aligning technology with the practical demands of latency and data gravity. The result is a new kind of agility: one where intelligence lives both in the cloud and on the ground, wherever it can deliver value fastest.

The Shift to Industry Edge and Near-Data Processing

Enterprise infrastructure is undergoing a strategic pivot. Instead of hauling all data into a central repository, companies are embracing industry edge deployments – processing data at or near its creation point. This is not a passing trend, but an operational evolution redefining how IT systems are built.

By keeping computation close to source, organisations achieve immediate latency reduction (critical for things like machine control and immersive customer experiences) and address data sovereignty concerns (critical for compliance and privacy).

Analysts underscore this momentum: Gartner projected that by 2025, 75 per cent of enterprise-generated data would be created and processed outside a traditional centralised data centre or cloud, up from around 10 per cent in 2018.

In parallel, worldwide investment in edge computing is surging – IDC expects global edge spend to reach $261 billion in 2025 as enterprises seek faster insights and local control.

Why this shift now? Partly, it’s the sheer volume and velocity of data. Near-data analytics has become essential as IoT sensors, cameras, and devices churn out information that cannot wait for a round trip to the cloud.

Industries from manufacturing to healthcare are finding that operational efficiency gains and cost savings are unlocked when decisions are made on-site, in real time. There’s also the matter of resilience: an outage in a distant region won’t halt a smart factory if the critical analytics are running on a nearby edge server.

In essence, enterprises are re-balancing their hybrid cloud strategy – not rejecting the cloud’s scalability, but augmenting it with localised computing for the AI inference and data crunching that just can’t tolerate delay. The result is infrastructure that’s more distributed by design, aligned with both business needs and regulatory realities.

How Edge and Near-Data Processing Work in Practice

How does an edge architecture actually function? At a high level, it introduces an intermediate tier between central clouds and the multitude of devices at the network edge. Imagine a hierarchy: core cloud data centres sit at the top, powerful but distant; on the ground, we have devices and sensors (the “far edge”) generating data.

Edge computing lives in between – often in the form of rugged servers or micro data centres on factory sites, retail locations, telecom towers, or regional hubs. These edge nodes orchestrate data pipelines by ingesting device data locally, performing initial processing or running AI models on-site, and then selectively forwarding summaries or critical events to the cloud.

This approach optimises the data lifecycle: time-sensitive information gets acted on immediately, while less urgent or aggregated data flows upstream for long-term storage or deeper analysis.
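Here is a minimal sketch of that ingest, act and forward loop. It assumes an edge node that receives temperature readings in fixed windows. `Reading`, `ALERT_THRESHOLD_C` and `trigger_local_action` are hypothetical names chosen for illustration. They are not part of any particular edge platform.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical alert threshold; a real node would load this from configuration.
ALERT_THRESHOLD_C = 90.0


@dataclass
class Reading:
    sensor_id: str
    temperature_c: float


def trigger_local_action(reading: Reading) -> None:
    """Hypothetical hook for an immediate on-site response (e.g. slowing a motor)."""
    print(f"local action: {reading.sensor_id} at {reading.temperature_c} C")


def process_window(readings: list[Reading]) -> tuple[list[Reading], dict]:
    """Act on critical readings locally, then build a compact summary for the cloud."""
    alerts = [r for r in readings if r.temperature_c >= ALERT_THRESHOLD_C]
    for r in alerts:
        trigger_local_action(r)  # handled on-site, no cloud round trip
    temps = [r.temperature_c for r in readings]
    summary = {
        "count": len(temps),
        "mean_c": round(mean(temps), 2) if temps else None,
        "max_c": max(temps) if temps else None,
        "alerts": len(alerts),
    }
    return alerts, summary  # only these leave the site; raw readings stay local


if __name__ == "__main__":
    window = [Reading("s1", 71.5), Reading("s2", 93.0), Reading("s3", 70.5)]
    alerts, summary = process_window(window)
    print(summary)
```

Only the alert list and the four-field summary would travel upstream. The raw readings stay on the node, and that is where the bandwidth and latency savings come from.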

In practice, technologies like containerised workloads and virtualisation are key to making this work. Many organisations run Kubernetes at the edge, often as a lightweight distribution such as K3s. This gives them the same container orchestration model they use in the cloud for managing applications across these remote sites.

This ensures that new analytics models or software updates can be rolled out to thousands of edge devices as easily as to one central cluster. The relationship between cloud and edge is one of cooperation. The cloud still provides global visibility, heavy-duty processing (like large-scale model training or multi-site data correlation), and central control.
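A common way to roll an update out to thousands of sites is in waves rather than all at once, so that a faulty release is caught early. The sketch below assumes a canary-then-batches strategy. `plan_rollout_waves`, `roll_out` and the `deploy`/`is_healthy` callbacks are hypothetical stand-ins for whatever the chosen orchestration tooling actually provides.

```python
def plan_rollout_waves(sites, canary_count=2, wave_size=50):
    """Split edge sites into a small canary wave followed by fixed-size waves."""
    if canary_count < 0 or wave_size < 1:
        raise ValueError("canary_count must be >= 0 and wave_size must be >= 1")
    ordered = sorted(sites)  # deterministic order, so re-running gives the same plan
    canary, rest = ordered[:canary_count], ordered[canary_count:]
    waves = [canary] if canary else []
    waves += [rest[i:i + wave_size] for i in range(0, len(rest), wave_size)]
    return waves


def roll_out(waves, deploy, is_healthy):
    """Deploy wave by wave and halt at the first wave containing an unhealthy site.

    Returns (completed_sites, failed_wave); failed_wave is None on full success.
    """
    completed = []
    for wave in waves:
        for site in wave:
            deploy(site)
        if not all(is_healthy(site) for site in wave):
            return completed, wave
        completed.extend(wave)
    return completed, None


if __name__ == "__main__":
    sites = [f"store-{i:03d}" for i in range(1, 8)]
    waves = plan_rollout_waves(sites, canary_count=1, wave_size=3)
    completed, failed = roll_out(waves, deploy=lambda s: print("deploy", s),
                                 is_healthy=lambda s: s != "store-003")
    print("completed:", completed, "halted at:", failed)
```

Halting at the first unhealthy wave limits a bad release to the sites that were already updated.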

Meanwhile, the edge handles local real-time decisioning: for example, an edge server in a wind turbine can adjust blades instantly based on sensor data, without needing to ask a cloud application 1,000 miles away.

By intelligently coordinating workload placement, enterprises achieve the best of both worlds – the low network latency and continuity of on-site processing, plus the scalability and unified management of the cloud.

In short, near-data and edge architectures work by putting each computation in the optimal place: close to the data when speed or autonomy is paramount, and in the cloud when broader perspective or muscle is needed.
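The placement rule in the paragraph above can be expressed as a small decision function. This is a simplified sketch. The `Workload` fields, the order of the checks and the 80 ms cloud round-trip figure are illustrative assumptions. They are not measurements or any vendor’s scheduler.

```python
from dataclasses import dataclass

# Assumed round trip from a site to the nearest cloud region; illustrative only.
CLOUD_RTT_MS = 80.0


@dataclass
class Workload:
    name: str
    latency_budget_ms: float      # slowest acceptable response time
    must_run_offline: bool        # must keep working if the WAN link drops
    data_must_stay_local: bool    # data residency or sovereignty constraint


def place(w: Workload) -> tuple[str, str]:
    """Return (location, reason); hard locality constraints are checked first."""
    if w.data_must_stay_local:
        return "edge", "data residency"
    if w.must_run_offline:
        return "edge", "autonomy during WAN outages"
    if w.latency_budget_ms < CLOUD_RTT_MS:
        return "edge", "latency budget below cloud round trip"
    return "cloud", "no local constraint, so use cloud scale"


if __name__ == "__main__":
    for w in [
        Workload("blade-pitch-control", 10, True, False),
        Workload("patient-imaging", 5_000, False, True),
        Workload("multi-site-model-training", 3_600_000, False, False),
    ]:
        print(w.name, "->", place(w))
```

A real placement engine would also weigh cost, hardware availability and data volume. The ordering here follows the text: hard locality constraints come first, then latency, and the cloud is the default.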

Why Edge Data Platforms Matter for Enterprise Infrastructure

For CIOs and CTOs mapping out the next five years, edge data platforms have moved from nice-to-have to must-have in the enterprise toolkit. The reason is simple: they directly address core business priorities in a digital, data-driven era. First, consider infrastructure modernisation and agility.

Traditional centralised IT can become a bottleneck when an organisation needs to deploy new services across hundreds of sites. Edge platforms, by contrast, enable a distributed fabric of compute that can scale outwards – bringing cloud-like capabilities on-premises, from retail branches to remote industrial sites.

This means new applications or AI models can be rolled out where they’re needed without overhauling everything centrally, supporting faster innovation at the enterprise infrastructure level.

Compliance and data governance are another driving force. In industries with strict regulatory oversight – think finance, government, healthcare – keeping data within specific geographic boundaries is often non-negotiable.

Edge solutions allow these organisations to process and store information locally to meet data sovereignty requirements, all while still integrating with a global architecture. In effect, an edge platform can act as a local data fabric node that enforces sovereignty and privacy, yet remains interoperable with the wider enterprise systems.

There’s also the matter of resilience and continuity. Distributed edge nodes ensure that if connectivity to the cloud is lost, critical operations can continue unaffected – a factory’s assembly line doesn’t shut down because the WAN link is flaky.

This built-in resilience goes hand-in-hand with improved performance and lower costs. Processing data locally reduces dependence on WAN bandwidth and avoids paying to ship massive raw data volumes to the cloud.
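To keep running through a WAN outage, an edge node usually buffers outbound data locally and forwards it once the link returns. Below is a minimal store-and-forward sketch. It assumes a `send` callable that returns `True` on a successful upload, which is a hypothetical interface. The bounded buffer drops the oldest messages if an outage outlasts its capacity.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer outbound messages while the uplink is down; flush them in order later."""

    def __init__(self, send: Callable[[str], bool], max_buffered: int = 10_000):
        self._send = send  # hypothetical uploader: returns True on success
        # deque with maxlen silently discards the oldest entry when full
        self._buffer: Deque[str] = deque(maxlen=max_buffered)

    def publish(self, message: str) -> None:
        self._buffer.append(message)
        self.flush()

    def flush(self) -> bool:
        """Send buffered messages oldest-first; stop at the first failure."""
        while self._buffer:
            if not self._send(self._buffer[0]):
                return False  # link still down: keep the message for the next attempt
            self._buffer.popleft()
        return True

    def __len__(self) -> int:
        return len(self._buffer)


if __name__ == "__main__":
    link = {"up": False}
    uploaded = []

    def send(msg: str) -> bool:
        if link["up"]:
            uploaded.append(msg)
        return link["up"]

    buf = StoreAndForward(send, max_buffered=2)
    for msg in ["a", "b", "c"]:
        buf.publish(msg)  # WAN down: "a" is evicted once "c" arrives
    link["up"] = True
    buf.flush()
    print(uploaded)  # ['b', 'c']
```

Bounding the buffer is a deliberate trade-off. An edge node has finite memory and disk, so during long outages some data must be dropped, summarised or spilled to local storage.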

Modern edge platforms also come packed with enterprise-grade features: AI integration (so organisations can deploy trained machine learning models to do inference on-site), built-in observability and remote management (because no one can send IT staff to every branch for troubleshooting), and an emphasis on interoperability.

Many leverage open standards to avoid locking businesses into a single ecosystem, which is important for long-term flexibility. In short, edge data platforms matter because they align technology infrastructure with today’s business realities – enabling workload optimisation (running each task where it runs best), ensuring compliance and security, and unlocking new levels of scalability and efficiency.

For the enterprise, investing in edge is investing in an architecture prepared for both the demands of today and the innovations of tomorrow.

Leading Platforms Enabling Industry Edge and Near-Data Processing

Selecting the right edge platform is a strategic decision. The top players distinguish themselves by maturity, integration capabilities, and proven deployments at scale. Below, we highlight ten leading platforms (in no particular order) that are empowering enterprises to bring compute closer to data.

Each has unique strengths – from global cloud integration to open-source flexibility – and each addresses the challenges of managing distributed, near-data environments. Our selection prioritises enterprise adoption and innovation, focusing on solutions known for reliability, security, and the ability to run critical workloads outside the traditional data centre.

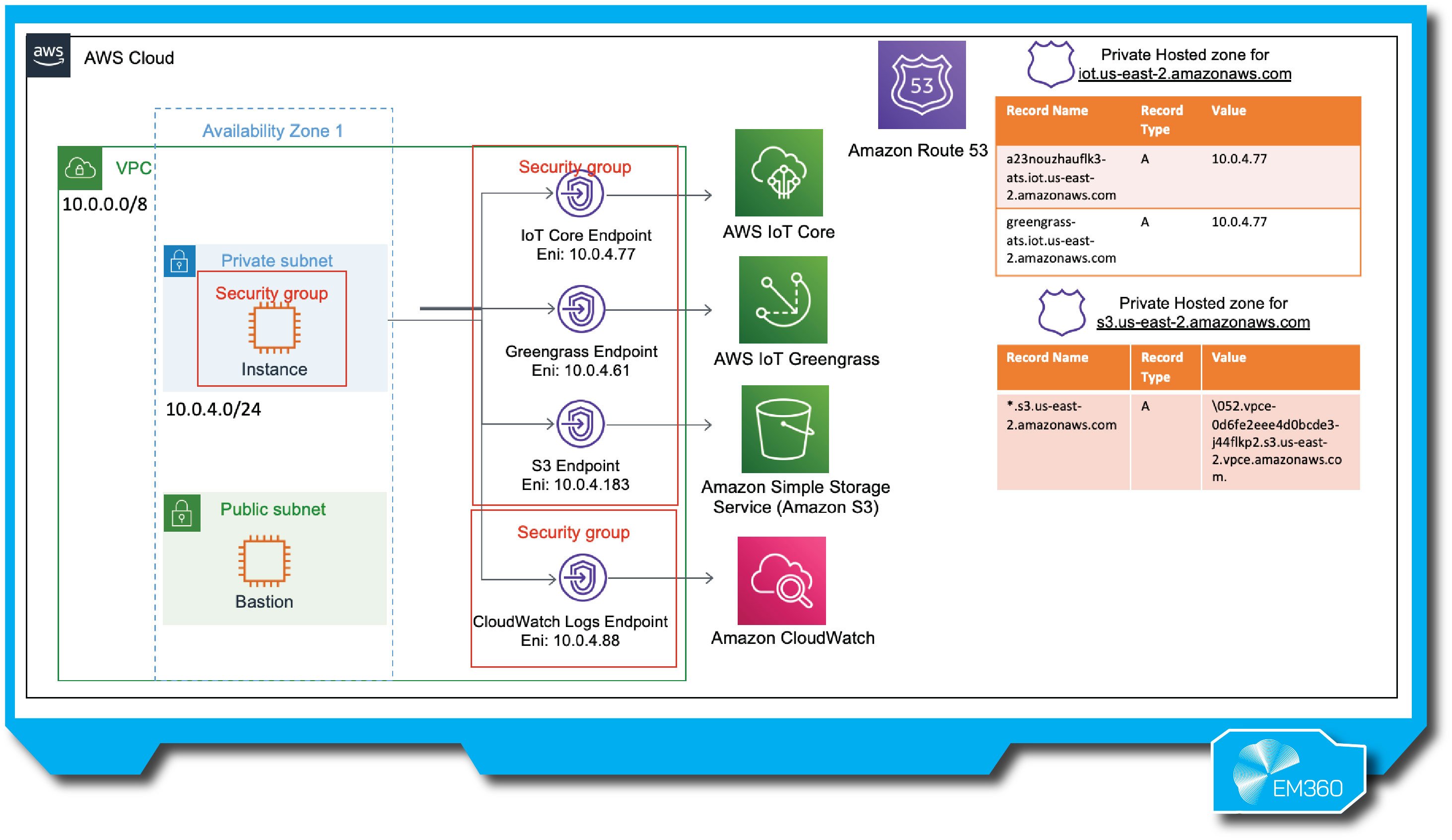

AWS Outposts + AWS IoT Greengrass

Amazon Web Services extends its cloud into on-premises environments with AWS Outposts (a fully managed hardware stack) and AWS IoT Greengrass (edge runtime software). Outposts, introduced in 2019, brings hybrid deployment consistency – it’s essentially AWS infrastructure delivered to your data centre or edge site.

Enterprise ready features

This means organisations can run AWS services (compute, storage, databases, etc.) locally on Outposts while seamlessly connecting to the broader AWS cloud for management and additional services. AWS IoT Greengrass complements this by allowing IoT devices and gateways to run AWS Lambda functions and machine learning models on-site, enabling quick IoT data processing and device control even with intermittent cloud connectivity.

Together, Outposts and Greengrass create a powerful edge ecosystem: Outposts provides the on-site muscle (with options from 1U servers to full 42U racks), and Greengrass provides intelligent processing at the device level.

Notably, AWS has leveraged its strengths in security and cloud tooling here – Greengrass can perform local ML inference on data using models trained in the cloud, and Outposts offers the same IAM, monitoring, and APIs as AWS cloud regions for a truly consistent hybrid experience.

Pros

- Familiar AWS environment on-premises, ensuring consistent APIs and management tools across cloud and edge.

- Low-latency local processing with support for AWS services (EC2, EBS, RDS, etc.) running next to on-site data.

- Integration with AWS IoT Greengrass for local event response and ML inference on edge devices.

- Fully managed hardware and software updates by AWS, reducing on-site maintenance burden.

- Broad ecosystem and support – benefits from AWS’s extensive partner network and cloud innovations.

Cons

- Cost can be high; Outposts is a premium solution with ongoing AWS subscription fees and potential cloud egress costs if not managed carefully.

- Ties you into the AWS ecosystem (proprietary hardware and services), raising considerations of long-term lock-in.

- Hardware form factors, while varied (1U, 2U servers, or full racks), have limits – e.g. physical installation requirements and lead times for delivery.

Best for

Large enterprises already invested in AWS, especially those in regulated sectors (finance, healthcare, government) that need AWS cloud capabilities on-prem for data residency. Also a fit for multi-site retail and manufacturing companies that want uniform infrastructure across dozens of locations – Outposts in each facility for local compute, all managed centrally via the AWS console.

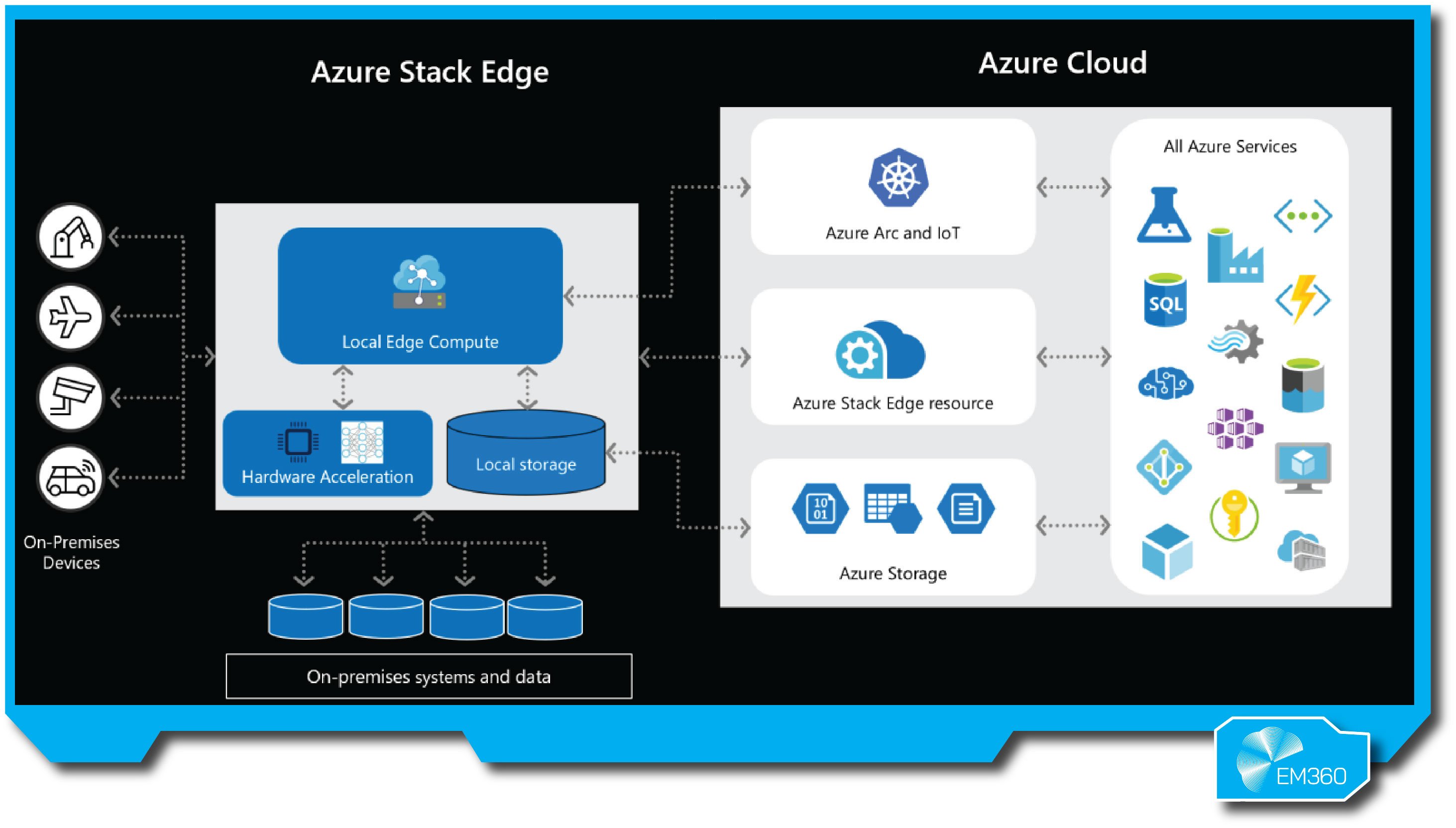

Azure Stack Edge

Microsoft’s Azure Stack Edge is a hardware-as-a-service offering that brings Azure’s cloud capabilities into the local environment. First released in 2019, it’s essentially a cloud-managed edge appliance with options for built-in hardware acceleration (GPUs or FPGAs) for AI at the edge.

The device arrives configured and is managed through the Azure portal, so deploying one feels like adding a new region to your Azure footprint – but one that sits in your office or data centre.

Enterprise ready features

With Azure Stack Edge, enterprises can run hybrid workloads: you can process and analyse data on-site (say, to do image recognition or filter IoT telemetry) and then send only the needed results up to Azure.

Since it’s an Azure service, it integrates nicely with Azure IoT Hub, Azure Machine Learning, and other services for a smooth cloud-to-edge pipeline. Notably, Azure Stack Edge supports containerised workloads via IoT Edge or Kubernetes, and can function even when disconnected for periods (storing data and syncing when back online).

Its tight integration with the Azure ecosystem means features like Azure security, updates, and monitoring extend to the edge device. Microsoft also emphasises GPU acceleration on certain models, which is great for scenarios like healthcare imaging or on-site video analytics in retail.

Pros

- Managed directly from Azure, using the same portal and Azure Resource Manager – easy for Azure-centric IT teams.

- Built-in hardware options for AI: GPUs and FPGAs enable local ML inference, ideal for real-time analytics on video, audio, or sensor data.

- Consumption-based model (as-a-service device); no large upfront hardware cost – you pay as part of your Azure subscription.

- Supports offline operation – can buffer data and function with intermittent connectivity, crucial for remote sites.

- Regular updates and patches handled through Azure, keeping edge devices secure and up-to-date without hands-on maintenance.

Cons

- Primarily benefits Microsoft Azure users – less attractive if your ecosystem isn’t Azure-centric (it’s a lock-in to Azure services to get full value).

- Limited device models and capacity; scaling might require multiple devices, which can get expensive.

- Available regions and lead times for delivery can be a constraint (not as instantly accessible as cloud VMs, since physical units must ship and be installed).

Best for

Organisations with significant Azure cloud investments looking to extend services to the edge – for example, hospitals doing on-premises imaging analysis (to keep patient data local), or telecom and manufacturing firms that need cloud-connected processing on factory floors or 5G network sites.

It’s especially useful in scenarios requiring GPU-accelerated AI at the edge, such as analysing video feeds in retail for customer behaviour or running inference on medical images in clinics, all while maintaining central Azure oversight.

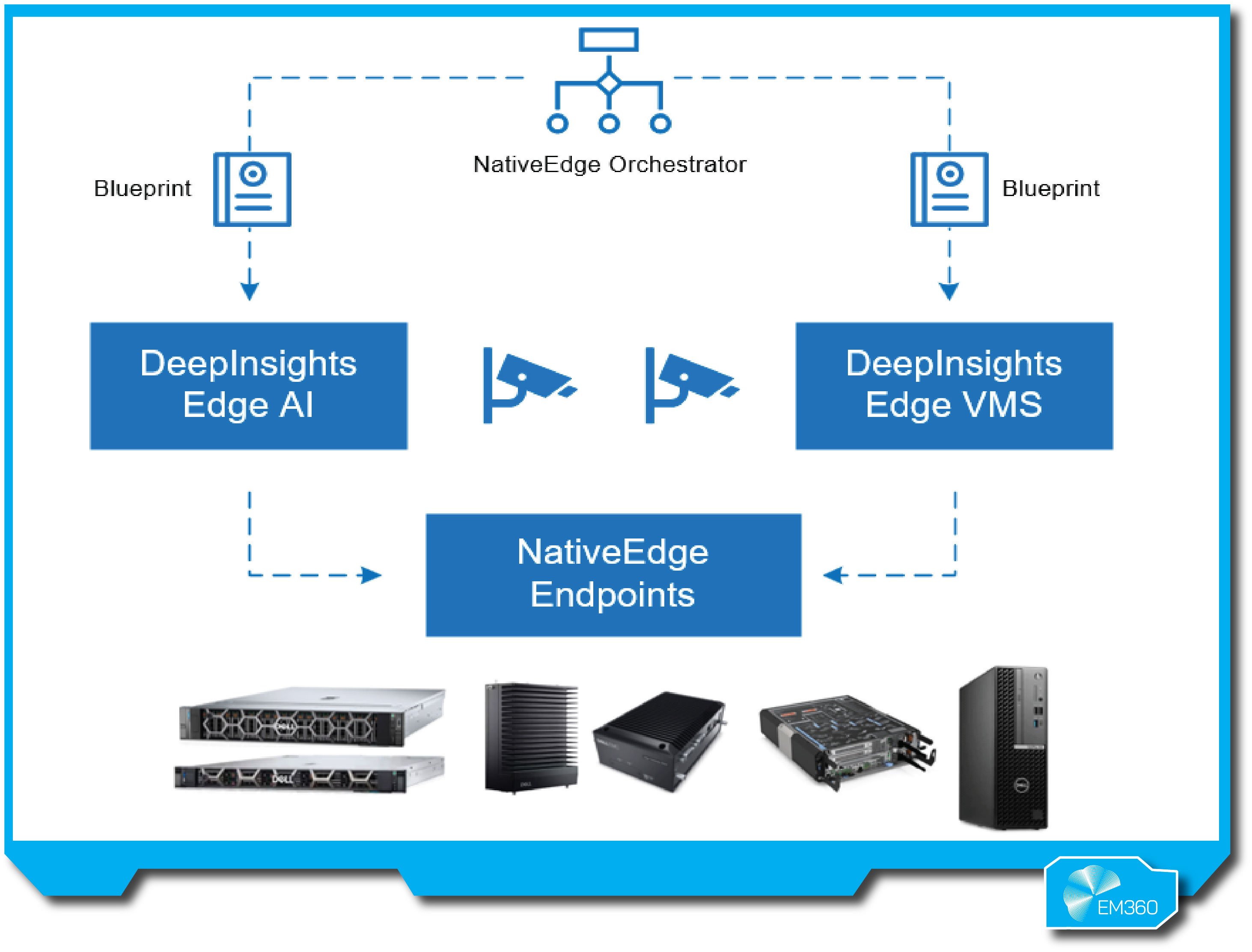

Dell NativeEdge

Dell NativeEdge is an edge operations software platform from Dell Technologies aimed at simplifying and scaling edge infrastructure for enterprises. Launched in 2023 as Dell’s answer to growing demand for edge orchestration, NativeEdge focuses on centralised management and zero-touch deployment for distributed sites.

Dell brings its long hardware heritage here but made the platform hardware-agnostic – it can manage Dell and non-Dell edge devices in a unified way. With NativeEdge, companies can provision and orchestrate workloads across many edge nodes from one console. It supports running virtual machines and containers on anything from tiny gateway devices to full GPU-packed servers.

Enterprise ready features

Key features include high availability clustering at the edge, policy-based automation (for example, auto-deploying an application to all retail stores with one click), and integration with Dell’s broader ecosystem like PowerStore storage for edge data and even third-party tools.

Security is a strong point too: NativeEdge includes built-in zero-trust and authentication features (LDAP/OIDC integration) to enforce enterprise security standards out to the edge. Essentially, Dell NativeEdge aims to make edge computing as automated and reliable as cloud, reducing the need for IT staff at each location by enabling zero-touch onboarding of devices and centrally managed updates.

Pros

- Comprehensive edge orchestration: provides a single pane for managing diverse edge deployments, with zero-touch provisioning to onboard devices without on-site technicians.

- Runs on a wide range of hardware (not limited to Dell gear) and supports both containers and VMs, offering flexibility for different workloads.

- High availability and scaling features like clustering and load balancing at the edge, which ensure reliability for mission-critical operations.

- Strong security focus: centralised user authentication, certificates, and compliance features built in, targeting regulated industries.

- Deep Dell ecosystem integration when needed (storage, data protection), plus open API support – balancing Dell’s enterprise experience with modern openness.

Cons

- Relatively new platform – while Dell is experienced, NativeEdge itself is newer on the scene than some cloud-vendor solutions, so some features may still be maturing.

- Best benefits are seen in Dell-centric environments; truly hardware-agnostic use is possible but organisations might gravitate to pairing it with Dell infrastructure for full support.

- Licensing and complexity could be a factor – it’s an enterprise software platform, which may require a strong IT team or Dell services for optimal setup and operation.

Best for

Enterprises with large-scale distributed operations – think global retailers, logistics companies, or manufacturers – that need to manage many edge sites efficiently. For example, a smart retail chain running applications in each store (POS analytics, CCTV AI processing) could use NativeEdge to manage all stores centrally.

It’s also attractive to organisations already using Dell Technologies for infrastructure, who want a trusted partner to extend into edge management. Industries like manufacturing, energy, and smart cities, where on-site computing and automation are growing, would find NativeEdge helpful for its combination of automation and robust security in edge deployments.

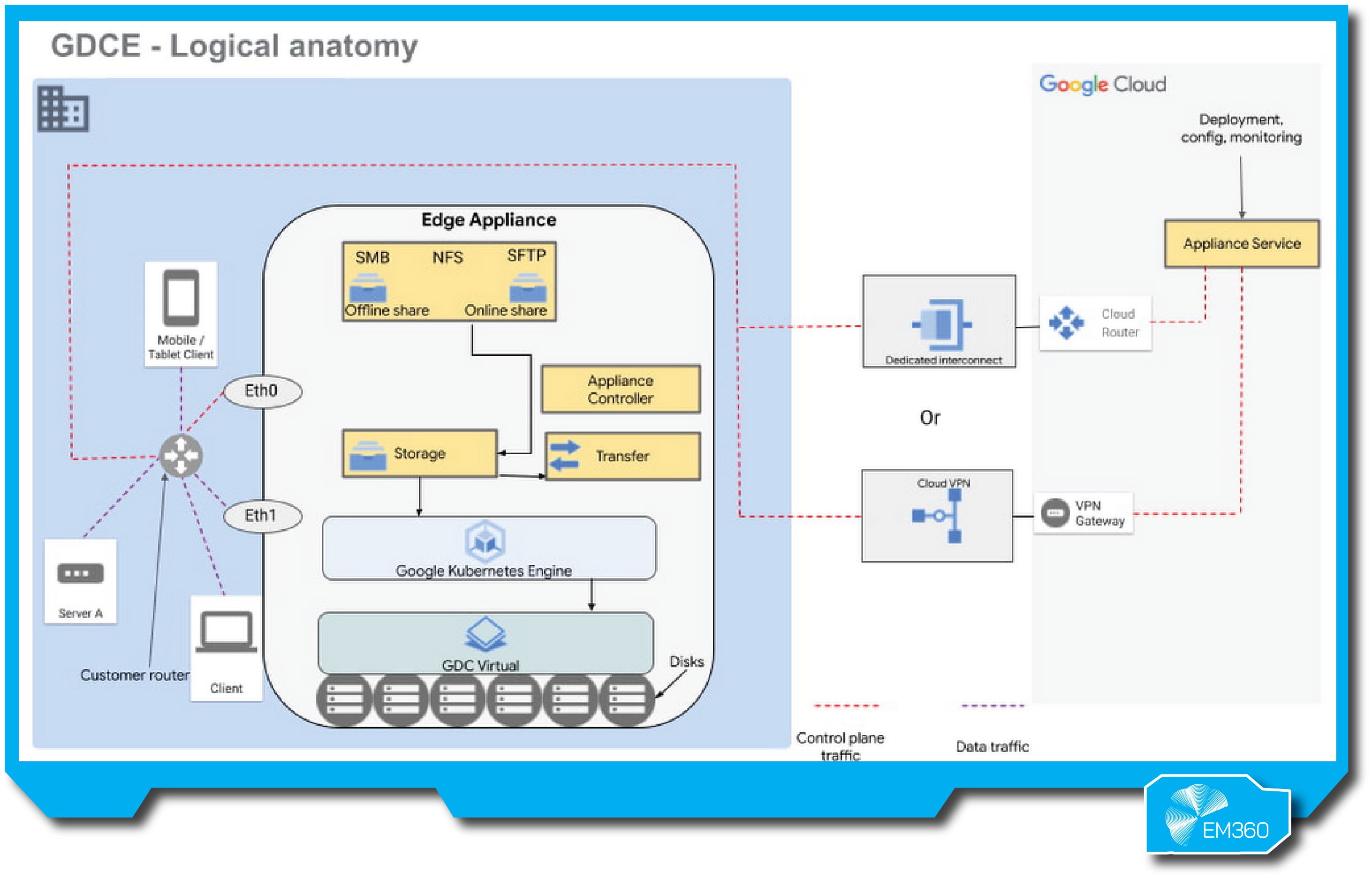

Google Distributed Cloud (Edge/Connected)

Google Distributed Cloud (GDC) is Google Cloud’s portfolio of solutions for extending its services to customer data centres and edge locations. Built on Google’s Anthos hybrid platform, GDC comes in several variants. The main ones are GDC Edge (Google-managed hardware at edge locations or telecom facilities, since renamed GDC connected) and GDC Hosted (disconnected, on-premises deployments, since renamed GDC air-gapped).

The idea is to let enterprises run Google Cloud services and analytics near their data sources, rather than hauling everything into Google’s central data centres.

Enterprise ready features

Under the hood, GDC uses Kubernetes (Anthos) to provide a consistent environment. A retailer, for example, could run a mini Google Cloud region inside each store for ultra-fast data processing, while still connecting to Google Cloud for management and aggregated analysis.

One standout aspect is support for AI/ML workloads: Google enables things like Vertex AI (Google’s machine learning platform) to operate in Distributed Cloud environments, so you can deploy and serve ML models at the edge with capabilities like Vision AI or Translation without constant cloud calls.

GDC also touts an offline mode in its Hosted version – meaning it can continue operating even without any internet connectivity, which is crucial for secure locations or remote areas. Essentially, Google Distributed Cloud marries Google’s expertise in containers, AI, and global networking with the reality that data gravity sometimes demands local processing.

Pros

- Consistent Anthos-powered platform: bring Google’s Kubernetes-based environment on-prem, achieving a unified hybrid cloud (develop once, deploy to cloud or edge).

- Strong support for AI and data analytics at the edge – integration with Google’s AI tools (such as Vertex AI) enables advanced analytics in local environments.

- Options for fully disconnected operation (via GDC Hosted), which addresses strict sovereignty or air-gapped requirements (e.g., public sector or telecom core networks).

- Multi-environment flexibility: can run on Google-provided hardware or on your own infrastructure (with GDC Virtual/bare metal), offering various deployment modes for enterprise needs.

- Backed by Google’s global infrastructure and innovation – benefits from continuous improvements in Kubernetes, AI, and security driven by Google.

Cons

- Still emerging – Google’s edge offerings aren’t as longstanding as Azure or AWS equivalents, so enterprises might find fewer case studies or shorter track record in production.

- Tends to be complex; Anthos/GDC has many moving parts (Kubernetes, Istio, etc.), which might require skilled teams or Google support to implement and manage.

- As with any cloud-vendor solution, there’s a degree of lock-in – while Anthos is multi-cloud by design, you are investing in Google’s ecosystem and management plane, which may be an adjustment for those not already Google Cloud customers.

Best for

Companies that want a cloud-like experience on-premises and are inclined towards Google’s technology stack. For instance, telecommunications firms can use GDC Edge for 5G MEC (Multi-access Edge Computing) deployments, bringing Google’s network and container expertise into telco sites to run latency-sensitive network functions.

Likewise, retailers with many stores can deploy Google’s edge nodes for local processing (like inventory analytics or in-store personalisation) while keeping everything integrated with Google Cloud analytics.

It’s also a fit for public sector or healthcare organisations that require data to stay on-site for compliance, but still want to leverage Google’s AI and cloud services in a controlled way.

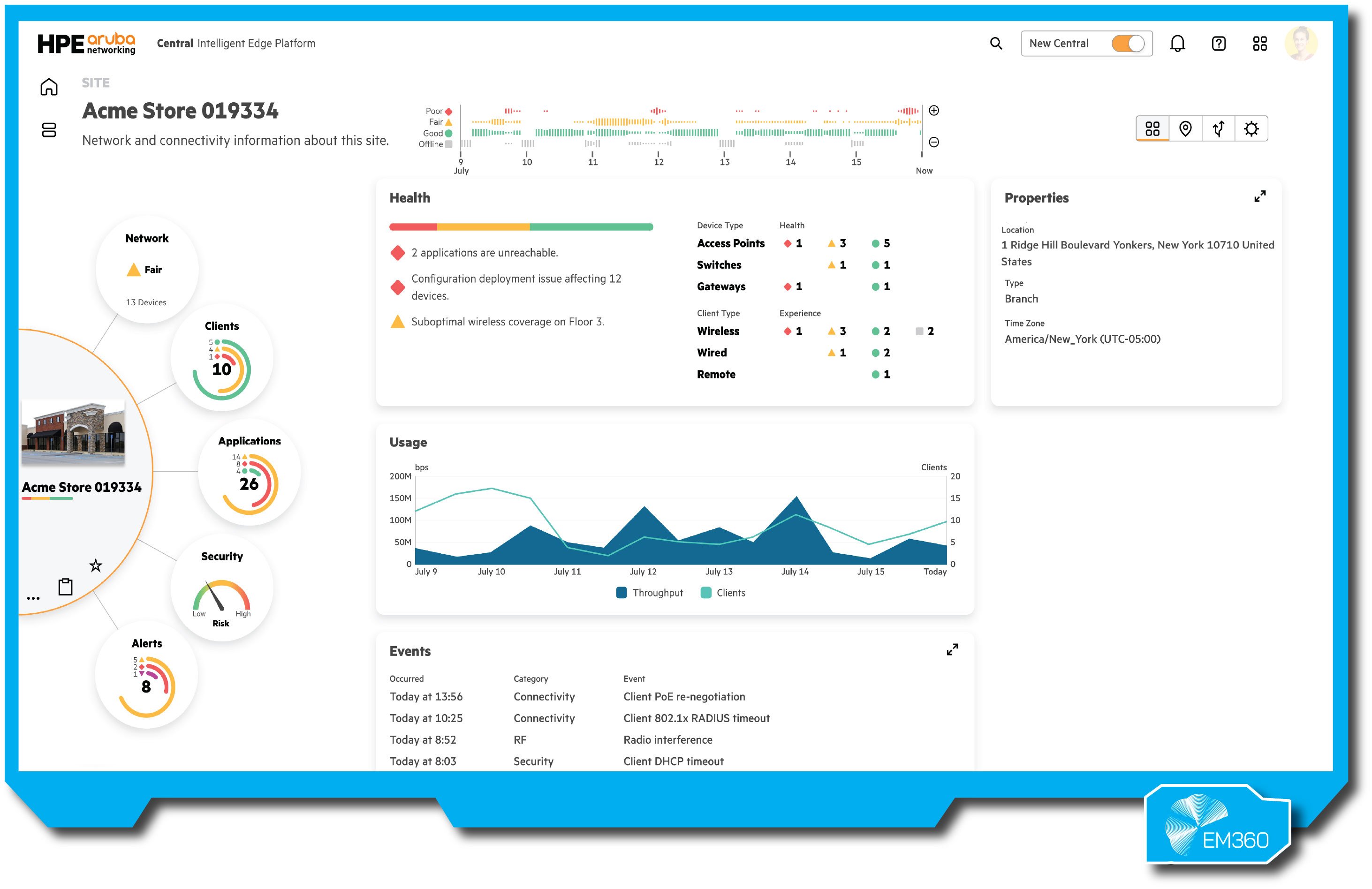

HPE GreenLake (Edge/Aruba)

Hewlett Packard Enterprise’s GreenLake platform has taken the company’s hardware and software portfolio and transformed it into an as-a-service, edge-to-cloud offering. Under the GreenLake umbrella, HPE offers everything from cloud services in your own data centre to networking at the edge through its Aruba products.

The key idea is “edge-to-cloud” consistency: enterprises can consume compute, storage, and network connectivity on-premises just like they would from a public cloud – fully managed and subscription-based.

Enterprise ready features

At the edge, HPE leverages its Aruba networking line (acquired in 2015) to provide intelligent network infrastructure (Wi-Fi, switching, SD-WAN) with integrated security and analytics (the Aruba Edge Services Platform).

This means an organisation could, for example, equip a smart campus or a chain of offices with Aruba wireless and edge compute devices, all monitored and managed through GreenLake’s cloud interface. Network intelligence and observability are big focuses – HPE’s tools can gather telemetry from network devices and user experience, helping IT pinpoint issues or optimise performance across distributed sites.
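Fleet telemetry can help pinpoint problem sites. To illustrate, here is a deliberately simple outlier check that compares each site’s latency with the fleet median. It is a sketch built on assumptions, not HPE’s analytics. The `factor` threshold and the input shape are hypothetical.

```python
from statistics import median


def flag_slow_sites(latency_ms_by_site: dict[str, float], factor: float = 2.0) -> list[str]:
    """Return sites whose latency exceeds `factor` x the fleet median, worst first."""
    if not latency_ms_by_site:
        return []
    # Median rather than mean, so a few bad sites do not drag the baseline up.
    baseline = median(latency_ms_by_site.values())
    slow = {site: ms for site, ms in latency_ms_by_site.items() if ms > factor * baseline}
    return sorted(slow, key=slow.get, reverse=True)


if __name__ == "__main__":
    p95_latency = {"campus-a": 20, "campus-b": 22, "campus-c": 25, "office-d": 70, "office-e": 120}
    print(flag_slow_sites(p95_latency))
```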

GreenLake’s edge offerings also tie into HPE’s IoT and data services, enabling real-time data processing close to source (like analysing surveillance feeds or machine sensor data on-site). Essentially, HPE GreenLake at the edge provides infrastructure as a service (servers, networking, and even apps) on customer premises, with HPE handling a lot of the heavy lifting (deployment, updates, maintenance) under a usage-based model.

Pros

- Unified platform for compute and networking: one of the few that deeply integrates edge network infrastructure (via Aruba) with edge compute, useful for holistic solutions (e.g., a smart building needs both compute and connectivity).

- Consumption-based as-a-service model – avoids large capital outlays; you pay for what you use, scaling capacity up or down as needed.

- Strong emphasis on observability and management: HPE GreenLake Central provides a dashboard for your entire fleet (data centres to edge), with AI-driven insights to optimise performance and uptime.

- Since it’s on-prem infrastructure, data can be kept locally to meet compliance, and HPE provides robust security features (Aruba’s networking gear is known for built-in Zero Trust capabilities).

- Broad sector applicability with tailored solutions (e.g., HPE offers pre-designed GreenLake solutions for manufacturing, energy, healthcare etc., aligning with common industry edge scenarios like factory automation or hospital networks).

Cons

- Requires commitment to HPE’s ecosystem – while open standards are supported, you’re likely using HPE hardware and software end-to-end, which may not suit those wanting a DIY or mix-and-match approach.

- Can be complex to initially set up contractually and technically (essentially bringing cloud-like service on-site is not plug-and-play) – many organisations engage HPE’s services team to implement.

- Pricing transparency can be an issue; as-a-service quotes are custom, so it may be hard to compare costs directly versus traditional capital purchase or public cloud costs.

Best for

Enterprises looking to modernise their infrastructure across edge and core in a unified way, especially if they prefer an OPEX model. For example, a university campus (“smart campus”) could use GreenLake with Aruba to deliver seamless connectivity for students and IoT devices, with local edge servers for video analytics and an HPE cloud management overlay to handle it all.

Similarly, manufacturing plants or energy companies with remote sites benefit from GreenLake’s fully managed approach, deploying ruggedised edge systems on-site for data collection and control, all managed as part of the company’s cloud. It’s a strong choice for organisations that value a one-stop solution for both IT and OT (operational technology) needs at the edge, with the confidence of HPE’s enterprise support behind it.

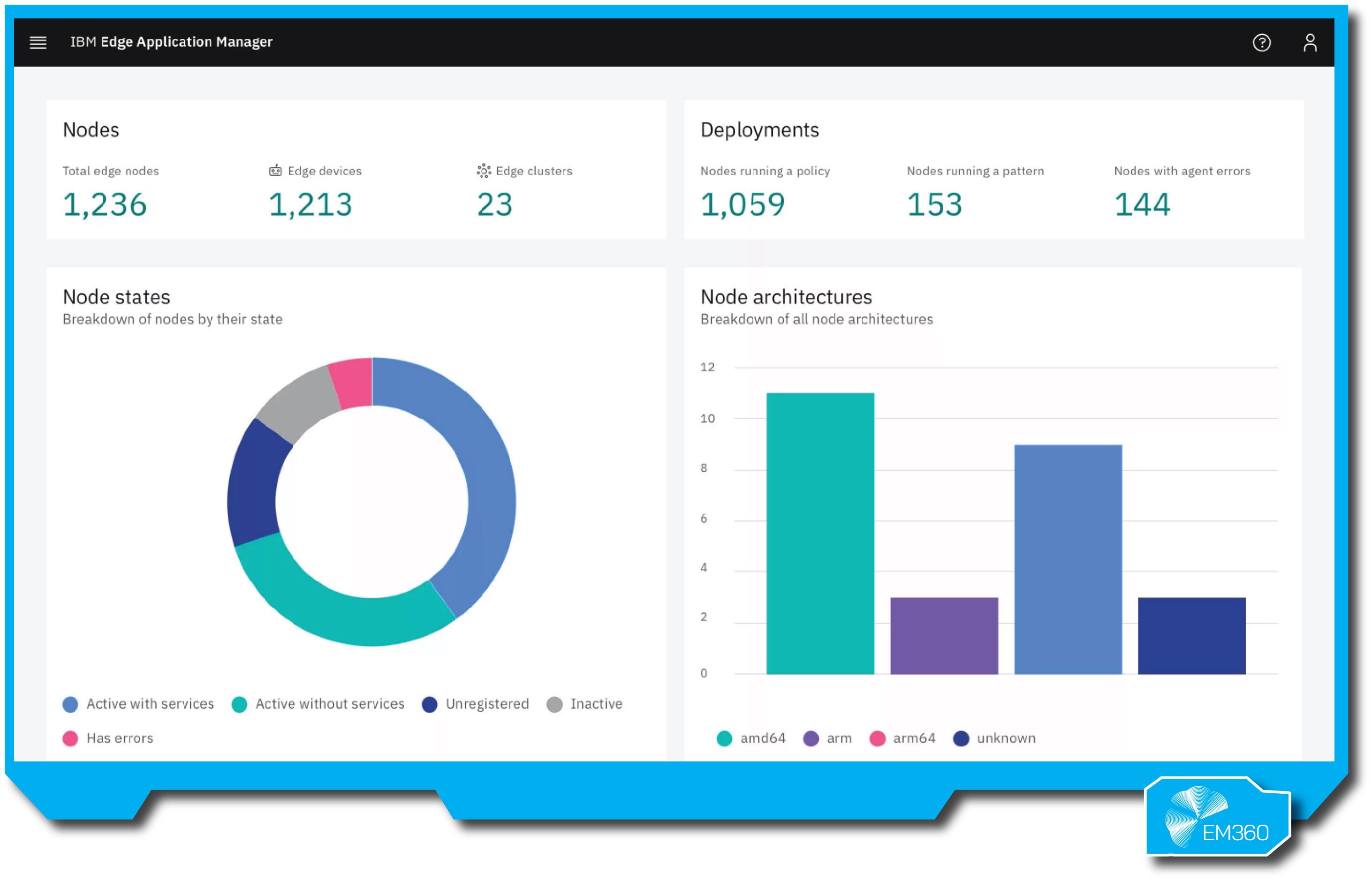

IBM Edge Application Manager

IBM Edge Application Manager (IEAM) is a platform for autonomous management of applications across edge devices and clusters at massive scale. It emerged from IBM’s expertise in both enterprise IT and the open-source Open Horizon project, aiming to help organisations deploy and monitor containerised workloads on tens of thousands of endpoints with minimal human intervention.

Enterprise ready features

IEAM works by using a central management hub, usually running in an IBM Cloud or OpenShift environment, which holds the deployment policies and software packages. From there, small agents (based on Open Horizon) run on each edge node. A node could be anything from a server in a branch office to an IoT gateway or even a Raspberry Pi.

Policy-based orchestration is the hallmark. An admin defines rules like “deploy this AI inferencing microservice to all edge devices in region X that have a GPU and are online at weekends”, and the system then autonomously rolls it out and keeps it running.
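The matching step behind such a policy can be sketched as follows. Open Horizon has its own policy and constraint language. This Python version is a simplified stand-in that uses hypothetical property names (`region`, `gpu`, `gpu_mem_gb`) and a small set of comparison operators.

```python
import operator

# Simplified comparison operators; Open Horizon's real constraint language differs.
OPS = {"==": operator.eq, "!=": operator.ne, ">=": operator.ge, "<=": operator.le}


def matches(node_props: dict, constraints: list[tuple]) -> bool:
    """True if the node's properties satisfy every (key, op, value) constraint."""
    return all(
        key in node_props and OPS[op](node_props[key], value)
        for key, op, value in constraints
    )


def select_targets(nodes: dict[str, dict], constraints: list[tuple]) -> list[str]:
    """IDs of the nodes a deployment policy should target, in sorted order."""
    return sorted(node_id for node_id, props in nodes.items() if matches(props, constraints))


if __name__ == "__main__":
    fleet = {
        "gw-01": {"region": "X", "gpu": True},
        "gw-02": {"region": "X", "gpu": False},
        "srv-07": {"region": "Y", "gpu": True},
        "srv-09": {"region": "X", "gpu": True, "gpu_mem_gb": 16},
    }
    policy = [("region", "==", "X"), ("gpu", "==", True)]
    print(select_targets(fleet, policy))  # ['gw-01', 'srv-09']
```

Targeting is declarative. A node that later gains a GPU or moves region would start matching on the next evaluation, without anyone editing the deployment.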

This drastically cuts down operational overhead, since you’re not manually fiddling with each site. IBM has built IEAM to be open and flexible: it supports any CNCF-certified Kubernetes environment (AKS, EKS, OpenShift, K3s, etc.) at the edge, which means it’s not locking you into IBM hardware.

It’s also designed with AI lifecycle in mind – helping deploy AI models (perhaps coming from IBM’s Watson or other AI tools) to edge locations and updating them as models retrain. In essence, IEAM is for organisations that need to scale edge computing without scaling their IT headcount linearly, using autonomy and intelligent automation to manage complexity.

Pros

- Unmatched scalability – built to handle thousands of edges, using autonomous agents that reduce the need for central micromanagement. This makes it feasible to manage huge IoT or retail deployments with a small team.

- Policy-driven deployment: very flexible targeting (by location, hardware capabilities, time, etc.), ensuring the right workloads run in the right place automatically.

- Runs on open standards (Kubernetes and containers) and is cloud-agnostic – works with existing infrastructure whether it’s on IBM Cloud, AWS, or on-prem OpenShift.

- Strong lineage in security and reliability – IBM’s background means features like encryption, signing of software, and audit trails are baked in (important for industries like banking or utilities).

- Good for AI at scale – it has been used in scenarios such as distributing AI models (e.g., vision analytics) to thousands of cameras or devices, with the system handling updates and versioning.

Cons

- Can be complex to conceptualise and set up initially; it introduces new constructs (policy, patterns) that have a learning curve. Some organisations might require IBM services or significant training to use it effectively.

- IBM’s cloud and AI ecosystem ties: while open, it naturally integrates well with IBM Cloud Pak for Data, Watson, etc. Enterprises not using any IBM software might not take full advantage or might prefer a different vendor’s approach.

- Primarily focuses on application deployment/management; less about providing hardware or an entire stack, so you still need the underlying edge infrastructure in place (it’s a manager, not a full edge-as-a-service offering).

Best for

Organisations facing “edge at scale” challenges – for example, a global banking corporation managing thousands of ATMs and branch servers, or a smart city project coordinating software across myriad sensors and cameras. It’s also well-suited to industrial and energy sectors, like an oil company with thousands of rigs or wells each with an edge device needing software updates.

Essentially, any scenario where a small team must manage an extremely large, distributed application footprint reliably can leverage IBM Edge Application Manager’s strengths in policy automation and autonomy. Sectors like utilities, manufacturing, and telco (for 5G edge deployments) also align well, benefiting from IBM’s emphasis on reliability and integration with enterprise systems.

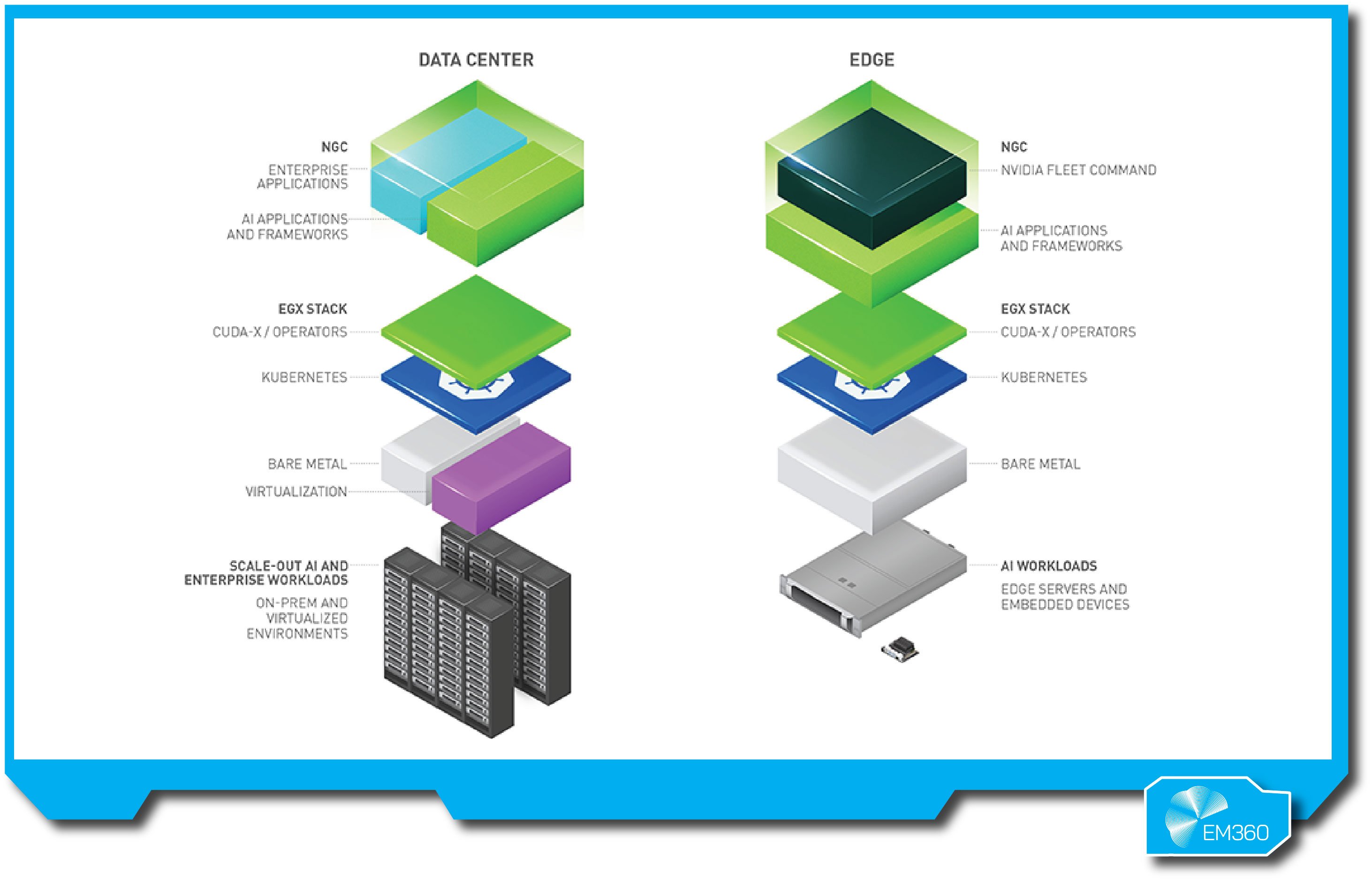

NVIDIA EGX

NVIDIA EGX is a cloud-native edge AI platform that combines NVIDIA’s renowned GPU hardware with an optimised software stack for running AI and data analytics outside the core data centre.

NVIDIA observed that many AI tasks (like video analytics, robotics control, or sensor fusion) need to happen in real time on the edge, so they created EGX to make it easier for enterprises to deploy GPU-accelerated workloads in places like factories, hospitals, or retail stores.

Enterprise ready features

On the hardware side, EGX ranges from small Jetson devices (for IoT gateways or cameras) up to hefty servers fitted with NVIDIA A100 GPUs. On the software side, it includes tools like the NVIDIA Fleet Command cloud service for centrally managing and monitoring these edge systems. It also includes the NVIDIA AI Enterprise suite, with components like TensorRT for optimising models, Triton Inference Server for serving models, and pre-trained models from NGC, NVIDIA’s catalogue of GPU-optimised software and models.

In practical terms, an enterprise could use EGX to run a computer vision app across hundreds of retail stores – each store has an EGX server that does the AI crunching on-prem (e.g., detecting stock levels or customer flow from camera feeds), and Fleet Command ensures all those servers have the latest AI model and are functioning properly.
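Keeping every site on the latest model is a desired-state reconciliation problem. Compare what each server reports with what it should run, then act on the differences. The function below is a hypothetical sketch of that comparison, not Fleet Command’s API. Version strings and site names are illustrative.

```python
def plan_model_updates(
    desired: str, reported: dict[str, str], sites: list[str]
) -> tuple[list[str], list[str]]:
    """Compare each site's reported model version with the desired one.

    Returns (needs_update, not_reporting): sites running a different version,
    and sites that have sent no status at all and need investigation.
    """
    needs_update = sorted(s for s in sites if s in reported and reported[s] != desired)
    not_reporting = sorted(s for s in sites if s not in reported)
    return needs_update, not_reporting


if __name__ == "__main__":
    status = {"store-1": "v3", "store-2": "v2", "store-4": "v1"}
    print(plan_model_updates("v3", status, ["store-1", "store-2", "store-3", "store-4"]))
```

Silent sites are reported separately because an unreachable server cannot be updated. It needs investigation before it can be brought back in line.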

EGX is also designed to integrate with existing infrastructure: it supports running GPU workloads on Kubernetes (NVIDIA’s device plugin and GPU Operator expose GPUs to the Kubernetes scheduler), and it can work with VMware (through vSphere with GPU support) or Red Hat OpenShift environments, so you don’t have to reinvent IT processes to use it. In short, NVIDIA EGX brings high-performance AI to the edge in a manageable, scalable way.
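To make the Kubernetes point concrete, here is a minimal sketch of a pod manifest that asks the scheduler for a GPU. It assumes the NVIDIA device plugin (for example, deployed by the GPU Operator) is running on the edge node, which is what advertises the `nvidia.com/gpu` resource. The app name and image reference are hypothetical placeholders. The manifest is built as a Python dict and emitted as JSON, which `kubectl apply -f` also accepts.

```python
import json


def gpu_inference_pod(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Kubernetes Pod manifest that requests whole GPUs.

    Assumes the NVIDIA device plugin runs on the node and advertises GPUs
    as the extended resource "nvidia.com/gpu", so the scheduler can place
    this pod only on a node with enough free GPUs.
    """
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested via limits; when only a limit is set,
                    # Kubernetes uses the same value as the request.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical app name and image, for illustration only.
    pod = gpu_inference_pod("shelf-monitor", "registry.example.com/shelf-monitor:1.0")
    print(json.dumps(pod, indent=2))
```

Because the same manifest works on any conformant Kubernetes cluster with the plugin installed, a store server and a data-centre node can run the identical workload definition.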

Pros

- Strong AI performance at the edge, thanks to NVIDIA GPUs – enables advanced use cases like real-time video analytics, on-site natural language processing, or complex IoT sensor fusion that CPU-only platforms might struggle with.

- Complete AI stack that includes not just hardware but also containers, models, and orchestration (Fleet Command) geared specifically for AI, reducing integration work for the user.

- Centralised management with Fleet Command: even non-GPU experts can deploy and update AI applications across distributed sites with a few clicks, and monitor health/security centrally.

- Strong partner ecosystem – adopted by many server OEMs (Dell, Lenovo, etc.) and software partners, ensuring compatibility and a variety of deployment options.

- Future-proofing for AI growth – as enterprises incorporate more AI, having an architecture in place that can handle heavier models (by swapping in newer GPUs) is a plus.

Cons

- Focused on AI workloads: if your edge needs are more general IT or lightweight (simple data filtering, basic control systems), EGX might be overkill in complexity and cost.

- Power and cooling considerations – GPU hardware can be power-hungry; deploying EGX at very remote or constrained sites requires ensuring sufficient power and environment for the hardware (sometimes a challenge for extreme edge locations).

- Vendor specificity – while it runs on standard servers, the magic is in NVIDIA’s stack, so you are aligning with NVIDIA for the long term for updates, support, and possibly needing NVIDIA’s cloud services for management.

Best for

AI-intensive industries and applications at the edge. Think of a hospital running sophisticated AI models to assist in medical imaging diagnostics in each department, or a manufacturer using computer vision on the assembly line for quality control (identifying defects in real time).

Smart city deployments like traffic monitoring or public safety analytics (where video feeds from thousands of cameras need analysis) would benefit from EGX’s GPU acceleration. Also, sectors like retail (for loss prevention and shopper behaviour analysis via cameras) and logistics (real-time package tracking and sorting using AI) are ideal candidates.

Essentially, if an organisation foresees deploying neural networks or real-time analytics algorithms outside the cloud, NVIDIA EGX provides the performance and manageability to do it effectively at scale.

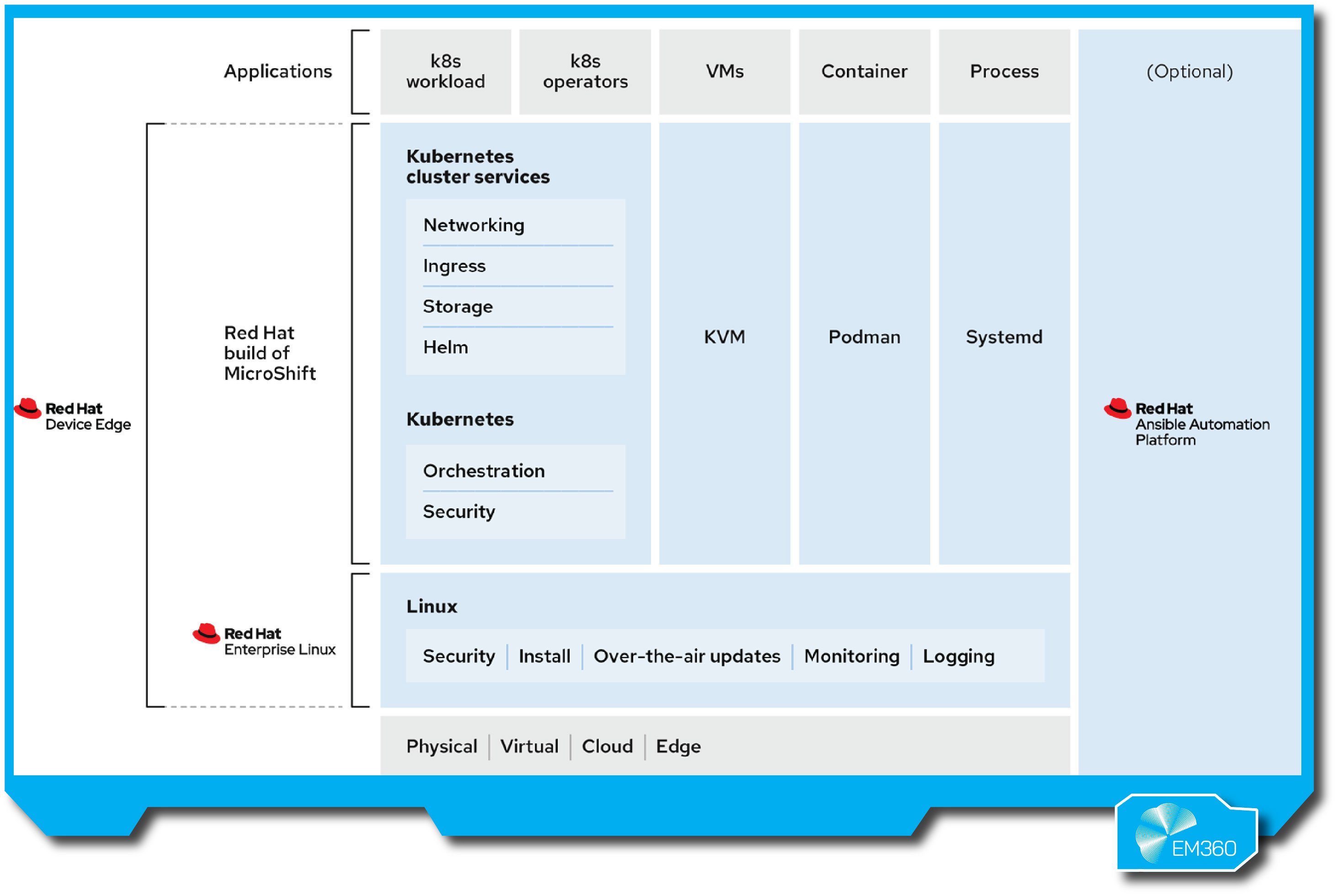

Red Hat OpenShift + Device Edge

Red Hat OpenShift is already a household name for enterprise Kubernetes, and with the rise of edge computing, Red Hat has extended its platform to cover tiny devices and remote deployments through what it calls Red Hat Device Edge. At its core, this approach combines the reliability of Red Hat Enterprise Linux (RHEL) with a lightweight Kubernetes distribution called MicroShift to run on resource-constrained hardware.

So, OpenShift – which powers cloud and data centre containers – has been trimmed down to run on something as small as an IoT gateway or an edge server with limited CPU/RAM. The goal is to maintain a consistent Kubernetes API from the cloud to the far edge, so that the same development and deployment tools can be used uniformly.

Enterprise ready features

Device Edge integrates Red Hat’s automation tooling (Ansible) for zero-touch provisioning and management of those remote nodes, and it leverages the existing OpenShift ecosystem (operators, security features, etc.) in an edge context. This platform is open-source at heart, aligning with Red Hat’s ethos, and can run on any vendor’s hardware that supports Linux.

A typical scenario might be a telco deploying OpenShift at cell tower sites (with MicroShift on small boxes) to run network functions or local services, all managed from a central OpenShift console. The open-source community aspect (with projects like MicroShift and Open Horizon in the mix) means there’s a lot of innovation and flexibility in how it can be adapted.

Security is also strong: RHEL’s built-in security and SELinux policies extend to the edge devices, and the platform supports over-the-air updates, rollbacks, and GitOps-style management to ensure even far-flung devices can be updated reliably.
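GitOps-style management boils down to a reconciliation loop. Each device compares the desired state declared in a Git repository with what it is actually running, then converges on the difference. The sketch below shows only that diffing step, using plain dictionaries that map hypothetical workload names to versions. It is an illustration of the idea, not Red Hat’s implementation. A real agent would also fetch the repository, apply the changes, and report status.

```python
from typing import Dict, List, Tuple

Action = Tuple[str, str, str]  # (verb, workload, version)


def reconcile(desired: Dict[str, str], actual: Dict[str, str]) -> List[Action]:
    """Return the actions needed to move `actual` to `desired`.

    `desired` stands in for the state declared in Git (the source of truth);
    `actual` is what the edge device reports it is currently running.
    """
    actions: List[Action] = []
    for name, version in sorted(desired.items()):
        current = actual.get(name)
        if current is None:
            actions.append(("install", name, version))
        elif current != version:
            actions.append(("upgrade", name, version))
    for name in sorted(set(actual) - set(desired)):
        # Anything running but not declared in Git is drift and gets removed.
        actions.append(("remove", name, actual[name]))
    return actions


if __name__ == "__main__":
    desired = {"telemetry-agent": "2.1", "local-cache": "1.4"}  # hypothetical
    actual = {"telemetry-agent": "2.0", "debug-shell": "0.9"}
    for action in reconcile(desired, actual):
        print(action)
```

Once the actions have been applied, calling `reconcile` again returns an empty list. This idempotence is what makes it safe to run the loop repeatedly on far-flung devices.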

Pros

- True cloud-native consistency: it brings the proven OpenShift container platform to the edge so your developers don’t need to learn a new paradigm for edge.

- MicroShift can run on small x86 or ARM devices, meaning you can put Kubernetes in places it previously couldn’t fit, from factory machines to on-vehicle systems.

- Strong focus on security and stability (RHEL at the core) – you get enterprise Linux durability, long-term support, and a huge ecosystem of certified software.

- Integrates with Red Hat Ansible Automation Platform for managing fleets of devices, enabling one-click provisioning or configuration of hundreds of edge nodes. It also supports GitOps (syncing desired state from Git repositories) for consistent configuration across sites.

- Vendor-neutral and open, so it runs on a broad range of hardware and you’re not locked into a cloud provider; you also benefit from community innovation and avoid proprietary traps.

Cons

- OpenShift’s complexity doesn’t disappear: even a slimmed-down version may be too complex for extremely simple edge scenarios, and running Kubernetes, even MicroShift, might be overkill for tiny single-purpose devices.

- Requires Linux expertise and likely a Red Hat subscription; although the software is open source, getting full value means relying on Red Hat support and its ecosystem, which carries licensing costs.

- Competing edge solutions from cloud providers might be simpler if you’re all-in on that cloud (for example, AWS IoT Greengrass may be easier than Red Hat Device Edge if you only use AWS). Red Hat’s approach pays off most when you have a multi-cloud or on-prem strategy.

Best for

Organisations with a strong open-source and hybrid cloud orientation, especially if they already use Red Hat OpenShift in some capacity. Telcos are a prime example – many 5G deployments rely on OpenShift for network functions, and Device Edge would let them extend those Kubernetes-managed functions to cell sites or customer premises.

Manufacturing companies that are standardising on containerised applications (e.g., for assembly line monitoring or automation) can use this to deploy apps on the factory floor with the same tools used in the cloud. Government or defence agencies, who often prefer open-source solutions for flexibility and auditability, might use Red Hat Device Edge for edge deployments in the field where proprietary solutions won’t do.

Also, any enterprise aiming to avoid cloud lock-in and maintain a cohesive on-prem/cloud dev environment – such as a financial institution running sensitive workloads on-prem but wanting cloud-like orchestration – could leverage OpenShift + Device Edge to cover both core and edge needs uniformly.

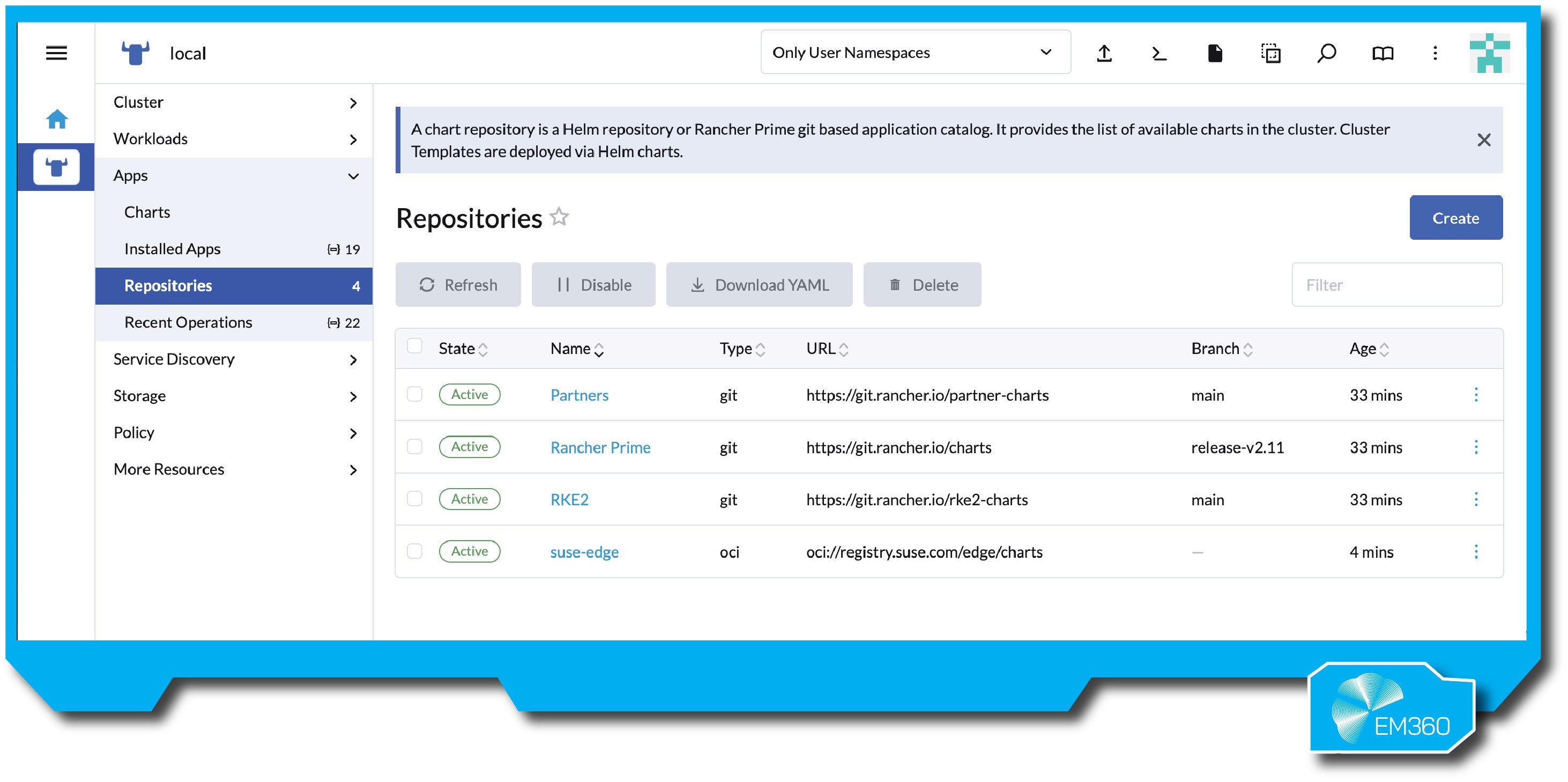

SUSE Edge Suite

SUSE, known for SUSE Linux Enterprise and the popular Rancher Kubernetes management platform, offers SUSE Edge (sometimes referred to as SUSE Edge Suite) as a cloud-native edge solution emphasising openness and automation.

A cornerstone of SUSE’s edge approach is K3s, the lightweight Kubernetes distribution originally developed by Rancher, which suits the edge well because of its small footprint and ease of use. SUSE Edge Suite combines K3s for running containers on small devices with Rancher for central management of all clusters, plus additional tooling for zero-touch provisioning and GitOps.

Enterprise ready features

The philosophy is “deploy anywhere, manage from anywhere” – you can stand up a Kubernetes cluster on a remote edge device (even in an IoT scenario) with minimal manual steps, and have it automatically enroll into a management plane. SUSE has also invested in technologies for automated updates and rollback, so you can update thousands of edge nodes reliably without sending engineers on-site.
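Updating thousands of nodes without site visits usually relies on a staged (canary) rollout. The idea is to update a small wave first, check its health, and continue only if that check passes. The sketch below illustrates the pattern under simple assumptions. `apply_update`, `is_healthy` and `rollback` are hypothetical callbacks standing in for whatever the management plane actually does. They are not part of SUSE’s or Rancher’s API.

```python
from typing import Callable, Iterator, List, Sequence


def waves(nodes: Sequence[str], canary: int, batch: int) -> Iterator[List[str]]:
    """Yield a small canary wave first, then fixed-size batches of the rest."""
    if canary < 1 or batch < 1:
        raise ValueError("canary and batch sizes must be positive")
    yield list(nodes[:canary])
    for start in range(canary, len(nodes), batch):
        yield list(nodes[start:start + batch])


def staged_rollout(
    nodes: Sequence[str],
    canary: int,
    batch: int,
    apply_update: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    rollback: Callable[[str], None],
) -> bool:
    """Update nodes wave by wave. If any node in a wave is unhealthy, roll back
    every node updated so far and stop. Returns True if the whole fleet updated."""
    updated: List[str] = []
    for wave in waves(nodes, canary, batch):
        for node in wave:
            apply_update(node)
            updated.append(node)
        if not all(is_healthy(node) for node in wave):
            for node in reversed(updated):  # undo the newest changes first
                rollback(node)
            return False
    return True


if __name__ == "__main__":
    fleet = [f"store-{n:03d}" for n in range(10)]  # hypothetical node names
    versions = {node: "1.0" for node in fleet}
    failing = {"store-004"}  # pretend this node fails its post-update health check

    ok = staged_rollout(
        fleet, canary=1, batch=3,
        apply_update=lambda n: versions.__setitem__(n, "1.1"),
        is_healthy=lambda n: n not in failing,
        rollback=lambda n: versions.__setitem__(n, "1.0"),
    )
    print(ok, sorted(set(versions.values())))
```

In this example, `store-004` fails in the third wave. The rollout stops, the seven nodes touched so far return to version 1.0, and stores 007 to 009 are never updated at all.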

Security and reliability are addressed through an “immutable infrastructure” concept – for instance, SUSE’s adapted Linux OS for edge can be read-only and remotely updated in a transactional way to prevent configuration drift or tampering. The suite is modular: you might use SUSE Manager for some Linux device management, Rancher for Kubernetes, etc., depending on needs.

Crucially, SUSE Edge is built on open source (Linux, K3s, etc.), which appeals to organisations wanting to avoid proprietary constraints. It aligns well with multi-cloud and hybrid strategies, since Rancher can manage not just edge K3s clusters but also cloud-based clusters (EKS, AKS, etc.), giving a single pane of glass for all Kubernetes everywhere.

Pros

- Lightweight Kubernetes (K3s) means you can run container orchestration in very resource-constrained environments – ideal for remote IoT devices or branch offices with a single server.

- Supports fully remote provisioning of clusters through an emphasis on zero-touch automation. For example, plug in an edge box and it can auto-configure itself via pre-loaded instructions – great for scaling to many sites.

- Strong central management with Rancher: one dashboard to manage all your edge clusters, plus apply consistent security policies, access control, and monitor health across the fleet.

- Completely open-source based, reducing vendor lock-in. You have the flexibility to customise or extend components, and a community of developers contributes to these projects (K3s, etc.).

- Good security and reliability practices: “immutable” infrastructure and Kubernetes ensure each deployment is consistent; plus SUSE provides enterprise support for the whole stack if needed.

Cons

- Organisations without Kubernetes/container knowledge may face a learning curve to containerise their edge workloads.

- SUSE’s ecosystem, while robust, is not as large as, say, AWS’s or Azure’s in terms of third-party integrations. For functions such as device management, you may need to rely more on SUSE and open-source tools than on big-vendor services.

- Multi-component deployment – to fully utilise SUSE Edge Suite, you might end up deploying SUSE Linux Enterprise, Rancher, K3s, maybe Longhorn for storage, etc. It’s powerful but could be seen as a lot of pieces (though Rancher helps unify them).

Best for

Enterprises that prioritise openness and flexibility in their edge strategy, especially those already familiar with Kubernetes. For example, a multi-site enterprise with hundreds of retail outlets could use SUSE Edge to deploy a small K3s cluster in each store for running point-of-sale and local analytics apps, all overseen by a central IT team via Rancher.

Industrial operations (mining, oil & gas, manufacturing) that want to avoid being tied to a single cloud vendor and need a solution that can run in air-gapped or offline scenarios appreciate SUSE’s approach. Also, telcos and service providers often use SUSE/Rancher as an alternative to Red Hat or VMware for orchestrating edge services on commodity hardware.

In short, it’s a fit for organisations that want full control of their edge environment, leveraging open-source innovation and avoiding proprietary limitations, while still getting the benefits of automation and at-scale management.

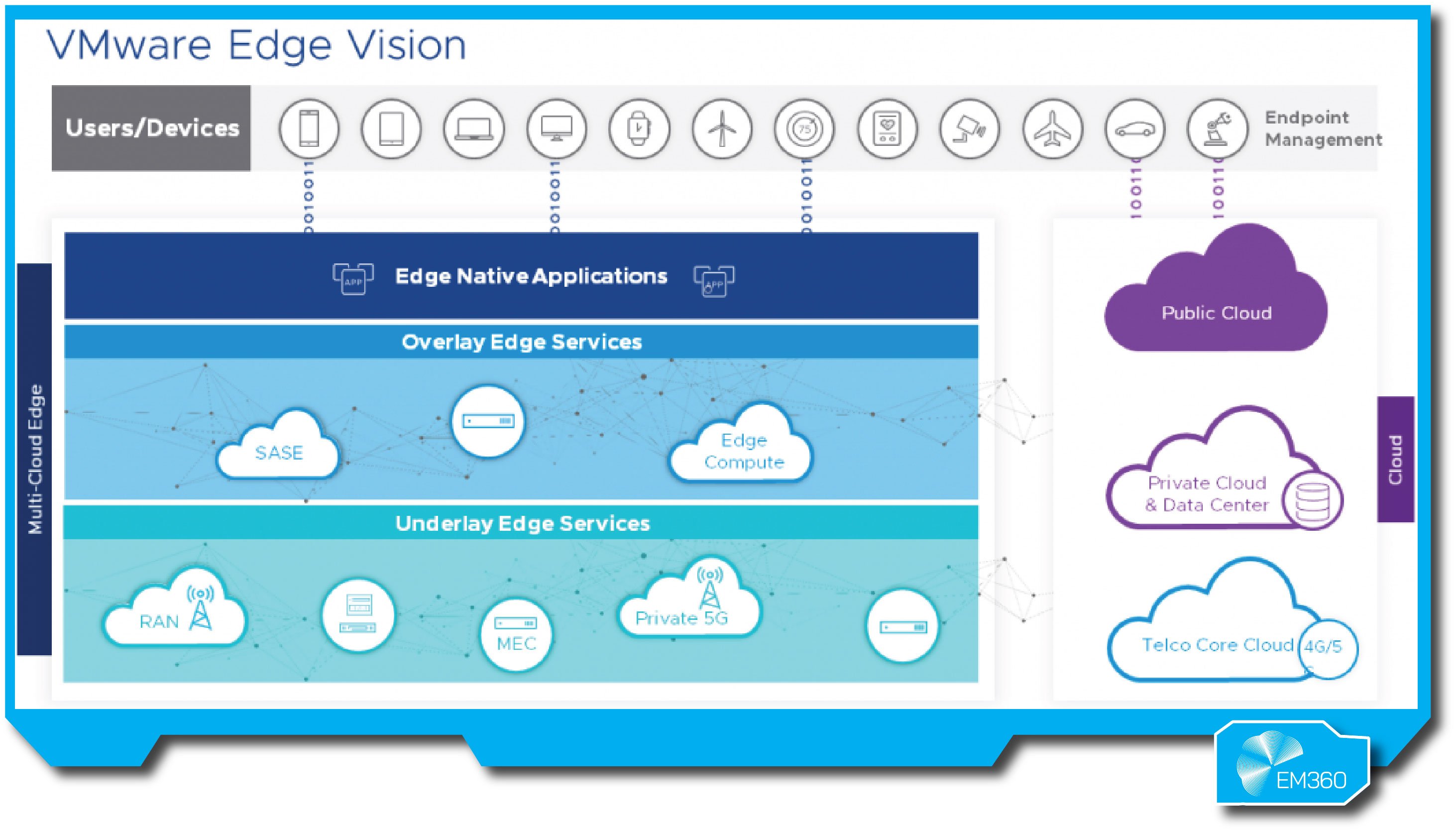

VMware Edge Compute Stack / VCF Edge

VMware, a mainstay of enterprise data centers, has extended its offerings to support edge computing through what’s collectively referred to as VMware Edge Compute Stack (often part of VMware Cloud Foundation for Edge). This solution is about running VMware’s infrastructure software (vSphere for VMs, vSAN for storage, and Tanzu Kubernetes Grid for containers) in a slimmed, edge-optimised package.

Essentially, VMware took the components many IT departments use in core data centers and adapted them for smaller clusters that might live in a retail store back room or on a factory floor.

Enterprise ready features

The Edge Compute Stack provides a consistent virtualisation and container platform – so an edge site can run virtual machines for legacy applications and Kubernetes-orchestrated containers for modern apps, side by side, managed through the familiar vCenter interface. It’s often delivered as a bundle on certified hardware (there are even tiny rugged servers from Dell or Lenovo designed to run this stack).

Key features include remote life-cycle management, network optimisation for unreliable WAN links, and security hardening for physically unsecured locations (VMware offers features such as SD-WAN integration and zero-trust security that pair with the stack).
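A common pattern for coping with unreliable WAN links is store-and-forward. The edge site keeps working while the uplink is down, queues outbound data locally, and drains the queue once connectivity returns. The sketch below is a generic illustration, not VMware’s mechanism. `send` is a hypothetical callback that returns False when the link is unavailable. The bounded queue drops the oldest records if an outage outlasts local capacity.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer outbound records locally and forward them in order when the WAN is up."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 10_000) -> None:
        self._send = send
        # A deque with maxlen discards the oldest item when a new one is appended while full.
        self._queue: Deque[dict] = deque(maxlen=max_items)

    def publish(self, record: dict) -> None:
        self._queue.append(record)
        self.flush()

    def flush(self) -> int:
        """Forward queued records oldest-first, stopping at the first failure.
        Returns how many records were sent."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # link down: keep the record and retry on the next flush
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)


if __name__ == "__main__":
    link_up = False
    delivered = []

    def send(record: dict) -> bool:
        if link_up:
            delivered.append(record)
        return link_up

    buffer = StoreAndForward(send, max_items=3)
    for seq in range(5):
        buffer.publish({"seq": seq})  # WAN is down, so everything queues
    print(len(buffer))  # 3: the two oldest records were dropped
    link_up = True
    print(buffer.flush(), [r["seq"] for r in delivered])
```

Choosing to drop the oldest data is only one policy. Sites where every record matters might spill to local disk instead.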

Also, because it’s part of VMware Cloud Foundation (VCF), it integrates with VMware’s cloud management – meaning if you’re running VMware in your core and maybe VMware Cloud on AWS, you can now extend that operational model to the edge too.

Pros

- Ability to run existing virtualised workloads alongside cloud-native apps on one infrastructure, which is great for gradual migrations and supporting legacy systems in edge locations.

- Leverages vSphere, vSAN, NSX, and Tanzu, so VMware admins can manage edge clusters just like any other vCenter-managed environment, reducing retraining and the risk of errors.

- vSAN provides reliable storage even on two-node clusters (often used in edge) with high availability; NSX can secure and network these edge sites with policies.

- Validated edge stacks on numerous partner devices and integration with Dell, HPE, Lenovo etc., means you can get pre-tested solutions (like Dell’s Tiny Edge for VMware) for faster deployment.

- Supports fleet management, so thousands of edge sites can be managed through VMware’s central tools, and even applies GitOps-style configuration via Tanzu Mission Control for Kubernetes apps at those sites.
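Two-node clusters such as the vSAN configurations mentioned above typically rely on a third vote, a witness host, to avoid split-brain. Under that arrangement, a node should only keep serving data if it can still see a strict majority of votes. The sketch below shows the simple-majority rule in general terms. It simplifies how vSAN and similar systems actually track votes, and the node names are hypothetical.

```python
from typing import Dict, Set


def has_quorum(votes: Dict[str, int], reachable: Set[str]) -> bool:
    """True if the members this node can reach hold a strict majority of all votes."""
    total = sum(votes.values())
    held = sum(v for member, v in votes.items() if member in reachable)
    return 2 * held > total


if __name__ == "__main__":
    # Two data nodes plus a lightweight witness, one vote each (simplified).
    votes = {"node-a": 1, "node-b": 1, "witness": 1}
    # The inter-node link fails; node-a can still reach the witness, node-b cannot.
    print(has_quorum(votes, {"node-a", "witness"}))  # True: node-a keeps serving
    print(has_quorum(votes, {"node-b"}))             # False: node-b stands down
```

Without the witness, each isolated node would hold exactly half the votes, so neither could safely conclude that it was the survivor. The witness also explains why a two-node edge cluster can stay highly available without a third full server.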

Cons

- Even slimmed down, running vSphere + Kubernetes might be heavier than some purpose-built edge solutions (especially on sites with very minimal hardware).

- Licensing model for multiple sites can get expensive, which might be a concern if you have hundreds of edge locations.

- Adopting this stack purely for edge may not make sense for organisations without existing VMware expertise, since learning and managing VMware could be as complex as the problem it’s solving.

Best for

Enterprises with a strong VMware footprint that want to extend their infrastructure to the edge without adopting an entirely new paradigm. For example, big retail chains or banking networks that have been running applications on VMware in central data centers can push out a small 2- or 3-node vSphere cluster to each branch or store to localise transactions and services, all while managing them centrally.

Also, healthcare networks – think hospitals or clinics that need local servers for electronic health records or imaging – can benefit by using VMware Edge, keeping consistent with their main hospital data center setup.

Manufacturing or energy sites that already use VMware for control systems in central locations might find it convenient to replicate that at remote sites for ease of management.

Key Trends Shaping the Future of Edge and Near-Data Processing

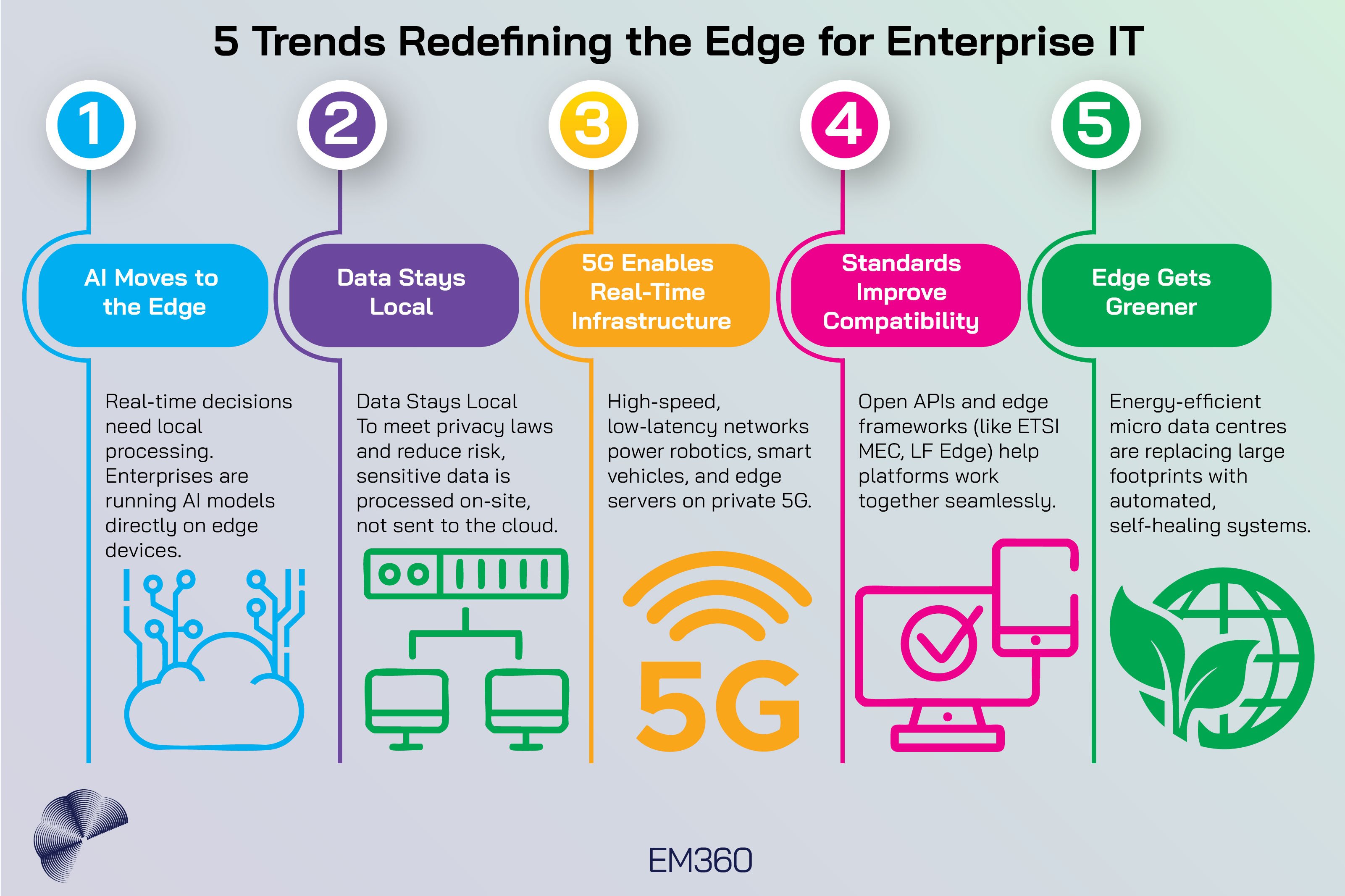

As edge and near-data processing evolve, several shifts are shaping what capabilities leaders need to plan for next. These trends go beyond product features: they signal a broader redefinition of how, where, and why data is processed.

AI at the edge is becoming ubiquitous

One major trend is the proliferation of edge AI – companies are increasingly deploying machine learning models on edge devices to enable everything from real-time video analytics to on-the-fly anomaly detection in manufacturing.

This means edge platforms are evolving to better support GPUs and NPUs, and frameworks that make it easier to update AI models over the air. The ability to run AI inference at the edge unlocks new use cases (like AR/VR and autonomous machines) that simply wouldn’t be feasible with cloud-only processing due to latency.
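Updating AI models over the air safely usually means verifying the download before switching to it. The sketch below shows one common approach. It assumes the expected SHA-256 digest arrives from a trusted manifest, and how that manifest is delivered is out of scope. The file names are hypothetical. A checksum catches corruption, but proving authenticity would additionally require a signature check.

```python
import hashlib
import os
import tempfile


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def install_model(downloaded: str, target: str, expected_sha256: str) -> bool:
    """Promote a downloaded model file to the live path only if its digest matches."""
    if sha256_of(downloaded) != expected_sha256.lower():
        os.remove(downloaded)  # discard a corrupted or tampered download
        return False
    # os.replace is an atomic rename on POSIX when both paths share a filesystem,
    # so anything opening `target` gets either the old model or the new one.
    os.replace(downloaded, target)
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        staged = os.path.join(workdir, "model.bin.part")  # hypothetical file names
        live = os.path.join(workdir, "model.bin")
        payload = b"example model weights"
        with open(staged, "wb") as f:
            f.write(payload)
        expected = hashlib.sha256(payload).hexdigest()  # would come from a trusted manifest
        print(install_model(staged, live, expected), os.path.exists(live))
```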

Data locality and sovereignty take centre stage

With data protection laws tightening worldwide and consumers demanding privacy, organisations are using edge computing to enforce data governance policies. Sensitive information can be processed and aggregated locally, with only non-sensitive insights sent to the cloud.

This local-first approach helps comply with regulations (think GDPR or data residency laws in finance) and also reduces the risk of large-scale breaches. We’ll continue to see architectures where data stays within a country or facility, embodying the mantra that data gravity keeps heavy data close to home.
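In practice, the local-first pattern is often just an aggregation step at the edge. Raw records, which may contain personal or regulated fields, are summarised on site, and only the summary travels upstream. The sketch below assumes hypothetical bedside-monitor readings with `patient_id`, `ward` and `heart_rate` fields. It also applies a simple small-group suppression rule, with an illustrative threshold of three. This is one possible policy, not a regulatory standard.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List


def summarise_locally(readings: List[dict], min_group: int = 3) -> List[dict]:
    """Aggregate raw readings per ward so only non-identifying summaries leave the site.

    Patient identifiers are used locally for counting but never copied into the
    output. Wards with fewer than `min_group` patients are withheld, because
    aggregates over very small groups can still reveal individuals.
    """
    by_ward: Dict[str, List[dict]] = defaultdict(list)
    for reading in readings:
        by_ward[reading["ward"]].append(reading)

    summary = []
    for ward, rows in sorted(by_ward.items()):
        patients = {row["patient_id"] for row in rows}
        if len(patients) < min_group:
            continue
        summary.append({
            "ward": ward,
            "patients": len(patients),
            "mean_heart_rate": round(mean(row["heart_rate"] for row in rows), 1),
        })
    return summary


if __name__ == "__main__":
    raw = [  # hypothetical readings; these never leave the site
        {"patient_id": "p1", "ward": "cardiology", "heart_rate": 72},
        {"patient_id": "p2", "ward": "cardiology", "heart_rate": 88},
        {"patient_id": "p3", "ward": "cardiology", "heart_rate": 95},
        {"patient_id": "p4", "ward": "maternity", "heart_rate": 80},
    ]
    print(summarise_locally(raw))  # only this summary would be sent to the cloud
```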

5G and private networks are powering edge expansion

The rollout of 5G is a huge catalyst for edge computing, especially in telecom and IoT scenarios. 5G connectivity provides the high-bandwidth, low-latency links needed to connect distributed edge nodes (or to connect end devices to nearby edge servers).

Enterprises are also investing in private 5G/LTE networks on their campuses, which pair beautifully with edge computing – a private network gives reliable local connectivity, and edge servers on that network can process the data without it ever leaving the site.

This convergence of edge and 5G means ultra-responsive applications (like mobile robotics or smart vehicles) will become far more common.

Industry standards and ecosystems are maturing

To avoid the edge becoming a collection of siloed point solutions, groups like the ETSI MEC (Multi-access Edge Computing) initiative and the Linux Foundation’s LF Edge projects are driving standards and interoperability. These efforts are producing reference architectures and open APIs that help different vendors’ edge platforms work together and let telecom operators and cloud providers interoperate.

The result should be easier integration of edge components and a richer ecosystem of applications that can run on any conformant edge infrastructure, much as cloud computing converged on widely adopted APIs such as S3 and Kubernetes.

Sustainability and resilience through micro data centres

As the number of edge deployments grows, there’s a parallel push for them to be energy-efficient and self-managing. Many companies are designing micro data centres – essentially small, self-contained stacks of compute and cooling – that can run with minimal onsite intervention.

These often come with features like automated failover (one node can take over if another fails), remote telemetry for predictive maintenance, and energy-optimised hardware that can run in harsh environments without guzzling power. This trend is about making edge computing greener and more reliable, ensuring that a swarm of edge sites doesn’t become an undue burden in terms of carbon footprint or manpower.

Expect innovations like solar or wind-powered edge units in remote areas, advanced cooling techniques for on-shop-floor servers, and “lights out” operation where an edge site can run unattended for long periods, self-healing when issues arise.
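Automated failover of this kind typically rests on heartbeats. Each node reports in periodically, and if the active node goes quiet for longer than a timeout, a standby takes over. The sketch below shows only that decision logic, using hypothetical node names and an injectable clock so it can be tested without waiting. Production systems also add fencing, so that a slow but still-running node cannot keep acting as primary.

```python
import time
from typing import Callable, Dict, List, Optional


class FailoverMonitor:
    """Track heartbeats and pick an active node, preferring nodes earlier in the list."""

    def __init__(self, nodes: List[str], timeout_s: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.nodes = list(nodes)  # priority order
        self.timeout_s = timeout_s
        self.clock = clock
        now = clock()
        self.last_seen: Dict[str, float] = {n: now for n in self.nodes}
        self.active: Optional[str] = self.nodes[0] if self.nodes else None

    def heartbeat(self, node: str) -> None:
        self.last_seen[node] = self.clock()

    def alive(self, node: str) -> bool:
        return self.clock() - self.last_seen[node] <= self.timeout_s

    def check(self) -> Optional[str]:
        """Keep the current active node while it is alive; otherwise promote the
        first live node. There is no automatic failback, which avoids flapping."""
        if self.active is not None and self.alive(self.active):
            return self.active
        self.active = next((n for n in self.nodes if self.alive(n)), None)
        return self.active


if __name__ == "__main__":
    now = [0.0]  # simulated clock, in seconds
    monitor = FailoverMonitor(["edge-1", "edge-2"], timeout_s=5.0, clock=lambda: now[0])
    now[0] = 3.0
    monitor.heartbeat("edge-1")
    monitor.heartbeat("edge-2")
    print(monitor.check())  # edge-1 remains active
    now[0] = 10.0
    monitor.heartbeat("edge-2")  # edge-1 has now been silent for 7 s
    print(monitor.check())  # edge-2 takes over
```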

Final Thoughts: Data Gravity Demands Local Intelligence

Enterprise technology is coming full circle – from centralised mainframes, to distributed PCs, to cloud, and now back out to the edge. The driving force behind this latest swing is data gravity: as data volumes explode and the value of instant insight rises, it simply makes sense to process information where it is generated.

Forward-looking infrastructure leaders recognise that a winning infrastructure strategy balances the strengths of the cloud with the immediacy of near-data processing. It’s not a question of cloud or edge, but rather cloud and edge, working in harmony.

By intelligently partitioning workloads – keeping critical, low-latency tasks local and pushing aggregate or less time-sensitive tasks to the central cloud – enterprises achieve new levels of performance, resilience, and customer experience.
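A partitioning policy like this can be expressed as a simple placement rule. The sketch below is one illustrative heuristic, not a standard algorithm. A task runs at the edge if its data must stay on site, or if a cloud round trip would exceed its latency budget. Everything else goes to the cloud. The `Task` fields and the 80 ms round-trip figure are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    latency_budget_ms: float    # how quickly a result is needed
    data_must_stay_local: bool  # e.g. residency or privacy rules apply
    edge_capable: bool = True   # fits the site's compute (GPU, memory, etc.)


def place(task: Task, cloud_rtt_ms: float) -> str:
    """Return "edge" or "cloud" for a task, given the current cloud round-trip time."""
    if task.data_must_stay_local:
        if not task.edge_capable:
            raise ValueError(f"{task.name}: data must stay local but the edge cannot run it")
        return "edge"
    if task.latency_budget_ms < cloud_rtt_ms and task.edge_capable:
        return "edge"
    # Either the cloud meets the budget, or the edge cannot run the task anyway.
    return "cloud"


if __name__ == "__main__":
    rtt = 80.0  # assumed round trip to the nearest cloud region, in ms
    tasks = [
        Task("defect-detection", latency_budget_ms=20, data_must_stay_local=False),
        Task("patient-record-summary", latency_budget_ms=5_000, data_must_stay_local=True),
        Task("quarterly-demand-forecast", latency_budget_ms=3_600_000, data_must_stay_local=False),
    ]
    for task in tasks:
        print(task.name, "->", place(task, rtt))
```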

The companies that master this balance are poised to lead in the coming decade. In an era of relentless digital transformation, success will belong to those who can glean insights from data instantly, act autonomously when needed, and still leverage global compute power for big-picture analytics.

In practical terms, that might mean smarter factories that adjust on the fly, retailers that personalise in real time, and cities that optimise traffic moment by moment. The common thread is local intelligence as an extension of cloud intelligence.

Building out edge capabilities is no longer just an IT project – it’s a business imperative, tying technology investment directly to innovation and competitive advantage.

At EM360Tech, we continue to analyse how architectures like these are reshaping enterprise infrastructure and data ecosystems, because tomorrow’s advantage will belong to those who understand where intelligence truly lives.
