When a major cloud region slows or a provider suffers a control-plane incident, the downstream impact is felt across product, revenue, and reputation. Multi-cloud strategies promise resilience and freedom of choice, but they also multiply the moving parts that leaders must govern.

Policies drift. Teams create their own patterns. Environments diverge. What looks like flexibility on a slide turns into unpredictability in production.

Platform engineering addresses that unpredictability. By turning cloud operations into a product, platform teams provide standardised, automated, and governed paths for building and running software. They make the safer choice the easiest choice. In a multi-cloud world, that design principle reduces risk as much as it improves speed.

It is how resilience stops being a poster on the wall and starts being the way work gets done. The question for leaders is not whether platform engineering matters. It is how to make it the backbone of multi-cloud risk reduction without adding bureaucracy or friction for developers. That is the focus of this article.

Understanding The Risk Landscape Of Multi-Cloud Environments

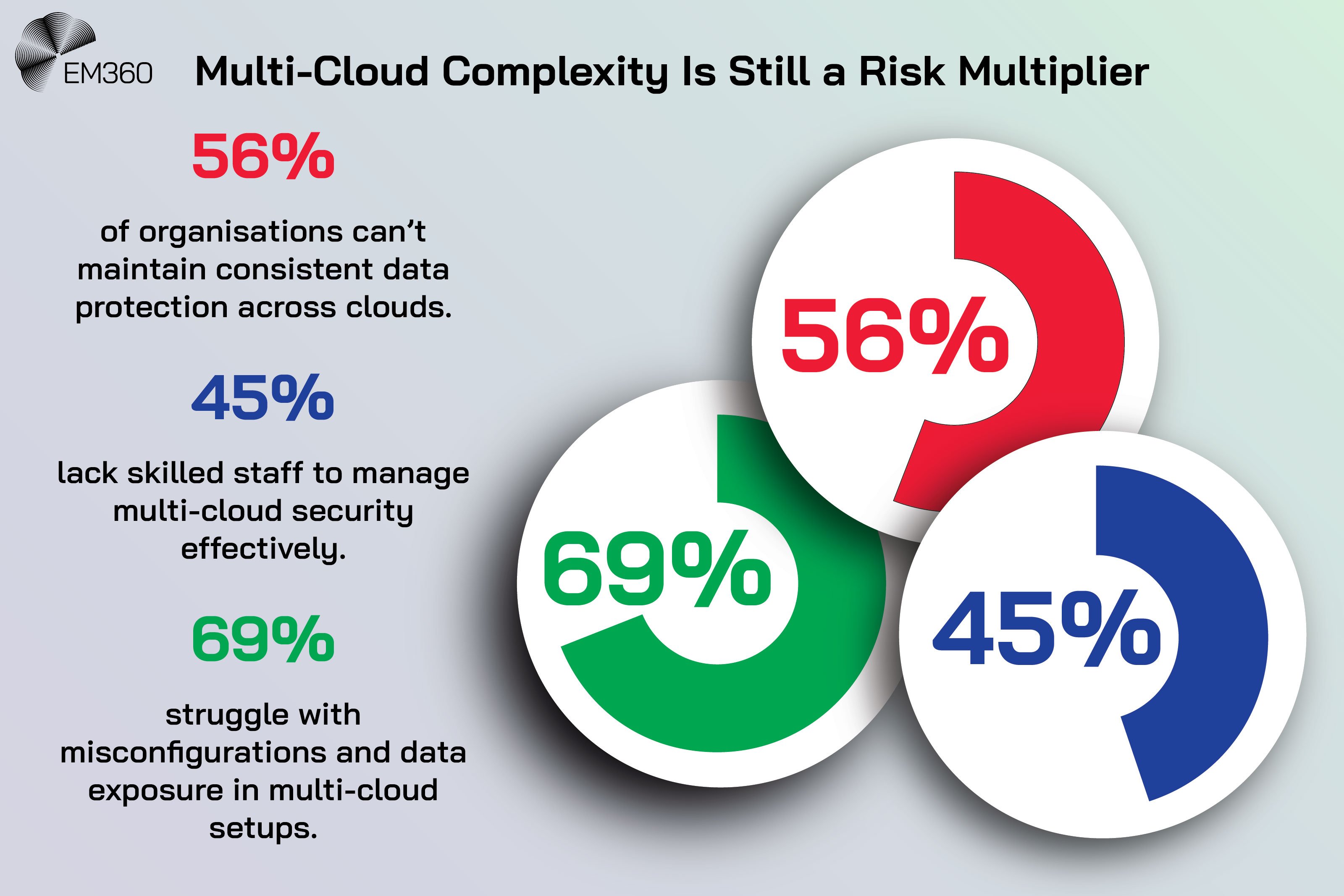

Enterprises have embraced multi-cloud to balance performance, cost, and sovereignty. Yet as portfolios expand across providers, the risk profile changes just as quickly. Each new environment adds its own tooling, governance model, and operational language, turning control into coordination.

Before exploring how platform engineering reduces that complexity, it helps to see what today’s multi-cloud reality truly looks like.

What multi-cloud really looks like today

Modern estates rarely sit on one cloud. Enterprises combine managed services from multiple hyperscalers, run Kubernetes across different regions, and still carry a portfolio of on-premises systems. There are duplicated services with different semantics, drift between staging and production, and multiple identity and access models to reconcile.

Procurement decisions from prior years linger inside critical paths. Partners bring their own hosting. Mergers and acquisitions add more of everything. The picture is not a tidy diagram. It is a living system with competing defaults, parallel pipelines, and uneven controls.

It needs a layer that abstracts complexity, enforces consistency, and provides clear interfaces to teams. That layer cannot be a PowerPoint. It has to be a platform that people actually use.

Why complexity equals risk

As cloud footprints expand, so do failure modes. Risk is not only attackers and outages. It is slow creep. It is the extra key someone adds because a deadline looms. It is the one-off pipeline built for a critical release that becomes the permanent path. It is the configuration drift between environments that makes a fix work in staging and fail in production.

It is the lack of a single view of ownership, cost, and service reliability. Each small variance increases the surface area for misconfiguration, elevates compliance risk, and adds time to every recovery.

The result is familiar. Teams are fast locally and slow globally. Security creates policies that live in documents rather than systems. Operations spend more time stitching together tooling than improving outcomes. Leaders cannot see if the organisation is getting safer or riskier month by month.

The evolving threat and compliance pressure

Threat actors move quickly and target the weakest link in a distributed chain. Vulnerabilities can be exploited shortly after disclosure, so the window to patch can be a matter of hours or days rather than weeks. In parallel, regulations keep expanding. Data sovereignty rules differ across regions. Sector guidance expects evidence, not claims.

Auditors want to see the audit trail that links a change back to policy and forward to production.

Multi-cloud magnifies these dynamics. There are more API surfaces, more identities, and more places for secrets to live. There is also less shared context across teams about what “good” looks like. Without a single platform providing visibility and observability, leaders are managing by anecdote rather than evidence.

What Platform Engineering Really Means

Platform engineering has become one of the most discussed transformations in modern infrastructure, yet its purpose is often misunderstood. Many leaders still associate it with tooling or DevOps rebranding, when in reality it represents a structural shift in how enterprises build and operate technology at scale.

To understand its impact on risk reduction, we first need to define what platform engineering actually is.

Defining platform engineering beyond DevOps

DevOps asks teams to own what they build. Platform engineering gives them paved roads to do it safely. Where DevOps focuses on culture and collaboration, platform engineering focuses on productising the environment that teams use each day.

The output is not a wiki page. It is a set of golden paths and self-service capabilities that are versioned, supported, and measured like any other product. A strong platform exposes clear interfaces for compute, data, networking, and deployment. It codifies policies, supplies guardrails, and removes toil. It makes the simplest path the most compliant path.

It is not a centralised command-and-control function. It is a service that teams choose because it is better than going it alone.

The anatomy of a platform team

Effective platform teams bring together product thinking, engineering depth, and operational discipline. They are staffed for outcomes, not tools. A typical structure includes:

- A platform product manager who owns vision, roadmap, and stakeholder outcomes.

- Engineers focused on the platform core, including Infrastructure as Code (IaC), build and release automation, and cloud service integration.

- Security engineers who partner on policy as code, secrets management, and identity baselines.

- Reliability specialists who define SLOs and incident workflows alongside application teams.

- Developer experience leads who ensure that the platform is usable, documented, and supported.

This blend reflects the work. The platform must reduce cognitive load for developers, satisfy security, and deliver measurable reliability. It does this by providing opinionated defaults, discovery through a service catalogue, and a culture of continuous feedback.

Reducing Risk Through Platform Engineering

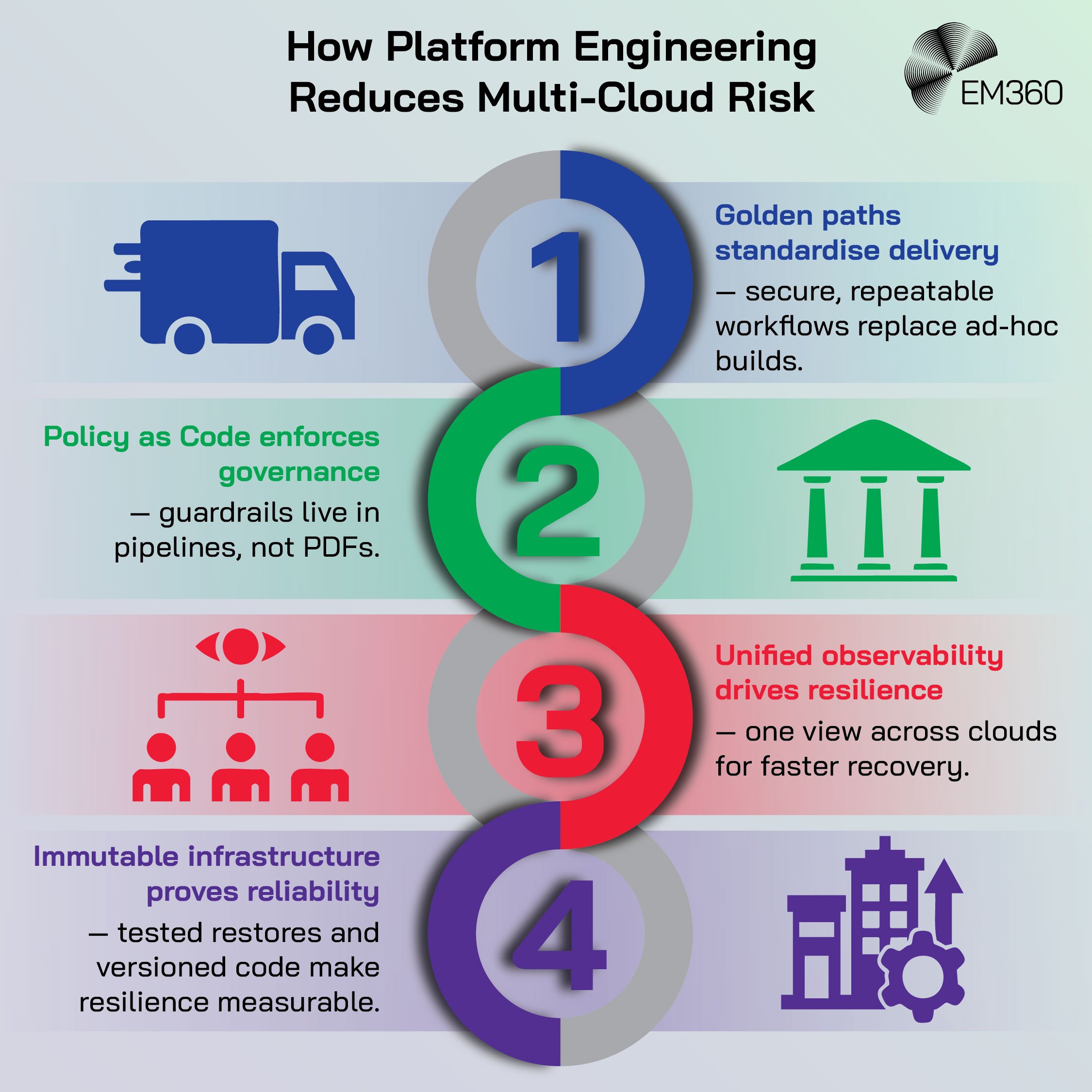

Once the role of platform engineering is clear, its value in reducing multi-cloud risk becomes easier to see. The core principle is consistency: creating predictable, automated environments where security, compliance, and reliability are built in rather than bolted on. That starts with how services are delivered and the paths teams use to build them.

Standardisation through golden paths and service catalogues

Every organisation has multiple ways to deploy, connect, and monitor services. Golden paths transform that sprawl into a small number of supported, secure patterns. They pre-wire CI/CD, identity, network baselines, secret handling, and observability so that each team does not have to reinvent them.

They also encode sensible constraints so that risk-heavy choices are surfaced for approval rather than silently adopted. The service catalogue complements these paths by making capabilities discoverable and self-serve. Teams can request a database with the right backup policy, create a service with approved images, or spin up a test environment with production-like guardrails.

Requests generate records. Records become metrics. Metrics reveal where policy is working and where shortcuts appear. This is how standardisation moves from intention to behaviour.
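The chain from requests to records to metrics can be sketched in a few lines of Python. This is a minimal illustration, not a real catalogue API: the `CatalogueRequest` record, its fields, and the team and capability names are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogueRequest:
    """One self-service request, as a hypothetical catalogue might record it."""
    team: str
    capability: str      # e.g. "postgres-db" (illustrative name)
    golden_path: bool    # True if the request used a supported pattern


def adoption_by_team(requests):
    """Return {team: fraction of that team's requests on a golden path}."""
    totals = Counter(r.team for r in requests)
    on_path = Counter(r.team for r in requests if r.golden_path)
    return {team: on_path[team] / totals[team] for team in totals}


# Illustrative records only; real data would come from the catalogue's log.
requests = [
    CatalogueRequest("payments", "postgres-db", True),
    CatalogueRequest("payments", "test-env", True),
    CatalogueRequest("search", "custom-vm", False),
    CatalogueRequest("search", "service", True),
]

adoption = adoption_by_team(requests)
print(adoption)  # {'payments': 1.0, 'search': 0.5}
```

A low fraction for a team is a prompt for a conversation about what the golden path is missing, not proof of bad behaviour.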

Policy as code and governance automation

Policies that live in PDFs do not prevent incidents. Policies that live in code can. Policy as code (PaC) does three things. It embeds guardrails in the same pipelines teams already use. It provides clear feedback at the point of change. It creates an audit trail that shows who changed what, when, and why.

Examples include:

- Enforcing identity requirements at build and deploy time.

- Blocking public storage by default unless a managed exception exists.

- Requiring signed images and verified provenance before deployment.

- Preventing network policies that bypass segmentation baselines.

- Mapping controls to regulations so that compliance evidence is generated as a by-product of normal work.

This is governance automation. It turns policy from a conversation into a system. It also accelerates delivery because teams can see failures early and fix them quickly, rather than waiting for a distant change advisory board review to identify the issue.
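Two of the examples above, blocking public storage without a managed exception and requiring signed images, can be expressed as small rule functions that run in a pipeline. The sketch below is written in plain Python rather than a dedicated policy engine, and the resource shape (`type`, `name`, `public`, `image_signed`), rule names, and exception list are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Violation:
    rule: str
    resource: str
    message: str


def check_public_storage(resource, exceptions):
    """Block public buckets unless a managed exception is on record."""
    if (resource.get("type") == "bucket"
            and resource.get("public", False)
            and resource["name"] not in exceptions):
        return Violation("no-public-storage", resource["name"],
                         "public access needs a managed exception")
    return None


def check_signed_image(resource, exceptions):
    """Require a signed image on every deployment."""
    if resource.get("type") == "deployment" and not resource.get("image_signed", False):
        return Violation("signed-images-only", resource["name"],
                         "image is not signed")
    return None


RULES = [check_public_storage, check_signed_image]


def evaluate(resources, exceptions=frozenset()):
    """Run every rule against every resource and collect the violations."""
    violations = []
    for resource in resources:
        for rule in RULES:
            violation = rule(resource, exceptions)
            if violation is not None:
                violations.append(violation)
    return violations


# A hypothetical change set, as a pipeline step might receive it.
change = [
    {"type": "bucket", "name": "invoices", "public": True},
    {"type": "bucket", "name": "public-docs", "public": True},
    {"type": "deployment", "name": "checkout", "image_signed": False},
]
violations = evaluate(change, exceptions={"public-docs"})
for v in violations:
    print(f"[{v.rule}] {v.resource}: {v.message}")
```

A pipeline would fail the build when `violations` is non-empty, and the printed lines double as the feedback developers see at the point of change.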

Observability and proactive resilience

You cannot manage what you cannot see. A platform that spans clouds must provide a unified lens across logs, metrics, traces, and events. That lens is used to define service-level objectives that mean something to customers and to drive incident response that is fast and repeatable.

The platform’s role is practical. It standardises telemetry libraries and exporters. It bakes tracing into the golden paths. It gives teams pre-built dashboards and alert rules that match the way services are deployed. It shortens mean time to recovery (MTTR) by helping responders find the point of failure quickly, not by paging more people.

With a consistent data layer, leaders can see patterns that justify investment. They can quantify the services that absorb change well and those that do not. They can measure the effect of new guardrails on failure rates. They can identify noisy alerts that hide real incidents. This is what proactive resilience looks like at scale.
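One common way to make a service-level objective actionable is an error budget: the share of allowed failures that has not yet been spent. The sketch below assumes a request-based availability SLO; the function name and the figures are illustrative.

```python
def error_budget_remaining(slo_target, total_requests, failed_requests):
    """Fraction of the error budget still unspent (negative means overspent).

    slo_target is the success ratio promised, e.g. 0.999 for "three nines".
    """
    allowed_failures = (1 - slo_target) * total_requests
    if allowed_failures == 0:
        # No failures are permitted (100% target) or there was no traffic.
        return 1.0 if failed_requests == 0 else 0.0
    return 1 - failed_requests / allowed_failures


# Illustrative month: a 99.9% target over one million requests allows about
# 1,000 failures; 250 observed failures leave roughly 75% of the budget.
remaining = error_budget_remaining(0.999, 1_000_000, 250)
print(round(remaining, 3))
```

Tracking this one number per service gives leaders a comparable signal across clouds, regardless of which provider's monitoring produced the raw counts.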

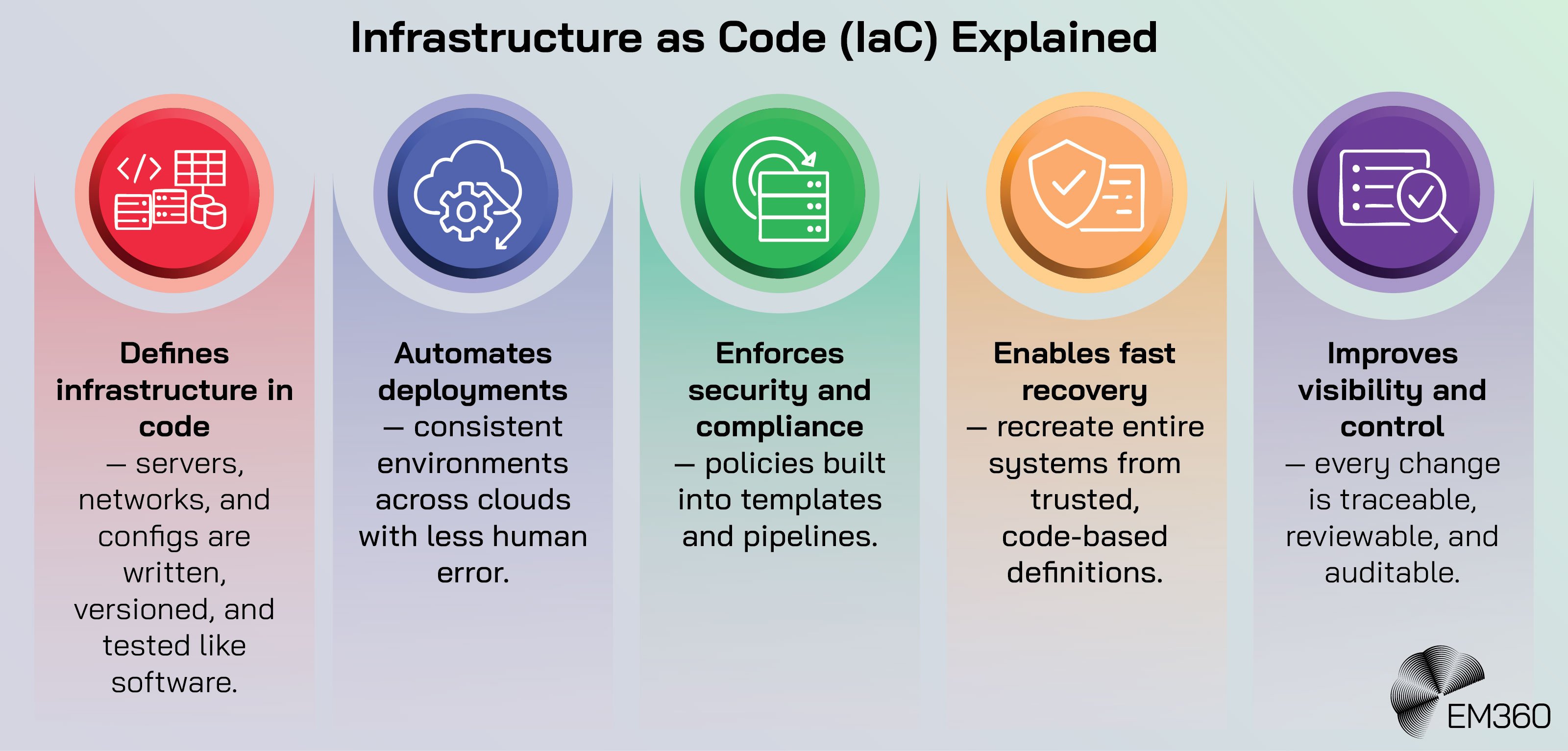

Immutable infrastructure and recovery readiness

Backups are not a strategy if they cannot be restored. Infrastructure as code (IaC), immutable images, and automated recovery tests make resilience testable. If a runtime or region fails, the platform should be able to recreate the environment from code and known-good artefacts.

If a database is compromised, the platform should support clean recovery into a quarantined account with verifiable integrity checks.

Key practices include:

- Versioned infrastructure definitions that are peer-reviewed and security-scanned.

- Image pipelines that produce signed, minimal base images with patched dependencies.

- Scheduled restore tests for critical data that produce evidence, not just logs.

- Playbooks for cross-region and cross-provider failover that are rehearsed, not written after the fact.

- Pipe-clearing drills that validate assumptions about quotas, dependencies, and DNS.

These controls reduce recovery guesswork. They also make it easier to demonstrate to auditors and boards that resilience is engineered, not simply hoped for.
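A scheduled restore test that produces evidence can be as simple as restoring into an isolated location, comparing a checksum against the value recorded at backup time, and emitting a structured record. In the sketch below a file copy stands in for the real restore step, and the file name, directory layout, and record fields are assumptions.

```python
import hashlib
import json
import shutil
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path):
    """Hex SHA-256 digest of a file's contents."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def run_restore_test(backup_path, expected_sha256, restore_dir):
    """Restore a backup into restore_dir and return an evidence record."""
    restored = Path(restore_dir) / Path(backup_path).name
    shutil.copy2(backup_path, restored)  # stand-in for a real restore job
    actual = sha256_of(restored)
    return {
        "backup": str(backup_path),
        "restored_to": str(restored),
        "expected_sha256": expected_sha256,
        "actual_sha256": actual,
        "passed": actual == expected_sha256,
        "tested_at": datetime.now(timezone.utc).isoformat(),
    }


with tempfile.TemporaryDirectory() as tmp:
    backup = Path(tmp) / "orders.dump"            # hypothetical backup file
    backup.write_bytes(b"example backup contents")
    recorded = sha256_of(backup)                  # checksum stored at backup time
    quarantine = Path(tmp) / "quarantine"         # isolated restore target
    quarantine.mkdir()
    evidence = run_restore_test(backup, recorded, quarantine)
    print(json.dumps(evidence, indent=2))
```

The returned record, rather than a log line, is what would be stored for auditors: it states what was restored, where, when, and whether integrity held.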

Building A Platform Engineering Strategy That Scales

Translating platform engineering from concept to capability requires intent and structure. The organisations that succeed treat their platform as a product with clear ownership, measurable outcomes, and a roadmap for maturity. Building that foundation starts with leadership alignment and a defined mandate.

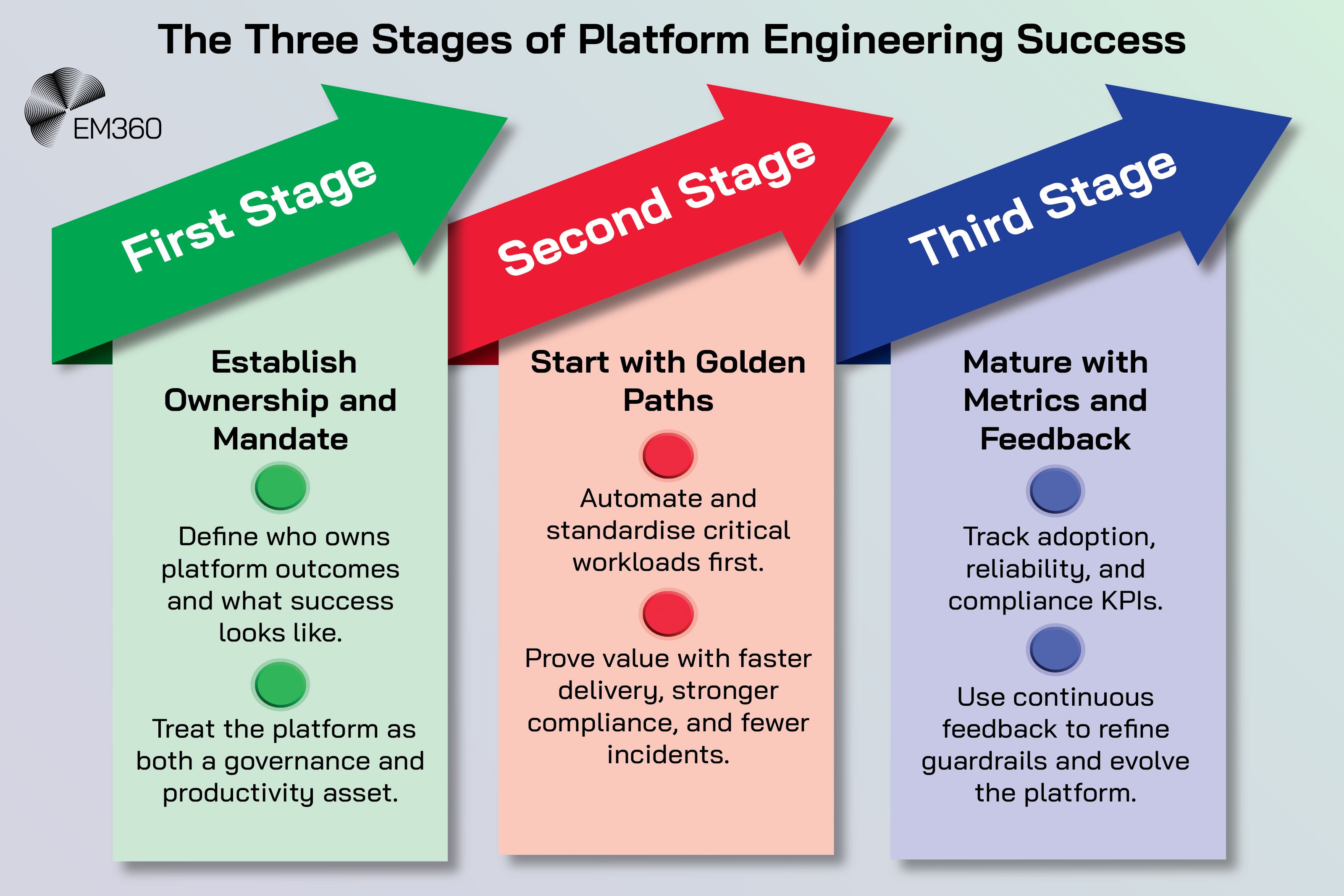

Step 1: Establish platform ownership and mandate

The first step is clarity. Determine who is accountable for the platform’s outcomes and which outcomes matter most to the organisation. Make those outcomes explicit. Examples include reducing change failure rate, improving time to mitigation for critical vulnerabilities, and increasing golden path adoption across high-risk workloads.

Define the operating model. The platform team should publish a contract: what the platform provides, what teams can expect, and how requests and feedback are handled. Position the platform as a risk and cloud governance asset as much as a productivity accelerator. Set the tone that the platform will reduce toil while raising the quality floor.

Step 2: Start with golden paths for critical workloads

Do not try to cover everything on day one. Start where risk and value meet. Identify workloads that handle regulated data, run revenue-critical paths, or sit on complex dependency chains. Build end-to-end automation for those services first.

Include environment creation, CI/CD, security scans, runtime controls, and observability out of the box. Measure adoption and satisfaction. Show that the new path removes friction and cuts incident noise. Use those results to win more teams over. Treat every integration as product discovery.

The aim is to replace a dozen inconsistent patterns with a few well-supported ones that are safer and faster.

Step 3: Mature with metrics and feedback loops

A platform without metrics is just another tool. A platform with metrics is a business capability. Establish a small set of key performance indicators (KPIs) that tie directly to risk and reliability:

- Platform adoption rate by product or domain.

- Percentage of services on golden paths.

- Policy-as-code pass rates at build and deploy.

- Change failure rate and MTTR trends.

- Drift detection and remediation time across environments.

- Backup restore confidence scores and drill frequency.

Build feedback loops with product teams and security. Use satisfaction surveys to find friction. Use incident reviews to update guardrails. Align with recognised maturity models so that leaders can see progress over quarters, not just releases. Publish the trendlines. Confidence builds when improvement is visible.
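Two of the KPIs listed above, change failure rate and MTTR, are straightforward to compute once deployments and incidents are recorded consistently. The following is a minimal sketch; the record shapes and the sample data are invented for illustration.

```python
from datetime import datetime
from statistics import mean


def change_failure_rate(deployments):
    """Share of deployments that caused a failure in production."""
    if not deployments:
        return 0.0
    return sum(d["failed"] for d in deployments) / len(deployments)


def mttr_minutes(incidents):
    """Mean time from detection to resolution, in minutes."""
    if not incidents:
        return 0.0
    return mean(
        (i["resolved"] - i["detected"]).total_seconds() / 60 for i in incidents
    )


# Illustrative data: 20 deployments with 3 failures, and two incidents.
deployments = [{"failed": False}] * 17 + [{"failed": True}] * 3
incidents = [
    {"detected": datetime(2024, 1, 5, 10, 0), "resolved": datetime(2024, 1, 5, 10, 30)},
    {"detected": datetime(2024, 1, 9, 14, 0), "resolved": datetime(2024, 1, 9, 15, 30)},
]

cfr = change_failure_rate(deployments)
mttr = mttr_minutes(incidents)
print(f"change failure rate: {cfr:.0%}, MTTR: {mttr:.0f} min")
```

Computed per quarter and published as a trendline, these two numbers already cover much of what the list asks for.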

Measuring The Risk Reduction Impact

Risk reduction only becomes meaningful when it can be measured. The strength of a platform lies not just in its architecture but in the evidence that it improves reliability, compliance, and delivery speed. Quantifying those gains turns platform engineering from a theory into a proven business capability.

Quantifying resilience and efficiency gains

Executives need evidence that platform engineering is reducing risk and enabling delivery. The following measures are practical and comparable across domains:

- Resilience metrics. Uptime against SLOs, percentage of critical services with tested failover, and average recovery time by incident class.

- Security posture. Time to patch critical vulnerabilities across services on and off golden paths. Reduction in high-risk misconfigurations caught before production.

- Change quality. Change failure rate by team and by deployment pattern. Percentage of rollbacks caused by environment inconsistencies.

- Operational efficiency. Lead time for changes, queue times in approvals, and time saved through self-service environment creation.

- Compliance evidence. Number of automated controls mapped to obligations. Coverage of audit artefacts produced by pipelines rather than manual collection.

Numbers tell the story, but consistency makes the difference. When leaders can compare a service that uses the platform with one that does not, the value becomes obvious. Risk conversations become specific. Investments shift from intuition to data.
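The comparison between services on and off the platform can be made concrete with a grouped median. This sketch uses time to patch as the measure; the service names and hours are made up, and the `golden_path` flag is an assumed field in the service inventory.

```python
from statistics import median


def median_patch_hours(services):
    """Median hours to patch a critical vulnerability, split by golden-path use."""
    groups = {"on_golden_path": [], "off_golden_path": []}
    for service in services:
        key = "on_golden_path" if service["golden_path"] else "off_golden_path"
        groups[key].append(service["patch_hours"])
    # Skip a group entirely if no services fall into it.
    return {key: median(hours) for key, hours in groups.items() if hours}


# Illustrative inventory only.
services = [
    {"name": "checkout", "golden_path": True, "patch_hours": 6},
    {"name": "search", "golden_path": True, "patch_hours": 10},
    {"name": "catalog", "golden_path": True, "patch_hours": 8},
    {"name": "legacy-billing", "golden_path": False, "patch_hours": 72},
    {"name": "reports", "golden_path": False, "patch_hours": 48},
]

comparison = median_patch_hours(services)
print(comparison)
```

The median is used rather than the mean so that a single badly delayed patch does not dominate the picture.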

Benchmarking platform maturity

Self-assessment helps prioritise the next quarter. Use a simple rubric:

- Foundational. IaC exists, but patterns vary. Some teams have one-click deploys. Observability is partial. Policies are documented, not enforced.

- Emerging. Golden paths exist for common stacks. Policy checks run in CI. Baseline dashboards and SLOs are defined. Backup drills occur for key systems.

- Established. Most services use the platform. PaC gates deployment. Drift detection is active. Recovery runbooks are automated and tested. Teams measure and review outcomes.

- Optimising. The platform is product-managed with clear roadmaps. Developers give structured feedback. AI-assisted detection and automation reduce toil. Evidence collection is automatic.

Tie initiatives to stages. For example, moving from Emerging to Established might mean expanding the service catalogue, adding provenance checks for artefacts, and lifting trace coverage from 40 percent to 85 percent. Keep the plan simple, publish the targets, and revisit them with stakeholders.
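Published targets are easier to revisit when the remaining gap is computed rather than estimated. The sketch below takes the trace coverage figures from the example above (40 percent today, 85 percent target); the other two metrics and their values are hypothetical placeholders.

```python
def gaps_to_target(current, targets):
    """Return {metric: shortfall} for every metric still below its target."""
    return {
        metric: round(target - current.get(metric, 0.0), 4)
        for metric, target in targets.items()
        if current.get(metric, 0.0) < target
    }


# Targets for a move from Emerging to Established. Only trace_coverage
# comes from the text; the other metrics are illustrative assumptions.
targets = {
    "trace_coverage": 0.85,
    "services_on_platform": 0.75,
    "provenance_checked_artefacts": 1.0,
}
current = {
    "trace_coverage": 0.40,
    "services_on_platform": 0.80,
    "provenance_checked_artefacts": 0.60,
}

gaps = gaps_to_target(current, targets)
print(gaps)  # {'trace_coverage': 0.45, 'provenance_checked_artefacts': 0.4}
```

Metrics that already meet their target drop out of the result, which keeps the stakeholder review focused on what is still open.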

What’s Next: Platform Engineering’s Role In Future Cloud Risk Strategy

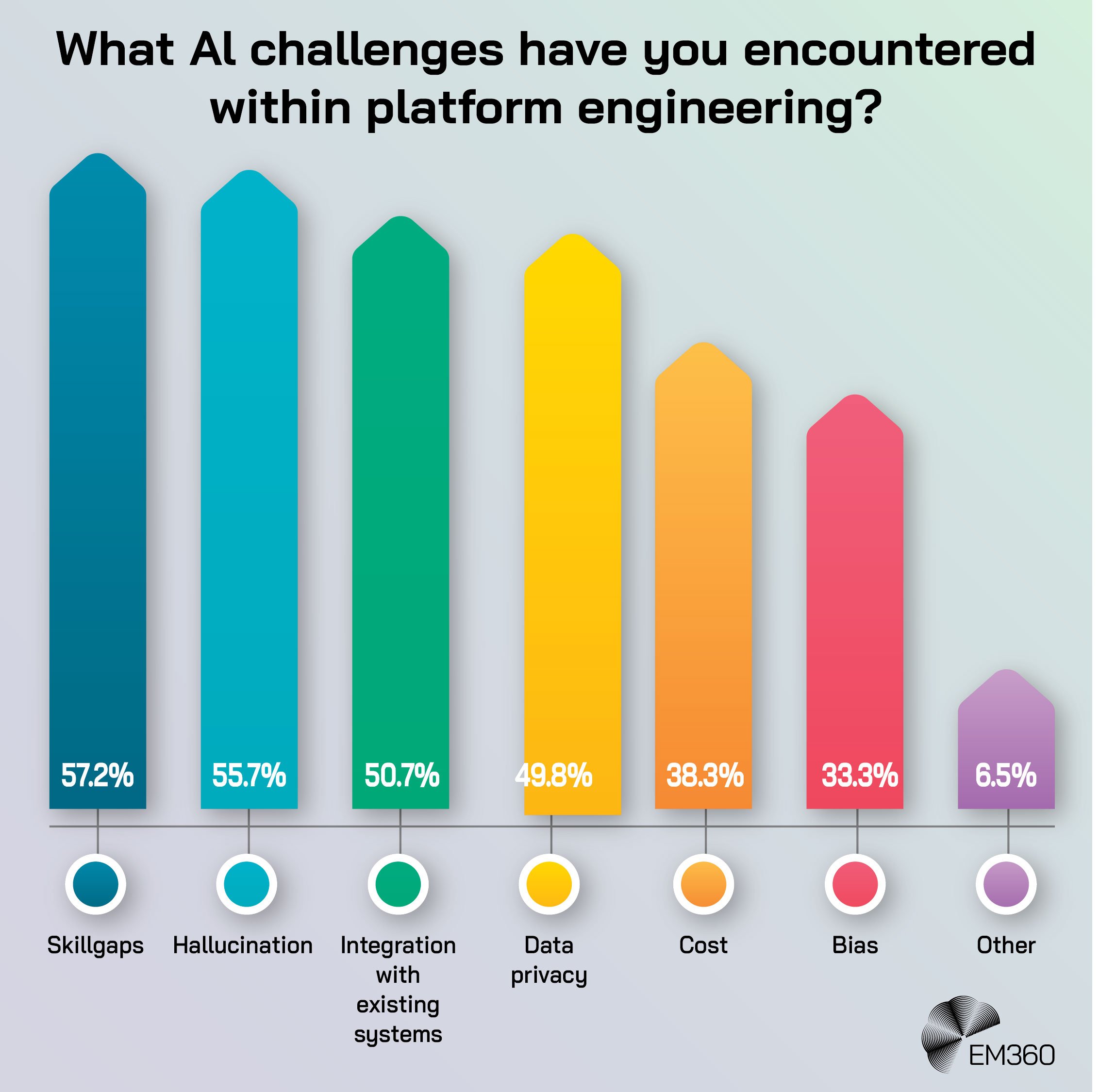

As platform engineering matures, its scope is expanding beyond automation and governance into intelligence. Emerging technologies are enabling platforms to anticipate risk, adapt policies in real time, and make proactive decisions at scale. The next evolution lies in how artificial intelligence enhances these capabilities.

AI-assisted policy and adaptive automation

Artificial intelligence is most useful when it speeds routine work and spots weak signals in large telemetry sets. In platform engineering, that means:

- Identifying policy drift earlier by correlating changes across code, infrastructure, and runtime signals.

- Suggesting least-privilege scopes during development, based on patterns across similar services.

- Predicting capacity risks and noisy dependencies before peak periods.

- Highlighting flaky tests and pipelines that correlate with post-deploy incidents.

- Recommending next-best actions during incidents to reduce time to mitigation.

The fabric remains the same. Good data in. Clear objectives. Transparent controls. AI augments, but it does not replace, the fundamentals of strong platform design and human judgement.

Ecosystem standardisation and interoperability

The direction of travel across the industry is toward interoperability and shared controls. Standards for supply-chain security, artefact signing, and provenance are moving from guidance to expectation. Cross-cloud orchestration is becoming simpler as tools converge on common patterns.

The practical outcome is that platform teams can provide safer defaults with less custom glue. This trend supports risk reduction. When the ecosystem aligns on interfaces and metadata, switching costs fall and resilience patterns become portable. It also reduces the blast radius of vendor change.

The more your platform relies on open standards, the easier it is to adapt when providers shift features or pricing.

Final Thoughts: Governance Is The New Resilience

Multi-cloud complexity will not slow down. New services will arrive. Teams will ship faster. Regulations will evolve. The choice for leaders is whether that complexity grows unmanaged or is channelled through a platform that turns good policy into everyday practice.

The most secure, compliant, and cost-effective organisations treat their platform as a strategic asset. They measure adoption, close feedback loops, and renew the paved roads that keep teams safe without slowing them down. That is how risk falls while delivery accelerates.

Recovery becomes a rehearsed motion rather than a late-night scramble, and the result is resilience that is measurable and repeatable.

If you want to compare notes with leaders who are building platforms that carry real-world risk, join the conversations happening through EM360Tech’s interviews, reports, and peer roundtables. You will find practical insight, tested patterns, and a community that is shaping the next generation of resilient multi-cloud platforms.
