Data without purpose is just an expense. Yet over the past decade most organisations have focused on accumulating data rather than activating it. That phase is ending. As regulatory pressure mounts and artificial intelligence (AI) makes it possible to extract insights from real‑time and unstructured sources, data monetisation is evolving from a niche capability into a board‑level mandate.

AI-driven data monetisation isn’t just about selling datasets, though. It’s about using AI to identify, enrich, and package data into products that generate recurring revenue, turning raw information into scalable intelligence that powers everything from smarter APIs to predictive services.

The market for AI-powered data monetisation platforms is expanding rapidly: Fortune Business Insights estimates that it will grow from US$3.47 billion in 2024 to US$12.62 billion by 2032, while Gartner projects global spending on generative AI to reach US$644 billion in 2025.

With the EU Data Act applying from September 2025 and the AI Act’s obligations phasing in from February 2025, enterprises now find themselves under both market and regulatory pressure to turn data into a revenue engine.

The Enterprise Shift From Data Collection to Data Value

Enterprises have spent years collecting data in silos, investing heavily in storage and analytics without clear pathways to monetisation. In 2025 that is no longer sufficient. Competitive advantage now comes from creating value through AI‑assisted analysis, packaging, and sharing of data. The shift is driven by three forces:

- Regulatory catalysts. The EU Data Act and AI Act formalise obligations for data access, sharing, and transparency. Compliance‑by‑design is now a critical feature of any monetisation strategy.

- Market momentum. Steep spending projections for generative AI and surging investment in data platforms show that the data economy is expanding. IDC expects that by 2028, 60 per cent of enterprises will collaborate on data via private exchanges or clean rooms.

- Technological maturity. Advances in machine learning, AI agents, and automated valuation models mean organisations can now price, package and share data without exposing sensitive information. This opens the door to new revenue models.

How AI Transforms Data Monetisation

AI-powered data monetisation means using artificial intelligence to unlock, enhance, and commercialise the value hidden in enterprise data. There are two primary routes. Internal monetisation improves operations by using AI to enhance decision‑making, efficiency, or product recommendations.

External monetisation creates new revenue by packaging data into products, services, or APIs that can be sold or licensed to third parties. In practice, organisations often pursue both strategies, generating insights internally while commercialising non‑competitive data externally.

How AI Unlocks New Data Value

Artificial intelligence is not just another analytical tool; it changes what can be monetised. Machine‑learning algorithms can infer patterns from messy, unstructured data, and large language models can synthesise disparate sources into useful outputs. For data monetisation, AI brings three transformative capabilities:

- Real‑time pricing and valuation: AI models can continuously assess the value of data based on demand, usage patterns and market benchmarks. This enables dynamic pricing and contract flexibility.

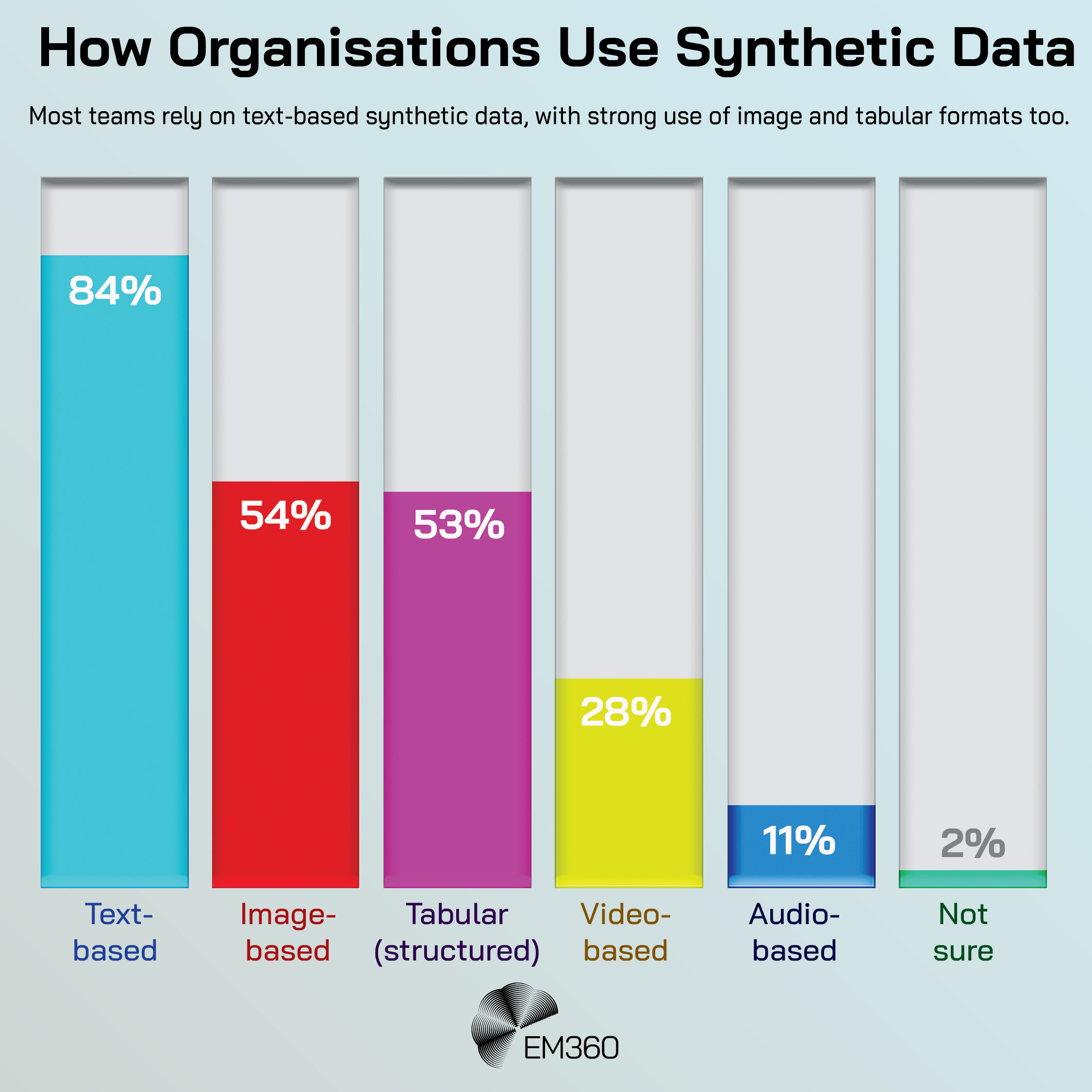

- Synthetic data generation: Generative AI can create statistically similar datasets that preserve patterns while protecting individual privacy. This allows organisations to share insights without exposing raw data.

- Risk‑based access: AI can assess risk and control access to data based on context, ensuring that sensitive information is exposed only under authorised conditions. Combined with automated governance, this reduces manual overhead and improves compliance.
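
A minimal sketch of risk‑based access follows, assuming a hypothetical scoring scheme. Each request carries a few context signals (the dataset's classification, whether the device is managed, whether the request comes from an approved region, whether a licensed purpose was declared). These are combined into a score, and the score maps to full access, aggregates only, or denial. The weights, thresholds and the `AccessRequest` type are illustrative assumptions, not any vendor's policy model; an AI‑driven system would learn or tune such scores rather than hard‑code them.

```python
from dataclasses import dataclass

# Hypothetical base risk per data classification (illustrative values).
SENSITIVITY_WEIGHT = {"public": 0.0, "internal": 0.3, "confidential": 0.6, "restricted": 0.9}


@dataclass
class AccessRequest:
    classification: str     # classification label of the requested dataset
    managed_device: bool    # request comes from a company-managed device
    approved_region: bool   # request originates in an approved jurisdiction
    purpose_declared: bool  # requester stated a purpose covered by the licence


def risk_score(req: AccessRequest) -> float:
    """Combine context signals into a score between 0 (low risk) and 1 (high risk)."""
    score = SENSITIVITY_WEIGHT[req.classification]
    if not req.managed_device:
        score += 0.2
    if not req.approved_region:
        score += 0.3
    if not req.purpose_declared:
        score += 0.2
    return min(score, 1.0)


def decide(req: AccessRequest) -> str:
    """Map the risk score onto an access tier (thresholds are illustrative)."""
    score = risk_score(req)
    if score < 0.5:
        return "full"
    if score < 0.8:
        return "aggregates_only"
    return "deny"
```

For example, `decide(AccessRequest("confidential", True, True, True))` scores 0.6 and returns `"aggregates_only"`. Keeping scoring and tiering in separate functions makes it straightforward to replace the hand‑written rules with a trained model later.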

By turning static datasets into decision‑ready assets, AI helps enterprises move from data hoarding to data value creation.
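
The synthetic‑data capability described above can be illustrated with a deliberately simple technique: estimate the mean and standard deviation of each numeric column, then sample new rows from independent normal distributions. This is a hypothetical sketch, not how commercial generators work. It preserves each column's average and spread but not the correlations between columns, and on its own it provides no formal privacy guarantee. It also assumes at least two input rows, since a sample standard deviation needs two values.

```python
import random
import statistics
from typing import Optional


def fit_marginals(rows: list[dict[str, float]]) -> dict[str, tuple[float, float]]:
    """Estimate (mean, sample standard deviation) for every numeric column."""
    marginals = {}
    for col in rows[0]:
        values = [row[col] for row in rows]
        marginals[col] = (statistics.fmean(values), statistics.stdev(values))
    return marginals


def sample_synthetic(marginals: dict[str, tuple[float, float]], n: int,
                     seed: Optional[int] = None) -> list[dict[str, float]]:
    """Draw n synthetic rows, sampling each column independently from a normal distribution."""
    rng = random.Random(seed)
    return [
        {col: rng.gauss(mu, sigma) for col, (mu, sigma) in marginals.items()}
        for _ in range(n)
    ]
```

In use, a provider would call `sample_synthetic(fit_marginals(real_rows), n=10_000)` and share the result instead of `real_rows`. Production generators model joint distributions (for example with generative models) and are usually paired with privacy testing or differential privacy.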

Key Trends Shaping AI-Powered Data Monetisation in 2025

As enterprises mature in their approach to data, monetisation has evolved from a tactical experiment into a strategic capability. The past year has seen an acceleration in how AI, regulation, and ecosystem design are reshaping what “value creation” actually means in data strategy.

From platform consolidation to new compliance frameworks, the environment around data monetisation is being rewritten — and the implications reach far beyond technology teams.

The Rise of AI Marketplaces

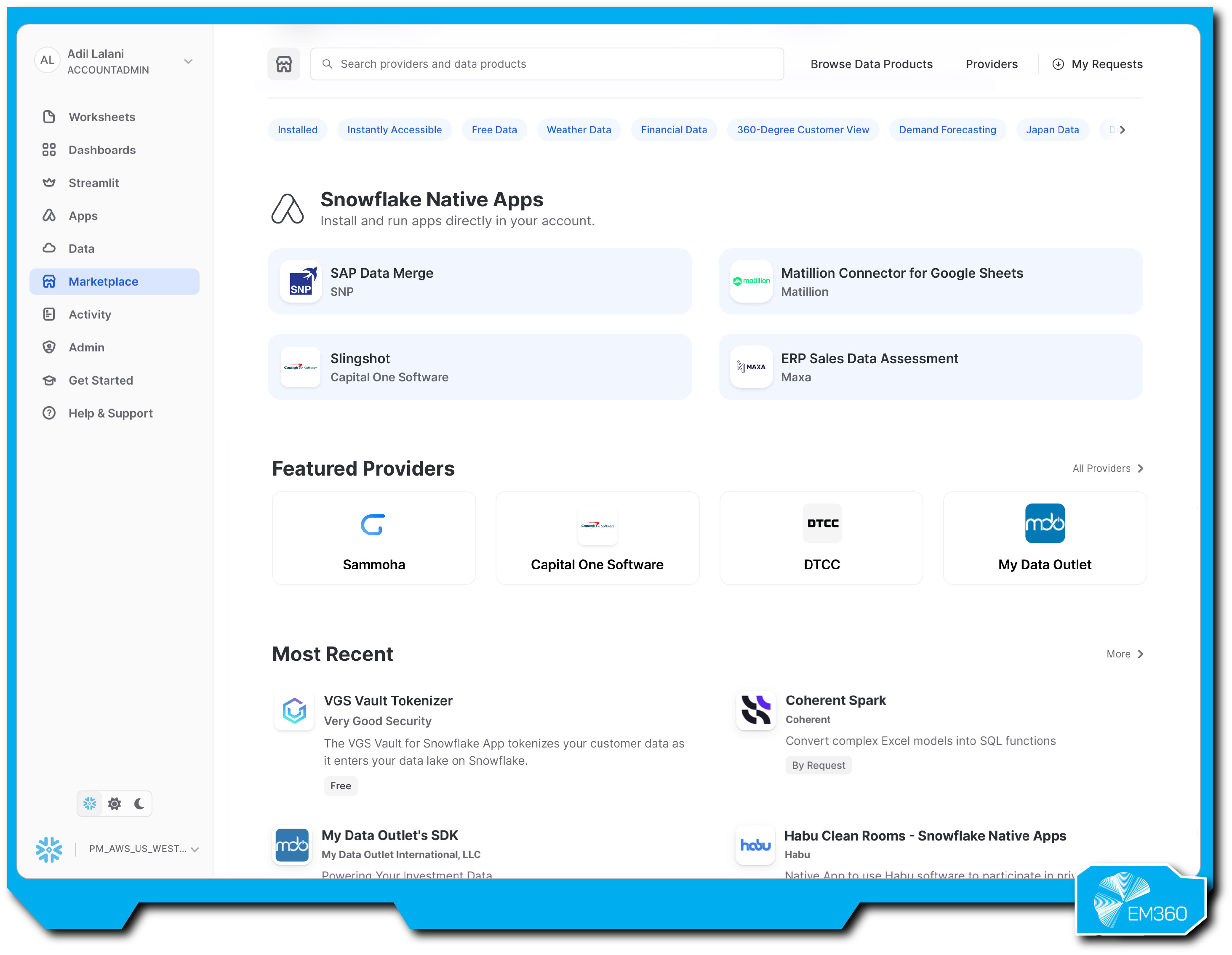

Traditional data marketplaces offered raw datasets; the new generation blends data with AI models and agentic applications. Snowflake Marketplace expanded in 2025 to include agentic products and AI‑ready news and data apps, connecting over 750 providers with more than 3,000 data, application and agentic products.

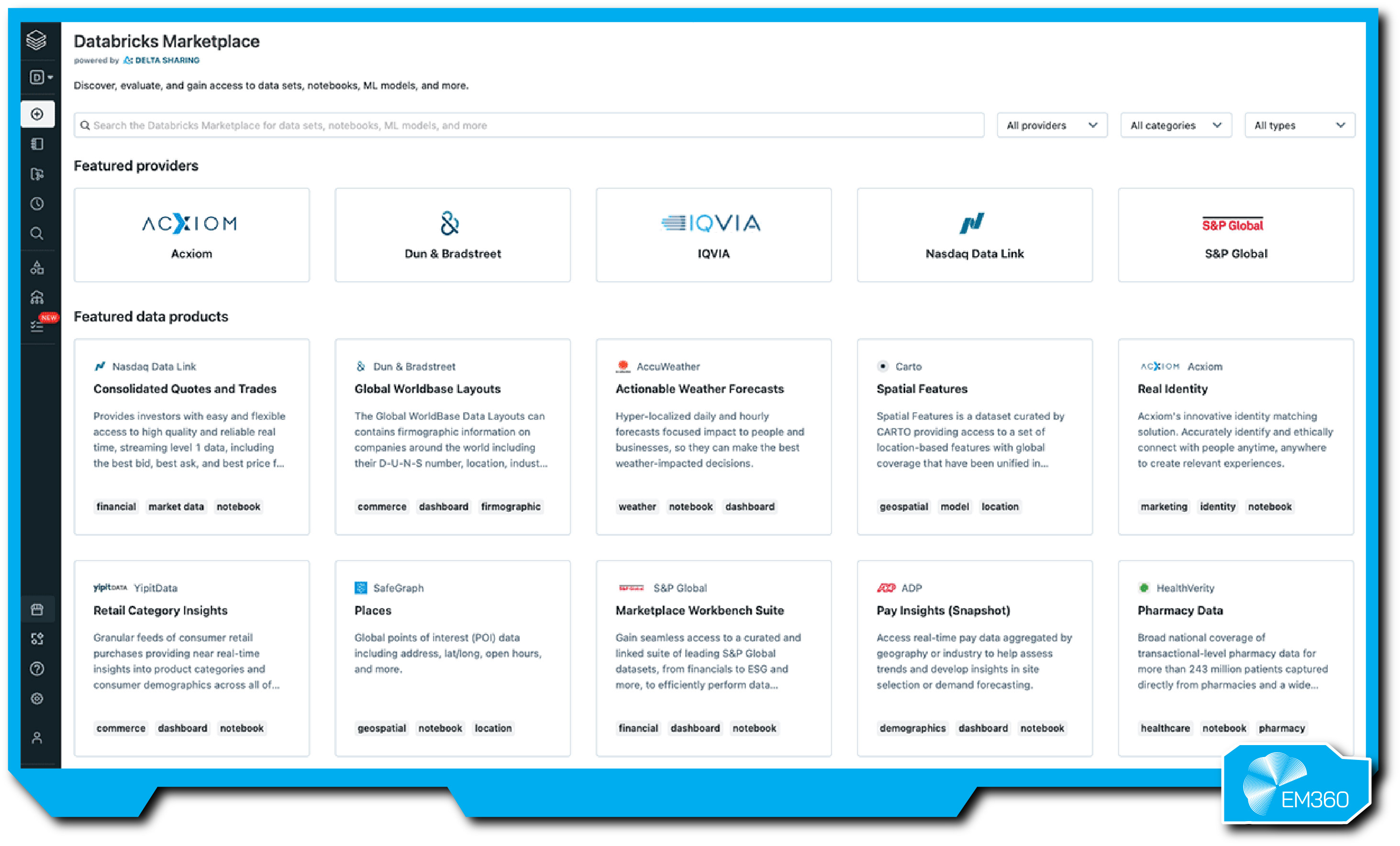

Databricks Marketplace is built on Delta Sharing, allowing organisations to access datasets, machine‑learning models and notebooks across clouds without proprietary dependencies. These platforms are evolving into full ecosystems where organisations can buy, sell and deploy AI models alongside data.

Regulation as a Design Principle

Governments are not just passing laws; they are rewriting how data platforms are architected. The EU Data Act, which applies from 12 September 2025, gives users of connected products the right to access the data those products generate and to have it shared with third parties.

The AI Act, which entered into force in August 2024 and whose requirements apply in phases between 2025 and 2027, obliges providers to ensure transparency, non‑discrimination and explainability. Platforms like Dawex are designed to comply with the European Data Governance Act, the Data Act and GDPR, offering frameworks for consent management and region‑specific data flows.

Compliance is no longer a bolt‑on feature; it’s a core differentiator.

Privacy‑Preserving Collaboration

Data clean rooms and privacy‑enhancing technologies are moving beyond advertising into enterprise collaboration. InfoSum pioneered a non‑movement architecture where data remains decentralised and is never pooled for processing.

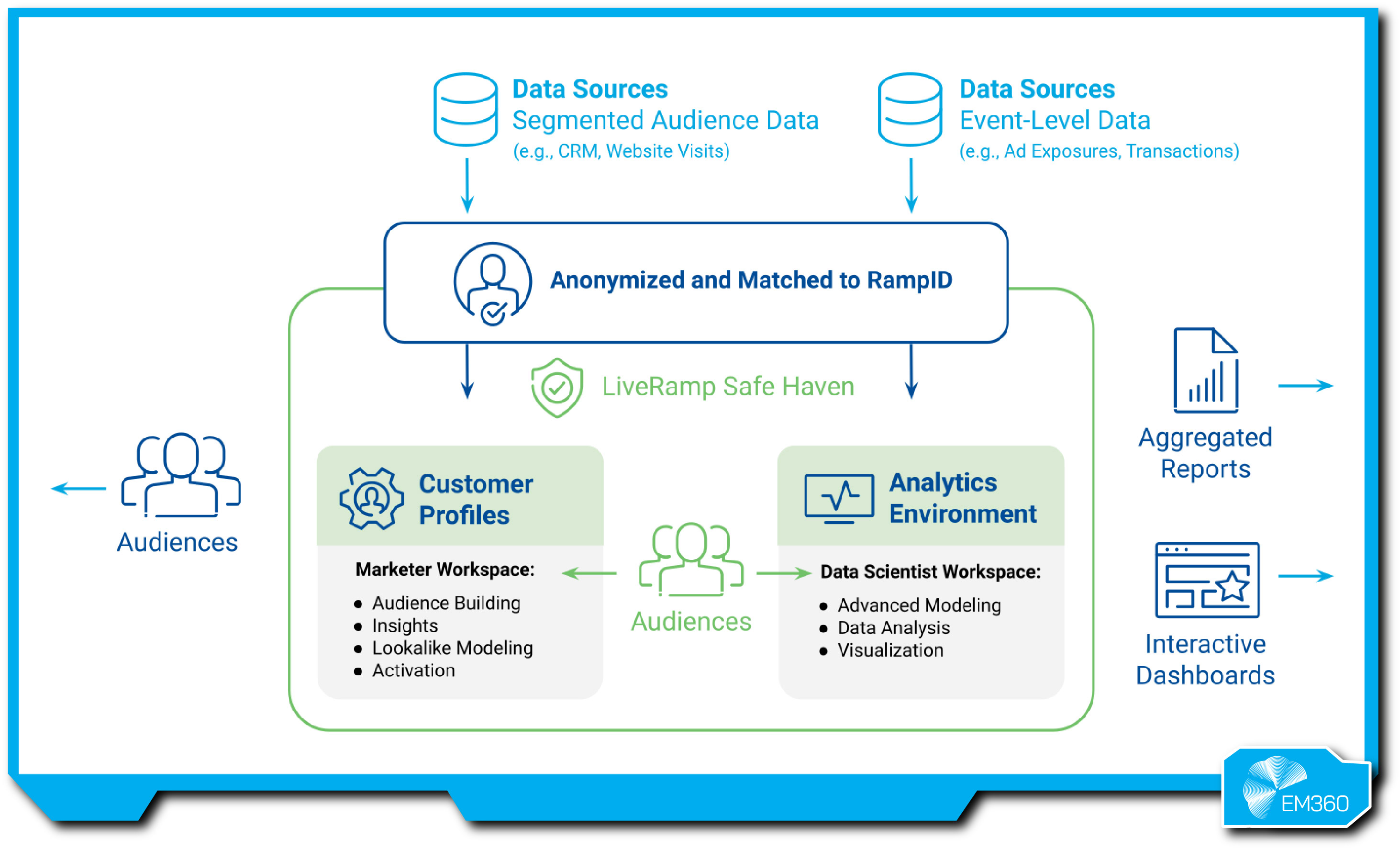

Its platform supports cross‑cloud and cross‑region collaboration while using PETs such as differential privacy, secure multi‑party computation and synthetic IDs. LiveRamp Safe Haven offers flexible trust management, configurable data security and the ability to build models across hundreds of partners while preserving user privacy.

As first‑party data becomes more valuable, these capabilities are crucial.

Ecosystem Consolidation

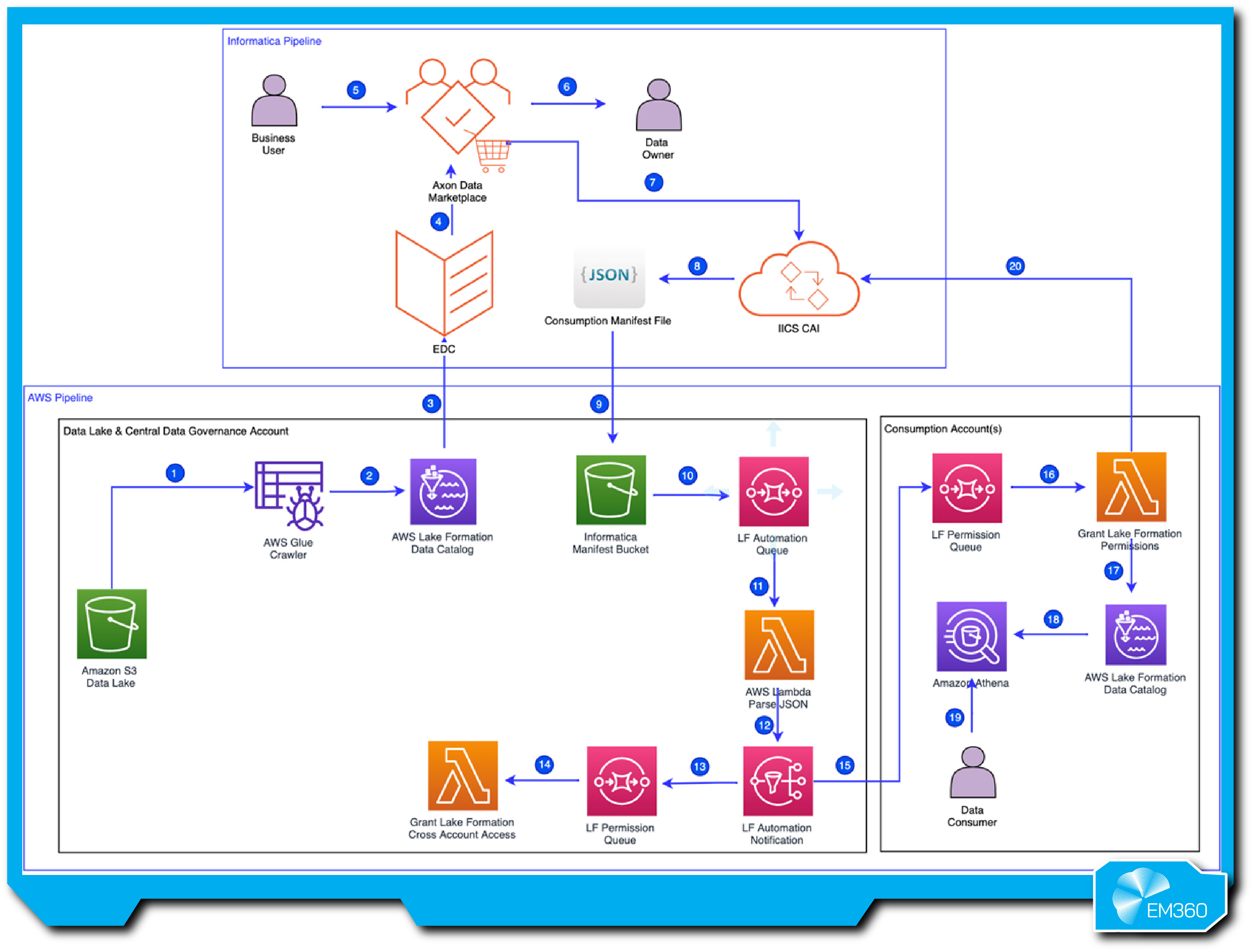

Mergers are reshaping the landscape. Salesforce’s agreement to acquire Informatica for US$8 billion aims to integrate Informatica’s data catalog, integration and governance services into Salesforce’s AI ecosystem.

WPP’s acquisition of InfoSum embeds a privacy‑first data clean room within a global media giant, giving WPP clients access to InfoSum’s cross‑cloud data collaboration technology. Consolidation signals that data monetisation is no longer a stand‑alone function but a component of broader AI and customer‑experience strategies.

Choosing the Right Platform for AI-Driven Data Monetisation

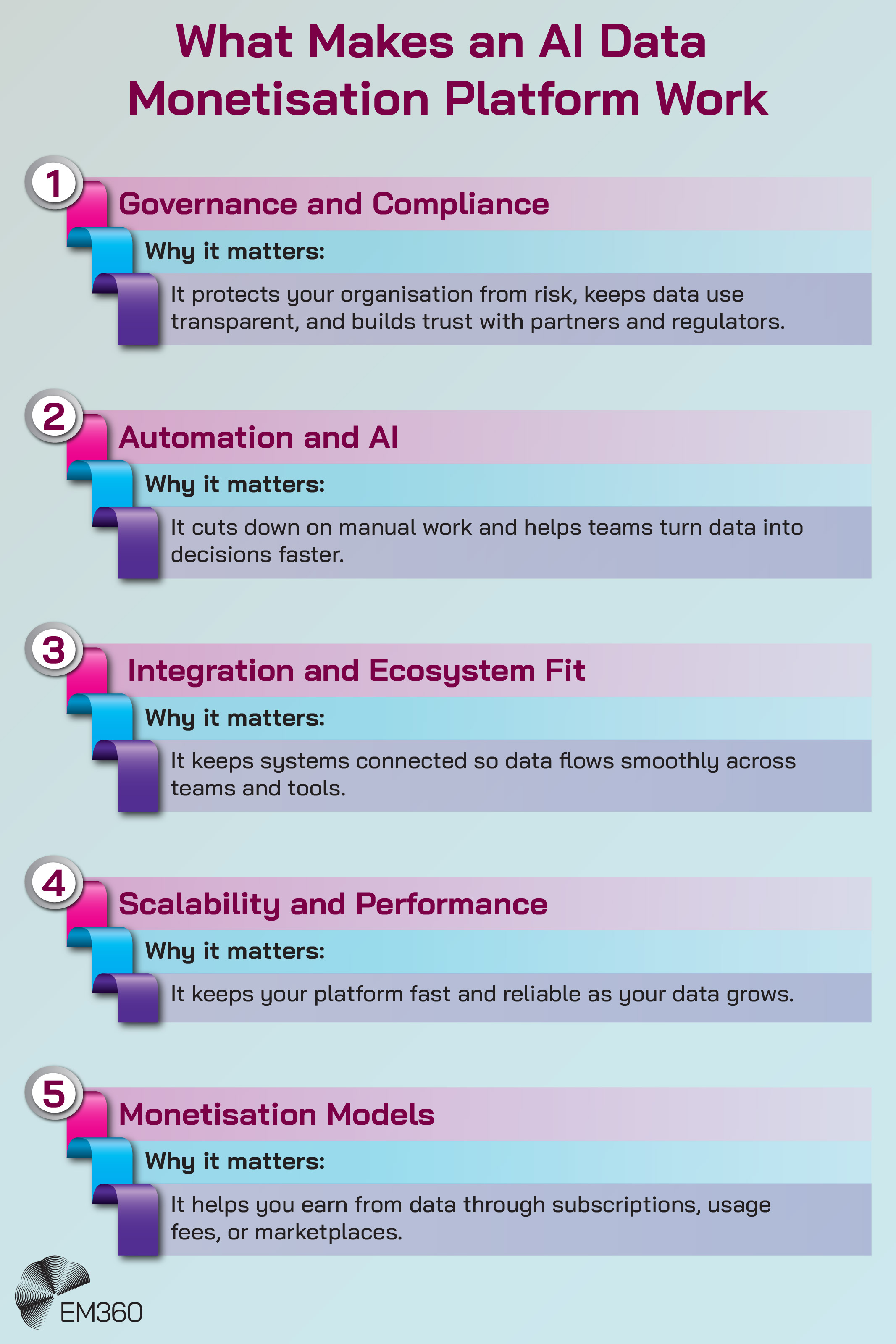

Selecting a platform involves more than just comparing feature lists. The following criteria help align choices with organisational maturity and strategic goals:

Core Evaluation Criteria

- Governance and Compliance: Look for platforms that embed privacy‑by‑design and support compliance with global regulations (GDPR, CCPA, EU Data Act, AI Act). Full consent management and audit trails are essential.

- Automation and AI Capabilities: Prefer solutions that provide automated data valuation, metadata enrichment, and built‑in AI models. This reduces manual overhead and accelerates time to value.

- Integration and Ecosystem Fit: Consider how well the platform integrates with your existing data infrastructure and whether it supports cross‑cloud or multi‑cloud environments.

- Scalability and Performance: Evaluate throughput, latency and ability to handle large volumes of data without duplication or movement. Zero‑copy sharing, as offered by platforms like Snowflake and BigQuery, improves efficiency.

- Monetisation Models: Determine whether the platform supports subscription, usage‑based, marketplace or API‑based revenue streams, and whether it facilitates pricing, billing and licensing management.
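
To make the usage‑based model concrete, here is a minimal sketch of how a provider might compute a monthly invoice that combines a flat subscription fee with graduated per‑query charges. The tier boundaries, prices and fee are invented for illustration; real marketplaces such as AWS Marketplace or Snowflake Marketplace apply their own billing rules.

```python
from decimal import Decimal

# Hypothetical graduated tiers: (upper bound of the tier in queries, price per query).
# None marks the final, open-ended tier.
TIERS = [
    (10_000, Decimal("0.010")),
    (100_000, Decimal("0.006")),
    (None, Decimal("0.003")),
]


def usage_charge(queries: int) -> Decimal:
    """Charge each query at the rate of the tier it falls into."""
    charge = Decimal("0")
    lower = 0
    for upper, price in TIERS:
        if queries <= lower:
            break
        # Number of queries that fall inside this tier.
        in_tier = queries - lower if upper is None else min(queries, upper) - lower
        charge += in_tier * price
        if upper is None:
            break
        lower = upper
    return charge


def monthly_invoice(queries: int, subscription_fee: Decimal = Decimal("500")) -> Decimal:
    """Flat subscription fee plus graduated usage charges, rounded to cents."""
    return (subscription_fee + usage_charge(queries)).quantize(Decimal("0.01"))
```

With these assumed tiers, 25,000 queries cost 10,000 × 0.010 + 15,000 × 0.006 = 190 in usage, so `monthly_invoice(25_000)` returns `Decimal("690.00")`. `Decimal` is used instead of `float` so that billing amounts round predictably.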

Common Pitfalls to Avoid

- Undervaluing data quality: Poorly governed data will erode trust and reduce revenue. Invest in lineage and quality monitoring.

- Ignoring licensing frameworks: Ensure that data usage rights, royalties and IP obligations are clearly defined; some platforms automate licensing management.

- Siloed architecture: Choosing a platform that locks your data into one cloud or region hinders collaboration. Opt for open standards and cross‑cloud capabilities.

- Neglecting ROI tracking: Monetisation initiatives should include clear metrics for revenue, cost savings and risk mitigation. Align platform features with KPI measurement.
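
For the data‑quality pitfall above, a minimal monitoring sketch might run a few checks on each delivery of a data product. Here these are completeness (the share of required fields that are populated) and freshness (the age of the newest record), and the product is flagged when either check fails. The field names and thresholds are hypothetical defaults, not recommendations.

```python
from datetime import datetime, timedelta


def completeness(rows: list[dict], required: list[str]) -> float:
    """Fraction of required field values that are present (not None)."""
    total = len(rows) * len(required)
    present = sum(1 for row in rows for f in required if row.get(f) is not None)
    return present / total if total else 0.0


def freshness(rows: list[dict], ts_field: str, now: datetime) -> timedelta:
    """Age of the most recent record in the delivery."""
    return now - max(row[ts_field] for row in rows)


def quality_report(rows: list[dict], required: list[str], ts_field: str, now: datetime,
                   min_completeness: float = 0.98,
                   max_age: timedelta = timedelta(hours=24)) -> dict:
    """Compute both metrics and a pass/fail flag for each check."""
    if not rows:
        raise ValueError("empty delivery: nothing to check")
    comp = completeness(rows, required)
    age = freshness(rows, ts_field, now)
    return {
        "completeness": comp,
        "completeness_ok": comp >= min_completeness,
        "age": age,
        "freshness_ok": age <= max_age,
    }
```

Recording these reports over time gives the trend data needed both for quality alerts and for the ROI and trust metrics that buyers increasingly expect.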

With these criteria in mind, we examine the leading platforms driving AI‑based data monetisation for enterprises.

Leading Platforms Turning Data Into Economic Value

The market for AI-driven data monetisation platforms is expanding fast, but capability and focus vary widely. Each solution brings its own approach to governance, automation, and scalability — from open data exchanges to tightly controlled enterprise ecosystems.

The following platforms represent the most mature and widely adopted options shaping how organisations turn data into revenue today.

AWS Data Exchange

AWS launched Data Exchange in 2019 as a central hub connecting data providers and subscribers. Today it hosts thousands of third‑party datasets across industries and is tightly integrated with the AWS ecosystem.

Enterprises on AWS can subscribe to curated data products and make them immediately accessible via Amazon S3, Redshift or SageMaker. Providers undergo a rigorous review process before listing datasets, ensuring quality and compliance.

Enterprise‑ready features

AWS Data Exchange offers a comprehensive catalog spanning financial markets, healthcare, geospatial and more. Data ingestion and delivery are seamless: subscribers can load data directly into their AWS environment for analytics or AI model training. Built‑in encryption, verification and audit logs provide security and traceability.

Providers can create custom offers, private data products or subscription models, and consumers pay via AWS Marketplace. Because the platform is native to AWS, enterprises benefit from consistent IAM controls and integration with services like Lake Formation.

Pros

- Large, diverse dataset catalog across industries.

- Native integration with AWS analytics and AI services.

- Robust security with encryption, verification and audit logs.

- Flexible pricing models and private offers.

- Familiar AWS Marketplace procurement process.

Cons

- Dependent on the AWS ecosystem; limited appeal for multi‑cloud strategies.

- Subscription costs can accumulate quickly at scale.

- Governance tooling largely relies on AWS, which may not meet all regulatory requirements.

Best for

Organisations already invested in AWS looking for a straightforward way to augment their models with external data. Ideal for industries such as finance, retail and public sector where curated, compliant datasets are available.

Databricks Marketplace

Databricks built its marketplace on its lakehouse architecture, using Delta Sharing to allow open, cross‑cloud exchange. The marketplace offers datasets, machine‑learning models, notebooks and dashboards that run seamlessly on Azure, AWS or Google Cloud.

A March 2025 update introduced features like clean rooms on Azure and AWS, cross‑platform view sharing and secure open sharing using OIDC token federation.

Enterprise‑ready features

Databricks Marketplace is designed for collaboration. Data products can be shared without replication, and providers maintain control through fine‑grained entitlements. The Clean Room capability lets multiple parties run queries on combined datasets without exposing raw data, using zero‑copy sharing.
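
Clean rooms commonly enforce rules such as "aggregates only" and minimum group sizes so that joint queries cannot single out individuals. The sketch below illustrates that general pattern with two in‑memory partner tables and a hypothetical threshold of 50 matched records per segment. It is not the Databricks Clean Rooms API, and in a real clean room neither party would see the other's raw rows; only the returned aggregates would leave the environment.

```python
from collections import defaultdict
from typing import Iterable

MIN_GROUP_SIZE = 50  # hypothetical aggregation threshold agreed by both parties


def overlap_by_segment(brand_rows: Iterable[tuple[str, str]],
                       retailer_rows: Iterable[tuple[str, bool]]) -> dict[str, dict[str, int]]:
    """
    Join two parties' records on a shared key and return per-segment counts,
    suppressing any segment whose matched count is below MIN_GROUP_SIZE.
    brand_rows: (customer_key, segment) pairs from party A
    retailer_rows: (customer_key, purchased) pairs from party B
    """
    purchases = {key: purchased for key, purchased in retailer_rows}
    matched: dict[str, int] = defaultdict(int)
    buyers: dict[str, int] = defaultdict(int)
    for key, segment in brand_rows:
        if key in purchases:
            matched[segment] += 1
            buyers[segment] += int(purchases[key])
    # Only aggregates for sufficiently large groups are released.
    return {
        seg: {"matched": matched[seg], "buyers": buyers[seg]}
        for seg in matched
        if matched[seg] >= MIN_GROUP_SIZE
    }
```

A segment with only ten matched customers is simply absent from the output, which is the behaviour that stops a small, targeted query from revealing individual purchases.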

Recent enhancements enable cross‑platform view sharing, which means a dataset or model published on one cloud can be consumed from another without vendor lock‑in. Delta Sharing is an open protocol, so enterprises can build their own data exchanges or integrate third‑party platforms.
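
Because Delta Sharing is an open REST protocol, a recipient does not need Databricks to read a share. The sketch below builds, but does not send, the protocol's "list tables in a schema" request using only Python's standard library. It assumes a profile file with the `endpoint` and `bearerToken` fields that providers issue to recipients; the endpoint, token, share and schema names in any example are placeholders. Most users would rely on the official `delta-sharing` client rather than raw HTTP.

```python
import json
import urllib.parse
import urllib.request


def load_profile(text: str) -> dict:
    """Parse a Delta Sharing profile (JSON containing 'endpoint' and 'bearerToken')."""
    profile = json.loads(text)
    return {"endpoint": profile["endpoint"].rstrip("/"), "token": profile["bearerToken"]}


def list_tables_request(profile: dict, share: str, schema: str) -> urllib.request.Request:
    """Build GET {endpoint}/shares/{share}/schemas/{schema}/tables with a bearer token."""
    url = "{}/shares/{}/schemas/{}/tables".format(
        profile["endpoint"],
        urllib.parse.quote(share, safe=""),
        urllib.parse.quote(schema, safe=""),
    )
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {profile['token']}"}, method="GET"
    )
```

Passing the resulting request to `urllib.request.urlopen` would send it. Because the protocol is plain HTTPS with a bearer token, the same share can be read from any cloud or tool that speaks HTTP, which is the basis of the lock‑in argument above.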

Pros

- Open sharing protocol (Delta Sharing) supports multi‑cloud environments.

- Built‑in clean rooms with cross‑platform sharing.

- Supports distribution of models, notebooks and dashboards alongside data.

- Strong integration with Databricks Lakehouse and MLflow.

- Vendors retain control through entitlements and auditing.

Cons

- Requires Lakehouse architecture; not ideal for organisations without Delta Lake.

- Pricing can be complex due to Databricks Unit (DBU) charges.

- Clean rooms currently available only on certain clouds (Azure and AWS).

Best for

Enterprises standardising on the Databricks Lakehouse who need open, cross‑cloud collaboration and advanced analytics. It suits organisations building AI pipelines that span multiple clouds or partner ecosystems.

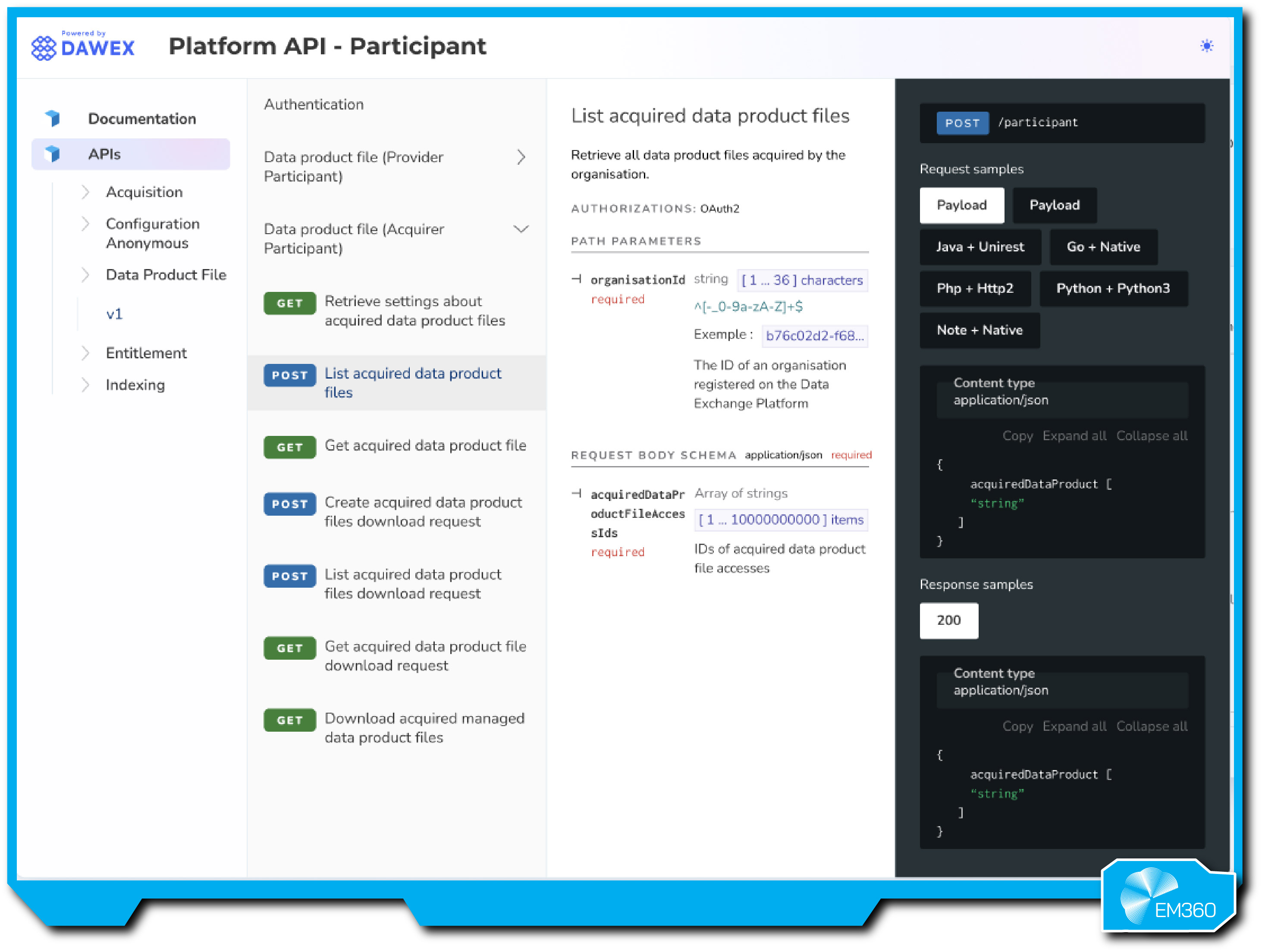

Dawex Data Exchange

Dawex positions itself as a trusted intermediary for organisations looking to source, share and commercialise data securely. While many platforms emphasise convenience, Dawex focuses on compliance and governance. Its global platform connects data providers and buyers, facilitating discovery, pricing and transaction management.

Enterprise‑ready features

Dawex is built for regulatory alignment. It is designed to comply with personal‑data regulations such as GDPR, APPI, PIPA and CCPA. The platform provides consent management, ensuring that data subjects’ permissions are verified before data flows to acquirers.

Dawex also supports compliance with the European Data Governance Act and Data Act; orchestrators using the platform are recognised as Data Intermediation Service Providers. For AI applications, Dawex helps model developers source quality data with full traceability.
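
Consent management, as described above, means verifying data subjects' permissions before data flows to an acquirer. It can be sketched as a filter applied at export time. The `Record` and `ConsentLedger` structures, purposes and region codes below are hypothetical and are not Dawex's data model. The sketch also drops records held in regions the acquirer is not cleared to receive, reflecting the region‑specific data flows these platforms manage.

```python
from dataclasses import dataclass, field
from typing import Iterable, Iterator


@dataclass
class Record:
    subject_id: str  # identifier of the data subject
    region: str      # jurisdiction where the record is held, e.g. "EU"
    payload: dict


@dataclass
class ConsentLedger:
    # subject_id -> set of purposes the data subject has agreed to
    grants: dict[str, set[str]] = field(default_factory=dict)

    def allows(self, subject_id: str, purpose: str) -> bool:
        return purpose in self.grants.get(subject_id, set())


def releasable(records: Iterable[Record], ledger: ConsentLedger, purpose: str,
               allowed_regions: set[str]) -> Iterator[Record]:
    """Yield only records with consent for this purpose and held in a permitted region."""
    for rec in records:
        if rec.region in allowed_regions and ledger.allows(rec.subject_id, purpose):
            yield rec
```

Filtering at the point of release, instead of trusting the acquirer to filter, keeps the provider in control and makes every export auditable against the ledger.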

Pros

- Strong regulatory compliance, including GDPR, EU Data Act and AI Act.

- Global reach with cross‑border data flows and consent management.

- Built‑in pricing and licensing modules for data products.

- Supports both data hubs and industry‑specific data spaces.

- Recognised as a trusted intermediary by European authorities.

Cons

- Primarily focused on compliance, so user experience may be more complex than consumer‑grade platforms.

- Limited AI or analytics tooling; requires integration with external ML frameworks.

- Not as well‑known outside of Europe, though global adoption is growing.

Best for

Regulated industries and organisations needing to navigate complex cross‑border data‑sharing requirements. Dawex suits companies that prioritise trust, compliance and traceability over self‑service convenience.

Google Cloud Analytics Hub (BigQuery)

Google rebranded Analytics Hub as BigQuery data sharing in 2023. It allows organisations to share data and insights across organisational boundaries while maintaining security and privacy. Publishers can distribute listings through the Google Cloud Marketplace; subscribers discover and access curated libraries without replicating data.

Enterprise‑ready features

BigQuery’s zero‑copy sharing lets users access shared data without creating copies, reducing storage costs and preserving a single source of truth. Publishers define access rights and monetisation terms, and they can bundle datasets with Looker dashboards or AI models. Subscribers combine the shared data with their existing data in BigQuery, BigLake or Vertex AI, enabling seamless analysis.

The platform offers roles for publishers, subscribers, viewers and admins, with robust identity and access management. Integration with Google Cloud Marketplace allows for subscription‑based monetisation.
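
Zero‑copy sharing is easiest to picture with an in‑process analogy. Instead of handing a subscriber a duplicate, the publisher hands out a read‑only view of the same underlying data, so updates are visible immediately and only one copy is stored. The sketch uses Python's `types.MappingProxyType` purely as that analogy, and the `Publisher` class is hypothetical. BigQuery and Snowflake implement sharing at the storage and metadata layer, not like this.

```python
from types import MappingProxyType


class Publisher:
    """Holds the single source of truth and hands out read-only views of it."""

    def __init__(self) -> None:
        self._tables: dict[str, list[dict]] = {}

    def upsert(self, table: str, rows: list[dict]) -> None:
        """Append rows to a table, creating the table if needed."""
        self._tables.setdefault(table, []).extend(rows)

    def share(self) -> MappingProxyType:
        # The proxy wraps the same dict, so no rows are copied and later
        # updates are visible to subscribers immediately. Subscribers cannot
        # add or replace tables through it, but the protection is shallow:
        # the row lists themselves remain mutable, unlike a real share.
        return MappingProxyType(self._tables)
```

A subscriber holding `publisher.share()` sees new rows as soon as the publisher writes them, which mirrors the "single source of truth" benefit described above.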

Pros

- Zero‑copy sharing reduces duplication and ensures data consistency.

- Native integration with BigQuery analytics, Looker and Vertex AI.

- Ability to monetise data via Google Cloud Marketplace.

- Fine‑grained access controls and roles.

- Supports multi‑cloud analysis through BigQuery Omni.

Cons

- Mostly appeals to organisations already using Google Cloud.

- Monetisation features rely on the Google Cloud Marketplace, which may have geographic restrictions.

- Limited built‑in clean room functionality compared with specialised vendors.

Best for

Enterprises that rely on BigQuery for analytics and need to share or monetise data with partners or internal teams. It’s ideal for organisations seeking a cloud‑native, zero‑copy model with integrated AI tools.

Harbr Enterprise Data Marketplace

Harbr is a white‑label platform that enables organisations to build private data and AI marketplaces under their own branding. Rather than offering an open exchange, Harbr focuses on internal and partner‑based data sharing with full governance. The platform supports use cases from data democratisation to data commerce and is deployed in the cloud of the customer’s choice.

Enterprise‑ready features

Harbr’s marketplace provides centralised data discovery and cataloguing, self‑service access with governance controls, collaborative workflows, built‑in data lineage and integration with existing data infrastructure.

Users can find data via curated landing pages and smart filters, enrich products with metadata, and then analyse or integrate them using built‑in tools. Harbr emphasises scalability and governance: fine‑grained permissions and automated policy enforcement allow enterprises to democratise data without sacrificing compliance. Deployment options include AWS, Azure, Databricks and Google Cloud.
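
Built‑in lineage is, at its core, a directed graph running from sources to derived data products. A minimal sketch, assuming a hypothetical edge list rather than Harbr's actual lineage model, shows how a platform can answer "which raw sources feed this product?" with a breadth‑first walk upstream.

```python
from collections import deque

# Hypothetical lineage edges: (upstream asset, downstream asset).
EDGES = [
    ("crm.contacts", "staging.customers"),
    ("erp.orders", "staging.orders"),
    ("staging.customers", "product.customer_360"),
    ("staging.orders", "product.customer_360"),
    ("product.customer_360", "product.churn_scores"),
]


def upstream_sources(asset: str, edges: list[tuple[str, str]]) -> set[str]:
    """Return the root assets (those with no parents) that ultimately feed `asset`."""
    parents: dict[str, set[str]] = {}
    for up, down in edges:
        parents.setdefault(down, set()).add(up)
    roots: set[str] = set()
    seen = {asset}
    queue = deque([asset])
    while queue:
        node = queue.popleft()
        ups = parents.get(node, set())
        if not ups and node != asset:
            roots.add(node)
        for up in ups:
            if up not in seen:
                seen.add(up)
                queue.append(up)
    return roots
```

Here `upstream_sources("product.churn_scores", EDGES)` walks back through the customer‑360 product and both staging tables to `{"crm.contacts", "erp.orders"}`. This is the kind of answer a governance team needs before a product is licensed externally.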

Pros

- Private, white‑label marketplace tailored to organisational branding.

- Centralised discovery, self‑service access and collaboration.

- Built‑in data lineage and quality monitoring.

- Deployable on multiple clouds, avoiding vendor lock‑in.

- Promotes faster time‑to‑access (up to 50 per cent faster) and a unified data ecosystem.

Cons

- Requires implementation and customisation; not an out‑of‑the‑box marketplace.

- Pricing not publicly available; typically enterprise‑grade.

- Focused on internal and partner use, so not suitable for selling data to wide audiences.

Best for

Enterprises looking to build their own branded data marketplace, especially those seeking to democratise internal data and develop data products for partners without exposing information publicly.

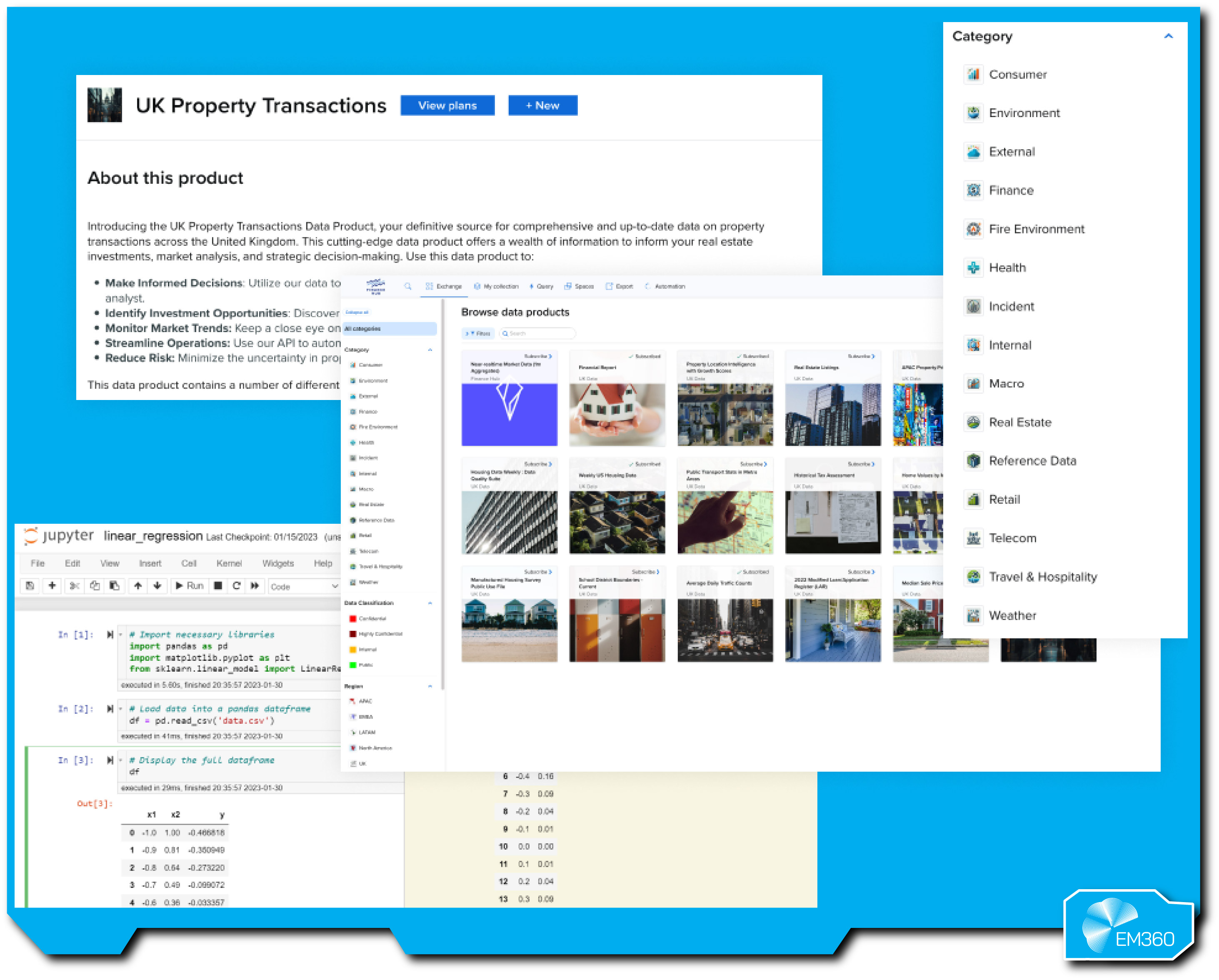

Informatica Cloud Data Marketplace

Informatica’s Cloud Data Marketplace is part of its Intelligent Data Management Cloud (IDMC). It provides a data shopping experience that allows teams to locate, request and evaluate curated data products via self‑service. The marketplace is backed by Informatica’s CLAIRE AI engine, which powers metadata enrichment and recommendations.

Enterprise‑ready features

The marketplace emphasises fast, safe data sharing with trusted data products that fuel analytics and AI. It allows data owners to package and publish data products, including datasets, machine‑learning models and pipelines.

Users can automate data provisioning and delivery, track operational metrics and provide relevant context and guidance to improve data literacy. Collaboration features include chat, reviews, alerts and user ratings to connect teams across the enterprise.

The marketplace operates with consumption‑based pricing and integrates with Informatica’s data governance, quality and catalog services.

Pros

- Self‑service data shopping experience for trusted data products.

- Ability to package and publish datasets, models and pipelines.

- Automated provisioning, delivery and operational metrics.

- Collaboration features (chat, reviews, alerts) to drive adoption.

- Tight integration with Informatica’s governance, quality and catalog services.

Cons

- Works best within the Informatica ecosystem; integration with other clouds may require additional setup.

- Consumption‑based pricing can be unpredictable for heavy usage.

- User experience may require training, especially for non‑technical roles.

Best for

Organisations that have adopted Informatica’s IDMC and want to extend data governance into a marketplace. It suits enterprises seeking to package internal data products and manage end‑to‑end data sharing workflows.

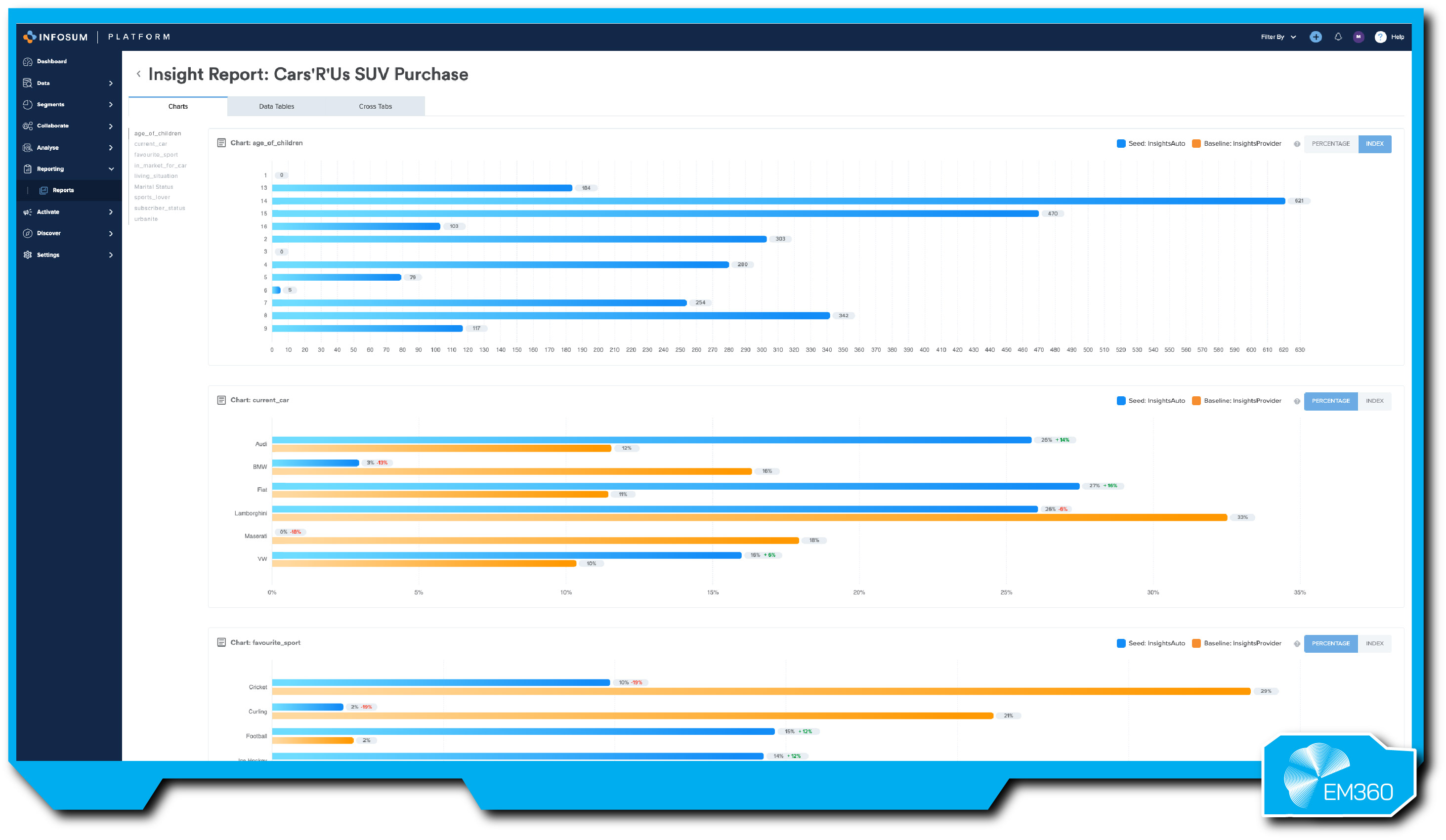

InfoSum

InfoSum pioneered the privacy‑first data clean room. Rather than moving data into a central repository, InfoSum’s architecture keeps data decentralised, using technologies like private set intersection and federated learning to match and analyse data across parties. In April 2025, marketing giant WPP acquired InfoSum to embed this technology into its AI‑driven data offering.

Enterprise‑ready features

InfoSum provides a secure environment where companies can collaborate on sensitive data without sharing or copying it. Privacy controls are enforced by default through role‑based permissions and are always on; users cannot turn them off. InfoSum’s decentralised architecture supports cross‑cloud and cross‑region collaboration, ensuring data remains within its regulatory region.

It uses multiple privacy‑enhancing technologies, including differential privacy, secure multi‑party computation and point‑in‑time synthetic IDs. Following WPP’s acquisition, InfoSum’s technology is integrated into WPP Open, enabling clients to generate audience intelligence across data sources without moving data.
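
InfoSum's matching internals are not described here, but the private set intersection idea mentioned above can be illustrated with the textbook Diffie‑Hellman‑style protocol. Each party hashes its identifiers and raises them to a secret exponent. The parties then exchange and re‑exponentiate each other's values, and because exponentiation commutes, identical identifiers end up with identical doubly masked values. This is a toy sketch that simulates both parties in one process. The modulus (the Mersenne prime 2^127 - 1) and the hashing scheme are illustrative assumptions, not production‑grade parameters.

```python
import hashlib
import math
import secrets

P = 2**127 - 1  # a prime modulus; toy parameter, not a vetted PSI group


def hash_to_group(identifier: str) -> int:
    """Map an identifier (e.g. a normalised email) to an integer in [2, P-1]."""
    digest = int.from_bytes(hashlib.sha256(identifier.encode()).digest(), "big")
    return digest % (P - 2) + 2


def new_secret() -> int:
    """Secret exponent coprime with P-1, so masking is a one-to-one mapping."""
    while True:
        k = secrets.randbelow(P - 3) + 2
        if math.gcd(k, P - 1) == 1:
            return k


def mask(values: list[int], secret: int) -> list[int]:
    """Raise each value to the party's secret exponent modulo P."""
    return [pow(v, secret, P) for v in values]


def intersect(ids_a: list[str], ids_b: list[str]) -> set[str]:
    """Simulate both parties; only masked values would cross the network."""
    a, b = new_secret(), new_secret()                    # each party's private key
    a_once = mask([hash_to_group(x) for x in ids_a], a)  # sent A -> B
    b_once = mask([hash_to_group(y) for y in ids_b], b)  # sent B -> A
    a_twice = mask(a_once, b)                            # B re-masks A's values, returns them
    b_twice = set(mask(b_once, a))                       # A re-masks B's values
    # A knows which of its own identifiers produced each doubly masked value.
    return {x for x, v in zip(ids_a, a_twice) if v in b_twice}
```

Neither party ever sees the other's raw identifiers, only values masked with a key it does not hold. A production protocol would also shuffle the returned values and use an elliptic‑curve group chosen for this purpose.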

Pros

- Decentralised, non‑movement architecture eliminates the need to move or pool data.

- Cross‑cloud and cross‑region collaboration with strict regulatory adherence.

- Robust privacy‑enhancing technologies (differential privacy, SMPC, synthetic IDs).

- WPP acquisition amplifies ecosystem reach and AI integration.

- Extensive network of media, retail and identity partners.

Cons

- Requires a federated approach; may not fit scenarios where data movement is necessary.

- Pricing and access may be tailored toward large enterprises and agencies.

- Focuses on marketing and advertising use cases, though expanding into other sectors.

Best for

Organisations needing privacy‑preserving collaboration across multiple partners or clouds, especially in advertising, media and retail. It is ideal for companies requiring granular control and regulatory adherence.

LiveRamp Safe Haven

Safe Haven is LiveRamp’s data collaboration platform that allows brands, agencies and media owners to connect first‑, second‑ and third‑party data without exposure. Unlike many clean rooms that operate in isolation, Safe Haven integrates addressability, analytics and activation.

Enterprise‑ready features

Safe Haven helps enterprises build and act on consumer understanding by adding more data sources, look‑alikes and analytic packages across hundreds of pre‑qualified partners. It enables closed‑loop measurement, offering journey analytics and incrementality metrics that help marketers understand the full customer journey.

The platform’s trust management is highly configurable, allowing organisations to specify different levels of data access for each partner. Safe Haven brings addressability options, audience insights, analytic visualisations, predictive modelling and data science tooling, all within a secure, neutral environment.

With 500+ turnkey integrations, customers can connect to leading media and analytics partners. The platform enforces consumer privacy through removal of personal data and support for opt‑out workflows.
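
Incrementality, one of the closed‑loop measures mentioned above, is typically estimated by comparing a group exposed to a campaign with a randomised holdout that was not. The arithmetic is simple: the sketch below computes conversion rates, absolute and relative lift, and the implied number of incremental conversions. It is a generic calculation, not LiveRamp's methodology, and a real analysis would also test whether the difference is statistically significant.

```python
def incrementality(exposed_users: int, exposed_conversions: int,
                   holdout_users: int, holdout_conversions: int) -> dict[str, float]:
    """Compare an exposed group against a randomised holdout group."""
    exposed_rate = exposed_conversions / exposed_users
    holdout_rate = holdout_conversions / holdout_users
    absolute_lift = exposed_rate - holdout_rate
    return {
        "exposed_rate": exposed_rate,
        "holdout_rate": holdout_rate,
        "absolute_lift": absolute_lift,
        "relative_lift": absolute_lift / holdout_rate,
        # Conversions in the exposed group that would not have happened without the campaign.
        "incremental_conversions": absolute_lift * exposed_users,
    }
```

With made‑up figures of 5,000 conversions from 200,000 exposed users (2.5 per cent) against 1,000 from a 50,000‑user holdout (2.0 per cent), the relative lift is 25 per cent. That corresponds to roughly 1,000 incremental conversions.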

Pros

- Rich collaboration across hundreds of pre‑qualified partners.

- Closed‑loop measurement and journey analytics.

- Flexible trust management and configurable data security.

- Full suite of audience insights, predictive modelling and data science tools.

- Strong privacy controls with removal of personal data and support for opt‑outs.

Cons

- Optimised for marketing and customer‑data use cases; less suited to industrial or IoT data.

- Complex to implement for organisations without existing LiveRamp integrations.

- Pricing may be enterprise‑oriented and tied to usage volumes.

Best for

Marketers and media networks seeking to collaborate on customer data across walled gardens, channels and clouds. It’s especially powerful for retail media networks and enterprises with large customer bases.

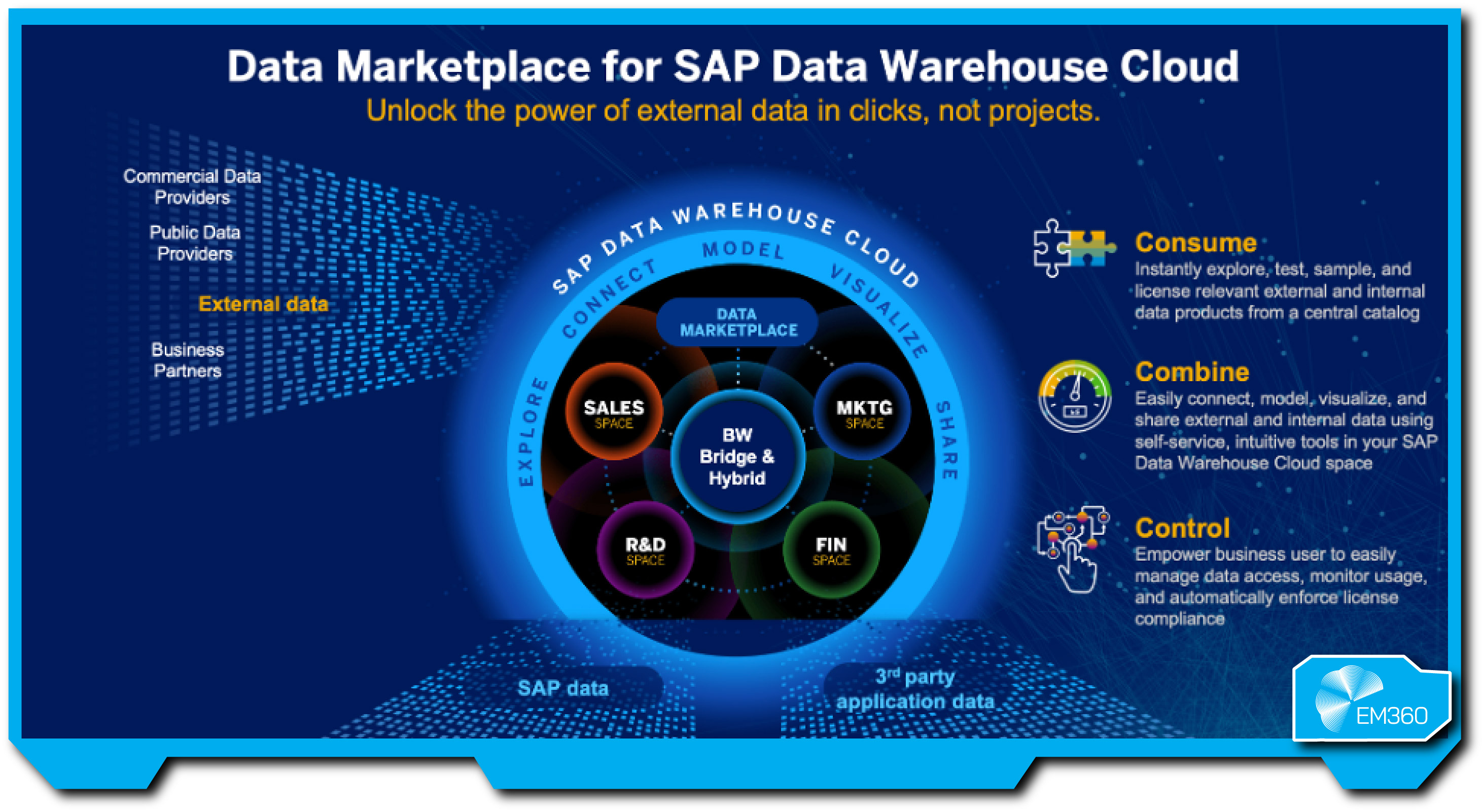

SAP Datasphere Data Marketplace

SAP Datasphere (formerly SAP Data Warehouse Cloud) is a cloud‑native data fabric that integrates data management, modelling and analytics. Its data marketplace allows customers to enrich their projects with trusted third‑party data while blending it with SAP‑native sources. The marketplace is part of a broader business data fabric approach.

Enterprise‑ready features

SAP Datasphere accelerates time to value by reusing semantic definitions and associations from SAP applications. Its knowledge graph uncovers hidden relationships, boosting the effectiveness of machine‑learning and large language models. The platform supports data federation and replication across hybrid and multi‑cloud environments, allowing users to virtually access or store data from anywhere.

The data marketplace lets customers enrich data projects with trusted industry data from thousands of providers. Integrations with leading AI platforms and open data ecosystem partners ensure that both SAP and non‑SAP data can be blended. Users gain self‑service access to trusted business data through spaces and semantic onboarding, with governance managed via the SAP Datasphere catalog.

Pros

- Built‑in reuse of SAP semantics and knowledge graphs for deeper insights.

- Data federation and replication across hybrid and multi‑cloud environments.

- Marketplace access to trusted industry data from thousands of providers.

- Integrations with AI platforms and open data ecosystem partners.

- Self‑service access with governance and semantic onboarding.

Cons

- Best suited for organisations already using SAP; learning curve for new users.

- Data marketplace currently focuses on structured data, such as SQL tables and CSV files.

- Pricing and licensing depend on provider arrangements, which can be complex.

Best for

Enterprises on the SAP stack seeking to enrich their data fabric with external datasets while maintaining governance and context. It’s particularly useful for manufacturing, supply chain and finance sectors that rely on SAP applications.

Snowflake Marketplace

Snowflake’s Marketplace, part of its Data Cloud platform, has matured rapidly. In mid‑2025 Snowflake announced the expansion of the marketplace to include agentic products and AI‑ready news and data apps. Providers can publish not just datasets but also AI applications, and customers can access real‑time feeds for generative models.

The marketplace now connects over 750 providers with more than 3,000 data, application and agentic products.

Enterprise‑ready features

Snowflake Marketplace offers zero‑copy data sharing, meaning data is not duplicated when shared across accounts. The platform’s Cortex Knowledge Extensions allow AI models to access live data feeds and deliver responses backed by real‑time news and financial information.

Enterprises can build Agentic Native Apps that combine data, AI models and functions, and then distribute them through the marketplace. Because Snowflake supports multi‑cloud operation, data providers can reach customers across clouds. The marketplace also supports monetisation through consumption‑based billing and private listings.

Pros

- Expansive ecosystem with over 750 providers and thousands of products.

- Zero‑copy data sharing ensures data stays in place.

- Agentic products and AI‑ready data apps for generative AI use cases.

- Real‑time feeds and Cortex Knowledge Extensions for up‑to‑date insights.

- Multi‑cloud reach and consumption‑based pricing.

Cons

- Requires Snowflake infrastructure; not ideal for non‑Snowflake environments.

- Some advanced features (agentic apps) are new and may need technical expertise.

- Competition among providers can make differentiation challenging.

Best for

Organisations already using Snowflake Data Cloud who want to distribute or consume AI‑ready data and applications. It’s well suited for finance, media and fast‑growing AI startups seeking real‑time content for generative models.

Emerging Frontiers in AI-Driven Data Value Creation

The trends shaping today’s market are only the beginning. As these technologies and frameworks mature, enterprises are moving beyond structured exchanges into a more autonomous, AI-driven phase of value creation.

These emerging frontiers reveal how data, algorithms, and decision-making are beginning to merge into entirely new business capabilities.

From Data Products to Decision Engines

The next frontier goes beyond selling datasets to creating decision engines. Generative AI and autonomous agents can embed data products into workflows that predict, recommend and act on behalf of users.

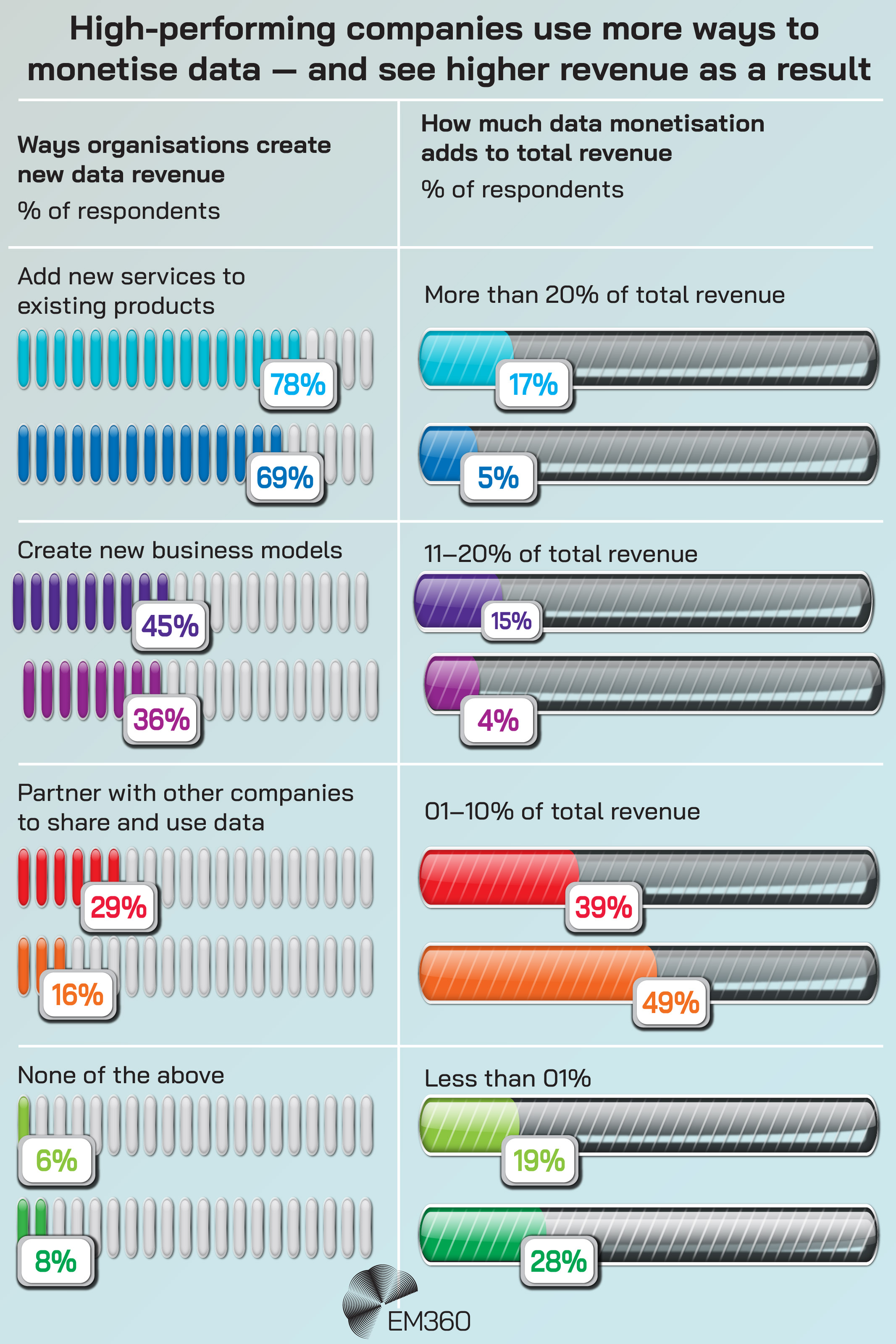

McKinsey notes that top‑performing organisations attribute 11 per cent of their revenue to data monetisation, and generative AI allows companies to tap unstructured data sources to build robust data products. By integrating AI models with governance frameworks, data products can become decision‑as‑a‑service offerings, generating recurring revenue.

Privacy‑Enhancing Technologies and Trust

Privacy‑enhancing technologies (PETs) are moving from experimental tools to foundational infrastructure. Differential privacy, secure multi‑party computation and synthetic data techniques allow organisations to extract value without revealing sensitive information. Combining PETs with decentralised architectures, as InfoSum does, enables cross‑cloud collaborations that respect local regulations. These technologies will underpin data spaces across health care, finance and public services, where trust is paramount.
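
Differential privacy, the first technique listed above, is often introduced through the Laplace mechanism: add noise drawn from a Laplace distribution with scale sensitivity/ε to a query result. For a count, adding or removing one person changes the answer by at most 1, so the sensitivity is 1. The sketch below uses only the standard library, drawing a Laplace sample as the difference of two independent exponential samples. The ε value in any call is an illustrative assumption, and production systems should use a vetted DP library that also handles floating‑point pitfalls and privacy‑budget accounting.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records: Iterable, predicate: Callable[[object], bool], epsilon: float,
                  rng: Optional[random.Random] = None) -> float:
    """Count matching records and release the count with epsilon-differential privacy."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # adding or removing one record changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)
```

A smaller ε gives stronger privacy but noisier answers, and every released answer consumes part of the overall privacy budget. This is why data spaces that expose many queries need budget tracking as well as noise.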

The Coming Convergence of AI and Data Marketplaces

In future, data marketplaces will not just list datasets; they will host LLMs, APIs and agentic apps side by side. Snowflake’s agentic expansion hints at this convergence. As AI models require access to ever‑richer data, marketplaces that bundle models with data and licensing will become the primary distribution channel. Enterprises will need to evaluate platforms based on their ability to manage model governance, usage tracking and cross‑cloud performance. Platforms like Databricks and Snowflake are moving in this direction, and others will follow.

Final Thoughts: Turn Data Into a Revenue Engine

Data monetisation succeeds when AI and governance align with business value. The landscape is rapidly evolving, driven by regulatory obligations, market momentum and technological breakthroughs. AI transforms raw data into monetisable products, while privacy‑enhancing technologies and compliance frameworks ensure trust.

Enterprises must choose platforms that fit their ecosystem, prioritise governance, and embrace cross‑cloud collaboration. As the data economy matures, the winners will be those who treat information as both an asset and an ecosystem. To see how other enterprises are operationalising data value, stay connected with EM360Tech’s latest insights and analyst discussions.
