Data drives every decision an enterprise makes, yet too often, leadership teams are working with information they cannot fully trust. As data pipelines grow more complex—spanning multiple clouds, regions, and applications—the risk of incomplete, inaccurate, or delayed data increases sharply.

Gartner estimates that poor data quality costs organisations an average of $12.9 million per year through operational inefficiencies, compliance penalties, and lost opportunities. In markets as competitive as today’s, that’s not a cost you can afford to ignore.

ETL testing has become a critical safeguard against these types of risks.

By validating every stage of the extract, transform, and load process, enterprises can ensure that the data entering their analytics platforms is accurate, complete, and reliable. In an era where regulatory scrutiny and cybersecurity threats are at an all-time high, this quality assurance is no longer optional.

It is the foundation for trustworthy enterprise data, informed decision-making, and sustainable digital transformation.

Understanding ETL Testing in Modern Data Operations

ETL testing checks that data pipelines work end-to-end. It makes sure the right data is pulled, transformed as intended, and lands in its destination without being altered or dropped.

Traditional data checks only look at what’s sitting in a system at a point in time. They don’t tell you if the pipeline itself introduced errors along the way.

ETL testing does. It follows the data through extraction, transformation, and loading to find where meaning gets lost. In large, distributed environments, that’s often the only way to know the data you’re looking at is the data you actually collected.

ETL testing vs traditional data QA

Basic data checks look for obvious issues such as missing values, duplicates, and broken formats. They tell you what’s wrong with a dataset after it’s already landed. That reactive approach is costly. Monte Carlo’s 2022 survey found that data professionals spend 40 per cent of their time evaluating or checking data quality, and that poor data quality affects an estimated 26 per cent of company revenue.

ETL testing works differently. It tracks the data while it moves. It checks each step of extraction, transformation, and loading to find where data goes missing or changes. Without it, those issues usually surface only after reports are built and decisions are made.

Why ETL Testing Matters for Data Quality and Trust

Bad data costs more than most leaders realise. Errors buried in data pipelines can change forecasts, misinform strategy, and create compliance risks that surface months later. Gartner’s estimate puts the cost of poor data quality in the millions each year, and much of that damage stems from decisions made long before anyone notices the issue.

ETL testing reduces that risk. It validates the pipeline itself, not just the data that comes out at the end. By checking that extraction is complete, transformation logic holds, and loads happen without loss, ETL testing makes sure analytics teams work with data they can rely on.

This isn’t just a technical safeguard. In large enterprises, it’s what allows data leaders to give executives information they can act on with confidence, knowing it hasn’t been altered or dropped somewhere along the way.

The cost of data errors in analytics

Bad data is expensive. As we mentioned before, studies put the cost in the millions each year for large enterprises. Some of that shows up as rework and delays. The bigger impact is harder to see: wrong forecasts, bad investments, and missed opportunities that don’t get traced back to the pipeline.

Without ETL testing, these errors move through systems unchecked. Small issues in extraction or transformation change numbers downstream. A broken mapping in one integration can distort an entire analytics model. By the time it reaches leadership, decisions are already based on flawed information.

For organisations under regulatory pressure, the risk is higher. Inconsistent or incomplete data doesn’t just hurt insight. It increases compliance exposure and weakens audit trails, which can lead to fines or failed reviews.

Types of ETL tests enterprises should implement

ETL testing isn’t just about preventing bad data from slipping through. In complex, federated environments, it’s how data leaders keep analytics trustworthy, audits clean, and pipelines resilient at scale. The most effective testing strategies combine several layers of validation.

Data completeness and accuracy tests

Completeness testing verifies that every record meant to be extracted actually makes it into staging and through to the final destination. Accuracy checks go further, comparing transformed values against the source to catch subtle shifts in meaning. In distributed architectures where pipelines span regions and vendors, these tests are often the only safeguard against silent data loss that would otherwise distort forecasting models and regulatory reports.
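
Here is a minimal reconciliation sketch in Python that makes the distinction between completeness and accuracy concrete. It assumes that both the source extract and the loaded target can be read as lists of dictionaries sharing a unique business key. The function names and the `id`/`amount` columns are hypothetical. Completeness is measured as keys dropped or added between the two sides. Accuracy is measured as column values that changed for keys present on both sides.

```python
from typing import Any, Dict, Iterable, List

Row = Dict[str, Any]


def index_by_key(rows: Iterable[Row], key: str) -> Dict[Any, Row]:
    """Index rows by a business key (assumes the key is unique on each side)."""
    return {row[key]: row for row in rows}


def reconcile(source: List[Row], target: List[Row], key: str,
              compare_cols: List[str]) -> Dict[str, Any]:
    """Compare a source extract with the loaded target table.

    Completeness: keys dropped on the way to the target, or keys that
    appear in the target without a matching source record.
    Accuracy: for keys present on both sides, columns whose values differ.
    """
    src = index_by_key(source, key)
    tgt = index_by_key(target, key)
    mismatches = [
        (k, col, src[k].get(col), tgt[k].get(col))
        for k in sorted(src.keys() & tgt.keys())
        for col in compare_cols
        if src[k].get(col) != tgt[k].get(col)
    ]
    return {
        "source_count": len(source),
        "target_count": len(target),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "value_mismatches": mismatches,
    }
```

In practice, the two sides would come from queries against the source system and the warehouse. Very large tables are often compared through per-partition counts or hashes rather than row by row.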

Transformation logic validation

Modern ETL processes apply thousands of mappings and business rules to raw data. A single logic error can cascade across dashboards, machine learning features, and reconciliations. Transformation testing isolates those steps, validating calculations, joins, and derived fields so executives don’t get blindsided by incorrect margins or compliance figures.
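
One common way to test a transformation is to recompute the derived field independently from its inputs and compare the result with what the pipeline loaded. The sketch below assumes a hypothetical gross-margin rule, (revenue - cost) / revenue, which is treated as undefined when revenue is zero. The rule and the column names are illustrative only.

```python
import math
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


def expected_margin(revenue: float, cost: float) -> Optional[float]:
    """Hypothetical reference rule: gross margin = (revenue - cost) / revenue.

    Returns None when revenue is zero, because the ratio is undefined.
    """
    if revenue == 0:
        return None
    return (revenue - cost) / revenue


def validate_margin(rows: List[Row], tolerance: float = 1e-9) -> List[Row]:
    """Return loaded rows whose 'gross_margin' disagrees with the reference rule."""
    failures = []
    for row in rows:
        expected = expected_margin(row["revenue"], row["cost"])
        actual = row.get("gross_margin")
        if expected is None or actual is None:
            # Both sides must agree that the value is undefined.
            ok = expected is None and actual is None
        else:
            ok = math.isclose(actual, expected, rel_tol=0.0, abs_tol=tolerance)
        if not ok:
            failures.append(row)
    return failures
```

Because the check encodes the business rule a second time, it can catch errors such as a swapped operand or a join that silently changes `cost`. It only adds assurance if the reference rule is written independently of the pipeline code.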

Data integrity and referential checks

Mergers, acquisitions, and multi-source data platforms make integrity testing essential. These tests confirm that relationships between tables remain intact, primary keys stay unique, and no orphaned or duplicated records creep in during processing. Without them, financial reconciliations, supply chain reports, and risk models lose the reliability boards depend on.
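
Primary-key uniqueness and orphan detection can each be expressed as a small function. This sketch assumes in-memory rows with hypothetical `customer_id` and `order_id` columns. In a warehouse, the same checks are usually written as a `GROUP BY ... HAVING COUNT(*) > 1` query and an anti-join.

```python
from collections import Counter
from typing import Any, Dict, List

Row = Dict[str, Any]


def duplicate_keys(rows: List[Row], pk: str) -> List[Any]:
    """Primary-key values that appear more than once."""
    counts = Counter(row[pk] for row in rows)
    return sorted(k for k, n in counts.items() if n > 1)


def orphaned_rows(child: List[Row], fk: str,
                  parent: List[Row], pk: str) -> List[Row]:
    """Child rows whose foreign key has no matching parent key.

    Null foreign keys are allowed here; tighten this if the relationship
    is mandatory.
    """
    parent_keys = {row[pk] for row in parent}
    return [row for row in child
            if row.get(fk) is not None and row[fk] not in parent_keys]
```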

Performance and load testing

Pipelines that work in a lab environment often fail under real production loads. Performance tests stress the system with enterprise-scale data volumes to ensure transformations, joins, and bulk loads meet SLAs. This is critical for leaders promising timely insights to regulators, auditors, or operational teams relying on near-real-time analytics.
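
Real performance tests run against production-scale volumes, but the pass/fail logic is simple. The test times the load, derives throughput, and compares the elapsed time with the SLA budget. In this sketch, `load_batch` is a placeholder for the pipeline's own bulk-load routine. The clock is injectable so the check can be exercised deterministically.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class LoadResult:
    elapsed_seconds: float
    rows_per_second: float
    within_sla: bool


def check_load_sla(load_batch: Callable[[List[dict]], None],
                   rows: List[dict],
                   max_seconds: float,
                   clock: Callable[[], float] = time.perf_counter) -> LoadResult:
    """Time one load of `rows` and compare the elapsed time with an SLA budget.

    `load_batch` is a hypothetical stand-in for the pipeline's bulk-load step.
    `clock` defaults to a monotonic high-resolution timer but can be replaced
    with a fake in tests.
    """
    start = clock()
    load_batch(rows)
    elapsed = clock() - start
    throughput = len(rows) / elapsed if elapsed > 0 else float("inf")
    return LoadResult(elapsed, throughput, elapsed <= max_seconds)
```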

Regression and automation testing

Data platforms evolve constantly—schema updates, vendor integrations, and rule changes happen weekly. Automated regression suites continuously validate that new releases don’t break established pipelines or reporting logic. Without these guardrails, seemingly minor changes can ripple through analytics environments, undermining trust in strategic dashboards and investor reporting.
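
A lightweight form of regression testing compares key report metrics from a new release with a baseline captured from the last known-good run. The sketch assumes that metrics are available as a name-to-number mapping and that a relative tolerance is acceptable. The metric names are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Change = Tuple[Optional[float], Optional[float]]


def diff_against_baseline(current: Dict[str, float],
                          baseline: Dict[str, float],
                          rel_tol: float = 1e-6) -> Dict[str, Change]:
    """Compare report metrics from a new release with a known-good baseline.

    Returns {metric: (baseline_value, current_value)} for every metric that
    moved beyond the relative tolerance, or that was added or removed.
    """
    changes: Dict[str, Change] = {}
    for name in sorted(baseline.keys() | current.keys()):
        old, new = baseline.get(name), current.get(name)
        if old is None or new is None or not math.isclose(old, new, rel_tol=rel_tol):
            changes[name] = (old, new)
    return changes
```

Running this check in the release pipeline turns an unexplained shift in a metric such as `order_count` into a reviewable diff before it reaches a dashboard.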

ETL Testing Tools and Platforms in 2025

Modern ETL testing platforms have moved far beyond manual scripts and row counts. Enterprises now rely on automated suites that integrate directly into data pipelines, enabling continuous validation and monitoring at scale.

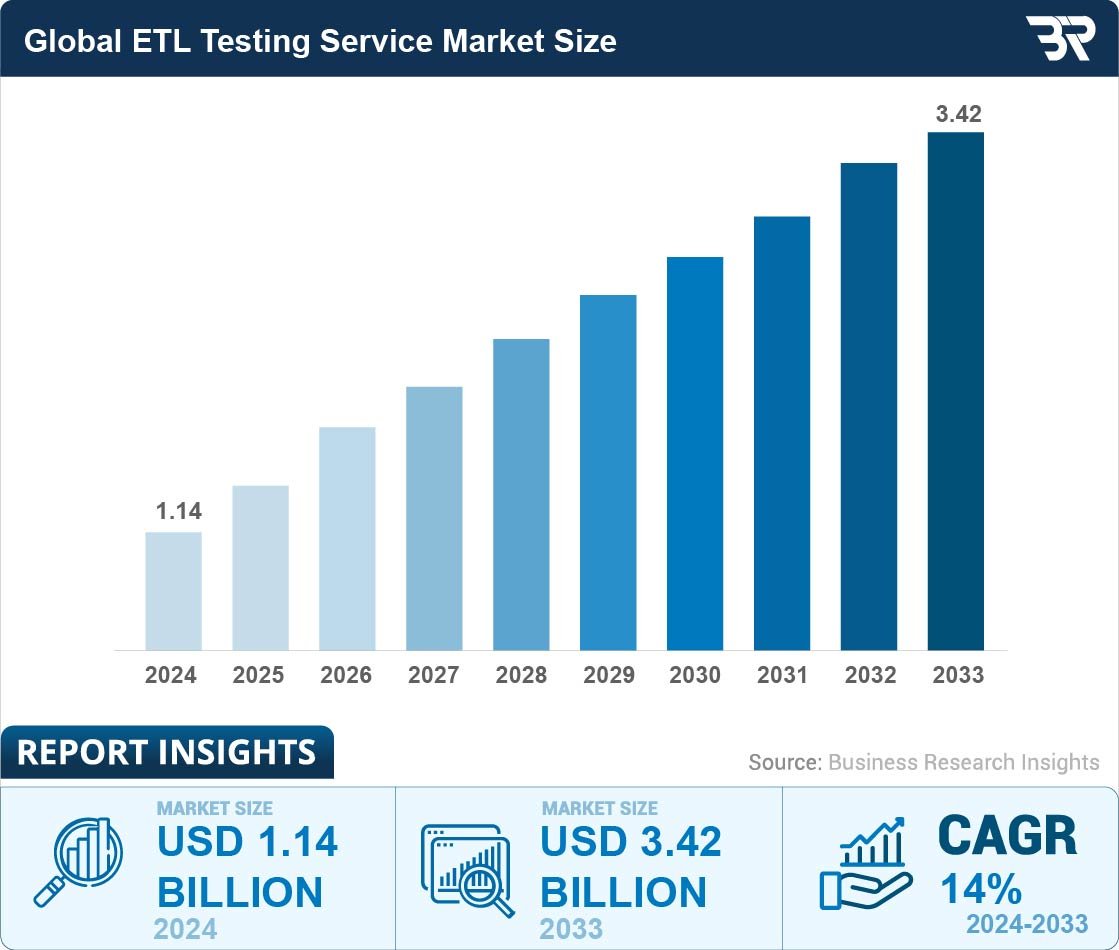

In fact, the global ETL testing service market was valued at approximately $1.14 billion in 2024 and is expected to reach $3.42 billion by 2033, with a CAGR of about 14 per cent.
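
The quoted growth rate can be sanity-checked with the standard CAGR formula. Here, $V$ is market value and $n$ is the number of compounding years. The figure above does not say which year the forecast window starts, so both readings are shown:

$$
\mathrm{CAGR} = \left(\frac{V_{2033}}{V_{2024}}\right)^{1/n} - 1 = \left(\frac{3.42}{1.14}\right)^{1/n} - 1 = 3^{1/n} - 1
$$

$$
n = 8:\quad 3^{1/8} - 1 \approx 0.147, \qquad n = 9:\quad 3^{1/9} - 1 \approx 0.130
$$

Compounding the 2024 value over the eight years of a 2025 to 2033 forecast window gives roughly 14.7 per cent, which is consistent with the figure quoted. Compounding over the full nine years from 2024 gives closer to 13 per cent.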

The most effective tools combine traditional test coverage with capabilities designed for hybrid cloud architectures and complex data governance requirements.

Automation and AI-driven testing

Automation reduces the heavy lift of creating and maintaining test cases for thousands of data flows. Leading platforms use machine learning to detect anomalies, generate test scenarios, and flag schema drift automatically. This can cut the time to identify and resolve issues from weeks to hours, keeping transformation initiatives on schedule and within budget.
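
Vendors implement schema drift detection in different ways, some of them using machine learning. The rule-based comparison below is a simpler stand-in and does not reproduce any specific product's method. It checks an observed column-to-type mapping against an expected contract. The column names and type labels are hypothetical.

```python
from typing import Dict, List


def detect_schema_drift(expected: Dict[str, str],
                        observed: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare an expected column->type contract with the observed schema."""
    added = sorted(observed.keys() - expected.keys())
    removed = sorted(expected.keys() - observed.keys())
    type_changed = sorted(col for col in expected.keys() & observed.keys()
                          if expected[col] != observed[col])
    return {"added": added, "removed": removed, "type_changed": type_changed}
```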

Data observability and end-to-end lineage

Testing is only part of the equation. Data observability platforms extend visibility across pipelines, mapping lineage from source to consumption. They alert teams when data freshness, volume, or distribution patterns deviate from baselines, enabling proactive fixes before decision-makers see flawed dashboards.
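
Freshness and volume baselines can be approximated with basic statistics. This sketch flags a table as stale when its last load is older than an allowed lag. It also flags a row count whose z-score against recent history exceeds a threshold. The threshold of 3 and the sample history are assumptions. Observability platforms often use richer models that account for trends and seasonality.

```python
import statistics
from datetime import datetime, timedelta
from typing import Sequence


def is_stale(last_loaded_at: datetime, now: datetime, max_lag: timedelta) -> bool:
    """Freshness: True if the table has gone longer than max_lag without a load."""
    return now - last_loaded_at > max_lag


def is_volume_anomaly(history: Sequence[int], today: int,
                      z_threshold: float = 3.0) -> bool:
    """Volume: True if today's row count lies more than z_threshold sample
    standard deviations from the historical mean.

    A simple statistical baseline that ignores trends and seasonality.
    Requires at least two historical values.
    """
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # Perfectly flat history: any change at all is unusual.
        return today != mean
    return abs(today - mean) / stdev > z_threshold
```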

Schema validation and governance integration

With regulatory and internal compliance pressures rising, schema validation has become a critical feature. Advanced ETL testing tools automatically validate against governance policies, ensuring sensitive data remains properly classified and audit-ready. Integration with data catalogues and security controls helps enterprises demonstrate compliance without slowing operations.
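
Governance-aware schema validation can start with a simple check: every column landing in a zone must carry a catalogue classification that is permitted there. The zone names, the classification labels, and the `ALLOWED_BY_ZONE` mapping below are hypothetical placeholders for an organisation's own policy and catalogue.

```python
from typing import Dict, List, Set

# Hypothetical policy: which classification labels may land in each zone.
ALLOWED_BY_ZONE: Dict[str, Set[str]] = {
    "analytics": {"public", "internal"},
    "restricted": {"public", "internal", "confidential", "pii"},
}


def governance_violations(columns: List[str],
                          classifications: Dict[str, str],
                          zone: str) -> List[str]:
    """List policy problems for a table's columns landing in the given zone."""
    allowed = ALLOWED_BY_ZONE[zone]
    problems = []
    for col in columns:
        label = classifications.get(col)
        if label is None:
            problems.append(f"{col}: no classification in catalogue")
        elif label not in allowed:
            problems.append(f"{col}: '{label}' not permitted in zone '{zone}'")
    return problems
```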

Tooling landscape

Widely used options include QuerySurge, Informatica, Talend, dbt (often paired with Great Expectations), and cloud-native capabilities built into platforms such as Snowflake and Databricks. These tools offer varying levels of automation, observability, and governance support, giving data leaders options that align with their architectural strategy and regulatory posture.

Integrating ETL Testing into Enterprise Data Governance and Security

For large organisations, data governance and security frameworks often stand apart from pipeline testing. That separation leaves blind spots. Data may be validated for accuracy but not for policy compliance. Security teams may harden access controls while transformation logic introduces unmonitored risk.

Embedding testing into governance controls

Effective governance depends on knowing where data came from, how it was transformed, and whether it meets organisational policies. ETL testing provides that assurance by validating lineage and enforcing schema rules as data moves through the pipeline. When testing is tied to governance workflows, audit trails become more reliable, making regulatory reviews faster and less disruptive.

Strengthening security posture

Data breaches and insider threats often exploit weak points in integration layers. Testing doesn’t replace encryption or access control, but it can surface vulnerabilities in transformation scripts, staging areas, or load routines that create unauthorised exposure. Automated validation also helps security teams ensure that masking and tokenisation policies are consistently applied, even as pipelines evolve.
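
Checking that masking was applied can be as simple as matching sensitive columns against the expected masked format. The patterns below assume a hypothetical convention: emails are reduced to `****@domain`, and card numbers are reduced to twelve asterisks plus the last four digits. Substitute the organisation's actual masking or tokenisation rules.

```python
import re
from typing import List, Tuple

# Hypothetical masking convention (replace with the organisation's policy):
#   email       -> one or more '*', then '@' and the original domain
#   card_number -> twelve '*' followed by the last four digits
MASK_PATTERNS = {
    "email": re.compile(r"\*+@[\w.-]+"),
    "card_number": re.compile(r"\*{12}\d{4}"),
}


def unmasked_values(rows: List[dict]) -> List[Tuple[int, str]]:
    """Return (row_index, column) pairs where a sensitive value is not masked."""
    failures = []
    for i, row in enumerate(rows):
        for col, pattern in MASK_PATTERNS.items():
            value = row.get(col)
            # fullmatch: the entire value must fit the masked format.
            if value is not None and not pattern.fullmatch(str(value)):
                failures.append((i, col))
    return failures
```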

Unified visibility for compliance and risk management

Linking ETL testing with governance and security tools gives enterprises a unified view of risk across their data estate. Instead of separate reports for pipeline health, compliance checks, and security incidents, leaders see how these factors intersect. This joined-up approach makes it easier to demonstrate compliance, respond to regulatory enquiries, and assure stakeholders that critical business decisions are based on trusted, protected data.

Common Mistakes and Pitfalls to Avoid

ETL testing often fails not because teams lack tools or expertise, but because of gaps in approach. Large organisations face recurring challenges that quietly undermine data reliability.

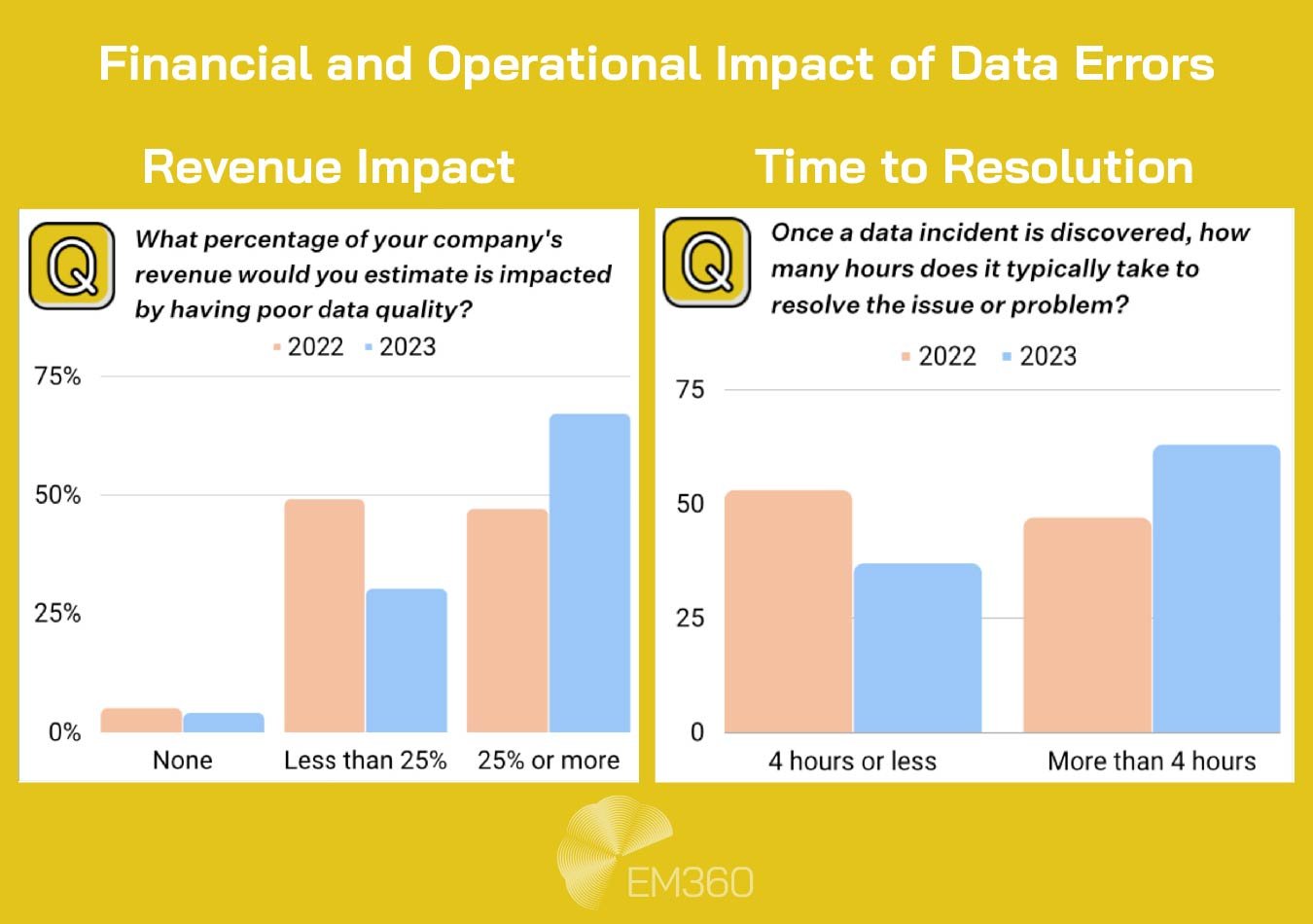

According to industry research, 68 per cent of respondents reported that detecting a data incident now takes four hours or more, and the average time to resolve one has risen to 15 hours per incident — a 166 per cent increase over the previous year. Those delays leave leadership teams making decisions long before root causes are found and fixed.

Treating testing as a one-off exercise

Many enterprises run tests during initial pipeline development and assume that’s enough. In reality, pipelines evolve constantly. Without ongoing validation, small schema changes, new data sources, or vendor updates can introduce errors that escape notice until they affect reporting.

Over-reliance on manual checks

Manual data comparisons can work in controlled environments but rarely scale. As pipelines grow, relying on ad hoc scripts or human review leaves too much room for inconsistency and missed issues. Automated testing frameworks are essential for maintaining coverage and speed.

Incomplete test coverage

Testing that focuses only on final outputs ignores where most errors occur. Issues often surface mid-pipeline—during transformation logic or intermediate staging. Skipping these layers means critical errors pass through unnoticed.

Isolating testing from governance and security

ETL testing is sometimes treated as a developer or QA task, disconnected from broader data governance and security policies. This leads to gaps in compliance checks, weak audit trails, and vulnerabilities in handling sensitive information.

Lack of observability and root cause analysis

Even with automation, some enterprises stop at pass/fail results without diagnosing why issues occur. Without observability and lineage tracking, teams waste time chasing symptoms instead of fixing the underlying cause.

Best Practices for Reliable, Scalable ETL Testing

Enterprises that manage sprawling data estates know ETL testing isn’t a box-ticking exercise. It’s how they keep analytics aligned with regulatory expectations, operating targets, and board-level decisions. Building that kind of assurance requires more than tools—it demands practices designed for scale, governance, and change.

Align testing strategy with business risk

Not every data flow needs the same scrutiny. Critical pipelines feeding regulatory reports or executive dashboards should have deeper validation than low-impact data marts. Testing strategies should mirror enterprise risk models, ensuring effort is spent where failure has the highest cost.

Integrate testing into release management

Treat data pipelines like any other software system. Continuous integration and deployment practices bring automated regression testing into the release cycle, preventing schema changes, vendor upgrades, or mapping updates from breaking established data flows. This reduces rework and avoids late-stage surprises before critical reporting deadlines.
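
A minimal quality gate for a CI pipeline runs each registered check and converts the results into an exit code. CI systems generally fail a step when it returns a non-zero exit status. Each check is assumed to return a list of failure messages, which is empty on success. The check names are hypothetical.

```python
from typing import Callable, Dict, List

# Each check returns a list of failure messages; an empty list means it passed.
Check = Callable[[], List[str]]


def run_quality_gate(checks: Dict[str, Check]) -> int:
    """Run every check, print a summary, and return a process exit code.

    Returns 0 when all checks pass and 1 when any check fails.
    """
    failed = 0
    for name, check in checks.items():
        failures = check()
        print(f"[{'FAIL' if failures else 'PASS'}] {name}")
        for message in failures:
            print(f"    - {message}")
        if failures:
            failed += 1
    return 1 if failed else 0
```

A CI job could end with `sys.exit(run_quality_gate(checks))`. A failing data check then blocks the release in the same way a failing unit test would.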

Embed governance policies into validation layers

Testing for technical accuracy alone isn’t enough. Enterprises need to know pipelines enforce data classification rules, preserve lineage, and comply with retention policies. Advanced testing frameworks now embed governance rules directly into test cases, giving auditors and compliance teams evidence of control without slowing delivery.
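
As an example of a governance rule expressed as a test, the sketch below flags records held longer than their retention period allows. The record types and retention limits are hypothetical. Record types without a rule are skipped here; a stricter version might flag them instead.

```python
from datetime import date, timedelta
from typing import Dict, List

# Hypothetical retention rules: record type -> maximum age in days.
RETENTION_DAYS: Dict[str, int] = {"web_session": 90, "invoice": 365 * 7}


def retention_breaches(rows: List[dict], today: date) -> List[dict]:
    """Rows kept longer than their record type's retention period allows."""
    breaches = []
    for row in rows:
        limit = RETENTION_DAYS.get(row["record_type"])
        if limit is not None and today - row["created_on"] > timedelta(days=limit):
            breaches.append(row)
    return breaches
```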

Move from monitoring to observability

Traditional pipeline monitoring checks for job completion or runtime errors. Observability tracks data health holistically—freshness, drift, and schema evolution—and uses anomaly detection to flag issues before they impact analytics. This shifts ETL testing from a reactive safety net to a proactive reliability mechanism.

Scale testing with reusable frameworks

Global organisations often manage hundreds of transformations across hybrid cloud environments. Maintaining siloed tests for each one quickly becomes unmanageable. Parameterised and reusable test frameworks allow teams to validate multiple pipelines with consistent logic, making it feasible to sustain testing coverage as data estates grow.
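
A parameterised framework can be as small as a registry of reusable check templates and a configuration that maps checks to tables. Everything below is a hypothetical sketch, including the check names, the table names, and the `SUITE` layout. Tools such as dbt (generic tests) and Great Expectations (expectation suites) provide fuller versions of the same idea.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]


def not_null(rows: List[Row], column: str) -> List[str]:
    """Template: the column must never be null."""
    return [f"row {i}: {column} is null"
            for i, r in enumerate(rows) if r.get(column) is None]


def unique(rows: List[Row], column: str) -> List[str]:
    """Template: the column must not repeat values."""
    seen, dupes = set(), []
    for r in rows:
        v = r.get(column)
        if v in seen:
            dupes.append(f"duplicate {column}={v!r}")
        seen.add(v)
    return dupes


# Registry of reusable templates, looked up by name from configuration.
CHECKS: Dict[str, Callable[..., List[str]]] = {"not_null": not_null, "unique": unique}

# Hypothetical configuration: which checks (and parameters) apply to which table.
SUITE: Dict[str, List[dict]] = {
    "orders": [{"check": "not_null", "column": "order_id"},
               {"check": "unique", "column": "order_id"}],
    "customers": [{"check": "not_null", "column": "email"}],
}


def run_suite(tables: Dict[str, List[Row]],
              suite: Dict[str, List[dict]]) -> Dict[str, List[str]]:
    """Apply each configured check to its table and collect failure messages."""
    results: Dict[str, List[str]] = {}
    for table, specs in suite.items():
        failures: List[str] = []
        for spec in specs:
            params = {k: v for k, v in spec.items() if k != "check"}
            failures += CHECKS[spec["check"]](tables[table], **params)
        results[table] = failures
    return results
```

Adding a new pipeline then means adding configuration entries rather than writing new test code. This is what keeps coverage consistent as the number of transformations grows.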

Final Thoughts: ETL Testing as a Strategic Control

Enterprises don’t lose trust in their data all at once. It erodes gradually as errors slip through pipelines, unnoticed until they change the decisions that matter most.

ETL testing closes that gap. It’s more than a quality check—it’s a control mechanism that keeps data reliable, audits defensible, and strategic insight grounded in fact. Organisations that embed this discipline in their data operations build resilience. They can meet regulatory scrutiny, move faster with analytics, and trust the information guiding boardroom decisions.

For leaders looking to strengthen data strategy and governance, EM360Tech offers more expert insights and frameworks to help navigate the next stage of transformation.
