Fragmented data. Siloed systems. Too many tools trying to do the same job, and none of them talking to each other properly. For enterprise teams under pressure to deliver faster insights with fewer missteps, these aren’t just technical growing pains. They’re architectural warning signs.

The problem isn’t a lack of data. It’s a lack of structured, reliable flow. Data that’s collected but not trusted. Platforms that ingest but don’t integrate. Teams that operate in parallel, each solving for their own slice of the stack. The result is slower decisions, shadow infrastructure, and missed opportunities in a world that no longer tolerates lag.

This is where data pipelines matter. Not as backend connectors or coded jobs buried in someone’s inbox, but as the foundation of modern enterprise infrastructure. A study by Wakefield Research and Fivetran found that data teams spent 44 per cent of their time just building and maintaining pipelines, at an average cost of US$520,000 per year in 2022.

But when they're built correctly, pipelines don’t just move data. They're the tools that enforce trust, support compliance, and make scaling with your business possible. In other words, they turn operational chaos into systems that deliver insight with intention.

And with AI acceleration, cloud sprawl, and governance mandates now converging, the architecture behind your data flow has never been more critical.

What Is a Data Pipeline, and Why Does It Matter?

A data pipeline is the system that moves data from where it’s created to where it’s used. Not just a connector and not just a script. It’s an orchestrated sequence of processes that capture, shape, validate, and deliver data across the business in a way that’s repeatable, reliable, and ready for action.

In modern enterprise environments, pipelines span far more than point-to-point transfers. They form the operational backbone that links ingestion sources, transformation logic, compliance checks, and delivery endpoints into a single flow. One that scales. One that survives failure. One that keeps up with demand.

What makes a pipeline different from a task or a tool is the architecture behind it. You’re not just collecting data. You’re designing how it moves, how it changes, and how it gets trusted along the way. And when that architecture is built into the enterprise data system itself, the impact shows up everywhere, from AI readiness to regulatory reporting.

This is what separates manual ETL jobs from pipeline architecture. A data pipeline is more than a method. It’s an infrastructure decision — one that defines how fast your insights arrive, how clean your data stays, and how confidently your teams can act on it.

Data pipelines are more than ETL workflows

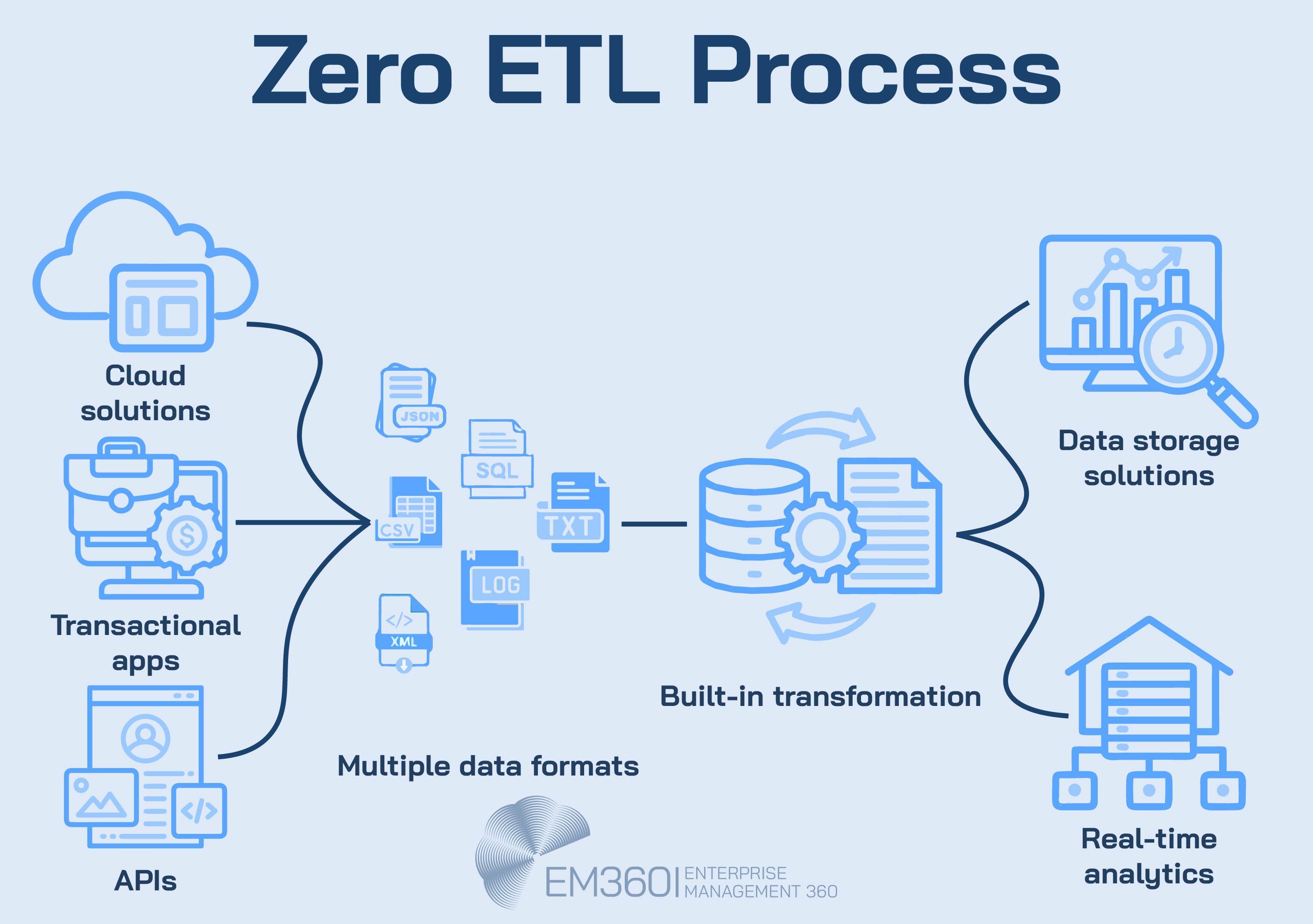

It’s easy to assume that a data pipeline is just another name for ETL. But while ETL is a method for processing data, a pipeline is the architecture that governs how that data flows, transforms, and activates across the enterprise.

ETL — extract, transform, load — is one possible pattern. So is ELT. So is reverse ETL, where data is pushed back out of the warehouse and into operational systems. These are valuable processes, but they operate at the workflow level. A pipeline is something else. It’s the system that sits above them, controlling how those workflows are sequenced, triggered, observed, and scaled.

This is what separates a pipeline from a process. A pipeline accounts for orchestration, dependency management, monitoring, and recovery logic. It spans environments and connects tools. It turns individual data tasks into a composable, traceable system that can evolve without collapsing under its own weight.

That distinction is especially important in enterprise environments where scaling means more than adding compute. It means creating systems that are predictable under pressure, adaptable across teams, and architected for visibility. Whether you're relying on an enterprise-ready ETL pipeline or choosing the right integration pattern for a given workload, the goal is the same: build a pipeline that delivers data you can act on — without compromising control.

Key Components of a Modern Data Pipeline

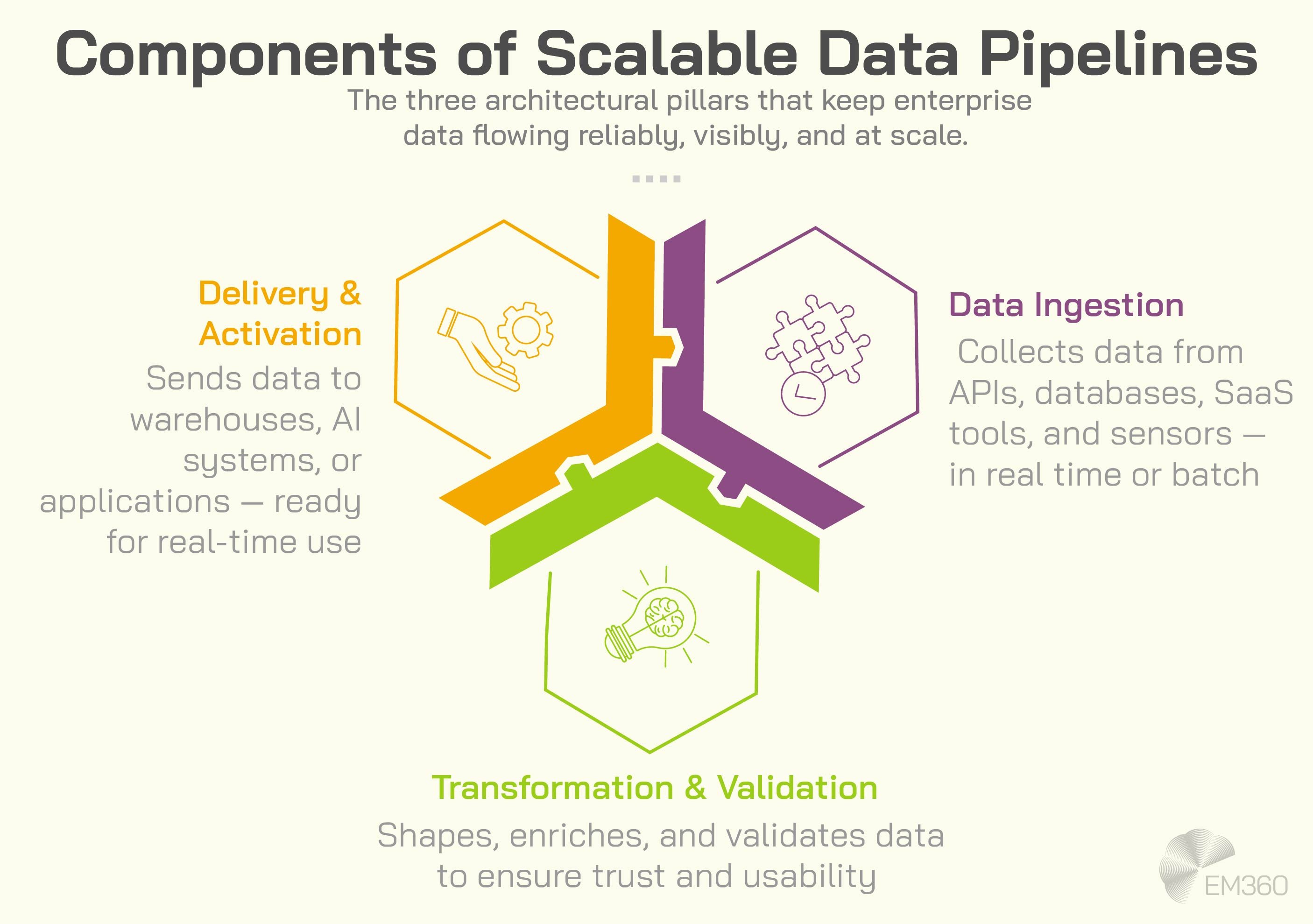

A data pipeline is only as effective as the architecture behind it. That architecture is built from modular components that each serve a distinct purpose — ingesting data from multiple sources, shaping it to meet business logic, and delivering it to systems that put it to work.

What matters most is how those components interact. Pipelines that treat ingestion, transformation, and delivery as disconnected tasks are harder to monitor, scale, and trust. But when these layers are aligned, the result is a flow that’s fast, observable, and reliable under pressure.

Data ingestion

Ingestion is the first link in the pipeline — and often the most unpredictable. Data can come from anywhere: APIs, transactional systems, flat files, mobile apps, industrial sensors, or third-party platforms. Some of it arrives in batches. Some of it arrives in real time. Some of it shows up as structured tables. Some of it doesn’t.

A robust ingestion layer doesn’t just collect data. It handles variability. That means supporting multiple formats, applying source connectors that understand the systems upstream, and allowing for event-based ingestion where data is captured as it’s created. It also means building in the logic that decides what gets pulled, when, and why so you’re not just moving noise.
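As a rough illustration of handling variability at ingestion, the sketch below reads two formats through a shared interface and keeps only the fields the pipeline has asked for. The `READERS` registry, the `ingest` function, and the field names are hypothetical and exist only for this example; a real ingestion layer would sit behind source connectors rather than in-memory strings.

```python
import csv
import io
import json
from typing import Iterator


def read_csv(text: str) -> Iterator[dict]:
    """Yield one dict per CSV row, keyed by the header line."""
    yield from csv.DictReader(io.StringIO(text))


def read_json_lines(text: str) -> Iterator[dict]:
    """Yield one dict per non-empty line of newline-delimited JSON."""
    for line in text.splitlines():
        if line.strip():
            yield json.loads(line)


# Hypothetical registry mapping a format name to its reader.
READERS = {"csv": read_csv, "jsonl": read_json_lines}


def ingest(fmt: str, payload: str, wanted: set[str]) -> list[dict]:
    """Read a payload and keep only the requested fields, dropping the rest as noise."""
    reader = READERS[fmt]
    return [{k: v for k, v in row.items() if k in wanted} for row in reader(payload)]


csv_rows = ingest("csv", "id,name,debug\n1,Ada,x\n", {"id", "name"})
json_rows = ingest("jsonl", '{"id": 2, "name": "Grace", "debug": "y"}\n', {"id", "name"})
```

Note that the CSV reader returns every value as a string while the JSON reader preserves types. Differences like this are exactly what the later transformation stage has to reconcile.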

Transformation and validation

Once ingested, data needs to be shaped into something usable. This isn’t about repeating ETL logic. It’s about applying the transformations that enforce trust, usability, and governance before that data reaches its destination.

That includes schema standardisation, deduplication, enrichment, and validation. It includes metadata tagging to track origin and logic. And in regulated environments, it means applying transformation rules that ensure the output is auditable, compliant, and contextually complete.
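To make those steps concrete, here is a minimal sketch that standardises keys, validates each row, removes duplicates, and tags the survivors with their origin. The field names (`customer_id`, `email`), the validation rules, and the `_source` and `_processed_at` tags are assumptions chosen for illustration, not a prescribed standard.

```python
from datetime import datetime, timezone


def standardise(row: dict) -> dict:
    """Lower-case keys and trim string values so later steps see one shape."""
    return {k.strip().lower(): v.strip() if isinstance(v, str) else v for k, v in row.items()}


def validate(row: dict) -> list[str]:
    """Return a list of rule violations; an empty list means the row passes."""
    errors = []
    if not row.get("customer_id"):
        errors.append("missing customer_id")
    if "@" not in str(row.get("email", "")):
        errors.append("invalid email")
    return errors


def transform(rows: list[dict], source: str) -> tuple[list[dict], list[dict]]:
    """Standardise, validate, deduplicate on customer_id, and tag rows with their origin."""
    seen, good, rejected = set(), [], []
    for raw in rows:
        row = standardise(raw)
        errors = validate(row)
        if errors:
            rejected.append({"row": row, "errors": errors})
            continue
        if row["customer_id"] in seen:
            continue  # duplicate of a row already accepted
        seen.add(row["customer_id"])
        row["_source"] = source
        row["_processed_at"] = datetime.now(timezone.utc).isoformat()
        good.append(row)
    return good, rejected


good, rejected = transform(
    [
        {"Customer_ID": "42", "Email": " ada@example.com "},
        {"customer_id": "42", "email": "ada@example.com"},
        {"customer_id": "", "email": "not-an-email"},
    ],
    source="crm_export",
)
```

Rejected rows are kept alongside the reasons they failed rather than silently dropped, which is one simple way to keep the output auditable.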

The transformation layer isn’t just where data changes. It’s where it earns trust. Which is why transformation logic needs to be modular, testable, and consistent across systems — not hidden inside one team’s pipeline.

Delivery and activation

The final component is where value gets realised. Delivery means pushing validated data to a destination system, but activation means making it useful once it arrives.

For some teams, that means loading into a warehouse or data lakehouse for analysis. For others, it means real-time delivery to automation platforms, AI pipelines, or customer-facing applications. It can also mean reverse ETL, where insights are pushed back into operational systems like CRMs or finance tools to support action at the edge.

A modern pipeline doesn’t just deliver data into storage. It activates it in the context that matters. That’s what turns a pipeline into an asset — not just a process.

The Role of Data Pipelines in Hybrid and Distributed Architectures

Enterprise data doesn’t live in one place anymore. It flows across SaaS platforms, legacy databases, cloud warehouses, regional data centres, and mobile or edge environments. Each one with its own protocols, latency requirements, and governance constraints. And yet, the business expects the data to act like it’s coming from a single system.

This is where pipeline architecture moves from helpful to essential. The more distributed the environment, the more critical the pipeline becomes — not just for performance, but for trust, auditability, and control.

Hybrid environments: Bridging legacy and modern systems

Hybrid architectures often include a mix of cloud-native platforms and deeply embedded on-prem systems that can’t be retired overnight. In these environments, pipelines act as the connective tissue that allows old and new systems to work in parallel.

They ingest data from mainframes, ERP systems, or legacy databases. They transform that data into formats compatible with cloud tools and analytics platforms. And they ensure that updates made in modern systems don’t leave the legacy layer behind. For many infrastructure leaders, this is the difference between delivering value now or waiting years for a full migration.

A hybrid-ready pipeline doesn’t just move data between systems. It synchronises them — with validation, monitoring, and fallback logic that keep data usable across both worlds.

Cloud and multi-cloud: Standardising across fragmented systems

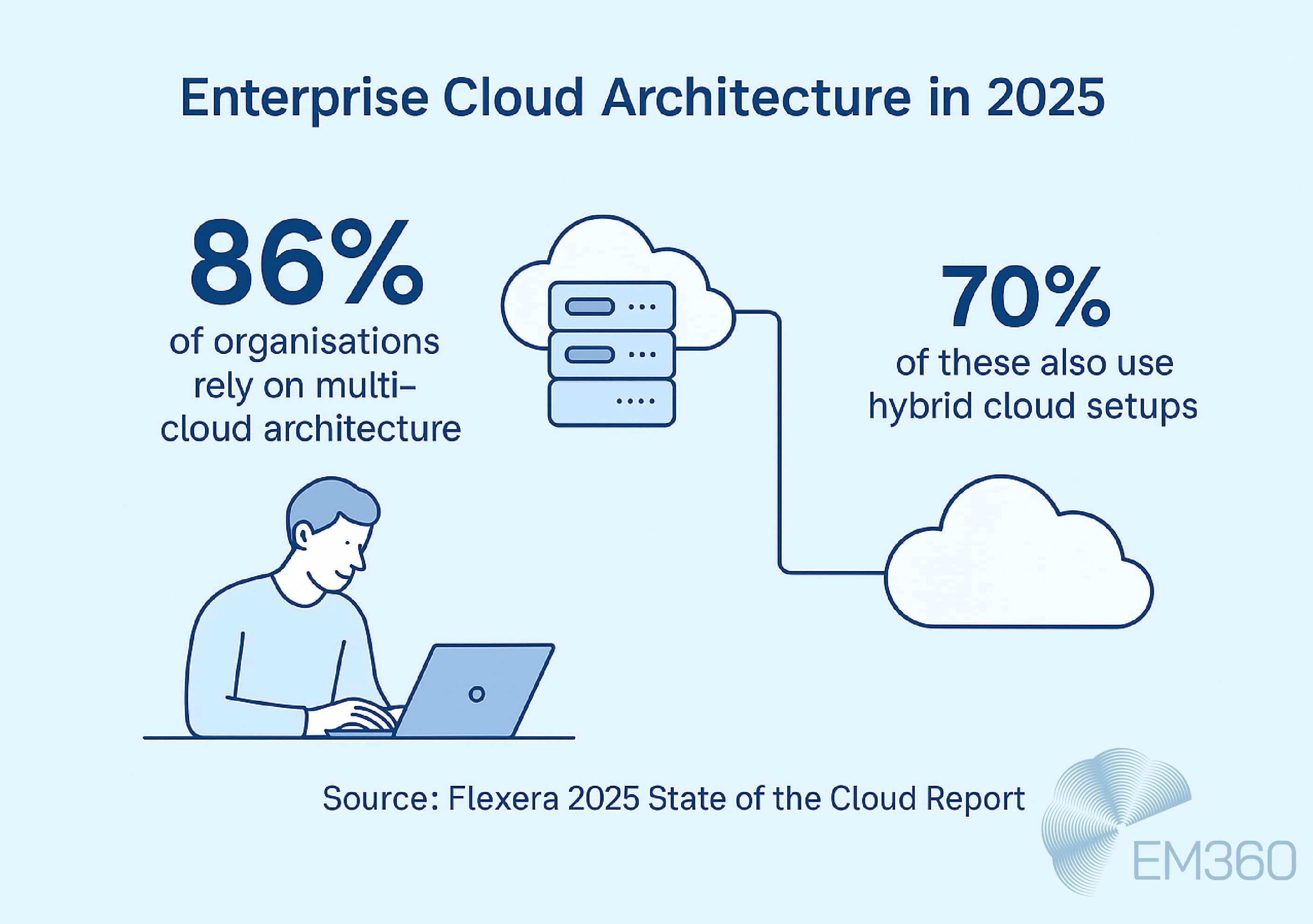

According to Flexera’s 2025 State of the Cloud Report, 86 per cent of organisations now operate in a multi-cloud environment, with 70 per cent also running hybrid cloud infrastructure. In a multi-cloud setup, different business units or regions may operate in different environments entirely. One might use AWS. Another might run on Azure. A third might be moving into Snowflake or Databricks. And all of them need to exchange data without disrupting operations or breaking compliance.

Pipelines in these environments do more than transfer files. They translate behaviours between clouds. That means aligning formats, coordinating access policies, and managing sync intervals across platforms that weren’t designed to work together. It also means handling regional regulations and data sovereignty rules without slowing down insight delivery.

For data teams, this becomes a question of governance. For infrastructure leads, it’s a matter of uptime and cost control. Either way, pipelines are the systems that make multi-cloud usable — not just technically, but strategically.

Edge environments: Acting on data before it reaches the cloud

At the edge, data pipelines work under different constraints. Bandwidth is limited. Processing power is local. And latency tolerance is measured in milliseconds, not hours.

Pipelines in edge environments are designed to act early. They filter and transform data close to where it's generated — in retail locations, vehicles, factories, or field devices — so only the most relevant information is sent back to central systems. This reduces noise, lowers costs, and enables faster decision-making on the ground.

These pipelines must also be resilient. If a connection drops or a sensor fails, the pipeline can’t break. It needs to buffer, retry, or switch logic automatically. That level of adaptability only happens when observability and orchestration are built into the edge itself — not managed as a separate downstream function.
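One way to picture the filter, buffer, and retry behaviour described above is the sketch below. The `EdgeForwarder` class, its threshold, and the simulated `uplink` function are all hypothetical; a real edge agent would also persist its buffer and back off between attempts.

```python
from collections import deque


class EdgeForwarder:
    """Filter readings locally and buffer them while the uplink is down."""

    def __init__(self, send, threshold: float, max_buffer: int = 1000):
        self.send = send                        # callable that raises ConnectionError on failure
        self.threshold = threshold              # only readings at or above this are forwarded
        self.buffer = deque(maxlen=max_buffer)  # oldest entries are dropped first when full

    def handle(self, reading: float) -> None:
        if reading < self.threshold:
            return  # filtered at the edge, never sent upstream
        self.buffer.append(reading)
        self.flush()

    def flush(self) -> None:
        """Send buffered readings in order; stop at the first failure and keep the rest."""
        while self.buffer:
            try:
                self.send(self.buffer[0])
            except ConnectionError:
                return  # leave the reading buffered and retry on the next call
            self.buffer.popleft()


# Simulated uplink for the example; "up" toggles connectivity.
link = {"up": False}
delivered = []


def uplink(value: float) -> None:
    if not link["up"]:
        raise ConnectionError("uplink down")
    delivered.append(value)


fwd = EdgeForwarder(uplink, threshold=50.0)
for reading in (12.0, 75.5, 90.1):
    fwd.handle(reading)          # 12.0 is filtered; the others are buffered while offline
buffered_while_down = list(fwd.buffer)

link["up"] = True
fwd.flush()                      # connection restored; the buffer drains in order
```

A reading is only removed from the buffer after `send` succeeds, so a dropped connection delays delivery but does not lose data, up to the buffer limit.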

The Operational Core of Scalable Data Pipelines

What makes a pipeline enterprise-ready isn’t just how it moves data. It’s how it manages complexity under pressure. That includes coordinating dependencies, adapting to schema changes, and recovering from failure without manual intervention. These capabilities sit below the surface, but they determine whether a pipeline can scale — or whether it simply runs until something breaks.

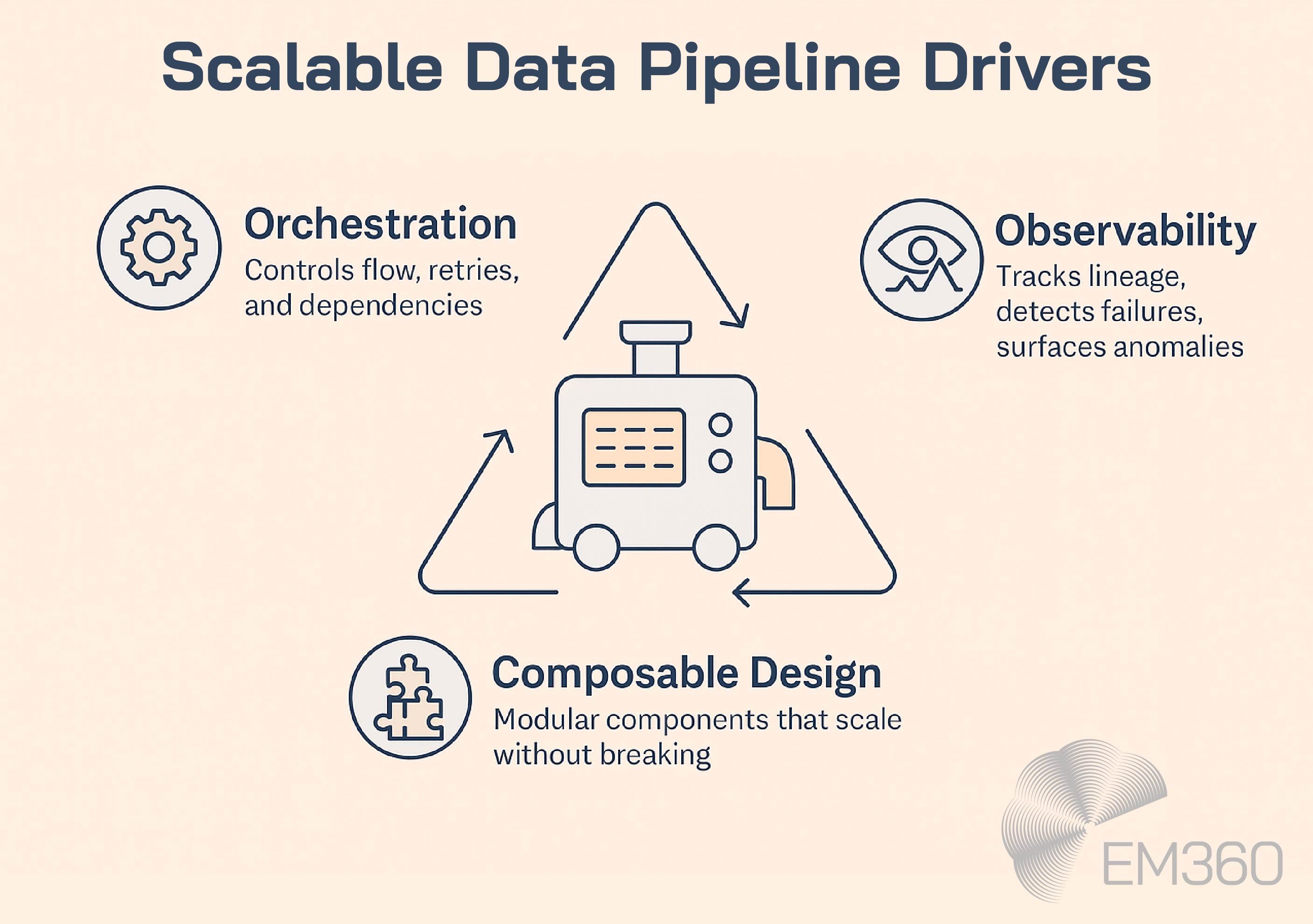

The strength of a pipeline comes from three architectural layers: orchestration, observability, and composability. Together, they form the operational core. Get these right, and your pipeline becomes a reliable system. Miss them, and you’re left with flows that pass validation but fail under real-world load.

Orchestration: Dependencies, retries, and flow control

In most enterprise environments, data doesn’t move in isolation. A single pipeline might wait for a job in another system, trigger logic based on output quality, or route data depending on downstream capacity. Managing that complexity requires more than manual scheduling or point-to-point scripting.

Modern orchestration tools like Airflow, Prefect, or Dagster use directed acyclic graphs (DAGs) to define the flow of logic. DAGs make it possible to control what needs to run, when, and under what conditions. They manage dependencies, coordinate retries, and respond to external signals — all without manual oversight.

When a job fails, the pipeline shouldn’t fail with it. It should retry, escalate, or reroute. That control is what separates a scheduled task from an orchestrated system. It’s also what keeps pipelines running smoothly when workloads spike or components degrade.
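The idea of dependency-ordered execution with retries can be sketched using only Python's standard library. This is not how Airflow, Prefect, or Dagster are implemented; `run_dag`, the task names, and the retry count are hypothetical, and the example runs tasks sequentially for clarity.

```python
from graphlib import TopologicalSorter


def run_dag(tasks: dict, deps: dict, max_retries: int = 2) -> list[str]:
    """Run callables in dependency order, retrying each failed task up to max_retries times."""
    completed = []
    for name in TopologicalSorter(deps).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception as exc:
                if attempt == max_retries:
                    # Retries exhausted: stop so downstream tasks never run on bad input.
                    raise RuntimeError(f"{name} failed after {attempt + 1} attempts") from exc
    return completed


attempts = {"transform": 0}


def flaky_transform() -> None:
    """Fail on the first call and succeed on the second, to simulate a transient error."""
    attempts["transform"] += 1
    if attempts["transform"] < 2:
        raise ValueError("transient failure")


tasks = {"extract": lambda: None, "transform": flaky_transform, "load": lambda: None}
# Each key lists the tasks that must finish before it can start.
deps = {"transform": {"extract"}, "load": {"transform"}}
order = run_dag(tasks, deps)
```

Because `load` depends on `transform`, it only runs once the retry has succeeded. If retries were exhausted, the run would stop with an error instead of loading incomplete data.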

Observability: Knowing when and why pipelines break

Failures are inevitable. What matters is whether you can see them before they cause damage. Observability isn’t just about logging. It’s about making pipelines inspectable across their entire lifecycle.

That means tracking data lineage from source to destination, surfacing schema changes in real time, and exposing metrics like run time, throughput, and error rates. It also means identifying anomalies before they become failures — whether that’s a sudden drop in row count or an unexpected shift in field distribution.

Tools like Monte Carlo and Databand, and open standards like OpenLineage, offer that visibility. But they can’t surface what isn’t instrumented. That’s why observability needs to be designed into the pipeline itself. Monitoring isn’t an afterthought. It’s a foundational layer.
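As a small example of catching an anomaly before it becomes a failure, the sketch below flags a run whose row count sits far outside recent history. The function name, the z-score threshold of 3, and the sample counts are assumptions for illustration; commercial observability tools use considerably richer models.

```python
from statistics import mean, stdev


def row_count_anomaly(history: list[int], latest: int, z_threshold: float = 3.0) -> bool:
    """Flag the latest run if its row count is more than z_threshold standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


history = [10_050, 9_980, 10_120, 10_010, 9_940]   # row counts from recent runs
sudden_drop = row_count_anomaly(history, 4_200)    # far below the usual range
normal_run = row_count_anomaly(history, 10_000)    # within normal variation
```

The same pattern applies to null rates or field distributions: record a baseline on every run, then compare each new run against it.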

Composable design: Building scalable, modular pipelines

If you can't modify a pipeline without breaking it, it doesn't scale. When a schema change upstream breaks a transformation, or when a single pipeline contains too many tightly coupled jobs, the risk isn’t failure. It’s fragility.

Composable pipelines solve for this by breaking workflows into modular, reusable components. Each step — from ingestion to validation to delivery — can be versioned, tested, and reused independently. This allows teams to scale pipelines across business units without duplicating logic or introducing hard-coded dependencies.
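A minimal sketch of that modularity, assuming each step is a plain function from rows to rows. The `compose`, `drop_nulls`, and `rename` helpers and the field names are hypothetical, written only to show how small steps can be tested on their own and then chained.

```python
from functools import reduce
from typing import Callable, Iterable

Step = Callable[[Iterable[dict]], Iterable[dict]]


def compose(*steps: Step) -> Step:
    """Chain independent steps into one pipeline, applied left to right."""
    return lambda rows: reduce(lambda acc, step: step(acc), steps, rows)


def drop_nulls(field: str) -> Step:
    """Build a step that removes rows where the given field is None or absent."""
    return lambda rows: (r for r in rows if r.get(field) is not None)


def rename(old: str, new: str) -> Step:
    """Build a step that renames one field and leaves the others untouched."""
    return lambda rows: ({(new if k == old else k): v for k, v in r.items()} for r in rows)


# The same building blocks can be reused by other teams with different arguments.
orders_pipeline = compose(drop_nulls("amount"), rename("amt_ccy", "currency"))

result = list(orders_pipeline([
    {"amount": 10, "amt_ccy": "GBP"},
    {"amount": None, "amt_ccy": "USD"},
]))
```

Swapping, reordering, or versioning a step changes one argument to `compose` rather than the body of a monolithic job.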

Composable design also enables API-first architecture. Pipelines can be triggered by events, integrated into broader systems, and adapted as business needs evolve. This kind of flexibility doesn’t just support growth. It protects stability while growing. And it reduces the operational cost of change across the data estate.

What Can Go Wrong: Failure Patterns in Pipeline Architecture

When pipelines fail, the root cause often isn't one big breakdown. It's a design flaw that stayed hidden until the conditions changed. A format shift that wasn't accounted for. A dependency that ran out of order. A retry that never triggered. And by the time the issue surfaces, the damage has already worked its way downstream.

In fact, in a recent analysis of GitHub and Stack Overflow activity, researchers found that 33 per cent of data-related issues in pipelines were caused by incorrect data types, particularly during the cleaning stage. Meanwhile, integration and ingestion accounted for nearly half of developer support questions, highlighting the operational strain of getting data into pipelines in the first place.

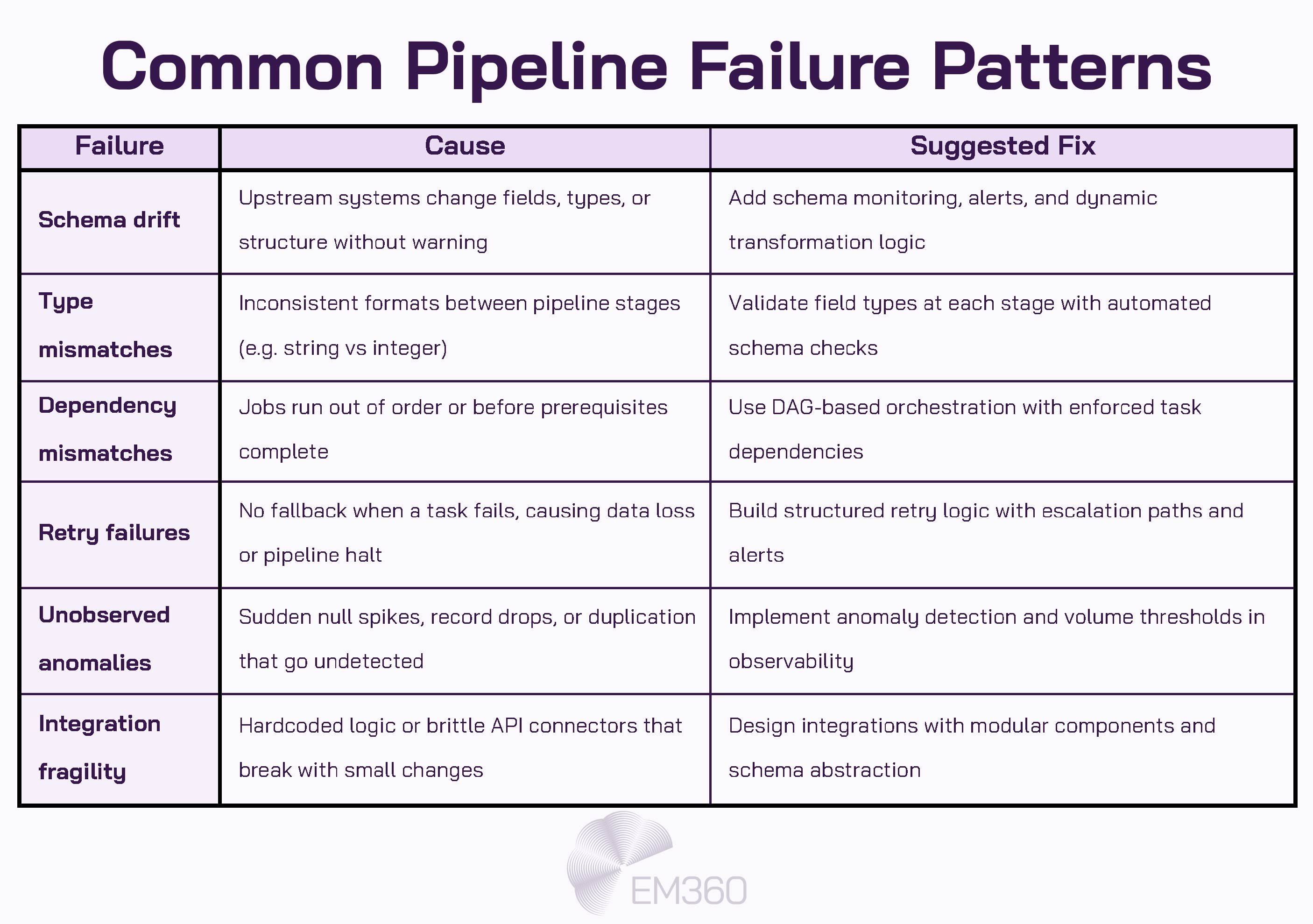

Failure patterns that signal deeper architectural risk

Not every failure in a data pipeline throws an error. Many pass silently, especially when systems are too loosely monitored or too tightly coupled to adapt. But the patterns that show up most often — and do the most damage — tend to reveal deeper weaknesses in orchestration, validation, and monitoring.

- Schema drift breaks pipelines when upstream systems change field types, column order, or structure without warning. If your transformation logic isn't designed to handle variation, the pipeline either breaks outright or pushes flawed data downstream.

- Type mismatches between systems often go undetected until analytics tools or machine learning models start producing unreliable results. This usually points to a lack of runtime validation or missing interface controls between stages.

- Dependency mismatches happen when orchestrated jobs are misaligned. A validation task runs before the data has landed. A downstream load completes while upstream logic is still processing. These issues stem from weak DAG design or incomplete scheduling logic.

- Retry failures expose pipelines that weren’t built for resilience. When a task fails, it should pause, retry, or escalate. Pipelines without structured retry logic either stall or silently drop outputs.

- Unobserved anomalies — sudden drops in record counts, spikes in nulls, duplicate events — often surface too late because observability wasn’t built in. These gaps aren’t monitoring failures. They’re architectural failures.

- Integration fragility shows up when pipelines depend on brittle logic or hardcoded steps. Even minor changes break the flow because the system wasn’t designed for modularity or reuse.

Each of these failure types is preventable. But only if pipelines are designed with flexibility, visibility, and failure tolerance from the start — not just stitched together to meet a deadline.
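Schema drift and type mismatches, the first two patterns in the list above, can often be caught with a simple contract check at the boundary between stages. The sketch below is illustrative only: `EXPECTED_SCHEMA` and its fields are a hypothetical contract, and the check inspects a single row rather than a full dataset.

```python
# Hypothetical contract agreed between the upstream producer and this pipeline.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}


def detect_drift(row: dict, expected: dict = EXPECTED_SCHEMA) -> dict:
    """Compare one row against the expected schema and report every difference."""
    return {
        "missing": sorted(set(expected) - set(row)),
        "unexpected": sorted(set(row) - set(expected)),
        "wrong_type": sorted(
            name for name, typ in expected.items()
            if name in row and not isinstance(row[name], typ)
        ),
    }


# Upstream has started sending order_id as text, dropped currency, and added region.
report = detect_drift({"order_id": "1001", "amount": 19.99, "region": "EU"})
```

Running a check like this before transformation turns a silent downstream error into an explicit, traceable failure at the point where the drift occurred.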

Tooling: How to Power Pipelines Without Overengineering

Enterprise data stacks aren’t failing because the tools are inadequate. They’re failing because the tools weren’t chosen with the architecture in mind. Too often, platforms are added to fix symptoms rather than to support structure. And what starts as a single connector or scheduler turns into a stack that’s brittle, bloated, and hard to govern.

The impact shows up in engineering velocity. According to research by Redpoint, 67 per cent of large, data-centric enterprises now dedicate over 80 per cent of their data-engineering resources to maintenance, leaving little capacity for strategic innovation. That’s not a tooling failure. That’s an architectural one.

The right way to approach tooling is by function — not by feature set and not by brand. That means understanding what each category of tools actually solves and choosing based on the role your pipeline needs that tool to play. This section breaks down the key categories and gives you a set of diagnostic questions to help guide fit. Because the goal isn’t to collect capabilities. It’s to support architecture.

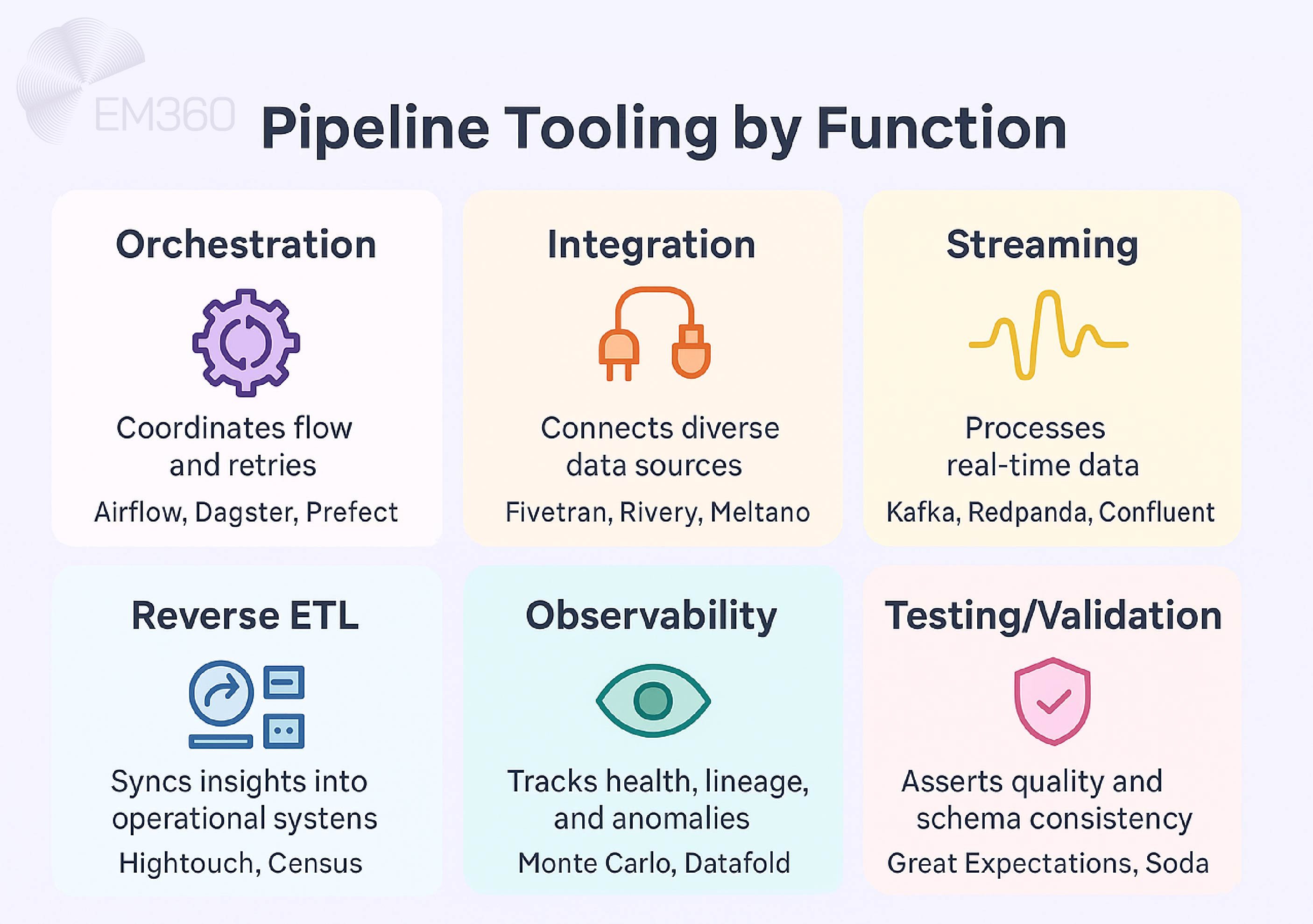

Orchestration

Orchestration tools control the execution flow of your pipeline. They coordinate dependencies, manage retries, and track which jobs run when and under what conditions. The most common orchestration models use DAGs (directed acyclic graphs) to define and control job logic.

These tools also help monitor performance, catch failures early, and enforce run-time policies across multiple teams or systems.

Questions to ask:

- How many teams or tools need to hand off control during pipeline execution?

- Can we visualise job dependencies and error paths in our current system?

- Do we need event-driven execution, time-based scheduling, or both?

Integration

Integration platforms focus on connecting to diverse data sources — SaaS applications, databases, APIs, event logs — and moving that data into your pipelines cleanly. Most offer prebuilt connectors, schema handling, and basic transformations to reduce ingestion time.

Some platforms emphasise low-code interfaces, while others are built for technical teams that want more configuration control.

Questions to ask:

- How many of our core data sources already have supported connectors?

- Will we need to customise ingestion logic for different departments or regions?

- Is the integration platform flexible enough to support both batch and streaming sources?

Streaming

Streaming platforms move data in real time. They handle event-based pipelines, where data is processed as it arrives rather than on a schedule. These tools often include queuing, buffering, ordering, and backpressure control — especially important when volumes spike or delivery needs to happen in near real time.

Streaming pipelines are ideal for operational analytics, machine learning inference, and alerting systems.
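Backpressure is easier to reason about with a small example. The sketch below uses a bounded in-memory queue from Python's standard library to stand in for a streaming buffer. The `offer` function and the capacity of three are assumptions for illustration; production streaming platforms apply the same principle across a network rather than inside one process.

```python
import queue

# Bounded buffer between a producer and a consumer.
events: queue.Queue = queue.Queue(maxsize=3)


def offer(event: dict) -> bool:
    """Try to enqueue without blocking; False tells the producer to slow down or shed load."""
    try:
        events.put_nowait(event)
        return True
    except queue.Full:
        return False


accepted = [offer({"seq": i}) for i in range(5)]   # the buffer fills after three events
first = events.get_nowait()                        # the consumer drains in arrival order
accepted_after_drain = offer({"seq": 5})           # capacity is available again
```

The important design choice is that the buffer has a limit at all. An unbounded buffer hides a slow consumer until memory runs out, while a bounded one surfaces the problem to the producer immediately.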

Questions to ask:

- Do we have use cases that require sub-second data latency?

- Are our current systems designed to handle continuous ingestion and processing?

- What mechanisms do we need in place to manage throughput, ordering, and failure recovery?

Reverse ETL

Reverse ETL tools move data from central stores like data warehouses back into operational tools — CRMs, marketing platforms, finance systems, and customer applications. These tools help make analytics actionable by syncing enriched insights into systems where decisions actually get made.

The key challenge is maintaining accuracy and performance while syncing at scale.
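One common way to keep syncs accurate and cheap is to send only what has changed. The sketch below compares warehouse rows with the copy held in an operational tool and plans the minimum set of writes. `plan_sync`, the customer keys, and the `tier` field are hypothetical, and a real tool would fetch the operational state through that system's API.

```python
def plan_sync(warehouse: dict[str, dict], crm: dict[str, dict]) -> dict[str, list]:
    """Compare warehouse rows with the operational copy and return only what must change."""
    return {
        "create": sorted(k for k in warehouse if k not in crm),
        "update": sorted(k for k in warehouse if k in crm and warehouse[k] != crm[k]),
        "unchanged": sorted(k for k in warehouse if k in crm and warehouse[k] == crm[k]),
    }


warehouse = {
    "c1": {"tier": "gold"},
    "c2": {"tier": "silver"},
    "c3": {"tier": "bronze"},
}
crm = {
    "c1": {"tier": "gold"},
    "c2": {"tier": "bronze"},
}

plan = plan_sync(warehouse, crm)
```

Here only `c2` and `c3` would be written, which keeps API usage down and leaves a clear record of what each sync actually changed.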

Questions to ask:

- Which operational teams need access to analytics outputs in their own tools?

- How frequently do those systems need to be updated with new data?

- Can we enforce data governance policies when syncing back into production systems?

Observability

Observability platforms provide visibility into pipeline health, behaviour, and failure points. This includes data lineage, anomaly detection, schema change alerts, performance monitoring, and logging across every stage of the pipeline.

Observability isn't a nice-to-have. It’s what turns a functioning pipeline into a trustworthy one.

Questions to ask:

- Can we track every transformation a dataset has gone through?

- How fast can we detect and trace silent failures like schema drift or null spikes?

- Are our teams spending more time firefighting pipeline issues than resolving root causes?

Testing and validation

These tools focus on asserting data quality, catching breakages early, and enforcing transformation logic consistently across environments. That includes schema validation, volume tracking, constraint testing, and expected value ranges. Some tools allow version-controlled tests that run automatically at each pipeline stage.

Without this layer, pipelines might run — but the results can’t be trusted.
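As a sketch of reusable, version-controllable test logic, the example below defines dataset-level checks as small functions and runs a named suite against a batch. The check builders, the `ORDER_CHECKS` suite, and the thresholds are hypothetical; dedicated data-testing tools offer the same idea with far more coverage.

```python
from typing import Callable

Check = Callable[[list[dict]], bool]


def min_rows(n: int) -> Check:
    """Volume check: the batch must contain at least n rows."""
    return lambda rows: len(rows) >= n


def unique(field: str) -> Check:
    """Constraint check: no two rows may share a value for the field."""
    return lambda rows: len({r[field] for r in rows}) == len(rows)


def in_range(field: str, low: float, high: float) -> Check:
    """Expected-value check: every value must fall between low and high inclusive."""
    return lambda rows: all(low <= r[field] <= high for r in rows)


# Hypothetical suite; in practice this would live in version control beside the pipeline.
ORDER_CHECKS: dict[str, Check] = {
    "at_least_2_rows": min_rows(2),
    "order_id_unique": unique("order_id"),
    "amount_in_range": in_range("amount", 0, 10_000),
}


def run_checks(rows: list[dict], checks: dict[str, Check]) -> list[str]:
    """Return the names of failed checks; an empty list means the batch can be published."""
    return [name for name, check in checks.items() if not check(rows)]


failures = run_checks(
    [{"order_id": 1, "amount": 25.0}, {"order_id": 1, "amount": -5.0}],
    ORDER_CHECKS,
)
```

Returning the names of failed checks, rather than a single pass or fail flag, gives whoever is on call a starting point for escalation.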

Questions to ask:

- Are we validating outputs before they land in reporting or activation systems?

- Do we have standardised, reusable test logic across teams?

- How are test failures escalated, reported, and resolved today?

What Makes a Data Pipeline Enterprise-Ready

Most pipelines work — until they don’t. They run in staging, pass tests, and deliver results. But when environments scale, systems change, or audits arrive, too many of those pipelines start to break in ways that are hard to diagnose and even harder to fix.

An enterprise-ready pipeline isn’t just one that moves data. It’s one that keeps working as the business grows, regulations tighten, and complexity increases. That means resilience, interoperability, and visibility are not optional. They’re the baseline.

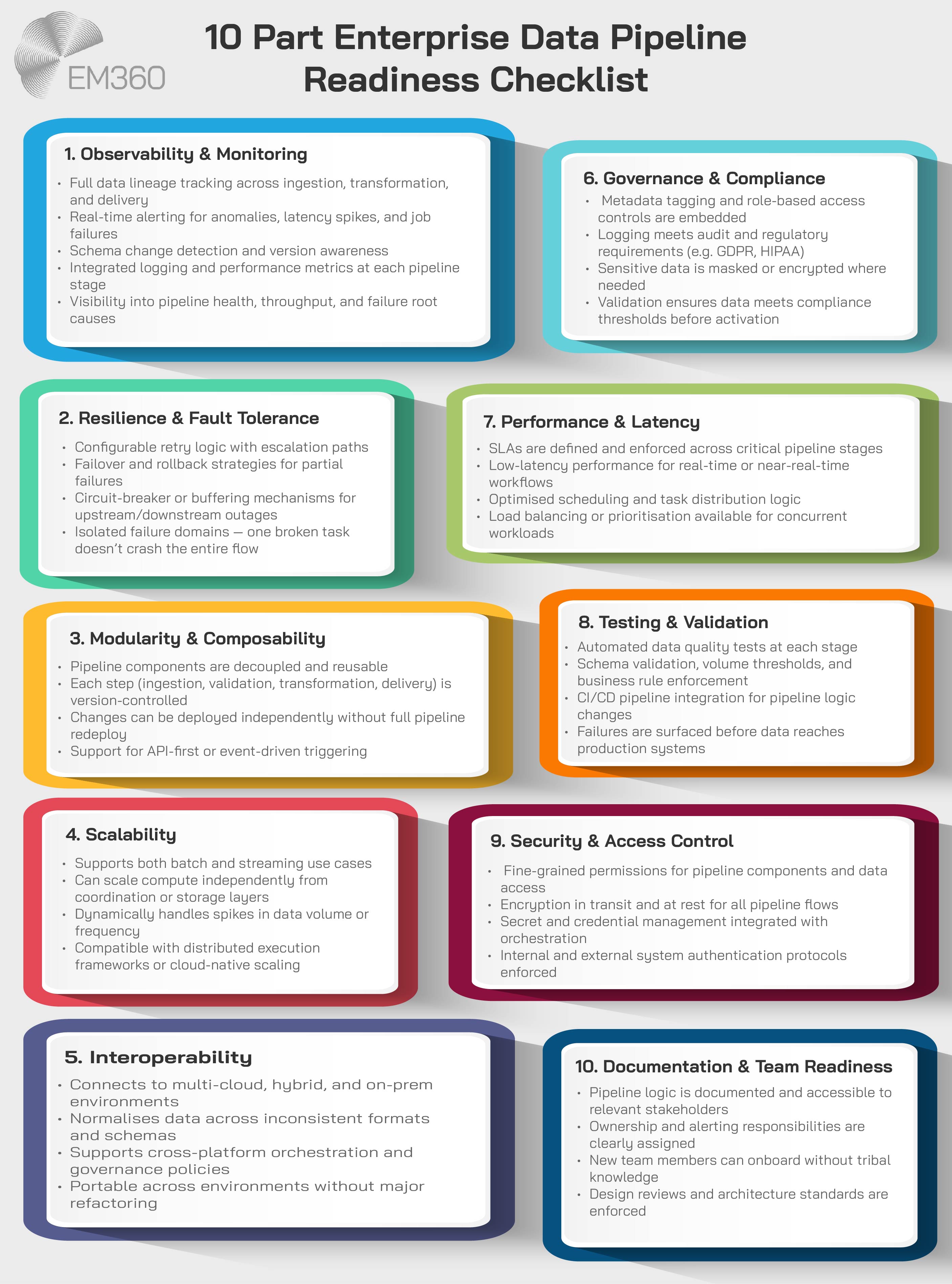

If you’re pressure-testing your architecture, here’s what an enterprise-grade pipeline should be able to do:

Be observable from end to end

You can’t fix what you can’t see. Enterprise pipelines must offer complete visibility across ingestion, transformation, and delivery stages — with real-time monitoring, lineage tracking, and anomaly detection built in.

If something breaks, teams need to know where, why, and what downstream systems are affected. Auditability isn’t just for compliance. It’s for operational trust.

Be composable and modular

Monolithic pipelines don’t scale. When every change requires a full redeploy, progress stalls. Enterprise environments need pipelines built from modular components — ones that can be versioned, reused, and adapted independently.

Composable pipelines also reduce fragility. One transformation step can fail or evolve without dragging the rest of the system down with it.

Be resilient by design

Enterprise-grade pipelines are designed with failure in mind. They include retry logic, failover mechanisms, and fallback strategies to ensure continuity — even when systems upstream or downstream behave unpredictably.

This resilience also extends to schema evolution, platform updates, and shifting data volumes. If your pipeline only works when everything is stable, it’s not ready for enterprise use.

Support governance and compliance by default

Data pipelines don’t just carry data. They carry risk. Which means governance needs to be embedded from the start — not retrofitted later.

That need is driving significant investment. According to market research, the global data governance market is projected to grow from USD 5.38 billion in 2025 to over USD 18 billion by 2032 — a compound annual growth rate of 18.9 per cent. The message is clear: governance isn’t an afterthought anymore. It’s infrastructure.

That includes metadata tagging, role-based access control, lineage capture, and logging that supports audit readiness. It also means enforcing validation at every handoff so data meets quality, privacy, and regulatory requirements before it gets used.
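To illustrate role-based access enforced inside the pipeline, the sketch below masks sensitive columns unless the caller's role is allowed to see them. `COLUMN_POLICY`, the role names, and the truncated hash used for masking are all assumptions for this example. A truncated, unsalted hash is not a vetted anonymisation technique, so treat it as a placeholder for whatever your compliance team approves.

```python
import hashlib

# Hypothetical policy: which roles may see each sensitive column in clear text.
COLUMN_POLICY = {"email": {"support", "admin"}, "salary": {"admin"}}


def mask(value) -> str:
    """Replace a value with a short, stable SHA-256 digest so equal values still match."""
    return hashlib.sha256(str(value).encode()).hexdigest()[:12]


def apply_policy(row: dict, role: str) -> dict:
    """Return a copy of the row with columns masked unless the role is permitted to see them."""
    # Columns absent from the policy are treated as non-sensitive and pass through.
    return {
        k: v if role in COLUMN_POLICY.get(k, {role}) else mask(v)
        for k, v in row.items()
    }


row = {"name": "Ada", "email": "ada@example.com", "salary": 90000}
support_view = apply_policy(row, "support")   # email visible, salary masked
admin_view = apply_policy(row, "admin")       # everything visible
```

Applying the policy at the handoff between stages means downstream consumers never receive data they are not entitled to, rather than relying on each destination to filter it.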

Scale without breaking alignment

Enterprise data needs change constantly — new teams, new tools, new use cases. Pipelines that aren’t designed for growth end up slowing it down.

Whether you’re expanding across business units or integrating new platforms, your pipeline should scale without losing observability, quality, or trust. That’s the mark of a system that’s not just functional but strategic.

Final Thoughts: The Best Pipelines Are Built to Adapt

Data pipelines are more than technical infrastructure. They’re the systems that shape how insight moves, how fast it arrives, and how reliably it reflects the truth. When they work, decision-makers stop guessing. Teams move faster. Platforms stay aligned. When they fail, trust disappears — and the costs follow.

The pipelines that perform at scale aren’t just built to run. They’re built to evolve. They’re observable, modular, and governed from the inside out. And they don’t just connect systems. They connect strategy to execution — ensuring the data your business depends on is always trusted, usable, and ready to deliver.

If you're rethinking how your architecture supports insight, scale, and speed, you're not alone. From orchestration frameworks to observability strategies, EM360Tech brings together the guidance and expert insights to help you move forward with confidence.
