The most dangerous assumption a data team can make is that their pipelines will scale just because their platform does. It’s rarely the tooling that breaks first — it’s the design.

For modern data teams tasked with delivering insight at speed, ETL best practices aren’t optional. They’re foundational. From missed SLAs to inconsistent outputs, most pipeline failures trace back to decisions made at the architecture level.

Not malicious ones, but pragmatic workarounds that worked fine at ten data sources and quietly collapsed at a hundred.

And those failures aren’t rare. In a Censuswide survey of enterprise data leaders, 45% reported experiencing 11 to 25 data pipeline failures in just two years, and 63% said those failures directly affected customer experience.

Enterprise data pipelines today must balance flexibility with control. They need to adapt to shifting schemas and deliver near real-time analytics, while also upholding governance standards across multi-cloud environments. To meet those demands, scalable architecture and pipeline observability can’t be afterthoughts.

They need to be built in from the beginning.

This is where ETL optimisation gets practical. Not as a theoretical exercise, but as the operational difference between pipelines that run reliably and ones that quietly drift out of spec. Best practices aren’t legacy concepts.

They’re what keeps modern data environments stable, testable, and insight-ready — no matter how fast the business moves.

Build Pipelines for Modularity and Scale

A brittle ETL pipeline doesn’t just break under pressure — it breaks in silence. Most teams only realise it’s happened after the dashboard errors out or the model produces something inexplicably wrong.

To scale effectively, pipelines need more than just throughput. They need modular design, clean separation of logic, and architecture that supports rapid change without multiplying complexity.

Especially in hybrid and multi-cloud environments, where systems are already loosely coupled, the pipeline architecture has to match the operational reality. This is where scalable architecture becomes less about scale and more about control. When pipelines are modular, each component can be tested, reused, observed, and rebuilt independently.

That doesn’t just reduce breakages. It speeds up iteration and future-proofs the pipeline as the ecosystem evolves. And for cloud-native ETL, modularity isn’t just helpful — it’s expected. Orchestration platforms, streaming frameworks, and data contracts all assume that pipeline components are swappable and observable.

If your architecture doesn’t support that, you’re not just behind. You’re already in the way.

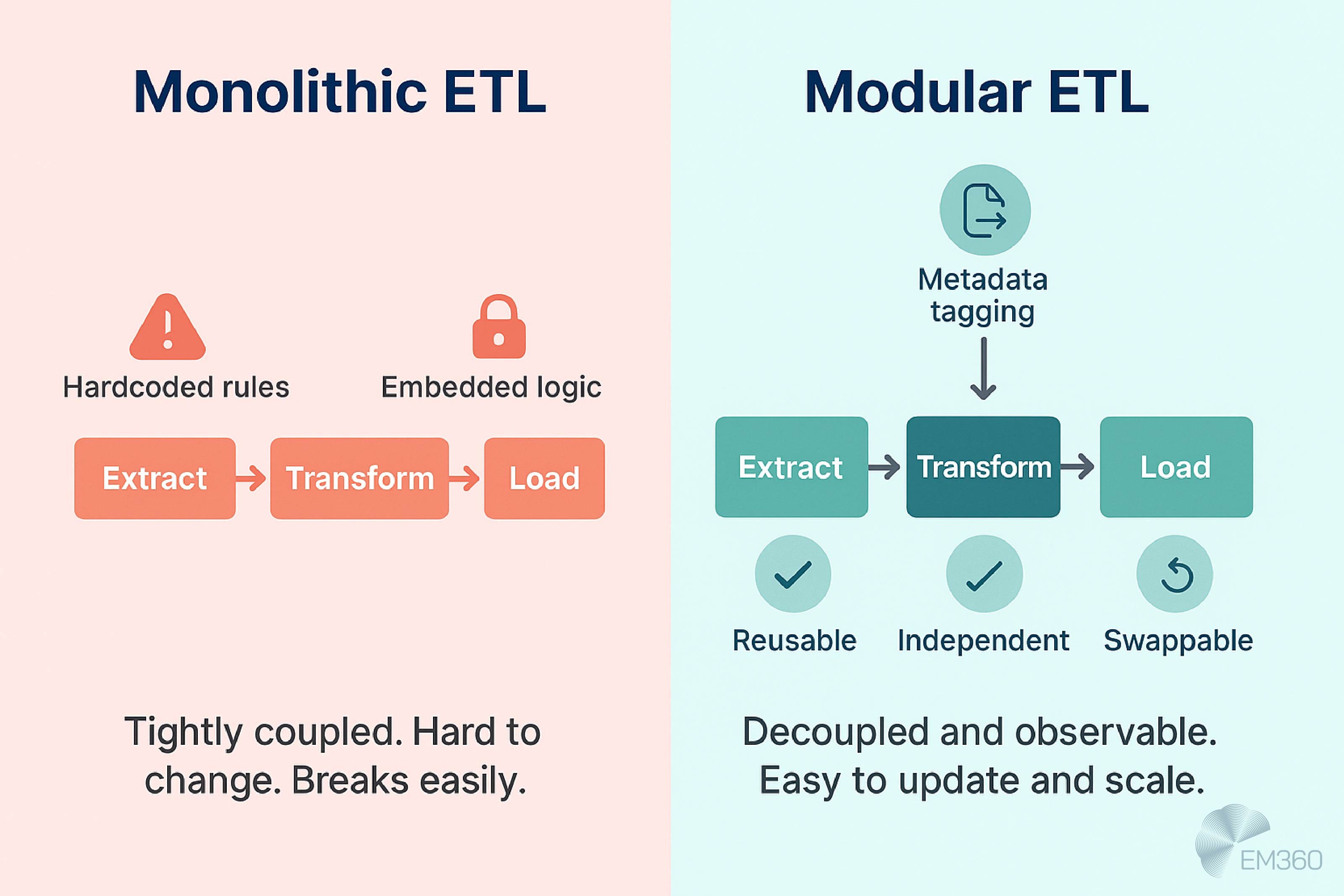

Avoid monolithic pipeline architectures

There’s a reason most engineering retros start with something like “Well, it made sense at the time.” Early on, it's easy to over-couple stages by hard-coding transformations, chaining dependencies, and embedding logic that becomes inseparable later.

But monolithic pipeline design doesn’t scale with the business. It creates fragile systems that break with every schema change, integration request, or platform shift. And it’s usually the biggest reason why updates get delayed or quietly abandoned altogether.

A better approach is to structure pipelines as loosely coupled flows, each with a single responsibility and minimal side effects. In practice, this means designing an ETL architecture that separates extraction, transformation, and loading into independently executable components.

That makes every part of the system easier to test, replace, or upgrade. This kind of operational flexibility isn’t just for complex deployments. It’s what keeps simple pipelines simple — even when the business around them stops being predictable.
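
As a minimal sketch of that separation, the Python below assumes a toy pipeline that reads from and writes to in-memory lists; the function names and the `amount_pence` field are hypothetical. Each stage receives explicit inputs and returns explicit outputs, so it can be unit-tested or replaced on its own.

```python
from typing import Callable, Iterable

# Hypothetical record shape: {"customer_id": int, "amount_pence": int}
Record = dict


def extract(source: Iterable[Record]) -> list[Record]:
    # Extraction only reads and copies rows; no business logic lives here.
    return [dict(row) for row in source]


def transform(rows: list[Record]) -> list[Record]:
    # A pure function: same input, same output, no I/O, so it is trivial to test.
    return [
        {"customer_id": r["customer_id"], "amount_gbp": round(r["amount_pence"] / 100, 2)}
        for r in rows
    ]


def load(rows: list[Record], destination: list[Record]) -> int:
    # Loading is the only stage with side effects on the destination.
    destination.extend(rows)
    return len(rows)


def run_pipeline(
    source: Iterable[Record],
    destination: list[Record],
    transform_fn: Callable[[list[Record]], list[Record]] = transform,
) -> int:
    # Stages are composed here instead of calling each other, so any one of
    # them can be replaced without touching the other two.
    return load(transform_fn(extract(source)), destination)
```

Swapping in a new transformation then means passing a different `transform_fn`, with extraction and loading left untouched.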

Prioritise metadata-first frameworks

Most pipelines treat metadata like an afterthought — something captured at the end or stored in a dashboard no one checks. But metadata tagging, applied early and consistently, is one of the simplest ways to build both observability and data governance into your pipelines from the start.

A metadata-first approach means every data asset, transformation, and load process is tracked with context. It supports data lineage across systems, simplifies audits, and makes debugging far easier. It also allows platform teams to enforce access controls, monitor changes, and create reusability without introducing manual friction.
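
One lightweight way to start is to record a small metadata entry for every step as it runs. The sketch below is illustrative only: `StepMetadata` and `LineageLog` are hypothetical names, and a production setup would typically push these records to a catalogue or lineage service rather than keep them in memory. Walking the recorded inputs and outputs backwards is enough to answer "where did this dataset come from?"

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class StepMetadata:
    # Hypothetical metadata captured for every step at run time.
    step: str
    inputs: list[str]
    outputs: list[str]
    owner: str
    run_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


class LineageLog:
    """In-memory stand-in for a lineage store or metadata catalogue."""

    def __init__(self) -> None:
        self.entries: list[StepMetadata] = []

    def record(self, meta: StepMetadata) -> None:
        self.entries.append(meta)

    def upstream_of(self, dataset: str) -> set[str]:
        # Walk recorded steps backwards to collect every dataset that fed `dataset`.
        found: set[str] = set()
        frontier = [dataset]
        while frontier:
            current = frontier.pop()
            for entry in self.entries:
                if current in entry.outputs:
                    for source in entry.inputs:
                        if source not in found:
                            found.add(source)
                            frontier.append(source)
        return found
```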

And the industry is backing that shift. The metadata management tools market was valued at USD 11.7 billion in 2024 and is projected to reach USD 36.4 billion by 2030, growing at a CAGR of 20.9%.

And it’s not just about compliance. Metadata enables collaboration. When teams can see what data exists, how it’s structured, and where it came from, they’re far more likely to use it effectively — and less likely to duplicate efforts or introduce risk.

In environments where agility matters, pipeline observability shouldn’t rely on post-mortems. It should be a design principle. Start with metadata, and most of the visibility and governance follow naturally.

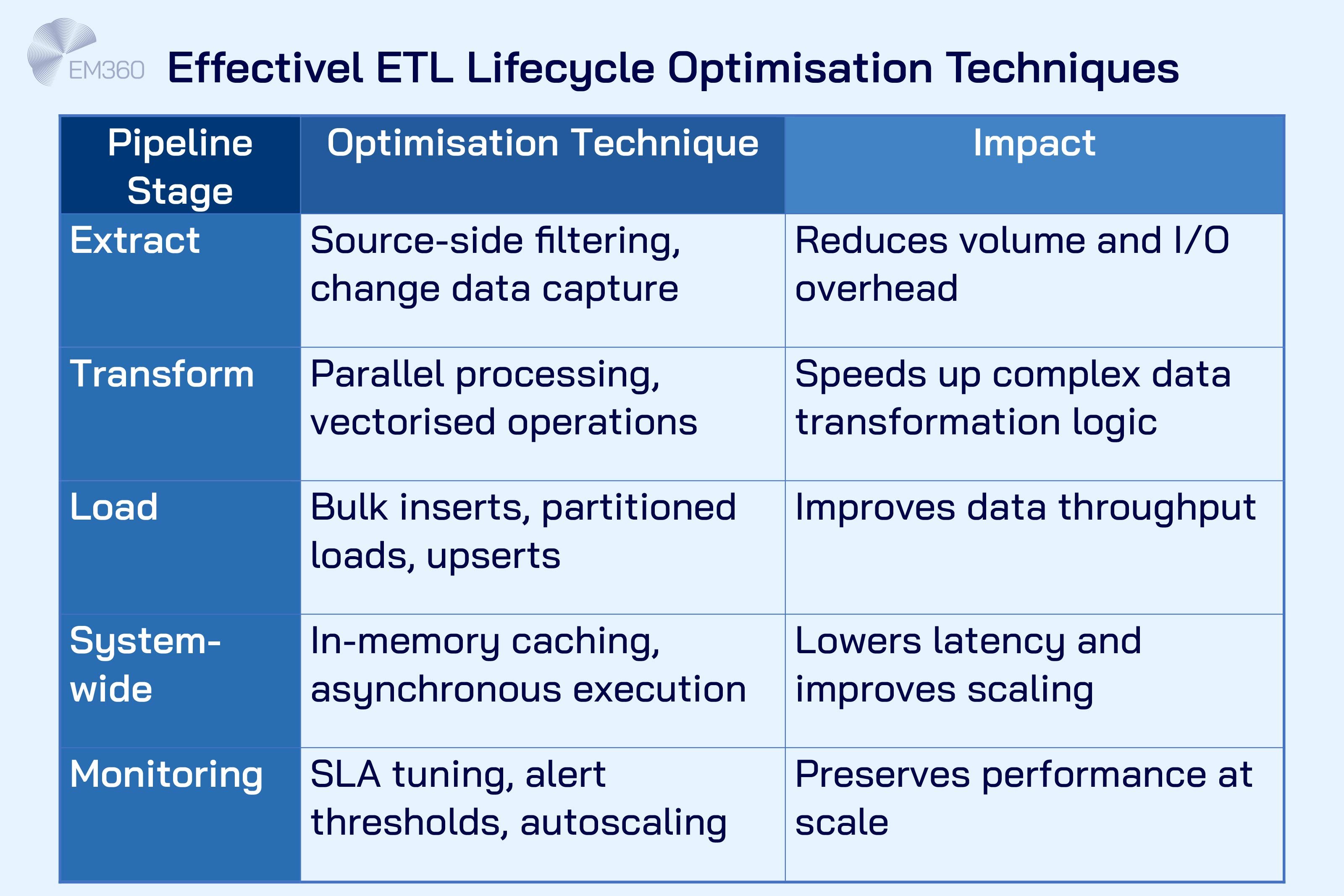

Optimise Performance Without Sacrificing Control

There’s no shortage of ways to make a pipeline faster. The real question is whether the performance gains come at the cost of reliability, visibility, or governance. In enterprise environments, ETL optimisation isn't just about speed. It’s about throughput that can be trusted and scaled.

Each phase of the pipeline (extract, transform, and load) offers its own performance levers. And each decision made along the way affects cost, latency, and operational complexity. Whether you’re pushing millions of records per minute or tuning overnight jobs, the goal is to optimise without introducing fragility.

The sections below cover some of the most effective performance tactics across the ETL lifecycle.

Performance is context-dependent. What works for an hourly refresh may fall apart under continuous streaming. The key is to build with elasticity, then tune with precision.

Use parallelism and caching to accelerate throughput

When pipelines slow down, the issue is rarely compute power alone. It’s bottlenecks — in processing, memory, or I/O — that bring everything to a crawl. And the most reliable way to move past those limits is with parallel processing and smart caching strategies.

Horizontal scaling spreads processing across multiple workers. Vertical scaling increases resources per job. But the real gains often come from structuring tasks so they can run concurrently, not sequentially. That means breaking transformations into smaller, independent stages and using frameworks that support task-level parallelism.
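
A minimal sketch of task-level parallelism using Python’s standard `concurrent.futures` module. The doubling transform and the partition size are placeholders. Threads suit I/O-bound stages (API calls, object-store reads); for CPU-bound transforms in CPython, `ProcessPoolExecutor` is the usual drop-in replacement.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(rows: list[int], size: int) -> list[list[int]]:
    # Split the input into independent, fixed-size chunks.
    return [rows[i:i + size] for i in range(0, len(rows), size)]


def transform_partition(chunk: list[int]) -> list[int]:
    # Placeholder transformation; it touches only its own chunk, so chunks can run concurrently.
    return [value * 2 for value in chunk]


def parallel_transform(rows: list[int], size: int = 1000, workers: int = 4) -> list[int]:
    chunks = partition(rows, size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so the output order is deterministic.
        return [value for chunk in pool.map(transform_partition, chunks) for value in chunk]
```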

Caching can reduce redundant queries and transformations, especially for reference data or lookup-heavy pipelines. In-memory operations speed things up even further, particularly when combined with metadata-aware orchestration.
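
For lookup-heavy transforms, Python’s built-in `functools.lru_cache` is enough to show the principle. The exchange-rate table and its values below are made up for illustration, and this pattern is only safe for reference data that stays fixed for the duration of a run; anything that changes mid-run needs an explicit invalidation strategy.

```python
from functools import lru_cache

# Illustrative reference data; the rates are made up, not real exchange rates.
RATES_TO_GBP = {"GBP": 1.0, "USD": 0.79, "EUR": 0.85}
LOOKUPS = {"count": 0}  # counts how often the "expensive" lookup really runs


@lru_cache(maxsize=256)
def rate_for(currency: str) -> float:
    # Stands in for a database or API call; cached results skip this body entirely.
    LOOKUPS["count"] += 1
    return RATES_TO_GBP[currency]


def convert_to_gbp(rows: list[dict]) -> list[float]:
    return [round(row["amount"] * rate_for(row["currency"]), 2) for row in rows]
```

Calling `rate_for.cache_clear()` between runs is the simplest way to avoid serving stale reference values.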

Together, these techniques boost ETL performance without sacrificing auditability or traceability. They also create headroom — the operational buffer that allows pipelines to scale without needing to be rebuilt every six months.

Design for schema drift and source volatility

Pipelines don’t fail because systems change. They fail because those changes catch the pipeline by surprise. That’s why schema evolution and source system changes need to be accounted for at the design level.

Most modern platforms offer dynamic schema handling — but relying on automatic tools alone is risky. What matters more is building in logic that can handle optional fields, default values, and validation layers before a transformation breaks production.

ETL pipelines should also support versioned schemas and metadata tagging that flags when source definitions have changed. This makes it easier to track dynamic data formats, adjust transforms, and ensure downstream systems aren’t working off stale assumptions.
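
A minimal sketch of that validation layer, assuming a hypothetical `orders` feed at schema version 2: required fields fail fast, optional fields receive defaults, and unexpected fields are returned so they can be tagged and reviewed instead of being silently dropped. The field names, defaults and version number are all illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    name: str
    required: bool
    default: object = None


# Hypothetical expected schema for an "orders" feed, version 2.
ORDERS_SCHEMA_VERSION = 2
ORDERS_FIELDS = [
    FieldSpec("order_id", required=True),
    FieldSpec("amount", required=True),
    FieldSpec("currency", required=False, default="GBP"),
    FieldSpec("channel", required=False, default="unknown"),
]


class SchemaDriftError(ValueError):
    pass


def conform(record: dict, fields: list[FieldSpec] = ORDERS_FIELDS) -> tuple[dict, set[str]]:
    """Apply defaults, reject missing required fields, and report unexpected ones."""
    missing = [f.name for f in fields if f.required and f.name not in record]
    if missing:
        # Fail before transformation instead of letting gaps flow downstream.
        raise SchemaDriftError(f"missing required fields: {missing}")
    unexpected = set(record) - {f.name for f in fields}
    conformed = {f.name: record.get(f.name, f.default) for f in fields}
    conformed["_schema_version"] = ORDERS_SCHEMA_VERSION  # tag output with the schema it was checked against
    return conformed, unexpected
```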

Building for volatility doesn’t mean lowering standards. It means expecting change and being prepared to adapt quickly when it arrives.

Choose batch, microbatch, or streaming — not by trend, but by need

Not every pipeline needs to run in real time. The decision to use real-time ETL, microbatching, or traditional batch processing should come from the latency tolerance of the business process, not hype or tooling preference.

Batch processing is reliable and cost-effective when updates can wait. Microbatch architecture suits use cases like near real-time dashboards or periodic synchronisation. True streaming pipelines are essential for event-driven systems, fraud detection, or operational analytics where data is only useful if it’s fresh.

The mistake many teams make is defaulting to streaming without considering downstream readiness, cost implications, or failure recovery. Streaming is powerful, but it’s also complex. When in doubt, microbatching can offer a middle ground — faster than batch, safer than stream.
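
To show what microbatching means mechanically, here is a small Python generator that closes a batch when it reaches a size limit or when the event timestamps span a time window. It is a simplification: batches close only when the next event arrives, whereas engines such as Spark Structured Streaming also trigger on a wall-clock schedule.

```python
from typing import Iterable, Iterator, Optional


def microbatches(
    events: Iterable[tuple[float, dict]], max_size: int, max_wait_s: float
) -> Iterator[list[dict]]:
    """Group (timestamp, event) pairs into batches closed by size or by time span."""
    batch: list[dict] = []
    batch_start: Optional[float] = None
    for ts, event in events:
        # Close the open batch if this event falls outside its time window.
        if batch and ts - batch_start >= max_wait_s:
            yield batch
            batch, batch_start = [], None
        if batch_start is None:
            batch_start = ts
        batch.append(event)
        # Close the batch as soon as it reaches the size limit.
        if len(batch) >= max_size:
            yield batch
            batch, batch_start = [], None
    if batch:
        yield batch  # flush whatever remains when the stream ends
```

Tuning `max_size` and `max_wait_s` is effectively choosing a point between batch (large, infrequent) and streaming (size of one).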

The point isn’t to chase velocity. It’s to match delivery cadence with decision need. That’s where performance becomes strategic.

Orchestrate Pipelines with Intelligence and Resilience

A pipeline that runs is not the same as a pipeline you can trust. Orchestration is where operational confidence is built — not just through scheduling, but through visibility, control, and automated response.

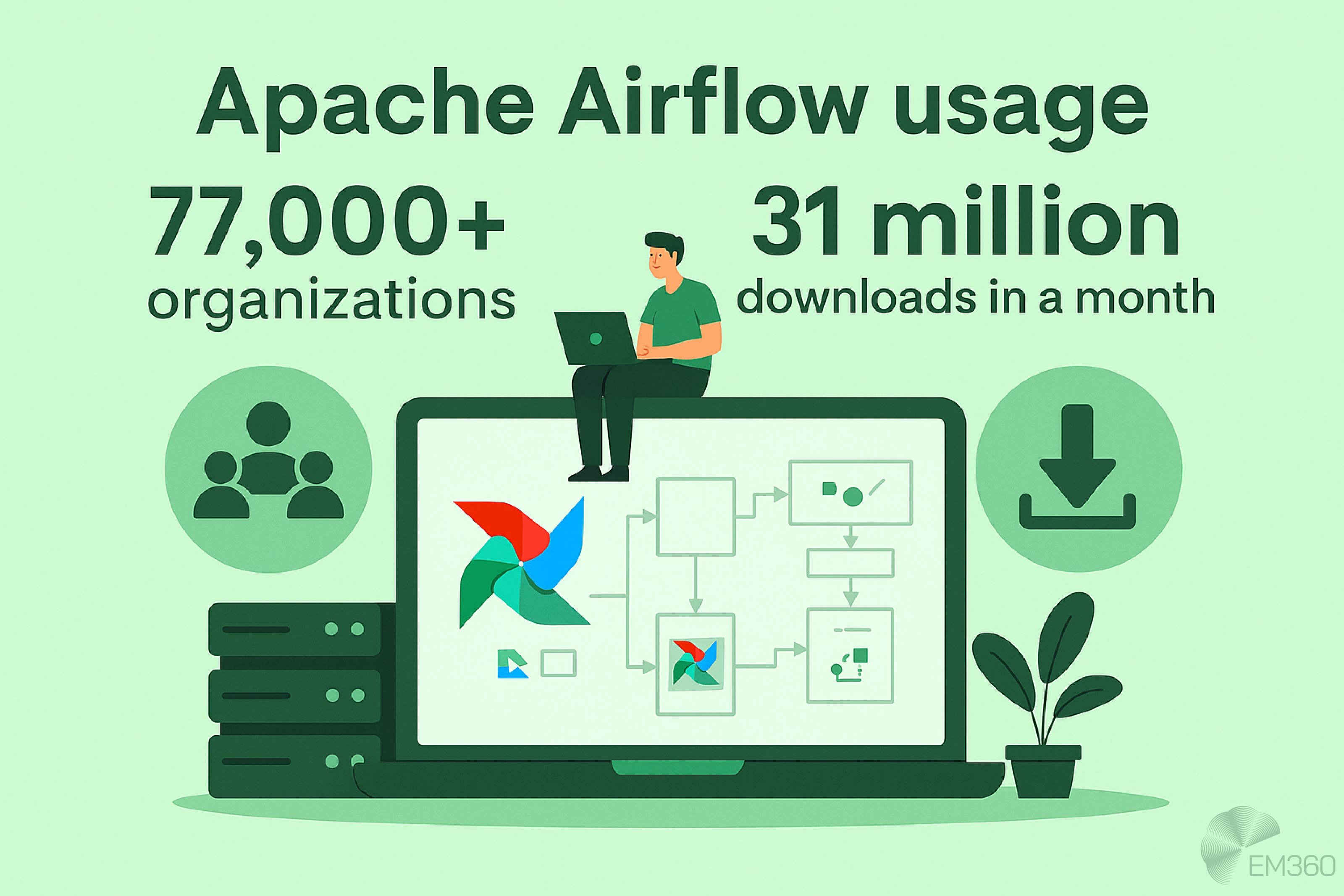

And at enterprise scale, that confidence starts with proven tools. Apache Airflow alone is used by more than 77,000 organisations, with over 31 million downloads in a single month (Next47, Kanerika). Adoption at that level reflects how central orchestration has become to building pipelines that are observable, modular, and resilient by design.

Modern pipeline orchestration isn’t just about when a job runs. It’s about how reliably that job executes under pressure, how easily it adapts when things change, and how quickly issues are detected and resolved. That requires more than triggers and task lists. It needs intelligent architecture that connects the flow of data with the flow of operations.

In today’s enterprise stacks, a resilient ETL workflow should be modular, observable, and event-aware. It should also be able to scale independently across pipeline components, using smart retry logic, failover detection, and performance metrics to keep jobs running smoothly without constant manual oversight.
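
Retry logic is usually handled by the orchestrator, but the mechanics are worth understanding. The sketch below retries only errors classed as transient (the `TransientError` class is hypothetical), using exponential backoff with full jitter so that many failing tasks do not retry in lockstep. The injectable `sleep` parameter exists purely so the behaviour can be tested without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling)."""


def run_with_retry(
    task: Callable[[], T],
    max_attempts: int = 4,
    base_delay_s: float = 0.5,
    max_delay_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except TransientError:
            # Any other exception type propagates immediately and is never retried.
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the orchestrator
            # Exponential backoff capped at max_delay_s, with full jitter.
            delay = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
    raise AssertionError("unreachable")
```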

Choose the right orchestration pattern for your workloads

Orchestration starts with one decision: what should trigger the pipeline? And too often, the answer is “whatever was already in place”.

Different workloads demand different approaches. Cron jobs are fine for predictable refreshes, like overnight reports. But when pipelines are tied to operational triggers — such as a new file appearing in cloud storage or a user action inside an app — event-based orchestration becomes essential.

Directed acyclic graphs (DAGs) offer a visual and logical way to manage dependencies, and they sit at the heart of tools such as Apache Airflow and Dagster. For cloud-native stacks, serverless triggers allow pipelines to launch in response to external events without constant polling.
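
Orchestrators resolve DAG dependencies for you, but the underlying idea fits in a few lines of standard-library Python (`graphlib`, available from Python 3.9). The task names below are hypothetical. Grouping tasks into "waves" shows which ones can run concurrently, and a cycle is rejected before anything runs.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "join_orders_customers": {"clean_orders", "extract_customers"},
    "publish_dashboard": {"join_orders_customers"},
}


def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; every task in a wave can run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # in a real runner, mark done only after each task succeeds
    return waves
```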

The key is to select orchestration tools based on job complexity, failure tolerance, and observability needs. No pattern is universally right. But misalignment between workload and trigger type is one of the most common causes of pipeline drift, latency, and silent failures.

Whether you're using data triggers, scheduled tasks, or hybrid orchestration, the priority is control without friction — pipelines that run with precision, even when no one is watching.

Implement observability and SLA monitoring

If orchestration gives you control over pipeline flow, observability tells you whether it’s actually working. And without both, resilience becomes a matter of luck.

A well-instrumented pipeline should include real-time observability across every stage — not just run status, but detailed insights into task duration, success rates, and throughput. Logging should be structured and consistent. Metrics should map to SLAs. And alerts should route to the people who can actually fix the issue.
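
A minimal sketch of structured task logging, assuming JSON lines are shipped to whatever log platform the team already uses. The `observed_task` context manager and its field names are hypothetical; the point is that every run emits the same machine-readable fields (status, duration, row count, error type), which is what makes metrics and SLA mapping possible.

```python
import json
import logging
import time
from contextlib import contextmanager

logger = logging.getLogger("pipeline")


@contextmanager
def observed_task(pipeline: str, task: str, emit=logger.info):
    """Emit one structured JSON event per task with status, duration and row count."""
    event = {"pipeline": pipeline, "task": task, "rows": 0}
    start = time.monotonic()
    try:
        yield event  # the task body can set event["rows"]
        event["status"] = "success"
    except Exception as exc:
        event["status"] = "failed"
        event["error"] = type(exc).__name__
        raise
    finally:
        event["duration_s"] = round(time.monotonic() - start, 3)
        emit(json.dumps(event, sort_keys=True))
```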

SLA tracking should also be more than a timestamp comparison. It should factor in data completeness, processing accuracy, and system readiness. When a pipeline misses its SLA because the upstream data was malformed or late, that’s not just a failed job. It’s an opportunity to improve the design.
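
The sketch below treats an SLA as several dimensions rather than a single deadline. The thresholds and the `SlaPolicy` structure are illustrative assumptions; reporting which dimension was breached helps a team tell a late run apart from an incomplete or inaccurate one.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class SlaPolicy:
    # Illustrative thresholds; real values come from the agreed SLA.
    deadline: datetime
    min_completeness: float  # loaded rows / expected rows
    max_error_rate: float    # rejected rows / loaded rows


def evaluate_sla(
    policy: SlaPolicy,
    finished_at: datetime,
    expected_rows: int,
    loaded_rows: int,
    rejected_rows: int,
) -> list[str]:
    """Return the breached SLA dimensions; an empty list means the SLA was met."""
    breaches = []
    if finished_at > policy.deadline:
        breaches.append("late")
    completeness = loaded_rows / expected_rows if expected_rows else 1.0
    if completeness < policy.min_completeness:
        breaches.append("incomplete")
    error_rate = rejected_rows / loaded_rows if loaded_rows else 0.0
    if error_rate > policy.max_error_rate:
        breaches.append("inaccurate")
    return breaches
```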

The goal of pipeline monitoring isn’t noise. It’s signal. A healthy orchestration layer surfaces problems early, separates root causes from symptoms, and enables faster, more confident responses.

When your pipelines are observable and trigger with intent, orchestration becomes a strategic layer — not just a scheduler, but a system that protects performance and reduces operational debt.

Design for Governance, Access, and Auditability

Governance isn’t a feature you add once the pipeline is running. It’s a design principle that should be built into every stage of the flow.

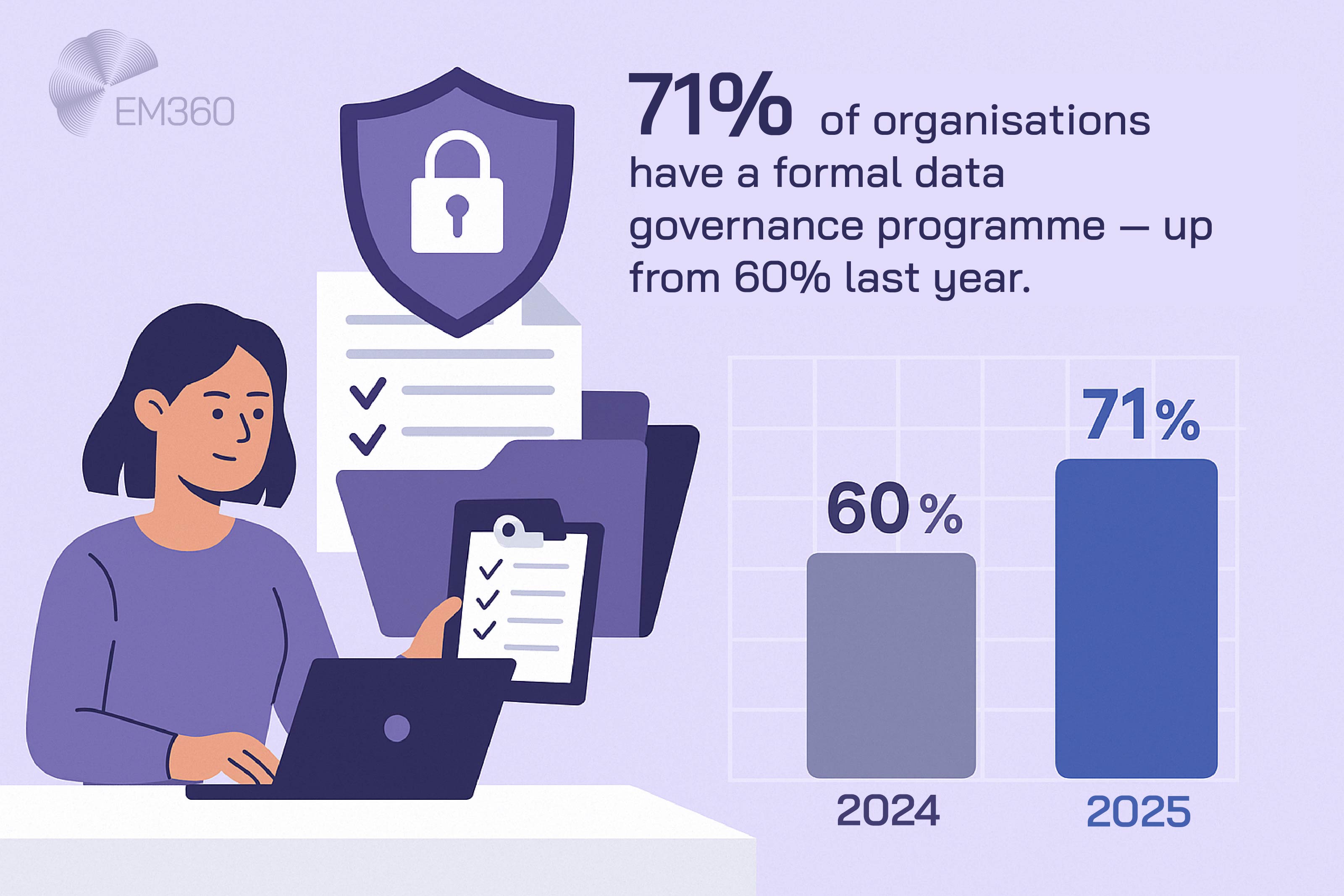

Without embedded data governance, pipelines may run efficiently but invisibly. That’s a liability, and more organisations are acting on it: 71 per cent now report having a formal data governance programme in place, up from 60 per cent the year before. When you can’t see where data came from, who touched it, or how it was shaped, you can’t trust it.

And if your team can’t prove control, regulators and auditors won’t assume it either.

This is where data lineage, access enforcement, and version control become strategic. They’re not just for compliance. They’re the foundation of confidence — in the data, the system, and the decisions that follow.

Whether your pipelines feed AI models, executive dashboards, or cross-border analytics, traceability and accountability must be part of how those pipelines are designed to work.

Enforce role-based access and data contracts

Policy enforcement that relies on people remembering the rules is already broken. The only effective governance is the kind that's applied automatically and enforced by design.

Role-based access should be integrated into every layer of the ETL pipeline — from source extraction to destination loads. That means controlling not just who can run the pipeline but who can access specific assets, schemas, or transformations along the way.
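
As a deny-by-default sketch of role-based access at the asset level: the roles, actions and dataset prefixes below are hypothetical, and in practice this policy would usually be enforced by the warehouse, catalogue or orchestration platform rather than in pipeline code. The useful property is that nothing is permitted unless a role explicitly grants it.

```python
from enum import Enum


class Action(Enum):
    RUN = "run"
    READ = "read"
    MODIFY = "modify"


# Hypothetical policy: role -> {(action, asset pattern)}; a trailing "*" matches a prefix.
POLICY = {
    "pipeline_operator": {(Action.RUN, "pipelines.*")},
    "analyst": {(Action.READ, "mart.*")},
    "data_engineer": {(Action.RUN, "pipelines.*"), (Action.READ, "*"), (Action.MODIFY, "transforms.*")},
}


def _matches(pattern: str, asset: str) -> bool:
    return asset.startswith(pattern[:-1]) if pattern.endswith("*") else asset == pattern


def is_allowed(roles: set[str], action: Action, asset: str) -> bool:
    """Deny by default; allow only if some role grants this action on a matching asset."""
    return any(
        granted_action is action and _matches(pattern, asset)
        for role in roles
        for granted_action, pattern in POLICY.get(role, set())
    )
```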

Data contracts are another way to operationalise governance. They define what data must look like, how it must behave, and what quality thresholds it has to meet. When embedded into orchestration or transformation layers, they prevent silent degradation and catch schema drift before it pollutes downstream systems.
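
A data contract can be as simple as a declared set of columns, types and quality thresholds checked against each batch before it is published. The `DataContract` format below is a hypothetical, deliberately minimal one; dedicated contract tooling defines richer specifications. A column missing from a record is treated as null, which is how a dropped source field surfaces as a violation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnContract:
    name: str
    dtype: type
    nullable: bool = False


@dataclass(frozen=True)
class DataContract:
    # Hypothetical contract format for illustration only.
    dataset: str
    columns: tuple[ColumnContract, ...]
    max_null_rate: float = 0.0  # quality threshold applied to nullable columns


def check_contract(contract: DataContract, rows: list[dict]) -> list[str]:
    """Return violations for a batch; an empty list means the batch honours the contract."""
    violations = []
    for col in contract.columns:
        values = [row.get(col.name) for row in rows]
        nulls = sum(v is None for v in values)
        if nulls and not col.nullable:
            violations.append(f"{col.name}: {nulls} null value(s) in non-nullable column")
        elif rows and nulls / len(rows) > contract.max_null_rate:
            violations.append(f"{col.name}: null rate {nulls / len(rows):.0%} exceeds threshold")
        wrong = [v for v in values if v is not None and not isinstance(v, col.dtype)]
        if wrong:
            violations.append(f"{col.name}: expected {col.dtype.__name__}, got {type(wrong[0]).__name__}")
    return violations
```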

This kind of compliance architecture doesn’t require massive overhead. But it does require intent. The goal is to ensure that what flows through the pipeline is not just fast or complete — it’s also controlled, governed, and fit for use.

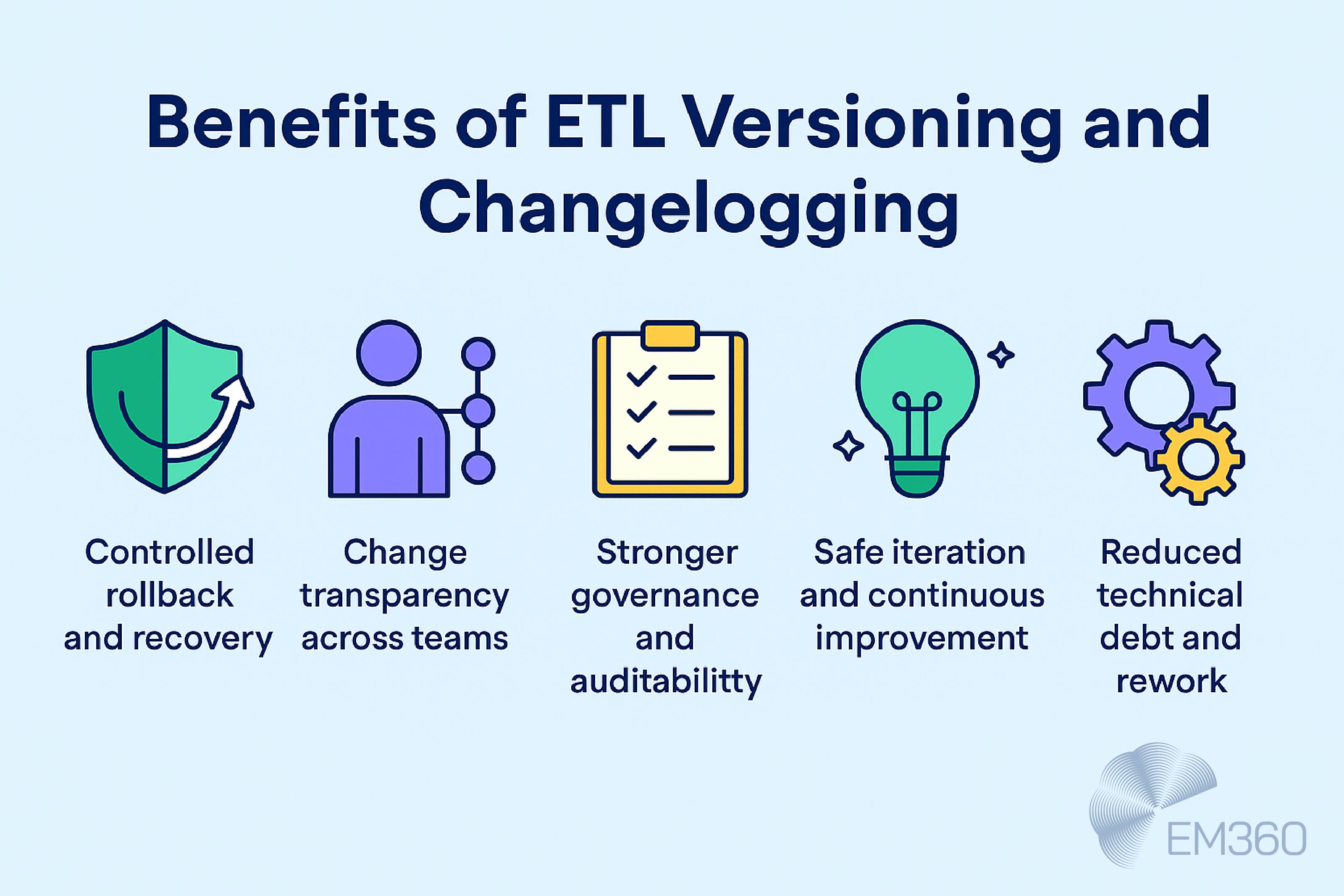

Use versioning and change logging across pipeline stages

No pipeline stays static. But without tracking what changed, when, and why, even small updates can introduce major risk.

Pipeline versioning allows teams to test changes without disrupting production. It also supports rollback when something goes wrong — not just to recover function but to recover confidence in the data.

Change management doesn’t need to be heavy. Even lightweight version control tied to a repository, a changelog, and a CI/CD trigger can bring order to an otherwise opaque deployment process. What matters is being able to answer basic questions: What changed? Who changed it? Did it work?
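
To make those three questions answerable, a version can be derived from the pipeline definition itself and recorded alongside who deployed it and whether it worked. This is an illustrative sketch with hypothetical class names; in most teams Git history and the CI/CD system hold this information, and the code only shows its shape.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Optional


def config_version(config: dict) -> str:
    """Derive a stable version id from the pipeline definition (key order does not matter)."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


@dataclass
class ChangeRecord:
    version: str                      # what changed
    author: str                       # who changed it
    reason: str
    succeeded: Optional[bool] = None  # did it work? filled in after the run


class Changelog:
    def __init__(self) -> None:
        self.records: list[ChangeRecord] = []

    def deploy(self, config: dict, author: str, reason: str) -> ChangeRecord:
        record = ChangeRecord(config_version(config), author, reason)
        self.records.append(record)
        return record

    def last_good(self) -> Optional[ChangeRecord]:
        """Most recent version that ran successfully: the rollback target."""
        return next((r for r in reversed(self.records) if r.succeeded), None)
```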

Structured audit logging closes the loop. It records access events, pipeline runs, data exceptions, and compliance checks. And when paired with metadata tagging and observability, it creates a full trace — from source to destination — that stands up to scrutiny.
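
A sketch of append-only audit logging in JSON Lines format, assuming a local file for simplicity (a real deployment would write to tamper-resistant, centrally managed storage). The event types mirror the categories named above; the function names and fields are hypothetical.

```python
import json
from datetime import datetime, timezone
from pathlib import Path

# Mirrors the event categories described above.
AUDIT_EVENT_TYPES = {"access", "pipeline_run", "data_exception", "compliance_check"}


def write_audit_event(log_path: Path, event_type: str, actor: str, target: str, **details) -> dict:
    """Append one structured audit event as a JSON line; existing lines are never rewritten."""
    if event_type not in AUDIT_EVENT_TYPES:
        raise ValueError(f"unknown audit event type: {event_type}")
    event = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "type": event_type,
        "actor": actor,
        "target": target,
        "details": details,
    }
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(event, sort_keys=True) + "\n")
    return event


def read_audit_trail(log_path: Path, target: str) -> list[dict]:
    """Reconstruct every recorded event for one dataset or pipeline, in write order."""
    with log_path.open(encoding="utf-8") as fh:
        return [e for e in map(json.loads, fh) if e["target"] == target]
```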

Governance isn’t just about rules. It’s about clarity. And clarity comes from designing systems that explain themselves without needing a meeting.

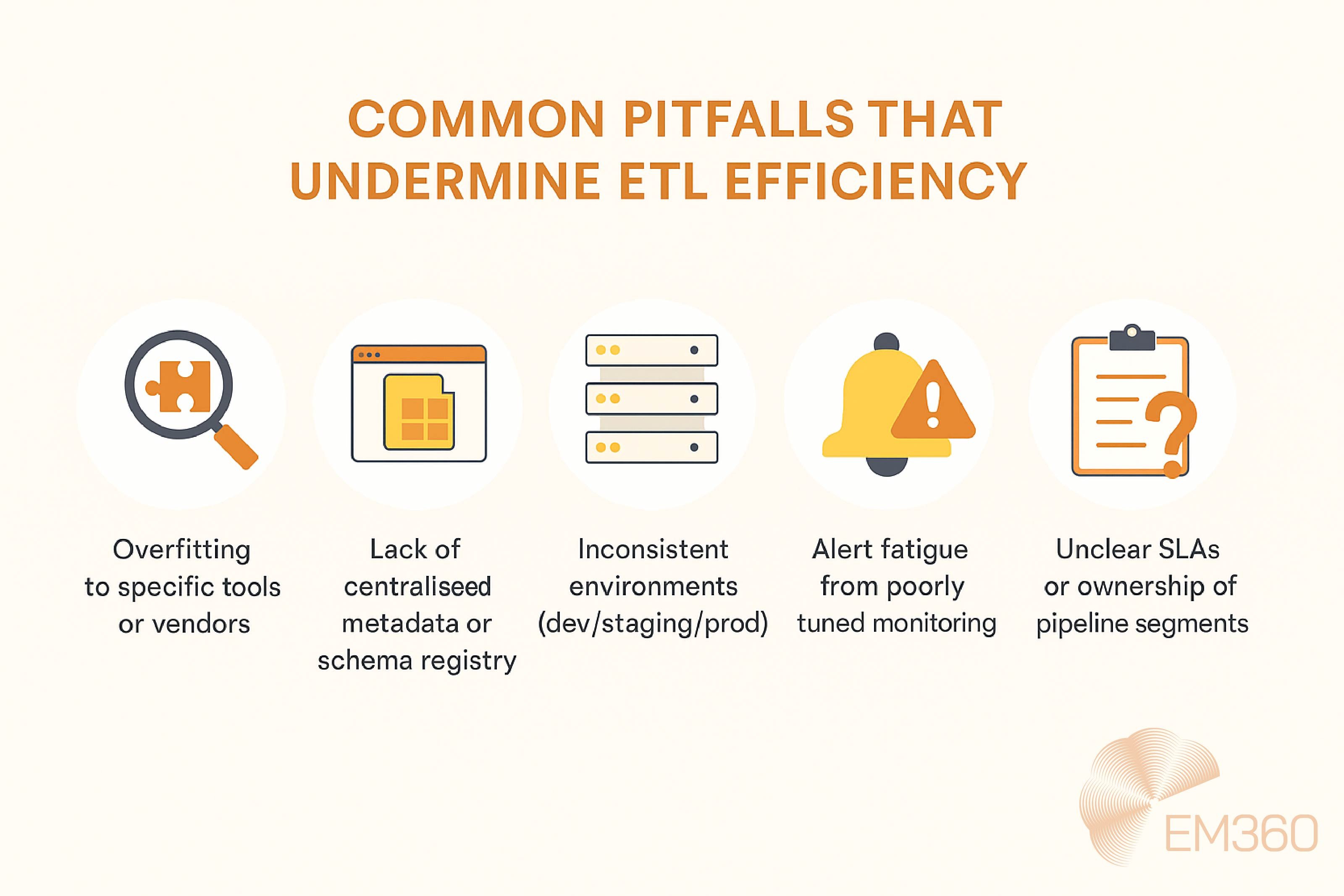

Common Pitfalls That Undermine ETL Efficiency

Even the most sophisticated pipelines can become liabilities when overlooked details stack up. ETL doesn’t usually fail because of a single broken step. It fails because of patterns — unchecked inefficiencies, fragile design choices, and governance gaps that quietly erode trust over time.

This section isn’t a recap of foundational problems. It’s a quick diagnostic tool for identifying where otherwise functional pipelines are leaking value. If you're dealing with unpredictable failures, mounting technical debt, or teams second-guessing their own data, chances are one of these issues is already in play.

These are the most common ETL issues affecting pipeline efficiency and what to do about them.

Overfitting to specific tools or vendors

It’s easy to build pipelines that lean heavily on the quirks of a specific platform or orchestration layer. But vendor-tuned logic rarely translates well across systems. This creates lock-in and makes migrations painful — or worse, impossible without a full rebuild.

The fix: Design for portability. Use abstraction layers, open formats, and configuration-driven logic where possible. Avoid embedding platform-specific logic into transformation scripts or scheduling layers unless absolutely necessary.
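
One way to keep transformation logic portable is to describe the pipeline declaratively and resolve each step from a registry of plain functions. The step names, parameters and registry below are hypothetical; the idea is that only a thin execution layer would need adapting if the platform changed, not the business logic.

```python
from typing import Callable

Step = Callable[[list[dict]], list[dict]]
STEP_REGISTRY: dict[str, Callable[..., Step]] = {}


def register(name: str):
    """Register a step factory under a platform-neutral name."""
    def decorator(factory):
        STEP_REGISTRY[name] = factory
        return factory
    return decorator


@register("filter_equals")
def filter_equals(column: str, value) -> Step:
    return lambda rows: [r for r in rows if r.get(column) == value]


@register("rename")
def rename(mapping: dict) -> Step:
    return lambda rows: [{mapping.get(k, k): v for k, v in r.items()} for r in rows]


def build_pipeline(config: list[dict]) -> Step:
    """Turn a declarative config (for example, parsed from YAML or JSON) into a runnable chain."""
    steps = [STEP_REGISTRY[s["step"]](**s.get("params", {})) for s in config]

    def run(rows: list[dict]) -> list[dict]:
        for step in steps:
            rows = step(rows)
        return rows

    return run
```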

Lack of a centralised metadata or schema registry

Without shared context, every team builds their own version of the truth. When metadata is siloed and schemas aren't tracked centrally, duplication, drift, and inconsistency become inevitable.

The fix: Invest in a central schema registry or metadata catalogue that’s accessible across teams. Use it to validate inputs, drive transformation logic, and support lineage tracking across systems.
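
To illustrate what a registry enforces, here is a minimal in-memory version with a simplified backward-compatibility rule: shared fields keep their type, new fields must be optional, and optional fields cannot become required. Production registries, such as Confluent Schema Registry, support several compatibility modes and schema formats; the class and schema shape here are assumptions made for the sketch.

```python
class SchemaRegistry:
    """Minimal in-memory registry: subject -> ordered list of schema versions.

    Each schema maps field name -> {"type": str, "required": bool}.
    """

    def __init__(self) -> None:
        self._versions: dict[str, list[dict]] = {}

    @staticmethod
    def is_backward_compatible(old: dict, new: dict) -> bool:
        for name, spec in new.items():
            if name in old:
                if old[name]["type"] != spec["type"]:
                    return False  # type changed on a shared field
                if spec["required"] and not old[name]["required"]:
                    return False  # old data may lack a field that is now required
            elif spec["required"]:
                return False  # a new required field cannot be filled from old data
        return True

    def register(self, subject: str, schema: dict) -> int:
        versions = self._versions.setdefault(subject, [])
        if versions and not self.is_backward_compatible(versions[-1], schema):
            raise ValueError(f"incompatible schema change for {subject}")
        versions.append(schema)
        return len(versions)  # 1-based version number

    def latest(self, subject: str) -> tuple[int, dict]:
        versions = self._versions[subject]
        return len(versions), versions[-1]
```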

Inconsistent environments (dev/staging/prod)

ETL pipelines often behave perfectly in development, then fail silently in production. The cause is usually environment drift — differences in configuration, data shape, or permissioning across stages.

The fix: Standardise deployment processes using infrastructure-as-code, environment parity testing, and containerised pipeline components. Consistency across environments reduces surprise failures and simplifies debugging.
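
Parity testing can start with something as simple as diffing each environment’s configuration and failing the build on unexpected differences. In the sketch below, the configuration keys and the allow-list of keys that legitimately differ (sizing, connection targets) are hypothetical.

```python
def flatten(config: dict, prefix: str = "") -> dict:
    """Flatten nested config into dotted keys so environments can be compared key by key."""
    flat = {}
    for key, value in config.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, f"{path}."))
        else:
            flat[path] = value
    return flat


def parity_drift(envs: dict[str, dict], allowed_to_differ: set[str]) -> dict[str, dict]:
    """Report keys whose values differ across environments, ignoring expected differences."""
    flat = {name: flatten(cfg) for name, cfg in envs.items()}
    all_keys = set().union(*flat.values())
    drift = {}
    for key in sorted(all_keys - allowed_to_differ):
        values = {env: cfg.get(key, "<missing>") for env, cfg in flat.items()}
        if len(set(map(repr, values.values()))) > 1:
            drift[key] = values
    return drift
```

A CI job could fail whenever `parity_drift` returns a non-empty result.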

Alert fatigue from poorly tuned monitoring

A pipeline that sends alerts for every warning — or fails to escalate real issues — ends up ignored. Over-alerting is just as dangerous as under-monitoring. It masks real problems behind noise.

The fix: Define alert thresholds based on business impact, not just system events. Route alerts to the right owners, and tag them with actionable context. Use dashboards for visibility, not as a crutch for missing automation.
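
As a sketch of impact-based routing, the function below pages an owner only when a high-impact dataset is affected, opens a ticket for significant but non-urgent problems, and leaves everything else on a dashboard. The ownership map, tier list and thresholds are hypothetical placeholders for values a team would agree with the business.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    pipeline: str
    dataset: str
    message: str
    minutes_late: int = 0
    failed_rows: int = 0


# Hypothetical ownership map and impact tier; in practice these live in a catalogue.
OWNERS = {"mart.revenue": "finance-data-oncall", "mart.marketing": "growth-analytics"}
TIER_1 = {"mart.revenue"}  # datasets feeding regulatory or executive reporting


def route(alert: Alert) -> tuple[str, str]:
    """Return (severity, destination) based on business impact, not raw event volume."""
    owner = OWNERS.get(alert.dataset, "data-platform-triage")
    if alert.dataset in TIER_1 and (alert.minutes_late > 30 or alert.failed_rows > 0):
        return "page", owner
    if alert.minutes_late > 120 or alert.failed_rows > 1000:
        return "ticket", owner
    return "log_only", "dashboard"
```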

Unclear SLAs or ownership of pipeline segments

When no one knows who owns which part of the pipeline, accountability breaks down. Missed deadlines, incomplete loads, and incorrect outputs can all go unresolved simply because the escalation path is unclear.

The fix: Document SLAs at both the pipeline and dataset levels. Define ownership explicitly — not just for execution, but for quality, access, and monitoring. Align teams on who’s responsible for what and what good looks like.

These aren’t just minor inefficiencies. Left unchecked, they can stall delivery, degrade trust, and turn ETL into a chronic liability. Fixing them doesn’t require a total overhaul. It requires clarity, discipline, and the willingness to treat pipelines as products — not just utilities.

Final Thoughts: Best Practices Aren’t About Perfection — They’re About Progress

No data team gets it all right. Not at scale, not consistently, and not without some hard lessons along the way. That’s not a failure of skill — it’s a reflection of how complex and evolving modern enterprise data strategy has become.

What separates high-performing teams isn’t perfect execution. It’s ETL maturity. It’s the ability to identify where pipelines are fragile, where governance is missing, and where observability needs to be strengthened. It’s the willingness to prioritise structure over shortcuts, even when delivery timelines are tight.

The best architectures don’t chase trends. They choose patterns that match their workload, stack, and risk appetite. They build scalable pipelines that are modular, testable, and ready to evolve — without burning everything down in the process.

Best practices aren’t about dogma. They’re about continuous improvement. A clear way to spot issues earlier, recover faster, and scale with more confidence as the business grows.

For more tactical guidance on ETL tools, orchestration platforms, or designing resilient data architectures, explore EM360Tech’s deeper coverage on enterprise data pipelines.
