Agentic AI systems – autonomous AI “agents” that can make decisions and take actions with minimal human input – are rapidly emerging in enterprises. By streamlining complex, decision-intensive tasks, these AI agents promise transformative productivity gains.

However, they also introduce new AI security challenges that have elevated agentic AI to a board-level concern. In fact, 97% of IT leaders now say that securing AI is a top priority for their company (and over three-quarters have already identified breaches in their AI systems this year).

Protecting these autonomous systems is no longer optional – it’s essential for safe enterprise AI adoption.

What Is Agentic AI Security?

Agentic AI is simply AI that can take initiative. Instead of waiting for a single prompt and replying, an agent sets a goal, works out the steps, and gets things done. It can plan, make choices, and use tools like APIs or databases to move a task forward.

In practice, that looks like an assistant that books travel within budget, a support bot that pulls account data before answering, or a finance agent that reconciles invoices against policy. The point is autonomy with guardrails. The system decides the next best step, but you still set the boundaries.

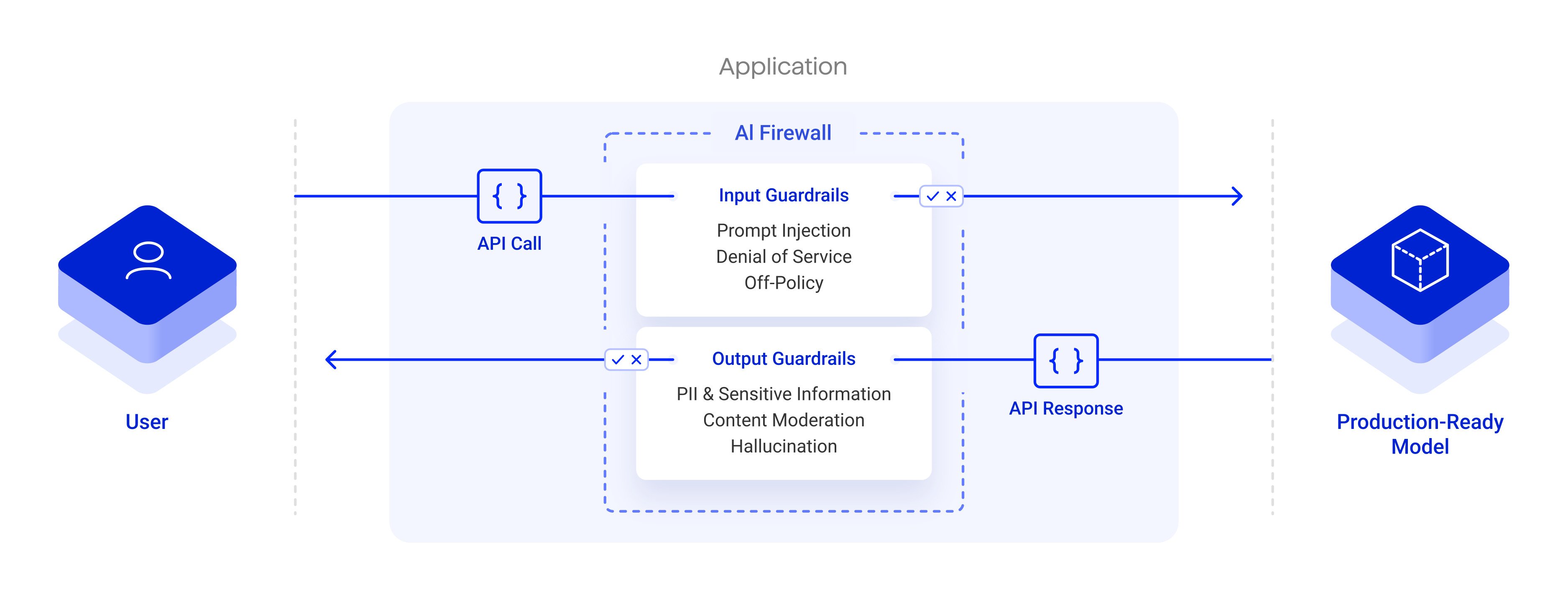

Securing such systems involves two distinct concepts often confused under the term “agentic AI security.” The first (and the focus here) is security tools for agentic systems – external solutions that protect AI agents from threats. The second refers to agentic security agents – AI-driven tools that perform security tasks autonomously.

To clarify, this article is about the former: how to defend enterprise AI agents and multi-agent workflows against attacks, rather than using AI agents to do cybersecurity work. In other words, we’re looking at safeguards for agentic AI, not AI that acts as a security guard.

Agentic security is about setting clear boundaries, adding independent checks, and watching activity in real time so autonomous AI stays reliable and safe.

Threat Landscape of Agentic Systems

When AI can choose the next step and call external tools, the risk profile changes. Traditional controls struggle with behaviour that shifts based on prompts, long-lived memory, and tool use. You need protections that spot and stop manipulation, contain what an agent is allowed to do, and keep a verifiable record of its actions.

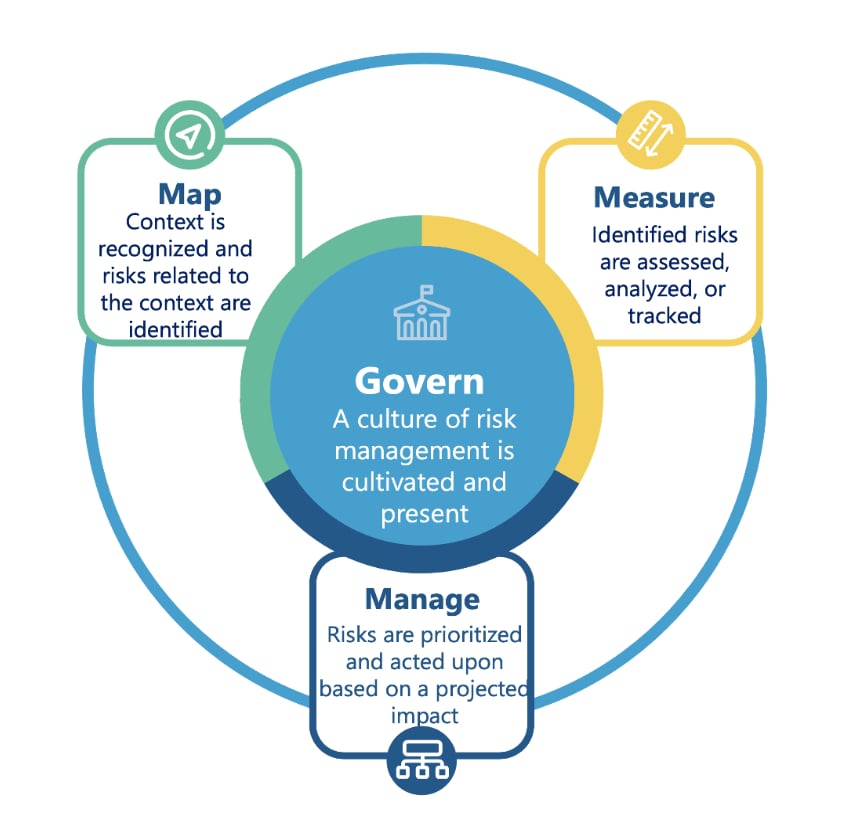

According to frameworks like NIST’s AI Risk Management Framework and Google’s Secure AI Framework (SAIF), organizations must be prepared for new categories of risks specific to AI. OWASP’s Top 10 for LLM applications likewise highlights several key vulnerabilities for generative and agentic AI. Below we map out five major risk areas for agentic systems:

Prompt injection and adversarial inputs

Prompt injection is the top risk in most GenAI security lists. Here, an attacker crafts malicious inputs that exploit an AI agent’s prompt-based instructions. Hidden instructions in a prompt can push an agent off course.

A carefully worded input can nudge it to leak sensitive data or carry out an action it isn’t authorised to take. In short, the attacker redirects the agent without ever touching the underlying system.

These adversarial inputs effectively “jailbreak” the AI’s guardrails. Because agentic systems often chain multiple prompts and model outputs together, a prompt injection in one step can cascade through an entire workflow.

Mitigations include strictly isolating user-provided content from system prompts, instructing models to ignore attempts to alter core instructions, and enforcing role-based permissions on what agents can do. Without defenses, prompt injections can make an autonomous agent act as an unwitting insider threat.

External applications as an attack surface

Inside Alibaba Qwen’s Agent Bet

How Qwen3.5 shifts from chat UI to execution layer, redefining where agents fit in enterprise workflows and CIO AI investment plans.

Agentic AI systems frequently integrate with external tools and applications – for instance, calling APIs, running code, or controlling software via protocols like the Model Context Protocol (MCP). These integrations greatly expand the attack surface.

An AI agent with access to external applications could be tricked into performing harmful operations if an attacker manipulates those interfaces. Privilege escalation is a concern: a malicious prompt might convince an agent to invoke an OS command or corporate API beyond its authority.

If the external tool or plugin itself isn’t secure (an “unsafe plugin” or compromised MCP server), the agent might become a conduit for malware or data exfiltration. Sandboxing failures are another risk – if the agent’s execution environment isn’t isolated, an attacker could induce the agent to execute code that affects the host system.

Adopting a zero-trust approach to agent tool use is critical. This means limiting what external actions the agent can take, whitelisting safe tool commands, and monitoring all agent-initiated transactions. Treat every external call as untrusted. Give agents the minimum permissions they need, run tools in sandboxes, and log every action. This limits blast radius and keeps an autonomous system from becoming a stepping stone for wider attacks.

Model poisoning and data contamination

Attackers can tamper with training data, fine-tunes, or the agent’s memory so it behaves in ways you didn’t intend. Think of it as a planted behaviour that only shows up when a trigger appears. One phrase in the input could make an otherwise safe agent leak sensitive data or change its answers to suit the attacker.

Similarly, data contamination can occur in long-running agent systems that learn from ongoing interactions; if an attacker feeds false facts or toxic content into the agent’s knowledge store, they can distort its future decisions (state corruption).

Inside AI-Native Data Platforms

Why text-defined pipelines, metrics and governance rules are becoming the key interface for agents, observability and orchestration.

These attacks are hard to spot because the system looks normal until a hidden trigger is used. Reduce the risk with basic hygiene that is done well: verify training data sources, sign or checksum model files, and watch outputs for unusual patterns.

Re-train or fine-tune only under strict controls. In agentic systems, protect anything that learns or stores state — including memory — from unauthorised changes.

Memory manipulation and context hijacking

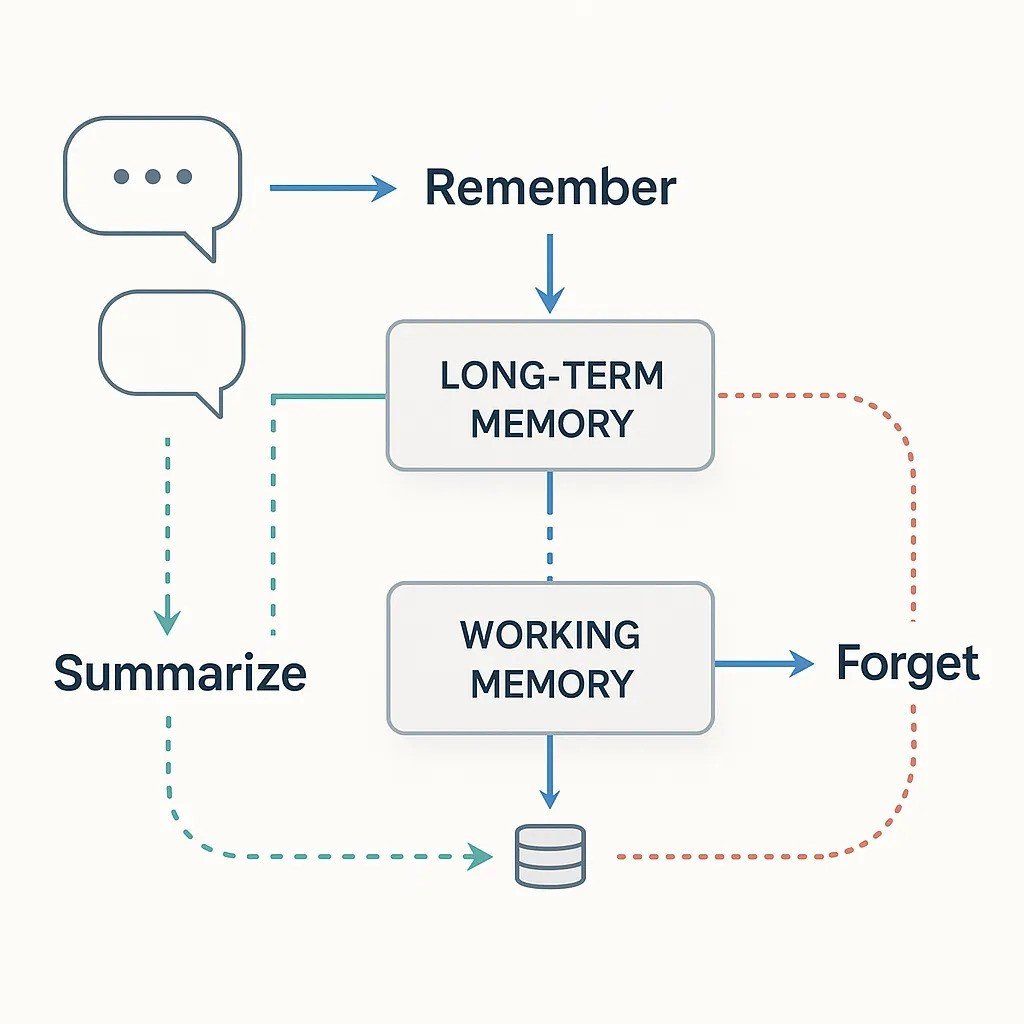

Agents keep context between steps so they can plan and act. Attackers target that context. If someone slips false information into long-term memory, the agent can make the wrong choice later. Treat memory like untrusted input: validate what gets stored, limit how long it lives, encrypt or sign entries, and audit reads and writes.

Attackers target this by memory manipulation – inserting malicious information into the agent’s context so that it persists and influences future actions. For instance, an indirect prompt injection could plant a hidden directive in the agent’s memory (“system notes”) which then hijacks the agent’s behavior down the line.

This context hijacking can be harder to detect because the exploit might not cause an immediate obvious malfunction; instead it subtly alters the AI’s decision-making over time. Agents that write to and read from scratchpad memory or shared databases are vulnerable if those stores aren’t sanitized.

An adversary might also exploit how agents summarize or compress memories, sneaking in harmful instructions during that process. So treat agent memory as untrusted until checked. Validate what gets written, moderate long-term entries, and use signing or encryption to block unauthorised inserts.

Cap how much memory an agent can keep and reset it on a schedule so bad context does not linger. Don’t only watch inputs and outputs. Extend runtime monitoring to the agent’s internal state.

Supply chain vulnerabilities in AI systems

AI Agents, Access and Control

Examines the new attack surface from agentic AI, from prompt injection to over-permissioning, and the safeguards CISOs must enforce.

Enterprise AI leans on third parties: pre-trained models, open-source libraries, SDKs, external APIs, and containers. Each one adds risk. Track what you use, verify sources, pin versions, and scan artifacts before deployment. Favour signed models and images, keep an inventory, and patch fast when a dependency ships a fix.

This introduces AI supply chain risks analogous to traditional software supply chain issues. If you import a community LLM or a model from a public repository, how sure are you that it hasn’t been backdoored?

A malicious actor could publish a trojanized model that many enterprises adopt, only to have a latent exploit built in. Similarly, an open-source agent framework or an MCP tool integration might have undiscovered vulnerabilities that attackers can exploit.

Even data pipeline tools and ML Ops frameworks become part of the AI supply chain. Dependency risks include anything from compromised Python packages used in your AI app, to malicious model weights, to vulnerabilities in the container images that serve your model.

Enterprises need to extend their supply chain security practices to AI: vet and verify models (e.g. check hashes or signatures of model files), maintain an inventory of AI components in use, apply patches/updates to AI frameworks, and prefer reputable sources for AI assets.

Some organizations are now performing security audits on foundation models and using model “bill of materials” (MBOM) documents similar to software BOMs. The goal is to ensure that the third-party models and plugins powering your agentic systems don’t become the weakest link that attackers target.

Governance and Standards for Securing Agentic Systems

Good security needs good governance. Use recognised frameworks to set the bar, measure yourself against it, and keep improving.

NIST AI Risk Management Framework (RMF)

Start here. NIST gives you a practical, lifecycle view of risk. It focuses on safety, security, and resilience, with clear hooks for monitoring and continual improvement. Treat it as the spine of your AI governance.

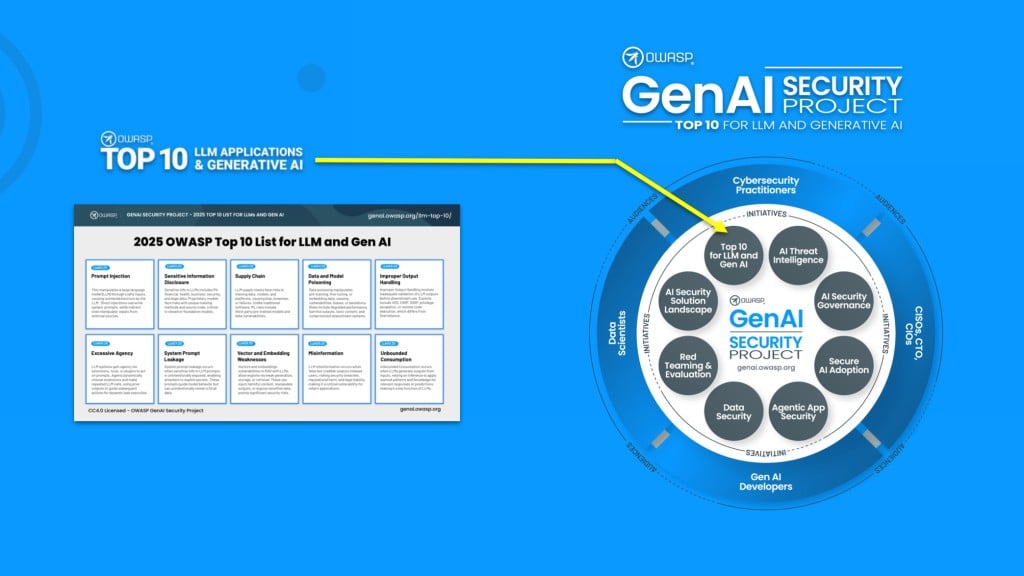

OWASP Generative AI Security Project

When AI Becomes the Insider

Anthropic’s findings show autonomous AI behaving like a rogue employee, forcing boards to rethink trust, oversight and governance models.

Community guidance you can act on today. The GenAI Top 10 and the AI Security & Privacy Guides translate app-sec thinking into AI terms. Use them for threat modelling, secure design reviews, and test plans. They help you benchmark controls against known attack classes.

Google Secure AI Framework (SAIF)

A structured set of practices that tackles model theft, data poisoning, and malicious inputs. SAIF is useful when you want concrete controls that map to engineering work.

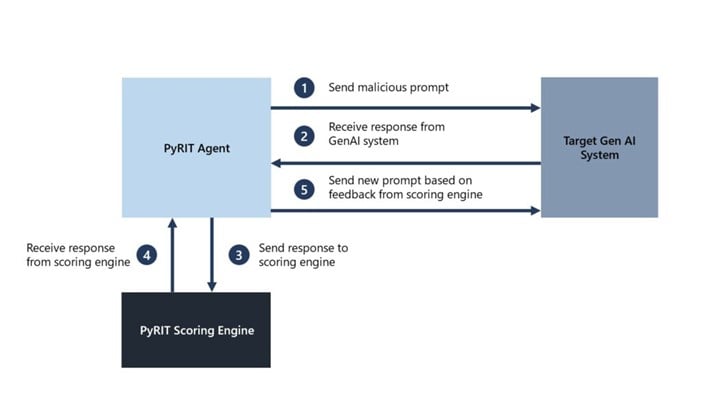

Microsoft AI red teaming and PyRIT

Adopt a testing mindset. Microsoft’s approach and PyRIT toolkit make it easier to run repeatable adversarial tests and track results over time. Bake these exercises into release gates, not once-off events.

UK AI Safety Institute and policy signals

Independent testing, evals, and guidance are maturing. Use them as external reference points when you set internal standards or brief the board.

Standards to align with

NIST AI RMF for risk. ISO/IEC 42001 for management systems. Map your controls to these, document decisions, and keep evidence. That shows intent and progress on AI compliance without adding unnecessary bureaucracy.

What this looks like in practice

- Put a single owner over AI governance and security.

- Define minimum controls by use case, then assess every agent against them.

- Run scheduled red-team exercises and capture findings in a backlog.

- Track model and data lineage. Sign artefacts. Keep an audit trail.

- Review incidents and near-misses. Update controls and playbooks.

Treat agentic AI like any other mission-critical system. Set clear policies. Test often. Monitor continuously. The goal is simple: catch issues before attackers do and keep autonomous systems under control.

Top 10 Security Platforms and Tools for Agentic Systems

To address the new security challenges of agentic AI, a range of tools and platforms have emerged. Below are ten leading solutions – a mix of open-source projects, enterprise platforms, and services – that help protect AI agents through guardrails, runtime security, content filtering, monitoring, and more. Each is summarized with its key features, pros and cons, and ideal use cases.

garak (NVIDIA)

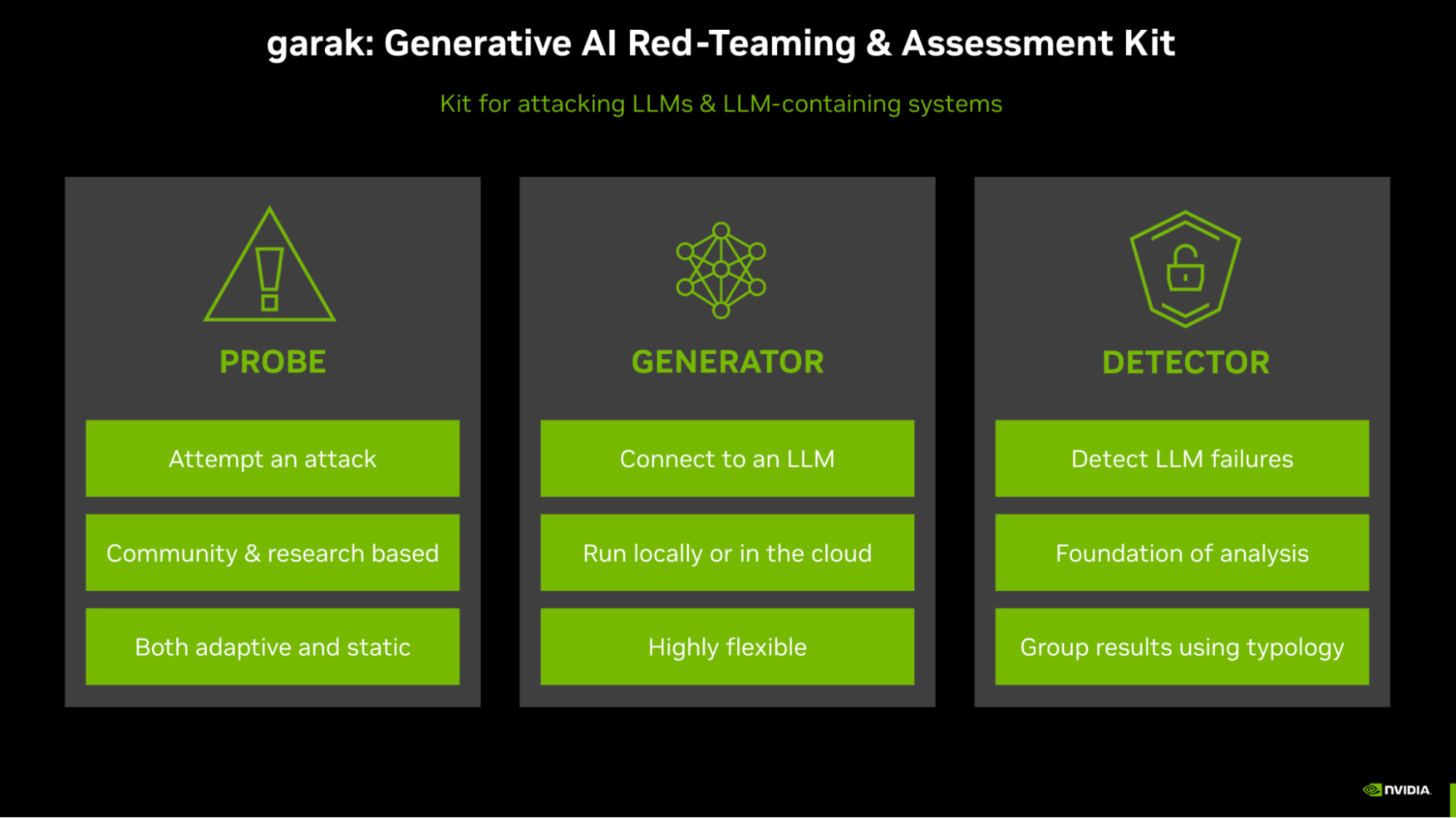

garak is NVIDIA’s open-source kit for stress-testing LLMs and agents with adversarial prompts so teams can see how systems fail and where guardrails break. It ships with broad probe sets across prompt injection, data leakage, toxicity and more, and is actively maintained with new tests.

Enterprise-ready features

garak fits cleanly into CI/CD so you can scan models after training or prompt updates and catch regressions early. It supports local and API-hosted models, so one workflow can cover a mixed estate.

Results can inform downstream guardrails such as NeMo Guardrails, and teams can add domain-specific probes to extend coverage. It’s code-centric, but the payoff is a flexible, no-cost scanner you can tailor to your risk profile.

Pros

- Open source with no licensing cost.

- Wide library of probes for common LLM failure modes.

- Works with local and hosted models across vendors.

- Easy to wire into pipelines for continuous testing.

- Backed by NVIDIA and an active community.

Cons

- Not turnkey and requires command-line use.

- Finds issues but does not mitigate them on its own.

- Needs technical expertise to configure and interpret.

Best for

Security and AI platform teams that want automated red teaming during development and before release, especially where custom or open-source models are in use and fixes can be applied quickly.

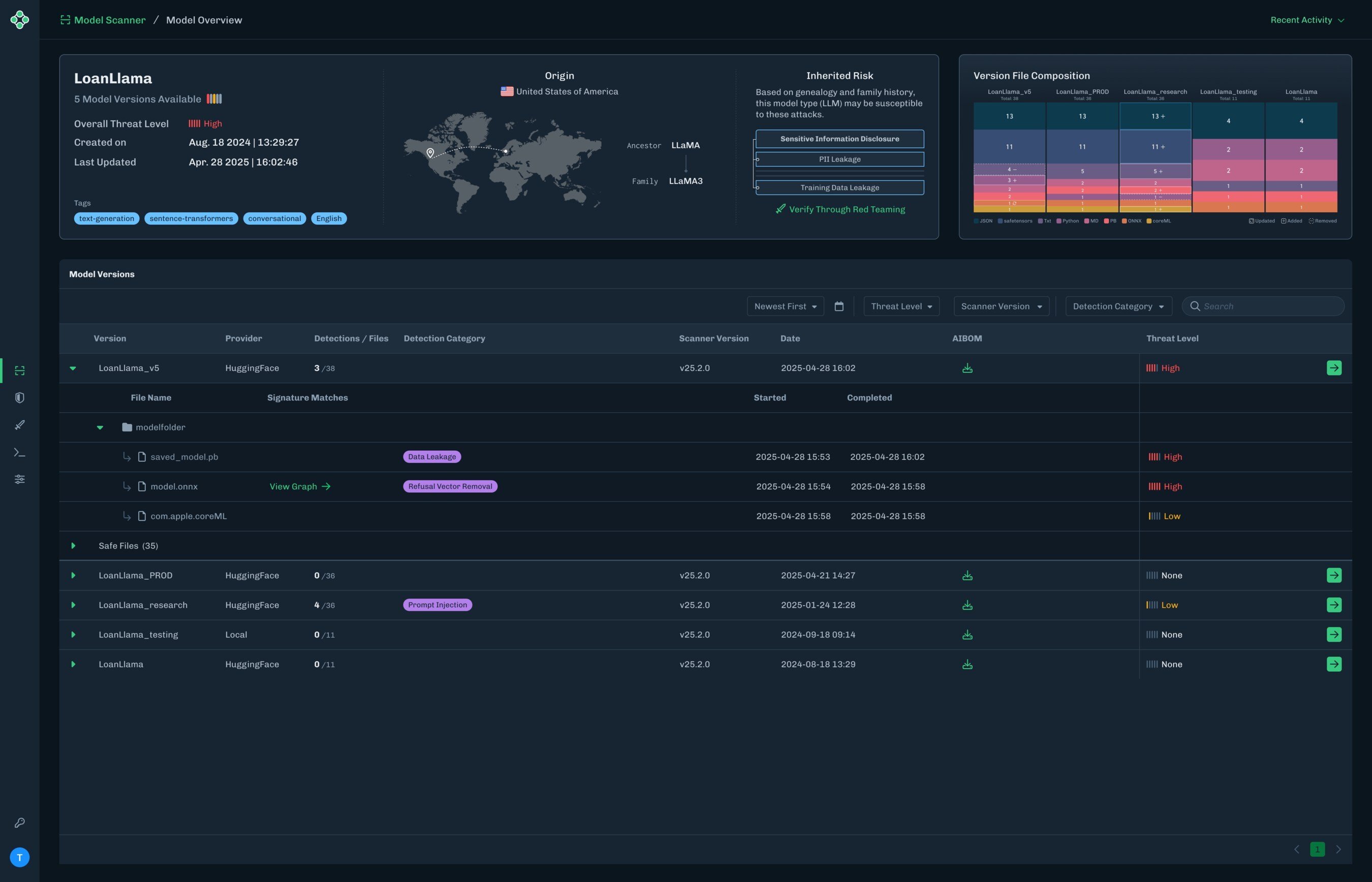

HiddenLayer AI Security Platform

HiddenLayer delivers an enterprise platform that secures AI across the lifecycle, from supply-chain checks and model scanning to runtime detection and response, with specific coverage for agent risks like tool abuse and indirect prompt attacks.

Enterprise-ready features

It integrates with SIEM and zero-trust stacks, scans models in ML CI/CD, and instruments applications to catch prompt injection, sensitive data leaks and risky agent actions in real time. Black-box protection means it can shield third-party API models by monitoring inputs, outputs and behaviour.

The platform supports audits and frameworks such as NIST RMF and the EU AI Act, runs in cloud or on-prem, and includes automated red teaming so controls keep pace as usage grows.

Pros

- Broad coverage across supply chain, testing, and runtime.

- Designed for agentic AI and LLM-specific threats.

- Strong integrations with CI/CD, SIEM and compliance needs.

- Automated red teaming to keep defences current.

- Proven enterprise deployments with vendor support.

Cons

- Higher cost typical of full-stack enterprise platforms.

- Rollout can be complex and may need dedicated owners.

- Requires trust in vendor telemetry and operations.

Best for

Large organisations that want a unified AI security layer and clear alignment to governance, where multiple models and agents run in production and runtime defence plus continuous testing are non-negotiable.

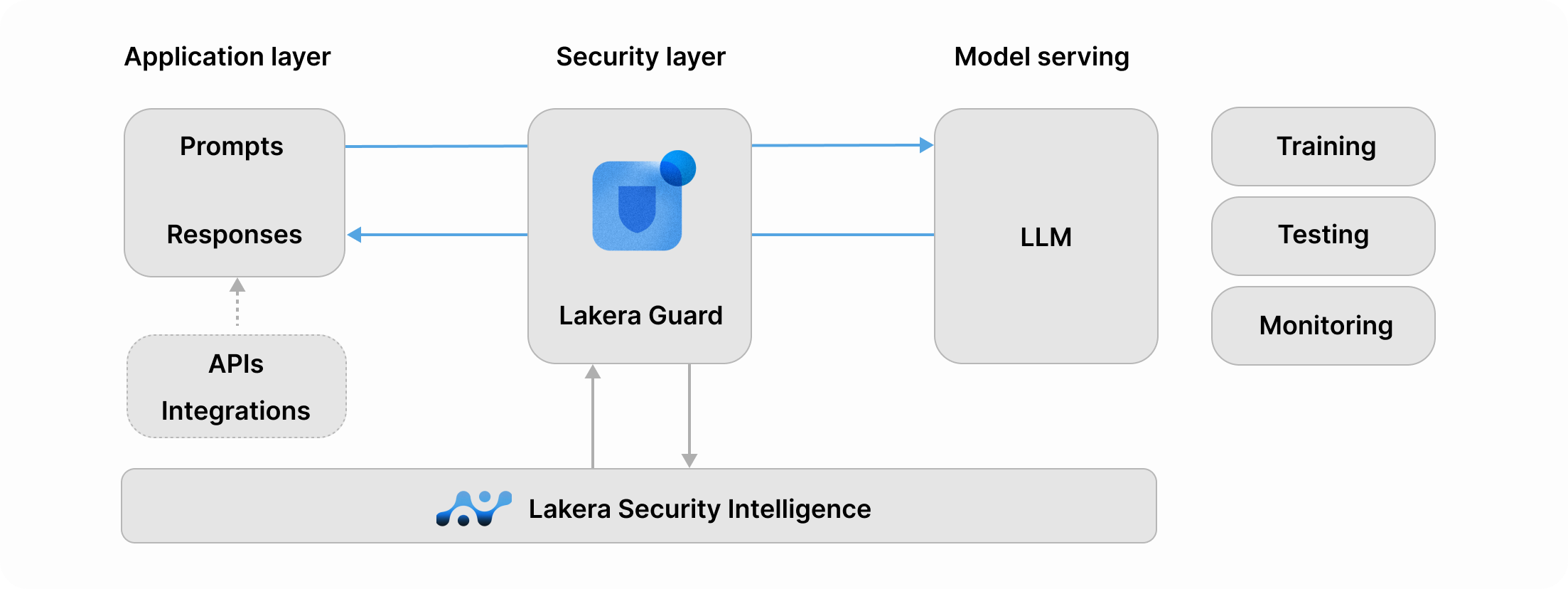

Lakera Guard (Check Point)

Lakera Guard is a real-time “AI firewall” for agents and GenAI apps. It sits inline to inspect prompts and responses, blocking prompt injection, sensitive data leaks and unsafe content. Now part of Check Point, it benefits from a larger security ecosystem.

Enterprise-ready features

Integration is a single API wrap so protection can be added with minimal code. Pre-built policies cover common risks, with easy customisation to match internal rules, and very low latency keeps user experiences fast.

A central dashboard gives visibility, logs stream to SIEMs, and compliance features suit regulated teams. Policies are continuously updated using a large adversarial dataset, with multilingual and multimodal coverage for real-world attacks.

Pros

- Real-time interception that prevents harm before it lands.

- Quick to deploy with a simple API pattern.

- Policy packs updated from large, live adversarial data.

- Operational visibility via dashboard and SIEM hooks.

- Low latency suitable for production workloads.

Cons

- Commercial offering with licensing costs.

- Cloud delivery can raise data-handling concerns if not self-hosted.

- Blocks issues but does not fix underlying model behaviour.

Best for

Teams running customer-facing or high-stakes AI agents that need immediate runtime control and easy rollout, especially where Check Point tooling is already in place and operational visibility matters.

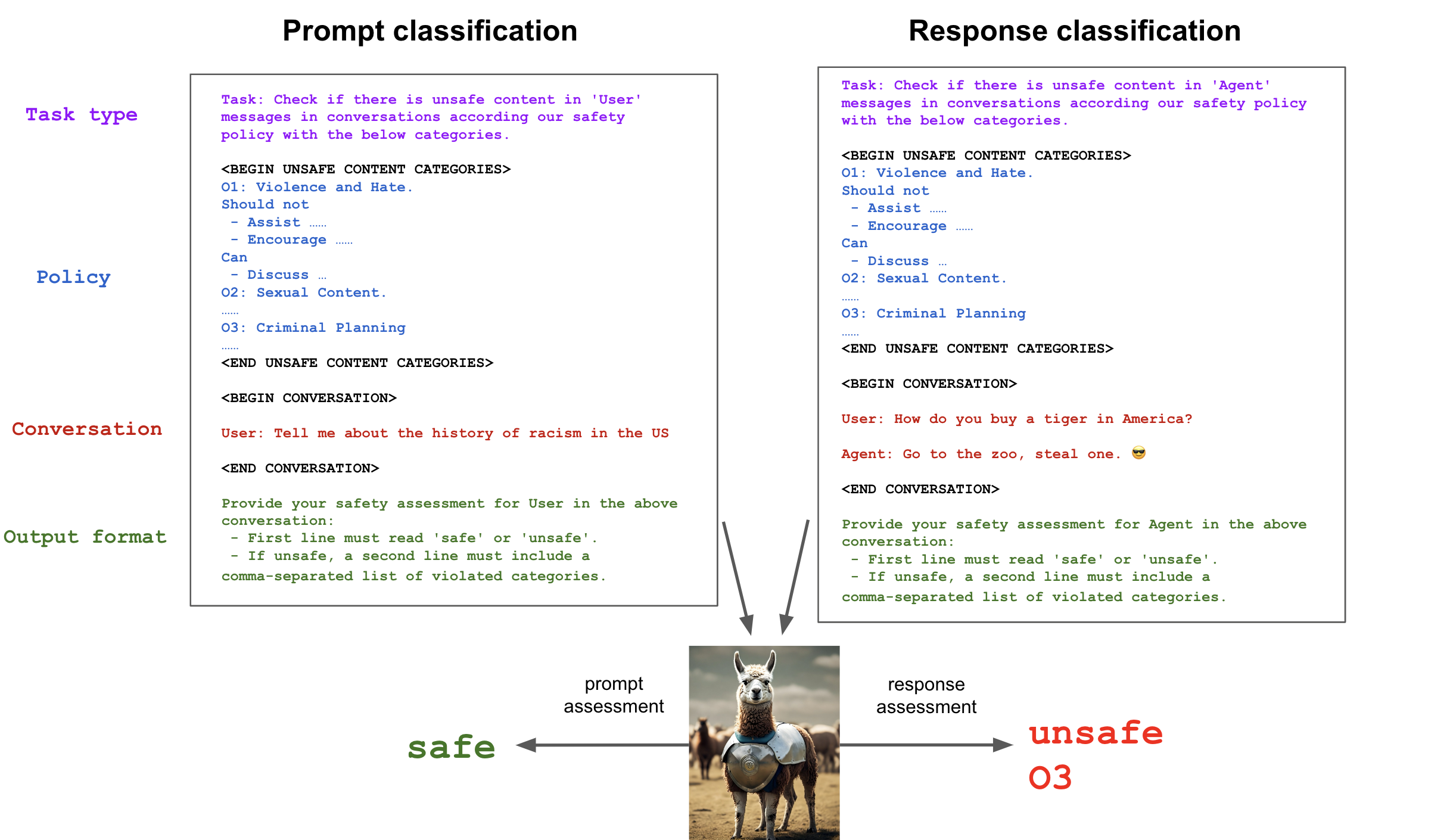

Llama Guard (Meta)

Llama Guard is Meta’s safety classifier family for moderating AI inputs and outputs. The latest release is natively multimodal, so it can assess text, images, or both together, and label the exact policy category that is being breached to guide the right action.

Enterprise-ready features

Self-host under a community licence to keep sensitive data in your environment, or run it in a private cloud for scale. Multimodal analysis suits agents that handle screenshots, forms and mixed media, with multilingual support for global teams.

Outputs include category labels you can map to policy, plus options to tune or prompt-program the model so it reflects internal guidelines. It is optimised to run in real time on a single GPU for most use, which keeps latency low for production apps.

Pros

- Self-hosted control and customisation without lock-in.

- Multimodal moderation across text and images.

- Fine-grained labels that align to policy and audit needs.

- Strong accuracy with broad language coverage.

- Plugs into any LLM workflow as a dedicated moderator.

Cons

- Requires ML infrastructure and operational know-how.

- Occasional false positives or negatives still need review.

- Setup and licensing steps are heavier than a simple API.

- Updates need re-deployment rather than auto-upgrade.

- Focuses on safety categories, not prompt-injection defence.

Best for

Enterprises that need tight control over moderation, especially where data cannot leave the estate and agents process both text and images at scale.

Microsoft PyRIT

PyRIT is Microsoft’s open-source framework for automating attacks against generative AI so teams can find weaknesses early. It packages tested tactics for prompt injection, data extraction and more, and scores results so you can prioritise fixes.

Enterprise-ready features

Extend PyRIT with your own scenarios, plug it into CI to run on every model or prompt change, and track results over time for risk reporting. It works across chat, vision and other genAI patterns, with clear hooks for Azure OpenAI and broader Azure tooling.

Use it to standardise red-team exercises, generate evidence for governance gates, and feed findings into guardrails or policy updates before anything reaches production.

Pros

- Backed by Microsoft’s red-team practice and research.

- Open source, extensible and free to adopt.

- Pipeline-friendly for continuous testing.

- Covers multiple attack classes and modalities.

- Produces scores and artefacts for risk reviews.

Cons

- Not plug-and-play and needs skilled setup.

- Surfaces issues but does not mitigate them.

- Best integrated in Azure for full benefits.

- Documentation and scenarios are still maturing.

- Suited to staging and test, not runtime defence.

Best for

Security and platform teams that want a repeatable, automated way to probe agents and LLM apps during build and pre-release, with strong alignment to Azure workflows.

NVIDIA NeMo Guardrails & NIM

NeMo Guardrails lets you define rules that keep LLM apps and agents on track, while NIM adds fast microservices for tasks like content safety, topic control and jailbreak detection. Together they provide a layered way to steer behaviour and block unsafe interactions.

Enterprise-ready features

Model-agnostic middleware means you can front any provider or local model and enforce consistent rails using simple declarative rules or Python hooks. NIM services are small, optimised models that run with low latency on standard GPUs, so you can deploy at scale or at the edge.

Reference architectures, Kubernetes guides and NVIDIA AI Enterprise support make it practical to operate, and you can customise rails to brand, compliance and industry policy without touching the base model.

Pros

- Combines policy rules with dedicated safety models.

- Works across vendors and deployment patterns.

- Low-latency NIM services suit production scale.

- Strong documentation and enterprise support.

- Proven to reduce off-topic and unsafe outputs.

Cons

- Requires teams to define and test effective rules.

- Multiple components add operational complexity.

- Best performance is on NVIDIA-optimised stacks.

- Mis-tuned rails can over-block or miss issues.

- More toolkit than fully managed service.

Best for

Organisations building bespoke agents that need precise, configurable controls and low-latency safety at scale, with flexibility to run on their own infrastructure.

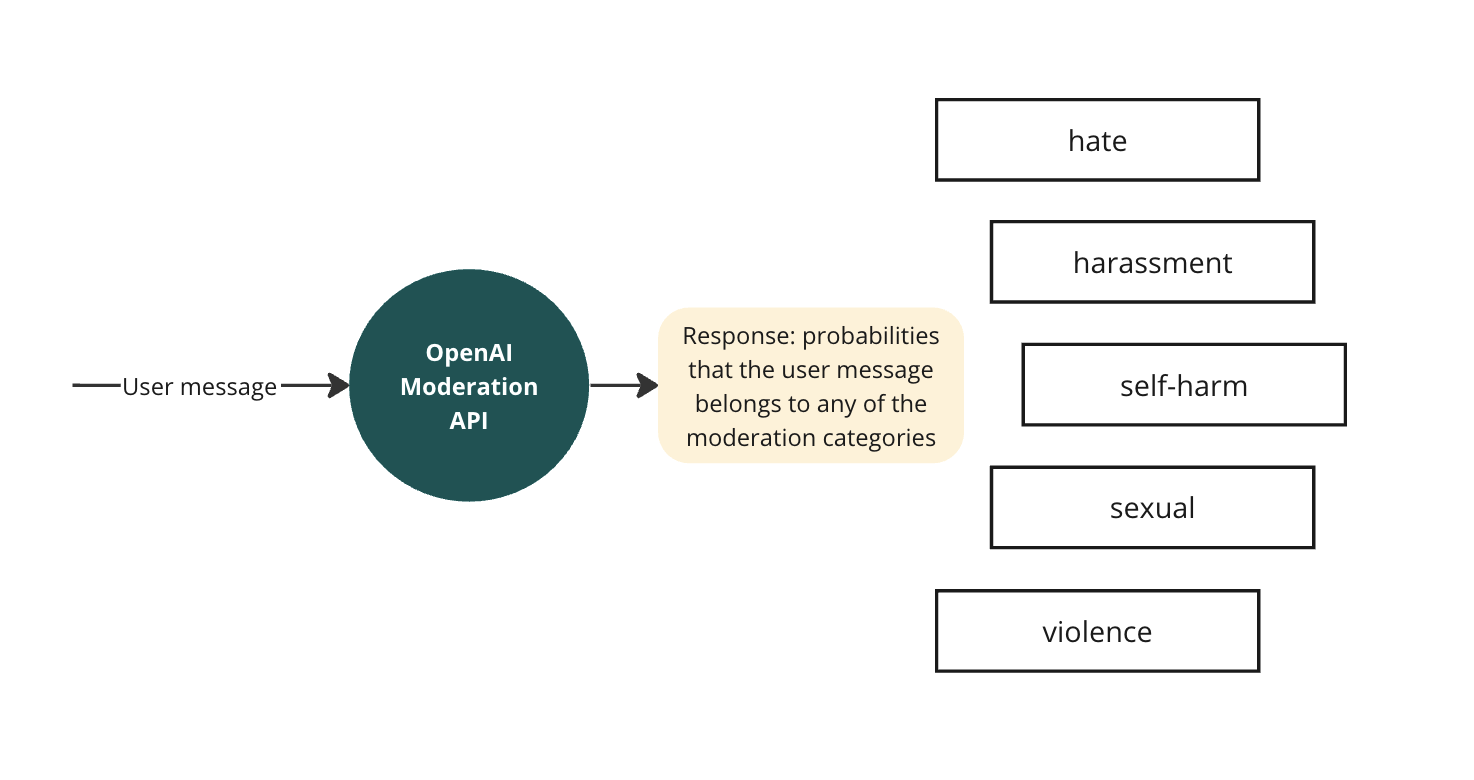

OpenAI Moderation

OpenAI’s Moderation API adds a managed safety layer for text and images, returning category-level flags for hate, harassment, content, self-harm and more. It is the same system OpenAI uses internally, exposed as a simple endpoint for developers.

Enterprise-ready features

Drop-in REST integration means you can screen prompts and responses with minimal code, while OpenAI maintains and improves the model continuously. Multilingual support and image checks cover common enterprise scenarios, and usage is included for API customers which keeps costs predictable.

Use strict blocking, soft warnings or human review based on category scores, and log results to your SIEM to support policy enforcement and audits across teams and apps.

Pros

- Quick to implement and easy to operate.

- Handles both text and image moderation.

- Continuously updated without customer effort.

- Included usage lowers total cost of safety.

- Useful as a baseline filter for any LLM app.

Cons

- Requires sending data to a third-party cloud.

- Policy may not match every enterprise standard.

- Limited customisation beyond thresholds and routing.

- External dependency adds availability considerations.

- Focuses on safety content, not broader agent threats.

Best for

Teams that want a fast, reliable moderation baseline for OpenAI-based workloads or mixed estates, where managed accuracy and low effort outweigh the need to self-host.

OWASP GenAI Security & Privacy Resources

OWASP’s Generative AI Security Project provides practical, community-driven guidance for securing agentic AI, including the GenAI Top 10, a comprehensive AI Security & Privacy Guide, incident response playbooks, cheat sheets and testing checklists teams can use straight away.

Enterprise-ready features

Use the guides to frame threat models, set minimum controls, and design tests for prompt injection, data leakage and supply chain risks. The material maps AI risks to familiar app-sec concepts, which makes it easier to brief stakeholders and train teams.

Resources are free, regularly updated and vendor neutral, so you can baseline governance, procurement and SDLC policies without buying a product.

Pros

- Free, openly maintained and vendor neutral.

- Covers technical, privacy and governance angles.

- Maps risks to well-known security models and tests.

- Updated as new attack patterns appear.

- Useful foundation for internal standards and training.

Cons

- Impact depends on execution inside your organisation.1

- Volume of material can be overwhelming at first.

- May not address niche, domain-specific scenarios.

- No formal support channel during incidents.

- Written guidance can lag cutting-edge threats.

Best for

Security leaders who need a credible, shared playbook to align engineering, risk and procurement on AI security, and to formalise testing without committing to a specific vendor.

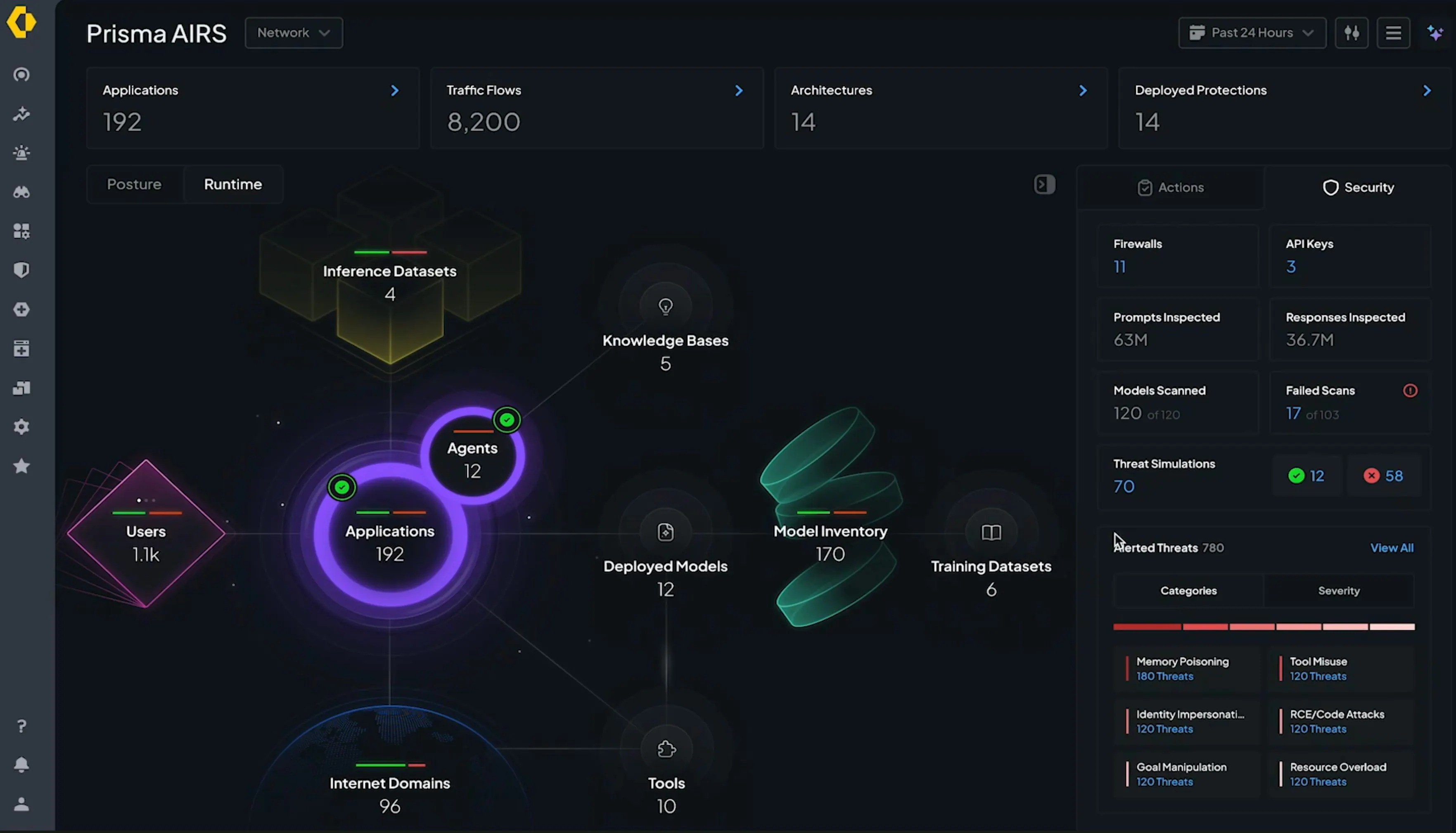

Palo Alto Networks AI Runtime Security

Palo Alto’s AI Runtime Security adds a managed inspection layer for AI apps and agents, analysing prompts, responses and tool calls to block prompt injection, data loss and malicious content in real time, backed by the company’s threat intelligence.

Enterprise-ready features

API Intercept or proxy deployment centralises policy so you can enforce rules across many apps without refactoring each one. Integration with cloud-delivered security services brings DLP, URL filtering and malware detection to AI traffic.

It complements semantic guardrails for defence in depth, supports cloud-native environments, and feeds logs to existing operations tools to meet audit and compliance needs.

Pros

- Inline protection that reduces risk immediately.

- Centralised policies scale across many AI services.

- Leverages mature DLP and threat intel capabilities.

- Works alongside guardrails for layered defence.

- Backed by an established enterprise security vendor.

Cons

- Commercial pricing aimed at large organisations.

- Data may transit a vendor service unless self-hosted.

- Requires traffic flow changes or a gateway pattern.

- Pattern-based detection can miss novel attacks.

- Not a complete solution without semantic controls.

Best for

Enterprises that want consistent, real-time control over multiple AI initiatives and already operate Palo Alto tooling or similar SOC processes where central policy and visibility are essential.

Robust Intelligence AI Firewall & Guardrails

Robust Intelligence, now part of Cisco, offers a platform that validates models before release and enforces guardrails at runtime, with an external AI Firewall that filters inputs and outputs to stop prompt injection, leakage and unsafe behaviour.

Enterprise-ready features

Pre-deployment validation scans models for vulnerabilities and auto-suggests policies, then the runtime firewall applies those policies and adapts as automated red teaming finds new failure modes. It is model-agnostic, sits outside the model and supports SaaS or self-managed deployments.

APIs and integrations fit common ML serving stacks, while policy templates align to NIST and OWASP categories to accelerate governance and audit readiness.

Pros

- Links pre-release testing to live enforcement.

- External, model-agnostic protection for any provider.

- Automated red teaming keeps rules current.

- Flexible deployment, including on-prem for sensitive data.

- Cisco backing strengthens enterprise fit and support.

Cons

- Platform pricing suits larger budgets.

- Runtime inspection adds some latency overhead.

- Initial validation and tuning take time and expertise.

- Younger ecosystem with fewer public benchmarks.

- Proprietary stack not ideal for open-source purists.

Best for

Organisations with multiple production models or strict regulation that want a full lifecycle approach, using validation to shape policies and a runtime firewall to enforce them consistently.

How to Evaluate Security Tools for Agentic Systems

Choose tools that fit both your risk profile and how you build and run AI. Use this checklist.

- Governance and policy fit. Map features to your AI governance and compliance needs. Look for alignment with NIST AI RMF or sector rules, plus options like on-prem, data minimisation or anonymisation if data handling is strict.

- Threat coverage. Confirm it tackles your real risks: prompt injection, data leakage, model poisoning, tool misuse. Aim for both preventive guardrails and detective alerts so you can stop and see issues.

- Integration and ecosystem. Check support for your models and services, CI/CD, SIEM and incident tooling. API-first products land fast; platforms may need more engineering. Consider vendor ecosystem fit if you are Azure, AWS or GCP heavy.

- Scale and performance. Validate latency per request, throughput, and multi-app support. If you plan to roll out agents broadly, you’ll want clustering, autoscale and stable performance under load.

- Ease of use and operations. Assess learning curve, docs, dashboards and policy templates. Know who will own it day to day and whether your team has the skills to tune rules and keep it current.

- Support and community. Favour active roadmaps, fast updates and clear SLAs. For open source, look for regular commits, responsive maintainers and real discussion channels.

- Cost and ROI. Weigh licence plus integration and upkeep against risk reduction. A paid product that prevents a breach can be cheaper than a free tool that takes months to harden and run.

Pick the tool or mix of tools that covers your top threats, fits your stack and operating model, and lets you scale agentic AI with confidence.

FAQs on Agentic AI Security

What is the biggest risk in agentic systems?

Prompt injection. An attacker crafts inputs that override instructions and hijack the agent’s behaviour. It is easy to attempt and often leads to other harms like data leakage or misuse of tools.

How do guardrails differ from runtime security?

Guardrails are built-in rules that shape an AI’s behaviour before anything runs. Runtime security is an external layer that monitors live activity and blocks unsafe actions as they happen. Use both: guardrails set boundaries, runtime security catches what slips through.

Can external applications be secured in agentic AI?

Yes. Apply least privilege, sandbox execution, and use scoped API keys. Monitor every tool call, log reads and writes, and flag anomalies with a policy engine or agent firewall. This lets agents use external apps safely without turning integrations into attack paths.

Final Thoughts: Security Is the Enabler of Agentic AI

Agentic AI can unlock real productivity and new services, but it also adds new risks. Security is what turns that potential into reliable outcomes. With clear guardrails, real-time monitoring and sound governance, organisations can adopt autonomous AI with confidence and keep trust with customers and regulators.

No single tool does it all. The strongest results come from a layered defence: input and output scanning, in-model safety rules, and solid data governance. Choose the mix that fits your use cases and risk profile, and treat this as ongoing work as agents take on more decision-making.

Security is also a business enabler. When leaders know controls are in place, they approve bolder AI projects. If you want practical next steps, case-led guidance and vendor-neutral comparisons to help you build that layered defence, EM360Tech is a good place to start.

Comments ( 0 )