In a world where automation, blockchain, and AI are as common as they are today, you’d be forgiven for thinking that technology alone can keep our digital ecosystems safe. But that overlooks the fact that people are still the weakest link.

Studies consistently find that most security breaches involve people in some way, whether through a simple lapse or through manipulation. For example, the 2023 Verizon Data Breach Investigations Report found that 74% of all breaches include the human element.

According to Mimecast’s 2025 State of Human Risk report, this figure jumps to an insane 95%. Importantly, most of these cases involve no malicious intent on the part of the people involved. Instead, they come down to simple mistakes or to attackers’ ingenuity in exploiting human psychology.

And thanks to the very same technology we think will keep us safe, the scope and sophistication of social engineering attacks have exploded. In fact, the SANS 2025 Security Awareness Report revealed that 80% of organisations now rank social engineering as their top human-related cybersecurity risk.

This is partly because advancements in AI are making these scams more convincing – even as AI also offers new tools for defence. The paradox of our time is that technology is amplifying both sides: it can supercharge how attackers deceive us but also help us detect and respond to an attack.

Human Error and Social Engineering Are Still the Problem

Whether it’s a mis-sent email, an incorrectly configured server, or a duped employee, human mistakes and manipulation are at the root of most breaches.

IBM’s analysis of breaches in 2024 found that 26% were caused directly by human error (e.g., negligent actions), with another 23% resulting from IT system glitches. These unforced errors collectively account for nearly half of all breaches – and that doesn’t even include attacks where humans are tricked rather than simply slip up.

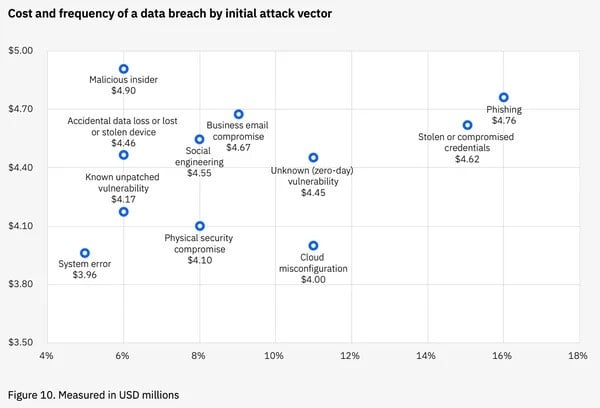

The top three ways attackers gain access to secure systems are stolen credentials, phishing, and exploiting vulnerabilities. Two of these rely on human fallibility and only one on technical flaws, though you could argue that a vulnerability is also a type of human error, just one made at an earlier stage of a system’s life cycle.

In other words, an attacker’s easiest path usually runs through people, not around them, because it’s far easier to con someone into handing over access than to break hardened security mechanisms. It’s a chilling thought for digital marketplaces and financial platforms, where a single misstep can compromise thousands of customers.

But despite the billions spent on security tools, breaches with a human component are not declining – they’re rising. Importantly, the impact of these human-driven incidents can be massive.

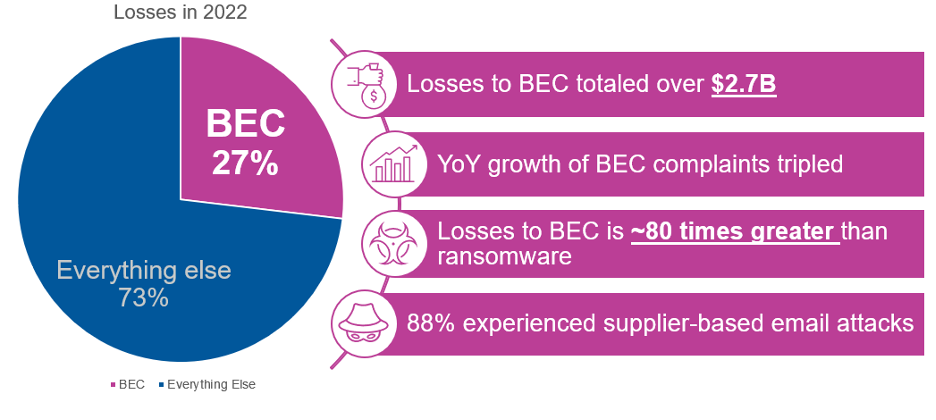

For example, the FBI reported that losses from business email compromise (BEC), a highly targeted form of spear-phishing, went from $676 million in 2017 to an unbelievable $2.7 billion in 2022. And in the finance world, social engineering attacks such as fraudulent fund-transfer requests have become more daring than ever.

Phishing Becomes More Effective With Deepfakes

Social engineering has been effective since pretty much the beginning of time. And because human nature hasn’t changed all that much, the techniques haven’t changed all that much either. But that doesn’t mean it’s standing still. Instead, it’s evolving to take advantage of the latest tools and technology out there.

Attackers are constantly refining how they exploit human psychology, and the latest frontier is the use of AI-driven tools to create more believable lies.

Phishing emails today often arrive polished, with perfect grammar, personalised details, and real context. Generative AI has stripped out the small tells we used to rely on, and many of these messages are even written in the voice of the person being impersonated. Paired with attacks at the technical layer, such as MSISDN spoofing (impersonating a phone number), these lures become brutally effective: the content looks right, and the checks meant to catch it are fooled.

That’s why enterprises need an advanced, well-integrated cybersecurity stack and highly qualified security staff to watch, verify, and respond in real time.

Meanwhile, deepfake technology has added a whole new layer to impersonation scams.

You might have heard about the case in the UAE, where criminals used AI to clone a company director’s voice and convinced a bank manager to transfer $35 million to fraudulent accounts. The call was backed by spoofed emails and fake contracts that helped sell the illusion, but the vishing (voice phishing) element was the heart of the scam.

At its core, this elaborate con was an old trick (impersonation) supercharged by new technology in the form of deepfake audio. And it worked. The lesson is simple: even the best controls can be undone if a trusted person is pressured into bypassing them. That’s why teams must follow established verification procedures and approved tools, and refuse to shortcut them under pressure from urgency or authority.
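
To make “refuse to shortcut verification” concrete, here is a minimal sketch of a transfer-approval rule. It is an illustration under stated assumptions, not any bank’s actual control: the `TransferRequest` record, the helper names, and the 10,000 threshold are all hypothetical. The key design choice is that urgency and the requester’s apparent seniority are recorded but deliberately ignored, and the callback always goes to a number already on file, never to one supplied in the request itself.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold, chosen for illustration only.
CALLBACK_THRESHOLD = 10_000


@dataclass
class TransferRequest:
    requester: str
    amount: float
    beneficiary_account: str
    # Pressure signals may be logged, but they play no part in the decision below.
    marked_urgent: bool = False
    from_senior_executive: bool = False
    callback_number_in_request: Optional[str] = None


def requires_callback(req: TransferRequest, known_beneficiaries: set[str]) -> bool:
    """True if the transfer must be confirmed out of band before release.

    Urgency and seniority are deliberately not inputs, so pressure
    cannot be used to skip verification.
    """
    new_beneficiary = req.beneficiary_account not in known_beneficiaries
    return req.amount >= CALLBACK_THRESHOLD or new_beneficiary


def callback_number(requester: str, directory: dict[str, str]) -> str:
    """Return the number on file for the requester; the request's own number is never used."""
    return directory[requester]


def approve_transfer(req: TransferRequest, known_beneficiaries: set[str],
                     callback_confirmed: bool) -> bool:
    """Release funds only if no callback is needed or the callback succeeded."""
    return callback_confirmed or not requires_callback(req, known_beneficiaries)
```

In a scenario like the UAE case, a large, urgent request to a new account from a “director” makes `requires_callback` return `True` regardless of who appears to be asking, so `approve_transfer` refuses it until someone has confirmed it on a trusted line.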

The numbers about these new threats are more than a little sobering. A global survey of fraud decision-makers found that half of the businesses surveyed had been targeted by some kind of deepfake-based scam in 2024.

That was a big jump from 29% two years earlier, showing how quickly deepfakes have gone from a novelty to a mainstream tool for criminals. And as always, some industries have been hit harder than others.

For example, by 2024, 51% of financial services companies had encountered a deepfake audio attack, and the tech/fintech sector saw a similar rise in deepfake video schemes.

Old School Phishing Is Still Just As Effective

It’s important to note that attackers don’t even need bleeding-edge AI to exploit human weaknesses; simpler techniques still wreak havoc daily. Phishing remains the number one threat action – whether delivered via email, SMS, or messaging apps – because it reliably tricks a portion of targets and provides a foothold for everything from ransomware to espionage.

Industries with high human interaction – such as digital marketplaces, finance, healthcare, and logistics – are especially ripe targets for these ploys.

- Financial services are tightening controls after a wave of high-profile social engineering heists. Banks now demand out-of-band verification for transfers, are exploring voice biometrics to spot AI-cloned imposters, and drill staff to trust but verify. But one misjudged request can still cost millions.

- Healthcare faces rising breach costs, with staff mistakes and phishing a constant risk. Misuse of generative AI adds a further layer of risk, with clinicians exposing patient data through external tools.

- Logistics and supply chains have learnt the hard way how a single lapse can disrupt global commerce. Inadequate patching and human oversight failures, as in the 2017 NotPetya attack, have shut down warehouses and delayed shipments. And a single weak password brought down a UK-based company that had been in business for 158 years.

These companies are constantly targeted by social engineering aimed at both their customers (through fake support agents and scam listings) and their own employees or sellers. That makes user verification essential, whether you’re running a small online shop or a global digital marketplace like G2A.COM.

In fact, G2A.COM's leaders say that security is one of their "top three" priorities and that they’re always defending against "malicious actors" who want to abuse the platform.

They’ve invested millions in fraud detection systems and rigorous KYC (Know Your Customer) verification for sellers in an effort to keep the marketplace safe. Still, G2A.COM’s founder, Bartosz Skwarczek, emphasises that technology alone isn’t enough; people’s vigilance is key.

As he puts it, “At the end of the day, on the other side of the screen … is always a human being. My message is always: ‘Remember about the people.’” That philosophy recognises that user education (e.g., monthly cybersecurity training sessions for all staff) and a customer-centric mindset are critical complements to the technical measures.

The human-in-the-loop approach – blending automated security with human judgement – is often the only way to catch sophisticated fraud patterns without ruining user experience.
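
One common way to blend automation with human judgement is a three-way triage on a model’s fraud score. The sketch below is a generic illustration of that pattern, not G2A.COM’s system: the score range of 0 to 1 and both thresholds are assumptions made for the example. Clear-cut cases are handled automatically, so legitimate users see no friction, and only the ambiguous middle band reaches a human analyst.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


# Illustrative thresholds; real values would be tuned on labelled data.
AUTO_APPROVE_BELOW = 0.2
AUTO_BLOCK_AT_OR_ABOVE = 0.9


def triage(fraud_score: float) -> Decision:
    """Route a transaction based on a model's fraud score in [0, 1]."""
    if not 0.0 <= fraud_score <= 1.0:
        raise ValueError("fraud_score must be between 0 and 1")
    if fraud_score < AUTO_APPROVE_BELOW:
        return Decision.APPROVE       # low risk: no friction for the user
    if fraud_score >= AUTO_BLOCK_AT_OR_ABOVE:
        return Decision.BLOCK         # clear-cut fraud: stop automatically
    return Decision.HUMAN_REVIEW      # ambiguous: a person makes the call
```

The width of the middle band is the main design lever: widen it and analysts see more cases but fewer automated mistakes slip through; narrow it and the workload drops at the cost of relying more heavily on the model.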

Why Security Awareness Training Alone Isn’t Solving the Problem

With human error and social engineering so dominant in breaches, you might expect that organisations are doubling down on security awareness training and employee education.

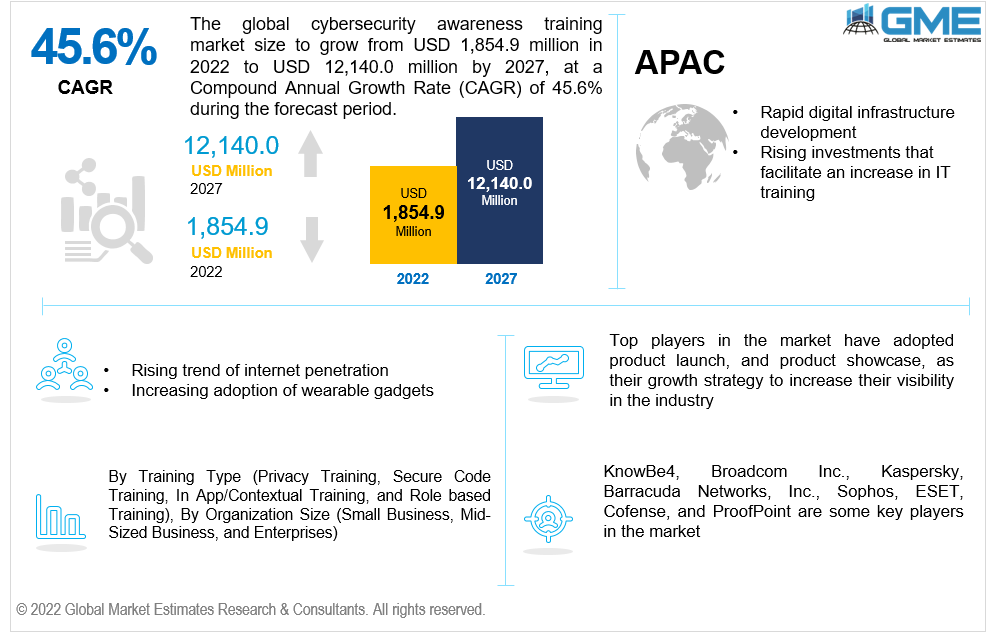

And investment in training has grown – it’s predicted that the security awareness training market will be valued at over $10 billion by 2027 – and most enterprises now run phishing simulations and yearly compliance courses.

But many programmes are falling short in changing behaviours long-term or reducing risk in any measurable way, and the breaches keep coming. This suggests a hard truth: traditional security training by itself is not enough.

That starts to make sense when security awareness research reveals that the average organisation has only about two full-time employees dedicated to awareness efforts. That’s barely enough to run basic training campaigns, let alone drive cultural change across a large workforce. In fact, awareness teams routinely struggle with a lack of time, staffing, and leadership support.

Mimecast’s State of Human Risk report says that 87 per cent of organisations deliver quarterly training. But the constraints above mean this training is often stale, generic, and disconnected from real-world threats, so insider incidents keep rising. Perhaps unsurprisingly, 27 per cent of security leaders blame fatigue and complacency for these ongoing lapses.

Experts argue for a shift from awareness to human risk management: identifying high-risk behaviours, quantifying them with data, and targeting repeat offenders. Mimecast notes that just 8 per cent of employees cause 80 per cent of insider breaches, a small group that focused coaching or stricter safeguards could address.
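
Mimecast’s figure describes a concentration pattern, and a security team can check whether the same pattern holds in its own data. Here is a minimal sketch, assuming a hypothetical export of incident records where each entry is the ID of the employee involved (one entry per incident). The function name and the 80% default are illustrative choices, not Mimecast’s methodology.

```python
from collections import Counter


def repeat_offenders(incidents: list[str], headcount: int,
                     coverage: float = 0.8) -> tuple[list[str], float]:
    """Find the smallest group of employees behind `coverage` of all incidents.

    `incidents` holds one employee ID per recorded incident.
    Returns that group and its share of the total workforce.
    """
    counts = Counter(incidents)
    total = sum(counts.values())
    group: list[str] = []
    covered = 0
    # Take the most frequent offenders first until the target coverage is reached.
    for employee, n in counts.most_common():
        if covered >= coverage * total:
            break
        group.append(employee)
        covered += n
    return group, len(group) / headcount
```

If the returned share is small (for example, a tenth of staff behind four-fifths of incidents), that is the group where targeted coaching or extra safeguards are likely to pay off most.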

The best companies go even further by making a security-first culture a part of their daily routine. Mature programmes make security everyone's job, reward safe behaviours, and encourage employees to report any suspicious activity.

For instance, at G2A.COM, employees complete mandatory training and are encouraged to take ownership by actively flagging risks. They’re rewarded for doing so, rather than punished for pointing out something that may have been introduced in the past. The result is a workforce that protects the company instead of exposing it.

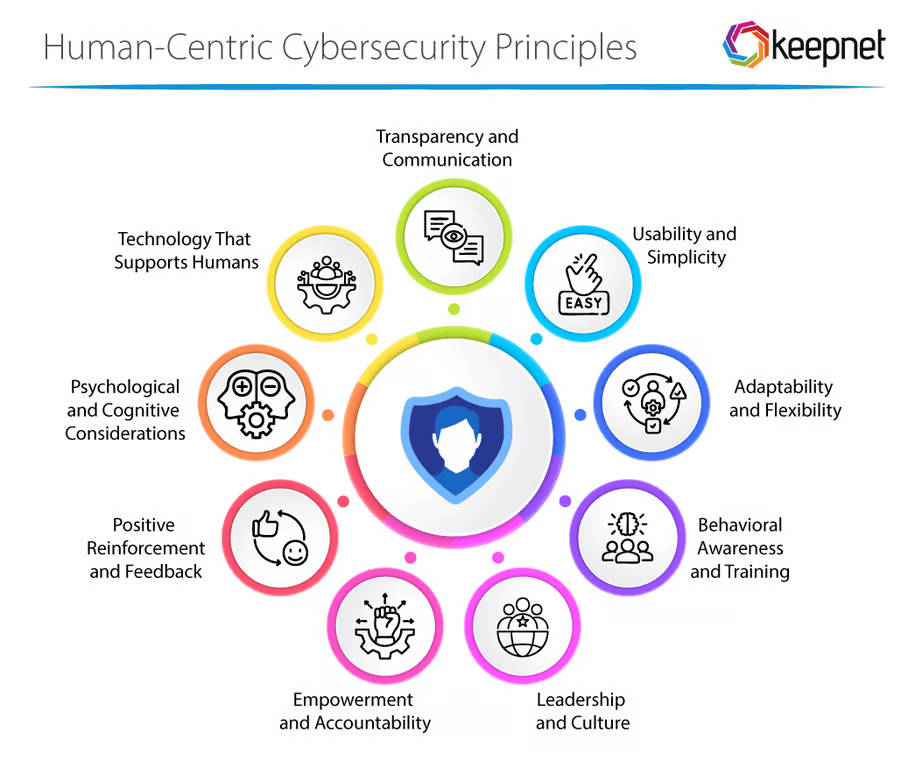

Building a Human-First Security Strategy

To secure today’s ecosystems, organisations must combine human-centred measures with advanced technology. It’s not people or automation — it’s the strengths of both.

- Make security cultural and led from the top: CEOs and CISOs must treat security as a business risk, not just IT’s problem. Embedding security objectives in performance reviews, rewarding good practice, and creating psychological safety all help staff see security as their responsibility, not a burden.

- Move from awareness to human risk management: Training alone doesn’t work. Leading organisations track behaviours like phishing click rates or policy violations, assign a “human risk score”, and tailor interventions. Focusing on the small group responsible for most repeat lapses can cut risk dramatically.

- Use AI and automation to support people: AI excels at filtering threats and spotting anomalies, leaving humans to make judgement calls. But policies are essential to prevent misuse — 81 per cent of firms now restrict how staff use generative AI. Some even use “defensive AI” in phishing simulations to prepare employees for deepfake-enabled scams.

- Use Zero Trust to limit fallout from mistakes: By verifying every request, Zero Trust limits how far a compromised account can reach into the rest of your systems. Because employees need to understand why the extra checks matter, it also requires change management. Done right, it delivers both a flexible work model and better overall security.

- Learn fast and adapt: Treat every security event as intelligence. By sharing the lessons from each one with all employees, tracking human risk factors, and updating training with the latest scam tactics, your organisation can stay one step ahead of malicious actors.
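
A “human risk score” can be as simple as a weighted count of observed behaviours. The sketch below is one possible formulation under clearly hypothetical assumptions: the behaviour names, weights, and tier cut-offs are invented for illustration, and real programmes calibrate these against their own incident data. It also gives safe behaviour, such as reporting a phish, a negative weight, in line with rewarding good practice rather than only penalising mistakes.

```python
from dataclasses import dataclass

# Hypothetical weights: how much each observed behaviour adds to risk.
WEIGHTS = {
    "phishing_sim_click": 3.0,
    "credential_submitted": 5.0,   # entered a password on a simulated phishing page
    "policy_violation": 2.0,
    "reported_phish": -1.0,        # safe behaviour lowers the score
}


@dataclass
class EmployeeActivity:
    employee_id: str
    events: dict[str, int]  # behaviour name -> count in the review period


def human_risk_score(activity: EmployeeActivity) -> float:
    """Weighted sum of behaviours, floored at zero; unknown behaviours are ignored."""
    raw = sum(WEIGHTS.get(name, 0.0) * count
              for name, count in activity.events.items())
    return max(raw, 0.0)


def intervention(score: float) -> str:
    """Map a score to a tailored response (illustrative tiers)."""
    if score >= 10:
        return "one-to-one coaching and stricter safeguards"
    if score >= 4:
        return "targeted micro-training"
    return "standard awareness programme"
```

For example, an employee who clicked two simulated phishing links and submitted credentials once would score 11 under these weights and be routed to one-to-one coaching, while someone who only reported suspicious emails would stay on the standard programme.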

Final Thoughts: People First, Technology Second

AI, blockchain, and automation are reshaping business, but people will always be the deciding factor in security. No system is fully “trustless” when humans are the operators, the targets, and the first responders. Forward-looking leaders know a technology-only strategy is incomplete. Real resilience comes from combining smart tools with a culture where security is built into every person's job.

A human-first approach doesn’t reject automation. Instead, it harnesses automation to strengthen human judgement and compensate for human limits. With the right tools and employees who are properly trained and supported, what is usually your weakest link can become your strongest defence.

For enterprises, the priority is clear: invest in your people with the same intent as you invest in preventative security and AI. Organisations that strike this balance will not only withstand threats but also build lasting trust with customers and partners.

To find more expert insights on cybersecurity, explore the rest of what EM360Tech has to offer. And to see how a global marketplace like G2A.COM puts people at the centre of its security, discover their story and lessons from the front line.