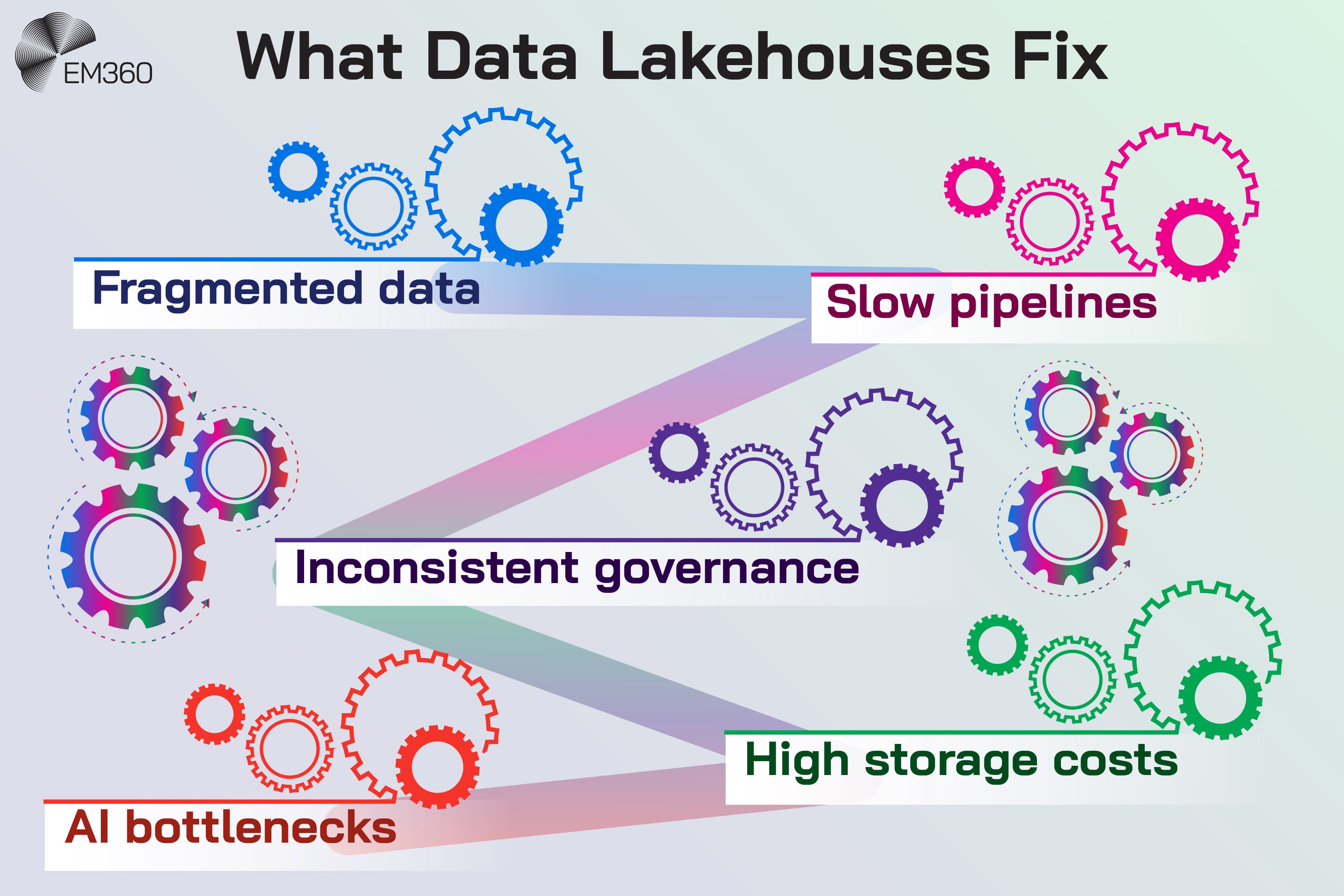

Most enterprises already have more data than they know what to do with. You have carefully modelled structured data in one place, oceans of unstructured and semi structured data in another, and teams trying to stitch it all together across tools that were never designed to work as one. The result is familiar: slow reports, gaps in governance, rising data storage costs and AI projects that stall because the estate underneath is too fragmented to trust.

A data lakehouse tackles that problem at the architectural level. It combines the scale and flexibility of a data lake with the reliability and performance of a warehouse, usually on top of cloud object storage, so data processing for analytics and AI does not depend on copying the same datasets into multiple systems. Instead, engineers, analysts and data scientists work from a single source of truth and build advanced analytics in a straight line rather than zig-zagging across stacks.

For infrastructure leaders, this is less about labels and more about pressure: slow reports, governance gaps, rising storage costs and stalled AI projects. But the first step is understanding what a lakehouse actually is and how it changes the relationship between your lakes and warehouses.

What Is Data Lakehouse Architecture?

The simplest way to think about lakehouse architecture is as a unifying pattern. Instead of treating the data lake and the data warehouse as two different worlds, it pulls them together and adds the missing controls that made lakes hard to trust.

Modern lakehouse architectures typically sit on cloud object storage, use open data formats, and rely on a central metadata layer that brings ACID guarantees, schema enforcement and performance tuning to data that would otherwise just be files.

Core definition

A data lakehouse is a unified data platform that keeps all data types in low cost object storage and uses a table and metadata layer to manage them like warehouse tables. The storage behaves like a lake. The management layer behaves more like a database. Together, they give you flexibility at the bottom and structure at the top.

Instead of loading data into one system for BI, another for advanced analytics and a third for AI, you keep a single logical data store and attach different compute engines to it. Reliability does not come from locking into a single appliance. It comes from the metadata layer that controls how data is written, read and evolved over time, so data integrity is preserved across workloads.

Why lakehouses emerged

The traditional pattern was a two tier model. Data flowed from operational systems into a data lake to keep costs down, then a curated subset was pushed into a data warehouse where it could be queried quickly. That worked until the gaps between those layers started to hurt.

Common problems included:

- Duplicated pipelines and multiple copies of the same raw data

- Delays between a change in source and its appearance in reports

- Inconsistent governance across the different layers of data warehouses and data lakes

- Rising storage costs as similar datasets appeared in the warehouse, the lake and various downstream tools

As AI and real time analytics became more important, that pattern creaked. Teams needed more freedom to bring in new data sources, but also tighter data governance and better performance. Lakehouse architecture emerged as a way to collapse those tiers into a single environment that could handle both exploratory work and stable, production grade analytics.

Data Lake vs Data Warehouse vs Data Lakehouse

It helps to look at the three models side by side.

Data warehouse basics

A data warehouse is built around well defined, structured data. Data is extracted from source systems, transformed through ETL processes and loaded into carefully designed schemas. The payoff is predictable performance for SQL queries and long term stability for reporting.

The trade off is rigidity. Any change in source structures, business rules or use cases tends to ripple through models and ETL jobs. That slows down experimentation and can make it harder to support newer forms of data science or AI that need access to more varied data.

Data lake basics

A data lake is far more flexible. It stores information closer to its original form, including logs, clickstreams and semi structured records. It often uses a schema on read approach, where structure is only applied when someone wants to analyse the data.

This is attractive for ingestion speed and scale. It is also cheaper to keep large histories online. The downside is that, without strong data governance, a lake can become hard to navigate. Quality is uneven, context is missing, and most users do not know which datasets to trust.
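
To make schema on read concrete, here is a minimal sketch in plain Python. The sample records, the field names and the `read_with_schema` helper are all hypothetical and chosen for illustration; real lakes would hold files in object storage rather than an in-memory list.

```python
import json

# Raw events kept as they arrived; the records do not share one shape.
RAW_LINES = [
    '{"user": "a1", "event": "click", "ts": "2024-01-01T10:00:00"}',
    '{"user": "b2", "event": "view"}',
    '{"user": "a1", "event": "click", "ts": "2024-01-01T10:05:00", "page": "/home"}',
]

def read_with_schema(lines, schema):
    """Apply a schema at read time: keep the named fields, fill gaps with None."""
    for line in lines:
        record = json.loads(line)
        yield {name: record.get(name) for name in schema}

# Structure is imposed only now, by the reader, not when the data was stored.
rows = list(read_with_schema(RAW_LINES, schema=("user", "event", "ts")))
```

Nothing stopped the second record from landing without a timestamp, which is exactly the flexibility and the risk described above: ingestion is easy, but every reader has to decide how to handle the gaps.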

How lakehouses unify these models

A lakehouse combines the strengths of both. It keeps the storage and openness of the lake while adding controls you would expect from a warehouse. Metadata layers sit on top of files and manage them as tables. ACID (atomicity, consistency, isolation, durability) guarantees ensure updates are safe. Schema enforcement and evolution rules keep structure explicit.

The result is one platform that can store, manage and serve data for BI, advanced analytics and AI. You still design models where it makes sense. You still curate data. You simply do it in a way that does not require separate systems and repeated copies.

The Main Components of a Data Lakehouse

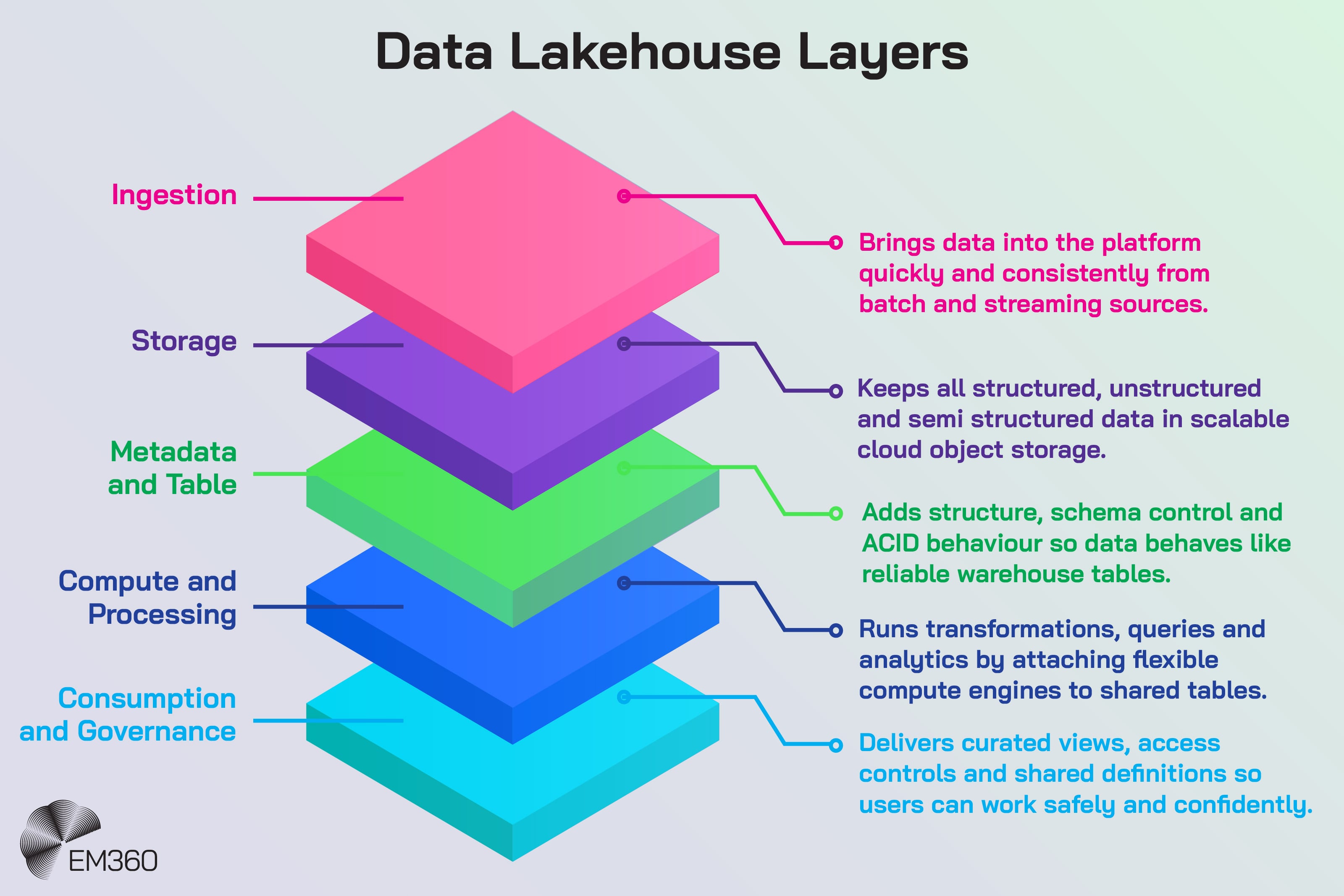

Most lakehouse designs follow the same broad pattern, even if the underlying products differ. You can think of them in five layers.

Ingestion layer

The ingestion layer is responsible for getting data into the platform. It handles batch loads from databases and files, as well as streaming feeds from queues and event platforms. The priority here is speed and safety. You want data to arrive quickly, with enough structure and metadata that it can be understood and governed later.

At this stage, data is usually written straight into cloud object storage and organised by source, domain or time. It can include structured, unstructured and semi structured data so you are not deciding too early which workloads it might serve.

Storage layer

The storage layer is where everything lives. It uses cloud object storage as a scalable, durable foundation and open data formats such as Parquet or ORC to store the contents efficiently. This is what keeps long term retention affordable and allows you to keep different data shapes together rather than scattering them across products.

Because the storage layer is decoupled from compute, you can scale capacity without changing how queries run. That separation also makes it easier to adapt to hybrid and multi cloud environments.

Metadata and table layer

The metadata and table layer is what turns a lake into a lakehouse. It keeps track of which files belong to which tables, how they are partitioned and what schemas they follow. It manages ACID transactions so multiple writers and readers can work concurrently without corrupting the data.

This layer is also responsible for:

- Enforcing or checking schemas at write time

- Maintaining table history and versions

- Providing statistics and indexes for query planning

- Handling compaction and file layout so performance stays consistent

Without this layer, you are simply querying files and hoping for the best. With it, you have tables that behave much more like those in a warehouse, even though they are backed by object storage.
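
One way to picture this layer is as a schema plus an append-only log of commits, where each commit records which files were added or removed. The sketch below is a toy under that assumption, not the implementation of any real table format; `ToyTable`, its methods and the file names are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class ToyTable:
    """Hypothetical stand-in for table metadata: a schema plus a commit log."""
    schema: dict                               # column name -> expected Python type
    log: list = field(default_factory=list)    # entries: {"add": [...], "remove": [...]}

    def commit(self, add_files, rows, remove_files=()):
        # Schema check at write time: any mismatch rejects the whole commit.
        # (In this toy, rows are only validated; the data itself lives in the files.)
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"unexpected columns: {sorted(row)}")
            for col, typ in self.schema.items():
                if not isinstance(row[col], typ):
                    raise ValueError(f"{col} should be {typ.__name__}")
        self.log.append({"add": list(add_files), "remove": list(remove_files)})
        return len(self.log)                   # the new table version

    def files(self, version=None):
        """Replay the log up to a version to find the files that make up the table."""
        live = []
        for entry in self.log[:version]:
            live = [f for f in live if f not in entry["remove"]] + entry["add"]
        return live

orders = ToyTable(schema={"order_id": int, "amount": float})
v1 = orders.commit(["part-000.parquet"], [{"order_id": 1, "amount": 9.5}])
v2 = orders.commit(["part-001.parquet"], [{"order_id": 2, "amount": 20.0}])
# Compaction: two small files replaced by one, recorded as a single commit.
v3 = orders.commit(["part-002.parquet"], [],
                   remove_files=["part-000.parquet", "part-001.parquet"])
```

Calling `orders.files()` returns only the compacted file, while `orders.files(version=2)` still resolves to the two original files, which is the basic idea behind table history and versions.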

Compute and processing layer

The compute and processing layer sits above storage and metadata. It runs transformations, queries and model training jobs. Different engines can be attached to the same tables for different purposes. For example, one engine might handle SQL analytics, another might handle large scale batch processing, and a third might focus on streaming or advanced analytics.

Because compute is separate from storage, you can align resources with workloads. Heavy processing jobs can run on independent clusters without affecting interactive reporting. Smaller, latency sensitive workloads can use lighter, always-on resources.

Consumption and semantic layer

The consumption and semantic layer is where people interact with the data. BI tools, notebooks, AI platforms and custom applications all connect here. A semantic layer on top can expose curated views, shared definitions and business metrics so different teams are not reinventing the same concepts.

This layer is also where a lot of data governance is felt. Access controls, masking rules and approval workflows sit close to the tools people actually use. When it works well, users do not need to know anything about metadata layers or table formats. They see clear datasets, understand what they represent and have the right level of access.

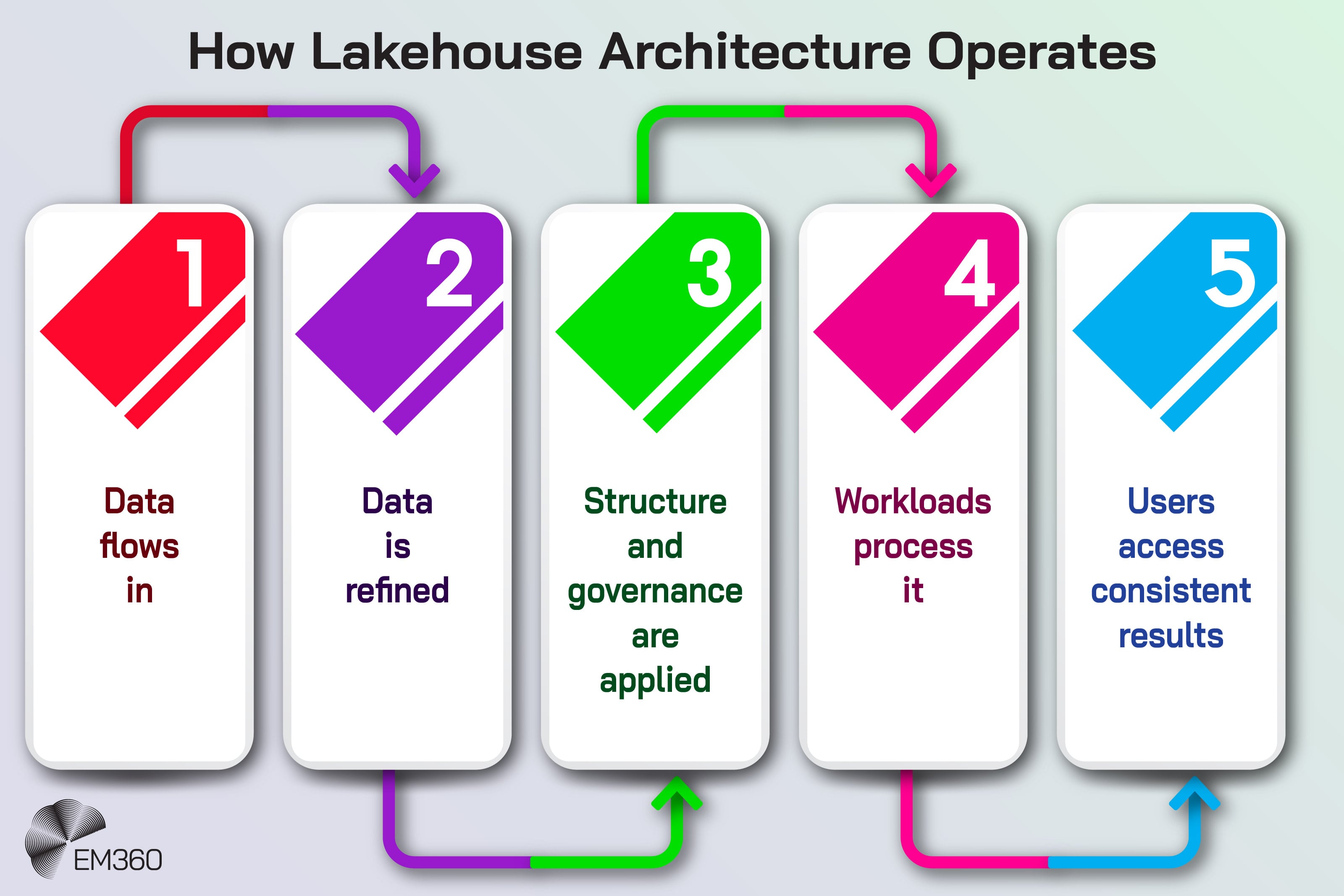

How Data Lakehouse Architecture Works in Practice

Once the layers are in place, the day to day flow becomes easier to picture.

From ingestion to enriched datasets

Data lands from source systems into the raw area of the lakehouse. From there, transformation jobs clean, join and standardise it into enriched tables. Those tables might represent customers, transactions, events, devices or any other core concepts the organisation relies on.

Because everything sits in the same architecture, it is much easier to follow how data moves from raw to refined. You can adjust transformations over time without shifting workloads into new systems. Teams also see where their numbers come from, which makes conversations about definitions and accuracy far simpler.
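
As a rough illustration of the raw-to-enriched step, the sketch below cleans and standardises a handful of customer records and removes duplicates. The records, field names and the `enrich` function are hypothetical, and a real job would also join against other tables and run on a processing engine rather than in a single Python process.

```python
# Raw rows as they landed: inconsistent casing, stray whitespace, a duplicate, a missing key.
RAW_CUSTOMERS = [
    {"id": "1", "email": " Ann@Example.com ", "country": "gb"},
    {"id": "2", "email": "bob@example.com", "country": "US"},
    {"id": "2", "email": "bob@example.com", "country": "US"},
    {"id": "", "email": "nobody@example.com", "country": "FR"},
]

def enrich(raw_rows):
    """Clean and standardise raw rows and de-duplicate on id; the raw rows are not modified."""
    seen, enriched = set(), []
    for row in raw_rows:
        if not row["id"] or row["id"] in seen:
            continue                           # skip rows with no key or a repeated key
        seen.add(row["id"])
        enriched.append({
            "customer_id": int(row["id"]),
            "email": row["email"].strip().lower(),
            "country": row["country"].upper(),
        })
    return enriched

customers = enrich(RAW_CUSTOMERS)
```

The raw rows stay exactly as they arrived, so the transformation can be changed and re-run later without going back to the source systems.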

How ACID and metadata layers support reliability

The metadata layers coordinate who is writing and who is reading. With ACID guarantees, writers can update tables in a controlled way while readers continue to see a stable view. When changes commit, they appear as a single, consistent version instead of a half-finished update.

That matters when you have multiple workloads depending on the same tables. A broken update should not take out operational reporting or delay AI pipelines. Transaction logs, version history and schema tracking also make it easier to investigate issues and prove data integrity to regulators and auditors.
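
The behaviour described here, where readers keep a stable view and a failed update leaves nothing behind, can be sketched with immutable snapshots. This is a simplified in-memory model under that assumption; `VersionedTable` and its methods are hypothetical and do not mirror any specific product.

```python
import threading

class VersionedTable:
    """Hypothetical in-memory table: each commit publishes a new immutable snapshot."""

    def __init__(self):
        self._snapshots = [()]            # version 0 is the empty table
        self._lock = threading.Lock()     # serialises writers

    def snapshot(self):
        """Readers pin the latest committed version and keep it for their whole query."""
        return self._snapshots[-1]

    def write(self, transform):
        """Stage the next version off to the side; publish only if transform succeeds."""
        with self._lock:
            staged = list(self._snapshots[-1])
            transform(staged)                        # if this raises, nothing is published
            self._snapshots.append(tuple(staged))    # the single "commit" step
            return len(self._snapshots) - 1

table = VersionedTable()
table.write(lambda rows: rows.extend([("a", 1), ("b", 2)]))
reader_view = table.snapshot()            # a report starts reading here

def broken_update(rows):
    rows.append(("c", 3))
    raise RuntimeError("job failed halfway")

try:
    table.write(broken_update)            # the half-finished change is discarded
except RuntimeError:
    pass

table.write(lambda rows: rows.append(("d", 4)))
latest = table.snapshot()
```

The report that pinned `reader_view` still sees only the first two rows, the broken update never becomes visible to anyone, and `latest` contains the rows from the two successful commits.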

How lakehouses support multiple engines and workflows

In a traditional environment, different tools often maintain their own copies of data. In a lakehouse, the common foundation is the file and table layer. SQL engines, machine learning frameworks and streaming tools all attach to the same tables.

That allows:

- BI teams to query curated tables directly

- AI teams to train models on shared datasets instead of private extracts

- Streaming jobs to maintain aggregates in the same platform used for reporting

You keep flexibility in how you process and consume data without losing visibility or control over where that data lives.

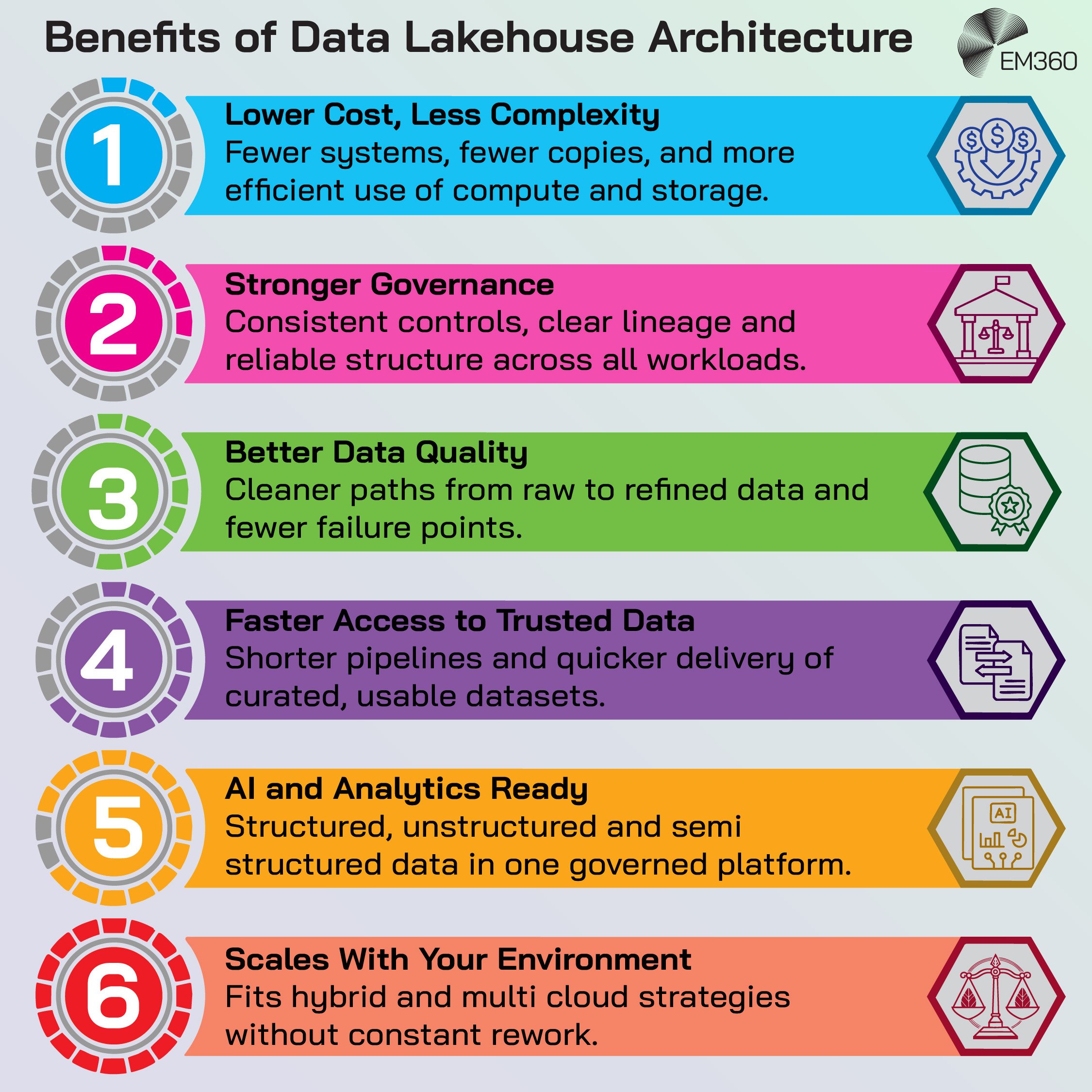

Benefits of Data Lakehouse Architecture for Infrastructure Leaders

The shift to a lakehouse model is not just about storage. It changes how platforms are run and how value is delivered from data.

Lower cost and simpler architecture

Consolidating lakes and warehouses into one pattern reduces duplication. The same datasets do not need to be stored and processed in three different tools to support slightly different workloads. You make fewer copies, manage fewer pipelines and reduce the number of moving parts.

That simplicity tends to show up in cloud bills and in operational effort. You are less likely to have overlapping systems doing similar jobs. You can also scale compute resources more precisely, instead of growing an appliance every time one class of workload reaches its limits.

Stronger governance and data quality

A shared platform makes data governance more practical. Metadata layers and schema enforcement keep structure explicit. Lineage tools see the full path from source to consumption. Policy engines can apply consistent rules on who is allowed to see or change which datasets.

All of that helps you demonstrate data integrity and control when it matters. It also gives internal teams more confidence in the data they use, which is often the bigger hurdle.

Faster access to reliable data

Shorter, clearer paths between ingestion and consumption mean less waiting time. New sources can be onboarded once, then made available to multiple teams. When something breaks, it tends to be easier to find, because there are fewer hidden copies and side channels.

For the people trying to answer questions or build models, this simply feels like faster access to datasets they can trust. For the people running the platform, it feels like fewer surprises.

AI readiness

AI and modern data science work best when they can draw from a wide range of histories, signals and behaviours. That often means combining time series, logs, documents and classic analytics tables.

A lakehouse keeps that variety together inside a governed environment. It lets you expose well defined training and serving tables without dragging them through separate systems. That reduces friction when models move from experiment into production and when they need to be refreshed.

Scalability across environments

Most large organisations now operate across more than one environment. There might be on-premise systems that are not going away, one or more public clouds, and a growing list of SaaS platforms.

Because lakehouse architecture builds on cloud object storage, open data formats and decoupled compute, it fits more naturally into this mixed world. Workloads can move or be split across regions. New engines can be introduced without rebuilding the underlying store. That flexibility gives you more room to adapt as requirements change.

Challenges and Considerations (And How Enterprises Overcome Them)

Lakehouses offer a lot, but they are not trivial to build or run. Knowing where the challenges lie makes it easier to plan.

Complexity during initial implementation

Moving from existing warehouses and lakes to a lakehouse touches technology, processes and organisational design. Data domains need clear owners. Governance decisions need to be made explicitly. Pipelines need to be rebuilt or refactored.

Trying to do this everywhere at once is usually a mistake. Most successful implementations start with a small set of high value domains, prove patterns there and expand only when those patterns hold. That way, mistakes are easier to correct and wins are easier to demonstrate.

Managing metadata and table formats

Table formats such as Delta Lake, Apache Iceberg and Apache Hudi give you the capabilities you need at the metadata and table layer. They also introduce new operational duties. Someone has to care about compaction, retention rules, table evolution and how different engines interact with those formats.

Setting standards early helps. Choosing one primary format and being deliberate about where you use others keeps fragmentation under control. Treating the metadata services as critical infrastructure, not as invisible plumbing, is just as important.

Security and access control

Bringing multiple workloads into one platform raises the security bar. Access control needs to be fine grained and aligned with your identity systems. Sensitive datasets need clear classification, and logs need to be complete enough to support investigations.

The lakehouse does not remove this work. It concentrates it. The benefit is that you have one place to manage and audit access instead of several.

Avoiding data quality pitfalls

No architecture eliminates data quality issues. Schema drift, unexpected values and failed updates can still occur. Without monitoring, these issues simply propagate further because the platform is now central.

Enterprises that do well here bake checks into pipelines, track key quality indicators and use lineage to understand what is affected when something goes wrong. The aim is not perfection. It is early detection and controlled recovery.
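
A check baked into a pipeline can be as simple as a function that inspects each batch before it is committed. The sketch below assumes hypothetical column names, thresholds and a `check_batch` helper; dedicated data quality tools offer far more, but the principle is the same.

```python
def check_batch(rows, required, ranges):
    """Return a list of readable problems; an empty list means the batch passes."""
    problems = []
    for i, row in enumerate(rows):
        for col in required:
            if row.get(col) is None:
                problems.append(f"row {i}: missing {col}")
        for col, (low, high) in ranges.items():
            value = row.get(col)
            if value is not None and not (low <= value <= high):
                problems.append(f"row {i}: {col}={value} outside [{low}, {high}]")
    return problems

batch = [
    {"order_id": 1, "amount": 25.0},
    {"order_id": None, "amount": 10.0},   # missing key
    {"order_id": 3, "amount": -4.0},      # unexpected value
]
issues = check_batch(batch, required=["order_id", "amount"],
                     ranges={"amount": (0, 10_000)})
```

A pipeline might refuse to commit the batch when `issues` is non-empty, or quarantine the failing rows and record the count as a quality indicator, so the problem is caught before it reaches shared tables.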

Essential Technologies That Enable Lakehouse Architecture

The lakehouse idea is architectural, but certain technologies make it realistic to implement at scale.

Open data formats and table formats

Open file formats like Parquet and ORC and table formats such as Delta Lake, Apache Iceberg and Apache Hudi are part of the foundation. They make it possible to store data in standardised ways and still have rich table behaviour on top.

Using open formats helps you avoid being tightly bound to a single engine. It lets multiple tools read and write the same tables and keeps your metadata layers and schema enforcement rules portable.

Compute engines and analytics tools

Compute engines connect to the table and metadata layer rather than owning the data outright. That includes SQL engines, batch processing frameworks, streaming platforms and AI tooling.

This pattern means teams can pick the right engine for each workload while still staying inside the same lakehouse. It also reduces the pressure to standardise everything on a single tool just to keep data manageable.

Governance frameworks and catalogues

Governance frameworks and data catalogues provide the organising layer. They help you understand what datasets exist, how they relate to each other and who is responsible for them. They also give non-specialist users a way into the lakehouse that does not involve reading configuration files.

In practical terms, they become the front door to the platform. When someone needs data, they search the catalogue, see context and request access through defined channels.

When a Lakehouse Is the Right Choice (And When It Is Not)

It is easy to be drawn into new patterns because they are fashionable. Lakehouses are useful, but they are not automatically the right answer.

Signs that an organisation is ready

The case for a lakehouse is usually stronger when:

- AI and advanced analytics workloads are growing

- Similar datasets appear in multiple data storage systems

- Cloud costs are rising in ways you cannot easily explain

- Teams disagree about which datasets to trust

These are signs that consolidation and a clearer architecture would solve real problems, not just provide a new label for the same issues.

Signs that a lakehouse may be premature

If basic data governance is still missing, a lakehouse will simply expose that gap. When ownership of key domains is unclear, or when analytics and AI are still very limited, other investments might come first. Sometimes you need to stabilise simpler platforms before introducing a more powerful pattern.

Hybrid models

A lakehouse does not have to replace everything straight away. Many enterprises choose a hybrid approach. They keep a smaller, focused data warehouse for stable reporting and build a lakehouse alongside it for broader analytics and AI. Over time, as confidence and capability grow, more workloads can move into the lakehouse.

The important point is that the lakehouse is treated as a strategic foundation, not a side project.

Frequently Asked Questions About Lakehouse Architecture

What is data lakehouse architecture?

It is a unified data platform that keeps all data in low cost object storage and uses metadata layers and table formats to provide ACID guarantees, schema enforcement and high performance access for analytics and AI.

Is a lakehouse the same as Delta Lake?

No. Delta Lake is one table format that can be used to build a lakehouse. Apache Iceberg and Apache Hudi are others. A lakehouse is the broader architecture that uses these formats.

Does a data lakehouse replace a warehouse?

It can, but does not have to. Some organisations phase out their traditional warehouse as the lakehouse matures. Others keep a smaller warehouse for a defined set of workloads and use the lakehouse for everything else.

Is lakehouse architecture good for AI?

Yes. Lakehouses bring structured, unstructured and semi structured data into one governed environment. That makes it easier for data scientists to build and maintain models and for leaders to oversee how those models are fed and used.

What are the components of a lakehouse?

Typical components include an ingestion layer, a storage layer built on open data formats, a table and metadata layer that provides ACID behaviour, a compute and processing layer, and a consumption layer for BI, analytics and AI tools.

Final Thoughts: Unified Data Is the Only Sustainable Path Forward

Data platforms rarely become complex overnight. Layers build up one project at a time, one tool at a time, until the gaps between them start to slow everything down. At that point, it is not just about performance or cost. It becomes a question of whether you can still trust the data you are using to make decisions.

Data lakehouse architecture is one way of answering that question with a structural change instead of another patch. By treating unified, governed data as shared infrastructure, it gives you a platform where analytics, operations and AI can move in the same direction instead of pulling against each other.

The shift is not just technical. It is a decision to simplify, consolidate and make your data estate easier to understand. The organisations that are already further along this path are finding it easier to adapt to new demands, because they are not fighting their own architecture every time something changes.

If you are weighing how and when to move towards a unified data platform, it helps to see how others are handling the same trade offs. EM360Tech spends a lot of time with the people making these calls day to day, and brings those lessons together so you can test your own plans against real-world experience before you commit.
