Artificial intelligence has become an integral part of business operations, reshaping everything from credit-risk assessment and healthcare tools that spot potential issues early to customer engagement and marketing automation. Generative AI tools now help employees prepare reports, write software, and even create multimedia content, but they also raise new questions about oversight and responsibility.

As organisations embrace AI faster than ever, the risks grow alongside it. High-profile incidents, from hiring algorithms that unfairly favour certain groups to chatbots that give incorrect medical advice, show just how easily AI mistakes can damage a company’s reputation, disrupt operations, and lead to hefty fines, penalties, or mandates to change practices.

Why AI Governance Matters Now

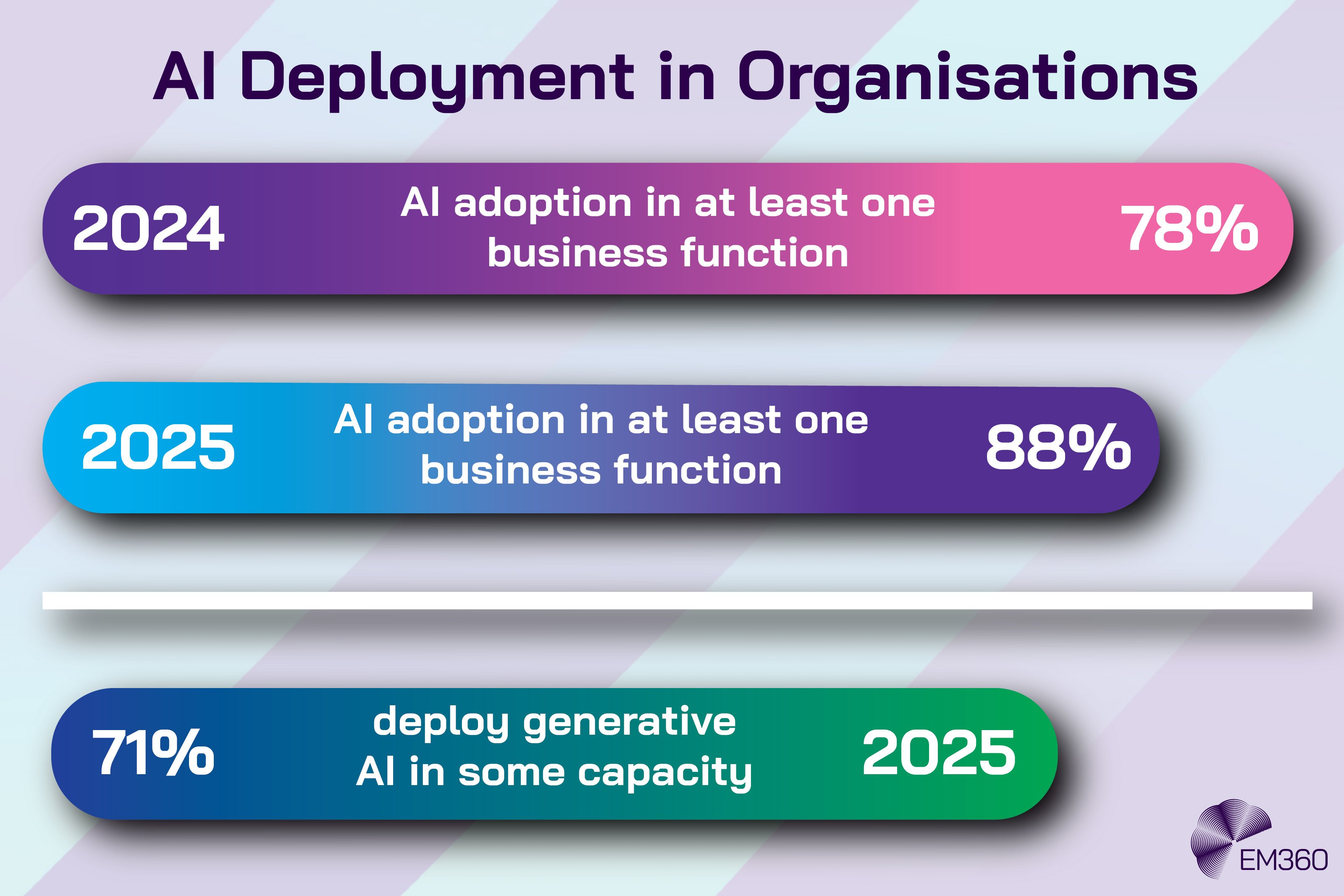

The stakes are higher than ever. According to industry surveys, 88 per cent of enterprises in 2025 reported AI adoption in at least one business function, up from 78 per cent in 2024, while 71 per cent now deploy generative AI in some capacity. Even so, most enterprises remain in the early stages of AI adoption, with only around a third having moved beyond pilots to broader, scaled implementation.

Meanwhile, regulatory pressures are mounting. The EU AI Act, which entered into force in August 2024, began phasing in obligations slowly from February 2025, introducing stringent requirements for transparency, risk assessment, and human oversight. The EU believes that with this act in place, AI will continue to advance, but now it will do so with clear rules and under a shared strategic vision.

In the United States, the revocation of Executive Order 14110 in January 2025 created uncertainty in AI regulatory expectations, forcing enterprises to navigate an unstable policy environment. Against this backdrop, governance is no longer optional. At its core, it provides the rules, guidelines, and oversight needed to balance innovation with responsibility. Effective AI governance helps keep AI systems transparent, ethical, and compliant, so that businesses can implement tools safely, responsibly, and legally.

Defining AI Governance

AI governance means the rules, standards, and safeguards that guide how AI systems are built, used, and monitored. Unlike corporate governance, which focuses on finances and operations, or traditional data governance, which looks after the accuracy and privacy of information, AI governance deals with the specific risks that come from machines making decisions.

AI models are created by machine learning systems that learn from data produced by humans, which means they can reflect biases, make mistakes, or be misused. Governance frameworks help reduce these risks by providing clear rules and accountability. For example, a bank using an AI credit-scoring tool must make sure its decisions are fair, explainable, and follow lending laws. Likewise, healthcare providers need to ensure AI diagnostic tools give accurate results and don’t unfairly affect certain patient groups.

At the organisational level, AI governance is both a way to prevent problems and a strategic approach. It includes rules for building models, managing data, reducing risks, following ethical standards, and meeting regulations. By setting clear ownership, accountability, and reporting, governance makes sure AI is used safely and doesn’t harm public trust. In short, it builds responsibility into every stage of an AI system’s life, from collecting data to retiring the model.

The Four Pillars of AI Governance

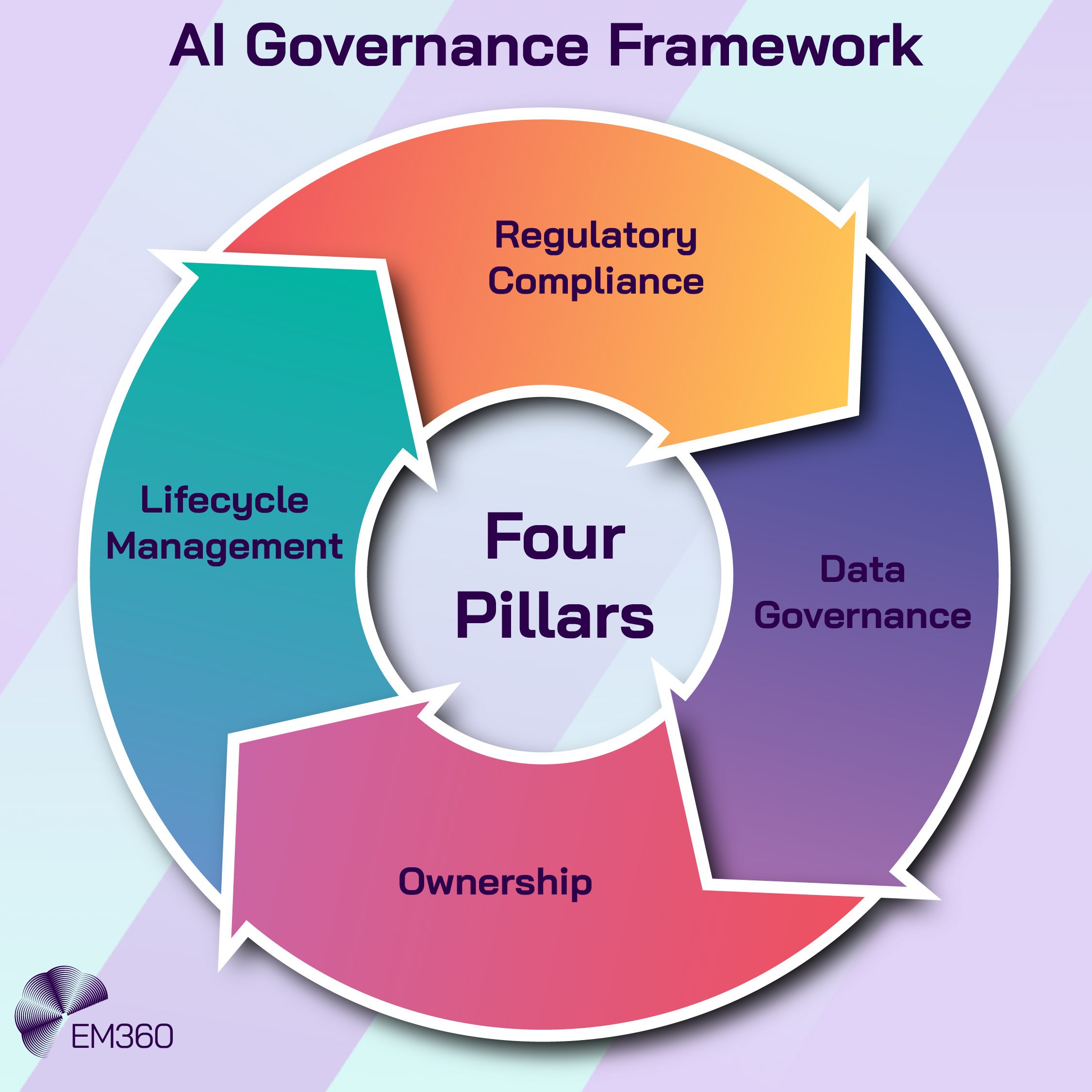

A strong AI governance framework isn’t just a static checklist; it’s a layered system built around four interconnected pillars: data governance, regulatory compliance, ownership, and lifecycle management. Each pillar addresses a key part of the ecosystem: from ensuring data is accurate and well-managed, to meeting legal requirements, defining accountability, and overseeing systems throughout their lifecycle. Together, they form a coherent structure that helps organisations manage risk, uphold ethical standards, and maintain operational discipline. Seen this way, governance moves beyond being an administrative task; it becomes a strategic tool that strengthens the organisation’s broader technology and innovation goals.

Let’s take a closer look at each pillar:

1. Data governance

Data is the foundation of any AI system. If the data is low-quality or biased, the results can be flawed, which can make decisions unreliable. Good data governance means keeping datasets accurate, secure, and ethically sourced throughout their use. This includes checking that data is correct, controlling who can access it, tracking where it comes from, and following privacy laws like GDPR or HIPAA.

Many organisations now use dashboards and real-time metrics to monitor the health of their data and models. For example, a hospital using AI to predict patient outcomes must make sure its data is up to date, representative of all patients, and securely stored. Ignoring these steps can lead to wrong predictions, put patients at risk, and create legal problems for the hospital.
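The checks described above (completeness, freshness, and representativeness) can be sketched in a few lines. This is a minimal illustration, not a production data-quality tool: the `PatientRecord` fields, the one-year freshness window, and the 10 per cent representation threshold are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class PatientRecord:
    # Hypothetical fields, chosen only for illustration.
    age_group: str
    last_updated: date
    outcome: Optional[str]  # None marks a missing label


def data_health_report(records, today, expected_groups,
                       max_age_days=365, min_group_share=0.10):
    """Return simple completeness, freshness, and representation metrics."""
    total = len(records)
    if total == 0:
        raise ValueError("no records to check")

    # Completeness: share of records with no recorded outcome.
    missing = sum(1 for r in records if r.outcome is None)
    # Freshness: share of records older than the assumed window.
    stale = sum(1 for r in records
                if (today - r.last_updated).days > max_age_days)

    # Representation: groups whose share falls below the assumed threshold,
    # including expected groups that do not appear at all.
    counts = {}
    for r in records:
        counts[r.age_group] = counts.get(r.age_group, 0) + 1
    underrepresented = [g for g in expected_groups
                        if counts.get(g, 0) / total < min_group_share]

    return {
        "missing_share": missing / total,
        "stale_share": stale / total,
        "underrepresented_groups": underrepresented,
    }
```

Metrics like these are the kind of figures a data-health dashboard might display; the thresholds would need to be set by the people accountable for the dataset.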

2. Regulatory compliance

AI governance turns complex and ever-changing regulations into practical, day-to-day practices. The EU AI Act, for example, requires risk assessments, transparency for high-risk systems, and human oversight for critical applications. Around the world, organisations also have to follow rules like GDPR, HIPAA, and industry-specific laws.

Compliance isn’t a one-time task—it means constantly checking that AI systems meet legal and ethical standards. For example, banks need to make sure AI credit scoring doesn’t discriminate against protected groups, while government agencies must ensure AI tools used for public services are fair and transparent. In 2025, more companies are embedding compliance into everyday operations, using automated reports and audit trails to show they are following the rules.
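One way to make an audit trail trustworthy is to chain each entry to the hash of the previous one, so that later edits become detectable. The sketch below is an assumption about how such a log could be built, not a description of any particular compliance product; the event fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash that precedes the first entry


def _entry_hash(prev_hash, event):
    # Serialise deterministically so the same event always hashes the same.
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "event": event,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, event),
    })


def verify_log(log):
    """Recompute every hash; return False if any entry was altered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A reviewer could run `verify_log` before relying on the log as evidence. In practice the log would also need secure storage and access controls, which this sketch leaves out.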

3. Ownership and accountability

Clear ownership is key to making sure AI systems are used responsibly. Without someone in charge, mistakes, bias, and ethical problems can go unnoticed. Typically, cross-functional teams including IT, data science, legal, ethics, and business leaders oversee the development, deployment, and maintenance of AI.

Having clear ownership gives a single point of responsibility, so issues can be addressed quickly if outcomes go off track. For example, a retail company might assign a team member to manage AI-driven demand forecasting, making sure the model reflects market conditions accurately and avoids operational problems. Assigning accountability isn’t just about following rules; it’s a strategic step that helps organisations manage risk proactively.

4. Lifecycle management

AI models are constantly changing, which makes lifecycle management important. This means monitoring, updating, and retiring models as needed. The process covers development, training, deployment, ongoing monitoring, performance checks, and eventually decommissioning.

For example, self-driving car AI models need frequent updates to keep up with new traffic laws, road conditions, and sensor adjustments. Without proper oversight, models can become unreliable, producing unsafe or biased results. Lifecycle management also ensures outdated models are retired, helping prevent errors and reduce risks for the organisation.

Bias, Risk, and Continuous Monitoring

Even with the four pillars in place, good governance also means tackling bias, managing risk, and keeping systems under continuous watch. Let's take a look:

- Bias and fairness require spotting and fixing discriminatory patterns. For example, AI hiring systems need regular audits to make sure they don’t favour certain groups, and financial models must avoid unfairly affecting lending outcomes based on race, gender, or age.

- Risk management means looking ahead for operational, ethical, and security issues. Banks often check AI fraud-detection tools to prevent false alerts that could disrupt customers, while healthcare providers monitor diagnostic models to reduce clinical risks.

- Continuous monitoring keeps AI models accurate and compliant as conditions change. Generative AI systems bring extra challenges, like misinformation, hallucinations, or accidental leaks of sensitive data. Monitoring frameworks catch these problems early, allowing fixes before any harm occurs.
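A bias audit of the kind mentioned above often starts by comparing selection rates across groups. The sketch below computes a disparate impact ratio (the lowest group rate divided by the highest). The 0.8 cut-off in the usage note is a widely used rule of thumb, assumed here for illustration; it is not a legal test in every jurisdiction, and the group labels are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs; returns rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity to measure
    return min(rates.values()) / highest
```

An audit might flag a model for review when the ratio falls below 0.8, then investigate whether the gap has a legitimate explanation. A single ratio is a starting point for that conversation, not a verdict on fairness.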

Regulatory Frameworks in AI Governance

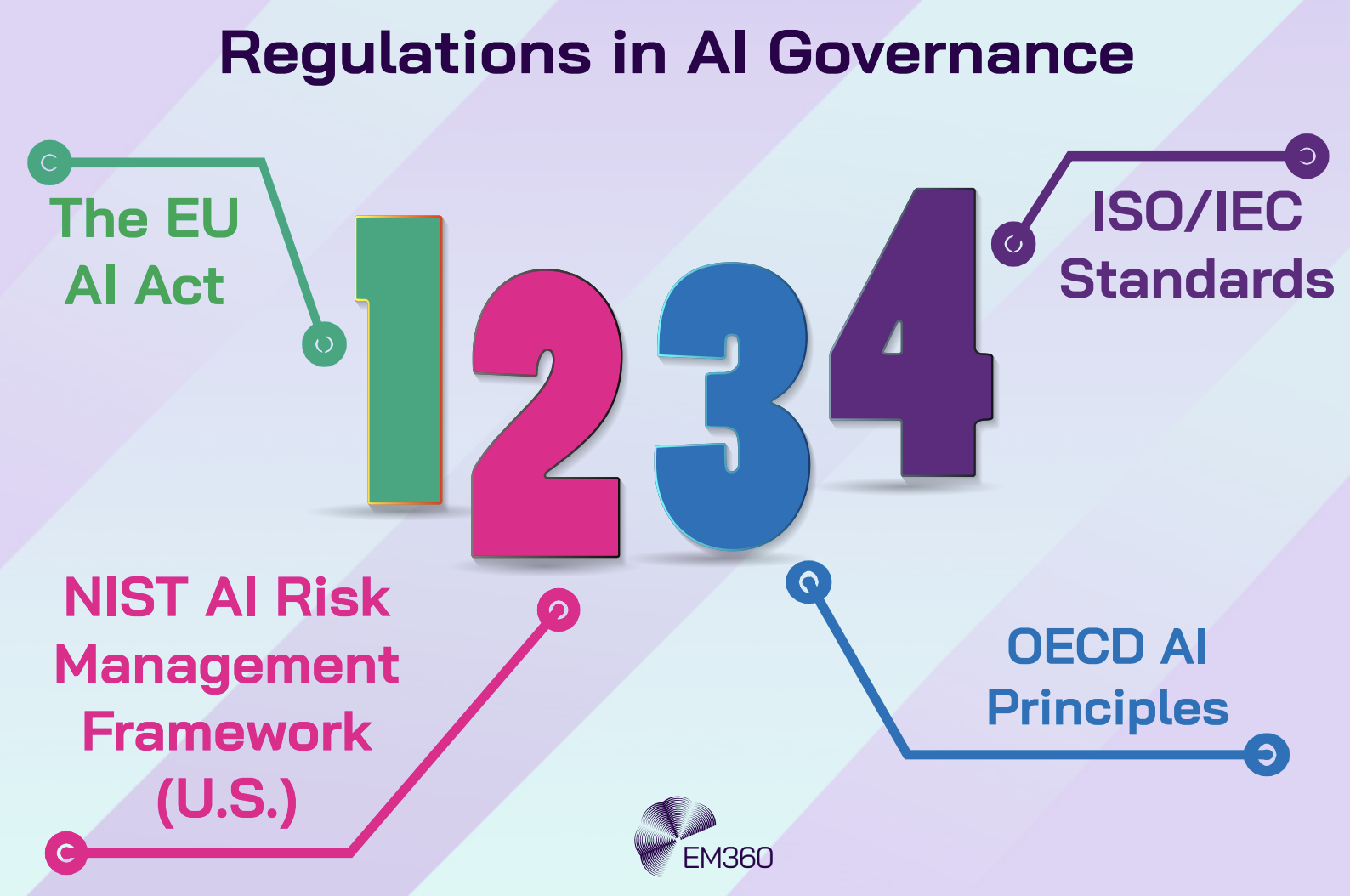

As AI use grows around the world, new rules and standards are emerging to make sure systems are safe, fair, and accountable. From the EU’s AI Act to U.S. NIST guidance, OECD principles, and ISO/IEC standards, companies now have several frameworks to follow. Understanding these rules is key to managing risk, staying compliant, and building trust in AI.

1. The EU AI Act

The EU AI Act, phased in from February 2025, is the world’s first comprehensive AI regulation. It classifies AI systems based on risk levels:

- Unacceptable risk: AI systems that manipulate human behaviour, exploit vulnerabilities, or enable social scoring are banned.

- High-risk: Systems in sectors like finance, healthcare, transportation, and law enforcement must comply with strict requirements for transparency, documentation, and human oversight.

- Limited risk: Systems like chatbots or recommendation engines face lighter obligations, such as clearly disclosing to users that they are interacting with an AI system.

For enterprises, this means building risk classification into the governance framework, maintaining technical documentation, and ensuring that high-risk AI systems are auditable, explainable, and regularly tested for bias and accuracy.
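Building risk classification into a governance framework could look something like the sketch below: an inventory of AI systems, each tagged with a tier by a human reviewer, and a check for missing controls. The control names are paraphrased from the tiers described above and are assumptions for illustration only; deciding which tier applies to a system is a legal judgment that code cannot make.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"


# Hypothetical control names, paraphrased from the tiers above; not legal advice.
REQUIRED_CONTROLS = {
    RiskTier.UNACCEPTABLE: {"do_not_deploy"},
    RiskTier.HIGH: {
        "technical_documentation",
        "human_oversight",
        "bias_and_accuracy_testing",
    },
    RiskTier.LIMITED: {"user_disclosure"},
}


@dataclass
class AISystem:
    name: str
    tier: RiskTier  # assigned by a human reviewer, not inferred by code
    controls_in_place: set = field(default_factory=set)


def missing_controls(system):
    """Return the required controls this system has not yet evidenced."""
    return REQUIRED_CONTROLS[system.tier] - system.controls_in_place
```

A governance committee could run `missing_controls` across its inventory before each release to see which high-risk systems still lack documentation or oversight.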

2. NIST AI Risk Management Framework (U.S.)

The NIST AI RMF provides voluntary guidance to U.S. organisations on AI governance, focusing on trustworthiness and risk management. Its four core functions (govern, map, measure, and manage) help enterprises:

- Identify AI risks across development, deployment, and operation

- Measure performance, fairness, and security metrics

- Manage controls to reduce risk

- Establish ongoing governance practices

Even though it is voluntary, many U.S. enterprises adopt it to prepare for future federal regulations and to demonstrate due diligence.

3. OECD AI Principles

The OECD Principles on AI are global guidelines emphasising:

- Inclusive growth and well-being

- Human-centred values and fairness

- Transparency and explainability

- Accountability and robustness

These principles are particularly useful for multinational enterprises that must comply with multiple legal regimes while demonstrating responsible AI practices across borders.

4. ISO/IEC Standards

ISO/IEC standards, such as ISO/IEC 22989 (AI concepts and terminology) and ISO/IEC 23894 (AI risk management), provide operational guidance for technical robustness, testing, and auditability. These are essential for organisations seeking internationally recognised frameworks for quality and risk management.

Shadow AI: The Hidden Risk

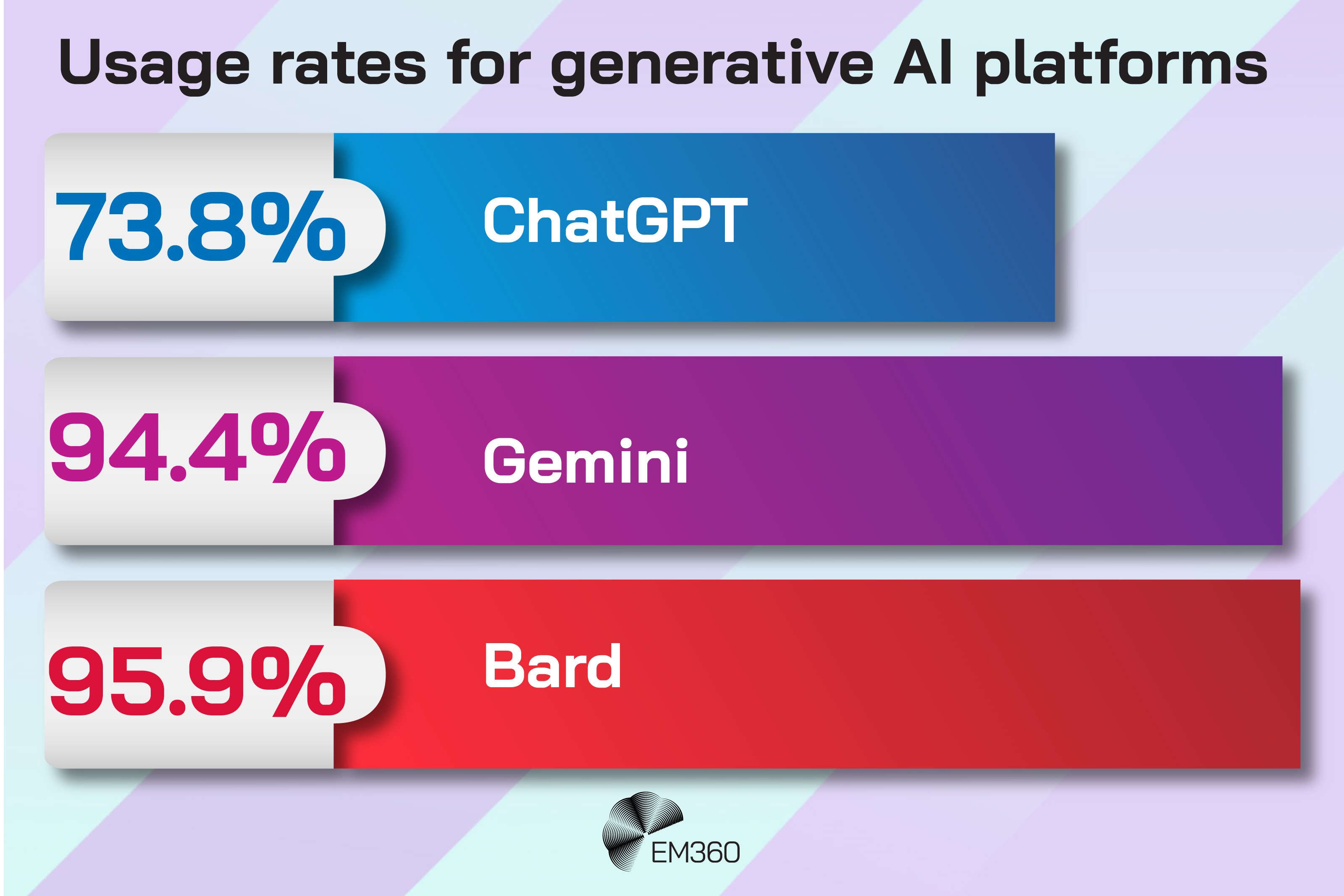

A significant governance challenge is Shadow AI, the unsanctioned use of AI tools by employees. Research in 2025 shows that 50 per cent of employees use Shadow AI, motivated by productivity gains, autonomy, or the lack of enterprise-provided tools. The share of generative AI use that runs through public rather than corporate accounts is striking: 73.8 per cent for ChatGPT, 94.4 per cent for Gemini, and 95.9 per cent for Bard.

Shadow AI brings new privacy, security, and compliance risks. When employees put data into consumer AI tools, that data may be used to train external models, potentially exposing sensitive customer or company information. Governance needs to go beyond formal policies to include employee training, controlled access, and clear usage rules. Just like Shadow IT before it, Shadow AI shows that simply banning tools doesn’t work; structured oversight is what really keeps data safe.

Implementing AI Governance in Practice

Enterprises that succeed in AI governance integrate oversight, policy, and operational alignment into their business models. The main elements include:

- AI governance committee: Provides cross-functional oversight, reviews high-risk projects, and aligns policies with regulations and ethical standards.

- Policies and procedures: Operationalise compliance with frameworks such as NIST AI RMF, OECD Principles, ISO/IEC standards, and the EU AI Act.

- Data governance: Ensures datasets are high-quality, secure, and auditable.

- Training and literacy: Educates employees and executives on AI risks, ethical standards, and regulatory obligations.

Supplementary practices include visual dashboards for real-time monitoring, automated detection of performance drift, alerts for deviations, custom metrics linked to business KPIs, audit trails, and integration with existing IT infrastructure. Together, these mechanisms ensure AI systems are transparent, accountable, and aligned with organisational goals.
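Automated drift detection can be illustrated with the Population Stability Index (PSI), one common way to compare a model input's current distribution with its baseline. This is a minimal sketch under stated assumptions: equal-width bins taken from the baseline range, and a small floor to avoid dividing by zero. The alert thresholds people attach to PSI (often around 0.1 and 0.25) are rules of thumb rather than standards.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one numeric feature; higher means more drift."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("baseline sample has no spread")
    width = (hi - lo) / bins

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range fall into the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A monitoring job might compute this index on a schedule for each important feature and raise an alert when it crosses the threshold the owning team has agreed on.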

Real-World Applications

AI governance frameworks have moved beyond theory and are now actively applied across industries to ensure that AI initiatives generate value responsibly, ethically, and in compliance with regulations. They provide structured oversight across the entire AI lifecycle from data collection and model development to deployment and continuous monitoring, helping organisations manage risk, maintain compliance, and foster innovation.

Let's take a look at some of the sectors:

- Finance: AI credit-scoring and fraud-detection models require rigorous oversight to maintain fairness and regulatory compliance. Banks implement monitoring dashboards to detect anomalies in real time, reducing financial and reputational risk.

- Healthcare: Diagnostic AI tools must be continuously validated to prevent bias and ensure patient safety. Hospitals implement lifecycle management strategies to update models as new clinical data becomes available.

- Government and public services: AI tools for policy analysis, citizen engagement, and resource allocation must maintain transparency and trust. Oversight mechanisms ensure equitable service delivery while complying with data protection laws.

Across these sectors, AI governance frameworks provide a common foundation that balances innovation with accountability, ensuring that AI technologies deliver reliable, ethical, and legally compliant outcomes.

The Future of AI Governance

As AI becomes ingrained in enterprise operations, the need for resilient governance has never been more urgent. In 2025, organisations face serious pressure to balance innovation with ethical, legal, and operational responsibility. AI is no longer just a tool; it is a strategic asset, and managing it effectively requires structured oversight that spans the entire lifecycle, from data ingestion to model deployment and ongoing monitoring. AI governance is evolving fast, shaped by both regulatory urgency and technological advances.

Key emerging trends include:

- Automated monitoring: Real-time systems are increasingly used to detect drift, bias, and security vulnerabilities proactively. As governance matures, organisations are embedding continuous validation mechanisms to support model robustness.

- Global standards convergence: Frameworks like the EU AI Act, NIST AI RMF, OECD AI Principles, and ISO standards (e.g., ISO/IEC 42001) are gaining traction, helping to harmonise cross-border compliance.

- Generative AI oversight: With the rapid proliferation of large language models, new governance guardrails are being built to address hallucination risks, copyright issues, and data privacy.

- Regional adaptation: Governance approaches are being tailored to local contexts — especially in emerging markets — to reflect language diversity, cultural norms, and distinct regulatory requirements.

- Executive engagement: AI governance is becoming a board-level mandate. According to 2025 research, more companies are delegating governance responsibility to C-suite executives and board members.

These developments go far beyond simple compliance. The global AI governance market, projected to grow from approximately USD 309 million in 2025 to USD 4.83 billion by 2034, is expanding rapidly as organisations invest in platforms, frameworks, and processes. By integrating these governance practices, enterprises can turn oversight from a mere cost centre into a strategic advantage, ensuring AI initiatives are ethical, compliant, and deliver measurable business value.
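To put the market projection in perspective, the implied compound annual growth rate can be worked out from the two figures quoted above. The calculation assumes nine compounding years (2025 to 2034) and steady growth, which real markets rarely follow.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the projection above: USD 309 million (2025) to USD 4.83 billion (2034).
rate = cagr(309e6, 4.83e9, 2034 - 2025)
print(f"Implied CAGR: {rate:.1%}")
```

Under those assumptions the implied rate is just under 36 per cent a year.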

AI Governance

AI governance is no longer just a technical detail; it’s a strategic necessity. By combining the four pillars (data governance, regulatory compliance, clear ownership, and lifecycle management) with bias checks, risk management, and ongoing monitoring, organisations can use AI responsibly, ethically, and effectively.

In 2025, AI governance bridges the gap between innovation and accountability. Companies that invest in structured governance not only protect themselves from operational, reputational, and regulatory risks but also set a standard for responsible AI use worldwide. The question is no longer whether to adopt AI, but whether it can be done transparently, safely, and ethically. Organisations that get this right will shape the future of intelligent enterprise.
