Privacy regulations are tightening. AI systems are accelerating. And the pressure to extract value from sensitive datasets has never been higher. Security and compliance leaders are being asked to enable innovation and defend against risk.

Often at the same time, and often using the same dataset.

It is no surprise that 74 per cent of organisations still haven’t seen real value from their AI investments. The barriers are not only technical. They are legal, ethical, and architectural — and they start with data.

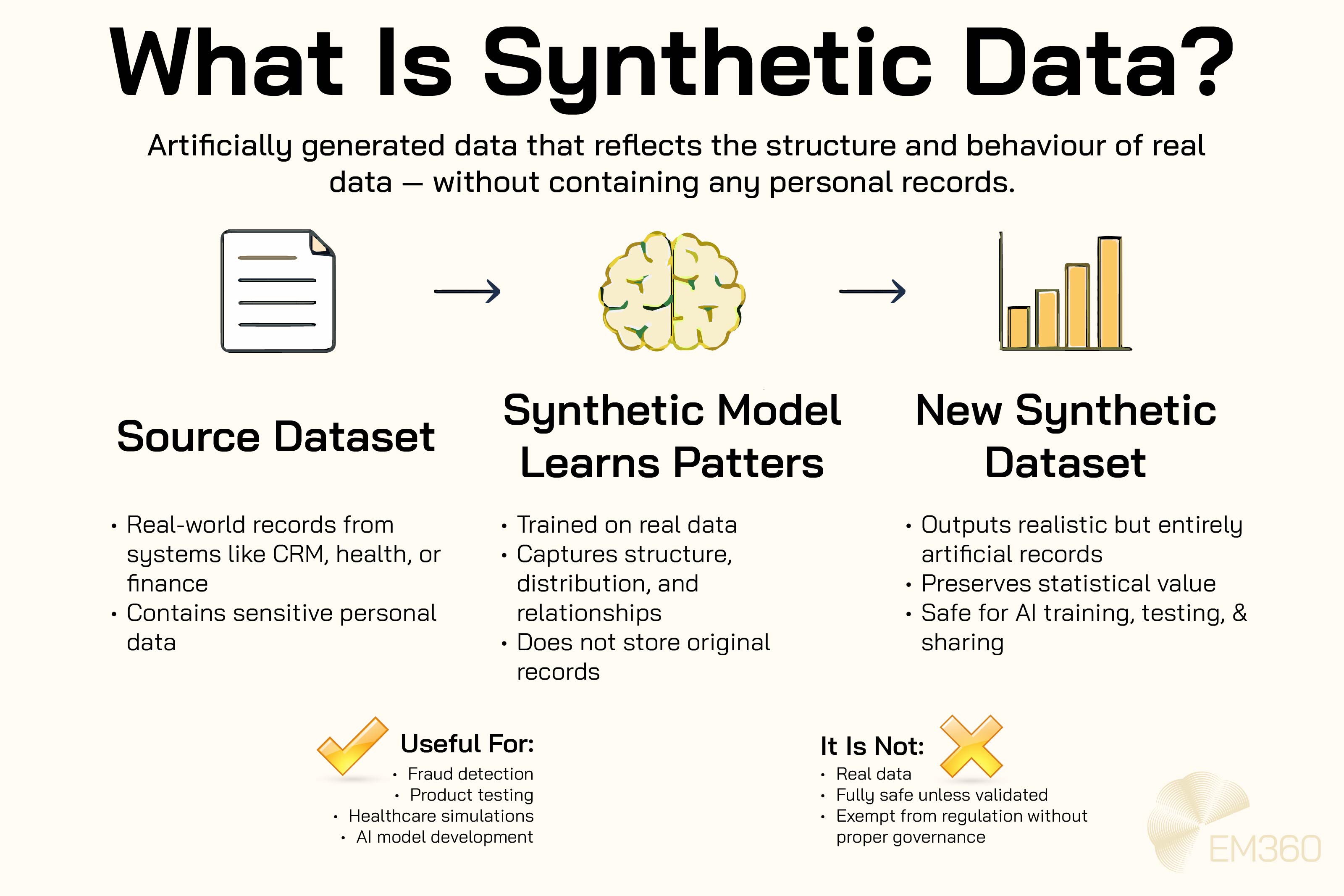

For many, synthetic data has emerged as a promising solution. Synthetic data replaces real records with artificial versions that reflect the same statistical patterns. This allows organisations to train AI models, test fraud systems, and simulate healthcare scenarios — without handling direct personal information.

At least, that’s the theory.

In practice, privacy-preserving AI isn't guaranteed just because the data is synthetic. The line between anonymous, pseudonymous, and fully synthetic data is more complex than most vendors admit.

Under regulations like the GDPR, this difference matters. It determines whether synthetic data is regulated, reportable, and legally usable at scale.

For CISOs, data leaders, and governance teams, this means one thing. Synthetic data can support compliance, but it cannot replace it.

What Is Synthetic Data and Why Does It Matter for Compliance?

Synthetic data refers to information that is artificially created rather than collected from real-world interactions. It is typically produced by machine learning models trained on original datasets.

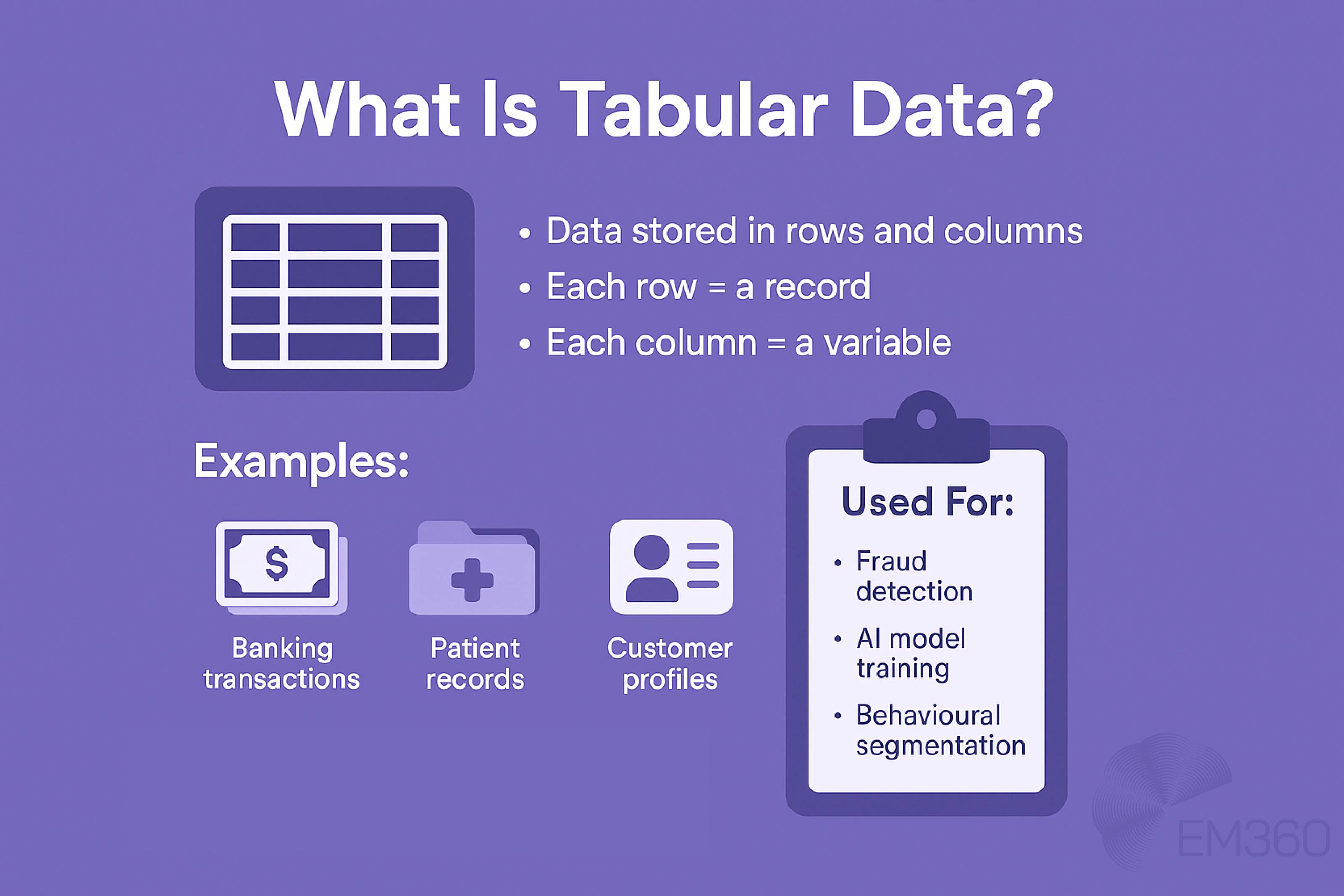

These models generate new records that mimic the structure and patterns of the original data, without copying any individual’s personal details. The most valuable form for enterprise use is tabular data, such as financial transactions, healthcare records, or customer journeys.

These datasets often carry sensitive attributes and are tightly regulated under laws like the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA).

What synthetic data offers is the ability to model the behaviours and outcomes captured in those datasets without directly exposing the people behind them. But synthetic does not automatically mean compliant.
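To make "mimic the structure and patterns of the original data" concrete, here is a deliberately naive generator. It is a minimal sketch, not how production tools work: real generators (copulas, Bayesian networks, GANs) learn joint structure, whereas this one samples each column independently from the values it observed. The column names and rows are hypothetical.

```python
import random
from collections import defaultdict

def fit_marginals(rows):
    """Collect the observed values of every column (empirical marginals)."""
    columns = defaultdict(list)
    for row in rows:
        for name, value in row.items():
            columns[name].append(value)
    return dict(columns)

def sample_independent(marginals, n, seed=0):
    """Draw n synthetic rows, sampling each column independently.

    Each column keeps its distribution, but values that belonged to the
    same real person are no longer kept together.
    """
    rng = random.Random(seed)
    return [{name: rng.choice(values) for name, values in marginals.items()}
            for _ in range(n)]

# Hypothetical source rows.
real = [
    {"age_band": "30-39", "region": "North", "spend": 120},
    {"age_band": "40-49", "region": "South", "spend": 310},
    {"age_band": "30-39", "region": "South", "spend": 95},
]
synthetic = sample_independent(fit_marginals(real), n=5)
```

Two trade-offs are visible even at this scale: independent sampling loses cross-column correlations (lower utility), and because it resamples observed values, a rare value such as an unusual spend can reappear verbatim in the output.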

Too often, synthetic data is confused with anonymised or pseudonymised datasets. To qualify as anonymised, data must be processed so that individuals can no longer be identified, even when it is combined with other datasets that might reveal identity.

Pseudonymisation, by contrast, still allows re-identification through a key or additional context. Mock data, a third category, is usually random and rule-based. It lacks the accuracy and statistical depth needed for serious enterprise use.

High-quality synthetic data sits somewhere between these categories. If generated correctly, it can be a true alternative to real-world datasets, enabling AI training, testing, and research without exposing the regulated source records.

However, this holds only if the generating model avoids memorisation and overfitting, and the output resists indirect re-identification. That’s where compliance comes into play.
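The difference between pseudonymised and anonymised data is easy to see in code. The sketch below assumes a keyed hash (HMAC-SHA256) as the pseudonymisation method; the identifier format and key are hypothetical. Whoever holds the key can recompute the token for a known identifier and re-link the record, which is why Recital 26 treats pseudonymised data as information about an identifiable person.

```python
import hashlib
import hmac

def pseudonymise(customer_id: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 token (hex string)."""
    return hmac.new(key, customer_id.encode("utf-8"), hashlib.sha256).hexdigest()

# Hypothetical secret held by the data controller.
key = b"hypothetical-secret-held-by-the-controller"
token = pseudonymise("CUST-00042", key)

# Re-identification path: anyone with the key can test a known ID against the token.
relinked = hmac.compare_digest(token, pseudonymise("CUST-00042", key))
```

The token hides the raw ID from casual readers, but the mapping is deterministic and reversible by lookup, so the record remains personal data.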

Understanding GDPR: When Is Synthetic Data Out of Scope?

Not all synthetic data is exempt from regulation. Under GDPR, what matters isn’t how the data was created. It’s whether someone can be identified from it. That means even artificial data must be analysed through a legal lens.

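Recital 26 is the key benchmark. It states that the principles of data protection do not apply to anonymous information. To judge whether someone is identifiable, account must be taken of all the means reasonably likely to be used, by the controller or by anyone else, such as singling a person out. In practice, if no person can be re-identified by any reasonably likely method, even by combining the data with other sources, then GDPR does not apply.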

This is where many synthetic data strategies begin to falter. Generating artificial data points is easy. Demonstrating that no personal attributes can be inferred or reconstructed is significantly harder.

For privacy-preserving AI to stand up under regulatory scrutiny, the data must pass the same threshold applied to any anonymisation technique.

Meeting that threshold means defending against linkage attacks, inference risks, and any residual signals that could triangulate identity. Without validation, synthetic data becomes a compliance illusion.

Recital 26 and the anonymisation test

The bar for anonymisation is higher than many organisations realise. Under Recital 26, data is anonymous only when individuals cannot be identified by any means reasonably likely to be used, even when the data is combined with other sources.

That includes methods available to attackers, regulators, or even internal teams with access to auxiliary information. For synthetic data, this means more than just removing names or direct identifiers.

It requires robust statistical analysis to confirm that no personal information can be reconstructed or guessed with confidence. With the right safeguards, generative models can break the link between original data and synthetic outputs — making true anonymisation possible.

But if the model memorises or mimics real sequences too closely, the synthetic output may still qualify as personal data, which would trigger full GDPR obligations.
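A linkage attack, the kind of "reasonably likely" method Recital 26 asks you to consider, can be sketched in a few lines. This is an illustration under assumptions: the quasi-identifier columns, the auxiliary dataset, and the records are all hypothetical. A unique match does not prove a synthetic record was derived from that person, but it lets an attacker attach a sensitive attribute to a named individual with confidence, which is the inference risk at issue.

```python
from collections import Counter

# Hypothetical quasi-identifier columns; real choices depend on the dataset.
QUASI_IDENTIFIERS = ("postcode", "birth_year", "sex")

def qi_key(record):
    """Project a record onto its quasi-identifier values."""
    return tuple(record[q] for q in QUASI_IDENTIFIERS)

def linkage_attack(synthetic, auxiliary):
    """Return (name, diagnosis) pairs where an auxiliary identity matches
    exactly one synthetic record on the quasi-identifiers."""
    counts = Counter(qi_key(r) for r in synthetic)
    by_key = {qi_key(r): r for r in synthetic}  # only read when the key is unique
    return [
        (person["name"], by_key[qi_key(person)]["diagnosis"])
        for person in auxiliary
        if counts[qi_key(person)] == 1
    ]

synthetic = [
    {"postcode": "AB1", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"postcode": "AB1", "birth_year": 1980, "sex": "F", "diagnosis": "none"},
    {"postcode": "CD2", "birth_year": 1955, "sex": "M", "diagnosis": "diabetes"},
]
# Auxiliary data an attacker might hold, e.g. a public register (hypothetical).
auxiliary = [
    {"name": "Person A", "postcode": "AB1", "birth_year": 1980, "sex": "F"},
    {"name": "Person B", "postcode": "CD2", "birth_year": 1955, "sex": "M"},
]
print(linkage_attack(synthetic, auxiliary))  # [('Person B', 'diabetes')]
```

Person A is protected only because two synthetic records share her attributes; Person B is exposed because his combination is unique.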

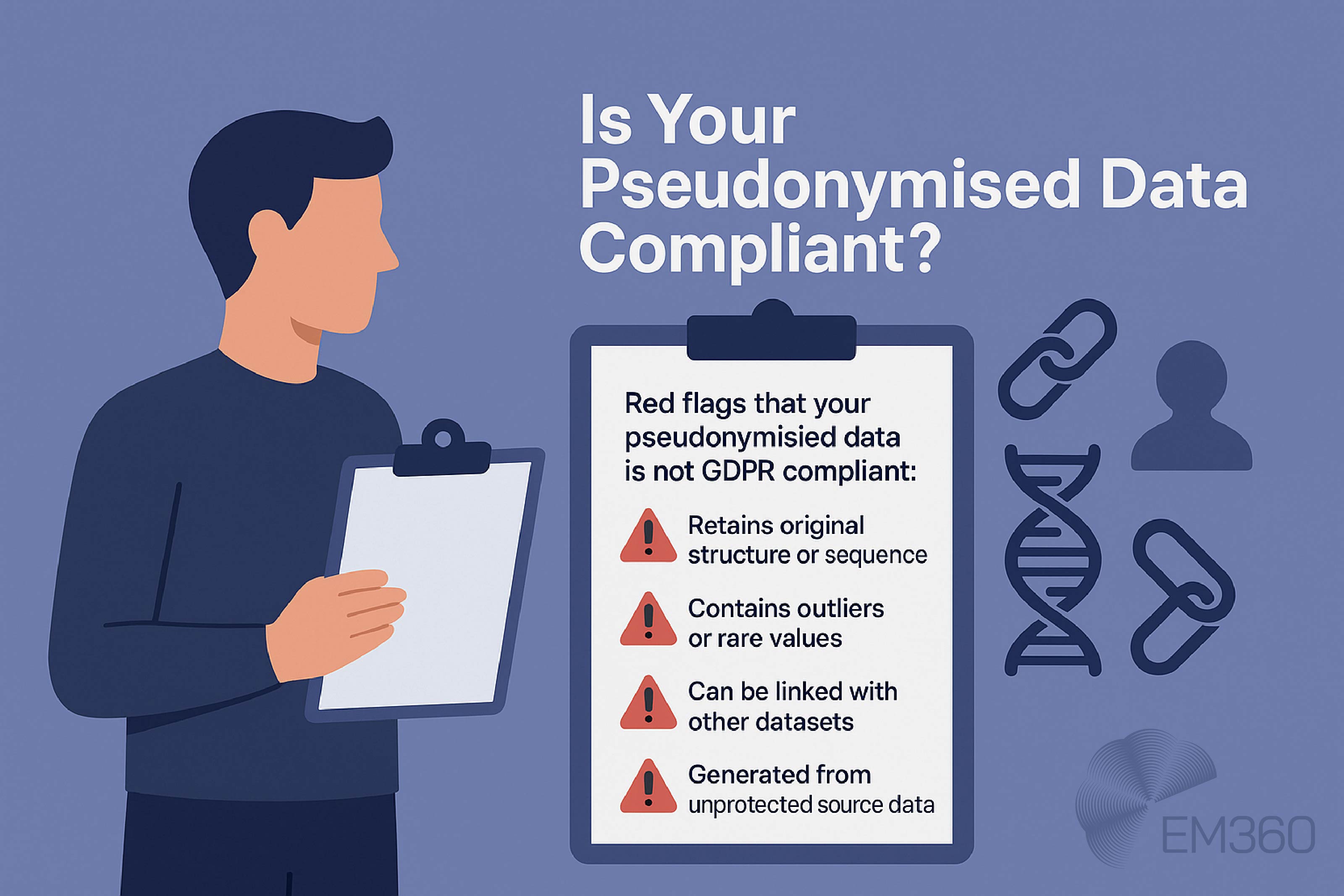

Why pseudonymised synthetic data may still be risky

Pseudonymisation often creates a false sense of security. Masking names or swapping identifiers helps, but it doesn’t eliminate risk, especially when structure or outliers still point to individuals.

Synthetic data that is poorly generated faces similar problems. If the underlying model overfits, it can leak fragments of the original data. If it fails to remove demographic outliers or high-cardinality patterns, those records can become anchors for inference.

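The European Data Protection Board (EDPB) reinforced this point in its December 2024 opinion on AI models and personal data (Opinion 28/2024), which holds that a model trained on personal data cannot simply be assumed to be anonymous and must be assessed case by case. By the same logic, even synthetic-looking outputs may fall under GDPR if they carry traits from real people or come from unprotected training data.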

Security and compliance leaders should prioritise tools with built-in safeguards — like differential privacy, rejection sampling, and attribute-level filters. Tools like the Synthetic Data Vault offer formalised risk assessments. But they must be tailored to enterprise data types and use cases.
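One common way to surface the outliers and rare combinations that act as "anchors for inference" is a k-anonymity-style count: group the synthetic rows by their quasi-identifiers and flag any group smaller than k. This is a swapped-in, simplified technique rather than a method named in the text; the columns, rows, and k=5 are hypothetical.

```python
from collections import Counter

def small_groups(rows, quasi_identifiers, k=5):
    """Return quasi-identifier combinations shared by fewer than k rows.

    Combinations this rare act as anchors: anyone who knows those attributes
    about a person can narrow them down to a handful of records.
    """
    counts = Counter(tuple(r[q] for q in quasi_identifiers) for r in rows)
    return {combo: n for combo, n in counts.items() if n < k}

rows = [{"age": 34, "job": "nurse"} for _ in range(6)]
rows.append({"age": 97, "job": "astronaut"})  # a demographic outlier
print(small_groups(rows, ("age", "job"), k=5))  # {(97, 'astronaut'): 1}
```

Flagged groups can then be suppressed, generalised (for example, age bands instead of exact ages), or regenerated before release.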

Ultimately, the data anonymisation threshold is not a one-time test. It requires ongoing validation and a deep understanding of how statistical properties can expose sensitive patterns. A dataset that looks synthetic may still behave like the original. And that is exactly what regulators are watching for.

Where Synthetic Data Is Working — And Where It Still Needs Oversight

Adoption of synthetic data is accelerating across sectors where access to sensitive datasets is a barrier to progress. Whether for regulatory compliance, innovation speed, or risk reduction, many organisations are replacing real-world records with statistically similar, synthetically generated alternatives.

But this shift is not just about technology. It is about governance. Security and privacy teams must shape how synthetic data is used, validated, and shared, especially in industries with high compliance burdens, decentralised access, or long-standing data exposure risks.

Healthcare: privacy-driven innovation at scale

Few industries sit at the intersection of opportunity and scrutiny quite like healthcare. AI offers the ability to improve outcomes, reduce system strain, and enable personalised care. But only if data can be accessed without breaching the trust or protection of the individuals behind it.

That tension is already playing out across health systems. According to the 2024 Medscape and HIMSS AI Adoption report, 86 per cent of healthcare organisations are already using AI. Yet 72 per cent cite data privacy as a significant risk. Adoption is high, but so is the anxiety.

Synthetic healthcare data is gaining ground as a middle path. By generating artificial patient journeys that maintain the same statistical properties as real ones, healthcare organisations can test models, simulate treatments, and train algorithms without relying on protected health information.

Where it's being used:

- Simulating rare disease patterns for early detection models

- Creating training data for imaging diagnostics without licensing real scans

- Building virtual patient cohorts for ethical drug trial comparison

- Sharing medical datasets across borders without triggering data transfer restrictions

But the risk lies in the details. Most health data is longitudinal. It's temporal, behavioural, and highly granular.

Even if names and IDs are removed, a synthetic dataset that preserves unique sequences, outlier combinations, or demographic distributions could still carry re-identification risk, especially when cross-referenced against other datasets.
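For longitudinal data, one basic check is whether the synthetic output reproduces a patient journey that occurs only once in the real data. The sketch below assumes journeys are stored as ordered lists of event codes (the codes are hypothetical). Exact matches are only a floor: near-identical sequences can be just as revealing, so a real audit would also look at partial and fuzzy matches.

```python
from collections import Counter

def leaked_unique_sequences(real_journeys, synthetic_journeys):
    """Return synthetic journeys that exactly reproduce a journey seen only once in the real data."""
    real_counts = Counter(tuple(j) for j in real_journeys)
    unique_real = {seq for seq, n in real_counts.items() if n == 1}
    return [j for j in synthetic_journeys if tuple(j) in unique_real]

real_journeys = [
    ["GP", "referral", "oncology", "chemo"],  # a single patient's rare pathway
    ["GP", "prescription"],
    ["GP", "prescription"],
]
synthetic_journeys = [
    ["GP", "prescription"],                   # common pattern: fine
    ["GP", "referral", "oncology", "chemo"],  # reproduces a unique real journey
]
print(leaked_unique_sequences(real_journeys, synthetic_journeys))
# [['GP', 'referral', 'oncology', 'chemo']]
```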

The regulatory pressure is real. GDPR, HIPAA for health-specific privacy in the United States, and national rules such as Germany’s BDSG or guidance from France’s data protection authority, the CNIL, can all apply if synthetic data isn’t truly anonymised. A dataset that leaks one patient's cancer journey through its sequence or structure could be enough to violate Article 5 of the GDPR.

Security leaders must ensure synthetic health data is validated by independent anonymisation audits. Not just privacy by design — but privacy by evidence.

Finance: compliance without compromise

The financial sector has long understood that customer data is both an asset and a liability. Fraud prevention, risk modelling, and compliance testing all rely on sensitive behavioural data. Yet most institutions cannot afford to expose real accounts or transactions during development.

Synthetic data offers a way forward. With the right tooling, banks can generate high-fidelity tabular data that mimics patterns in spending, saving, loan defaults, or transaction anomalies. And this is not just a vendor claim.

The UK’s Financial Conduct Authority (FCA) has recognised synthetic data as a viable privacy-enhancing technology that can expand data usage and support data sharing without revealing underlying sensitive information.

How it's being applied:

- Building predictive modelling pipelines for credit risk without using real FICO scores

- Creating diverse fraud detection scenarios across multiple regions

- Testing internal controls and AML systems without introducing production records

- Modelling real-time payment systems with synthetic network-level behaviour

But again, control is everything. Poorly generated synthetic records can create misleading patterns or remove subtle fraud signals. A model trained on generic synthetic data might learn to ignore low-volume anomalies, which are often exactly the red flags that point to real fraud.

Worse, if the synthetic generation process memorises or replicates real outliers from the original dataset, institutions risk violating GDPR or even sector-specific financial rules such as PSD2 or DORA.
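The loss of low-volume fraud signals described above can be checked directly by comparing how often rare categories appear in the real and synthetic data. A minimal sketch, assuming a single label column; the label names and both thresholds (rare means under 5% of real rows; flagged if the synthetic share falls below half the real share) are hypothetical and would need tuning per use case.

```python
from collections import Counter

def rare_category_shift(real_labels, synthetic_labels, rare_threshold=0.05, min_ratio=0.5):
    """Flag rare categories whose share falls sharply in the synthetic data.

    A category is 'rare' if it makes up less than rare_threshold of the real
    data; it is flagged if its synthetic share is below min_ratio times its
    real share. Returns {category: (real_share, synthetic_share)}.
    """
    real_n, syn_n = len(real_labels), len(synthetic_labels)
    syn_counts = Counter(synthetic_labels)
    flagged = {}
    for category, count in Counter(real_labels).items():
        share = count / real_n
        if share < rare_threshold:
            syn_share = syn_counts[category] / syn_n
            if syn_share < min_ratio * share:
                flagged[category] = (share, syn_share)
    return flagged

real_labels = ["normal"] * 97 + ["mule_account"] * 3
synthetic_labels = ["normal"] * 100  # the rare fraud pattern has vanished
print(rare_category_shift(real_labels, synthetic_labels))  # {'mule_account': (0.03, 0.0)}
```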

Finance teams must document the full lineage of synthetic datasets: source, method, purpose, and exposure. If a regulatory model or board-level policy relies on synthetic data, its provenance must be audit-ready.
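A lineage record covering source, method, purpose, and exposure can be as simple as a structured object serialised next to the dataset. The sketch below is hypothetical in its field names and example values; the SHA-256 fingerprint is an added assumption that lets an auditor confirm the file under review is the exact file that was approved.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

@dataclass(frozen=True)
class LineageRecord:
    """Hypothetical lineage record for one synthetic dataset."""
    source: str       # where the training data came from
    method: str       # generator and key parameters
    purpose: str      # approved use
    exposure: str     # who may access it (internal, partner, public)
    fingerprint: str  # SHA-256 of the exact synthetic file that was reviewed

def fingerprint(data: bytes) -> str:
    """Content hash used to tie the record to one specific file."""
    return hashlib.sha256(data).hexdigest()

def to_audit_json(record: LineageRecord) -> str:
    """Serialise the record deterministically for storage alongside the dataset."""
    return json.dumps(asdict(record), indent=2, sort_keys=True)

synthetic_csv = b"account_band,monthly_spend\nB,120\nC,310\n"
record = LineageRecord(
    source="core banking extract, hypothetical",
    method="tabular generator v1, hypothetical parameters",
    purpose="fraud model development",
    exposure="internal only",
    fingerprint=fingerprint(synthetic_csv),
)
print(to_audit_json(record))
```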

Security and software testing: protecting environments from within

Security teams are under growing pressure to deliver both resilience and agility — but most development environments are still underpowered when it comes to data access. Using real production data in test environments introduces unacceptable risk, but fabricating mock data often leads to unrealistic system behaviour.

Synthetic data offers a more operationally accurate middle ground. By capturing the structure, relationships, and behaviours of production datasets, teams can simulate real workloads without using the original content. This allows them to test new code and run performance benchmarks with greater confidence.

Where it adds value:

- Creating identity and access logs for IAM testing

- Generating synthetic session data for SIEM validation

- Stress-testing zero trust policies under varied workload conditions

- Injecting edge-case scenarios into intrusion detection tools

This is especially critical for internal red teams, DevSecOps squads, and platform engineers who need to balance speed with containment.

But caution is required. Some synthetic tools may introduce uniformity, rounding errors, or unrealistic entropy, so attack simulations look clean in testing while the defences fail in production. Others may miss context across system boundaries, which can affect API interaction modelling or cloud-native behaviour.

For security-focused use cases, synthetic data must be validated against both statistical properties and operational relevance.

Does it reflect adversarial use cases? Can it break systems in meaningful ways? Most importantly, can we trace it back to real data through inference? If the answer is yes, then it cannot be used safely.
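"Unrealistic entropy" can be measured. A minimal sketch, assuming you compare one field (for example, usernames in synthetic IAM logs) between production and synthetic samples using Shannon entropy; the values below are hypothetical. A large gap in either direction suggests the synthetic logs are too repetitive or too random to exercise detection rules realistically.

```python
import math
from collections import Counter

def shannon_entropy(values):
    """Shannon entropy, in bits, of the empirical distribution of a non-empty list."""
    counts = Counter(values)
    total = len(values)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

def entropy_gap(real_values, synthetic_values):
    """Positive: synthetic field is more random than production.
    Negative: synthetic field is less varied (more repetitive)."""
    return shannon_entropy(synthetic_values) - shannon_entropy(real_values)

real_users = ["alice", "bob", "alice", "carol"]  # entropy 1.5 bits
synthetic_users = ["svc-test"] * 4               # a single repeated value: 0 bits
print(entropy_gap(real_users, synthetic_users))  # -1.5
```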

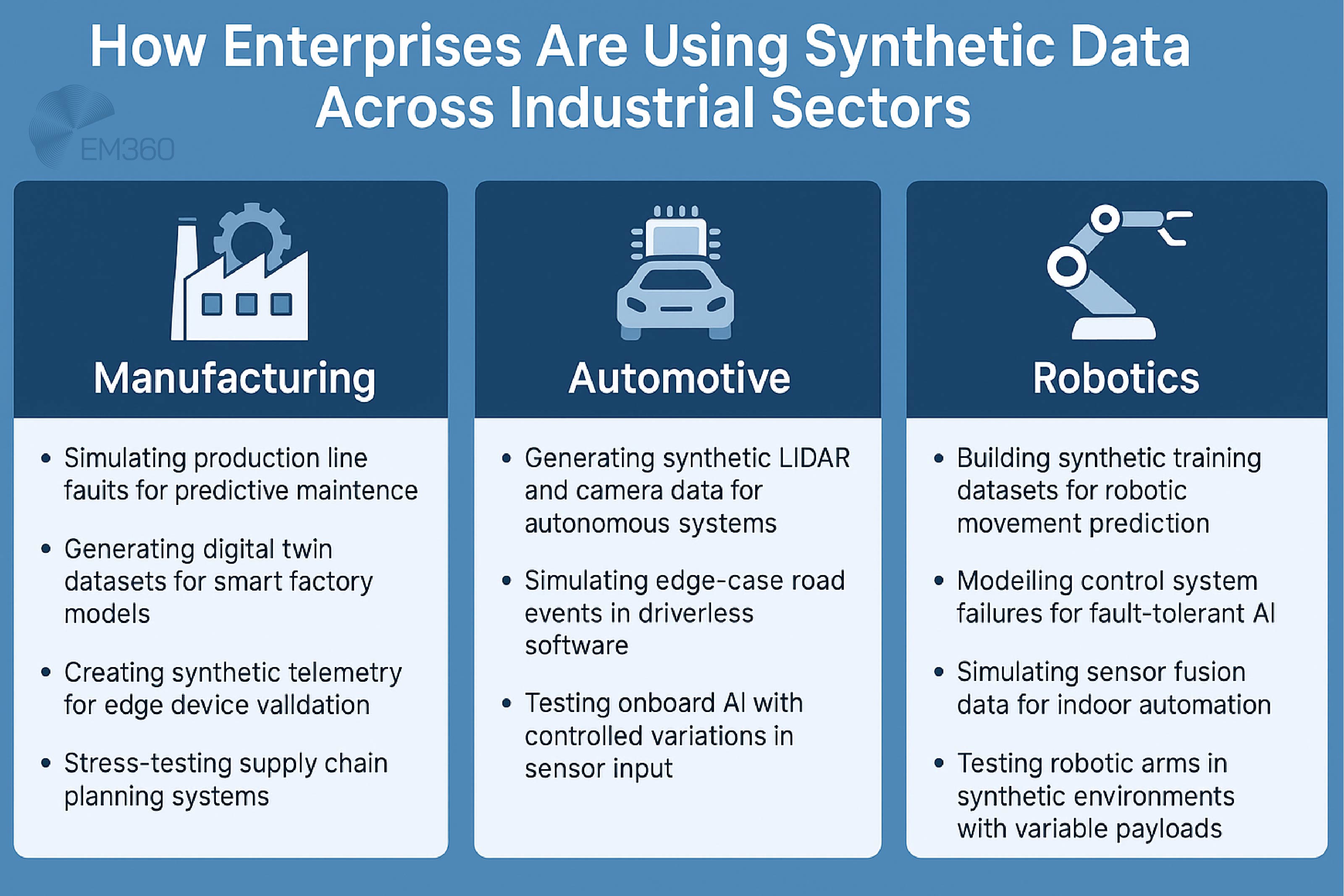

Manufacturing, automotive and robotics: training smarter systems

In industrial environments, real data is often too sparse, too sensitive, or too expensive to collect at scale. Smart factories, autonomous vehicles, and robotics systems all need rich datasets to train algorithms — but capturing every possible edge case or system failure in the real world is slow, risky, or infeasible.

Synthetic data fills this gap with structured, data-driven training scenarios that preserve mechanical patterns, sensor variability, and rare system behaviours.

Examples include:

- Creating failure simulations for predictive maintenance

- Generating synthetic LiDAR and visual input for autonomous navigation

- Building control system simulations for robotics under stress

- Generating synthetic telemetry for IIoT data layers in smart factories

The benefit here is scale. Once validated, synthetic datasets can be produced rapidly and repeatedly, allowing models to evolve without constant new data collection.

Still, accuracy is non-negotiable. If a dataset omits rare but critical failure modes, the model may operate well in test environments but fail in production. These synthetic datasets must be calibrated against engineering constraints and physical system tolerances. Otherwise, the model will learn fiction rather than reality.
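Calibration against physical tolerances and failure coverage can start with two simple checks: readings must lie within what the sensor can physically report, and every required failure mode must appear at least once. This is a sketch; the sensor limits, mode names, and readings are hypothetical.

```python
def validate_sensor_batch(readings, lower, upper, required_modes):
    """Check synthetic sensor readings against physical limits and failure coverage.

    readings: list of (value, mode) tuples, e.g. (71.2, "normal").
    lower/upper: physically possible range for the sensor (hypothetical limits).
    required_modes: failure modes that must appear at least once.
    """
    out_of_range = [value for value, _ in readings if not lower <= value <= upper]
    missing_modes = sorted(set(required_modes) - {mode for _, mode in readings})
    return {"out_of_range": out_of_range, "missing_modes": missing_modes}

readings = [(71.2, "normal"), (140.5, "overheat"), (-300.0, "normal")]
report = validate_sensor_batch(readings, lower=-40.0, upper=150.0,
                               required_modes={"overheat", "bearing_wear"})
print(report)  # {'out_of_range': [-300.0], 'missing_modes': ['bearing_wear']}
```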

Research and development: removing friction from data access

In research-heavy environments — particularly in pharmaceuticals, the public sector, or academia — data access delays are one of the biggest blockers to progress. Legal reviews, DPO oversight, and cross-border restrictions can stall early-stage innovation for months.

Synthetic data offers acceleration. Teams can generate alternatives to real-world data based on small reference samples, allowing initial experiments, proof of concepts, or AI prototyping to begin while governance catches up.

Common use cases:

- Creating datasets for academic ML competitions

- Testing new analytic tools on realistic but unregulated inputs

- Sharing data models with external collaborators without breaching agreements

- Simulating multi-country datasets for global research validation

But governance still applies. Just because the data is synthetic does not mean it is free of regulatory consequence. If the model leaks patterns that originated in sensitive datasets, the use still falls within GDPR scope.

R&D leaders need to work closely with data protection teams to define clear documentation standards. That includes how synthetic data was created, whether personal data was involved in training, and how outputs have been validated against re-identification risks.

Privacy-Preserving AI and the Role of PETs

As synthetic data adoption rises, so does the need to validate its safety. That validation is not just technical. It is legal, operational, and reputational.

For synthetic data to meet regulatory standards and support secure AI development, it must be backed by robust privacy-preserving AI techniques. And that is where privacy-enhancing technologies (PETs) come in.

These tools are no longer experimental. The OECD’s 2023 report confirmed that PETs are now being widely adopted by governments, statistical agencies, and regulated industries to safely use sensitive data at scale. That shift signals a growing expectation: privacy is not optional — it must be engineered in.

PETs provide the mathematical and procedural guarantees that synthetic data alone cannot. They help data scientists verify that re-identification is not reasonably likely.

They allow teams to simulate worst-case scenarios without breaching policy. They also give security leaders a framework to evaluate whether the data in use is truly out of scope or merely obscured.

Some PETs strengthen the quality of the synthetic data itself. Others assess whether the synthetic process preserves too much of the source material. Either way, they allow enterprises to move forward with innovation while staying in alignment with privacy law, industry expectations, and internal risk appetite.

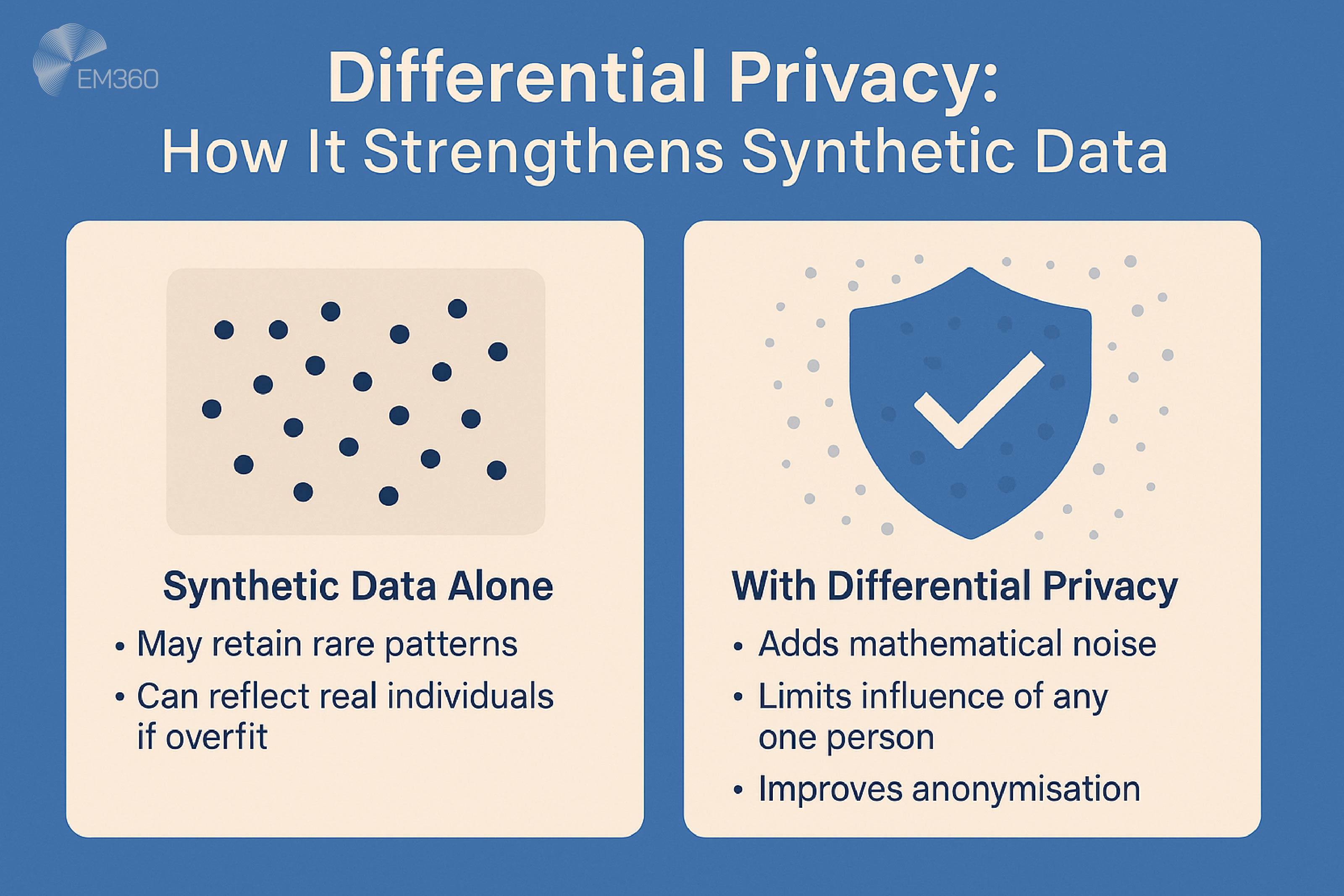

Differential privacy and synthetic generation

The most effective pairing for regulated environments is synthetic data generation with embedded differential privacy. This approach injects calibrated randomness into the generation process, bounding how much any single individual’s record can influence the output.

The result is a dataset that maintains statistical properties — but does not preserve traceable connections to any individual record.

Why this matters: many synthetic datasets claim to be safe because they don't have direct identifiers. However, in reality, outliers, demographic overlaps, or rare behavioural sequences can re-establish linkages. Differential privacy protects against this by deliberately weakening those linkages.

In doing so, it supports GDPR’s core test in Recital 26: that individuals are not identifiable by any means reasonably likely to be used.
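The textbook building block behind this idea is the Laplace mechanism. The sketch below applies it to a single categorical column: count each category, add Laplace noise with scale 1/epsilon, then sample synthetic records from the noisy counts. Adding or removing one person changes one count by at most 1, so the noisy histogram is epsilon-differentially private, and sampling from it is post-processing, which preserves that guarantee. It assumes the category list is fixed in advance rather than read from the data. Real DP synthesisers are considerably more involved, and production systems should use a vetted DP library, since naive floating-point noise generation has known weaknesses.

```python
import random

def dp_histogram(records, categories, epsilon, rng):
    """Release category counts with Laplace noise (epsilon-differential privacy).

    Sensitivity is 1 (one person changes one count by at most 1), so noise
    with scale 1/epsilon gives epsilon-DP for the whole histogram.
    `categories` must be fixed in advance, not derived from the data.
    """
    noisy = {}
    for c in categories:
        true_count = sum(1 for r in records if r == c)
        # The difference of two Exponential(rate=epsilon) draws is Laplace(0, 1/epsilon).
        noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
        noisy[c] = max(0.0, true_count + noise)  # clamping is post-processing
    return noisy

def sample_synthetic(noisy_counts, n, rng):
    """Draw n synthetic records in proportion to the noisy counts."""
    cats = list(noisy_counts)
    return rng.choices(cats, weights=[noisy_counts[c] for c in cats], k=n)

rng = random.Random(7)
records = ["A"] * 50 + ["B"] * 30 + ["C"] * 20
noisy = dp_histogram(records, ["A", "B", "C"], epsilon=1.0, rng=rng)
synthetic = sample_synthetic(noisy, 100, rng)
```

Smaller epsilon means more noise and stronger privacy at the cost of fidelity; choosing and documenting that budget is the auditable part of the process.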

The SafeSynthDP framework, published in late 2024, demonstrated how differentially private synthetic data consistently scored higher on privacy audits than standard synthetic models. All without significantly degrading performance in downstream predictive modelling tasks.

Many regulated sectors, including life sciences, fintech, and national statistical offices, are now formalising the adoption of these techniques.

For privacy-preserving AI to be taken seriously by regulators, it must move beyond buzzwords. Differential privacy provides a mathematically rigorous foundation: rather than showing only that your data has been transformed, it lets you show that the privacy loss is provably bounded by a stated budget, and that this bound can be audited.

Toolkits and platforms with built-in compliance workflows

The rise of synthetic data has led to a new generation of tools promising automated privacy. But not all of them are created equal. Security and governance teams need platforms that go beyond generation, offering baked-in controls, documentation support, and compliance-ready outputs.

Some examples worth evaluating:

- Gretel.AI: Offers pre-trained models and workflows for creating synthetic data across structured, time-series, and unstructured formats. Its compliance suite includes entity classification, risk scoring, and synthetic lineage mapping — all designed to support audits, DPIAs, and cross-border data transfer assessments.

- Synthetic Data Vault (SDV): An open-source framework originally developed at MIT. SDV provides a modular pipeline for generating, validating, and benchmarking synthetic datasets. Its governance utility lies in transparency — all steps are configurable and reproducible, making it ideal for R&D-heavy environments or organisations with internal privacy engineering teams.

- MOSTLY.AI: Focused on synthetic data generation tools for enterprise clients, especially in banking, insurance, and telco. MOSTLY.AI models are optimised for GDPR compliance and come with integrated privacy risk metrics. The platform also includes bias detection, ensuring that synthetic datasets do not reinforce skewed outcomes in algorithmic decisions.

When selecting a synthetic data platform, CISOs and compliance leads should prioritise tools that embed documentation in the workflow. That means model cards, re-identification tests, and detailed audit trails that can be reviewed internally or shared with regulators if needed.

A platform that generates data but cannot prove its safety is a liability. A platform that can demonstrate exactly how privacy was preserved — and what thresholds were tested — is an asset.

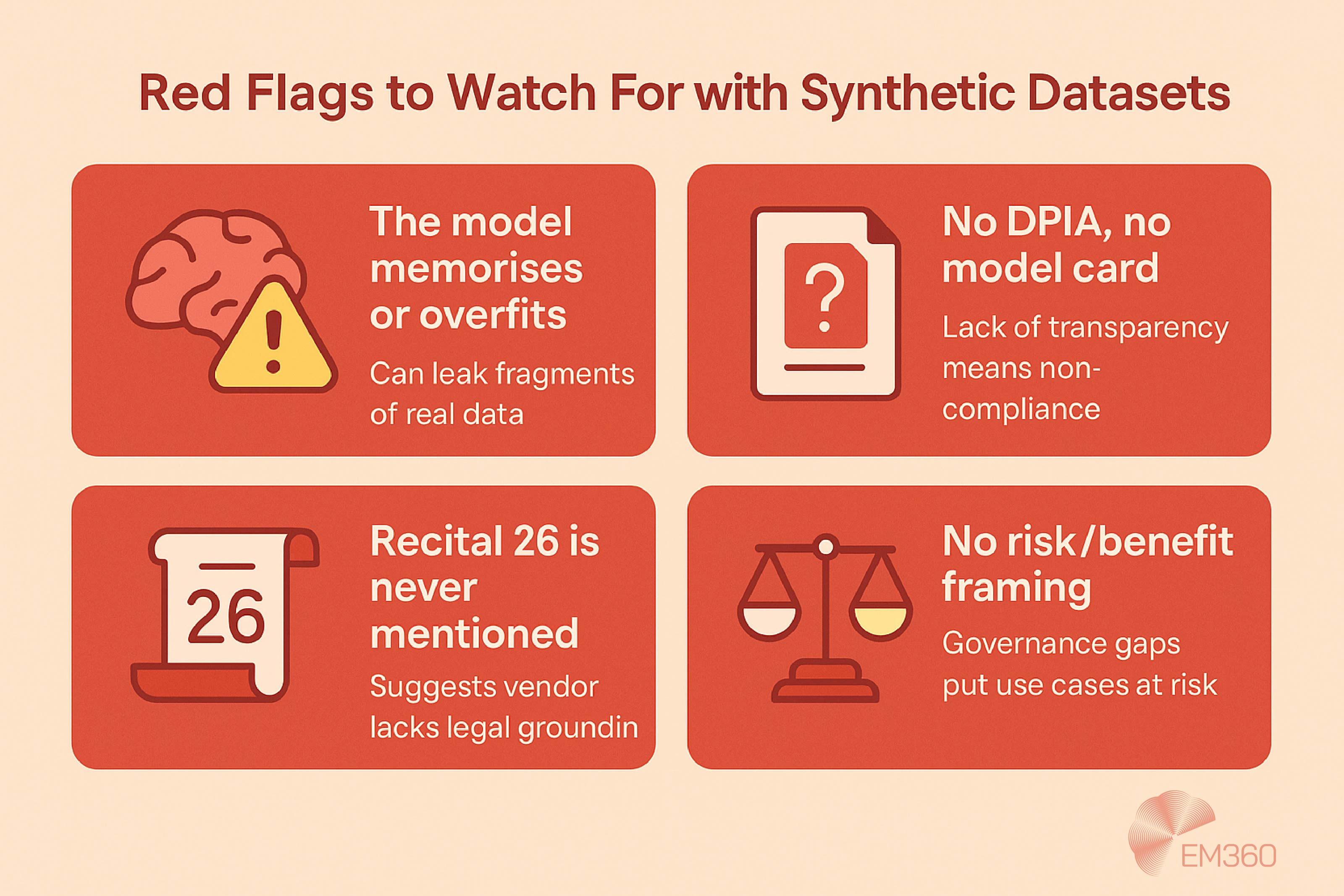

Red Flags and Governance Gaps to Watch

Synthetic data is often presented as the compliance-friendly answer to AI and analytics. But when vendors overpromise or teams rush ahead without safeguards, synthetic datasets can quietly reintroduce the same risks they were meant to eliminate.

For CISOs and privacy leads, the question has shifted from whether they can use synthetic data to whether they should, and under what conditions.

Below is a checklist of warning signs that a synthetic data initiative may be non-compliant, poorly designed, or expose the organisation to hidden liabilities. These are the patterns to look for, challenge, and document.

1. The model memorises — or overfits — the original data

High-fidelity synthetic data is valuable. But if a model has memorised the training data, the synthetic output may still include fragments that directly reflect real individuals in the source dataset.

Indicators:

- Repeated sequences or rare edge-case combinations

- Output that aligns too closely with a known record

- Lack of variation in low-frequency patterns

Any synthetic data that behaves like a proxy for identifiable personal data is not exempt from regulation. That includes datasets used for testing, modelling, or internal benchmarking.
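The first two indicators can be screened with a distance-to-closest-record (DCR) check: for each synthetic row, find the nearest real row and count exact copies and suspiciously close near-duplicates. A minimal sketch, assuming numeric features already scaled to comparable ranges; the rows and the 0.05 threshold are hypothetical, and the brute-force search would need an index for large datasets.

```python
import math

def memorisation_report(real, synthetic, near_threshold):
    """Count synthetic rows that copy, or sit suspiciously close to, a real row."""
    exact = near = 0
    for s in synthetic:
        dcr = min(math.dist(s, r) for r in real)  # distance to closest real record
        if dcr == 0:
            exact += 1
        elif dcr < near_threshold:
            near += 1
    return {"exact_copies": exact, "near_duplicates": near, "total": len(synthetic)}

# Hypothetical pre-scaled features, e.g. (age / 100, income / 100_000).
real = [(0.34, 0.52), (0.61, 0.18)]
synthetic = [(0.34, 0.52), (0.60, 0.18), (0.45, 0.30)]
print(memorisation_report(real, synthetic, near_threshold=0.05))
# {'exact_copies': 1, 'near_duplicates': 1, 'total': 3}
```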

2. No DPIA, no model card, no transparency

A privacy-preserving AI pipeline is only defensible if the process behind it is clear. If a vendor cannot produce a data protection impact assessment (DPIA), document the generation logic, or show how risk was assessed, that should stop the conversation immediately.

A minimum viable compliance trail should include:

- The source and format of the original dataset

- The generation method and parameters

- Risk analysis results and re-identification scores

- How fairness and bias were evaluated

This is not just best practice. It is often a legal requirement under GDPR’s accountability principle.
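The minimum trail listed above lends itself to a completeness check before any dataset is approved. A sketch under assumptions: the trail is stored as a dictionary, and the field names are hypothetical labels for the four items in the list.

```python
# Hypothetical field names mapped to the four minimum trail items.
REQUIRED_TRAIL_FIELDS = {
    "source_dataset": "source and format of the original dataset",
    "generation_method": "generation method and parameters",
    "risk_results": "risk analysis results and re-identification scores",
    "fairness_evaluation": "how fairness and bias were evaluated",
}

def missing_trail_items(trail):
    """Return descriptions of required trail items that are absent or empty."""
    return [desc for field, desc in REQUIRED_TRAIL_FIELDS.items()
            if not trail.get(field)]

trail = {
    "source_dataset": "claims_2023.parquet",
    "generation_method": "",             # recorded but left blank
    "risk_results": {"exact_copies": 0},
}
print(missing_trail_items(trail))
# ['generation method and parameters', 'how fairness and bias were evaluated']
```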

3. Recital 26 is never mentioned

Recital 26 of the General Data Protection Regulation sets the standard for what qualifies as truly anonymised. It is not a side note. It is the legal foundation for claiming synthetic data is out of scope.

If a platform or consultant offering synthetic data generation tools avoids the subject or uses vague language like “de-identified” or “GDPR-safe”, proceed with caution. Neither term has a defined meaning under GDPR, and both may be used to mask non-compliant methods.

Ask explicitly:

- What legal standard of anonymisation does this process meet?

- How do you validate outputs against Recital 26 criteria?

- What types of synthetic data are still regulated under GDPR?

If they cannot answer clearly, the data is not safe to use.

4. There is no risk/benefit framing in the use case

Even well-generated synthetic data can cause harm if used inappropriately. That includes:

- Replacing real-world training data in safety-critical systems

- Using synthetic datasets to benchmark high-stakes model performance

- Building AI outputs into customer-facing services without validation

A mature governance team should assess not just whether data is synthetic but whether the privacy benefit outweighs the functional, ethical, or operational risks. If there is no framework in place to do that, then the organisation is relying on blind trust.

Governance Framework: What Security and Legal Teams Should Do Next

For synthetic data to be safe, it must be governed. The entire lifecycle of the data, from its creation to its audit, sharing, and model development, requires governance.

Security and legal teams have a shared responsibility here. Legal defines the regulatory boundaries. Security ensures technical compliance and containment. And both must align with data teams on usage intent, validation, and risk mitigation.

This is not just about avoiding a breach. It is about ensuring that every dataset claimed to be anonymous or out of scope truly behaves that way, even under adversarial conditions.

Below is a governance blueprint to help structure decision-making, approvals, and internal alignment for any organisation planning to use synthetic data.

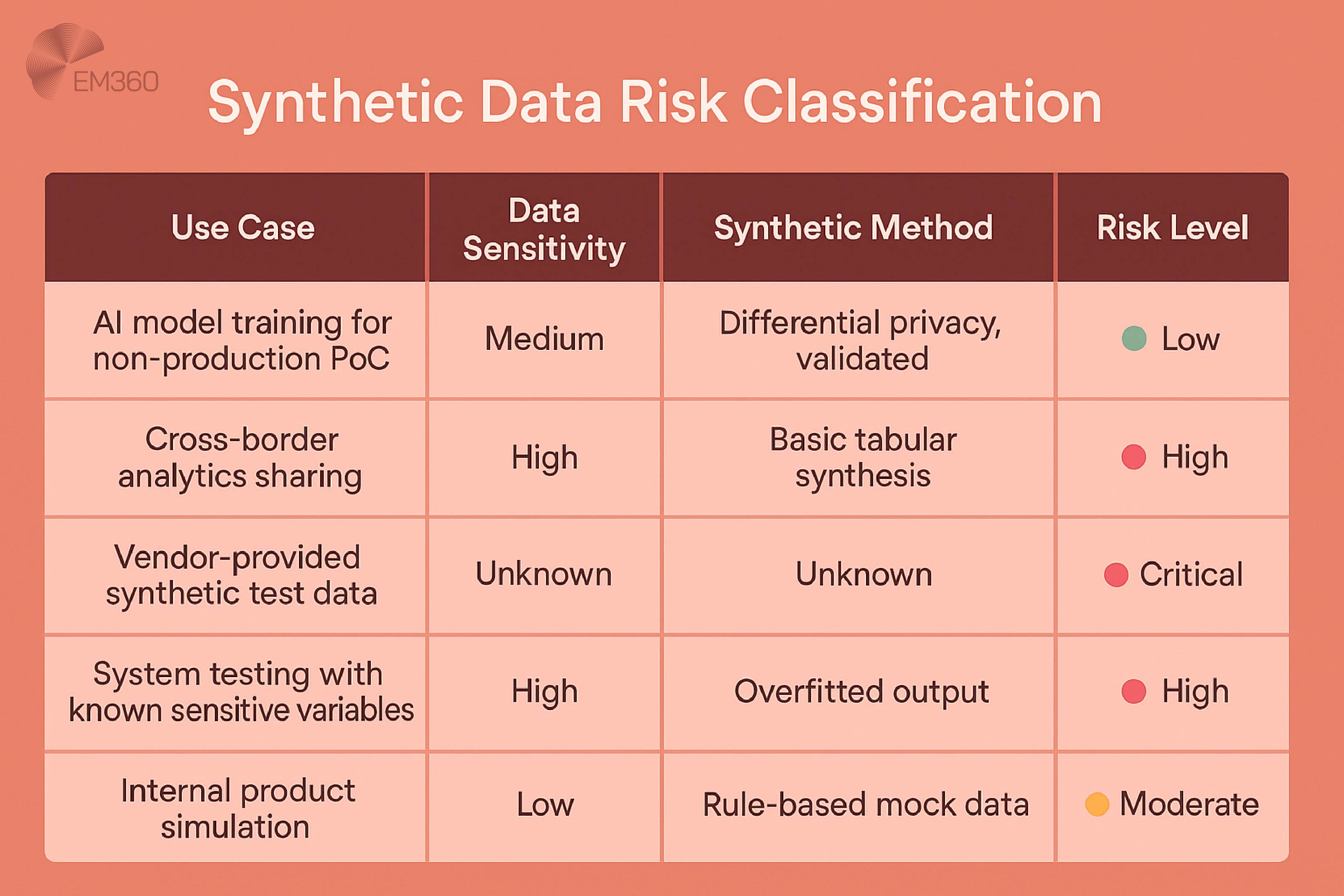

1. Risk classification: not all synthetic data is equal

Start with a clear risk matrix to classify synthetic data use cases. This helps prioritise oversight, shape the documentation depth, and inform board-level risk acceptance.

Risk increases when:

- The personal information in the source dataset is high-risk or special category

- The training data used for generation was poorly anonymised or undocumented

- The synthetic method is not explainable or auditable

- Output will be shared externally or drive decision-making

Security leaders should ensure that every synthetic data project has a documented classification, updated as use evolves.
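The four risk drivers above can be encoded as a simple, documented classification. The tiers and thresholds in this sketch are hypothetical; an organisation would set its own, and might treat special category data as high risk on its own.

```python
from dataclasses import dataclass

@dataclass
class SyntheticUseCase:
    special_category_source: bool     # source holds high-risk or special category data
    undocumented_training_data: bool  # training data poorly anonymised or undocumented
    unexplainable_method: bool        # generation method not explainable or auditable
    external_or_decision_use: bool    # output shared externally or drives decisions

def classify(use_case: SyntheticUseCase) -> str:
    """Map the four risk factors to a tier (illustrative thresholds)."""
    score = sum([
        use_case.special_category_source,
        use_case.undocumented_training_data,
        use_case.unexplainable_method,
        use_case.external_or_decision_use,
    ])
    if score >= 3:
        return "high"
    if score >= 1:
        return "medium"
    return "low"

print(classify(SyntheticUseCase(True, False, False, True)))  # medium
```

Storing the inputs alongside the tier keeps the classification reviewable as the use case evolves.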

2. Governance structure: who approves and who owns the risk

A synthetic data pipeline should not sit solely with engineering or data science. It should be integrated into existing governance models, ideally within the same framework that handles data minimisation, DPIAs, and third-party data sharing.

Recommended roles:

- Data Protection Officer: signs off on legal scope, Recital 26 thresholds, and lawful basis

- CISO or Security Architect: validates risk controls, synthetic tooling, and output security

- Data Science Lead: owns generation method, performance metrics, and technical documentation

- Compliance or Risk Officer: flags regulatory impacts, records accountability, and tracks reuse exposure

Use a centralised intake form or review board to assess each proposed use of synthetic data. Projects involving unvalidated tools, high-risk categories, or external data sharing should be escalated to privacy or legal functions.

This is not just bureaucracy. It creates accountability. Without it, even privacy-preserving AI becomes a theoretical claim rather than an operational standard.

3. Internal audit checklist: validating that synthetic data stays compliant

To support continuous compliance, organisations should develop or adopt a lightweight internal maturity model. This allows security and privacy leaders to benchmark how well synthetic data is governed and to identify where gaps need to be closed.

Key audit questions:

- Is there a record of what data was used to generate synthetic outputs?

- Was the generation method validated against Recital 26 requirements?

- Have privacy risks (e.g., re-identification, demographic leakage) been scored?

- Can we demonstrate how outputs are high quality without being high risk?

- Is there documentation of the purpose, access controls, and sharing permissions?

- Was the output dataset tested for edge-case memorisation or statistical drift?

Answers should be stored with the synthetic dataset as part of its lineage. If it is reused for future AI development or shared with third parties, all documentation must travel with it.

Mature organisations go further, running red team simulations to test whether synthetic outputs can be reverse engineered. This provides direct evidence that personal information could not be extracted under realistic attack conditions, which is exactly what regulators want to see.
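A simple red team exercise is a distance-based membership inference attack: the attacker guesses that a record was in the training data if it sits very close to some synthetic row. This is a simplified sketch of that technique, assuming a balanced set of known training records ("members") and held-out records from the same population that were never used for training; all data and the threshold are hypothetical. Accuracy near 0.5 means the attacker does no better than a coin flip; accuracy well above 0.5 is evidence of leakage.

```python
import math

def dcr(record, synthetic):
    """Distance from a record to its closest synthetic row."""
    return min(math.dist(record, s) for s in synthetic)

def membership_attack_accuracy(members, non_members, synthetic, threshold):
    """Guess 'member' when a record lies closer than threshold to the synthetic data."""
    correct = sum(dcr(r, synthetic) < threshold for r in members)
    correct += sum(dcr(r, synthetic) >= threshold for r in non_members)
    return correct / (len(members) + len(non_members))

members = [(0.1, 0.1), (0.5, 0.5)]      # used to train the generator
non_members = [(0.9, 0.1), (0.1, 0.9)]  # held out, same population

leaky = [(0.1, 0.1), (0.5, 0.5)]        # generator copied its training rows
safer = [(0.3, 0.3), (0.7, 0.7)]
print(membership_attack_accuracy(members, non_members, leaky, threshold=0.05))  # 1.0
print(membership_attack_accuracy(members, non_members, safer, threshold=0.05))  # 0.5
```

A result near 0.5 on one attack is not proof of safety; it is one piece of evidence to record alongside other tests in the dataset's lineage.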

When synthetic data is treated like a first-class governance object, it becomes a strategic asset. When it is treated like a shortcut, it becomes a hidden liability.

The difference lies in ownership, documentation, and proof. Compliance leaders who build these frameworks now will be better positioned to scale privacy-centric AI in the months ahead.

Final Thoughts: Governance Is What Makes Synthetic Data Safe

The real value of synthetic data lies in what surrounds it. Without documentation, oversight, and validation, even the most advanced tools can replicate risk instead of removing it. The line between compliant and non-compliant use is rarely technical — it is procedural.

For CISOs, data leaders, and legal teams, the opportunity is clear. With the right controls in place, synthetic data strategies under GDPR can unlock faster model development, broader data sharing, and safer AI experimentation. But that value only holds if the data is proven to be out of scope, not just assumed to be.

This is not about slowing innovation. It is about making privacy-preserving AI operationally viable — and defensible when challenged. Every organisation working with personal data at scale will face scrutiny. Those with a governance foundation in place will be ready to answer it.

To explore how other security and data leaders are navigating this balance, visit EM360Tech’s Security hub. You’ll find expert-led frameworks, failure forensics, and real-world case studies that can help turn compliance pressure into strategic advantage.
