For all the noise around microservices, serverless computing, and AI-powered edge systems, much of the modern enterprise still runs on a foundation that predates them: the client-server model.

At its core, client-server architecture is simple. A client requests something — data, processing, access — and a server delivers it. But scale that across tens of thousands of devices, layered networks, hybrid deployments, and distributed teams, and you’re no longer talking about textbook simplicity.

You’re talking about a deeply embedded structure on which banking systems, ERPs, government platforms, and supply chain tools around the world are still built. What’s changed between then and now is the context.

The client-server computing architecture of today isn’t a relic, though some would tell you otherwise. Instead, it’s a framework that has been reshaped and retooled to stay effective alongside modular server infrastructures, multi-cloud deployments, and real-time visibility.

It remains critical not because it’s flashy but because it’s foundational, and it is quietly being optimised to meet the demands of performance, resilience, and governance at enterprise scale.

Client-server architecture is still here. The difference is that now, it's being asked to evolve.

The Basic Concept, in an Enterprise Context

At its most fundamental level, client and server architecture is a method of splitting responsibilities across a network. A client makes a request — typically for data or functionality — and a server processes and fulfils that request. It’s the handshake that underpins everything from loading a website to executing a dashboard query.
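As a minimal sketch of that handshake, the Python below runs one request and one response over a connected socket pair inside a single process. The `get_balance` action, the `ACCOUNTS` table, and the one-JSON-object-per-line framing are assumptions made for illustration, not part of any particular product.

```python
import json
import socket
import threading

# Hypothetical data the server owns; the client never touches it directly.
ACCOUNTS = {"acc-1": 120}


def handle(request: dict) -> dict:
    """Server-side logic: turn one request into one response."""
    if request.get("action") == "get_balance":
        balance = ACCOUNTS.get(request.get("account"), 0)
        return {"status": "ok", "balance": balance}
    return {"status": "error", "reason": "unknown action"}


def serve_one(conn: socket.socket) -> None:
    """Server: read one JSON line, process it, write one JSON line back."""
    with conn, conn.makefile("rw") as stream:
        request = json.loads(stream.readline())
        stream.write(json.dumps(handle(request)) + "\n")
        stream.flush()


def send_request(conn: socket.socket, payload: dict) -> dict:
    """Client: send one JSON line and wait for the server's reply."""
    with conn, conn.makefile("rw") as stream:
        stream.write(json.dumps(payload) + "\n")
        stream.flush()
        return json.loads(stream.readline())


def demo() -> dict:
    # socketpair() stands in for a real network connection.
    client_sock, server_sock = socket.socketpair()
    server = threading.Thread(target=serve_one, args=(server_sock,))
    server.start()
    reply = send_request(client_sock, {"action": "get_balance", "account": "acc-1"})
    server.join()
    return reply
```

Calling `demo()` plays both roles once. In an enterprise deployment the same split holds, but the socket pair is replaced by load balancers, regions, and services sitting between the two ends.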

But this basic interaction is no longer happening between a single desktop and a local server rack. In enterprise environments, that handshake might involve multiple regions, hybrid infrastructure, edge nodes, and containerised services — all coordinated to deliver one seamless response.

Understanding client-server components today means understanding how the role of each has expanded, and how the model has changed to build flexibility in.

What defines a client and a server today?

In most early explanations, clients are user-facing devices — desktops, laptops, and phones — while servers are centralised machines sitting in a back room or data centre. That still holds true at the surface, but enterprise infrastructure has become far more distributed.

Today’s “client” might be a mobile device, a branch office terminal, a smart sensor, or even another application. And today’s “server” might be physical, virtual, containerised, or entirely cloud-based.

In hybrid environments, some client-server components are now deployed at the edge — closer to where data is generated — while others remain centralised for stability or compliance. Systems may offload real-time processing to edge nodes while relying on central servers for analytics, backups, or authorisation.

The architecture hasn’t disappeared; it’s just expanded to meet the realities of distributed workloads and low-latency expectations.

How client-server differs from peer-to-peer and microservices

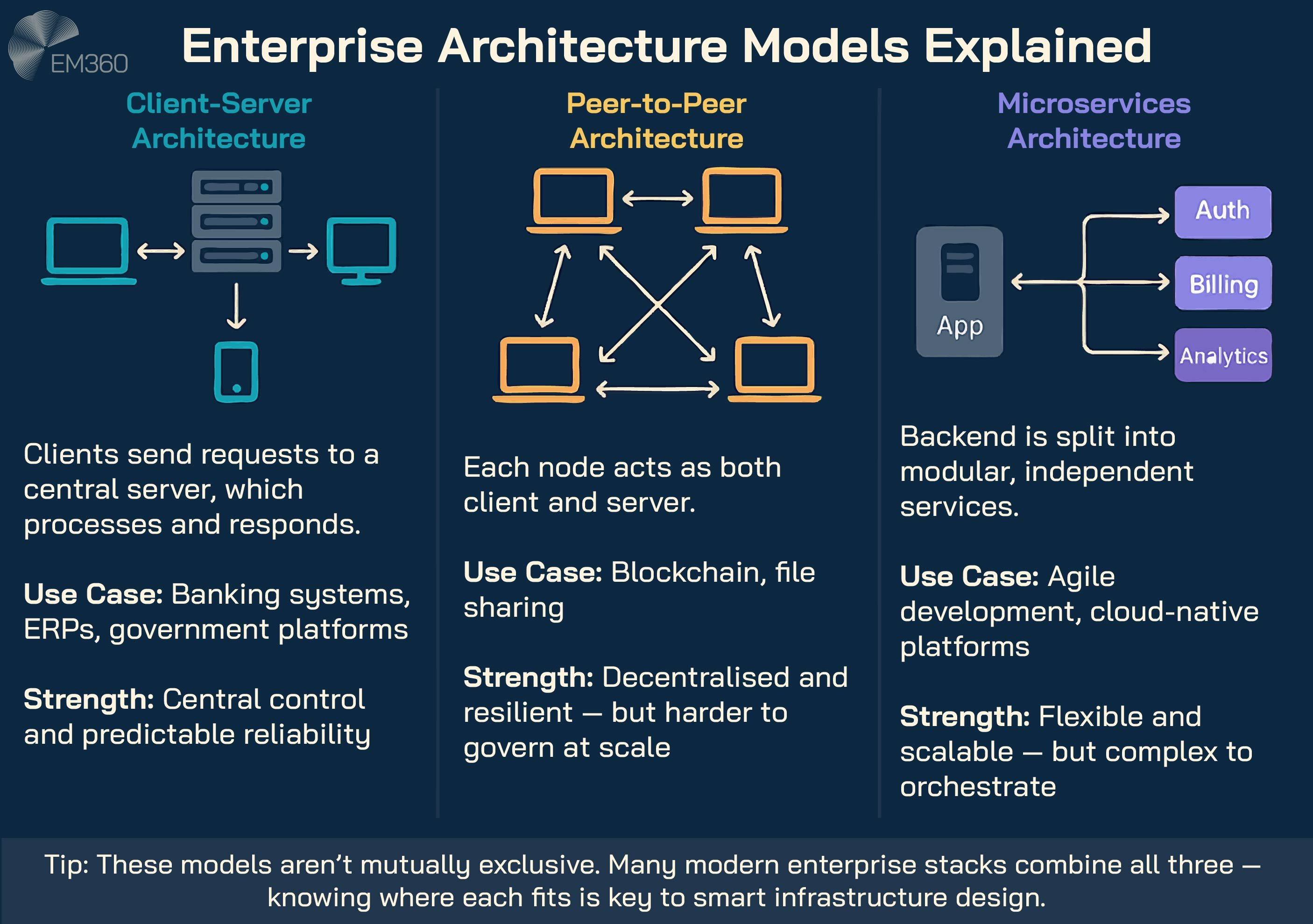

It’s common to see client-server compared to peer-to-peer or microservices models, but the distinctions are more structural than ideological.

In a peer-to-peer network, there is no central authority, and each node acts as both a client and a server. This model gives you flexibility and resilience, but it also makes security, consistency, and governance harder, especially at scale.

So it works well in decentralised platforms or blockchain settings, but not in systems where control and oversight are important.

Microservices, on the other hand, are often misunderstood as a replacement for client-server. They are not. They are a way of architecting backend services to be modular, independently deployable, and loosely coupled.

These services still interact through client-server protocols — whether HTTP, gRPC, or messaging queues — but they do so within a broader application scope.

So while microservices may modernise the server side, the underlying client-server components still exist. And it’s not about choosing one over the other. It’s about knowing how they fit together.

Anatomy of the Modern Client-Server Stack

Modern enterprise systems don’t just run on client-server architecture — they are layered with deployment models, placement strategies, and access mechanisms that shape how responsive, secure, and cost-effective those systems are.

This isn’t about a single server room anymore. It’s about how infrastructure is orchestrated to serve a globally distributed business, without breaking performance or visibility.

Server types and deployment models

At the heart of the stack sits the server. In today’s enterprise, that could mean several things — a traditional on-premise machine, a virtual server in a public cloud, a modular unit dropped into a regional data centre, or a containerised instance deployed on demand.

Each of these options carries different trade-offs. On-premise servers give you maximum control but less flexibility. Virtualised servers reduce hardware dependencies but require smart orchestration to avoid sprawl.

Cloud-hosted and modular deployments offer scalability but can create new dependencies and cost management challenges. Choosing the right fit isn’t about picking one model.

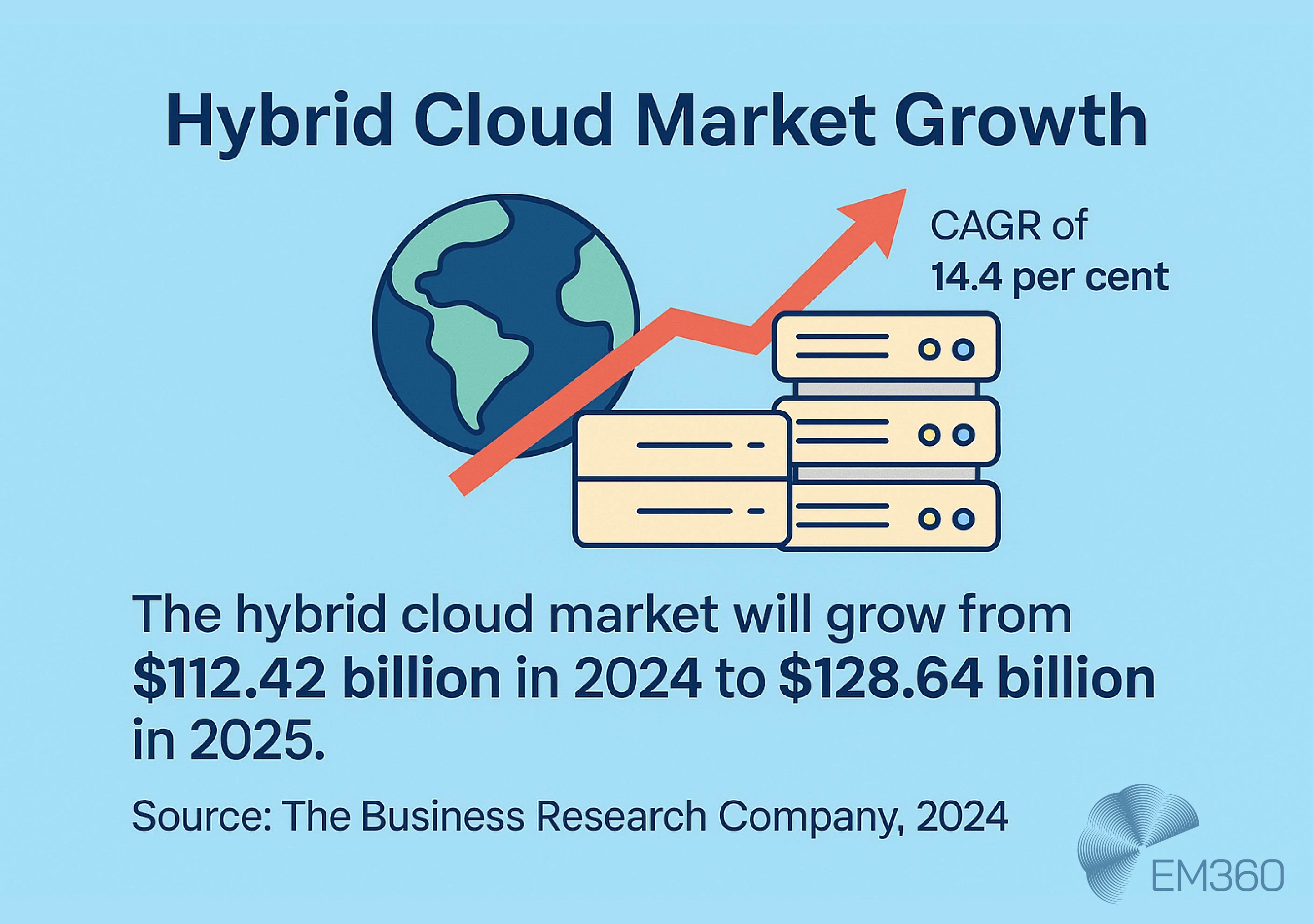

Most enterprises now blend all of them into a hybrid architecture, shifting workloads based on cost, security, latency, or compliance needs.

And it’s a strategy that’s rapidly scaling across industries. The hybrid cloud market is projected to grow from $112.42 billion in 2024 to $128.64 billion in 2025, with a compound annual growth rate of 14.4 per cent, underscoring its central role in enterprise infrastructure planning.

Networking and latency: Why placement matters

Where your servers live — and how they connect — is just as important as what they’re running.

For latency-sensitive applications like real-time analytics, video processing, or transactional systems, geography matters. Placing processing power closer to the edge can reduce lag and free up bandwidth, but it also requires tight coordination across nodes.

That means robust routing, failover paths, and an observability layer that can track and manage everything in motion. With cloud server integration and regional deployments becoming the norm, the network is now a performance layer, not just a connectivity one.

And that has a direct impact on how teams plan availability, redundancy, and server workload balancing.

Client access models: Thin, thick, and now distributed

On the client side, access models have evolved just as much. The old distinction between thin and thick clients still applies — thin clients offload most processing to the server, while thick clients carry more local logic and resources — but the lines have blurred.

Today’s distributed clients might be mobile apps, browser-based tools, embedded systems, or remote agents that operate in low-bandwidth environments. They are still clients in the architectural sense, but they often contain lightweight processing capabilities, adaptive sync protocols, and endpoint protection that would once have been considered server-side territory.

It’s not just about how they connect. It’s about how much autonomy they need, how much control you retain, and how effectively you can monitor and secure them. That’s where unified IT monitoring comes in, giving teams visibility across everything from legacy endpoints to ephemeral containers.

Why Client-Server Architecture Persists in Enterprise Systems

Client-server architecture isn’t outdated — it’s embedded. And in enterprise environments where reliability, security, and governance still carry weight, it continues to provide the foundation for core business operations.

The model has evolved, but the value proposition remains the same: predictable performance, role-based access control, centralised oversight, and the ability to scale with purpose.

Where it still dominates: ERP, finance, healthcare, and government

You’ll still find client-server systems at the heart of sectors where consistency is non-negotiable. Enterprise resource planning platforms, core banking engines, electronic health records, and government data services all rely on client-server logic.

These systems are too critical — and too interdependent — to be rapidly deconstructed. They require high transaction integrity, persistent state, and rigorous uptime controls, making them a natural fit for structured, well-architected enterprise infrastructure.

And because many of these applications interact with legacy systems or regulated data sources, the architecture needs to support long-term compatibility without compromising on control.

Reliability, security, and control in regulated industries

In sectors bound by compliance frameworks — from HIPAA and PCI DSS to GDPR and beyond — security architecture is not a bolt-on. It’s built in.

Client-server setups make it easier to centralise authentication, monitor access logs, and enforce security policies at the server level. That gives teams a level of control and auditability that distributed or peer-to-peer models often struggle to match.

It also simplifies disaster recovery and continuity planning. If the client side fails, the server retains the data. If the server is compromised, segmented architecture can help contain the blast radius. And because these systems are built to operate in known environments, they’re easier to test, harden, and verify.

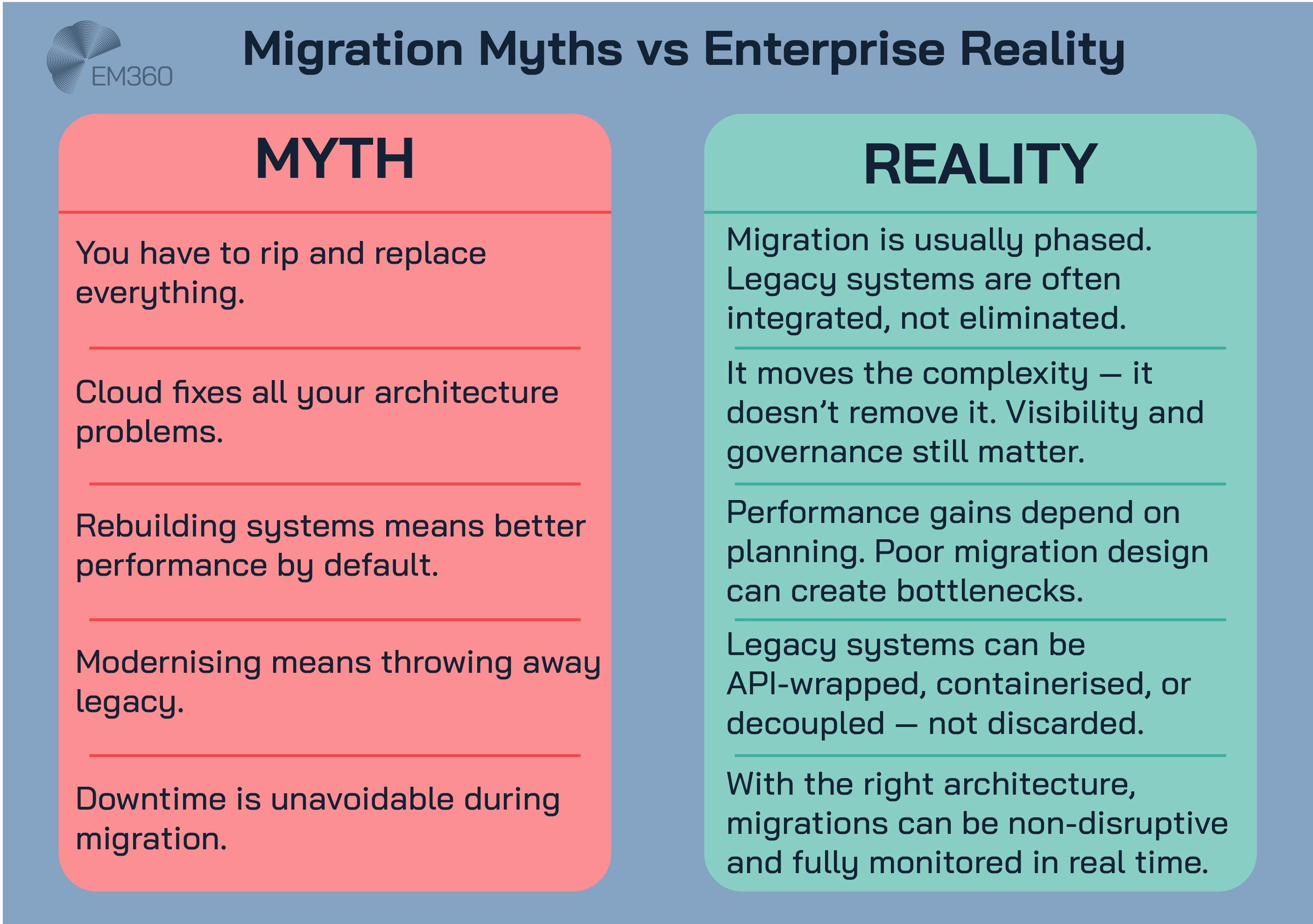

The myth of full replacement: Why migration is gradual

There’s a persistent idea in tech circles that legacy architectures like client-server are just waiting to be “ripped and replaced”. The reality is slower — and more strategic.

For most enterprises, these systems are too valuable to abandon outright. The risk of disruption, data loss, or workflow fragmentation outweighs the theoretical benefits of an overnight migration.

That’s why many modernisation strategies favour augmentation over replacement. Client-server systems are containerised, integrated via APIs, or wrapped in orchestration layers that make them behave like cloud-native services — even if their core remains unchanged.

Because when the goal is application reliability, the right move isn’t always to rebuild. Sometimes it’s to reframe.

Client-Server vs Modern Architecture Models — A Strategic Comparison

Modernising infrastructure isn’t about throwing out the old and embracing the new at full speed. It’s about understanding what each model does well, where it fits, and how to make them work together across a real-world tech stack.

Client-server isn’t wrong. It’s just one piece of a bigger puzzle.

| Model | Strengths | Limitations | Best Fit |
| --- | --- | --- | --- |
| Client-Server | Predictable, controllable, reliable | Less flexible, slower to scale | Regulated industries, core platforms |
| Microservices | Modular, independently deployable, resilient | Complex orchestration, more moving parts | Agile environments, product development |
| Containerised Architecture | Portable, consistent across environments | Requires robust tooling and monitoring | Hybrid/multi-cloud, DevOps-first organisations |
| Serverless | Cost-efficient, auto-scaling, fast to deploy | Cold starts, vendor lock-in, limited control | Event-driven apps, short bursts of processing |

Client-server vs microservices and containerisation

Microservices and containerisation are often linked — and for good reason. Microservices rely on being independently deployable, while containers provide the isolation and portability needed to make that work.

Where they differ from client-server is in scale and autonomy. A traditional client-server application tends to be monolithic, with a centralised server handling logic and requests. Microservices split that logic into small, purpose-built services, each responsible for a specific function.

That shift makes systems more agile and easier to evolve — but also more complex to monitor, test, and maintain. If visibility is limited, or if teams lack the right observability tools, the benefits can quickly become liabilities.

This is why many enterprises choose to modernise the server layer incrementally, rather than rebuild the entire application into microservices from day one.

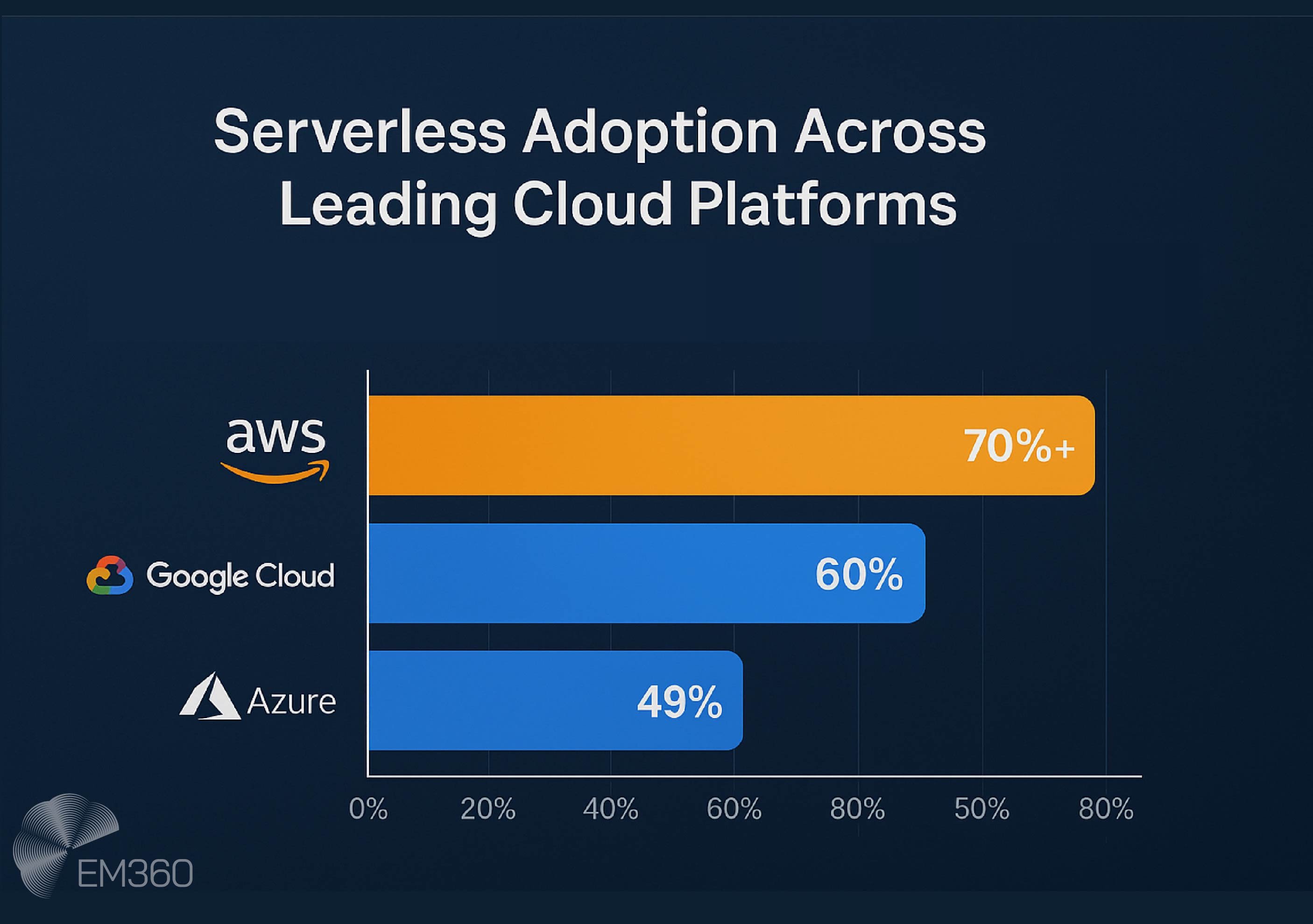

Client-server vs serverless computing models

Serverless doesn’t mean no servers. It just means you don’t manage them.

Serverless computing allows developers to run code in response to events — like file uploads, API requests, or database triggers — without provisioning or managing infrastructure. The cloud provider handles all that behind the scenes.

It’s powerful for short, stateless tasks and spiky workloads but less suited to long-running processes or systems with persistent state.
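To make "short and stateless" concrete, here is a sketch of an event handler in Python. The `(event, context)` signature mirrors a convention used by function-as-a-service platforms such as AWS Lambda; the event fields (`file_name`, `size_bytes`) and the 10 MB review threshold are hypothetical.

```python
def handle_upload(event: dict, context: object = None) -> dict:
    """Runs once per file-upload event and keeps no state between calls."""
    # Everything the function needs arrives in the event itself
    # (hypothetical fields: file_name, size_bytes).
    size_mb = event["size_bytes"] / (1024 * 1024)
    return {
        "file_name": event["file_name"],
        "size_mb": round(size_mb, 2),
        "needs_review": size_mb > 10,  # illustrative threshold only
    }
```

Because nothing is kept in memory between invocations, the platform is free to run zero, one, or many copies at once. A long-lived session or an in-memory ledger would not survive that model, which is why persistent-state systems tend to stay on a conventional server.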

Compared to client-server models, serverless offers speed, elasticity, and cost-efficiency. But it also introduces concerns around cold start latency, platform limitations, and vendor lock-in.

That makes it better for extensions and offloaded logic, rather than full-system replacements in most enterprise environments. Adoption reflects that nuance. Over 70 per cent of AWS customers and 60 per cent of Google Cloud customers currently use at least one serverless solution, with Azure following at 49 per cent.

Serverless is becoming a standard part of cloud strategy — but not necessarily a replacement for client-server architecture.

When legacy is still the right call — and how to modernise it

Legacy doesn’t mean broken. It just means built before cloud-native was the default.

Some of the most stable, mission-critical systems in enterprises still run on older stacks. They perform consistently, integrate cleanly with upstream systems, and support workflows that haven’t changed in a decade and don’t need to.

Where it gets risky is when those systems are locked out of innovation. That’s why modernisation often means re-platforming, not rewriting. Wrapping client-server systems in modular architecture and API layers allows them to function like newer systems without exposing the business to unnecessary risk.

The goal of legacy system migration should never be transformation for its own sake. It should be control, continuity, and long-term adaptability.

Evolving the Model — What a Hybrid Client-Server Architecture Looks Like Today

Client-server isn’t locked in the past. It has evolved into something more flexible, more distributed, and more strategic. In a world where business operations stretch across cloud regions, edge locations, and containerised environments, the client-server handshake hasn’t disappeared. It’s just happening across a much wider surface area.

This shift isn’t about abandoning the model. It’s about adapting it.

Using modular servers and hybrid clouds to extend the model

One of the most significant changes is the rise of hybrid cloud as the new default. Enterprises no longer choose between on-premise or cloud — they use both. Client-server applications may still anchor their logic in a core server environment, but the way that environment is built and deployed has changed.

Modular servers are now being used to create fast-deploy infrastructure in regional data centres. They offer the control of on-premise with the speed and agility of the cloud. Combined with virtualisation and orchestration layers, they can be positioned wherever latency, data sovereignty, or compliance demands dictate.

Instead of routing every request back to a central server, hybrid client-server setups use localised compute to handle high-speed processing while keeping analytics, archiving, and authentication in the cloud. It’s the same architectural handshake — just stretched across locations.
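One way to picture that split is a small routing rule that decides, per request, whether local compute or the central environment should answer. This is a sketch under stated assumptions: the operation names and the two targets are hypothetical, and a real deployment would take them from policy rather than hard-coded sets.

```python
# Hypothetical operation names; a real system would derive these from policy.
EDGE_OPERATIONS = {"sensor_read", "price_lookup"}
CENTRAL_OPERATIONS = {"analytics", "archive", "authenticate"}


def choose_target(operation: str, edge_available: bool = True) -> str:
    """Return "edge" or "central" for a single client request."""
    if operation in CENTRAL_OPERATIONS:
        return "central"
    if operation in EDGE_OPERATIONS and edge_available:
        return "edge"
    # Unknown operations, or an unavailable edge node, fall back to the core.
    return "central"
```

For example, `choose_target("price_lookup")` stays local, while `choose_target("authenticate")` always goes to the central environment. The fallback branch is a design choice: when in doubt, the request goes where oversight is strongest.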

Integrating client-server into observability frameworks

As systems become more distributed, visibility becomes harder to maintain. That’s why IT observability is now a core architectural concern — not just a monitoring function.

Enterprises are embedding observability into every layer of the stack, from client endpoints to server clusters. That includes real-time performance metrics, dependency mapping, anomaly detection, and tracing across transactions that span both legacy systems and cloud services.

A modern client-server environment must feed into this observability framework. If your central server drops packets or your edge client experiences a timeout, your teams need to know why — and they need to see that insight in context. Not after the fact. Not after a customer complains.
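A hedged sketch of what "insight in context" can look like: each hop records a span carrying the same trace ID, so a timeout on the server side can be tied back to the client call that triggered it. The span names and the in-memory `SPANS` list are assumptions for illustration; a production system would export spans to a tracing backend instead.

```python
import time
import uuid
from contextlib import contextmanager

# In-memory stand-in for a tracing backend (assumption for this sketch).
SPANS = []


@contextmanager
def traced(name: str, trace_id: str):
    """Record duration and outcome of one step under a shared trace ID."""
    start = time.perf_counter()
    status = "ok"
    try:
        yield
    except Exception as exc:
        status = f"error: {type(exc).__name__}"
        raise
    finally:
        SPANS.append({
            "trace_id": trace_id,
            "span": name,
            "status": status,
            "duration_ms": (time.perf_counter() - start) * 1000,
        })


def handle_request() -> str:
    """Simulate an edge client call whose upstream server query times out."""
    trace_id = uuid.uuid4().hex
    try:
        with traced("edge-client.call", trace_id):
            with traced("central-server.query", trace_id):
                raise TimeoutError("upstream timed out")  # simulated failure
    except TimeoutError:
        pass  # the caller would retry or degrade here
    return trace_id
```

After one call to `handle_request()`, `SPANS` holds two records with the same `trace_id`, the inner one first, both marked with the timeout. That shared ID is what lets a team follow a single transaction across legacy and cloud components.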

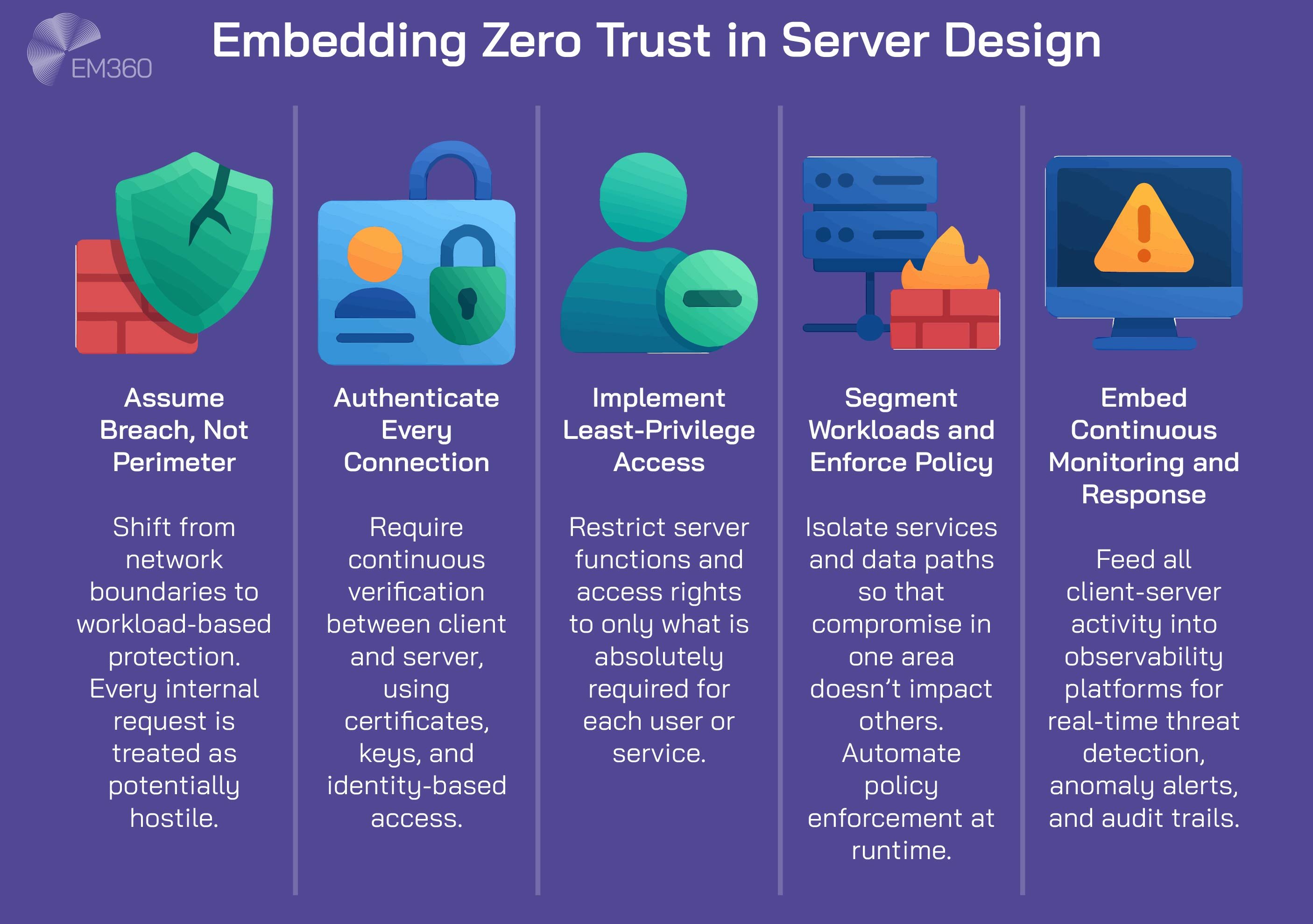

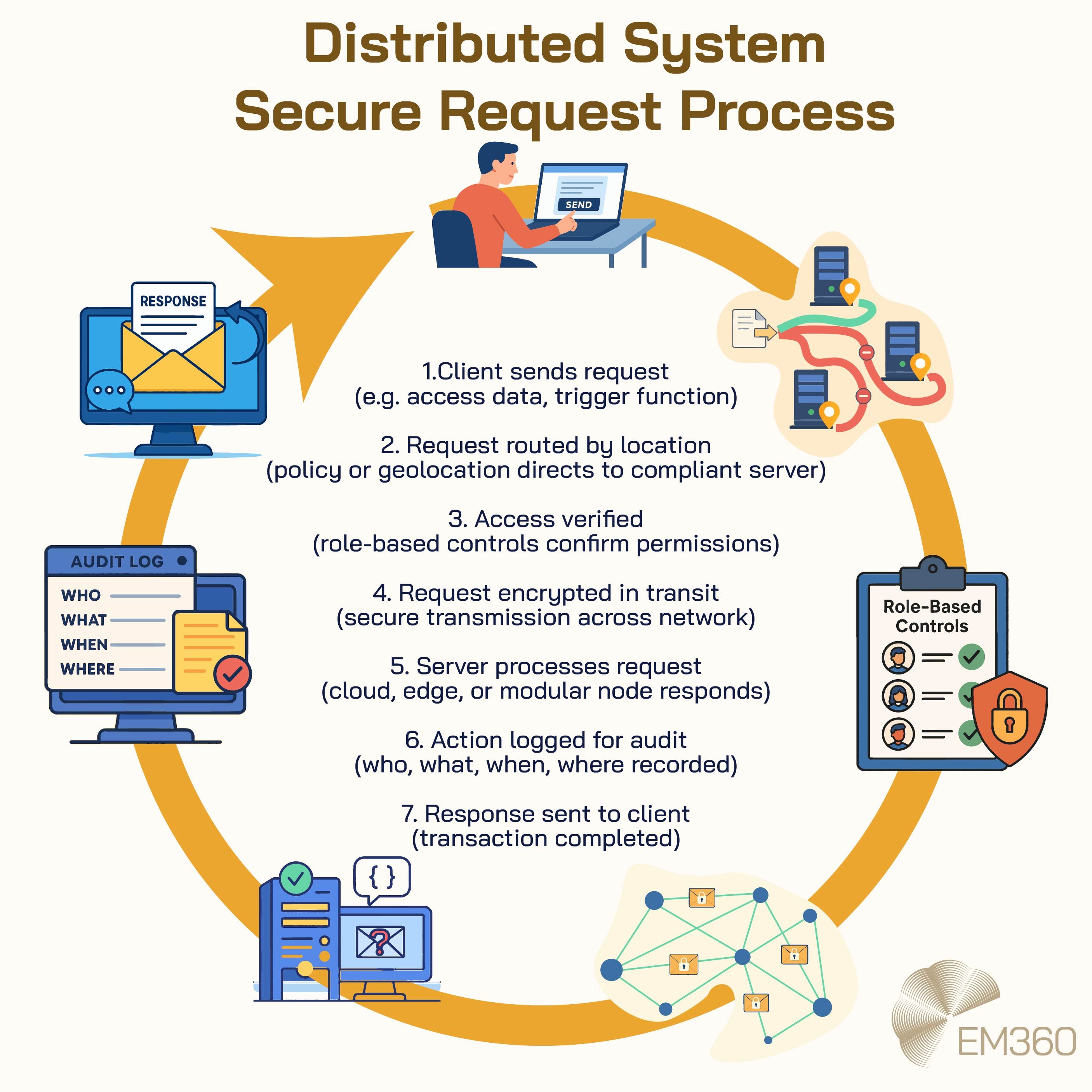

How enterprises embed zero trust and resilience into server design

The traditional client-server model relied heavily on perimeter-based security. That’s no longer enough.

Enterprise architecture now assumes breach rather than only trying to prevent it. That’s where zero trust strategies come in. Every connection between client and server is authenticated, every access request is evaluated, and every movement of data is logged.
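As a rough illustration of "authenticate every request, log every decision", the sketch below verifies an HMAC signature on each call using Python's standard `hmac` module. The client ID, the shared secret, and the in-memory `AUDIT_LOG` are hypothetical; real zero trust deployments typically add device posture, short-lived credentials, and a policy engine on top.

```python
import hashlib
import hmac

# Hypothetical per-client secrets; in practice these live in a secrets manager.
CLIENT_KEYS = {"branch-terminal-7": b"demo-secret"}
AUDIT_LOG = []  # stand-in for an append-only audit store


def sign(client_id: str, body: str, key: bytes) -> str:
    """Client side: sign the request so the server can verify its origin."""
    message = f"{client_id}:{body}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_request(client_id: str, body: str, signature: str) -> bool:
    """Server side: check every request and log the decision either way."""
    key = CLIENT_KEYS.get(client_id)
    allowed = False
    if key is not None:
        expected = sign(client_id, body, key)
        # compare_digest avoids leaking information through timing.
        allowed = hmac.compare_digest(expected.encode(), signature.encode())
    AUDIT_LOG.append({"client": client_id, "request": body, "allowed": allowed})
    return allowed
```

Nothing is trusted because of where it sits on the network: a request from a known terminal with a bad signature is rejected and logged exactly like one from an unknown client.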

This process has a direct impact on how servers are designed, managed, and segmented. It also shapes how resilience strategies are implemented. Redundancy, failover, and self-healing services are built into the server layer — not added afterwards. And with modern server management tools, teams can dynamically shift traffic, reallocate workloads, and recover from failure without manual intervention.
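Failover without manual intervention can be sketched from the client's point of view as an ordered list of endpoints tried in turn. The endpoint names and the `send` callable are placeholders; this shows the pattern only, not how any specific server management tool implements it.

```python
from typing import Callable, Sequence, Tuple


def call_with_failover(
    endpoints: Sequence[str],
    send: Callable[[str], str],
) -> Tuple[str, str]:
    """Try each endpoint in order; return (endpoint, reply) from the first that answers."""
    errors = []
    for endpoint in endpoints:
        try:
            return endpoint, send(endpoint)
        except ConnectionError as exc:
            # Remember why this endpoint failed, then move to the next one.
            errors.append(f"{endpoint}: {exc}")
    raise ConnectionError("all endpoints failed: " + "; ".join(errors))
```

Passing a `send` function that raises `ConnectionError` for the primary and succeeds for a replica returns the replica's reply. The collected error list is what an observability layer would want to see afterwards.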

Hybrid client-server architecture is no longer a compromise. It’s a design choice that balances control with flexibility, performance with observability, and resilience with speed.

Enterprise Migration Paths — From Traditional Client-Server to Cloud-Enabled

Not every enterprise system can be rewritten. And in many cases, it shouldn't be. For companies still running mission-critical workloads on traditional client-server setups, the smarter path often lies in modernising the architecture, not replacing it.

That shift is already underway. The global cloud market is projected to hit $912.77 billion in 2025, with expectations to reach $5.15 trillion by 2034 — growing at a compound annual rate of 21.2 per cent. For enterprise teams, that trajectory is a signal: the cloud isn’t optional. It’s the backdrop against which all infrastructure decisions will be made.
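The growth figures quoted in this article can be sanity-checked with the standard compound growth formula. The short Python sketch below uses only the numbers stated in the text; the function names are illustrative.

```python
def project(value: float, rate: float, years: int) -> float:
    """Future value after compounding `rate` annually for `years` years."""
    return value * (1 + rate) ** years


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end / start) ** (1 / years) - 1


# Cloud market: $912.77bn in 2025 compounding at 21.2% for 9 years (to 2034).
cloud_2034_bn = project(912.77, 0.212, 9)

# Hybrid cloud market: $112.42bn (2024) to $128.64bn (2025), one year.
hybrid_rate = cagr(112.42, 128.64, 1)

print(f"Cloud market in 2034: ${cloud_2034_bn / 1000:.2f} trillion")
print(f"Hybrid cloud growth 2024-2025: {hybrid_rate:.1%}")
```

Both quoted projections are internally consistent: the first comes out at roughly $5.15 trillion and the second at roughly 14.4 per cent.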

That means finding ways to make legacy systems cloud-ready, API-accessible, and easier to monitor — without breaking what already works.

Steps for gradual modernisation without service disruption

The first step in any modernisation journey is mapping out system dependencies. That includes user access points, data flow paths, integrations, and regulatory touchpoints. Understanding what connects to what — and how it behaves under pressure — creates the baseline for any safe transition.

From there, modernisation typically moves in phases:

- Decouple the interface: Move the client-facing layer to the cloud or a web-based environment while keeping backend processes in place

- Wrap legacy logic in APIs: This enables interoperability without needing to rebuild core functionality

- Virtualise and containerise: Use orchestration tools to manage workloads in a more agile way, even if the logic remains monolithic

- Embed observability: Make sure performance data and error tracing are part of the architecture before migration begins

- Introduce automation gradually: Automated deployment, scaling, and failover should be introduced in isolated modules before rolling out across the system

Each of these steps reduces dependency on physical infrastructure, increases resilience, and builds a path toward cloud-native operations — without triggering a full-scale rebuild.
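The "wrap legacy logic in APIs" step can be sketched as a thin adapter that translates JSON into whatever the legacy routine expects and back again. Everything here is hypothetical: the fixed-width record layout, the `legacy_balance_inquiry` stand-in, and the field names are invented to show the shape of the pattern.

```python
import json

# Stand-in for data held by the legacy system (hypothetical).
LEGACY_BALANCES = {"000042": 1250}


def legacy_balance_inquiry(record: str) -> str:
    """Untouched legacy logic: 6-char account in, fixed-width record out."""
    account = record[0:6]
    balance = LEGACY_BALANCES.get(account)
    if balance is None:
        return account + "ERR" + "0" * 10
    return account + "OK " + str(balance).rjust(10, "0")


def api_get_balance(request_json: str) -> str:
    """API facade: JSON in, JSON out, legacy routine called unchanged."""
    request = json.loads(request_json)
    account = str(request["account_id"]).zfill(6)
    reply = legacy_balance_inquiry(account)
    # Parse the fixed-width reply: status in columns 6-8, amount in 9-18.
    status, amount = reply[6:9].strip(), int(reply[9:19])
    if status != "OK":
        return json.dumps({"account_id": request["account_id"], "error": "not_found"})
    return json.dumps({"account_id": request["account_id"], "balance": amount})
```

New clients talk JSON to `api_get_balance`, while the core routine keeps its original contract. That separation is what allows the interface to move to the cloud before the backend does.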

What not to break: The risk of removing reliable foundations

In many enterprises, traditional client-server systems still support processes that haven’t changed in years. That stability is often taken for granted — until it’s lost during a rushed migration.

One of the biggest mistakes teams make is assuming that moving to the cloud will solve architectural complexity by default. It doesn’t. It simply changes where that complexity lives.

When systems are performing reliably, the goal of migration should be optimisation, not reinvention. That might mean keeping certain processes on-premise for compliance or maintaining synchronous data replication models that existing applications depend on.

The most effective migration paths are designed around continuity. They preserve the strengths of the current system while introducing flexibility, observability, and cloud-ready tooling over time.

Because when core platforms fail, it’s not the architecture that gets blamed. It’s the business function that depends on it.

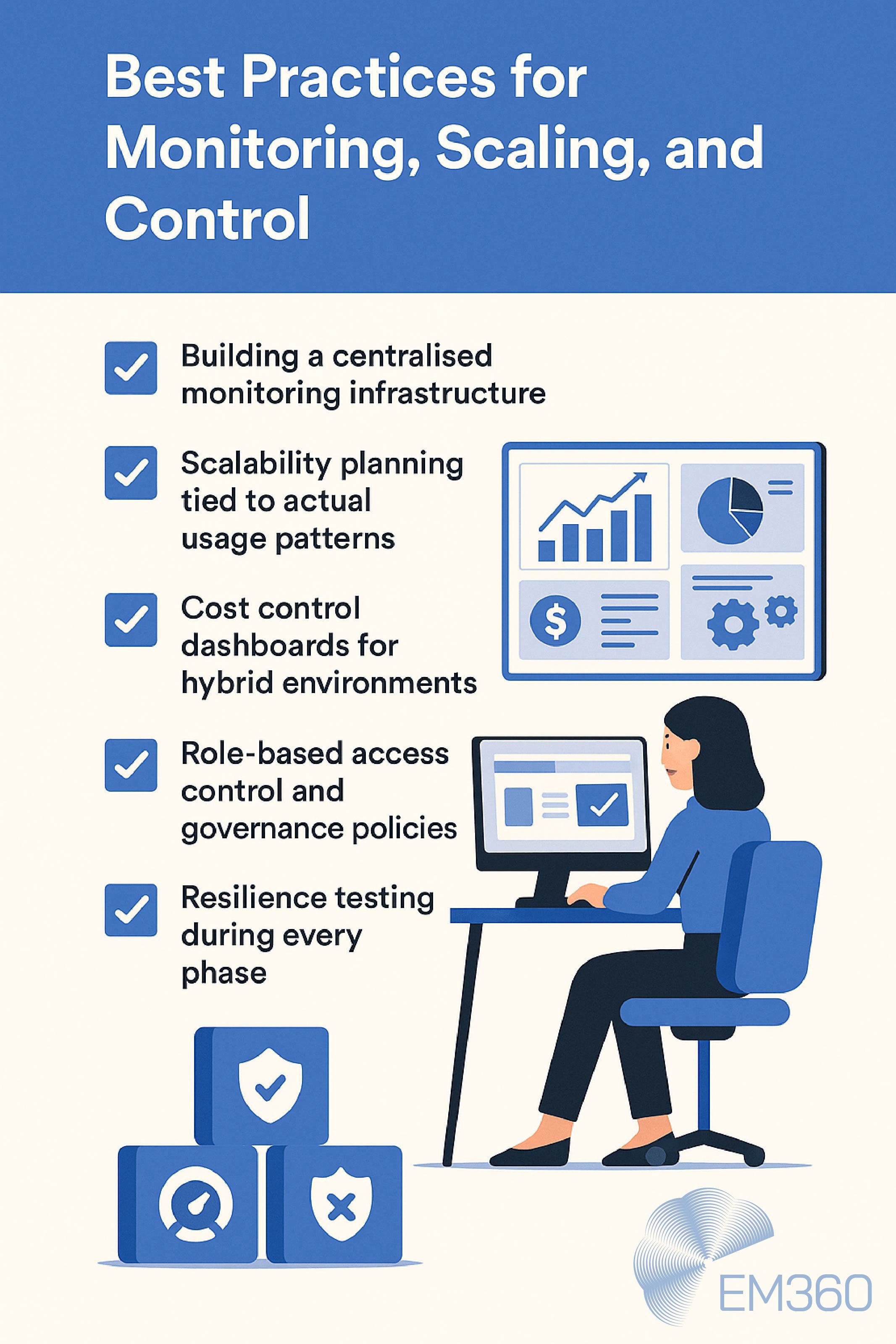

Best practices for monitoring, scaling, and maintaining control

No matter how gradual the migration, the operational stakes are high. Teams must be able to track system health, balance loads, and scale services without losing visibility or control. And as infrastructure becomes more distributed, the margin for error gets smaller.

Some of the most effective practices include:

Building a centralised monitoring infrastructure

Don’t wait until after modernisation to implement observability. Monitoring should be baked in from the outset, with a unified view across both legacy systems and cloud-native components. This includes real-time alerts, historical trend analysis, and dependency mapping — all visible from a single dashboard. Tools like Prometheus, Grafana, and commercial platforms like Datadog or Dynatrace can help bridge the visibility gap across hybrid environments.

Scalability planning tied to actual usage patterns

Scalability isn’t just about capacity. It’s about knowing when and where to scale. Teams should analyse traffic patterns, seasonal demand, and resource utilisation to determine when to spin up or down.

Without this, autoscaling can introduce inefficiencies or cost spikes. This step is especially important for client-server applications that may not have been designed to scale horizontally.
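A simple proportional rule shows how observed utilisation can drive a scaling decision. It is similar in form to the rule described in the Kubernetes Horizontal Pod Autoscaler documentation, but the function below is only a sketch: the target, the bounds, and the use of whole-number percentages are assumptions.

```python
import math


def desired_replicas(
    current: int,
    observed_pct: int,
    target_pct: int,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale replica count in proportion to observed vs target utilisation."""
    raw = math.ceil(current * observed_pct / target_pct)
    # Clamp so a traffic spike cannot trigger unbounded (and costly) scale-out.
    return max(min_replicas, min(max_replicas, raw))
```

With four replicas running at 90 per cent against a 60 per cent target, the rule asks for six; at 30 per cent it asks for two. The `max_replicas` cap matters most for applications that were never designed to scale horizontally, where each extra instance may add contention rather than capacity.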

Cost control dashboards for hybrid environments

As workloads shift between environments, so do cost dynamics. Without clear reporting, costs can balloon quickly — especially when idle compute, data egress fees, or duplicated services go unnoticed. Building a cost control layer into the architecture helps teams monitor real-time spend, flag anomalies, and align usage with business value. Tagging, cost allocation, and forecasting should be treated as part of infrastructure planning, not just finance reporting.
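Tagging and anomaly flagging can be sketched in a few lines. The line-item shape, the `team` tag, and the 1.5x threshold are hypothetical choices for illustration; billing exports from real providers differ in structure.

```python
from collections import defaultdict


def spend_by_tag(line_items: list, tag: str = "team") -> dict:
    """Sum cost per tag value; untagged spend is surfaced, not hidden."""
    totals = defaultdict(float)
    for item in line_items:
        totals[item["tags"].get(tag, "untagged")] += item["cost"]
    return dict(totals)


def flag_anomalies(today: dict, baseline: dict, threshold: float = 1.5) -> list:
    """Return tag values whose spend exceeds `threshold` times their baseline."""
    return sorted(
        key for key, cost in today.items()
        if cost > threshold * baseline.get(key, 0.0)
    )
```

Any tag with no baseline is flagged as soon as it spends anything, which is a deliberate choice here: new or untagged spend is exactly what tends to go unnoticed.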

Role-based access control and governance policies

Governance tends to break down during change. As systems evolve, access expands — sometimes unintentionally. Enterprise teams should audit permissions regularly, apply role-based access controls, and enforce least-privilege principles at every layer. This protects sensitive workflows during migration and reduces the attack surface created by expanding system complexity.
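Role-based access with a least-privilege audit might look like the sketch below. The roles, permission strings, and usage data are invented for illustration.

```python
# Hypothetical roles and permissions; deny anything not listed.
ROLE_PERMISSIONS = {
    "auditor": {"ledger:read"},
    "teller": {"ledger:read", "payment:create"},
    "admin": {"ledger:read", "payment:create", "user:manage"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Default-deny check: unknown roles and unlisted permissions fail."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def unused_permissions(role: str, used: set) -> set:
    """Permissions a role grants but has not exercised; candidates to revoke."""
    return ROLE_PERMISSIONS.get(role, set()) - used
```

Running `unused_permissions` against access logs during a migration is one way to spot roles that have quietly accumulated more access than they use.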

Resilience testing during every phase

Just because systems are more flexible doesn’t mean they’re more resilient. Client-server environments often rely on predictable behaviour — when components are decoupled, failure paths can multiply. Enterprises should run failover simulations, load testing, and disaster recovery drills as part of the migration process. This ensures continuity planning keeps pace with infrastructure change.

These practices aren’t just technical checkboxes. They are the operational safeguards that make migration sustainable. Without them, modernisation may succeed architecturally but fail strategically.

Client-Server Architecture in a Post-AI, Multi-Cloud World

The modern enterprise no longer operates in a single environment or on a single timeline. Workloads are distributed. Decisions happen at the edge. AI models are embedded in every layer of operations. And infrastructure strategies have to keep up.

That includes client-server systems.

The model still holds up — but now it has to support more complexity, more velocity, and more regulation than ever before.

Supporting AI workloads with hybrid setups

AI workloads place enormous demand on infrastructure. They require high-throughput compute, accelerated storage, and tight integration with training and inference pipelines. For most enterprises, this means splitting the load across environments.

Client-server models are being adapted into AI-ready infrastructure by integrating with GPU-enabled servers, offloading training to cloud platforms, and embedding inference capabilities at the edge. The client might trigger a model request, the server might run inference on a dedicated node, and the result might be pushed back to the client within milliseconds.

This doesn’t replace the client-server paradigm — it extends it. And it allows teams to balance performance with control, using hybrid deployments to move sensitive or resource-heavy operations closer to the business.

That shift is accelerating. Gartner predicts that 90 per cent of organisations will adopt a hybrid cloud approach by 2027 and identifies data synchronisation across hybrid environments as the most urgent GenAI challenge to solve in the coming year.

For infrastructure leaders, that means adapting traditional client-server frameworks to support faster data movement, tighter integration, and smarter orchestration across the entire environment.

Edge acceleration and real-time decision systems

Real-time systems can’t afford round trips. Whether it’s fraud detection, predictive maintenance, or supply chain rerouting, decisions need to happen where the data lives.

That’s where edge computing comes in. Client-server roles are now being replicated at the edge, with miniaturised servers, data caches, and analytics engines handling logic on-site. The client might be a camera, a sensor, or a remote terminal. The server might be a ruggedised device sitting on a factory floor or in a retail location.

In this model, centralised systems are still in play — but they provide oversight, coordination, and large-scale analysis, not immediate execution. This makes it possible to reduce latency, preserve bandwidth, and maintain uptime even in disconnected environments.
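The division of labour described above, local decisions plus deferred central reporting, can be sketched as a store-and-forward edge node. The class name, threshold logic, and `upload` callable are assumptions; real edge platforms add persistence, batching, and retry policies.

```python
from collections import deque
from typing import Callable


class EdgeNode:
    """Decides locally, buffers results, and syncs when the link is up."""

    def __init__(self, threshold: float, max_buffer: int = 1000):
        self.threshold = threshold
        # Bounded buffer: the oldest records drop if the outage outlasts capacity.
        self.pending = deque(maxlen=max_buffer)

    def handle_reading(self, value: float) -> str:
        """Immediate execution at the edge; no round trip to the centre."""
        decision = "alert" if value > self.threshold else "ok"
        self.pending.append({"value": value, "decision": decision})
        return decision

    def sync(self, upload: Callable[[dict], None], online: bool) -> int:
        """Forward buffered records to the central system when connected."""
        if not online:
            return 0
        sent = 0
        while self.pending:
            upload(self.pending[0])  # upload first, remove only on success
            self.pending.popleft()
            sent += 1
        return sent
```

The node keeps making decisions while disconnected, and the central system receives the full record once `sync` runs with `online=True`.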

Compliance, sovereignty, and governance in distributed models

The more distributed your systems become, the harder it is to enforce policy. That includes data governance, access controls, and audit trails — all of which are under increasing scrutiny from regulators.

Modern client-server architectures must account for regulatory compliance at every touchpoint. That means building in location-aware routing, encryption across all connections, and role-based access across environments. It also means deploying infrastructure in ways that respect national boundaries and data residency requirements.
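Location-aware routing can be sketched as a lookup that refuses to serve a request rather than send it outside its required region. The country-to-region table and region names below are hypothetical and are not a statement of what any regulation requires.

```python
# Hypothetical residency policy: where each country's data must be processed.
RESIDENCY_RULES = {"DE": "eu-central", "FR": "eu-central", "US": "us-east"}


def select_region(country: str, healthy_regions: set) -> str:
    """Pick the region allowed for this country, or fail closed."""
    required = RESIDENCY_RULES.get(country)
    if required is None:
        raise LookupError(f"no residency rule defined for {country}")
    if required not in healthy_regions:
        # Fail closed: never fall back to a region outside the policy.
        raise RuntimeError(f"{required} unavailable; refusing to reroute")
    return required
```

The important design choice is the second error path. A generic failover would pick any healthy region, whereas a residency-aware router treats an unavailable home region as an outage rather than a reason to move data across a border.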

In many cases, client-server models help reinforce these requirements. They offer predictable, controllable flows of data and logic. With orchestration and logging, they can show who accessed what, when, and where.

What used to be a simple model for resource delivery is now becoming a strategic framework for server-side intelligence, system-wide visibility, and policy enforcement — all without giving up the clarity and control enterprises rely on.

Final Thoughts: Client-Server Still Matters, but It Must Evolve

Client-server architecture isn’t going anywhere. It remains the backbone of critical enterprise systems — not because it resists change, but because it adapts to it.

From financial systems and EHRs to AI pipelines and edge deployments, the model continues to deliver on what matters most: reliability, control, and structured scalability. But its value today depends on how it’s integrated into broader strategies. That means rethinking placement, observability, governance, and resilience — not just uptime.

For teams responsible for infrastructure or data architecture, the goal is not to discard legacy models but to refine them. Hybrid deployments, modular server designs, and embedded security frameworks are making it possible to carry forward what works while building for what’s next.

Modernisation doesn’t always mean rebuilding. Sometimes it means knowing exactly what to keep — and how to make it stronger.

If that’s the kind of clarity your team is working towards, EM360Tech’s library of expert insights on infrastructure, data, and architecture resilience is a good place to start.
