Small data is having a moment. In an era defined by petabytes and streaming pipelines, the ability to extract insight from a small dataset is becoming a competitive advantage in its own right.

Whether it’s the curated records feeding a predictive maintenance model, a few hundred high-quality patient profiles informing personalised treatment, or the finely segmented customer lists guiding a marketing campaign — the value lies in precision, not volume.

This doesn’t mean that enterprise AI and data leaders are rejecting big data. They are simply recognising that the choice between big data and small data is strategic, not binary. In other words, the most effective organisations are learning that sometimes scaling up is the best option and sometimes narrowing the focus is.

It’s all about applying the right tools to the right context. For AI strategy leaders, this might mean overcoming limited training data by using models optimised for few-shot learning or transfer learning.

For data and analytics teams, it could be making sure the proper governance and quality controls are in place so those smaller datasets stay accurate, unbiased, and compliant. The challenge — and opportunity — is to handle small data with the same rigour and innovation usually reserved for large-scale datasets.

Done well, that means you can trust small data to deliver actionable, reliable intelligence that drives enterprise-level outcomes.

What Is Small Data and Why It Matters Now

Small data refers to data that is small enough to be processed and understood by humans without complex, large-scale infrastructure, but still valuable enough to deliver meaningful insight.

It is often a small dataset that can be easily reviewed, analysed, and acted upon, especially when supported by AI and machine learning techniques optimised for limited samples. The focus is on clarity, context, and actionability rather than sheer volume.

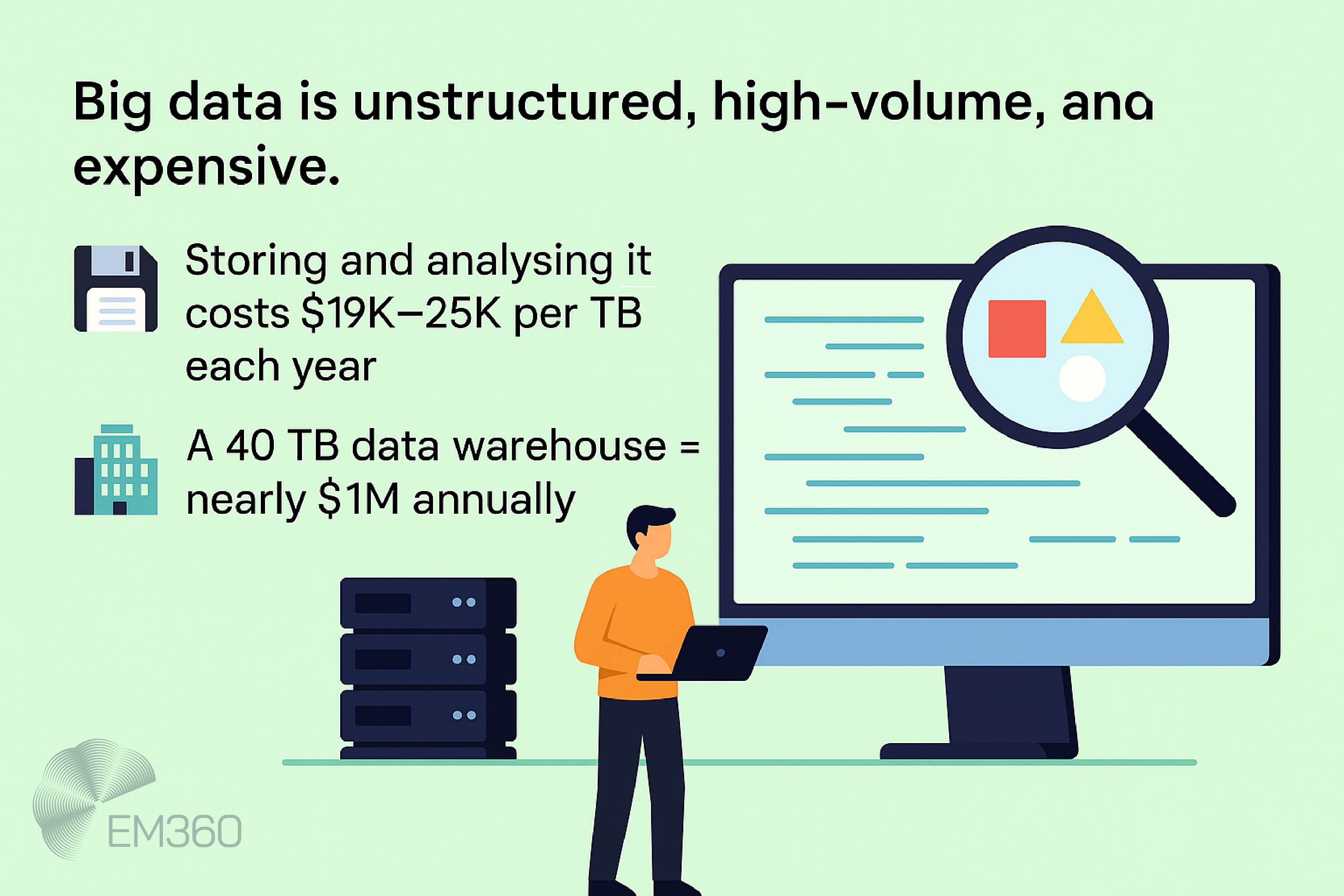

The distinction between big data and small data is not simply about size. Big data is typically unstructured, high-volume, and demands massive amounts of computing power to store and analyse, with costs that can reach $19,000 to $25,000 per terabyte each year, or close to $1 million annually for a 40 TB warehouse.

Small data, in comparison, is more structured, and is often curated for the purpose of quickly answering very specific questions.

In many enterprise environments, the advantage of a small dataset lies in how quickly it can be put together and how easy it is to maintain high standards of data governance and quality assurance. Recent trends are only making small data more relevant.

These include the rise of generative AI, which has created a need for highly curated, domain-specific datasets to fine-tune large models. Edge and IoT devices, meanwhile, are producing streams of data that, when filtered and condensed, become targeted small datasets ideal for near-real-time decision-making.

In specialised domains such as healthcare, manufacturing, or financial services, small data often represents the highest-quality source of truth available, making it a strategic asset for informed, high-stakes decisions.

When To Choose Small Data Over Big Data

Knowing when to work with a small dataset instead of pursuing large-scale collection is a strategic decision that can save time, reduce costs, and improve focus. Big data has its place in complex modelling, broad market analysis, and long-term trend discovery.

Small data, on the other hand, can deliver faster time-to-insight, cost efficiency, and highly targeted results. In situations where speed is critical, a smaller, well-curated dataset can be analysed and acted upon in hours or days rather than weeks or months. This is especially valuable for time-sensitive decisions, rapid prototyping, or operational adjustments.

The cost of big data can also be prohibitive when factoring in storage, processing infrastructure, and the skilled resources needed to maintain it. A focused approach often delivers a better ROI from small data, especially when the goal is to answer a specific business question rather than build a fully generalised model.

In fact, studies show that small and mid-size businesses using AI strategically have achieved cost reductions of around 15 per cent, revenue gains of nearly 16 per cent, and productivity improvements of more than 40 per cent. These results suggest the outsized impact that smaller, high-quality datasets can have when applied to the right problems.

Small data also shines in scenarios where precision is more important than scale. For example, a targeted customer segmentation model can often perform better with fewer, cleaner records than with a massive dataset full of noise. The same applies in manufacturing quality control or clinical research, where every record is deeply validated.

There are limits, of course. Models built on small datasets carry a higher risk of poor predictive performance if the data is not representative of the wider population. Small datasets are more prone to sample bias, and in some cases can’t be scaled to meet broader enterprise needs without further collection.

That’s why strong governance, rigorous validation, and careful model selection are essential to making small data a reliable decision-making tool.

Key Challenges in Working With Small Datasets

Working with a small dataset comes with its own set of challenges, both technical and strategic. The most well-known of these is the risk of overfitting. When a model is trained on a very limited number of records, it can appear highly accurate during testing but then fail as soon as it is exposed to new or live data.

This inflated performance is common in scenarios with fewer than 300 samples, and it can give decision-makers a false sense of confidence in the results.

Another frequent challenge is class imbalance. If one category is significantly overrepresented, the model may learn to favour it by default, reducing its ability to make fair or accurate predictions.

In fields such as fraud detection or rare disease diagnosis, where certain outcomes occur infrequently, imbalance can distort the insights and lead to missed opportunities or costly errors.

Missing data also has more of an impact when you're working with a smaller quantity of data. With fewer records overall, each missing value removes a larger share of the available information, and enough gaps can leave the entire dataset all but worthless.

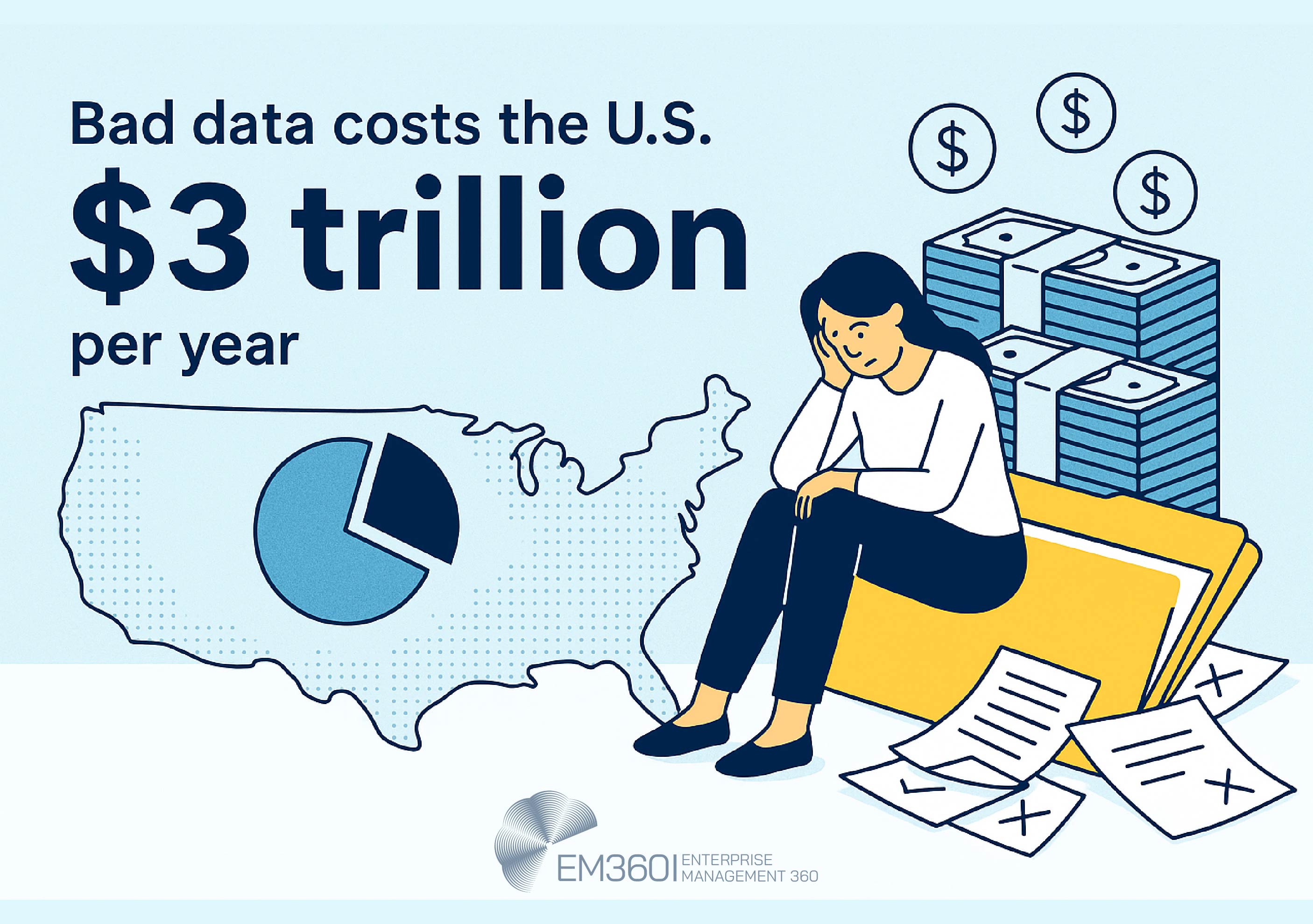

According to Gartner, poor data quality already costs organisations an average of $12.9 million per year, which shows just how quickly the risks add up when gaps are left unaddressed. In a small dataset, those gaps increase the risk of bias and make patterns harder to detect.

Similarly, datasets that contain large amounts of unlabelled data can limit the ability to train supervised models effectively, reducing the quality of any predictions. Finally, certain sectors face domain-specific scarcity by default. In healthcare, patient privacy rules can restrict the amount of data that can be shared.

In manufacturing, highly specialised equipment may only produce limited performance records over time. In R&D, years of experimentation might yield only a small number of validated results. Each of these cases demands careful handling and often specialised techniques to make the most of what is available.

Proven Techniques for Maximising Value From Small Data

Working with limited samples does not mean lowering expectations. There are proven techniques that allow organisations to make the most of a small dataset while reducing the risks that come with it. Each approach helps increase reliability, improve model performance, and ensure the insights generated are still meaningful at enterprise scale.

Data augmentation and synthetic data generation

One of the most practical ways to overcome a small dataset is through data augmentation. In image recognition, this could mean flipping, rotating, or slightly altering images to create new variations.

For text, it can involve paraphrasing or using language models to expand training examples. Even in tabular data, small shifts in values can increase diversity.
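As a rough illustration of the tabular case, the sketch below adds small random shifts to numeric values. The function name `jitter_rows`, the 2 per cent noise level, and the sensor readings are all hypothetical choices made for this example, not a recommended setting; any labels attached to the rows would simply be copied across unchanged.

```python
import random

def jitter_rows(rows, noise_fraction=0.02, copies=2, seed=0):
    """Return the original rows plus `copies` noisy variants of each row.

    Each numeric value is scaled by a random factor within
    +/- noise_fraction, so augmented rows stay close to the originals.
    """
    rng = random.Random(seed)
    augmented = [list(row) for row in rows]  # keep the real records first
    for row in rows:
        for _ in range(copies):
            augmented.append(
                [value * (1 + rng.uniform(-noise_fraction, noise_fraction))
                 for value in row]
            )
    return augmented

# Hypothetical sensor readings: [temperature_c, vibration_mm_s]
readings = [[71.2, 0.41], [69.8, 0.39], [74.5, 0.52]]
augmented = jitter_rows(readings)
print(len(readings), "real rows ->", len(augmented), "rows after augmentation")
```

Augmented rows should only ever be added to the training split. Validating on jittered copies of training records would overstate how well the model generalises.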

Synthetic data is another powerful option. By generating new records that mimic the statistical properties of the original dataset, organisations can address class imbalance and strengthen training without collecting more real-world data.

Used carefully, this can support imbalanced learning in areas like fraud detection or medical diagnosis where positive examples are naturally rare.
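One simple way to picture synthetic record generation for a rare class is interpolation between real minority records. The sketch below is a simplified, SMOTE-style idea rather than the full SMOTE algorithm: it picks random pairs instead of nearest neighbours, and the function name `interpolate_minority` and the fraud records are hypothetical.

```python
import random

def interpolate_minority(minority_rows, n_new, seed=0):
    """Create n_new synthetic rows by interpolating between random pairs
    of real minority-class rows (a simplified, SMOTE-style idea)."""
    if len(minority_rows) < 2:
        raise ValueError("need at least two minority rows to interpolate")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(minority_rows, 2)
        t = rng.random()  # position along the segment between a and b
        synthetic.append([x + t * (y - x) for x, y in zip(a, b)])
    return synthetic

# Hypothetical fraud records: [transaction_amount, seconds_since_last_login]
fraud_rows = [[950.0, 12.0], [1200.0, 8.0], [870.0, 20.0]]
synthetic_fraud = interpolate_minority(fraud_rows, n_new=5)
print(len(synthetic_fraud), "synthetic minority rows generated")
```

Because every synthetic row lies between two real ones, this approach cannot invent behaviour the original records never showed, which is both its safety and its limit.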

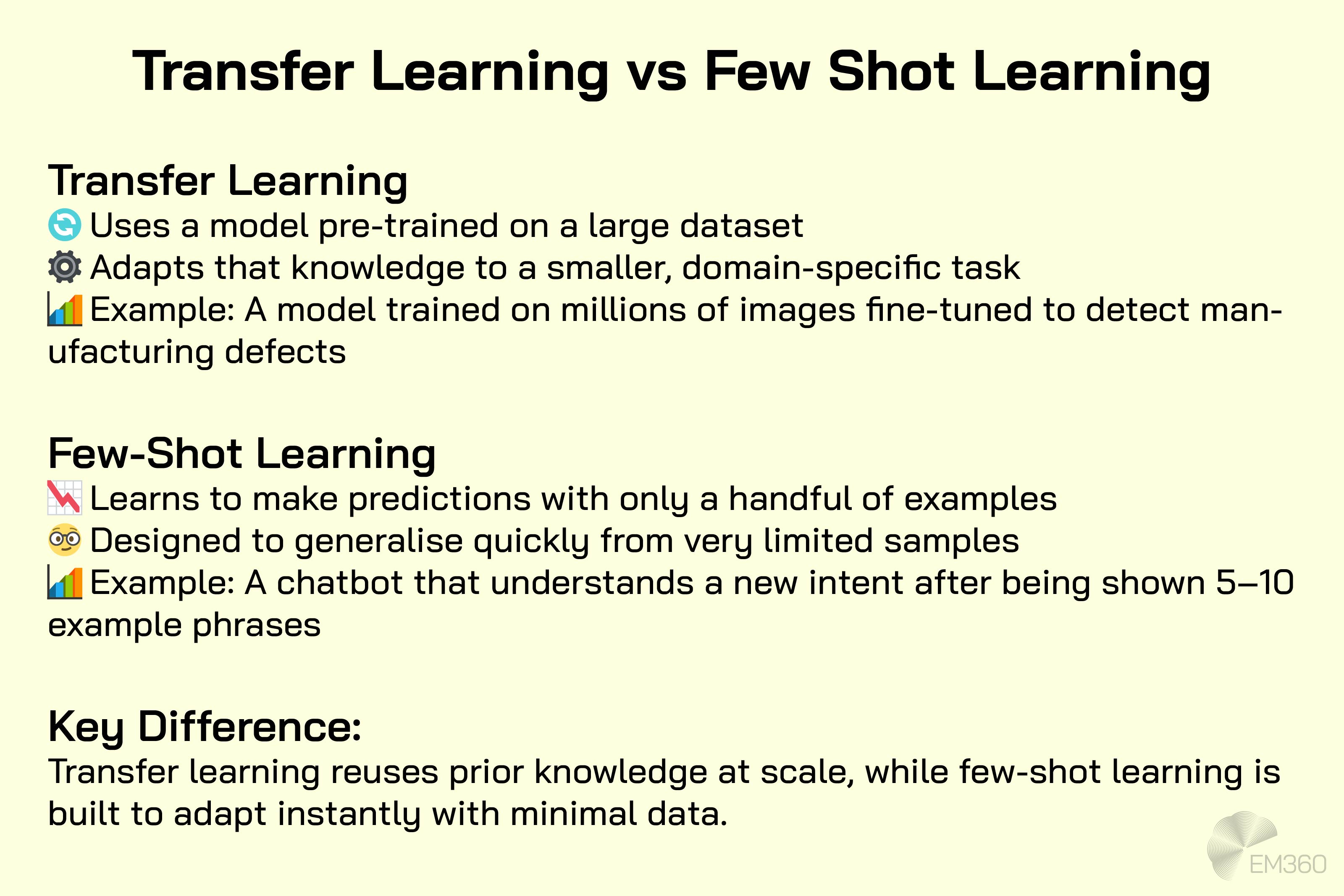

Transfer learning and few-shot learning

Rather than starting from scratch, organisations can build on what already exists. Transfer learning takes models that have been pre-trained on large datasets and adapts them to a smaller, domain-specific task. Few-shot learning takes this further, with models designed to work effectively even when given only a handful of examples.

These methods are especially useful in natural language processing, computer vision, and even tabular AI tasks where labelled data is hard to come by. The result is higher accuracy and faster deployment with significantly less training data.

Cross-validation and regularisation discipline

When data is limited, testing becomes even more important. Cross-validation techniques such as stratified k-fold ensure that every record is used for both training and validation while keeping class proportions similar in each fold, maximising the information gained from a small dataset. Bootstrapping can provide additional reliability by resampling the data with replacement to check how stable the results are across many resampled versions of the dataset.

Adding regularisation (such as L1 or L2 penalties) helps reduce overfitting by discouraging overly complex models. Together, these practices create a stronger framework for trustworthy predictive performance, even when samples are few.
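The stratified k-fold idea described above can be sketched in a few lines. This is a minimal illustration of the splitting step only, with a hypothetical function name and made-up labels; in practice a team would more likely rely on an established library implementation.

```python
import random
from collections import defaultdict

def stratified_k_fold(labels, k=5, seed=0):
    """Yield (train_indices, validation_indices) for k folds,
    keeping each class spread as evenly as possible across folds."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class's records round-robin so every fold gets a share.
        for position, index in enumerate(indices):
            folds[position % k].append(index)

    for i in range(k):
        validation = sorted(folds[i])
        train = sorted(
            idx for j, fold in enumerate(folds) if j != i for idx in fold
        )
        yield train, validation

# Hypothetical imbalanced labels: 20 negatives, 5 positives.
labels = [0] * 20 + [1] * 5
splits = list(stratified_k_fold(labels, k=5))
for train, validation in splits:
    positives = sum(labels[i] for i in validation)
    print(f"validation size={len(validation)}, positives={positives}")
```

With only five positive records, an unstratified split could easily leave a fold with no positives at all, so the validation score for that fold would say nothing about the rare class.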

Bayesian and probabilistic methods

Bayesian approaches give teams another way to deal with uncertainty in a small dataset. Instead of locking in a single answer, these models use prior knowledge and update probabilities as more information becomes available. In practice, that means you’re not just getting a prediction — you’re also getting a clear sense of how confident the model is in that result.

This perspective is especially useful when working with limited samples, where variability is high and outcomes carry weight. In fields like healthcare or advanced manufacturing, knowing the range of possibilities can often be more valuable than aiming for one definitive number.
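To make the idea of updating a prior concrete, here is a minimal sketch using the Beta-Binomial model, one of the simplest Bayesian setups. The prior values and the trial counts are hypothetical and chosen only for illustration.

```python
import math

def beta_posterior(prior_alpha, prior_beta, successes, failures):
    """Update a Beta prior with binomial outcomes (conjugate update)."""
    return prior_alpha + successes, prior_beta + failures

def beta_mean_and_sd(alpha, beta):
    """Mean and standard deviation of a Beta(alpha, beta) distribution."""
    total = alpha + beta
    mean = alpha / total
    variance = (alpha * beta) / (total ** 2 * (total + 1))
    return mean, math.sqrt(variance)

# Hypothetical example: a weak prior belief centred on a 50% response rate,
# then 12 responders and 3 non-responders observed in a small trial.
alpha, beta = beta_posterior(2, 2, successes=12, failures=3)
mean, sd = beta_mean_and_sd(alpha, beta)
print(f"estimated response rate: {mean:.2f} +/- {sd:.2f}")
```

The standard deviation is the useful part here: with only 15 observations it stays wide, which is an honest signal that the estimate could still move as more records arrive.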

Emerging Innovations in Small-Data AI

New research is proving that small data AI is not just about working around limitations. It is also driving some of the most interesting innovations in machine learning today. Two examples stand out for their potential to change how enterprises think about modelling with limited samples.

TabPFN is a new transformer for tabular data that has been trained in advance to recognise a wide variety of patterns. Instead of spending days tuning models, TabPFN can classify small datasets more than 5,000 times faster than standard approaches, and perform regression tasks around 3,000 times faster. This makes it a strong candidate for scenarios where time-to-insight matters and the available data is modest but reliable.

Another breakthrough comes from quantum machine learning. The QKAR (Quantum Kernel-Aligned Regressor) model was recently used to predict semiconductor properties with only 159 samples, outperforming classical approaches. For industries that work with extremely scarce, high-value data, this is a signal that quantum-enhanced methods may soon open doors that traditional AI cannot.

Both innovations show that progress in AI is not only about handling bigger and bigger datasets. It is also about designing smarter techniques that can extract value from the smaller, curated datasets enterprises already have.

Where To Find High-Quality Small Datasets

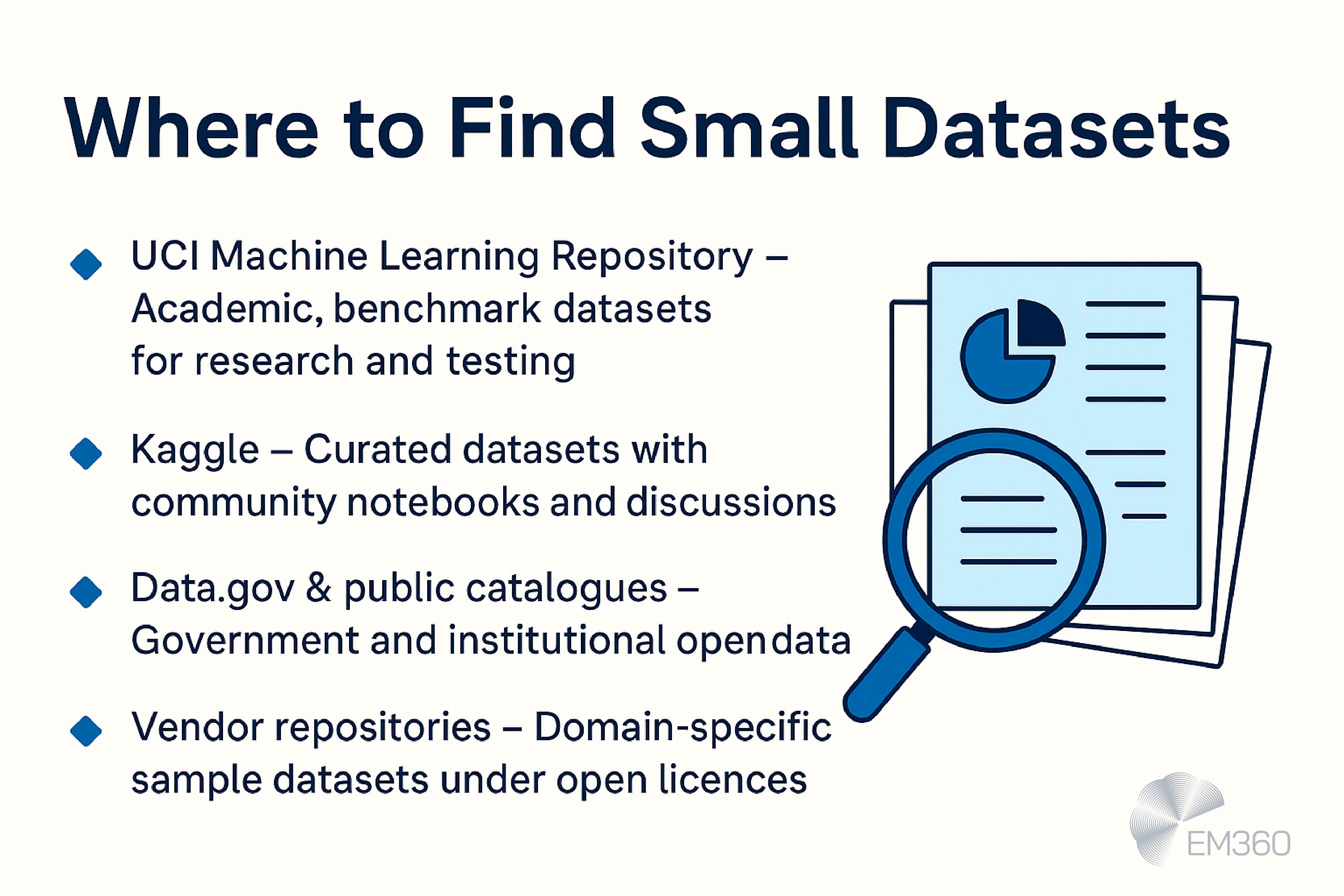

The value of small data depends on quality. A smaller dataset can only be useful if it is accurate, relevant, and well structured. For leaders looking to experiment, prototype, or put models into production, there are several trusted sources worth considering.

The UCI Machine Learning Repository is one of the most established academic resources, offering a wide range of carefully curated datasets for research and practice. Many benchmark models have been tested against UCI collections, making it a reliable place to build and compare results.

Kaggle datasets are another strong option. Alongside the data itself, Kaggle provides notebooks and community discussions, giving teams practical examples of how to work with limited samples. This makes it especially useful for training, experimentation, and sharing techniques.

Public sector and institutional sources such as data.gov also provide access to a vast public data catalogue. These datasets are often structured for transparency and accountability, which makes them valuable for real-world modelling while keeping compliance in mind.

Finally, some vendors now offer open data repositories or provide sample datasets under permissive licences. These collections can help teams explore domain-specific use cases while reducing the barriers to entry.

Governance and Quality Assurance for Small Data

The usefulness of a small dataset depends entirely on how well it is governed. With fewer records to work with, each one carries more weight, which means accuracy, security, and trust are non-negotiable.

The first step is creating a proper data catalogue. Capturing details like source, format, and update frequency makes it easier to check quality and track how the data is being used. It also reduces duplication across teams and helps ensure that everyone is working from the same trusted foundation.

Strong bias detection is equally important. A skew in a small dataset has a much bigger impact than in a larger one, and it can easily distort predictions. Running fairness checks and correcting imbalances is essential if the data is going to support responsible decision-making.

Finally, privacy and security need to be built in from the start. Smaller datasets often contain high-value or sensitive records, making secure data handling and compliance with regulations critical. Good governance is what turns small data from a potential risk into a reliable source of intelligence.

Real-World Use Cases for Small Data

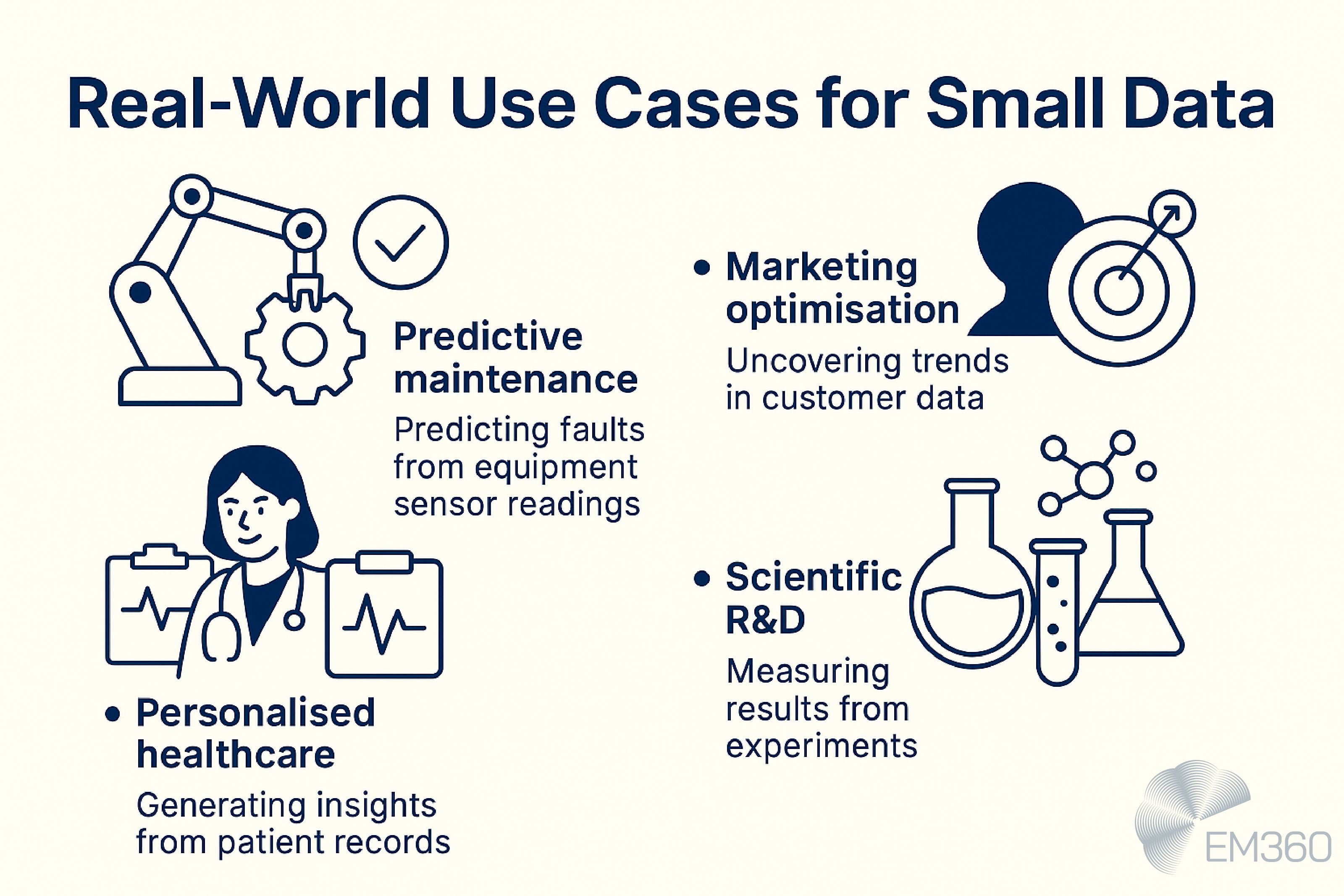

The strength of small data is most obvious when it is applied to targeted, high-value problems. Across industries, smaller datasets are already shaping critical decisions and driving measurable outcomes.

Predictive maintenance in manufacturing

Manufacturers are using IoT data from equipment sensors to predict faults before they occur. Even a relatively small subset of sensor readings can reveal patterns that indicate wear, vibration, or temperature changes. By acting on this information early, companies can reduce downtime and extend the life of their machinery.

Personalised healthcare insights

In healthcare, a small dataset of carefully validated patient records can be more useful than a massive but inconsistent one. With fewer, high-quality samples, models can generate accurate insights into treatment effectiveness or early diagnosis. This kind of personalised healthcare relies on precision rather than scale.

Marketing optimisation

Marketing teams often get more value from micro-segmentation than from analysing entire customer populations. A smaller dataset of specific customer groups can uncover buying behaviours and preferences that would otherwise be hidden in the noise. The result is more accurate targeting and a better return on campaign spend.

Scientific R&D

In areas like materials science or molecular research, experiments may only generate a handful of results each year. Yet these materials science datasets carry enormous value. By applying small data techniques, researchers can accelerate discovery, test new hypotheses, and make reliable predictions even when samples are scarce.

Common Pitfalls and How To Avoid Them

Working with a small dataset can produce strong results, but it also creates space for mistakes that can undermine trust in the outcome. Three pitfalls in particular are worth watching for.

One is the tendency to overgeneralise from too few records. A model trained on limited samples may look convincing in testing, but it is at higher risk of overfitting. Without proper validation, the predictions it produces can collapse when applied to live data. The fix is to test thoroughly and ensure models are stress-tested against as many realistic scenarios as possible.

Another is leaving out domain expertise. Even the best technical methods can misfire if the dataset is not interpreted within the context of the business or sector it comes from. Engaging subject matter experts helps ensure that insights are grounded and that any anomalies are correctly understood.

Finally, correlation and causation are often confused. With fewer data points, patterns can appear that look meaningful but are not actually driving the outcome. Distinguishing correlation from causation is essential to avoid costly missteps and poor decisions.

FAQs on Small Data for Enterprise Leaders

What counts as a small dataset?

A dataset is considered small when it can be reviewed and processed without large-scale infrastructure, yet still provides enough information to generate meaningful insights.

Which AI models work best with small data?

Techniques such as transfer learning, few-shot learning, and Bayesian methods are often the most effective, as they can adapt quickly with limited samples.

Can deep learning work with very small datasets?

Yes, but only with careful use of augmentation, regularisation, and pre-trained models. Without these safeguards, deep learning is more likely to overfit.

How do I reduce overfitting with a small dataset?

Cross-validation, bootstrapping, and regularisation are reliable ways to test robustness and improve model stability.

Where can I find clean small datasets?

Trusted sources include the UCI Machine Learning Repository, Kaggle datasets, and public data catalogues such as data.gov, which all provide well-documented, accessible datasets.

Final Thoughts: Small Data Delivers Big Strategic Value

Handled with the right techniques, small data is not a limitation but an opportunity. It gives enterprises the ability to move quickly, target precisely, and maintain high standards of quality without the overhead of managing massive datasets.

The key is to approach it with the same rigour normally reserved for big data projects. That means using proven modelling methods, building in strong governance, and applying domain expertise to keep insights relevant and accurate.

For data and AI leaders, the real advantage lies in recognising that small and big data are not opposing forces. They are complementary strategies. Knowing when to scale up and when to narrow the focus is what sets the most effective organisations apart.

Small data, when managed well, becomes a reliable source of actionable intelligence and a competitive differentiator in today’s enterprise landscape. To explore more strategies shaping the future of enterprise data and AI, visit EM360Tech for insights from industry experts and practitioners.
