Enterprise quantum computing is no longer an abstract promise of the distant future. Regulators have set firm deadlines, with the European Union requiring organisations to begin their transition to post-quantum cryptography by 2026 and complete it across critical infrastructure by 2030.

In the United States, NIST has finalised its first suite of post-quantum standards, forcing boards and CISOs to act. At the same time, enterprises are already experimenting with hybrid applications: pharmaceutical leaders have used quantum algorithms to model RNA structures, while telecoms are running optimisation pilots through cloud-accessible platforms.

The business case is becoming just as compelling as the technical one. Billions are flowing into quantum research and development, with private investment climbing sharply and governments funding large-scale national initiatives. For CIOs and CTOs, this signals a turning point.

The timeline to prepare is tightening, and the competitive risk of waiting is rising. Enterprises that treat quantum adoption as a strategic horizon issue will be better positioned to capture quantum advantage while ensuring their organisations are protected against cryptographic threats.

The State Of Quantum Computing In 2025

Quantum computing has moved beyond proof-of-concept experiments and into a phase where progress is measured by practical readiness. Enterprises are no longer asking what quantum is, but when fault-tolerant systems will be available and how current Noisy Intermediate-Scale Quantum (NISQ) devices can be applied today.

The focus has shifted from raw qubit counts to the development of logical qubits, breakthroughs in error correction, and credible roadmaps toward large-scale, fault-tolerant quantum computing.

This means that the technology is not yet ready for production use in most businesses, but there are clear signs of progress and real potential in hybrid quantum-classical approaches.

Hardware progress

In the race to scale qubits, vendors are looking into different options. Superconducting qubits are still the most advanced method right now. For example, Google's Willow processor shows below-threshold error correction, which suggests that logical qubits will be possible in the future.

IonQ and Quantinuum are making progress with trapped-ion systems, which are slower but have high fidelity. Photonic qubits are also becoming more popular as a way to scale up. Microsoft has shown progress in topological qubits with its Majorana prototype, with the current focus on improving error correction.

AWS is also researching cat qubits as another hardware strategy. Together, these efforts show an industry working toward stability and scalability, even if each pathway comes with trade-offs.

Software and algorithms

Hardware is only part of the story. Advances in algorithms are making NISQ systems more relevant for enterprise pilots. Variational quantum algorithms are already being tested in pharmaceutical and materials science use cases, offering incremental improvements when combined with classical systems.
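
To make the hybrid pattern concrete, here is a minimal sketch of a variational loop in which a classical optimiser tunes one parameter of a quantum circuit. It is an illustration under stated assumptions, not a vendor implementation: the single-qubit circuit RY(theta)|0> is simulated exactly with the standard library, and the function names, learning rate, and step count are all hypothetical choices.

```python
import math

def expectation_z(theta: float) -> float:
    """Expectation of Pauli-Z for the state RY(theta)|0>, which equals cos(theta).

    On real hardware this value would be estimated from repeated measurements;
    here it is computed exactly so the sketch runs without a quantum SDK.
    """
    return math.cos(theta)

def parameter_shift_gradient(theta: float) -> float:
    """Gradient of the expectation using the parameter-shift rule.

    Two extra circuit evaluations at theta +/- pi/2 give the exact derivative.
    """
    shift = math.pi / 2
    return 0.5 * (expectation_z(theta + shift) - expectation_z(theta - shift))

def run_hybrid_loop(theta: float = 0.4, learning_rate: float = 0.2, steps: int = 200) -> tuple:
    """Classical gradient descent steering a (simulated) quantum evaluation."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, expectation_z(theta)

if __name__ == "__main__":
    theta, energy = run_hybrid_loop()
    print(f"theta={theta:.4f}, energy={energy:.4f}")
```

The quantum device only ever evaluates the circuit, while the parameter updates stay on classical infrastructure. That split is what makes these workloads a fit for cloud-accessed NISQ hardware.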

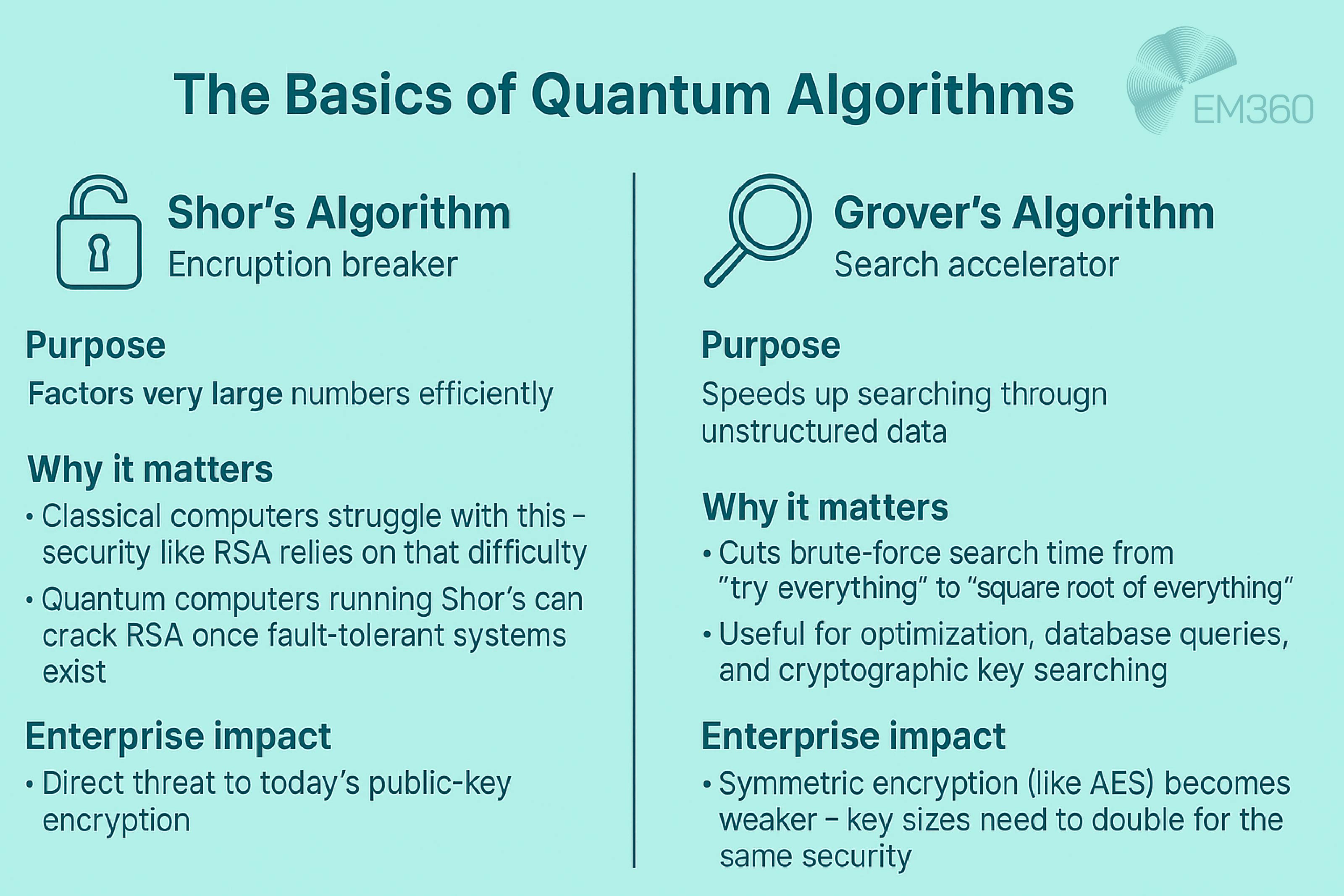

Established algorithms such as Shor’s algorithm and Grover’s algorithm are critical for illustrating both the potential and the risks of quantum capability once true fault tolerance is achieved.

More and more enterprises are using quantum cloud services like AWS Braket, Microsoft Azure Quantum, and IBM Quantum to connect to this ecosystem, explore hybrid workflows, build internal expertise, and prepare for future integration, all without needing to invest in specialised hardware right now.

Enterprise Use Cases Emerging Today

Quantum computing in business is still new, but pilot programs are showing where business applications are starting to take shape. The goal is not to replace current systems, but to try out new ones that use both quantum and classical methods.

These experiments let businesses look into possible benefits, build their own knowledge, and get ready for wider use in the industry.

Pharmaceuticals and healthcare

Drug discovery is one of the most promising areas for quantum simulation. IBM and Moderna recently used a quantum processor to model RNA structures that had been out of reach for classical systems. This type of molecular modelling could shorten the path from research to treatment by accelerating how new compounds are analysed.

For pharmaceutical research teams, the value lies in testing whether quantum methods can cut time and cost in the most computationally intensive parts of the pipeline.

Financial services

Financial firms are turning to quantum computing for risk analysis and optimisation problems. Portfolio construction, fraud detection, and market forecasting all rely on calculations that scale poorly on classical machines. Early pilots show that quantum optimisation could improve accuracy and efficiency in these areas.

For banks and investment houses, the goal is not full deployment today but preparing models and data strategies that can take advantage of quantum hardware as it matures.

Supply chain and logistics

Telecom operators have begun using hybrid quantum-classical systems to optimise backhaul networks. These pilots highlight how supply chain optimisation and logistics planning can benefit from quantum approaches to route planning and resource allocation.

For enterprises that manage complex distribution networks, even small efficiency gains can translate into measurable cost savings and stronger resilience.

Cybersecurity and cryptography

Quantum adoption also brings a quantum threat. Algorithms such as Shor’s make it possible to break RSA encryption once fault-tolerant systems arrive. This is why planning for Y2Q, the point at which quantum computers can break today’s public-key cryptography, is now tied to post-quantum cryptography migration.

For CISOs and IT leaders, enterprise strategy must include both exploring new applications and preparing for the risks. Securing data through PQC is a priority even before the benefits of quantum computing are fully realised.

Challenges To Enterprise Readiness

Quantum computing is advancing quickly, but enterprises need to be realistic about the barriers that remain. Current progress shows promise, yet significant hurdles must be addressed before large-scale adoption is feasible.

Scalability and error correction

Most of today’s systems are limited by the challenge of scaling qubits while maintaining accuracy. Noisy Intermediate-Scale Quantum devices suffer from instability and high error rates, which restrict the complexity of problems they can solve.

The breakthroughs in error correction, such as the ones demonstrated by Google’s Willow chip and IBM’s quantum low-density parity check (qLDPC) codes, are important markers of the progress in quantum computing technology.

But fault-tolerant quantum computing is still several years away, which means that quantum projects will have to be framed as pilots or proofs of concept rather than production-ready solutions for the time being.

Talent and skills gap

Even as hardware and algorithms improve, enterprises face a severe shortage of quantum talent. Deloitte projects that up to 250,000 specialised jobs will be needed globally by 2030 to support the growth of the ecosystem.

Quantum physics, computer science, and cybersecurity expertise must converge in ways that most organisations are not yet structured to support. This talent shortage creates dependency on vendors and academic partnerships.

For enterprise leaders, the immediate priority is to identify internal champions, fund training programmes, and build relationships with universities and specialist providers.

Cost and access

The cost of building or operating quantum hardware places it far beyond the reach of individual enterprises. On-premises investment requires high capital expenditure and specialised facilities, making it impractical for most organisations.

For now, the dominant model is cloud-based access through providers like IBM, Microsoft, and AWS.

This makes it easier for organisations to experiment with quantum computing, but it can also raise concerns about data governance and vendor lock-in. Enterprise quantum adoption therefore has to be included in the budgets of broader innovation portfolios rather than funded as standalone projects or infrastructure.

Integration with existing infrastructure

Quantum systems won’t replace classical computing, at least not anytime soon. Instead, they will complement the methods in use at the moment. The challenge for the majority of enterprises will be figuring out how to integrate quantum workflows into their existing IT environments, data pipelines, and compliance frameworks.

These hybrid quantum-classical methods will need to be carefully planned so that they add value without breaking mission-critical systems.

CIOs and IT leaders need to plan for staged integration, with quantum pilots running in controlled environments and then slowly moving into production workflows as best practices and standards are hammered into shape.

The Security Imperative – Preparing For Y2Q

The arrival of fault-tolerant quantum computers will break the cryptographic systems that secure the internet today. This event, often referred to as Y2Q, has shifted from a theoretical concern to an active planning priority.

Enterprises cannot wait for the technology to mature before acting, because data being harvested now could be decrypted later. For CISOs and CIOs, the message is clear: post-quantum cryptography (PQC) migration must start immediately.
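
The timing argument behind "harvest now, decrypt later" is often summarised by an inequality attributed to Michele Mosca: data is at risk if the years it must stay confidential plus the years a migration takes exceed the years until a capable quantum computer exists. The sketch below encodes that check. The function name and every figure in the example are hypothetical placeholders, not forecasts.

```python
def exposed_years(shelf_life_years: float,
                  migration_years: float,
                  years_until_threat: float) -> float:
    """Years of exposure under Mosca's inequality.

    A result greater than zero means data encrypted today would still need
    protection after a cryptographically relevant quantum computer arrives.
    """
    return max(0.0, shelf_life_years + migration_years - years_until_threat)

if __name__ == "__main__":
    # Hypothetical inputs: records kept confidential for 10 years,
    # a 4-year migration programme, and a threat assumed 8 years away.
    print(exposed_years(10, 4, 8))
```

Because the first two inputs are largely under an organisation's control and the third is not, the practical lever is shortening the migration.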

Standards and regulations

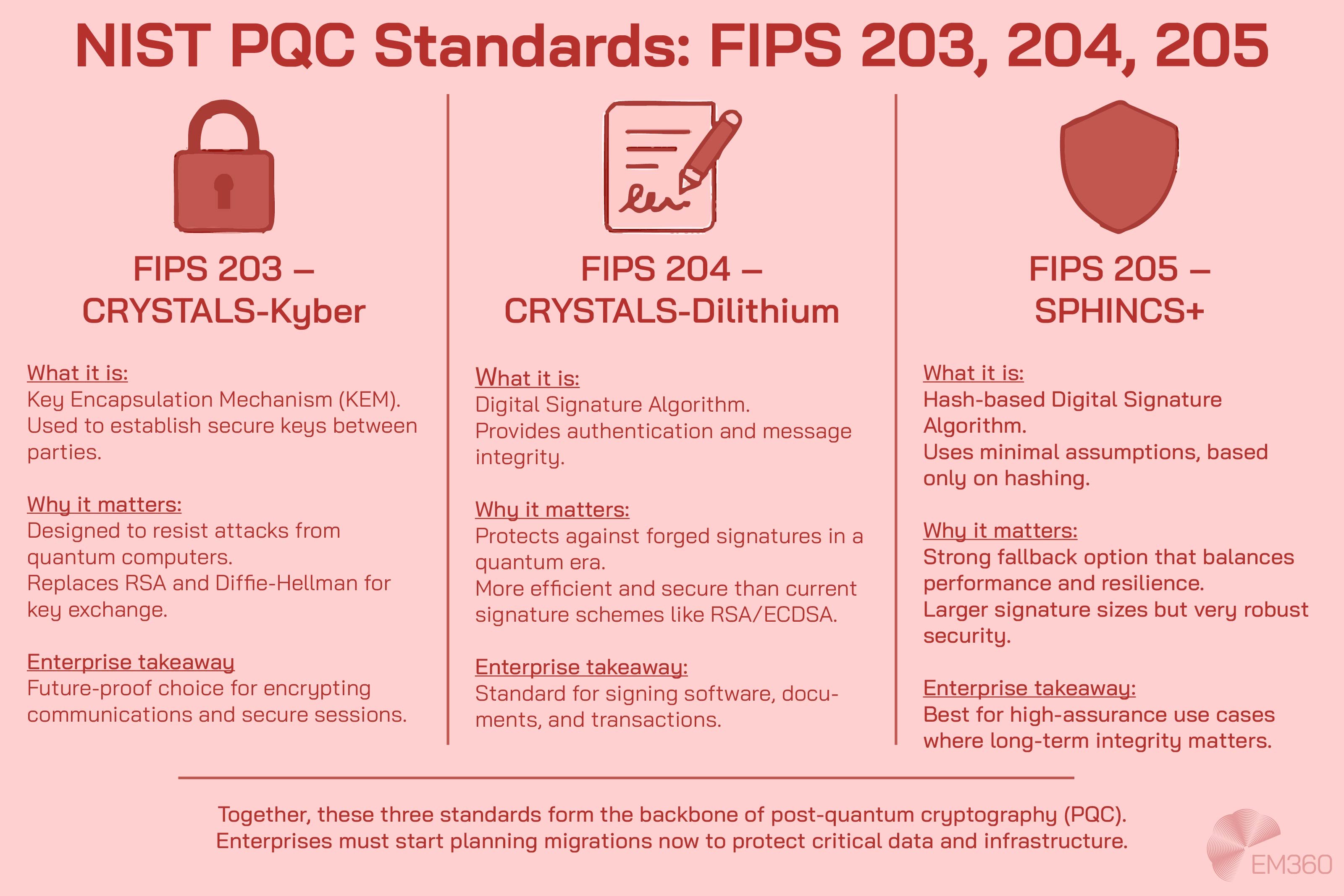

Governments have already set the timelines. In the United States, NIST has finalised its first PQC standards with FIPS 203, 204, and 205, covering key encapsulation and digital signatures, and selected HQC for further standardisation.

The European Union has set a 2026 deadline for organisations to begin transitioning to PQC and a 2030 deadline for completion across critical infrastructure. In the United Kingdom, the National Cyber Security Centre (NCSC) is advising organisations to act now and align with NIST and EU guidance.

Together, these standards create a clear regulatory baseline that enterprises must meet.

Migration priorities for enterprises

The first step is to inventory all cryptographic assets. Many organisations don’t have a full map of where cryptography is used across applications, devices, and supply chains. But without this visibility, migration will be fragmented and risky.
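
As a rough illustration of what such an inventory enables, the sketch below flags entries that rely on public-key algorithms exposed to Shor's algorithm. The system names, record fields, and helper functions are hypothetical, and a real inventory would be populated by scanning tools rather than written by hand.

```python
from dataclasses import dataclass

# Public-key algorithms whose security rests on factoring or discrete
# logarithms, the problems Shor's algorithm targets.
QUANTUM_VULNERABLE = {"RSA", "DH", "DSA", "ECDH", "ECDSA"}

@dataclass(frozen=True)
class CryptoAsset:
    system: str      # hypothetical system name
    purpose: str     # what the cryptography is used for
    algorithm: str   # algorithm family recorded by the inventory

def needs_migration(asset: CryptoAsset) -> bool:
    """True when the asset depends on a quantum-vulnerable public-key scheme."""
    return asset.algorithm.upper() in QUANTUM_VULNERABLE

def migration_backlog(assets):
    """Return the vulnerable assets, sorted by system name for reporting."""
    return sorted((a for a in assets if needs_migration(a)), key=lambda a: a.system)

# Illustrative entries only.
INVENTORY = [
    CryptoAsset("payments-api", "TLS key exchange", "ECDH"),
    CryptoAsset("backup-vault", "data at rest", "AES-256"),
    CryptoAsset("firmware-signing", "code signing", "RSA"),
    CryptoAsset("partner-gateway", "key encapsulation", "ML-KEM"),
]

if __name__ == "__main__":
    for asset in migration_backlog(INVENTORY):
        print(f"{asset.system}: {asset.algorithm} used for {asset.purpose}")
```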

Once the relevant assets are identified, the next step is building crypto-agility directly into your systems. This is so that algorithms can be swapped without any major re-engineering being needed.
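
One way to picture crypto-agility is a registry that application code calls by name, so the algorithm is a configuration choice rather than a hard-coded dependency. The sketch below is a simplified assumption of how that might look: the HMAC variants from the Python standard library stand in for real signature or key-encapsulation schemes, and the registry, policy constant, and function names are hypothetical.

```python
import hashlib
import hmac
from typing import Callable, Dict, Optional, Tuple

Protector = Callable[[bytes, bytes], bytes]

# Interchangeable algorithms behind one interface. These stdlib HMACs are
# stand-ins for illustration; a real registry would hold vetted schemes.
_REGISTRY: Dict[str, Protector] = {
    "hmac-sha256": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
    "hmac-sha512": lambda key, msg: hmac.new(key, msg, hashlib.sha512).digest(),
}

# Hypothetical policy setting; in practice this would come from configuration.
ACTIVE_ALGORITHM = "hmac-sha256"

def protect(key: bytes, message: bytes, algorithm: Optional[str] = None) -> Tuple[str, bytes]:
    """Tag a message and return the algorithm name alongside the tag."""
    name = algorithm or ACTIVE_ALGORITHM
    return name, _REGISTRY[name](key, message)

def verify(key: bytes, message: bytes, name: str, tag: bytes) -> bool:
    """Check a tag using whichever algorithm the record says produced it."""
    expected = _REGISTRY[name](key, message)
    return hmac.compare_digest(expected, tag)

if __name__ == "__main__":
    name, tag = protect(b"demo-key", b"invoice-42")
    print(name, verify(b"demo-key", b"invoice-42", name, tag))
```

Storing the algorithm name with each output lets older records stay verifiable after the active algorithm changes, which is what keeps a later swap from turning into a re-engineering project.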

Enterprises should also run pilot projects with hybrid PQC to test the new standards alongside their existing encryption, so that it’s easier to roll out the updates at scale. For CIOs, the top priority is making PQC part of broader security and compliance roadmaps rather than treating it as an isolated project.
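
The idea behind a hybrid scheme can be sketched as deriving one session key from two shared secrets, one classical and one post-quantum, so the key stays safe as long as either exchange holds. The example below is only an illustration of that principle. The random bytes stand in for the outputs of a real classical key exchange and a real PQC key encapsulation, the `hybrid-pilot-v1` label is hypothetical, and production protocols define their own combiners.

```python
import hashlib
import hmac
import secrets

def hkdf_sha256(input_key_material: bytes, info: bytes, salt: bytes = b"") -> bytes:
    """Single-block HKDF (RFC 5869) with SHA-256, producing a 32-byte key."""
    if not salt:
        # RFC 5869 default: a string of zero bytes as long as the hash output.
        salt = bytes(hashlib.sha256().digest_size)
    pseudo_random_key = hmac.new(salt, input_key_material, hashlib.sha256).digest()
    return hmac.new(pseudo_random_key, info + b"\x01", hashlib.sha256).digest()

def derive_hybrid_key(classical_secret: bytes, pqc_secret: bytes) -> bytes:
    """Combine both shared secrets so the result depends on each of them."""
    return hkdf_sha256(classical_secret + pqc_secret, info=b"hybrid-pilot-v1")

if __name__ == "__main__":
    # Stand-ins only: real secrets would come from the two key exchanges.
    classical_secret = secrets.token_bytes(32)
    pqc_secret = secrets.token_bytes(32)
    print(derive_hybrid_key(classical_secret, pqc_secret).hex())
```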

Internet adoption trends

The change is already in progress at the infrastructure level. Cloudflare and Google Chrome telemetry shows that around 38 per cent of HTTPS traffic is now PQC-enabled, reflecting rapid adoption of hybrid protocols.

This trend demonstrates that vendors and service providers are moving ahead of many enterprises. CISOs should see this as a signal that PQC migration is not optional but essential, and that delay increases both operational and regulatory risk.

Enterprise Strategy For The Quantum Decade

Quantum adoption is not about rushing into production systems. It is about laying the groundwork for when fault-tolerant computing arrives and ensuring that security and compliance are not left behind. CIOs and CTOs should focus on practical steps that reduce risk while building enterprise capability.

Invest in pilots, not production

Quantum hardware is not ready for large-scale deployment, which means that enterprises should limit their investments to controlled pilot projects. Running pilots through cloud-accessible platforms lets teams test hybrid quantum adoption without committing major budgets to hardware.

The value is in building institutional knowledge and benchmarking what quantum can do for your specific business problems.

Build partnerships with vendors and academia

No one company can handle the complexity of quantum computing by itself, which means that enterprises should start working with universities, research centres, and tech vendors now.

This way they’ll have easy and affordable access to the latest developments in both the hardware and algorithms behind quantum computing. By building these partnerships now, organisations will be in a stronger position to scale adoption later.

Develop a quantum-ready workforce

One of the biggest problems that businesses face when they want to adopt new technologies is a lack of specialised skills. CIOs should start training their IT and data science teams on quantum concepts and also hire carefully in important areas.

Developing a quantum-ready workforce ensures that knowledge is distributed and that the business is not entirely dependent on vendors. Even a small amount of in-house expertise can make pilot projects more effective and help shape long-term strategies.

Link quantum planning with security and compliance

Quantum projects shouldn't be developed in a vacuum. Any quantum strategy for a business should instead be directly linked to the security and compliance frameworks that are already in place. This way, quantum planning and post-quantum cryptography work together so that innovation goals don’t create security risks.

This alignment makes it easier for boards to approve funding because they see that the investment both mitigates risk and is already future-proofed.

Future Outlook – 2029 And Beyond

By the end of this decade, the milestones for quantum computing start to look clearer. IBM has set 2029 as the target year for delivering a fault-tolerant quantum computing system. Its roadmap points to large-scale error-corrected machines that can run complex algorithms reliably.

Microsoft is pursuing its Majorana-based topological qubits, which are designed to be more stable and scalable once proven at larger volumes.

On the funding side, both the United States and the European Union have committed billions into long-term research programmes. These investments are not only about hardware, but also about building the supply chains, software ecosystems, and workforce training that will be needed to sustain adoption. For enterprises, this signals that governments are betting heavily on quantum as a strategic capability.

The reality is that most businesses will not be running production quantum systems by 2029. Instead, the next five to ten years should be seen as the horizon for building the enterprise adoption roadmap. That means using the time to strengthen pilots, invest in crypto-agility, and grow internal expertise while monitoring vendor progress.

The point where enterprises start to see consistent quantum advantage is still in the future, but the direction of travel is set. CIOs and CTOs should plan on quantum becoming a mainstream part of their infrastructure in the early to mid-2030s, with the organisations that prepare now standing to benefit first.

Final Thoughts: Security First, Strategy Always

Enterprise quantum computing is moving from concept to commitment. The deadlines for preparing for Y2Q are already set, and the first pilots are proving that value can be found through hybrid approaches. But the path forward is not about rushing. It is about balancing urgency with realism.

Enterprises need to make sure that security comes first by aligning with post-quantum cryptography standards. Pilots should come next, allowing teams to test hybrid workflows and build institutional knowledge. And strategy must always stay aligned with vendor roadmaps and regulatory timelines.

The organisations that treat quantum adoption as part of their long-term enterprise planning will be the ones that capture the advantage when the technology matures. Those that wait risk falling behind both in security and in competitiveness.

To stay ahead of the shifts that quantum will bring, CIOs and CISOs should keep watching developments closely and continue shaping their roadmaps as standards and vendor milestones evolve. For regular updates and expert insights on how this landscape is changing, keep following EM360Tech.
