Artificial intelligence (AI) now touches every part of how money moves. Banks, marketplaces and payment providers deploy machine‑learning models to scan enormous volumes of data for signs of fraud; identity checks have been automated with facial recognition and document readers; and regulatory reporting relies increasingly on predictive analytics.

At the same time, AI has given criminals new tools. Generative models craft flawless phishing emails, voice‑cloning services mimic executives to approve fraudulent transfers, and synthetic identities built with AI slip through onboarding checks. The result is a paradox: the same technology both shields and threatens our payment systems.

Visa’s AI‑driven platforms blocked 80 million fraudulent transactions worth around US$40 billion in 2023, yet annual global cybercrime losses are projected to exceed US$10.5 trillion by 2025.

G2A.COM CEO Bartosz Skwarczek summed up the dilemma succinctly when he said that AI is a double‑edged sword; G2A.COM and its partners use AI to deliver secure services while “malicious actors… also use AI to be smarter with cheating.” Understanding this duality is the first step toward harnessing AI responsibly.

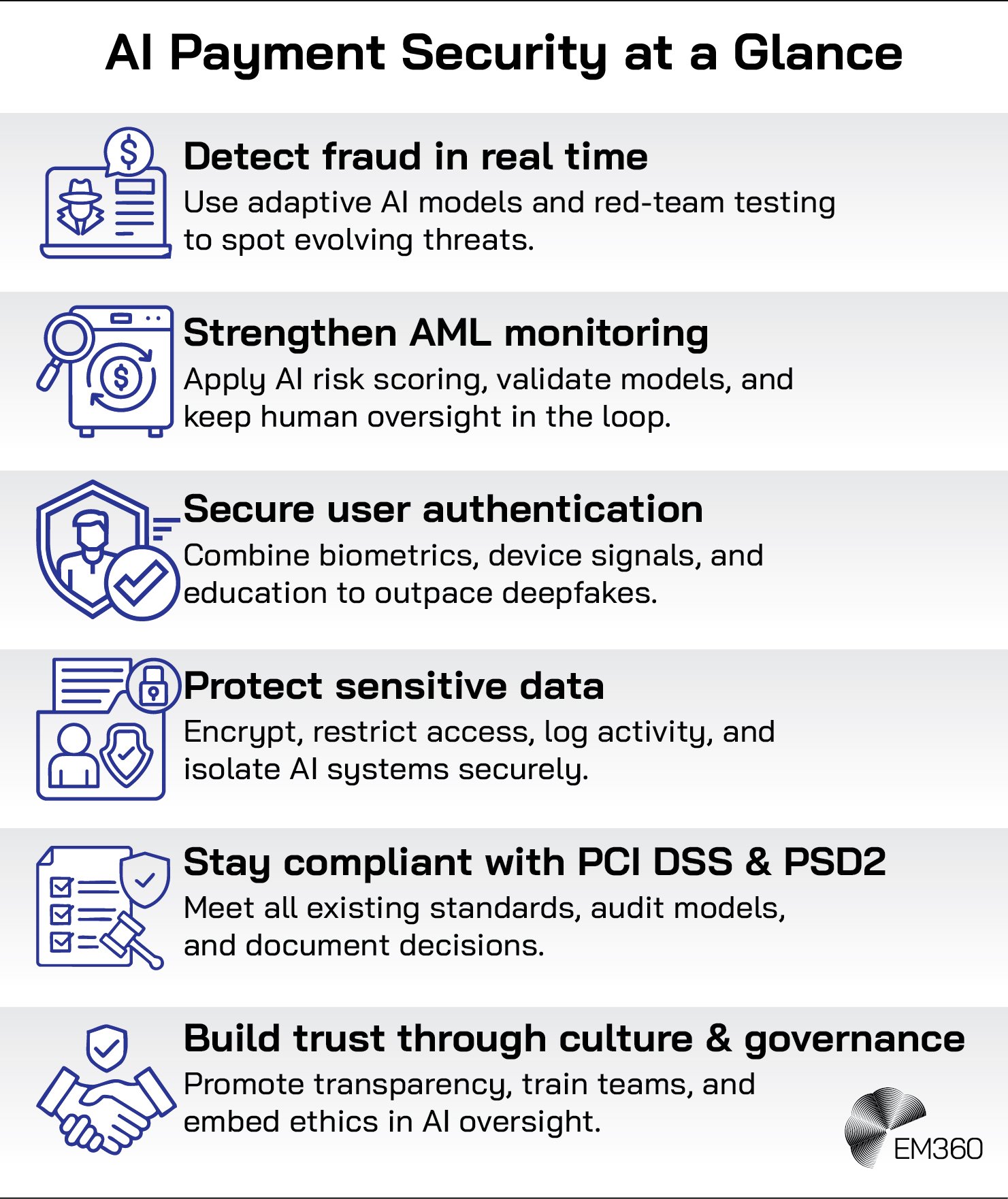

Real-Time AI Fraud Detection and the Deepfake Threat

AI’s ability to process data quickly has transformed fraud prevention. Sophisticated machine‑learning engines can analyse patterns across billions of card swipes, e‑commerce purchases and wire transfers to flag anomalies and suspicious activities faster than any team of human analysts.

The PCI Security Standards Council notes that payment providers rely on AI to spot anomalies in enormous global datasets. Systems like Mastercard’s Decision Intelligence examine thousands of variables per transaction, helping to reduce false declines while also catching more of the transactions that really are fraudulent.

Surveys show that around three‑quarters of financial institutions now use AI for fraud and financial crime detection and that most report faster response times thanks to automation.

Despite these gains, fraudsters are keeping pace. Generative AI has lowered the bar for phishing and social‑engineering attacks. Sift’s 2025 Digital Trust Index found that reports of AI‑enabled scams and phishing increased by more than 450 per cent over the previous year.

Deepfake technology is especially dangerous; in February 2024, criminals used a deepfake video call to impersonate a company’s CFO and trick an employee into transferring HK$200 million (roughly US$25 million). Europol warns that organised crime rings are now using AI‑generated fingerprints, faces and voices to bypass biometric checks.

Synthetic identities, which blend real and stolen data, are now the fastest‑rising threat according to the Federal Reserve, and more than half of banks and fintechs have already run into them during onboarding. That reality demands a layered defence. Swap out static rules for adaptive models that learn from fresh patterns, and complement those with regular red‑team drills and model checks to expose blind spots.
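To make the contrast between static rules and adaptive models concrete, here is a minimal Python sketch. It assumes a single feature (the transaction amount) and keeps an exponentially weighted mean and variance per customer, so the baseline drifts as that customer's behaviour changes. The class name, the smoothing factor, the warm-up length and the three-standard-deviation cut-off are illustrative assumptions, not any vendor's method; a production model would score many more variables.

```python
import math
from dataclasses import dataclass


@dataclass
class AdaptiveBaseline:
    """Per-customer running baseline of transaction amounts (illustrative sketch)."""
    alpha: float = 0.1        # weight of the newest observation (assumed value)
    z_threshold: float = 3.0  # standard deviations treated as anomalous (assumed)
    warmup: int = 5           # observations required before scoring starts (assumed)
    mean: float = 0.0
    var: float = 0.0
    count: int = 0

    def score(self, amount: float) -> float:
        """Z-score of the amount against the current baseline (0.0 during warm-up)."""
        if self.count < self.warmup:
            return 0.0
        std = math.sqrt(self.var) or 1.0  # avoid dividing by zero on a perfectly flat history
        return abs(amount - self.mean) / std

    def update(self, amount: float) -> None:
        """Fold one observation into the exponentially weighted mean and variance."""
        if self.count == 0:
            self.mean = amount
        else:
            diff = amount - self.mean
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1 - self.alpha) * (self.var + diff * incr)
        self.count += 1

    def check(self, amount: float) -> bool:
        """Flag anomalous amounts; learn only from transactions that were not flagged."""
        flagged = self.score(amount) > self.z_threshold
        if not flagged:
            self.update(amount)
        return flagged


def static_rule(amount: float, limit: float = 1000.0) -> bool:
    """The kind of fixed rule an adaptive model replaces: one limit for every customer."""
    return amount > limit
```

For a customer who usually spends around 20, a payment of 400 is flagged by the adaptive baseline once the warm-up history exists, while the static 1,000 limit lets it through. Learning only from unflagged transactions is a deliberate choice: it makes it harder for an attacker to gradually teach the baseline that large payments are normal, at the cost of adapting more slowly to genuine changes in behaviour.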

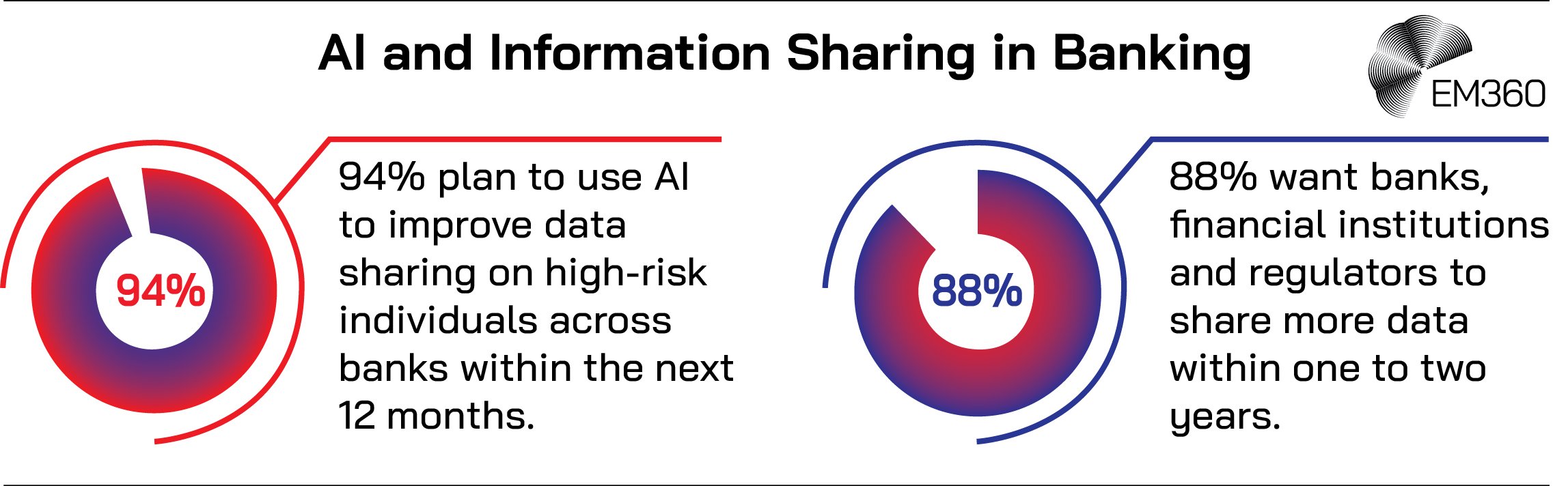

Think of your fraud controls as a muscle: the more you test and strengthen it, the better it performs against sophisticated attackers. Human factors matter too: G2A.COM trains its staff monthly to recognise phishing and social engineering. Information sharing is vital; 88 per cent of fraud leaders in BioCatch’s 2024 survey believe stronger collaboration between institutions and regulators is required.

In short, fighting AI‑driven fraud demands a mix of smarter algorithms, proactive testing and well‑trained people.

AI‑Powered Transaction Monitoring and AML Risk Scoring

Detecting fraud is one side of the coin; tracing and preventing money laundering is another. AI now plays a central role in transaction monitoring and AML compliance, delivering significant performance gains.

The Financial Action Task Force (FATF) notes that AI can identify up to 40 per cent more suspicious activities while reducing compliance costs by 30 per cent. Machine‑learning models evaluate each payment’s risk in real time, allowing banks to intervene instantly when patterns suggest smurfing, layering or sanctions evasion.
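Smurfing (also called structuring) is one of the patterns a real-time monitor looks for: cash split into several deposits that each stay just under a reporting limit. The sketch below is a deliberately simple rule-based stand-in for a machine-learning model. It assumes a hypothetical 10,000 reporting limit, a seven-day window and a three-deposit trigger, all illustrative values, and it escalates an account as soon as enough just-below-limit deposits accumulate.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

# All parameters are illustrative assumptions, not regulatory values.
REPORTING_THRESHOLD = 10_000   # hypothetical cash-reporting limit
NEAR_FRACTION = 0.8            # "just below" means at least 80% of the limit
WINDOW = timedelta(days=7)     # look-back window per account
MIN_DEPOSITS = 3               # near-limit deposits in the window that trigger an alert


class StructuringMonitor:
    """Flags accounts that split cash into several deposits just under a reporting limit."""

    def __init__(self) -> None:
        # account id -> deque of (timestamp, amount) for recent near-limit deposits
        self._recent = defaultdict(deque)

    def observe(self, account: str, amount: float, ts: datetime) -> bool:
        """Score one deposit as it arrives (assumed in time order); True means escalate."""
        window = self._recent[account]
        # Drop deposits that have aged out of the look-back window.
        while window and ts - window[0][0] > WINDOW:
            window.popleft()
        if NEAR_FRACTION * REPORTING_THRESHOLD <= amount < REPORTING_THRESHOLD:
            window.append((ts, amount))
        return len(window) >= MIN_DEPOSITS
```

In practice an alert like this would feed the human review step rather than trigger any action on its own.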

This real‑time risk scoring underpins Europe’s transaction risk analysis (TRA) exemption in PSD2, which allows low‑risk transactions to skip strong customer authentication if providers keep fraud rates below strict thresholds (0.13 per cent for transactions up to €100).
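Once the fraud rate is known, the TRA test itself is simple arithmetic. The following is a minimal sketch, assuming the reference rates for remote electronic card payments given in the Annex of the PSD2 regulatory technical standards (Commission Delegated Regulation (EU) 2018/389): 0.13 per cent for payments up to €100, 0.06 per cent up to €250 and 0.01 per cent up to €500. The fraud rate is value-based (fraudulent payment value divided by total payment value). The function names and the `low_risk` flag, which stands in for the output of a real-time risk model, are hypothetical, and the figures should be checked against the current legal text before anyone relies on them.

```python
from decimal import Decimal

# (exemption threshold value in EUR, reference fraud rate) for remote card payments,
# as summarised from the RTS Annex; verify against the current text before use.
CARD_REFERENCE_RATES = [
    (Decimal("100"), Decimal("0.0013")),  # 0.13 %
    (Decimal("250"), Decimal("0.0006")),  # 0.06 %
    (Decimal("500"), Decimal("0.0001")),  # 0.01 %
]


def fraud_rate(fraud_value: Decimal, total_value: Decimal) -> Decimal:
    """Value-based fraud rate: fraudulent remote payment value over all remote payment value."""
    return fraud_value / total_value if total_value else Decimal("0")


def tra_exemption_allowed(amount: Decimal, provider_rate: Decimal, low_risk: bool) -> bool:
    """True if a payment of `amount` euros may skip SCA under TRA (illustrative sketch)."""
    if not low_risk:
        return False  # the real-time risk model must still classify the payment as low risk
    # Allowed if some threshold covers the amount and the provider's rate does not exceed
    # the reference rate attached to that threshold.
    return any(amount <= etv and provider_rate <= ref for etv, ref in CARD_REFERENCE_RATES)
```

A provider with a 0.12 per cent fraud rate can exempt an €80 payment but not a €200 one, because the €250 threshold demands a rate of 0.06 per cent or lower. Using `Decimal` avoids floating-point rounding at exactly the boundaries regulators care about.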

Regulators want financial institutions to harness AI responsibly. When the U.S. Treasury and other federal agencies urged banks to innovate with AI for anti‑money‑laundering in mid‑2025, they paired that call with strict expectations around accountability and transparency.

The European Banking Authority took a similar stance, insisting that transaction‑risk models remain open to audit. Meanwhile, criminals are already using AI to hide illicit flows: smart mixers reroute crypto transactions through labyrinthine paths, and adversarial data tweaks make funds look clean.

This arms race means your models must be tested, retrained, and overseen by people. Compliance officers should always review flagged transactions and own the final decision. It also means you need to know and trust your data.
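One way to encode "the officer owns the final decision" is to make it structurally impossible for the model to close an escalated alert. In this sketch, where all names and the 0.2 cut-off are assumptions, low scores are cleared automatically, everything else lands in a review queue, and an alert can only be resolved by a call that records a named compliance officer.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Alert:
    txn_id: str
    model_score: float               # 0.0 (benign) to 1.0 (suspicious) from some AML model
    decision: Optional[str] = None
    decided_by: Optional[str] = None


@dataclass
class ReviewQueue:
    auto_clear_below: float = 0.2    # assumed cut-off; lower scores are not escalated
    pending: list = field(default_factory=list)

    def route(self, alert: Alert) -> str:
        """The model may clear low scores, but it can never close an escalated alert."""
        if alert.model_score < self.auto_clear_below:
            return "cleared"
        self.pending.append(alert)   # everything else waits for a person
        return "pending_review"

    def decide(self, txn_id: str, officer: str, decision: str) -> Alert:
        """Only a named compliance officer can resolve an escalated alert."""
        if decision not in {"file_report", "dismiss"}:
            raise ValueError(f"unknown decision: {decision}")
        for alert in self.pending:
            if alert.txn_id == txn_id:
                alert.decision, alert.decided_by = decision, officer
                self.pending.remove(alert)
                return alert
        raise KeyError(txn_id)
```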

Combine multiple sources, verify where they come from, and apply digital signatures and provenance tracking, as recommended by the NSA and CISA, so the AI you build rests on solid ground.
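A lightweight form of provenance tracking is a signed manifest: record a hash and the declared source of every training file, sign the manifest, and refuse to train if either has changed. The sketch assumes the datasets are in-memory byte strings and uses HMAC-SHA256 from the Python standard library as a stand-in for a true digital signature; a real pipeline would more likely use asymmetric signatures (for example Ed25519) so that the systems verifying the data never hold the signing key.

```python
import hashlib
import hmac
import json


def build_manifest(datasets: dict[str, bytes], source: dict[str, str]) -> dict:
    """Record a SHA-256 digest and the declared origin of every training input."""
    return {
        name: {"sha256": hashlib.sha256(data).hexdigest(), "source": source[name]}
        for name, data in sorted(datasets.items())
    }


def sign_manifest(manifest: dict, key: bytes) -> str:
    """HMAC-SHA256 over canonical JSON (a stand-in for an asymmetric signature)."""
    payload = json.dumps(manifest, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_before_training(datasets: dict[str, bytes], manifest: dict,
                           signature: str, key: bytes) -> bool:
    """Refuse training data whose manifest signature or file hashes do not match."""
    if not hmac.compare_digest(sign_manifest(manifest, key), signature):
        return False  # the manifest itself was altered, or signed with another key
    if set(datasets) != set(manifest):
        return False  # a file was added or removed since signing
    return all(
        hashlib.sha256(datasets[name]).hexdigest() == entry["sha256"]
        for name, entry in manifest.items()
    )
```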

The Challenge of User Authentication and Verification

AI‑powered verification tools have made user onboarding and authentication smoother and safer. Facial recognition, fingerprint scanning and voice ID are now standard for payments.

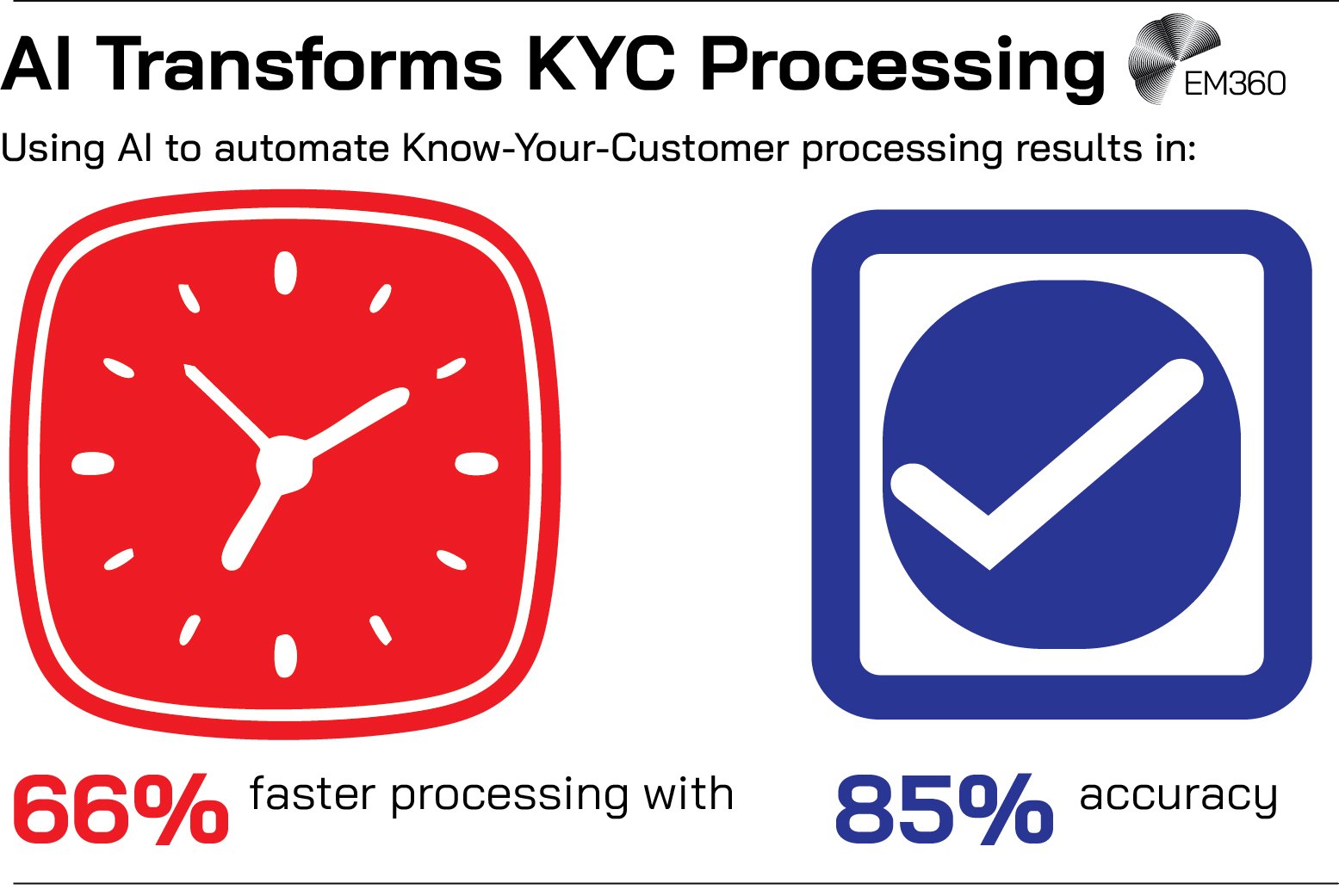

Behavioural biometrics (monitoring how users type, swipe or hold a device) provide a passive layer of security that is hard to spoof; BioCatch emphasises that these patterns are “almost impossible to replicate”. AI also speeds up Know‑Your‑Customer (KYC) checks by automatically reading documents and cross‑matching selfies or live videos.
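Keystroke dynamics offer a simple way to illustrate behavioural biometrics. This toy sketch, which is not BioCatch's or any vendor's method, enrols a user from the timing gaps between key presses on a fixed phrase and accepts a later attempt if its gaps are, on average, within 25 per cent of the enrolled profile. The tolerance and function names are assumptions; real systems combine far more signals and use statistical or learned models.

```python
from statistics import mean


def intervals(timestamps_ms: list[float]) -> list[float]:
    """Time between successive key presses."""
    return [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]


def enrol(samples: list[list[float]]) -> list[float]:
    """Average the inter-key intervals of several enrolment samples of the same phrase."""
    per_sample = [intervals(s) for s in samples]
    return [mean(col) for col in zip(*per_sample)]


def matches_profile(profile: list[float], attempt_ms: list[float],
                    tolerance: float = 0.25) -> bool:
    """Accept if the mean relative deviation from the profile is within `tolerance` (assumed)."""
    observed = intervals(attempt_ms)
    if len(observed) != len(profile):
        return False
    deviation = mean(abs(o - p) / max(p, 1.0) for o, p in zip(observed, profile))
    return deviation <= tolerance
```

A script that "types" at a perfectly regular 10 ms rhythm fails the check even though it enters exactly the right characters, which is the property that makes these signals useful against bots.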

In one case study, automating KYC with AI cut processing times by 66 per cent and achieved 85 per cent accuracy. Yet AI also enables identity fraud. Deepfake generators can create faces and voices that fool facial recognition and voice ID, prompting 91 per cent of financial institutions to reconsider voice‑based authentication.

Synthetic identities slip through onboarding because AI can fabricate realistic documents; an estimated 85–95 per cent of synthetic identities evade detection. Voice‑cloning attacks (“vishing”) have already convinced bank managers to authorise transfers.

Automation can’t replace human judgement, and no single factor is enough on its own. Organisations need multi‑layered authentication that combines biometrics, device trust signals (such as IP address, geolocation and device fingerprinting) and one‑time passcodes. Advanced liveness checks, which look for micro‑expressions or unnatural pixels, help detect deepfakes. AI is one layer of that defence, not the whole of it.
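A layered policy like this can be expressed as a small decision function. The sketch below is an illustration built on assumptions: the signal names, the 0.5 and 0.9 biometric thresholds and the outcome labels are hypothetical. It shows the principle that a biometric which fails liveness is never trusted on its own, and that weak device or location signals trigger a one-time passcode rather than a silent approval.

```python
from dataclasses import dataclass


@dataclass
class LoginSignals:
    biometric_match: float     # 0..1 similarity from a face or voice matcher (assumed scale)
    liveness_passed: bool      # result of a liveness / anti-deepfake check
    known_device: bool         # device fingerprint previously seen for this user
    geo_matches_history: bool  # IP geolocation consistent with the user's usual locations


def required_step(s: LoginSignals) -> str:
    """Return "allow", "require_otp", "manual_review" or "deny" (illustrative policy)."""
    if not s.liveness_passed or s.biometric_match < 0.5:
        return "deny"  # a biometric that fails liveness is never trusted alone
    risk = 0
    risk += 0 if s.known_device else 1
    risk += 0 if s.geo_matches_history else 1
    risk += 0 if s.biometric_match >= 0.9 else 1
    if risk == 0:
        return "allow"
    if risk == 1:
        return "require_otp"  # step up with a one-time passcode
    return "manual_review"    # several weak signals at once: hand over to a person
```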

Behavioural analytics can spot subtle patterns in how users type or move that signal a bot or a deepfake. But education matters just as much. Customers need to know that a bank will never ask for a password or a one‑time code by phone or email, and employees must be trained to recognise and resist social engineering.

Securing Data with AI‑Driven Threat Detection and Encryption

Protecting data is fundamental to payment security. AI helps by finding and securing sensitive information. Intrusion detection systems powered by AI sift through logs and traffic to spot anomalies quickly. Visa credits its AI tools with cutting breach response times in half.

AI‑based data discovery scans databases and file systems to locate card numbers or personal details, tagging them for encryption or tokenisation. Automated key management and classification reduce misconfigurations by as much as 90 per cent.
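Card-number discovery usually pairs a pattern match with the Luhn checksum that valid card numbers satisfy, which filters out many arbitrary long numbers. Here is a minimal Python sketch, assuming plain-text input; the regular expression and the last-four masking format are illustrative choices, and a real scanner would pass its hits to a tokenisation or encryption step rather than simply masking them.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right and sum the digits."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_pans(text: str) -> list[str]:
    """Return Luhn-valid card-number candidates found in free text."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits


def mask(pan: str) -> str:
    """Keep only the last four digits visible."""
    return "*" * (len(pan) - 4) + pan[-4:]
```

Running `find_pans` over a support-ticket export, for example, would surface the well-known test number 4111 1111 1111 1111 while ignoring a 13-digit order reference that fails the checksum.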

Right now, most AI tools don’t fully understand the complex maths behind cryptography, so they can’t do much to improve it. Still, researchers are experimenting with ways to use AI to strengthen encryption—like spotting weak random number generation or suggesting stronger cipher setups.
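To show what "spotting weak random number generation" involves, here is a classical statistical check rather than an AI technique: the frequency (monobit) test from NIST SP 800-22, which asks whether a bit stream contains roughly equal numbers of ones and zeros. It is a sketch of one test only and assumes the bits arrive as a string of "0" and "1" characters; the 0.01 significance level follows the usual default in the NIST suite.

```python
import math


def monobit_p_value(bits: str) -> float:
    """NIST SP 800-22 frequency (monobit) test on a string of '0'/'1' characters."""
    n = len(bits)
    s_n = sum(1 if b == "1" else -1 for b in bits)  # +1 for each one, -1 for each zero
    s_obs = abs(s_n) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))


def looks_biased(bits: str, alpha: float = 0.01) -> bool:
    """Reject the randomness hypothesis when the p-value falls below alpha."""
    return monobit_p_value(bits) < alpha
```

An alternating pattern such as `"01" * 50` passes this test even though it is completely predictable, which is why the NIST suite runs many complementary tests, and why any AI-assisted review of randomness should be backed by them.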

However, AI can introduce risks. Models trained on confidential data might inadvertently leak it if prompts elicit memorised content; the PCI Council warns against feeding AI systems high‑impact secrets like API keys or raw card numbers.
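A practical safeguard is to redact obvious secrets before a prompt ever reaches a model or its logs. The patterns below are illustrative assumptions: a PEM private-key block, the `AKIA` prefix used by AWS access key IDs, `key=value` style credentials, and long digit runs that could be card numbers. Dedicated secret scanners maintain much larger rule sets.

```python
import re

# Illustrative patterns only; real secret scanners use larger, maintained rule sets.
REDACTIONS = [
    ("PRIVATE_KEY", re.compile(
        r"-----BEGIN [A-Z ]*PRIVATE KEY-----.*?-----END [A-Z ]*PRIVATE KEY-----", re.S)),
    ("AWS_ACCESS_KEY_ID", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
    ("CREDENTIAL", re.compile(r"(?i)\b(api[_-]?key|secret|token|password)\s*[:=]\s*\S+")),
    ("CARD_NUMBER", re.compile(r"\b(?:\d[ -]?){12,18}\d\b")),
]


def redact_prompt(text: str) -> tuple[str, list[str]]:
    """Replace likely secrets with placeholders; return the clean text and what was found."""
    found = []
    for label, pattern in REDACTIONS:
        text, count = pattern.subn(f"[REDACTED_{label}]", text)
        found.extend([label] * count)
    return text, found
```

Returning the list of labels as well as the cleaned text lets the caller log that a secret was caught without logging the secret itself.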

Generative code assistants may propose insecure implementations unless developers review their output. Criminals aren’t just using AI to create deepfakes or phishing emails; they’re also turning it against our core defences. Machine‑learning models can automate brute‑force attacks, while poisoned training data can distort an AI’s logic from the inside.

The remedy is straightforward: be ruthless about what data goes into your models, restrict who has access, and make sure every action is logged. Host your AI systems in secure, isolated environments, have security specialists validate their outputs, and build a “kill switch” into your incident‑response plan so you can shut down any compromised model immediately.
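The logging and kill-switch advice can be sketched as a thin wrapper around any model. This is an illustration under stated assumptions: `GuardedModel` and its methods are hypothetical names, the wrapped model is any Python callable that returns a score, and the log records the caller and the score but deliberately not the raw input features, which may contain sensitive data.

```python
import logging
import threading
from typing import Callable

log = logging.getLogger("ai_audit")


class GuardedModel:
    """Wraps a scoring function with a log entry per call and a kill switch."""

    def __init__(self, name: str, predict: Callable[[dict], float]) -> None:
        self.name = name
        self._predict = predict
        self._killed = threading.Event()  # thread-safe flag shared by all callers

    def kill(self, reason: str) -> None:
        """Disable the model immediately, e.g. from an incident-response runbook."""
        self._killed.set()
        log.warning("kill switch engaged for %s: %s", self.name, reason)

    def predict(self, features: dict, caller: str) -> float:
        if self._killed.is_set():
            log.error("blocked call to disabled model %s by %s", self.name, caller)
            raise RuntimeError(f"model {self.name} is disabled")
        score = self._predict(features)
        # Log who asked and what came back, but not the (possibly sensitive) inputs.
        log.info("model=%s caller=%s score=%.3f", self.name, caller, score)
        return score
```

Callers then need a fallback for the `RuntimeError`, such as routing payments to rules-only screening or manual review while the model is offline.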

Navigating PCI DSS and PSD2 Compliance in the AI Era

On the compliance front, AI doesn’t lower the bar—it raises it. The Payment Card Industry Data Security Standard (PCI DSS) still governs how cardholder data is handled, whether by a human‑written script or an AI.

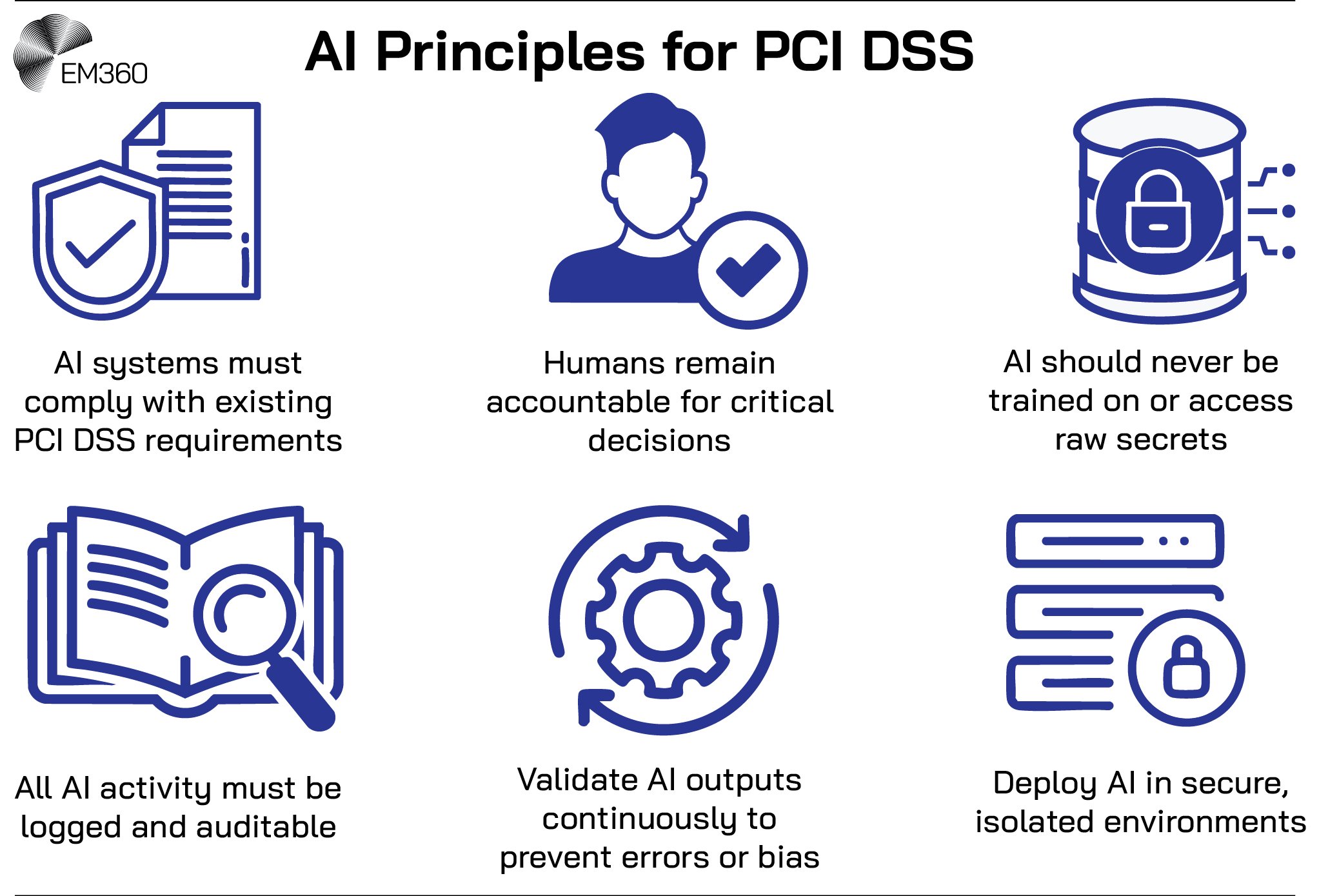

When the PCI Council issued AI‑specific principles in 2025, they didn’t reinvent the rules; they restated them for a new context. AI systems must meet all existing requirements, human owners remain responsible for critical decisions, raw secrets never belong in training data, and every AI action must be logged and auditable.
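"Logged and auditable" is easier to defend if the log itself is tamper-evident. A common pattern, sketched here under our own assumptions rather than taken from the PCI Council's principles, is a hash chain: each entry stores the SHA-256 hash of the previous entry, so editing any earlier record breaks verification. The class and field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log in which each entry commits to the previous one."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, actor: str, action: str, detail: dict) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "actor": actor,    # the AI system, or the human owner who acted
            "action": action,
            "detail": detail,
            "prev": prev,
        }
        body["hash"] = _digest(body)  # hash computed before the "hash" key is added
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

A hash chain only proves integrity if the latest hash is also stored somewhere the log's writer cannot change, such as a separate system or write-once storage; otherwise an attacker could rebuild the whole chain.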

In short, treat your AI like any other sensitive component of the cardholder data environment: control it, document it, secure it and audit it.

The EU’s revised Payment Services Directive (PSD2) introduced strong customer authentication (SCA) but allows transaction risk analysis to exempt low‑risk payments. Payment providers whose card fraud rate stays at or below 0.13 per cent can apply the transaction risk analysis exemption to remote transactions of up to €100.

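Real‑time AI models are critical here, assessing each transaction’s risk instantly so that the provider’s overall fraud rate stays within this threshold. But European regulators, notably the EBA, insist that these models remain transparent and subject to regular audits. The margin for error is slim: if your monitored fraud rate exceeds the reference rate, you must report it to your regulator, and if it stays above the limit for two consecutive quarters, you must stop using the exemption.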

So continuous tuning and monitoring of your AI is non‑negotiable. The message from regulators is clear: AI is welcome, but only with strict control and human oversight.
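Monitoring the exemption can be sketched as a quarter-by-quarter check. The function assumes the reporting and cessation rules in Article 20 of the PSD2 regulatory technical standards as we read them: any quarter above the reference rate must be reported, and use of the exemption stops once the rate exceeds the reference for two consecutive quarters. The status labels are hypothetical, the monitored rates are assumed to be computed on the rolling quarterly basis the standards describe, and the sketch does not model how an exemption is later reinstated.

```python
from decimal import Decimal


def exemption_status(quarterly_rates: list[Decimal], reference: Decimal) -> str:
    """Walk monitored fraud rates in order and return the exemption status (sketch)."""
    consecutive_breaches = 0
    status = "active"
    for rate in quarterly_rates:
        if rate > reference:
            consecutive_breaches += 1
            status = "report_to_regulator"
            if consecutive_breaches >= 2:
                return "suspended"  # two quarters in a row above the reference rate
        else:
            consecutive_breaches = 0  # a compliant quarter resets the run
            status = "active"
    return status
```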

Building Trust in AI Payments through Governance, Culture, and Human Oversight

No amount of technology can succeed without trust and good governance. Customers must believe their data and money are safe; regulators must trust that institutions are compliant; and employees must trust the AI tools they work with. Transparency helps build that trust.

Customers will only trust AI in payments if they understand how it works for them. That means explaining, in plain language, how you use AI to spot fraud or monitor transactions and offering simple ways for users to appeal decisions. As G2A.COM’s Bartosz Skwarczek has noted, even in a digital marketplace there’s always a person on the other side of the screen. Treat them with respect and openness.
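Plain-language explanations can be generated from the factors that drove a decision. The sketch assumes a simple linear fraud score with hypothetical feature names and weights; it ranks each factor's contribution and maps the strongest ones to customer-facing sentences that could accompany a decline, alongside a link to appeal.

```python
# Hypothetical weights for a simple linear fraud score; real models and features differ.
WEIGHTS = {
    "new_device": 1.2,
    "country_mismatch": 1.5,
    "amount_vs_usual": 0.8,  # ratio of this amount to the customer's typical amount
    "rapid_retries": 1.0,
}

PLAIN_LANGUAGE = {
    "new_device": "You used a device we have not seen on your account before.",
    "country_mismatch": "The payment came from a different country than usual.",
    "amount_vs_usual": "The amount was much higher than your typical purchases.",
    "rapid_retries": "Several payment attempts were made in quick succession.",
}


def explain(features: dict[str, float], top_n: int = 2) -> list[str]:
    """Plain-language reasons for the features that pushed the score up the most."""
    contributions = {
        name: WEIGHTS[name] * value for name, value in features.items() if name in WEIGHTS
    }
    ranked = sorted(contributions, key=contributions.get, reverse=True)
    return [PLAIN_LANGUAGE[name] for name in ranked[:top_n] if contributions[name] > 0]
```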

AI is a tool, not a substitute for people. Human oversight anchors good decision‑making, and diverse fraud and compliance teams bring cultural nuance and challenge blind spots—particularly for global businesses like G2A.COM that serve customers in nearly 200 countries.

Continuous training keeps everyone on the front foot against new threats, while regular audits and red‑team drills expose weaknesses before criminals do. Adding an AI ethics committee helps you check models for bias and fairness and stay aligned with emerging regulations such as the EU AI Act.
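One concrete bias check an ethics committee might request is whether legitimate customers in some groups are wrongly flagged more often than others. This sketch compares false-positive rates across groups and raises an alert when the highest rate is more than 1.25 times the lowest. The grouping, the ratio and the function names are assumptions, and the right fairness metric depends on the use case and the applicable law.

```python
from collections import defaultdict


def false_positive_rates(records: list[tuple[str, bool, bool]]) -> dict[str, float]:
    """records: (group, flagged_by_model, actually_fraud). FPR = flagged legit / all legit."""
    flagged_legit = defaultdict(int)
    legit = defaultdict(int)
    for group, flagged, fraud in records:
        if not fraud:
            legit[group] += 1
            if flagged:
                flagged_legit[group] += 1
    return {g: flagged_legit[g] / legit[g] for g in legit}


def disparity_alert(rates: dict[str, float], max_ratio: float = 1.25) -> bool:
    """Alert if the highest group FPR exceeds the lowest by more than max_ratio (assumed)."""
    lowest, highest = min(rates.values()), max(rates.values())
    if lowest == 0:
        return highest > 0
    return highest / lowest > max_ratio
```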

Technology alone won’t win the security battle; culture will. A company that prizes communication, collaboration and accountability will get far more value from its AI investments than one that simply deploys the latest tool.

Final Thoughts: Payment Security Demands Smarter, Safer AI Adoption

AI is changing payment security at pace. Used wisely, it blocks billions in fraud and streamlines compliance, authentication and data protection. Used maliciously, it fuels deepfakes, automated money laundering and synthetic identities. The main challenge the industry faces is harnessing the power of AI while neutralising its threats.

The way to do that is already clear: employ adaptive models alongside regular, rigorous testing, share the intelligence you gather with everyone who needs it, and train your people to spot the latest forms of social engineering.

Ground your AI in tried‑and‑true compliance frameworks like PCI DSS and PSD2, with clear human oversight and audit trails. Strong encryption, tight access controls and sound development practices are a given.

Most importantly, put trust and transparency at the heart of everything you build. When you balance innovation with responsibility, AI can become a powerful shield rather than a weapon used against your organisation.

EM360Tech’s ongoing research and insights help organisations decode this complex landscape and translate regulatory requirements into practical steps. Organisations like G2A.COM illustrate the results: as a global marketplace for video game keys, software, subscriptions and gift cards, their commitment to secure, trusted transactions builds a platform that serves millions of users worldwide.
