Enterprises of all shapes and sizes have data pouring in faster than ever. Governance has to keep pace with that flow, and the data itself has to be turned into actionable intelligence far faster than before, because the gap between enterprises that act on data in the moment and those whose insights lag days, or even just hours, behind is widening every day.

Real-time data analytics offers a solution—it's how organisations can keep decisions as dynamic as the data itself. Consider that 86 per cent of IT leaders now prioritise streaming data as a strategic imperative. Speed to insight is now table stakes.

But getting to real time at an enterprise scale still depends on clean integration, dependable data quality, and the right tools at your fingertips.

What Real-Time Data Analytics Really Means for Enterprises

Real-time analytics is not just a prettier, faster dashboard. It is analysis that happens as data arrives, so teams see and act while events are still unfolding. Unlike traditional batch processing, you’re not waiting on a schedule. Instead, you’re working on and with live streams of data from across your enterprise.

In practice, that could mean detecting an operational issue within seconds of it occurring, rather than hours later in a daily report. The aim is low-latency data in motion: capture events, process them, and trigger an insight or action within seconds. This underpins AI and automation at work.

Think on-site personalisation as a customer clicks, or network telemetry raising an alert the moment behaviour shifts. Done right, real-time pipelines are highly available (minimal downtime) and scalable, yet also governed to ensure data quality. Crucially, “real time” is not absolute; in enterprise settings it often means latency of sub-second to a few seconds, depending on the use case.

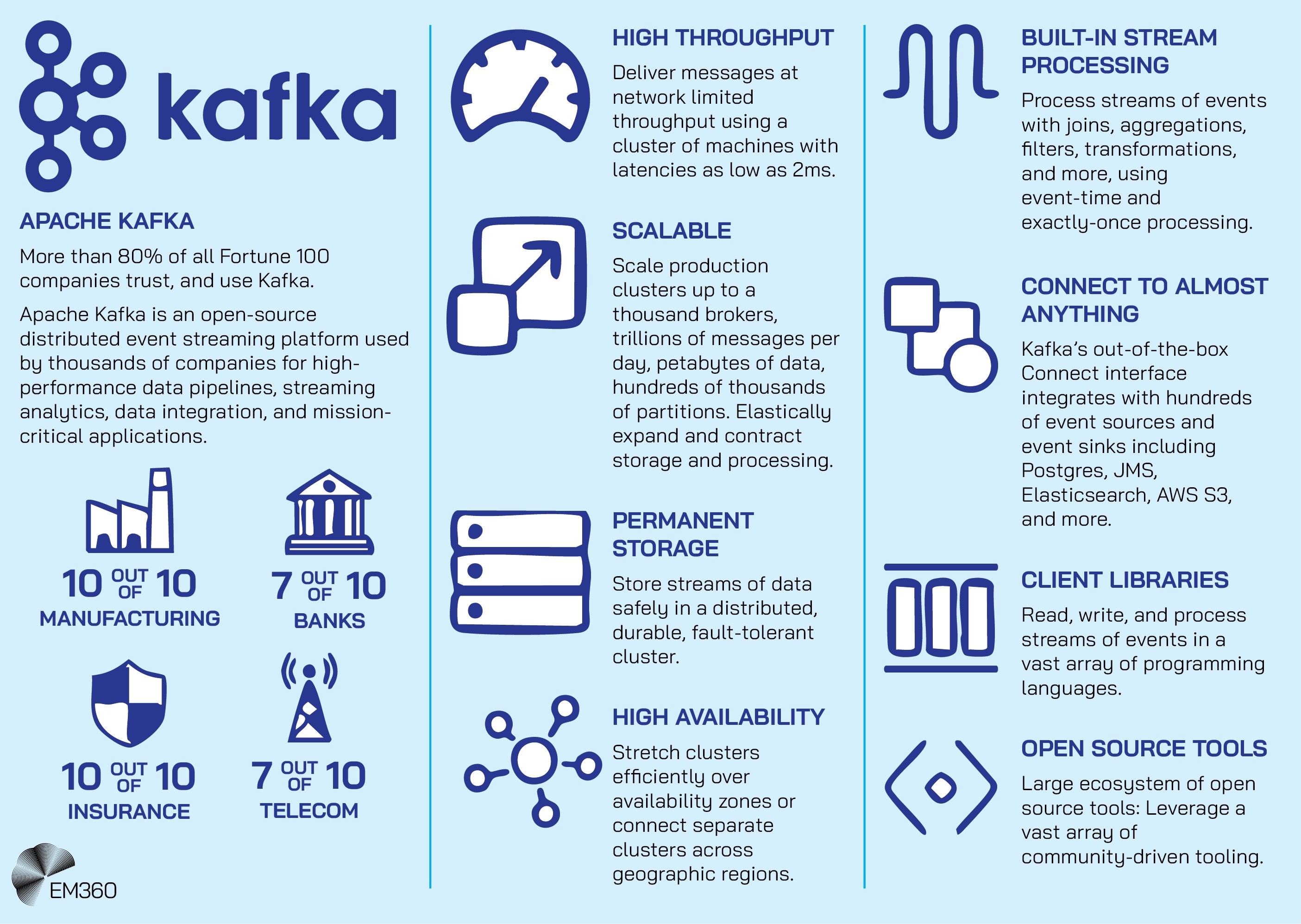

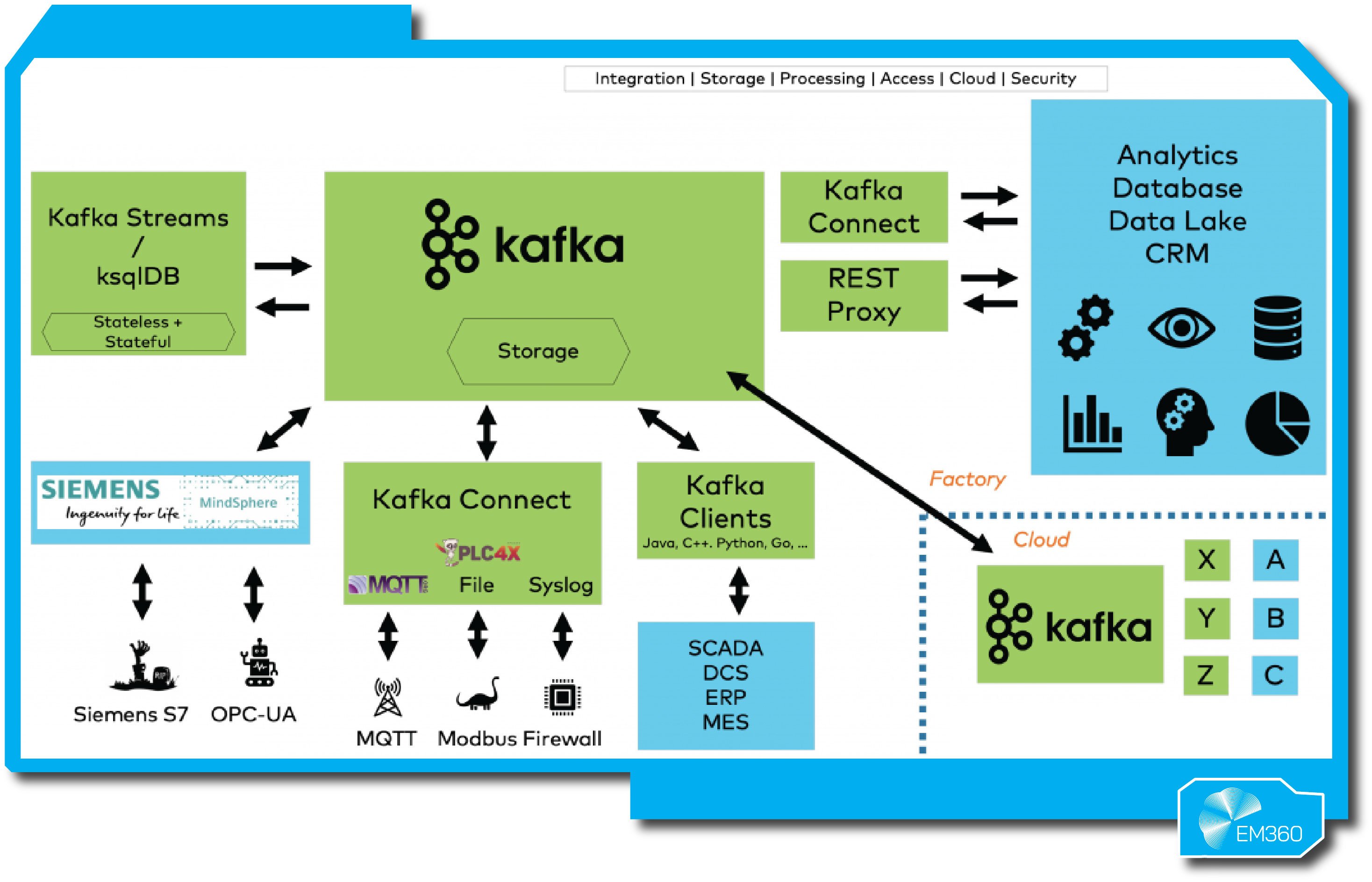

What’s changed is that today’s technologies strive to make analytics as close to instantaneous as needed, blurring the line between operational and analytical systems. In fact, the majority of Fortune 100 companies already rely on streaming platforms like Apache Kafka to infuse real-time data into their architecture, underscoring how prevalent this paradigm has become.

Why Real-Time Data Analytics Matters in 2025

Enterprises in 2025 are expected to act on information the moment it arrives. Real-time data analytics enables that immediacy, turning data velocity into business agility. The result is faster, sharper decision-making — and measurable gains.

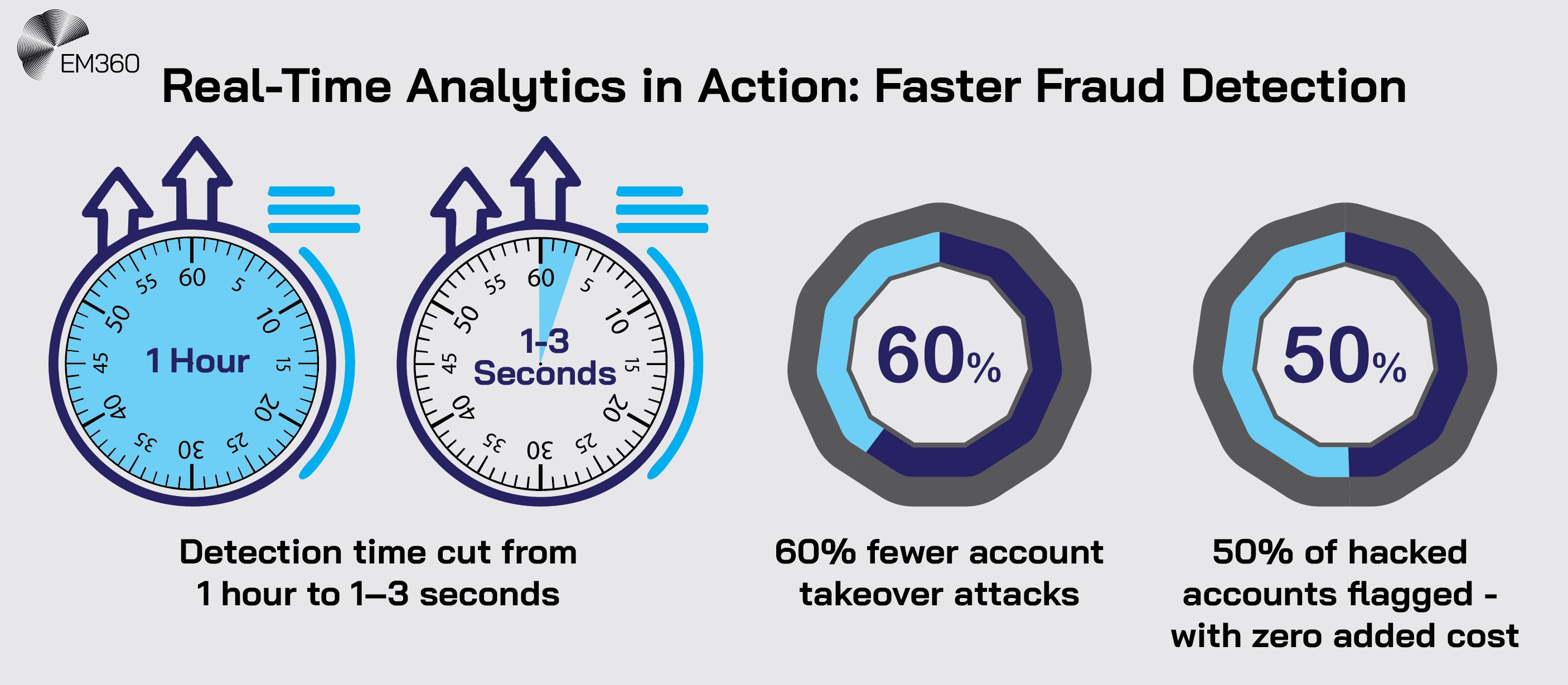

One financial services firm, for example, cut its fraud detection time from an hour to seconds, driving a 60 per cent reduction in account takeovers. That level of responsiveness is impossible with batch reporting.

For most organisations, the first impact is operational efficiency. Real-time pipelines let teams detect and resolve issues as they happen, rather than after they escalate.

The second is customer experience. In an era of always-on expectations, businesses that personalise interactions in the moment earn stronger loyalty. Continuous analytics supports 24/7 service personalisation, where offers, pricing, and content adjust dynamically to live behaviours.

The third is risk control. Instant visibility across transactions, systems, and supply chains helps prevent losses and reputational damage before they occur.

The returns are tangible. Forty-four per cent of enterprises report a fivefold ROI on streaming analytics investments, with some saving over $2.5 million within three years.

By acting on current, trusted data, organisations can lower costs through real-time optimisation and capture revenue opportunities as they emerge. Crucially, real-time analytics now underpins AI itself. Machine learning models depend on fresh data to stay accurate, making streaming pipelines the foundation for AI-powered decision-making.

In one global survey, 63 per cent of organisations said streaming data platforms directly fuel their AI initiatives. From automated trading to dynamic supply chains, real-time analytics turns AI from a theoretical advantage into a practical one.

Being data-driven is no longer enough; being data-driven in real time is what keeps enterprises proactive, resilient, and ahead of change.

Key Trends Powering the Real-Time Analytics Revolution

The shift to continuous intelligence is reshaping how enterprises manage infrastructure, deliver insights, and embed AI into everyday operations. Three trends stand out in 2025: cloud-native streaming, streaming databases, and the growing demand for data freshness driven by AI and automation.

Cloud-native streaming architectures are becoming the norm

Enterprises are opting for managed, cloud-native stacks for streaming and analytics. AWS, Azure and Google Cloud now provide fully managed stream-processing engines, messaging services and real-time stores that scale automatically and reduce operational drag.

At the same time, warehouses have added streaming ingestion and live queries, while lakehouse platforms unify batch and stream on one plane. The result is end-to-end pipelines for ingestion, processing, analytics and ML inside a single ecosystem. Governance is simpler, and latency falls because data no longer hops between silos.

The market reflects the shift, with streaming analytics projected to reach $176 billion by 2032. Cloud-native streaming is becoming the default, even for smaller teams.

Streaming databases bridge the gap between analytics and operations

Streaming databases make continuous data instantly queryable with SQL. Platforms such as Materialize and RisingWave maintain incrementally updated materialised views, while real-time OLAP stores such as Apache Pinot (via StarTree) index events on arrival, so KPIs and application features update within a second as events land.

That supports live dashboards, anomaly detection and user-facing analytics that must respond while behaviour changes. These systems blend incremental processing with distributed, low-latency storage to deliver immediacy and consistency.
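The incremental idea behind these engines can be illustrated with a toy model in plain Python. This is a simplified sketch, not how Materialize, RisingWave or Pinot work internally: each change arrives with a +1 (insert) or -1 (retraction), and only the affected group is updated instead of re-running the whole query. The view, its columns and the `apply`/`query` methods are hypothetical.

```python
from collections import defaultdict


class RevenueByRegionView:
    """Toy model of an incrementally maintained view, roughly:

    SELECT region, COUNT(*), SUM(amount) FROM orders GROUP BY region
    """

    def __init__(self):
        self.counts = defaultdict(int)
        self.totals = defaultdict(float)

    def apply(self, region, amount, diff=1):
        # diff is +1 for an inserted row and -1 for a retraction
        # (a delete, or the "old" half of an update)
        self.counts[region] += diff
        self.totals[region] += diff * amount
        if self.counts[region] == 0:
            # Drop empty groups so results match a full recompute
            del self.counts[region]
            del self.totals[region]

    def query(self):
        # Reading the view is a lookup; no scan over historical orders
        return {region: (self.counts[region], self.totals[region]) for region in self.counts}


view = RevenueByRegionView()
view.apply("EU", 10.0)
view.apply("EU", 5.0)
view.apply("US", 7.0)
view.apply("EU", 10.0, diff=-1)  # the first EU order is cancelled
print(view.query())  # {'EU': (1, 5.0), 'US': (1, 7.0)}
```

Because reads are lookups over precomputed state, query cost stays flat as history grows; the trade-off is that the engine must keep that state in memory or on storage.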

Adoption is accelerating alongside event backbones like Kafka, already used by 80 per cent of the Fortune 100. Expect deeper convergence between streaming databases and traditional analytics platforms through 2025.

AI and automation are driving demand for data freshness

Production AI only performs as well as the recency of its inputs. Fraud models, personalisation engines and predictive maintenance systems need events from the last seconds, not hours. That reality is driving investment, with nearly 90 per cent of IT leaders increasing spend on streaming platforms to power AI and real-time automation.

Practical examples are everywhere: contact-centre bots adapt to live sentiment; supply chains reroute as risks emerge. Generative AI copilots add another push, requiring current data to produce trustworthy guidance.

The pattern is reinforcing: more automation needs fresher data, and fresher data unlocks stronger automation.

How To Evaluate a Real-Time Analytics Platform

Choose on evidence, not hype. Focus on how each option performs under load, fits your architecture, and supports where you are taking AI and automation next.

Core evaluation criteria

- Latency and throughput: Measure end-to-end latency and peak event rates for your specific use cases, looking for consistently sub-second latency where it matters and enough headroom to absorb spikes.

- Scalability and reliability: Prioritise auto-scaling, multi-AZ or regional resilience, checkpointing, and exactly-once guarantees to prevent loss or duplication.

- Integration: Verify native connectors and APIs for Kafka, Kinesis, databases, object storage, business intelligence tools, and alerts; fewer custom bridges mean faster delivery.

- Security and governance: Require encryption, fine-grained access controls, schema validation, lineage, and audit logs so streaming data stays compliant.

- Developer experience and observability: Prefer familiar interfaces (SQL, Python) plus clear metrics, logs, and alerts; an observable system reduces time to diagnose issues.
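For the latency criterion, it helps to compare tail percentiles from your own workload rather than averages. A minimal sketch, assuming each test event carries a producer timestamp and you record when it became visible in a query or alert (both in milliseconds, from synchronised clocks); the function names are illustrative.

```python
import math


def percentile(sorted_values, p):
    """Nearest-rank percentile (p from 1 to 100) of an already sorted list."""
    if not sorted_values:
        raise ValueError("no latency samples")
    # Integer-friendly arithmetic keeps the rank exact for whole-number inputs
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def latency_report(events):
    """events: (produced_at_ms, visible_at_ms) pairs.

    End-to-end latency runs from when the source produced the event to when
    it was queryable (or triggered an action), not just time inside the engine.
    """
    latencies = sorted(visible - produced for produced, visible in events)
    return {
        "p50": percentile(latencies, 50),
        "p99": percentile(latencies, 99),
        "max": latencies[-1],
    }
```

A p99 that sits far above the p50 usually points to queuing, checkpoint pauses or backpressure, which an average would hide.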

Architectural fit

- Streaming databases / real-time OLAP: Best for ultra-fast queries on fresh events and high concurrency. Examples include Apache Pinot and ClickHouse services. Expect sub-second responses on recent data, typically with narrower retention to keep speed.

- Lakehouse platforms: Unite batch and streaming on shared storage for analytics and ML. Databricks is a common choice, using open table formats and ACID transactions to keep streams reliable while historical data stays accessible.

- Warehouse-native streaming: Extend your warehouse with streaming ingestion and dynamic tables for near-real-time BI using familiar SQL and governance. Snowflake and BigQuery fit here. Latency is low, though not always millisecond-class like specialised engines.

Choose streaming DBs for speed at scale, lakehouse for one platform across analytics and AI, and warehouse-native when tight BI integration and existing skills are the priority.

Future alignment

- AI readiness: Ensure simple paths to feed and score models in real time, with built-in support for Python or SQL-based ML and on-the-fly inference where needed.

- Event-driven action: Look for native triggers, webhooks, and workflow or serverless integrations so insights become actions instantly.

- Deployment flexibility: Plan for compliance and latency needs with cloud-agnostic options, hybrid support, and edge processing where appropriate.

Picking for tomorrow avoids rip-and-replace. Aim for platforms that advance automation, maintain strong observability, and scale as data and AI workloads grow.

Leading Real-Time Data Analytics Platforms for 2025

With the landscape defined, let’s examine ten leading platforms enabling real-time analytics in enterprises, plus a couple of innovative up-and-comers. These were selected for their maturity, enterprise-ready capabilities, innovation in streaming technology, and market traction going into 2025. Each offers a distinct approach to real-time data analytics:

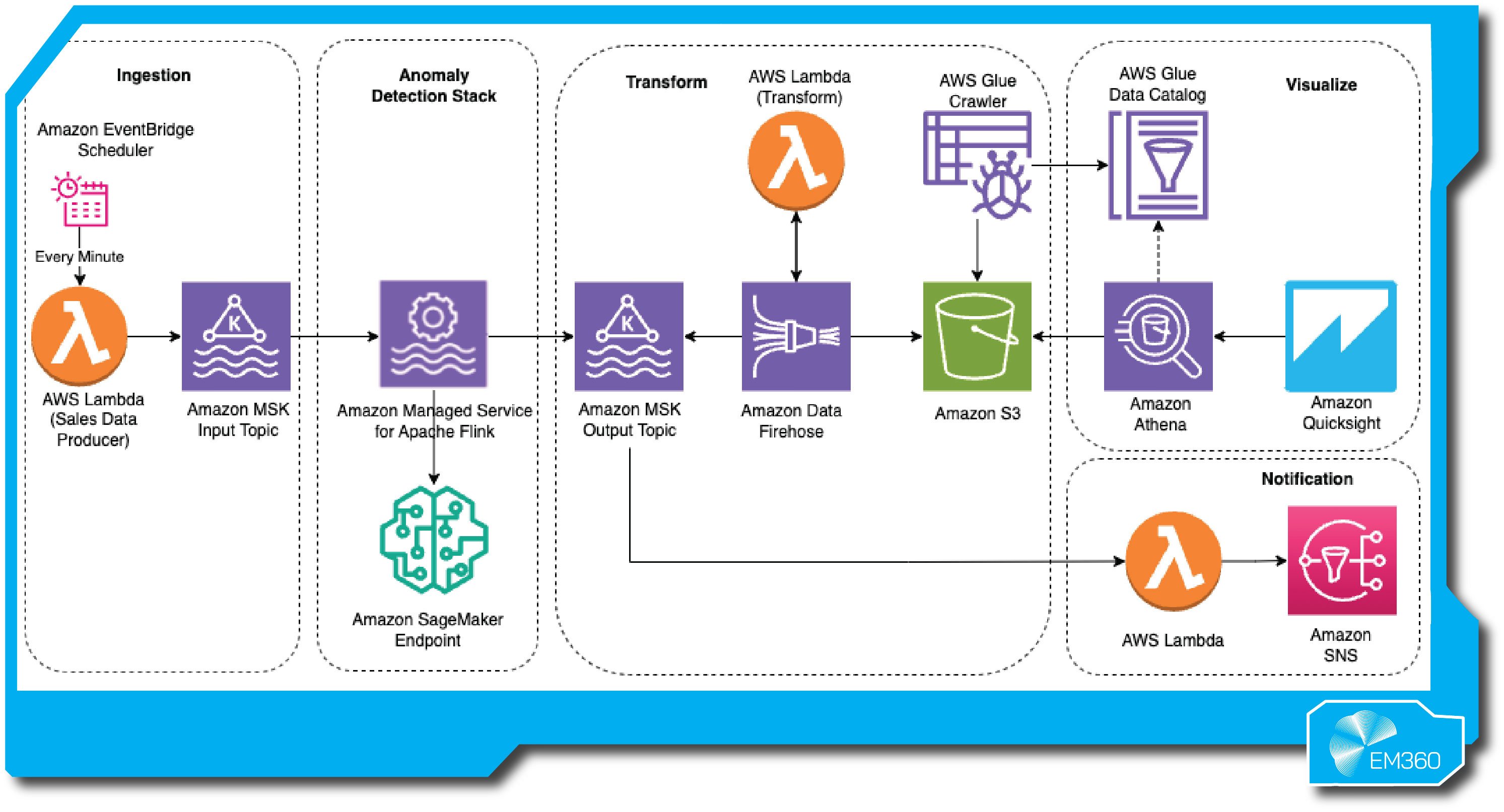

1. AWS Managed Service for Apache Flink

AWS offers a fully managed Apache Flink service for real-time stream processing, so teams can build and run applications without managing clusters. It integrates tightly with the wider AWS stack and supports Java, Scala, Python, and SQL for event-driven pipelines across clickstreams, IoT, and transactional data.

Enterprise-ready features

The service delivers low-latency, stateful processing with event time, windowing and complex event logic, backed by exactly-once guarantees. High availability comes from automatic checkpointing to Amazon S3 and fast recovery, with CloudWatch for end-to-end observability and alerting.

Native connectors simplify integration with Kinesis, Amazon MSK, S3, DynamoDB, and Redshift. Security is handled with VPC isolation, IAM controls and encryption, giving governed, auditable pipelines that scale horizontally with demand.
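To make event time, watermarks and windowing concrete, here is a simplified pure-Python model of a tumbling event-time window count. It is not the Flink API (in Flink you would declare a watermark strategy and a window in the DataStream API or Flink SQL); it only illustrates the semantics. The bounded out-of-orderness rule mirrors a common watermark strategy, and setting late events aside is one choice among several, since Flink can also route them to a side output or accept them within an allowed-lateness period.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_size, max_out_of_orderness):
    """Count events per key in fixed, non-overlapping event-time windows.

    events: (event_time, key) pairs in *arrival* order (times in seconds).
    The watermark trails the highest event time seen by max_out_of_orderness;
    a window [start, start + window_size) fires once the watermark reaches its end.
    """
    windows = defaultdict(lambda: defaultdict(int))  # window start -> key -> count
    emitted, late = [], []
    watermark = float("-inf")
    for event_time, key in events:
        start = event_time - event_time % window_size
        if start + window_size <= watermark:
            # The window has already fired; here late events are simply set aside
            late.append((event_time, key))
            continue
        windows[start][key] += 1
        # Watermarks only move forward, even when an older event arrives
        watermark = max(watermark, event_time - max_out_of_orderness)
        for window_start in sorted(windows):
            if window_start + window_size <= watermark:
                emitted.append((window_start, dict(windows.pop(window_start))))
    # Windows still open here are waiting for the watermark to advance
    return emitted, late


arrivals = [(1, "a"), (4, "b"), (12, "a"), (8, "a"), (16, "b"), (3, "a"), (27, "a")]
emitted, late = tumbling_window_counts(arrivals, window_size=10, max_out_of_orderness=5)
# emitted: [(0, {'a': 2, 'b': 1}), (10, {'a': 1, 'b': 1})]
# late:    [(3, 'a')]
```

The out-of-order event at time 8 still lands in the first window because the watermark has not yet passed 10, while the event at time 3 arrives after that window fired.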

Pros

- Deep integration across AWS services speeds ingestion and delivery.

- Managed infrastructure cuts operational overhead and scales automatically.

- Exactly-once semantics protects accuracy in financial and operational workloads.

- Flexible development in Java, Scala, Python and SQL.

- Enterprise security comes with VPC, IAM, and encryption by default.

Cons

- Best for AWS-first strategies rather than multi-cloud or on-prem.

- Flink’s learning curve can slow inexperienced teams.

- Continuous high-throughput jobs can be costly without optimisation.

Best for

Organisations standardised on AWS that need reliable, low-latency streaming ETL and event processing at scale, from IoT telemetry to real-time metrics and enrichment, with minimal platform management.

2. ClickHouse Cloud

ClickHouse Cloud is the managed service for the ClickHouse OLAP database, engineered for sub-second SQL analytics at massive scale. It ingests data in near real time and serves interactive queries for dashboards, APIs and monitoring without the burden of running clusters.

Enterprise-ready features

Automatic clustering, replication and upgrades keep performance high while reducing ops. Streaming ingestion via Kafka and common pipelines makes fresh data instantly queryable, with materialised views and a powerful SQL dialect for complex aggregations and joins.

Access control, encryption and audit logging support governance, and efficient columnar storage with compression keeps costs down as data grows. Elastic scaling lets teams handle bursty workloads and pay only for what they use.

Pros

- Sub-second analytics on billions of rows for real-time use cases.

- Continuous ingestion makes new events immediately queryable.

- Fully managed service removes cluster toil and speeds delivery.

- Rich SQL feature set supports advanced analytical logic.

- Storage efficiency and compression help control total cost.

Cons

- OLAP focus requires thoughtful modelling to sustain peak speed.

- Not suited to OLTP transactions or frequent row-level updates.

- Visualisation typically relies on external BI or custom apps.

Best for

High-concurrency, real-time analytics on large datasets, such as user-facing dashboards, telemetry analysis and log or network monitoring, where speed and cost efficiency both matter.

3. Confluent Data Streaming Platform (Kafka)

Confluent, founded by the creators of Apache Kafka, provides an enterprise streaming platform in the cloud and on premises. It acts as the backbone for data in motion, connecting applications and stores through real-time event streams with governance and processing built in.

Enterprise-ready features

Confluent Cloud abstracts cluster operations and scales Kafka with high reliability, while Schema Registry and Stream Governance enforce contracts, lineage and quality across producers and consumers. ksqlDB enables continuous SQL transformations, and managed Apache Flink adds stateful stream processing for complex aggregations and windowing.

A large connector ecosystem accelerates integration with databases, SaaS and cloud storage. Security spans encryption, fine-grained ACLs and audit logs, giving teams a governed, production-grade streaming fabric that supports millions of messages per second.
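Kafka's decoupling rests on a simple model: producers append to an ordered log, and each consumer group tracks its own read position, so a slow analytics consumer never blocks a billing service reading the same events. The class below is a toy, single-partition model in plain Python, not the Kafka or Confluent client API; `EventLog`, `append` and `poll` are illustrative names.

```python
class EventLog:
    """Toy model of one append-only topic partition with per-group offsets."""

    def __init__(self):
        self.records = []
        self.offsets = {}  # consumer group -> next offset to read

    def append(self, record):
        # Producers only append; they never know who will consume
        self.records.append(record)
        return len(self.records) - 1  # offset of the new record

    def poll(self, group, max_records=10):
        start = self.offsets.get(group, 0)
        batch = self.records[start:start + max_records]
        # Committed on read for brevity; real consumers usually commit after processing
        self.offsets[group] = start + len(batch)
        return batch


log = EventLog()
for event in ["a", "b", "c"]:
    log.append(event)
log.poll("billing")                   # ['a', 'b', 'c']
log.poll("analytics", max_records=2)  # ['a', 'b']
```

Each group reads independently and at its own pace, which is what lets new consumers be added without touching producers.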

Pros

- Kafka foundation proven at extreme scale in global enterprises.

- Managed service reduces operational risk and speeds adoption.

- Schema Registry and governance improve data quality and trust.

- ksqlDB and managed Flink enable powerful real-time transformations.

- Broad connector library shortens time to integrate sources and sinks.

Cons

- Kafka concepts and capacity planning add complexity for new teams.

- Requires pairing with analytic stores for querying beyond transport.

- Large-scale retention and heavy connector use can raise costs.

Best for

Enterprises building event-driven architectures that need a dependable streaming backbone to connect microservices, modernise data integration and feed real-time analytics with strong governance.

4. Databricks Lakehouse Platform

Databricks unifies data engineering, analytics and AI on a lakehouse architecture that treats streaming and batch as first-class citizens. Teams ingest live data into Delta Lake, analyse it with SQL or notebooks, and operationalise ML in the same environment.

Enterprise-ready features

Structured Streaming treats streams as incrementally updated tables, so the same code works for batch and real time. Delta Lake adds ACID transactions and schema enforcement for reliable upserts, CDC and late data. Delta Live Tables provides managed, declarative pipelines with built-in monitoring and data quality checks.

Operational control comes through streaming observability, Unity Catalog for governance, and autoscaling Spark clusters for throughput. MLflow, model serving and Photon acceleration round out end-to-end performance across analytics and AI.
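Reliable upserts from CDC come down to applying changes by primary key and refusing to let stale records win. The sketch below models that logic in plain Python, assuming each change carries a monotonically increasing version; the field names are hypothetical. On Databricks the equivalent would typically be a Delta Lake `MERGE` or Delta Live Tables' `APPLY CHANGES`, which this code does not call.

```python
def apply_cdc(table, changes):
    """Apply CDC records to a keyed table; the newest version wins.

    table: dict of primary key -> (version, row); row None marks a delete.
    changes: (op, key, row, version) tuples, possibly duplicated or out of order.
    """
    for op, key, row, version in changes:
        current = table.get(key)
        if current is not None and current[0] >= version:
            continue  # stale or replayed change: keep the newer state
        if op in ("insert", "update"):
            table[key] = (version, row)
        elif op == "delete":
            # Keep a tombstone with its version so a late update cannot resurrect the row
            table[key] = (version, None)
        else:
            raise ValueError(f"unknown CDC operation {op!r}")
    return table


def live_rows(table):
    """Rows visible to queries, excluding tombstones."""
    return {key: row for key, (version, row) in table.items() if row is not None}
```

Tombstones would grow without bound in this sketch; production systems compact or expire them after a retention period.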

Pros

- One platform for batch, streaming, and ML simplifies architecture.

- Delta Lake ensures reliability and consistency on live data.

- DLT reduces pipeline ops with declarative orchestration and monitoring.

- Strong ML tooling and real-time model serving in the platform.

- Autoscaling and Photon deliver performance at large scale.

Cons

- Breadth introduces a learning curve for new teams.

- Micro-batch model may not meet ultra-low latency needs.

- Continuous clusters require careful cost management.

Best for

Enterprises standardising on a single environment for pipelines, analytics and AI where streaming must sit beside historical data with shared governance and skills.

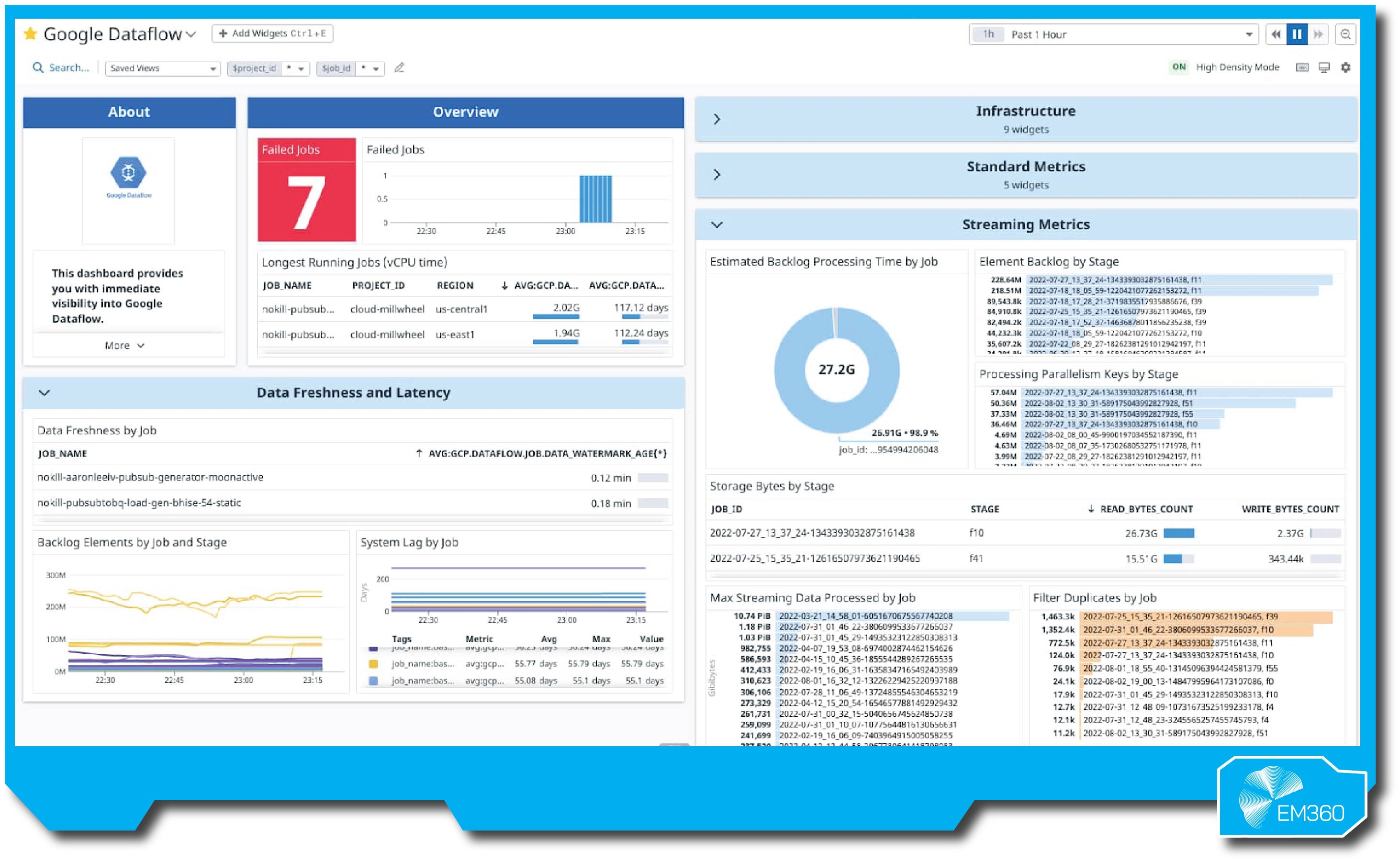

5. Google Cloud (Pub/Sub, Dataflow and BigQuery)

Google Cloud pairs Pub/Sub for messaging, Dataflow for processing and BigQuery for analytics to deliver a serverless, real-time stack. Events flow from producers into Pub/Sub, transform in Dataflow, then land in BigQuery for instant SQL.

Enterprise-ready features

Pub/Sub offers global, low-latency delivery with elastic scale. Dataflow, built on Apache Beam, provides stateful stream processing, windowing, and event-time semantics with autoscaling, as well as templates for common patterns.

BigQuery ingests via the Storage Write API for near-immediate queryability, while materialised views and continuous queries keep transformations current. Governance, IAM, encryption and Cloud Monitoring apply end to end, with Looker and BigQuery ML on top for BI and ML.

Pros

- Fully managed services reduce operational burden.

- Unified pipeline from ingest to analytics in minutes.

- Near real-time SQL in BigQuery with familiar tooling.

- Strong AI and BI integrations out of the box.

- The global footprint supports multi-region reliability.

Cons

- Deepest value comes if you commit to GCP services.

- Beam/Dataflow concepts add a learning curve.

- Always-on streaming can drive costs without guardrails.

Best for

Teams that want a fast, serverless path to real-time dashboards and operational analytics using standard SQL, with tight integration to Google’s AI and BI ecosystem.

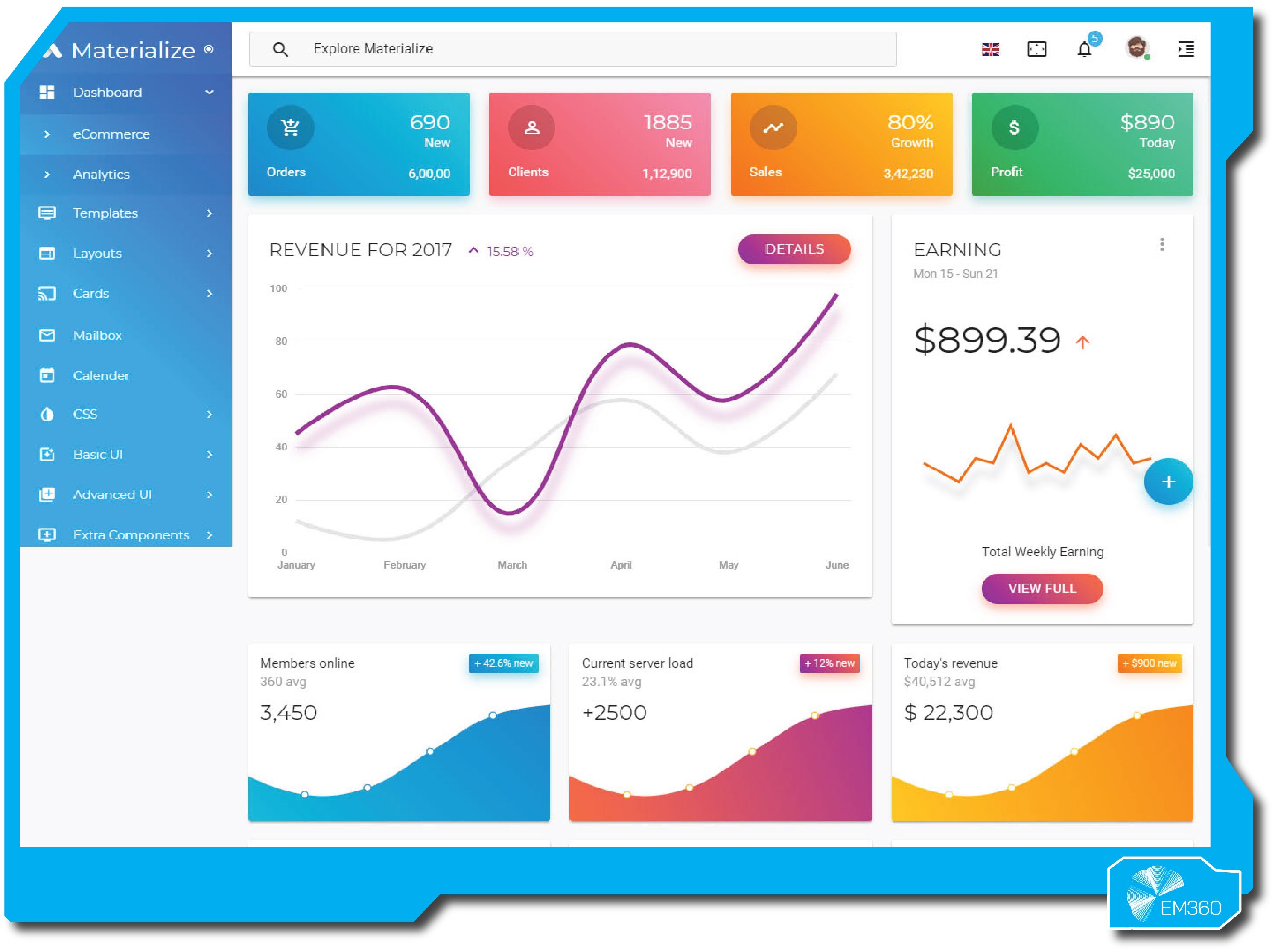

6. Materialize

Materialize is a streaming database that keeps SQL results continuously up to date. You write standard SQL for joins and aggregations, and it maintains materialised views so queries reflect the latest events.

Enterprise-ready features

Incremental computation and exactly-once ingestion deliver low-latency, correct answers as data arrives. It ingests from Kafka, Kinesis and CDC sources, exposes a PostgreSQL interface for easy connectivity, and supports complex joins and windowing with ACID guarantees.

Available as a managed service or self-hosted, it provides metrics for lag and performance, with features to optimise state and memory as workloads grow.

Pros

- True streaming SQL lowers the skills barrier.

- Views update in milliseconds for live analytics.

- ACID semantics provide trustworthy results.

- Handles complex joins that are hard in low-level engines.

- Postgres wire compatibility simplifies integration.

Cons

- Stateful views can be memory-heavy at large scale.

- Newer technology with a smaller production footprint.

- Source support outside Kafka and Postgres may need extra work.

Best for

Product and data teams that want real-time dashboards, alerts and user-facing features built quickly with SQL, without managing a separate stream-processing codebase.

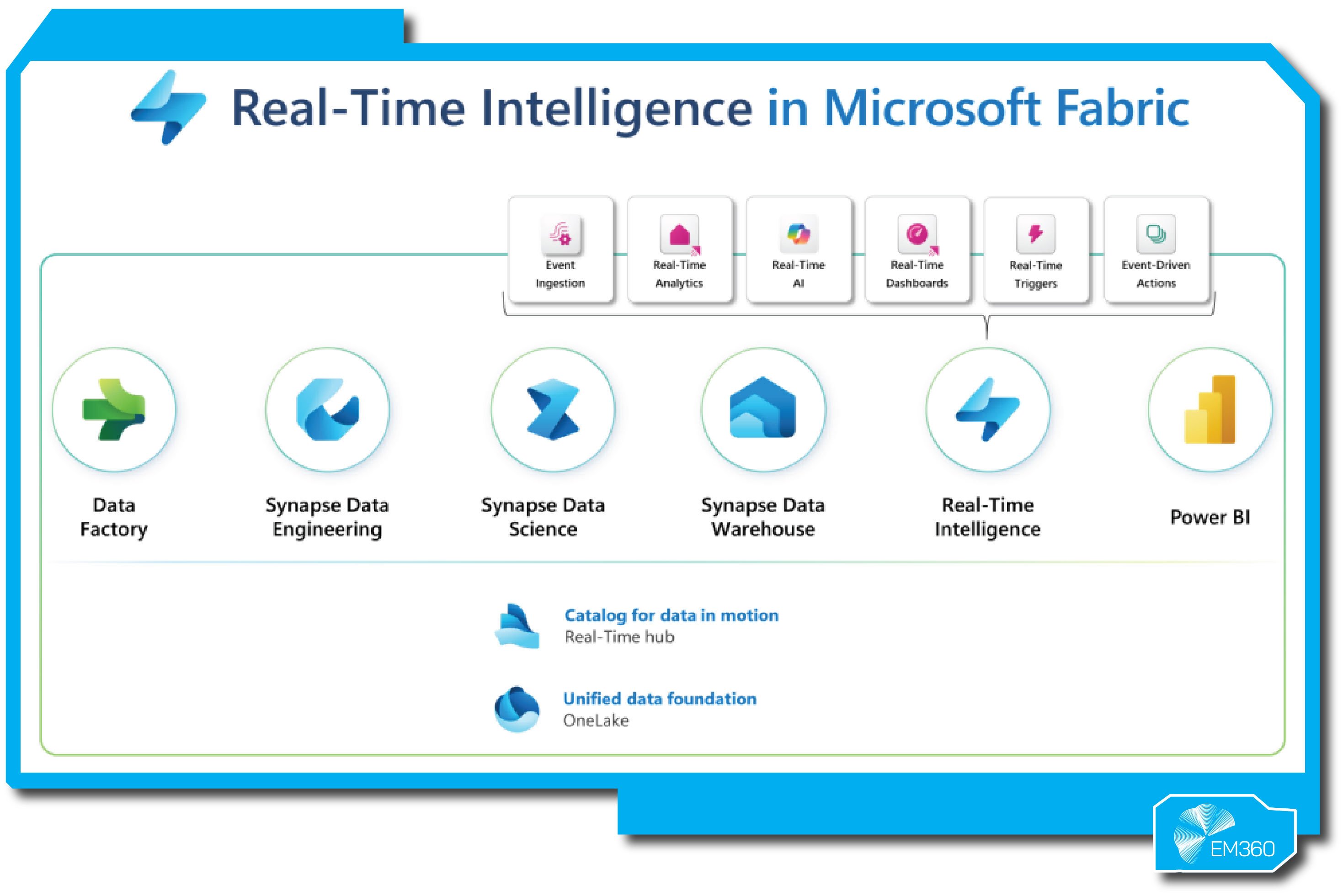

7. Microsoft Fabric Real-Time Intelligence

Microsoft Fabric brings streaming analytics, warehousing and BI into a single SaaS experience. Real-Time Intelligence uses Eventstream and the Kusto engine to feed live insights directly into Power BI and OneLake.

Enterprise-ready features

OneLake mirroring enables zero-ETL data movement from operational stores into Fabric. Eventstream provides no-code pipelines to capture, route and shape events, while KQL delivers fast queries on time-series and log data.

Governance is unified through Purview, with security and capacity controls across items. Native ties to Power BI enable live dashboards and alerts, and Fabric manages scale and updates behind the scenes.

Pros

- Unified stack for streaming, BI and governance.

- Zero-ETL mirroring cuts integration effort.

- Kusto engine excels at telemetry analytics.

- No-code pipelines shorten time to first value.

- Tight Power BI integration for real-time reporting.

Cons

- New platform with features still maturing.

- Best fit for Microsoft-centric environments.

- Capacity planning and tuning need attention at scale.

Best for

Organisations on Microsoft stacks that want governed, real-time dashboards and analytics without stitching multiple Azure services, prioritising fast rollout and shared tooling.

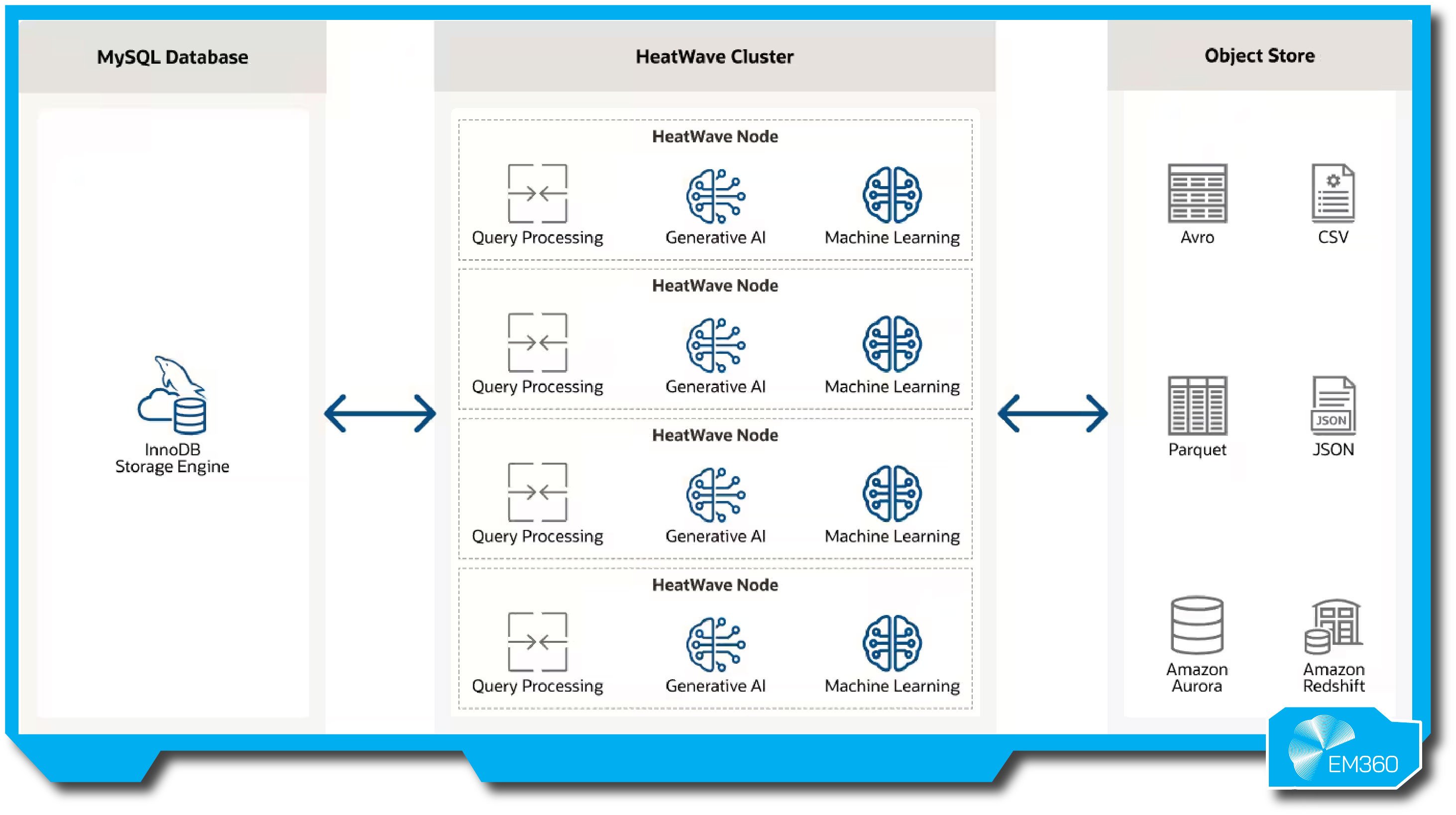

8. Oracle MySQL HeatWave

MySQL HeatWave extends MySQL with an in-memory, massively parallel engine for analytics and ML. It turns operational MySQL into an HTAP system so you can run real-time queries and models on live transactional data.

Enterprise-ready features

HeatWave accelerates joins, aggregations and training with vectorised execution and distributed memory, removing the lag of ETL into a separate warehouse. AutoML enables model training and inference in SQL, while HeatWave Lakehouse queries external object storage for larger data.

High availability, MySQL authentication, encryption and managed operations on OCI and AWS deliver governed performance without changing applications.

Pros

- Real-time analytics on operational MySQL with no ETL.

- In-memory MPP engine delivers major speedups.

- Built-in AutoML keeps ML close to the data.

- Fewer data copies reduce risk and complexity.

- Managed service simplifies deployment and scaling.

Cons

- Active dataset size is constrained by memory.

- Cloud availability is limited to supported providers.

- Pure OLTP workloads may not justify the extra cost.

Best for

MySQL-centric teams that need immediate insight from live transactions, from fraud checks to real-time reporting, and prefer a unified system over adding a separate analytics database.

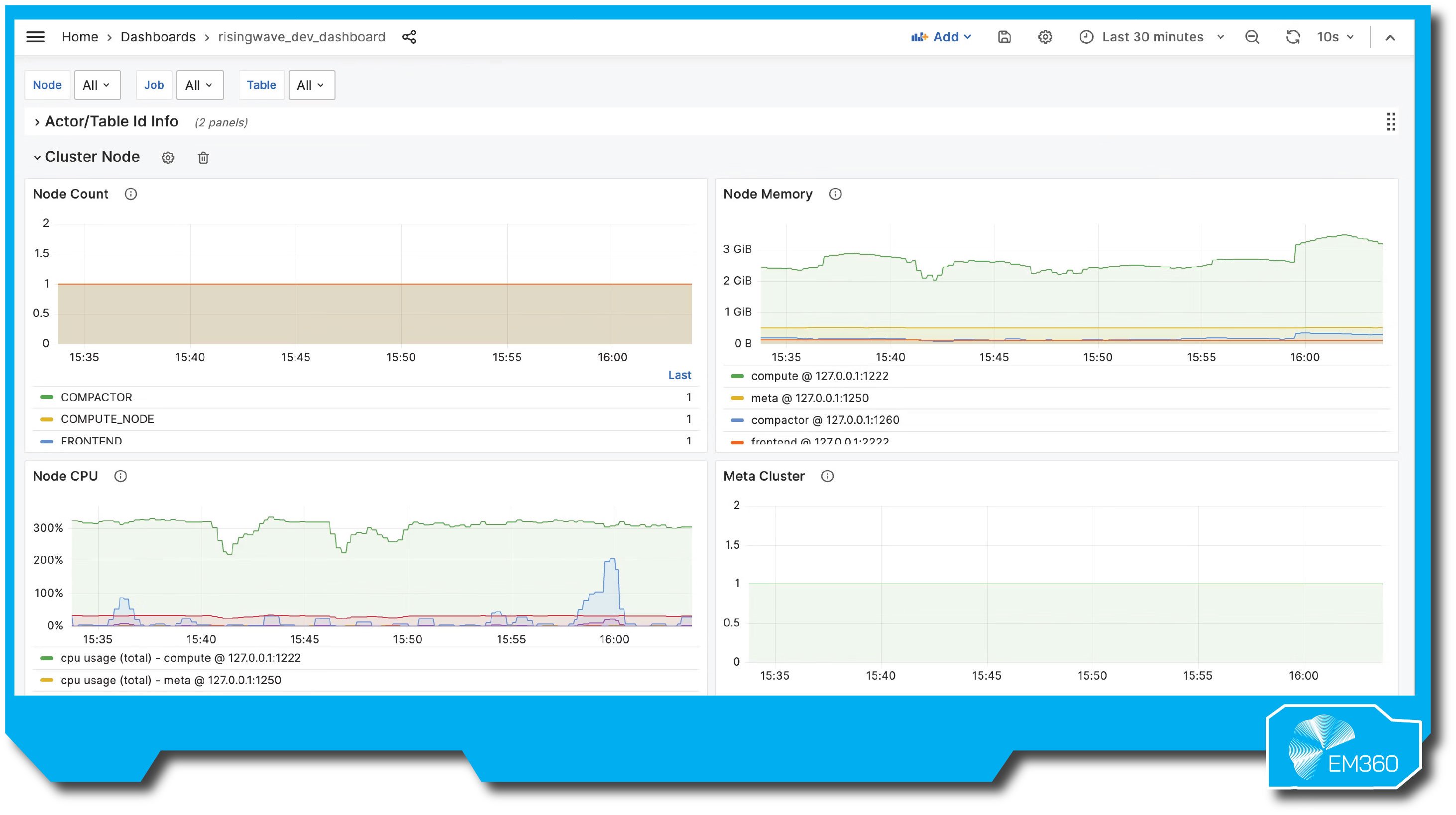

9. RisingWave

RisingWave is an open-source streaming database for cloud-native workloads that keeps SQL results current as events arrive. Its disaggregated architecture separates compute and storage to deliver real-time processing with a focus on simplicity and cost control.

Enterprise-ready features

Incremental computation maintains materialised views as data lands, supporting windowed aggregations, joins across streams and reference tables, and user-defined functions with familiar SQL. Compute–storage separation lets the system spill state to disk or object storage, reducing memory pressure for large stateful jobs and improving cost efficiency.

Built in Rust and designed for Kubernetes, RisingWave ingests from Kafka, Redpanda and Debezium CDC, exposes Postgres-compatible endpoints, and integrates with Prometheus and Grafana for monitoring. A managed cloud option handles provisioning, scaling and resilience via state recovery on failure.

Pros

- Cloud-native design with separated storage reduces memory costs at scale.

- Standard SQL lowers the learning curve for streaming analytics.

- Open source avoids lock-in and enables self-hosting.

- Horizontal scaling and Kubernetes readiness fit modern deployments.

- A rapid development cadence is steadily adding capabilities such as watermarking and windowing.

Cons

- A younger ecosystem, with fewer production references at Fortune 500 scale.

- Smaller community and tooling compared with Kafka or Flink.

- Some advanced features are still maturing.

- Early adopters may encounter edge cases and performance tuning needs.

- Overlaps with existing Kafka+Flink stacks may limit adoption in entrenched estates.

Best for

Teams building fresh, cloud-native streaming pipelines that want SQL-first development, lean operations and lower memory costs, from IoT time windows to real-time product analytics.

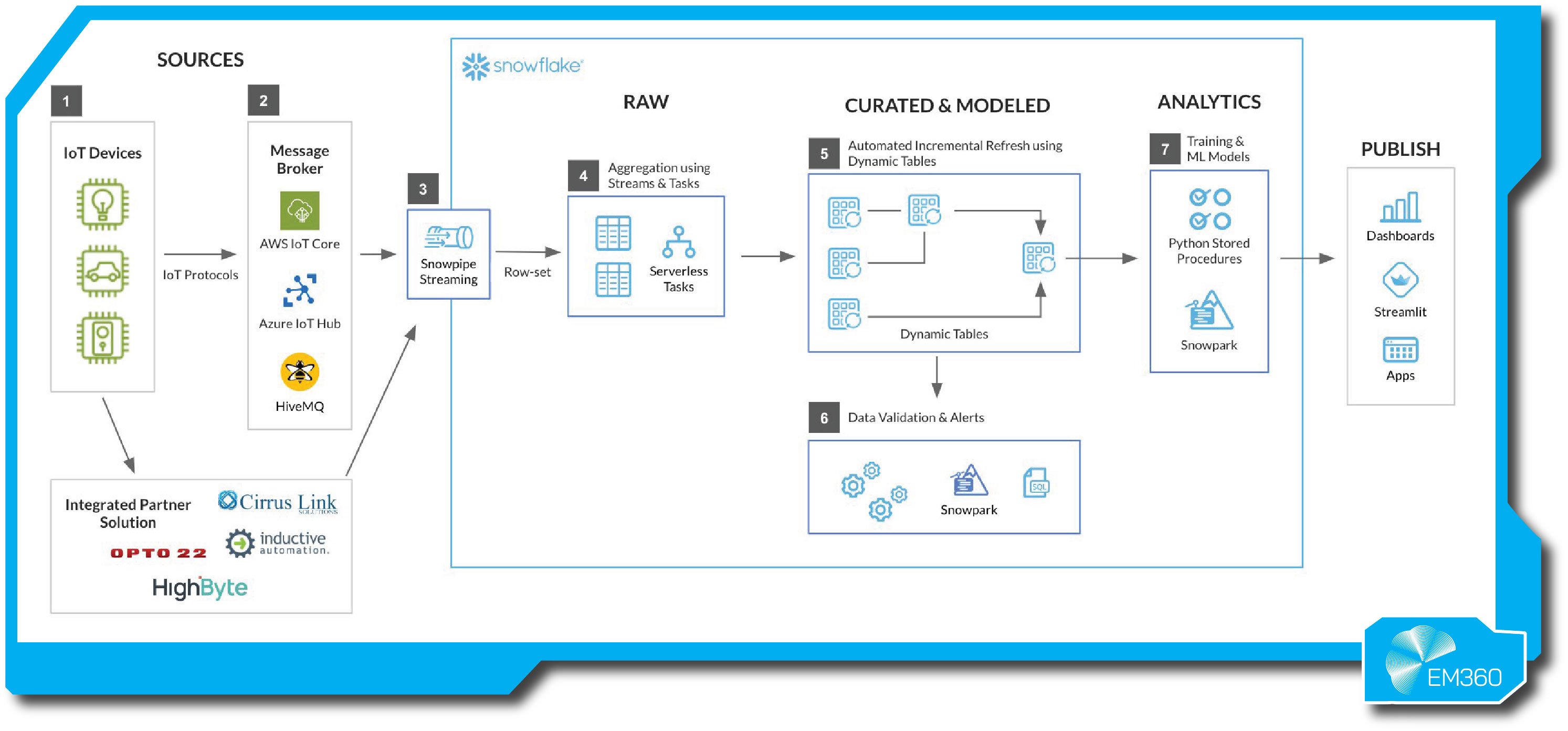

10. Snowflake (Snowpipe Streaming and Dynamic Tables)

Snowflake extends its warehouse with Snowpipe Streaming for low-latency ingestion and Dynamic Tables for continuous SQL transformations, bringing real-time data alongside historical analytics in one governed platform.

Enterprise-ready features

Snowpipe Streaming pushes events directly into Snowflake with exactly-once delivery, removing staging delays. Dynamic Tables act like continuously updated materialised views, keeping downstream models and BI current without external stream processors.

Elastic virtual warehouses isolate ingest and query workloads, while governance, lineage, security and cross-cloud availability apply uniformly to streaming and batch. Snowpark and external functions let teams embed Python or Java logic and ML scoring in the same environment.
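Exactly-once delivery from a streaming client usually rests on idempotency: the producer may retry, and the destination remembers how far each channel has committed so duplicates are skipped. Snowpipe Streaming exposes a similar idea through per-channel offset tokens; the class below is a hypothetical, simplified model of the pattern, not the Snowflake SDK.

```python
class IdempotentSink:
    """Hypothetical destination that remembers the last committed offset per channel."""

    def __init__(self):
        self.committed = {}  # channel name -> highest committed offset
        self.rows = []

    def write_batch(self, channel, batch):
        """batch: (offset, row) pairs with increasing offsets; returns rows actually written."""
        last = self.committed.get(channel, -1)
        fresh = [(offset, row) for offset, row in batch if offset > last]
        if fresh:
            # A real system must commit the rows and the new offset atomically
            self.rows.extend(row for _, row in fresh)
            self.committed[channel] = fresh[-1][0]
        return len(fresh)


sink = IdempotentSink()
sink.write_batch("orders-0", [(0, "a"), (1, "b")])  # 2 rows written
sink.write_batch("orders-0", [(1, "b"), (2, "c")])  # retry overlaps: only 'c' is new
```

After a client restart, it can ask the destination for the last committed offset and resume from there, so retries never double-count.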

Pros

- Real-time data and analytics in a familiar warehouse with standard SQL.

- Dynamic Tables reduce streaming ETL engineering effort.

- Strong governance, security and multi-cloud operations.

- Connectors and APIs simplify ingestion from Kafka and apps.

- Unified skills and tooling accelerate adoption.

Cons

- Newer streaming features may have limits as they scale.

- Continuous workloads can increase credit spend without controls.

- Latency is low but not always millisecond-class.

- Proprietary platform reduces on-prem flexibility.

- High-volume raw streams may require pre-aggregation to manage cost.

Best for

Enterprises already on Snowflake that want near real-time dashboards and pipelines without adding a separate streaming stack, keeping BI, models and governance in one place.

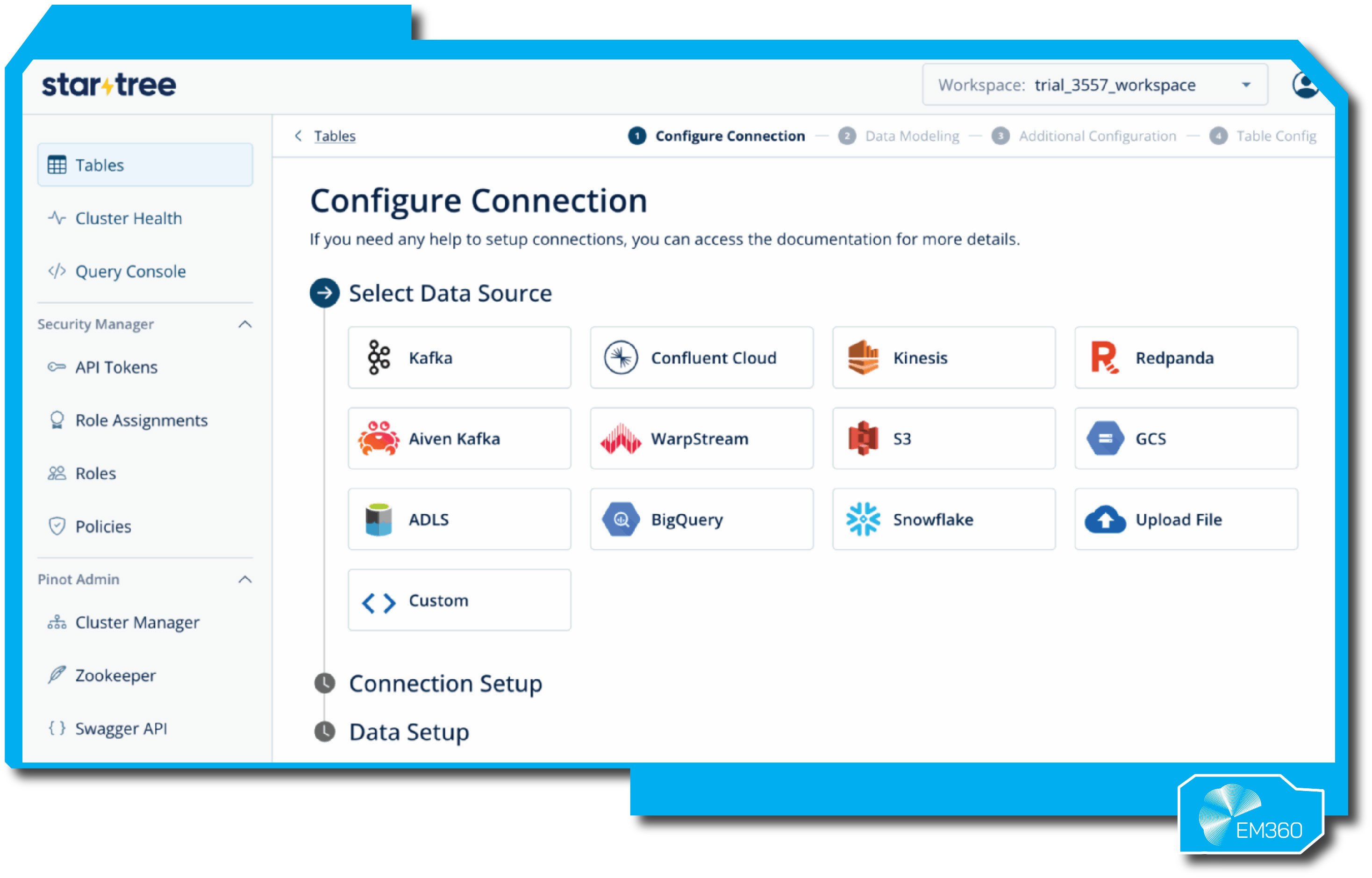

11. StarTree (Apache Pinot)

StarTree delivers a managed platform for Apache Pinot, the real-time OLAP datastore built for sub-second, high-concurrency analytics on fresh event data powering user-facing products and operational dashboards.

Enterprise-ready features

Pinot ingests streams from Kafka or Kinesis and blends them with historical data, using columnar storage and indexes such as inverted, sorted and star-tree to answer aggregations quickly at scale. StarTree adds cloud operations, multi-tenancy, security, ingestion tooling and ThirdEye anomaly detection to speed production rollout and ongoing performance tuning.

Latency from ingest to query is typically seconds or less, and the platform is built to sustain heavy QPS for interactive exploration of time-series and dimensional metrics.
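Why an inverted index speeds up filtered aggregations can be shown in a few lines: instead of scanning every row, the query intersects the sets of row ids that match each filter. This is a simplified sketch with Python sets standing in for the compressed bitmaps real engines such as Pinot typically use; the columns and function names are illustrative.

```python
from collections import defaultdict


def build_inverted_index(rows, column):
    """Map each value of `column` to the set of row ids containing it."""
    index = defaultdict(set)
    for row_id, row in enumerate(rows):
        index[row[column]].add(row_id)
    return index


def filtered_sum(rows, indexes, filters, metric):
    """Sum `metric` over rows matching all equality filters.

    Intersecting the posting sets touches only matching rows, not the full table.
    """
    matching = None
    for column, value in filters.items():
        ids = indexes[column].get(value, set())
        matching = ids if matching is None else matching & ids
    if matching is None:
        matching = range(len(rows))  # no filters: aggregate everything
    return sum(rows[i][metric] for i in matching)


rows = [
    {"country": "UK", "device": "ios", "views": 3},
    {"country": "UK", "device": "web", "views": 5},
    {"country": "US", "device": "ios", "views": 7},
    {"country": "UK", "device": "ios", "views": 1},
]
indexes = {col: build_inverted_index(rows, col) for col in ("country", "device")}
filtered_sum(rows, indexes, {"country": "UK", "device": "ios"}, "views")  # 4
```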

Pros

- Proven sub-second analytics at scale for user-facing workloads.

- Real-time plus historical data in a single fast store.

- Managed service reduces cluster complexity.

- Rich indexing accelerates selective and high-cardinality queries.

- Built-in anomaly detection assists metric monitoring.

Cons

- Analytic focus means no transactional workloads.

- Self-managing Pinot can be complex without StarTree.

- SQL is purpose-built and may limit cross-table joins.

- Resource demands require careful capacity planning.

- Vendor maturity may be a factor for risk-averse buyers.

Best for

Applications and internal portals that need interactive, sub-second analytics on fresh events at high concurrency, from product telemetry and metrics exploration to anomaly detection.

12. Tinybird

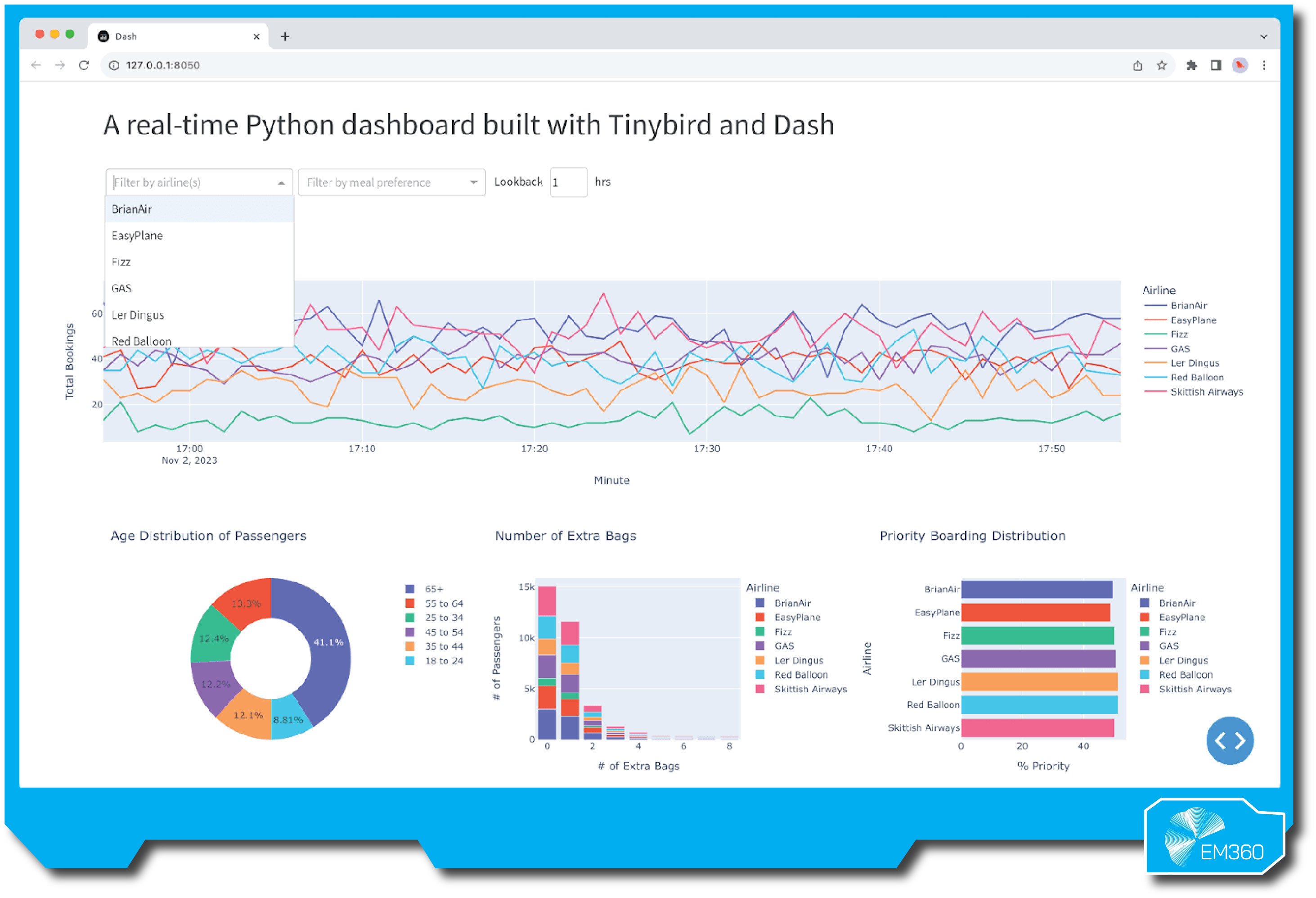

Tinybird turns streaming and batch data into low-latency APIs backed by ClickHouse. Teams ingest events, build transformations as SQL “Pipes”, and publish results as HTTPS endpoints for apps and dashboards.

Enterprise-ready features

Managed ClickHouse handles ingestion from Kafka, webhooks and databases, with incremental updates and materialisations for fast queries. Pipes are version-controlled and CI/CD-friendly, with detailed query observability to tune performance.

Governed access comes via tokens and roles, while deployment options include hosted and private to meet compliance needs. The platform serves millisecond API responses at scale without teams managing clusters.

Pros

- Developer-first: SQL-to-API flow shortens time to product.

- ClickHouse performance delivers sub-second responses at scale.

- Managed service removes operational overhead.

- Strong CI/CD integration supports data-as-code practices.

- Ideal for rapid prototyping and iterative delivery.

Cons

- Newer vendor may raise longevity questions for some buyers.

- The cloud-managed model can be sensitive for strict data policies.

- Very complex logic may need external services beyond SQL.

- Usage-based pricing needs active cost monitoring.

- Advanced teams may prefer direct ClickHouse control.

Best for

Teams that need real-time metrics and aggregation APIs quickly — powering customer dashboards, product analytics, and operational views — without building and running a custom data backend.

Common Implementation Challenges (and How To Overcome Them)

Even with the right platform, real-time programmes can stumble without clear guardrails. Focus execution on four areas.

Cost and complexity: Real-time pipelines can be expensive at 24/7 scale. Use managed and serverless services so capacity tracks demand, filter and aggregate early to cut throughput, and set budgets and alerts for streaming jobs. Prefer integrated stacks over tool sprawl to reduce engineering effort and ongoing run costs.
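Filtering and aggregating early is often the cheapest cost lever, because every event dropped or rolled up at the source is one the pipeline never has to move, store or process. A minimal sketch, assuming events are (timestamp, type) pairs and that heartbeat events are noise for the use case; both the event shape and the filter rule are hypothetical.

```python
from collections import Counter


def pre_aggregate(events, window_size, keep):
    """Filter raw events and roll them up into per-window counts before shipping.

    events: (timestamp_seconds, event_type) pairs; keep: predicate for events that matter.
    Returns a Counter keyed by (window_start, event_type).
    """
    rollup = Counter()
    for ts, event_type in events:
        if not keep(event_type):
            continue  # drop noise as early as possible
        rollup[(ts - ts % window_size, event_type)] += 1
    return rollup


raw = [(0, "checkout"), (1, "heartbeat"), (2, "checkout"), (61, "checkout"), (62, "error")]
summary = pre_aggregate(raw, window_size=60, keep=lambda t: t != "heartbeat")
# five raw events become three summary records downstream
```

The trade-off is lost detail: only pre-aggregate streams whose consumers genuinely need counts or rates rather than individual events.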

Data governance and quality: Build trust into the stream. Enforce schemas and access controls from the start, use a schema registry or data contracts, and run automated quality checks that quarantine bad records. Document streams, track lineage, and tie pipelines into existing catalogues and security controls so compliance is continuous, not an afterthought.
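The quarantine pattern can be sketched simply: validate each record against a data contract and divert failures to a separate destination instead of dropping them or letting them corrupt downstream tables. A schema registry normally enforces formats such as Avro, Protobuf or JSON Schema at serialisation time; the plain-Python contract below is a hypothetical stand-in to illustrate the routing logic.

```python
# Hypothetical data contract for an "orders" stream: field name -> required type
ORDER_CONTRACT = {"order_id": str, "amount": float, "currency": str}


def validate(record, contract):
    """Return a list of contract violations for one record (empty means valid)."""
    errors = [f"missing field {name!r}" for name in contract if name not in record]
    errors += [
        f"{name!r} should be {expected.__name__}"
        for name, expected in contract.items()
        if name in record and not isinstance(record[name], expected)
    ]
    return errors


def route(records, contract):
    """Split a batch into valid records and quarantined (record, errors) pairs."""
    valid, quarantined = [], []
    for record in records:
        errors = validate(record, contract)
        if errors:
            quarantined.append((record, errors))  # keep the reason for later triage
        else:
            valid.append(record)
    return valid, quarantined
```

Keeping the error reasons alongside quarantined records makes it possible to fix producers and replay the failures rather than losing them.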

Integration complexity: Connecting many sources and sinks adds friction. Lean on unified platforms, iPaaS, and pre-built connectors; when custom work is needed, standardise on CDC for databases, and webhooks or REST for apps. Decouple producers and consumers through an event bus, such as Kafka or Pub/Sub, and agree on common data models early to minimise one-off transformations.

Skills and culture gap: Streaming needs new habits as well as new tools. Invest in training, choose higher-level or SQL-first options where they fit, and start with contained use cases to build confidence. Create a cross-functional squad that owns outcomes, and bring in expert partners for the first implementation to accelerate skills transfer.

The Future of Real-Time Analytics

Real-time analytics is moving from dashboards to decisioning. AI-native systems will ingest live signals and act in the moment, from personalising a page to tuning a machine, which means tighter coupling between analytics platforms and ML frameworks. Expect more built-in capabilities such as anomaly detection and predictive scoring powered by streaming data.

Generative AI will sit on top of live streams. Leaders will ask questions in plain language and get immediate, narrative answers. These co-pilots will also surface insights unprompted, scanning sales, marketing and operations to flag what matters now.

Observability is set to mature. Monitoring will focus on the data itself, not only the infrastructure. Pipelines will detect lag, drift and drops, then repair themselves or reroute automatically. End-to-end lineage and real-time alerts will sustain trust in fast-moving data.

Edge analytics will expand. Processing will happen on factory floors, in vehicles and in stores where bandwidth and latency make cloud-only designs impractical. Devices will analyse locally, then send summaries or exceptions upstream.

Automation will converge with streaming. Event-driven architectures and digital twins will trigger actions as conditions change, from dynamic pricing and automated trading to supply chains that reroute in real time. Systems will prevent outages by responding to early signals before users experience any impact.

Strategy will trump speed for speed’s sake. Enterprises will define where real time creates outsized value and keep batch for the rest. The winners will blend human judgement with automated intelligence, closing the loop so outcomes feed models continuously and the organisation learns while it operates.

Final Thoughts: Speed Only Matters When It’s Strategic

Speed without purpose achieves little. Real-time analytics only creates value when it enables sharper, faster decisions that serve the organisation’s wider goals. Whether it's an AI model rebalancing inventory in seconds or executives tracking live market shifts, the advantage lies in clarity and confidence — powered by trusted data.

The priority is intentional use. Focus real-time capabilities where they move the needle — improving customer experience, reducing risk, or accelerating innovation. When applied strategically, streaming data becomes a competitive differentiator and a foundation for AI-driven agility.

In a business landscape defined by volatility, real-time intelligence separates leaders from followers. The enterprises that will thrive are those that treat data speed as a tool for foresight, not just velocity — and that keep investing in the systems, governance, and culture to make insight actionable.

For more perspectives on turning real-time data into enterprise advantage, explore EM360Tech’s latest insights on data strategy, AI integration, and intelligent automation.
