Modern data teams are under constant pressure to move faster without losing control. New AI workloads, real-time analytics and rising regulatory demands all depend on data that is not only available, but explainable, traceable and trusted. That is impossible to deliver with pipelines alone.

The real engine behind reliable DataOps is metadata. When it is captured consistently and activated across the data lifecycle, metadata becomes the connective tissue that keeps pipelines observable, governance enforceable and data products usable at scale.

This is the shift many analysts are now pointing to. Gartner highlights metadata management as a top data and analytics trend for 2025, noting that effective practices start with technical metadata and then expand into business context so that catalogs, lineage and AI use cases can work together.

Forrester frames it as a “metadata activation” imperative, where governance evolves from passive documentation into active workflows that power governed data products and AI pipelines. The question for data leaders is no longer whether metadata matters.

It is how to turn it into the operational backbone of DataOps, rather than a static side project that lives in a catalog nobody trusts.

What Metadata Means Inside Modern DataOps

At a simple level, metadata is data about data. It captures what a dataset is, where it came from, how it is structured, who owns it and how it has changed over time. In a modern DataOps context, that description is not enough.

For DataOps, metadata is the context layer that tells pipelines how to behave. It describes sources, transformations, quality checks, business definitions, usage patterns and access policies in a way that both humans and automation can work with. When your DataOps practices mature, that context stops being an afterthought and becomes a core part of the architecture.

Three families of metadata are especially relevant here:

- Technical metadata describes schemas, tables, columns, data types, jobs, APIs and workflows. It is the backbone for lineage, impact analysis and automated quality checks.

- Business metadata captures definitions, KPIs, owners, usage descriptions and glossaries. It links pipelines and models to business meaning, which is where trust lives.

- Operational metadata tracks runtime behaviour. It holds metrics such as job durations, failure rates, data freshness, data volumes and access patterns. It feeds DataOps monitoring and observability.

When these metadata types are captured in a coherent architecture and stored in a shared repository, they become the raw material for automation, governance and collaboration.
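As a rough illustration of what a record in such a shared repository might hold, the sketch below groups the three families into one structure. All class names, field names and sample values are hypothetical, chosen only for this example, and a real catalog would carry far more detail.

```python
from dataclasses import dataclass, field


@dataclass
class TechnicalMetadata:
    # Schema-level facts: column name -> data type.
    columns: dict[str, str]
    source_system: str


@dataclass
class BusinessMetadata:
    definition: str
    owner: str
    glossary_terms: list[str] = field(default_factory=list)


@dataclass
class OperationalMetadata:
    last_run_seconds: float
    failure_rate: float  # Fraction of recent runs that failed, 0.0 to 1.0.
    row_count: int


@dataclass
class AssetMetadata:
    """One hypothetical repository record combining all three families."""
    name: str
    technical: TechnicalMetadata
    business: BusinessMetadata
    operational: OperationalMetadata


orders = AssetMetadata(
    name="sales.orders",
    technical=TechnicalMetadata(
        columns={"order_id": "int", "amount": "decimal"},
        source_system="erp",
    ),
    business=BusinessMetadata(
        definition="Confirmed customer orders",
        owner="sales-data-team",
        glossary_terms=["Order"],
    ),
    operational=OperationalMetadata(
        last_run_seconds=42.0, failure_rate=0.02, row_count=10_000
    ),
)
```

Keeping the families as separate nested objects means each can be populated by a different tool (a schema scanner, a glossary, a scheduler) while automation still reads one record per asset.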

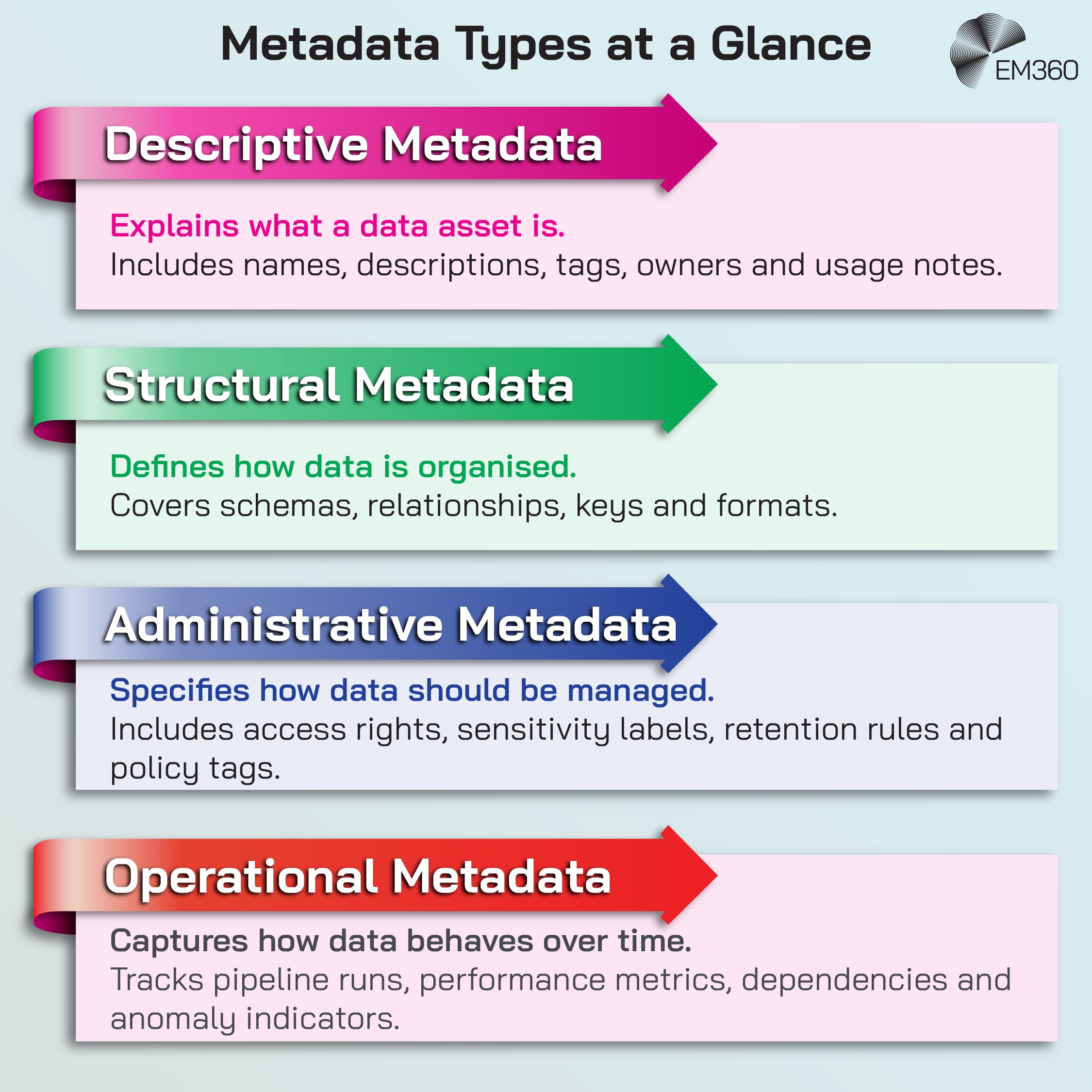

Types of Metadata Data Leaders Actually Rely On

From an enterprise perspective, it helps to translate the classic taxonomy into real uses:

- Descriptive metadata tells users what a data asset is. It includes names, descriptions, tags, owners, related products and usage notes. This is what makes a catalog searchable and understandable.

- Structural metadata describes how data is organised. It covers schemas, primary keys, relationships, hierarchies and formats. This is what allows tools to join datasets safely and understand how changes will ripple through.

- Administrative metadata focuses on management and control. It holds access rights, retention policies, sensitivity labels, licensing details and regulatory tags. This is essential for governance and compliance.

- Operational metadata tracks behaviour over time. It records run logs, pipeline dependencies, performance indicators, anomaly flags and downstream usage. This is the heartbeat DataOps teams watch to keep pipelines healthy.

All of these rely on consistent metadata schemas and standards so that different tools can exchange and interpret context automatically. Without that, every integration becomes a one-off and the DataOps experience degrades into manual mapping and guesswork.

Why Metadata Is the Foundation Of Scalable DataOps

Many organisations still treat metadata as a documentation exercise. Someone fills in descriptions after a project goes live, then everyone forgets about it. That approach does not scale.

In a mature DataOps practice, metadata is the system of record for how data flows across the organisation. It tells you:

- Which datasets feed which products

- Which checks were applied

- Which policies govern access and retention

- Which teams rely on which pipelines

That level of visibility unlocks several critical capabilities:

- Data lineage that shows end-to-end flows, so leaders can understand blast radius before a change lands in production.

- Data quality monitoring that uses structural and operational metadata to detect anomalies, schema drift and stale data.

- Stronger data governance by linking policies to specific assets, roles and workflows rather than storing them in static documents.

- Pipeline observability that gives DataOps teams insight into performance, bottlenecks and failure patterns without manual investigation.

The result is an environment where change can move faster without raising risk. That is impossible to achieve if metadata is scattered across tools or trapped in the heads of a few experts.
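To make the quality monitoring capability above more concrete, here is a minimal sketch of two checks that need nothing beyond metadata: comparing a registered schema with an observed one, and comparing a last-load timestamp with a freshness threshold. The function names, the sample schemas and the 24-hour threshold are assumptions for illustration only.

```python
from datetime import datetime, timedelta, timezone


def detect_schema_drift(
    expected: dict[str, str], observed: dict[str, str]
) -> dict[str, list[str]]:
    """Compare the registered schema with the schema observed at run time."""
    shared = set(expected) & set(observed)
    return {
        "added": sorted(set(observed) - set(expected)),
        "removed": sorted(set(expected) - set(observed)),
        "type_changed": sorted(c for c in shared if expected[c] != observed[c]),
    }


def is_stale(last_loaded: datetime, max_age: timedelta, now: datetime) -> bool:
    """Flag a dataset whose last successful load is older than the allowed age."""
    return now - last_loaded > max_age


# Structural metadata: what the catalog expects versus what the pipeline saw.
expected = {"order_id": "int", "amount": "decimal", "region": "string"}
observed = {"order_id": "int", "amount": "float", "channel": "string"}
drift = detect_schema_drift(expected, observed)

# Operational metadata: last successful load versus a 24-hour freshness limit.
now = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
last_loaded = datetime(2025, 1, 1, 6, 0, tzinfo=timezone.utc)
stale = is_stale(last_loaded, timedelta(hours=24), now)
```

Passing `now` in explicitly, rather than reading the clock inside the function, keeps the check deterministic and easy to test.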

The DataOps Architecture Is Now Metadata Driven

As architectures become more distributed and hybrid, metadata is becoming the design language that keeps everything coherent. Analysts often describe this as a metadata-driven data fabric that captures and analyses metadata across the entire pipeline to support orchestration and operational excellence through DataOps.

In practice, a metadata-aware DataOps architecture typically includes:

- A central metadata repository or catalog that aggregates technical, business and operational metadata from multiple sources.

- Data pipeline automation that reads from this repository to understand dependencies, apply checks, enforce policies and route data to the right products.

- A semantic layer that exposes consistent business definitions and metrics across tools, powered by shared metadata.

- Governance workflows that use metadata to drive approvals, access requests, policy enforcement and incident response.

When this works well, teams can build new pipelines and products by composing from known, well described building blocks instead of starting from scratch every time.

Active Metadata and Real Time DataOps

The most significant change in recent years is the move from static catalogs to active metadata. Instead of only storing descriptions and lineage for humans to browse, active metadata platforms continuously collect, infer and apply metadata in real time.

This includes:

- Automatically discovering new datasets and schema changes

- Inferring relationships based on usage patterns

- Updating lineage graphs as pipelines run

- Highlighting quality issues or policy breaches as they occur

Forrester and other analysts describe this as the shift from passive metadata management to metadata that actively powers data products and AI pipelines. For DataOps teams, that means metadata is no longer a background concern. It shapes pipeline behaviour through automated lineage, dynamic routing, adaptive testing and policy-aware transformations.
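One way to picture a policy-aware transformation is a step that consults sensitivity labels before releasing a row. The sketch below is a simplified assumption of how that could look: the `SENSITIVITY` mapping, the `pii` label and the `cleared_for_pii` flag are all hypothetical, and truncated hashing stands in for whatever masking technique an organisation actually mandates.

```python
import hashlib

# Hypothetical administrative metadata: column name -> sensitivity label.
SENSITIVITY = {"email": "pii", "customer_id": "internal", "amount": "public"}


def mask(value: str) -> str:
    """Replace a value with a short, stable SHA-256 digest."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:12]


def apply_policy(
    row: dict[str, str], labels: dict[str, str], cleared_for_pii: bool
) -> dict[str, str]:
    """Mask every column labelled 'pii' unless the consumer is cleared to see it."""
    return {
        col: mask(val) if labels.get(col) == "pii" and not cleared_for_pii else val
        for col, val in row.items()
    }


row = {"email": "ada@example.com", "customer_id": "C-17", "amount": "19.99"}
masked = apply_policy(row, SENSITIVITY, cleared_for_pii=False)
```

Note that this sketch lets unlabelled columns pass through unchanged. A stricter design could mask anything without a label, which trades convenience for safety when metadata coverage is incomplete.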

Where Metadata Lives in the DataOps Toolchain

To make this real, data leaders need a clear mental model of where metadata is generated and consumed:

- Source systems and ingestion tools generate technical and operational metadata about schemas, APIs, logs and extracts.

- Transformation and orchestration tools generate lineage, dependency graphs, run histories and error logs.

- Catalogs and dictionaries consolidate descriptive, structural and administrative metadata and expose them through search and APIs.

- Governance platforms and workflow tools consume metadata to drive approvals, policy checks, exception handling and attestations.

- CI/CD pipelines use metadata to understand which tests to run, what to deploy and where to notify when something breaks.

- Observability and monitoring tools rely on operational metadata to detect anomalies, drift and performance degradation.

The more tightly these components are integrated around a shared metadata layer, the more effective DataOps becomes. When they are not, teams fall back on manual spreadsheets and ad hoc knowledge, which defeats the purpose of automation.
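As a small illustration of the CI/CD point above, the sketch below uses catalog entries to decide which tests to run and which channels to notify for a given change. The `CATALOG` structure, asset names, test names and channel names are invented for this example and do not correspond to any particular tool.

```python
# Hypothetical catalog entries: asset -> tests to run and the channel to notify.
CATALOG = {
    "staging.customers": {
        "tests": ["not_null_customer_id", "unique_customer_id"],
        "notify": "#crm-data",
    },
    "marts.customer_360": {
        "tests": ["row_count_within_range"],
        "notify": "#analytics",
    },
    "marts.revenue": {
        "tests": ["revenue_reconciles_to_ledger"],
        "notify": "#finance-data",
    },
}


def plan_ci_run(changed_assets: list[str], catalog: dict[str, dict]) -> dict[str, list[str]]:
    """Collect the tests and notification channels for the assets a change touches."""
    tests: list[str] = []
    channels: set[str] = set()
    for asset in changed_assets:
        entry = catalog.get(asset)
        if entry is None:
            continue  # Unregistered asset: nothing to select in this sketch.
        tests.extend(entry["tests"])
        channels.add(entry["notify"])
    return {"tests": tests, "notify": sorted(channels)}


plan = plan_ci_run(["staging.customers", "marts.customer_360"], CATALOG)
```

In practice an unregistered asset would probably deserve a warning rather than a silent skip, since a gap in the catalog is itself a signal worth surfacing.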

How Metadata Strengthens Governance and Risk Controls

From a governance perspective, metadata is how policy becomes practice. It connects what organisations say they do with what actually happens in pipelines and products.

Regulations such as GDPR and CCPA, along with sector-specific rules, are pushing organisations to invest in tools that enhance data lineage, quality and accessibility so they can demonstrate compliance. None of that is possible without consistent metadata.

Lineage and Impact Analysis as Executive Safety Nets

Executives care about exposure. When a critical data element changes, or when a quality issue shows up in a key report, they need to know:

- What is affected

- Who will notice

- How quickly it can be fixed

Rich lineage graphs and impact analysis powered by metadata give a credible answer. They show which products, dashboards, models and decisions rely on specific sources or transformations. They clarify dependency chains and highlight potential blast radius, so teams can remediate before customers or regulators see the impact.
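Impact analysis of this kind is, at its core, a graph traversal. The sketch below assumes lineage is available as a simple mapping from each asset to its direct downstream consumers and walks it breadth first to list everything a change could reach. The `LINEAGE` graph and asset names are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: upstream asset -> assets that read from it directly.
LINEAGE = {
    "crm.customers": ["staging.customers"],
    "staging.customers": ["marts.customer_360", "ml.churn_features"],
    "marts.customer_360": ["dashboards.revenue"],
    "ml.churn_features": ["models.churn_v2"],
}


def blast_radius(graph: dict[str, list[str]], changed: str) -> list[str]:
    """Return every downstream asset reachable from the changed asset."""
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:  # Also guards against cycles in the graph.
                seen.add(child)
                queue.append(child)
    return sorted(seen)


impacted = blast_radius(LINEAGE, "staging.customers")
# impacted == ["dashboards.revenue", "marts.customer_360",
#              "ml.churn_features", "models.churn_v2"]
```

Joining the resulting asset list to ownership metadata is what turns "what is affected" into "who will notice".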

This is why many organisations treat lineage and traceability as non-negotiable capabilities for strategic data assets. Good metadata is what makes those capabilities reliable.

Metadata as Guardrails for AI and Data Products

As more enterprises operationalise AI, attention is shifting to AI governance and model transparency. Gartner expects both AI agents and data fabric architectures to rely on connected, metadata-driven access across fragmented environments.

Metadata plays several roles here:

- It records training data provenance, which is essential for understanding bias and answering regulatory questions.

- It connects models to business definitions, owners and policies, which clarifies who is accountable.

- It captures usage and performance metrics, which supports monitoring and fair use.

- It underpins data contracts between producers and consumers of data products, making quality and service level expectations explicit.
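The data contract idea in the last point can be sketched as a small, machine-checkable object. The example below assumes a contract that states required columns, their types and a tolerated share of nulls. The `DataContract` class, its fields and the sample batch are hypothetical, and real contracts usually also cover freshness and service levels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract a producer publishes for one data product."""
    required_columns: dict[str, type]
    max_null_fraction: float


def check_contract(rows: list[dict], contract: DataContract) -> list[str]:
    """Return readable violations; an empty list means the batch conforms."""
    violations = []
    for col, expected_type in contract.required_columns.items():
        values = [row.get(col) for row in rows]
        nulls = sum(v is None for v in values)
        if rows and nulls / len(rows) > contract.max_null_fraction:
            violations.append(
                f"{col}: null fraction {nulls / len(rows):.2f} exceeds limit"
            )
        if any(v is not None and not isinstance(v, expected_type) for v in values):
            violations.append(f"{col}: unexpected type")
    return violations


contract = DataContract(
    required_columns={"order_id": int, "amount": float},
    max_null_fraction=0.1,
)
batch = [
    {"order_id": 1, "amount": 9.5},
    {"order_id": 2, "amount": None},
    {"order_id": "3", "amount": 4.0},
]
violations = check_contract(batch, contract)
```

Returning a list of violations, rather than raising on the first failure, lets a pipeline report every breach to the producer in a single pass.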

Recent research on modern AI in metadata management shows how machine learning is being used to automate classification, quality tagging and relationship discovery, which further strengthens these guardrails.

What The Latest Research Shows About Metadata Maturity

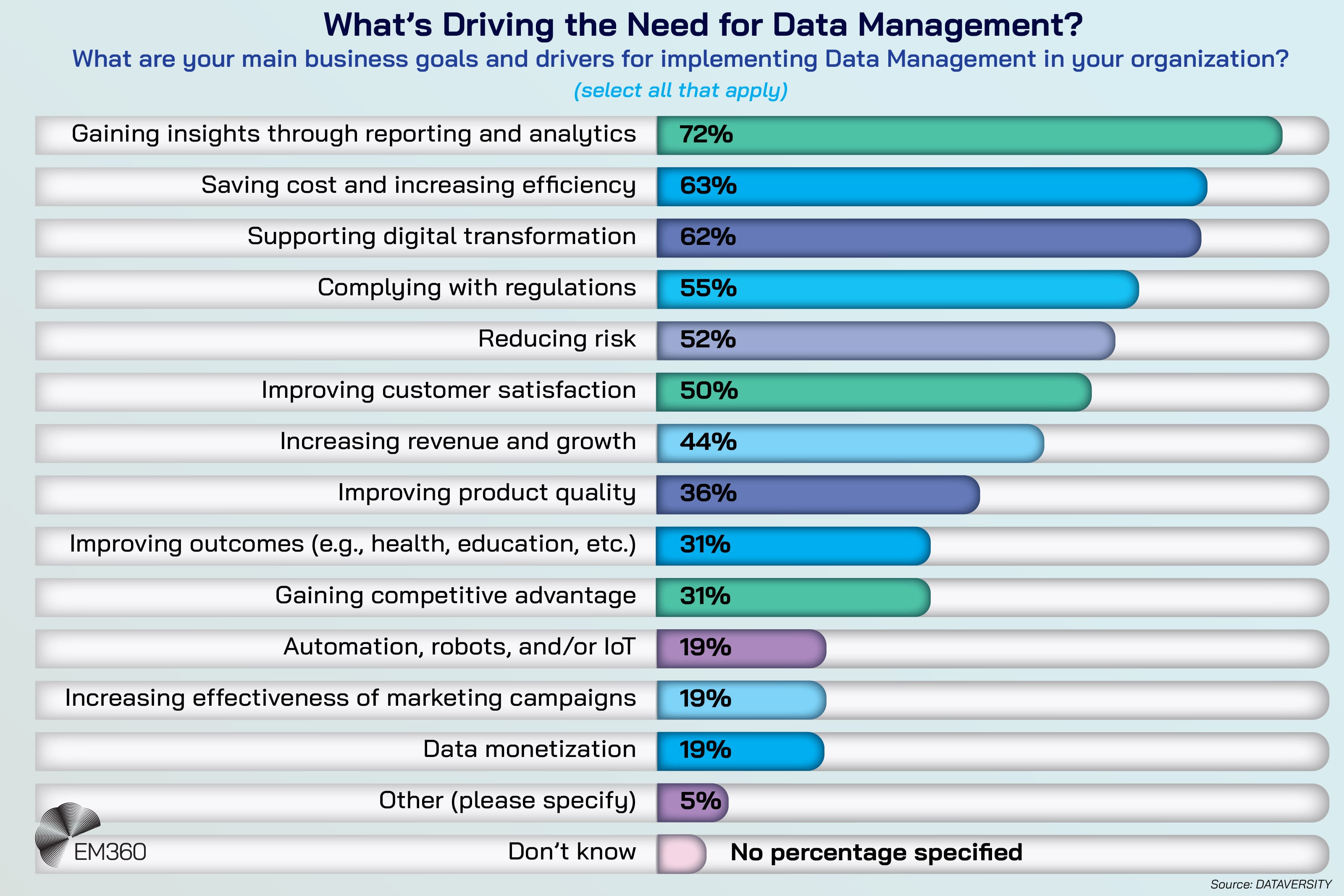

Industry research over the past year paints a consistent picture. Organisations are moving metadata from the sidelines into the core of their data management strategy, but maturity is uneven.

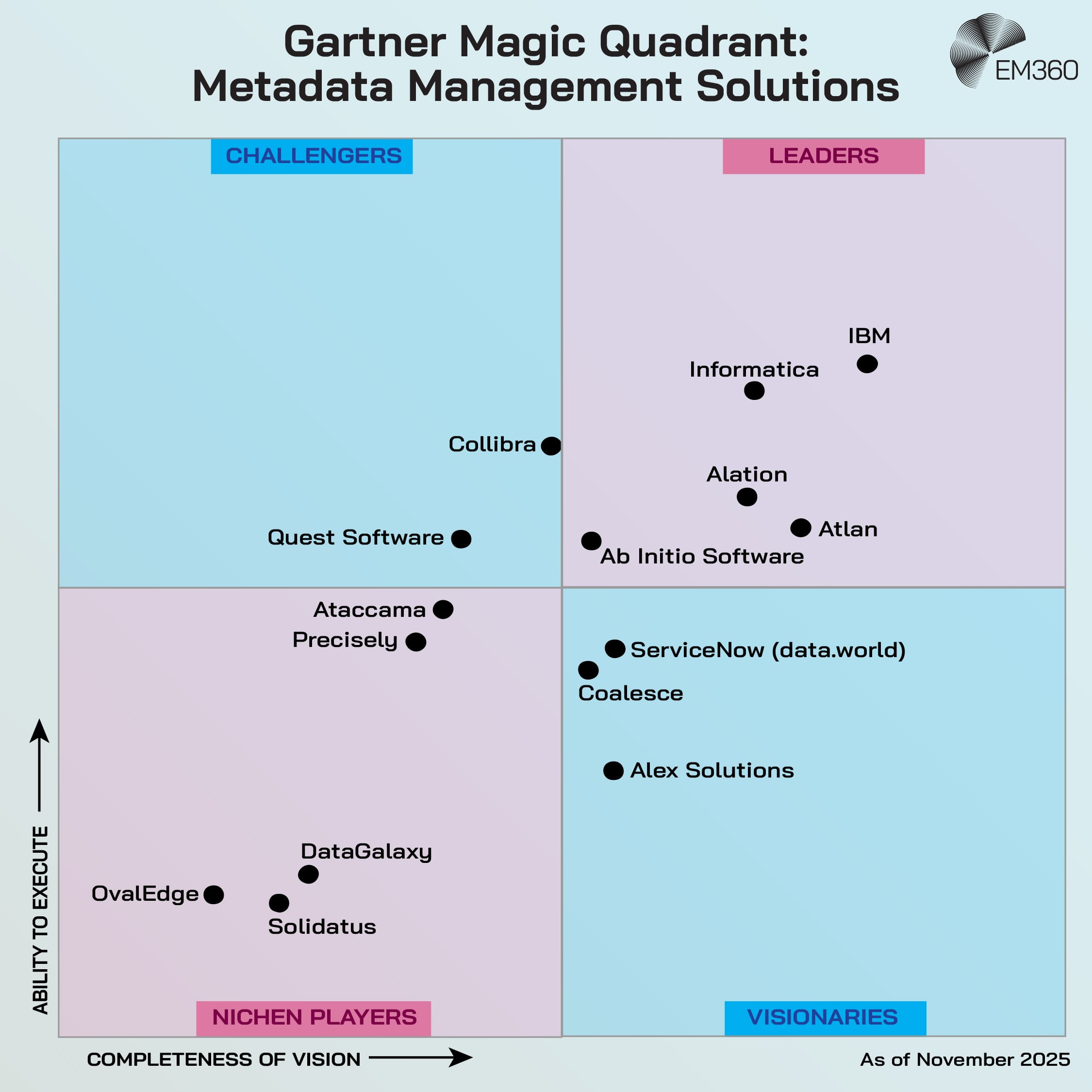

A 2025 survey from DATAVERSITY, based on responses from more than 200 organisations worldwide, highlights metadata management and automation as key emerging priorities in data management. Gartner’s 2025 trends for data and analytics show metadata management solutions and multimodal data fabric as central levers for orchestrating pipelines and improving DataOps performance.

On the tooling side, multiple market studies point to rapid growth. Grand View Research expects the global metadata management tools market to reach around USD 36.44 billion by 2030, at a compound annual growth rate of about 20.9 per cent from 2025 to 2030. That growth is driven by centralised data administration, rising demand for data security and the need for trustworthy analytics.

The Shift From Passive to Active Metadata Is Accelerating

Analyst commentary is converging on one theme. Metadata that only documents the past is not enough.

Forrester’s recent evaluations of data governance solutions describe an “active, AI native” future where governance is embedded into daily workflows, and metadata is tied directly to measurable business outcomes rather than static registries.

Combined with the data fabric view of metadata-driven access and orchestration, this points to a clear direction. Metadata needs to be always on, dynamically updated and wired into automation. DataOps is the operational layer that turns that principle into day-to-day practice.

Metadata Tooling and Market Growth Are Climbing Fast

Rapid market growth also tells us something about expectations. As organisations spend more on metadata management tools, they will expect more than search and glossaries.

They will look for:

- Embedded lineage and impact analysis in their DataOps workflows

- Automated classification and policy enforcement

- Rich integration with cloud-native pipelines and observability tools

- Support for both technical and business users, not just data specialists

For data leaders, this makes the business case straightforward. Metadata-centric operations are no longer a niche capability. They are becoming a baseline requirement for competitive data and AI programmes.

Practical Steps To Make Metadata Work For DataOps

Turning these ideas into reality does not happen through one tool purchase. It requires a deliberate strategy and a staged approach.

Start With a Metadata Strategy That Matches Your Operating Model

Before investing in new platforms, leaders need clarity on their operating model. Key questions include:

- Which domains and products create the most value or carry the most risk

- How teams currently build, deploy and manage pipelines

- What governance framework already exists, even if it is informal

From there, you can define a metadata roadmap that aligns with the way your organisation actually works. That roadmap should describe target capabilities, from foundational cataloging and ownership through to active metadata, automated policy enforcement and AI readiness.

The aim is not to reach an abstract maturity score. It is to ensure that metadata practices support the decisions and outcomes that matter most to the business.

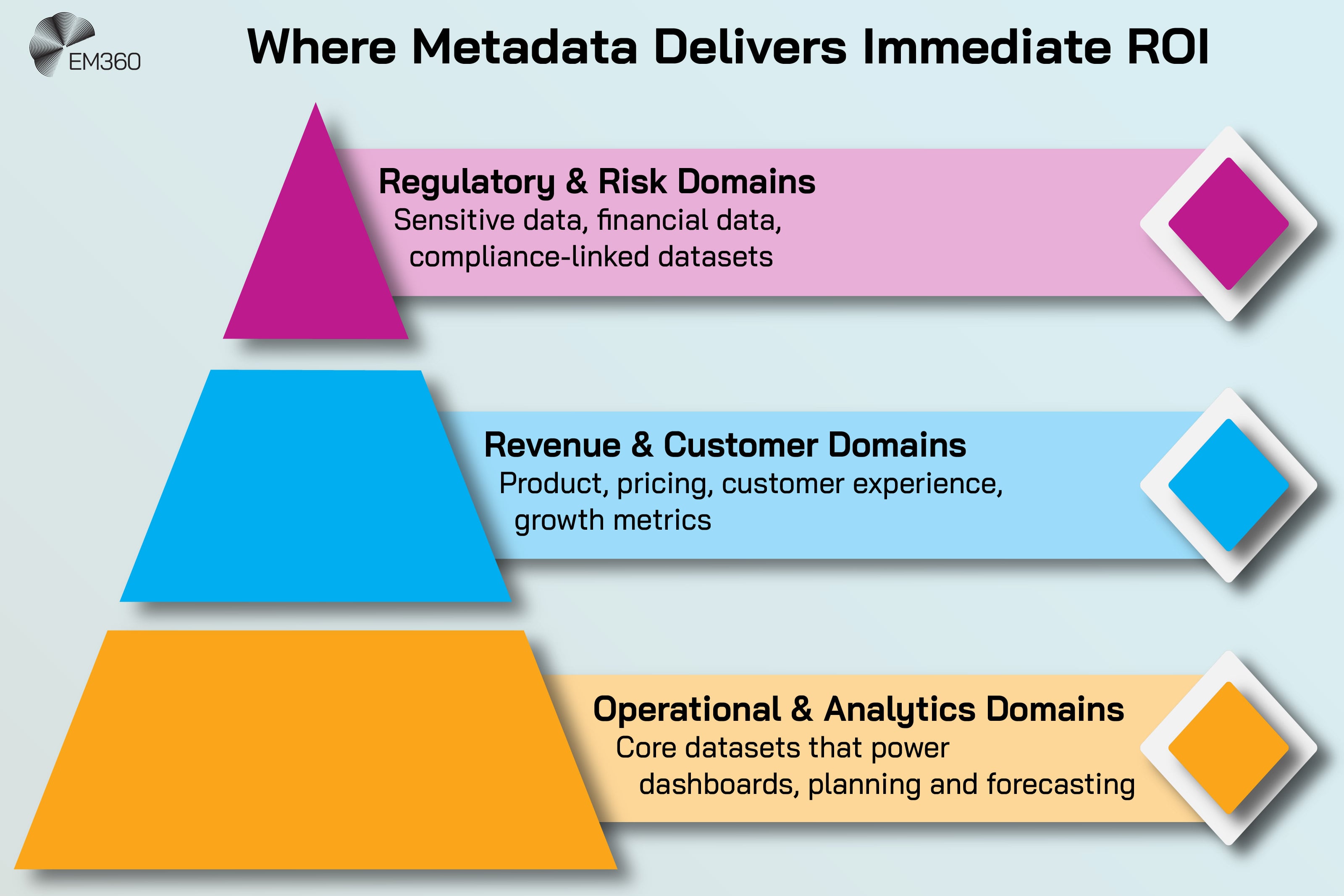

Prioritise High Value Domains First

Trying to capture perfect metadata for every asset at once is a recipe for stalled programmes.

A more effective approach is to identify critical data elements and domains where better metadata will reduce risk or accelerate delivery immediately. Examples might include:

- Customer and product data feeding revenue reporting and financial planning

- Sensitive personal data subject to regulatory requirements

- Core features that drive flagship data products or AI services

By focusing on these areas first, teams can prove value quickly and refine their approach before rolling out across the wider estate.

Automate Metadata Capture and Lineage Everywhere You Can

Manual metadata capture does not scale, and people avoid it once the novelty wears off. To support DataOps, collection and updates must be automated wherever possible.

This includes:

- Scanning schemas and logs to populate technical metadata

- Generating lineage graphs from orchestration and transformation tools

- Using pattern detection and AI to suggest tags, classifications and relationships

- Integrating CI/CD pipelines so they update metadata as code changes are deployed

The goal is to keep metadata fresh without adding friction to delivery. When teams see accurate lineage and context appear automatically, they are far more likely to trust and use it.
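Schema scanning, the first item in the list above, is often the easiest place to start. The sketch below uses Python's built-in `sqlite3` module and an in-memory database purely as a stand-in for a real source system. The `scan_schema` function and the `orders` table are hypothetical, and other databases expose the same information through their own catalog views.

```python
import sqlite3


def scan_schema(conn: sqlite3.Connection) -> dict[str, dict[str, str]]:
    """Return {table: {column: declared_type}} for every user table."""
    tables = [
        name
        for (name,) in conn.execute(
            "SELECT name FROM sqlite_master "
            "WHERE type = 'table' AND name NOT LIKE 'sqlite_%'"
        )
    ]
    catalog = {}
    for table in tables:
        # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
        # Table names come from sqlite_master here, not from user input.
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        catalog[table] = {col[1]: col[2] for col in columns}
    return catalog


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL, region TEXT)"
)
catalog = scan_schema(conn)
```

Run on a schedule or from a deployment hook, a scan like this can refresh technical metadata without anyone filling in a form.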

Use Metadata To Improve Collaboration and Data Literacy

Metadata is not only for the data platform team. It is how different parts of the organisation agree on what data means and how it should be used.

A clear business glossary, visible stewardship roles and intuitive catalog interfaces make it easier for analysts, product managers and other stakeholders to find and understand data. This reduces misinterpretation, duplicate work and reliance on a small group of experts.

As literacy grows, so does the value of DataOps. Pipelines and processes become easier to reason about, because everyone is working from the same shared context.

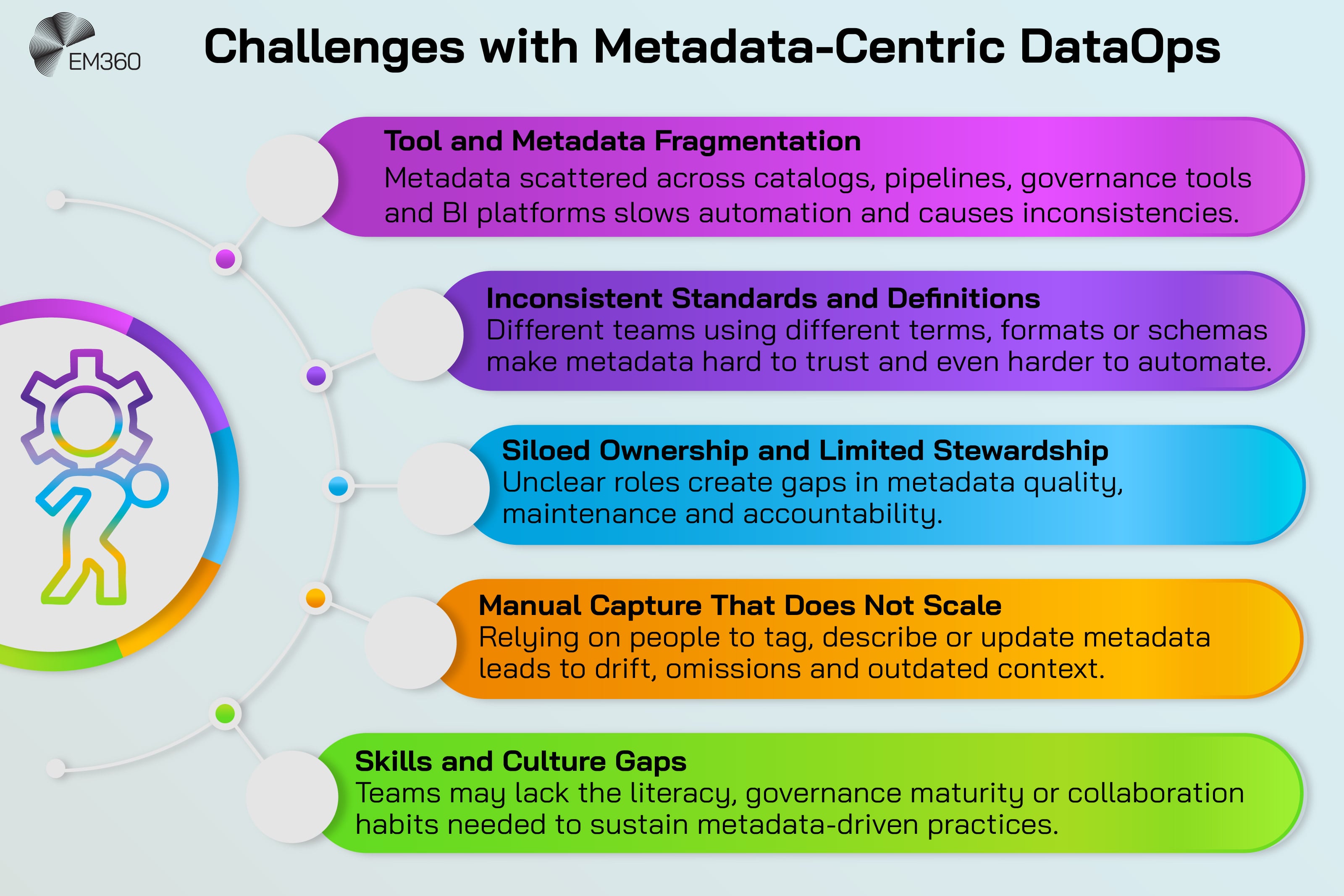

Common Challenges When Embedding Metadata Into DataOps

Even with a strong strategy, organisations often encounter friction when they try to operationalise metadata. Naming these challenges helps leaders address them directly.

Tool Fragmentation and Siloed Ownership Slow Everything Down

Many enterprises already own several tools that collect or use metadata. Catalogs, lineage solutions, governance platforms, observability tools and orchestration engines all come with their own views of the world.

If these tools are not integrated, metadata becomes fragmented. Different teams maintain their own lists, graphs and glossaries. Pipelines cannot rely on a single source of truth and automation is limited to narrow slices of the estate.

Solving this does not always mean buying a single platform. It does mean defining an integration strategy and assigning clear ownership so that metadata can flow across systems and support end-to-end DataOps.

Culture, Skills and Governance Maturity Are Often the Real Blockers

Technology is only part of the picture. Surveys on data management trends still highlight data literacy, cross-functional collaboration and governance maturity as persistent challenges.

Metadata-centric DataOps requires:

- Leaders who treat data and metadata as strategic assets, not side projects

- Stewards and product owners who accept responsibility for definitions and quality

- Engineers and analysts who see metadata as a core part of their work, not a compliance chore

Without that, even the best tools will struggle to deliver. Pipelines will continue to rely on tacit knowledge and manual exceptions, which is exactly what DataOps is meant to replace.

Final Thoughts: Metadata Only Works When It Powers DataOps

Metadata has always been part of data management, but its role is changing. It is no longer enough to describe assets in a catalog and hope people read the entries.

For modern DataOps, metadata needs to drive how data flows, how policies are enforced and how decisions are made. When it does, you get pipelines that are observable, governable and resilient, even as AI workloads and business demands keep expanding. When it does not, every new use case simply adds more complexity to an already fragile estate.

The organisations that pull ahead will be the ones that treat metadata as an operational engine, not just a documentation layer. They will invest in active metadata, align it with their operating model and use it to give teams a truthful view of how data behaves across the business.

If you are mapping your own path to metadata-driven DataOps, this is the moment to look beyond tools and ask how your architecture, governance model and culture need to evolve. EM360Tech will keep tracking how data leaders are making that shift, so you can benchmark your progress against what is working across the wider market and borrow the ideas that fit your own environment.
