Enterprises are not paid for data. They are paid for outcomes that data makes possible. The strongest returns come from decision-ready intelligence that customers can drop into a workflow without friction. That usually means turning model outputs and signals into products with owners, controls, and clear unit economics.

Raw feeds still have a place, but value compounds when buyers get answers, not fields. Monetising AI data is a go-to-market and engineering problem in equal measure. The rails matter as much as the insight. Marketplaces, clean rooms, and APIs move value safely without exposing raw assets.

Pricing must match how value is consumed: subscription for predictable access, usage-based for event-driven demand. Privacy and IP protection are not a final step. They are part of the design.

Get those foundations right, and new revenue stops being a slide in the board pack. It becomes a line you can forecast, defend, and scale.

What Monetising AI Data Means Today

Enterprises are moving beyond simple data resale. The frontier is decision-ready intelligence, where models and analytics deliver context-specific recommendations rather than undifferentiated feeds. Recent analysis highlights the shift from static products to AI-powered solutions that produce tailored, actionable outputs for specific users and workflows.

In practice, monetisation spans three buckets.

First, improving work by using data and AI to reduce cost or risk. Think fewer incidents through earlier anomaly detection, lower compute spend through smarter autoscaling, faster MTTR through root-cause suggestions, and tighter controls on sensitive data via policy-as-code. Value shows up on the P&L as avoided cost and reduced exposure, so track unit economics and publish clear SLOs.

Second, wrapping products with features that customers value and will pay more for. This is where predictive maintenance, personalised recommendations, intelligent routing, or automated insights justify premium tiers, higher renewal rates, or lower churn. Delivery is through the rails customers already use, whether that is an API, a plugin, or a managed inference endpoint, with pricing aligned to usage or outcomes.

Third, selling information solutions outright. Here you package curated datasets, scores, features, or model outputs as products with documentation, versioning, lineage, and access controls. Distribution can run through marketplaces, exchanges, or clean rooms to keep raw data protected.

Across all three, success depends on product ownership, cost visibility, privacy by design, and operational reliability that infrastructure teams can support at scale.

The Four Enterprise Paths To Monetisation

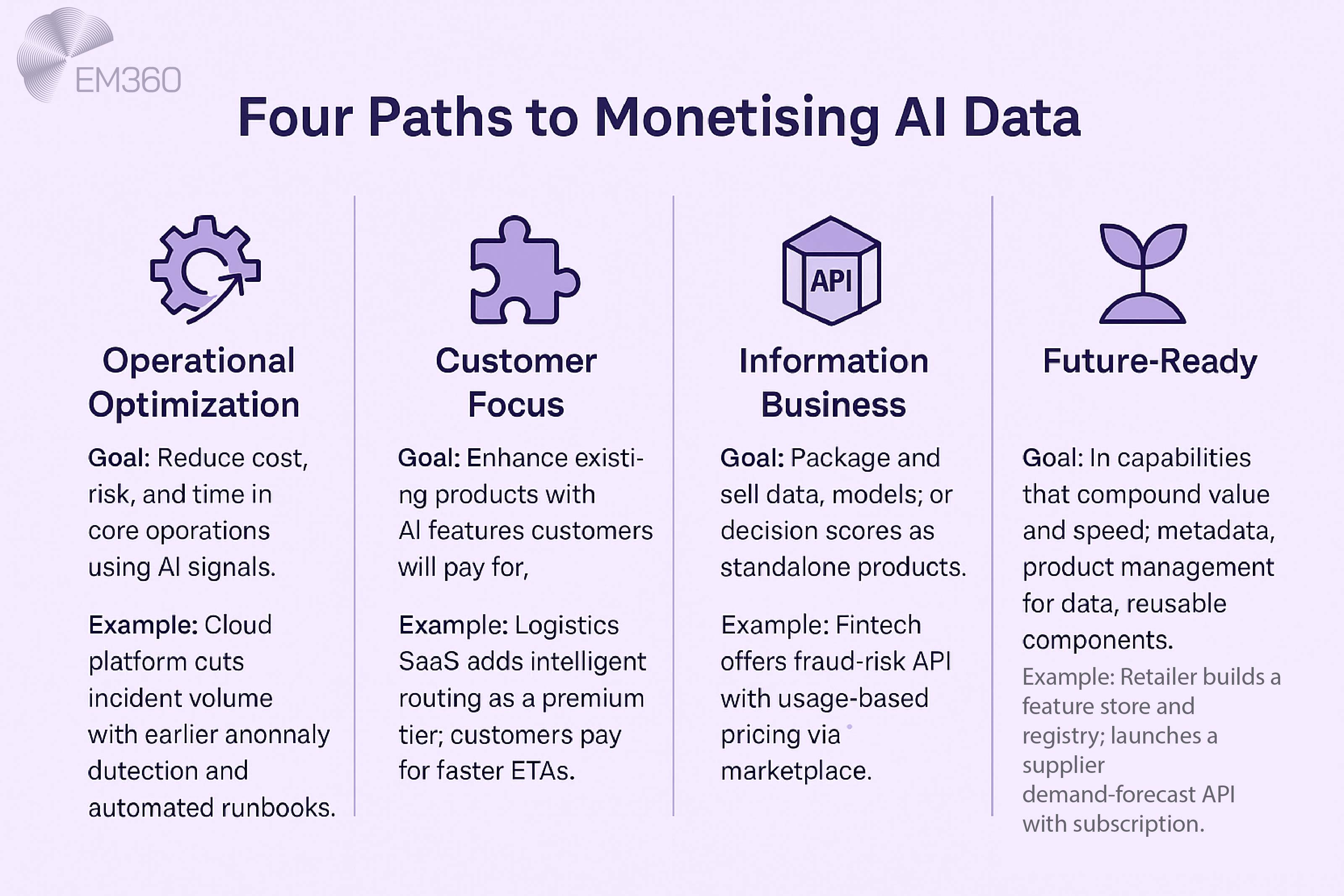

Before choosing routes to market, decide how you expect value to show up. There are four strategies that map well to enterprise reality.

Operational optimisation

Use ML and decision intelligence to remove waste, speed decisions, and lift reliability. Returns often arrive quickly because you monetise through avoided cost and improved margin rather than external sales. Track impact on the P&L so savings are not lost in organisational slack.

Customer focus

Wrap existing products with AI-driven features that customers love. Think predictive maintenance in equipment, intelligent routing in logistics, or adaptive experiences in software. These features drive price realisation and retention, even if you never sell a dataset directly.

Information business

Package data, analytics, models, or APIs as standalone products. This includes curated datasets, feature stores, inference endpoints, and decision scores. Growth depends on scalable distribution, clean contracts, and pricing discipline.

Future-ready

Build capabilities that compound over time: metadata excellence, product management for data, and reusable components. Organisations that apply product practices report better consumer satisfaction, pricing, and sustained profit from data products.

Routes To Market For Data And AI Assets

The go-to-market mechanics decide how safely and quickly value moves. Choose rails that protect IP, respect regulation, and simplify consumption.

Marketplaces and exchanges

Data and analytics exchanges reduce integration friction, centralise entitlements, and standardise billing.

- Snowflake Marketplace lets providers list paid datasets, models, and applications for direct invoicing and usage monitoring. Providers can select subscription or usage-based plans and track paid-listing consumption with built-in views.

- AWS Data Exchange supports subscription offers for files, tables, and APIs, with provider financials, metering, and disbursements handled by AWS Marketplace rails.

- Databricks Marketplace distributes datasets and AI assets using the open Delta Sharing standard, reducing replication and lock-in.

- Google Cloud Analytics Hub enables secure cross-organisation sharing of datasets and analytics assets, giving subscribers linked, read-only datasets inside their own projects.

Clean rooms and privacy-preserving collaboration

Clean rooms enable multiple parties to run approved joins and analytics without exposing underlying data. They are essential when monetising audience insights, healthcare data, or any sensitive asset.

- BigQuery Data Clean Rooms provide policy-based analysis rules, aggregation, and privacy controls such as differential privacy, with export restrictions by default.

- Databricks Clean Rooms use Delta Sharing and serverless compute to collaborate across platforms without data movement, with controlled output tables.

- The IAB Tech Lab has released industry guidance and an interoperability standard to make clean room interactions more consistent.

APIs and embedded intelligence

Many buyers want decisions, not datasets. Expose scores, recommendations, or events via REST or streaming APIs, and bill per call or per thousand events. AWS and Snowflake support API-fronted products through their marketplaces, making discovery and billing easier.

Synthetic data and simulations

Where privacy or scarcity blocks access, synthetic data allows monetisation without sharing PII. Providers list synthetic datasets and generators on marketplaces, and large vendors are formalising synthetic data for enterprise AI agents and testing.

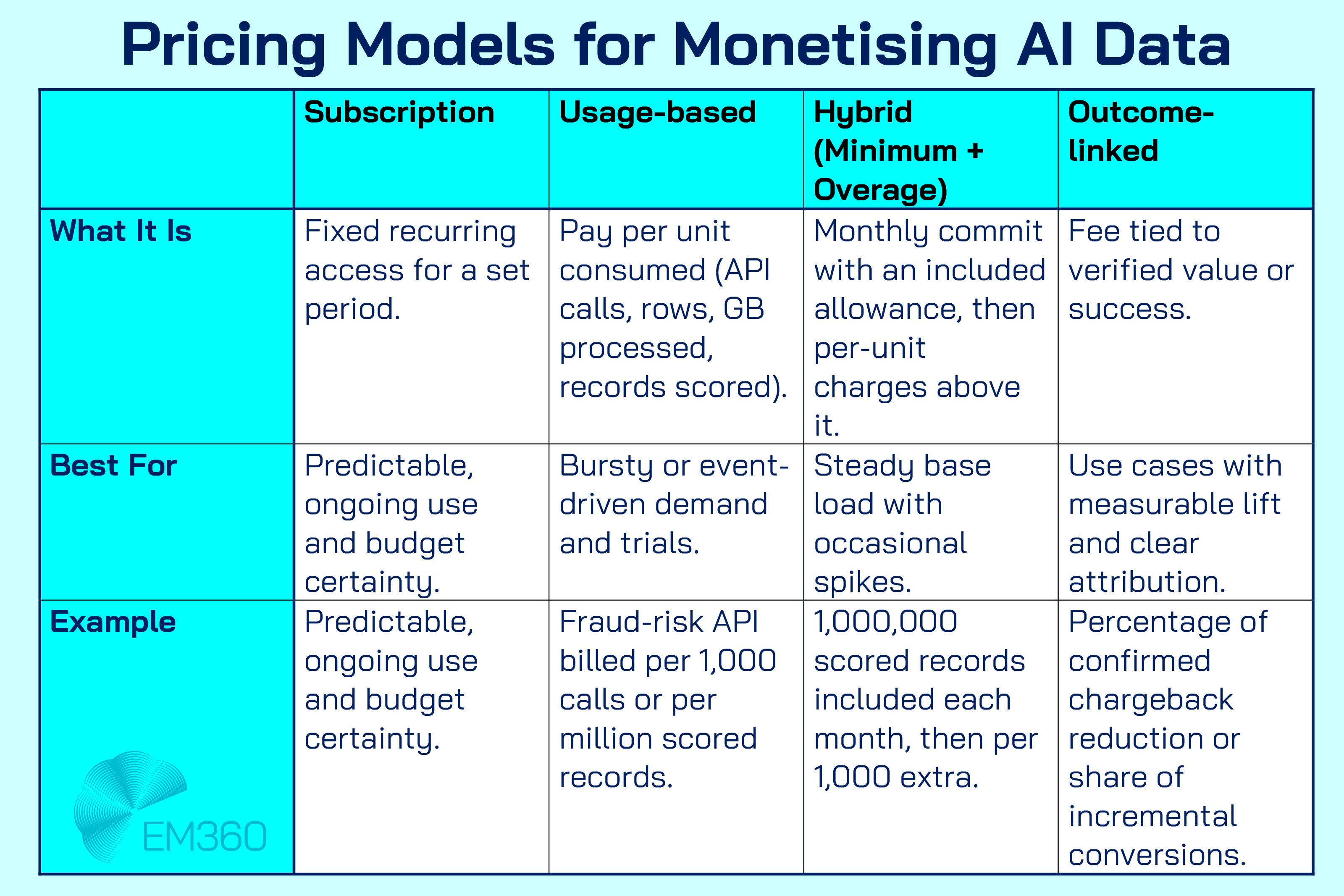

Pricing Models That Actually Work

Pricing must match perceived value and buyer risk. Two proven models dominate marketplace sales today.

Subscription

Charge a fixed fee for time-bound access to products, often with tiers for volume, freshness, or support. AWS Data Exchange products are fundamentally subscription-based, with options for duration and auto-renewal.

Usage-based

Charge per query, row scanned, compute minute, scored record, or API call. Snowflake supports usage-based plans with charges triggered by consumer activity and no invoice issued in months without billable events.

Beyond these, combine models for fit: minimum commitments plus overage, cohort-based pricing for analytics buyers, or success-linked fees where your signal materially moves an outcome. Product management practices for satisfying consumers, setting prices, and sustaining profit are critical to keep margins healthy over time.
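
As a rough sketch of the minimum-commitment-plus-overage pattern, the function below computes a monthly invoice. The function name, its parameters, and the figures are illustrative assumptions, not any marketplace's billing API.

```python
from decimal import Decimal


def monthly_invoice(
    billable_events: int,
    committed_events: int,
    commit_fee: Decimal,
    overage_rate: Decimal,
) -> Decimal:
    """Minimum commitment plus overage.

    The commit fee is always due. Events beyond the committed volume
    are charged at the per-event overage rate.
    """
    if billable_events < 0 or committed_events < 0:
        raise ValueError("event counts must be non-negative")
    overage_events = max(0, billable_events - committed_events)
    return commit_fee + overage_rate * overage_events


# Hypothetical figures: 100,000 committed events for 2,000 per month,
# 0.015 per event above the commitment, 120,000 events consumed.
invoice = monthly_invoice(120_000, 100_000, Decimal("2000"), Decimal("0.015"))
print(invoice)
```

Using `Decimal` rather than floats keeps the invoice arithmetic exact, which helps when product and finance figures have to reconcile.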

Architecture And Governance Foundations

Monetising AI data relies on infrastructure choices that reduce friction without compromising control.

Operate data as a product

Give each data or AI product an owner, a roadmap, versioning, quality and timeliness SLOs, and a financial view of cost-to-serve vs revenue. Leaders in this approach report stronger results across pricing and profit sustainment.

Cost visibility and FinOps for AI

GPU-heavy inference, vector search, and frequent fine-tuning can erode margin. Extend FinOps practices to AI to track unit economics, allocate costs fairly, and forecast spend across the model lifecycle. The FinOps community provides specific guidance for GenAI workloads and cost estimation patterns you can adopt today.
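
To make unit economics concrete, here is a minimal sketch of a cost-per-thousand-requests calculation for an inference endpoint. The function names, the cost inputs, and the example figures are assumptions for illustration; a real model would also allocate idle capacity, fine-tuning runs, and shared platform costs.

```python
def cost_per_thousand_requests(
    gpu_hourly_cost: float,
    requests_per_hour: float,
    other_cost_per_request: float = 0.0,
) -> float:
    """Serving cost per 1,000 requests at a sustained throughput.

    other_cost_per_request is a catch-all (vector search, egress, and so on).
    """
    if requests_per_hour <= 0:
        raise ValueError("requests_per_hour must be positive")
    per_request = gpu_hourly_cost / requests_per_hour + other_cost_per_request
    return per_request * 1000


def unit_margin(price_per_thousand: float, cost_per_thousand: float) -> float:
    """Fraction of the price left after direct serving cost."""
    if price_per_thousand <= 0:
        raise ValueError("price_per_thousand must be positive")
    return (price_per_thousand - cost_per_thousand) / price_per_thousand


# Hypothetical figures: a GPU at 4.00 per hour serving 20,000 requests per hour,
# sold at 1.00 per thousand requests.
cost = cost_per_thousand_requests(gpu_hourly_cost=4.0, requests_per_hour=20_000)
print(round(cost, 4), round(unit_margin(1.0, cost), 4))
```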

Privacy, contracts, and compliance by design

Design products to meet regulatory obligations from day one.

- The EU Data Act becomes applicable on 12 September 2025, with model contractual terms to support fair B2B data sharing and reasonable compensation. Build these considerations into your agreements.

- The EU AI Act is in force and introduces transparency duties for certain systems. Governance teams should track the disclosure and instructions-for-use obligations that apply to any high-risk uses.

- Adopt privacy-enhancing technologies such as differential privacy and secure multi-party computation where relevant. NIST and OECD have published guidance to help evaluate and deploy PETs in AI contexts.

- For consumer-facing products, align with ISO 31700 Privacy by Design to embed safeguards throughout the lifecycle.
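
As an illustration of one of the privacy-enhancing technologies mentioned above, the sketch below applies the Laplace mechanism to a count query, a standard way to achieve differential privacy. It assumes a counting query with sensitivity 1 (adding or removing one person changes the count by at most 1); the function name and parameters are hypothetical, and a production deployment would rely on a vetted library and track a privacy budget across queries.

```python
import random


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Return the count plus Laplace noise with scale 1 / epsilon.

    Assumes sensitivity 1. The difference of two independent exponential
    draws with rate epsilon is Laplace-distributed with scale 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


rng = random.Random(42)  # seeded only to make the illustration repeatable
print(dp_count(1_000, epsilon=0.5, rng=rng))
```

Smaller values of `epsilon` add more noise and give stronger privacy, so the choice is a trade-off between buyer utility and protection.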

Lineage, quality, and SLAs

Buyers expect provenance and reliability. Keep lineage from source through transformations, publish schema and data-drift notes, and state refresh cadences clearly. Track and publish uptime and support response targets like any other enterprise service. MIT CISR emphasises tracing financial impact and sustaining profit across the product lifecycle, which depends on these core controls.

Build, Buy, Or Partner

Choose the operating pattern that fits your asset, the control you need, and the speed you require. Most enterprises will use all three at different stages. Start where you can execute now, then sequence the others as capability and market proof build.

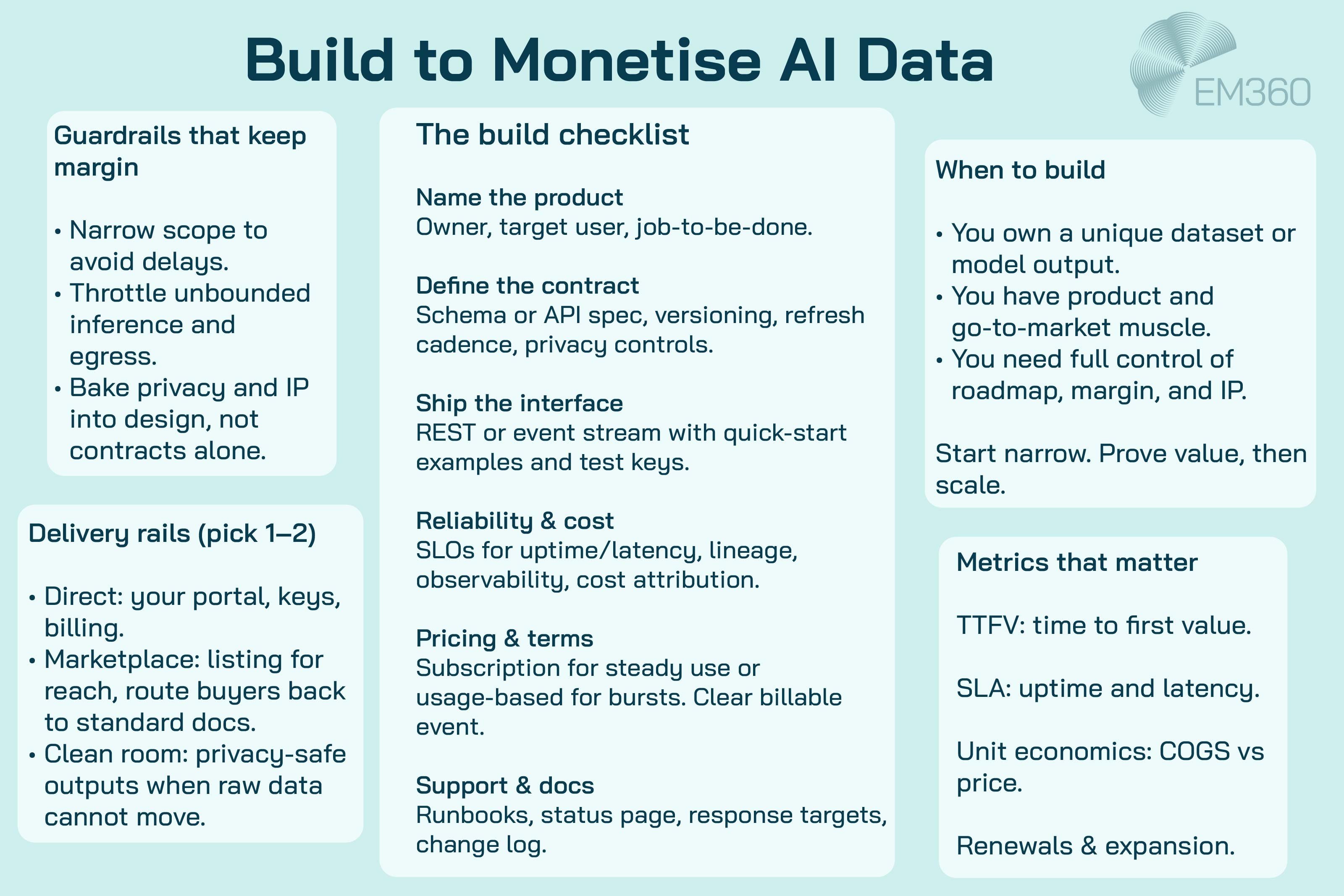

Build

Build when you own a differentiated asset and have the product and go-to-market muscle to carry it. You keep control of roadmap, margins, and customer relationships. You also carry the full weight of delivery, support, and compliance.

Start by treating the asset like any other product. Name the owner. Define the contract, refresh cadence, quality SLOs, and support model. Put an API or delivery interface in front of it that customers can use in minutes. Document the schema or score, publish examples, and make pricing unambiguous.

Give the product solid rails. You need lineage from source to output, observability for uptime and latency, cost attribution for storage, compute, and egress, and access controls tied to entitlements. If distribution is through your own channels, instrument activation and renewals. If you later list on a marketplace, keep the listing lightweight and direct buyers back to your standard docs, support, and status pages.

Price to match how value is consumed. Subscription works where access is predictable and ongoing. Usage makes sense for event-driven or bursty demand. Whichever you choose, define the billable event precisely and measure it the same way in product and in finance so the numbers reconcile.
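
One way to keep product and finance aligned is to encode the billable-event definition once and reuse it in both places. The sketch below assumes a hypothetical definition (a successful API response, counted once per event ID so retries are not double-billed); the `UsageEvent` fields and the rule itself are illustrative, not taken from any platform.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class UsageEvent:
    event_id: str      # unique per request; retries reuse the same ID
    customer_id: str
    status_code: int   # HTTP status returned to the customer


def is_billable(event: UsageEvent) -> bool:
    """Assumed rule: only successful (2xx) responses are billable."""
    return 200 <= event.status_code < 300


def billable_counts(events: Iterable[UsageEvent]) -> dict[str, int]:
    """Count billable events per customer, deduplicated by event ID."""
    seen: set[str] = set()
    counts: dict[str, int] = {}
    for event in events:
        if not is_billable(event) or event.event_id in seen:
            continue
        seen.add(event.event_id)
        counts[event.customer_id] = counts.get(event.customer_id, 0) + 1
    return counts


events = [
    UsageEvent("e1", "acme", 200),
    UsageEvent("e1", "acme", 200),  # duplicate delivery of the same event
    UsageEvent("e2", "acme", 500),  # failed call, not billable
    UsageEvent("e3", "globex", 201),
]
print(billable_counts(events))
```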

Manage the risks with discipline. Time to market can drift if the scope balloons. Cost to serve can creep if inference is unbounded or data egress is noisy. Sales friction grows if security, privacy, and IP terms are vague. Keep the first release narrow, protect privacy by design, and publish a public spec so procurement can move.

What to measure is simple. Time to first value for new customers. Contribution margin after direct COGS. Renewals and expansion. SLA performance. If those trend the right way, keep investing. If not, fix unit economics before you scale.

Buy

Buy when you need to fill gaps quickly or enrich your models with signals you do not have. The goal is speed with control. You are paying to reduce time to value, not to add operational drag.

Choose providers with clear lineage, refresh cadences, and licensing that covers training, fine-tuning, and derivative works where needed. Prefer delivery through exchanges or direct entitlements that avoid brittle data movement. Keep a local cache or virtualised view to stabilise downstream schemas and to control egress costs.

Integrate like an engineer, not a collector. Validate data quality against your ground truth. Track schema drift and freshness. Wrap the feed behind your own contract so internal teams and customers do not bind to a third-party format. Add guardrails that throttle cost, for example daily caps or query budgets tied to use cases.
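
A cost guardrail of the kind described above can be as simple as a per-use-case daily cap that is checked before each paid query. This is a minimal in-memory sketch; the class name, the cap values, and the choice to deny use cases with no configured budget are all assumptions, and a real system would persist spend and handle concurrency.

```python
from collections import defaultdict
from datetime import date


class DailyBudget:
    """Tracks spend per use case per day and refuses spend above the cap."""

    def __init__(self, caps: dict[str, float]):
        self._caps = caps
        self._spent: dict[tuple[str, date], float] = defaultdict(float)

    def try_spend(self, use_case: str, day: date, cost: float) -> bool:
        """Record the cost and return True if it fits the day's cap."""
        if cost < 0:
            raise ValueError("cost must be non-negative")
        cap = self._caps.get(use_case, 0.0)  # unknown use cases get no budget
        key = (use_case, day)
        if self._spent[key] + cost > cap:
            return False
        self._spent[key] += cost
        return True


budget = DailyBudget({"fraud_scoring": 10.0})
today = date(2025, 1, 1)
print(budget.try_spend("fraud_scoring", today, 6.0))  # fits within the cap
print(budget.try_spend("fraud_scoring", today, 5.0))  # would exceed the cap
```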

Buying introduces its own risks. Lock-in appears if your product depends on an opaque vendor feature. Compliance risk grows if licence terms do not match how you intend to use the data. Model behaviour can overfit to a provider’s quirks. Reduce these by running a bake-off across multiple sources, by isolating provider specifics at the edge of your system, and by keeping a clear rights register for every input.

Measure the uplift you actually get. Track the delta in model performance, coverage, or conversion against the added cost. Watch the freshness and fill rates. Keep an exit plan ready so you can switch providers without a rewrite.

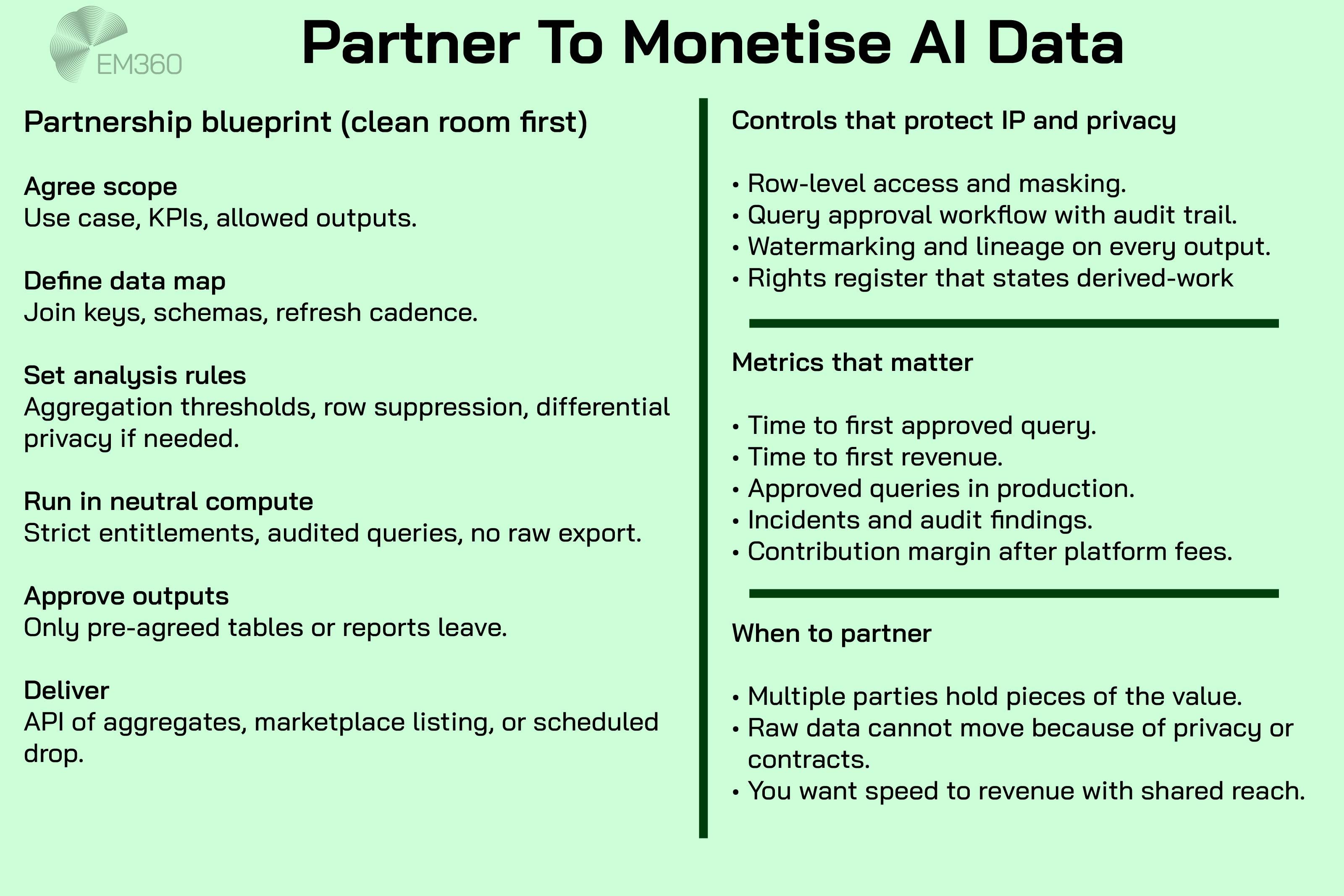

Partner

Partner when multiple parties hold pieces of the value that are stronger together. The aim is to combine insight while preserving confidentiality and IP. Clean rooms are the practical way to do this.

Agree the problem and outputs before you touch any data. Define the allowed joins, aggregation rules, and what can leave the environment. Use a neutral compute layer with strict entitlements, audited queries, and output controls. If differential privacy or row-level protections are required, build them into the analysis rules rather than relying on policy by email.
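
To show what an output rule looks like in logic rather than in policy text, the sketch below applies a minimum-group-size threshold to an aggregate, so that small groups never leave the environment. Real clean rooms enforce rules like this in platform configuration; the function name, the row format, and the threshold here are illustrative assumptions.

```python
from collections import Counter
from typing import Iterable, Mapping


def aggregate_with_threshold(
    rows: Iterable[Mapping[str, str]],
    group_key: str,
    min_group_size: int,
) -> dict[str, int]:
    """Count rows per group and suppress groups below the threshold."""
    if min_group_size < 1:
        raise ValueError("min_group_size must be at least 1")
    counts = Counter(row[group_key] for row in rows)
    return {group: n for group, n in counts.items() if n >= min_group_size}


# Hypothetical joined audience rows
rows = (
    [{"segment": "sports"}] * 60
    + [{"segment": "travel"}] * 55
    + [{"segment": "rare_hobby"}] * 3
)
print(aggregate_with_threshold(rows, "segment", min_group_size=50))
```

The small `rare_hobby` group is dropped from the output, which is the behaviour an agreed aggregation rule is meant to guarantee.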

Treat the partnership like a product and a commercial relationship. Set joint ownership of the backlog, response times, and change control. Specify IP boundaries in plain language. If you will co-sell, define who leads, who follows, and how revenue is shared. Keep legal and security teams involved from day one so contracts and controls evolve together.

Partnerships fail when incentives diverge or when the clean room becomes a bespoke project. Keep the scope small at first and prove a single use case end to end. Standardise reusable components such as approved queries, data dictionaries, and output schemas so you can add partners without starting again.

Measure what matters to both sides. Time to approve the first query. Time to first revenue. Number of use cases moved from pilot to repeatable. Incidents and audit findings. If those are healthy, expand the catalogue and deepen the commercial terms. If not, fix the rules or walk away before sunk costs grow.

Implementation Blueprint For The First 90 Days

A focused, time-boxed approach avoids sprawling pilots that never reach production.

1. Inventory and value your assets

Catalogue proprietary datasets, model outputs, and decision services. Score each on uniqueness, sensitivity, freshness, and buyer demand. Use a simple scoring model to shortlist three candidates with credible value stories.
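
A simple scoring model for the shortlist could look like the sketch below. The weights, the 1 to 5 rating scale, the asset names, and the decision to treat sensitivity as a penalty are all assumptions to adapt, not a recommended calibration.

```python
# Assumed weights; sensitivity counts against an asset because it raises
# privacy and contract effort.
WEIGHTS = {
    "uniqueness": 0.3,
    "freshness": 0.2,
    "buyer_demand": 0.4,
    "sensitivity": -0.1,
}


def score(ratings: dict[str, int]) -> float:
    """Weighted sum of 1-5 ratings across the four criteria."""
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


def shortlist(assets: dict[str, dict[str, int]], top_n: int = 3) -> list[str]:
    """Return the top_n asset names by descending score."""
    ranked = sorted(assets, key=lambda name: score(assets[name]), reverse=True)
    return ranked[:top_n]


# Hypothetical inventory
assets = {
    "churn_scores": {"uniqueness": 4, "sensitivity": 2, "freshness": 5, "buyer_demand": 5},
    "raw_clickstream": {"uniqueness": 2, "sensitivity": 4, "freshness": 5, "buyer_demand": 2},
    "demand_forecast": {"uniqueness": 5, "sensitivity": 1, "freshness": 3, "buyer_demand": 4},
    "sensor_anomalies": {"uniqueness": 3, "sensitivity": 1, "freshness": 4, "buyer_demand": 3},
}
print(shortlist(assets))
```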

2. Select your monetisation path

Decide whether the first release will optimise internal operations, wrap an existing offering, or sell an information solution. Map the decision to current capabilities and risk posture to avoid overreach.

3. Package as a product

Define the product spec: schema or API contract, documentation, refresh cadence, privacy controls, and support model. Assign a product owner and establish SLOs for timeliness and availability. Anchor every choice to a target segment’s job-to-be-done.

4. Stand up the rails

Provision your chosen route to market. For exchanges, configure listing metadata, entitlement scopes, and monitoring. For clean rooms, implement analysis rules and output governance so only approved aggregates or tables exit.

5. Price and contract

Start with subscription or usage-based pricing that reflects perceived value and buyer risk. For usage, define the billable event precisely and test measurement. Align contracts to the Data Act’s model terms if selling into the EU.

6. Launch a controlled pilot

Pick three to five design partners who match your ideal customer profile. Instrument everything: activation, time-to-first-value, query patterns, and support load. Capture references and case evidence you can reuse in the next wave.

7. Measure and iterate

Run a simple P&L for the product: revenue, direct COGS (compute, storage, egress, marketplace fees), allocated costs, and contribution margin. Use FinOps practices to reduce unit costs without hurting quality. Publish a quarterly roadmap and commit to refresh cadence.
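
The product P&L described above can be sketched in a few lines. The class and field names are assumptions chosen to mirror the cost categories in the text, and the figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProductPnL:
    revenue: float
    compute: float
    storage: float
    egress: float
    marketplace_fees: float
    allocated_costs: float  # shared costs assigned to this product

    @property
    def direct_cogs(self) -> float:
        return self.compute + self.storage + self.egress + self.marketplace_fees

    @property
    def contribution_margin(self) -> float:
        """Revenue left after direct COGS, before allocated costs."""
        return self.revenue - self.direct_cogs

    @property
    def contribution_margin_pct(self) -> float:
        if self.revenue <= 0:
            raise ValueError("revenue must be positive to compute a percentage")
        return self.contribution_margin / self.revenue

    @property
    def result_after_allocations(self) -> float:
        return self.contribution_margin - self.allocated_costs


# Hypothetical quarter
pnl = ProductPnL(
    revenue=100_000,
    compute=20_000,
    storage=5_000,
    egress=3_000,
    marketplace_fees=12_000,
    allocated_costs=25_000,
)
print(pnl.direct_cogs, pnl.contribution_margin, pnl.result_after_allocations)
```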

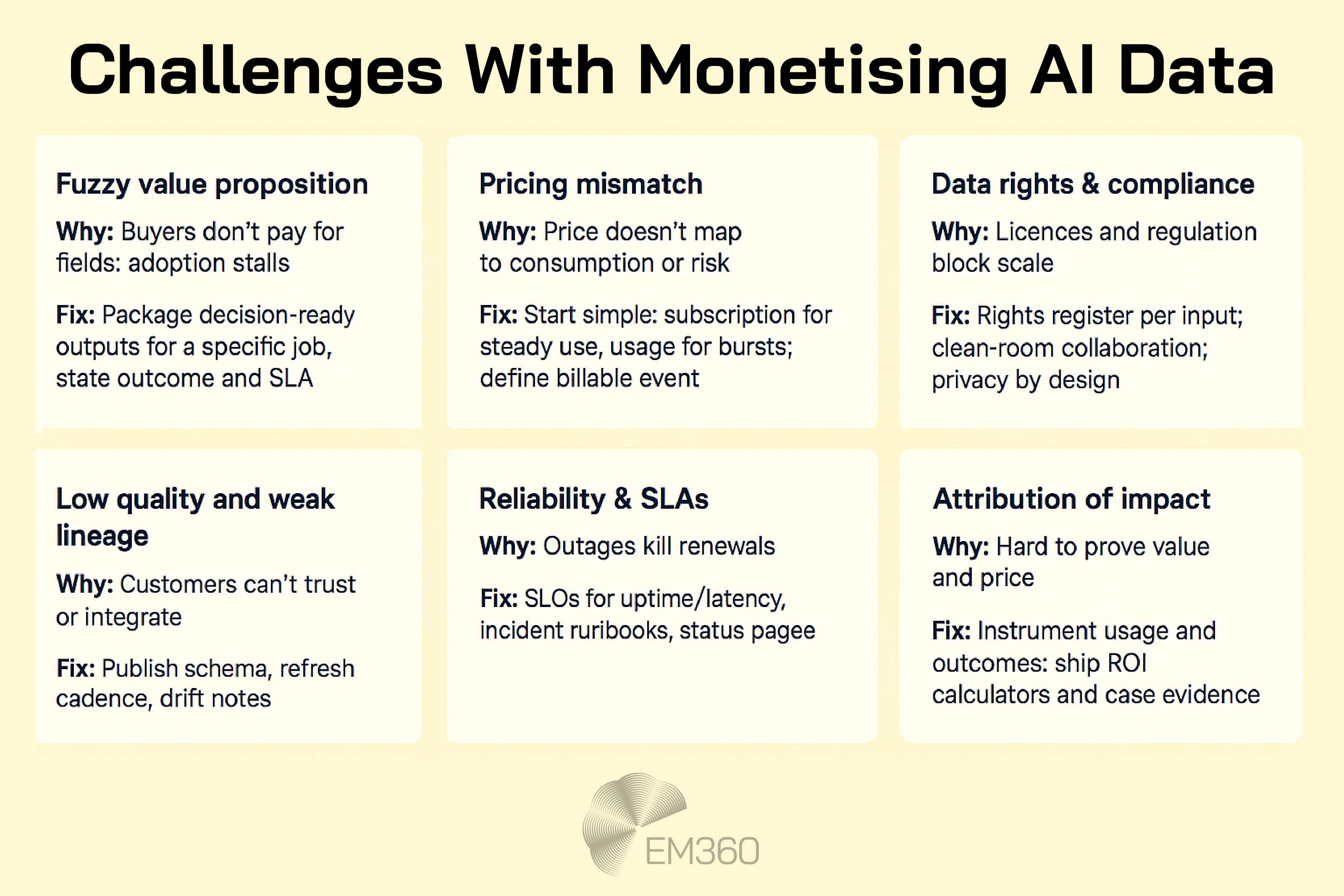

Common Pitfalls To Avoid

Fuzzy value propositions. Buyers pay for decisions and outcomes. A dump of raw fields rarely sells without context. Shape the product to the moments that matter in a workflow.

Hidden cost-to-serve. Unbounded inference calls, chatty workload patterns, and egress can erase margin. Model unit economics early and gate usage where needed.

Privacy and IP leakage. Do not rely on ad-hoc data sharing. Use clean rooms and PETs to collaborate safely, and ensure contracts specify derived-work rights.

Weak product discipline. If there is no owner, there is no product. Treat listings and APIs with the same seriousness as customer-facing software. Measure satisfaction and renewals, not just downloads.

Final Thoughts: Monetisation Works When You Productise Value

Enterprises monetise AI data most effectively when they package outputs that customers can trust, buy, and run in minutes. The playbook is consistent: productise, choose the right market rails, price with discipline, and run the numbers like any other line of business. With clean contracts, privacy-preserving collaboration, and strong FinOps, data and AI products can scale without eroding margin or trust. For practical frameworks, real examples, and straight answers on what works, EM360Tech brings together practitioners who are building these products today, from reference architectures to pricing patterns, so your next step is faster and lower risk.
