Artificial intelligence has shifted from fringe experiment to boardroom priority. Whether automating workflows, enhancing customer experiences or powering decision‑making, AI now shapes competitive advantage across industries. Yet the path to harnessing AI is far from straightforward.

With hundreds of models and frameworks available, leaders must decide whether to adopt open-source AI, invest in proprietary AI models or implement a hybrid strategy that blends both approaches. The decision affects cost, innovation, control, compliance and long‑term flexibility, all of which are critical for forward‑looking organisations.

This guide demystifies the debate, weighing the benefits and drawbacks of each option through a practical lens. Backed by recent studies and surveys, it equips enterprise leaders with the insights they need to make confident, strategic decisions about AI adoption.

What Is Open‑Source AI?

The term “open-source AI” is often used loosely, but true openness is defined by the Open Source Initiative (OSI). Users must be free to use the system for any purpose, study how it works, modify it, and share both the original and modified versions.

In the AI context, full openness covers the model architecture (how data flows through the network), the training-data recipe (how the dataset was curated) and the model weights (the numerical parameters learned during training). Few models meet this gold standard.

Many so‑called open models provide access only to the weights and the code needed to run them; training data and training code remain proprietary, limiting transparency but enabling broader experimentation.

Examples and adoption of open-source AI models

Popular open-source frameworks include Google’s TensorFlow and Meta’s PyTorch, which have underpinned most machine-learning breakthroughs over the past decade.

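Platforms like Hugging Face host hundreds of thousands of models for tasks ranging from language translation to image generation. Meta’s LLaMA-3, Mistral and Kimi are among the latest foundation models released with open weights, although licence terms vary; LLaMA-3, for example, ships under Meta’s own community licence rather than a standard open-source licence such as Apache 2.0.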

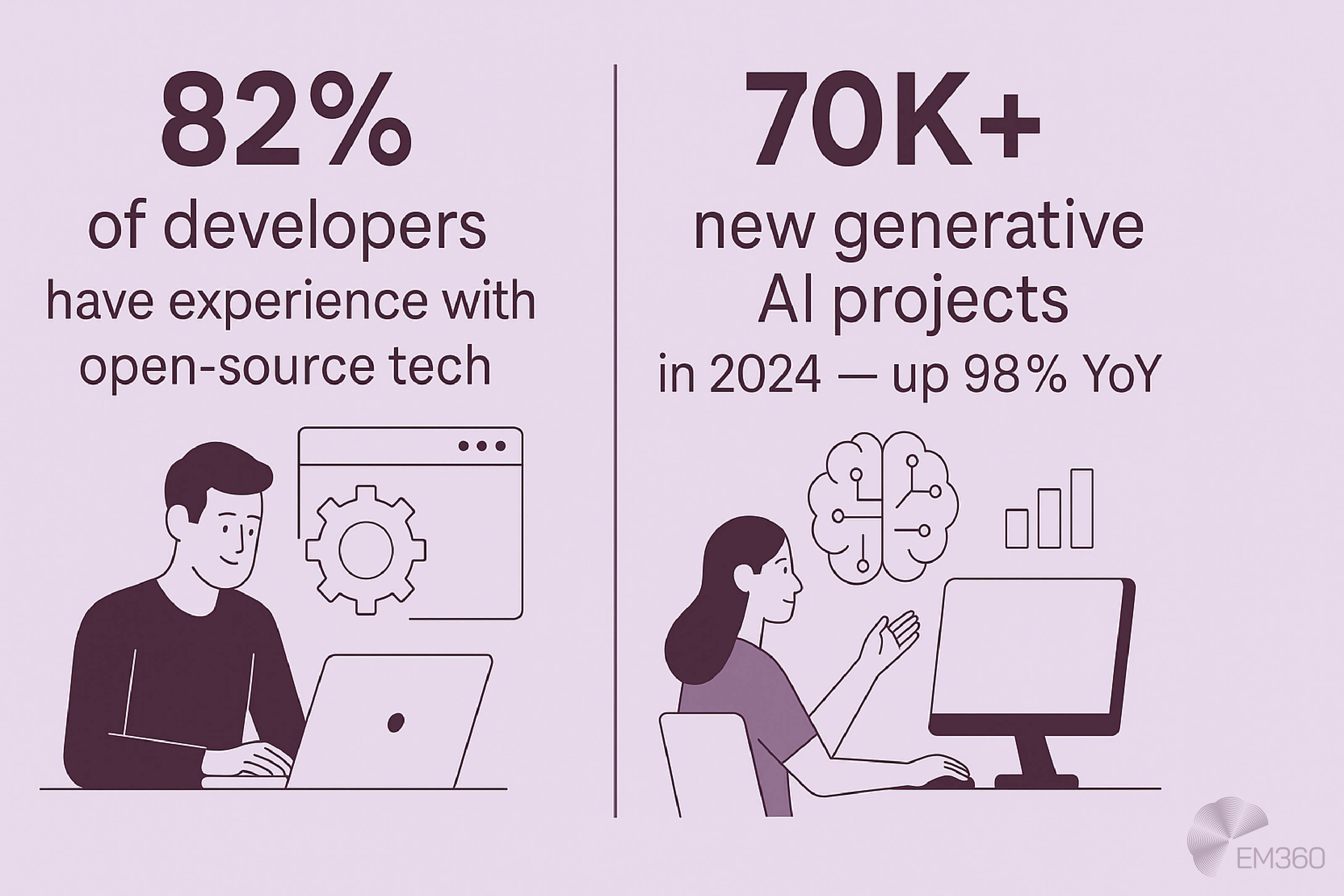

The open‑source movement is thriving: a March 2025 Stack Overflow survey found that 82 per cent of developers have some or a great deal of experience with open‑source technology, and GitHub recorded more than 70,000 new generative AI projects in 2024, a 98 per cent year‑over‑year increase.

Such momentum underscores the vibrancy and rapid evolution of community‑driven AI.

Advantages of open-source AI for enterprise users

- Transparency and trust: Open code allows developers to audit algorithms, identify biases and check compliance with ethical standards. A global community can quickly detect and patch vulnerabilities.

- Flexibility and customisation: Enterprises can fine‑tune models on proprietary data without sending it to third‑party clouds. This is essential for regulated sectors such as healthcare and finance.

- Cost efficiency: Open‑source software is free to acquire. There are no licence fees, and community support accelerates learning and experimentation.

- Innovation at scale: Community contributions drive rapid improvements. New research is often released in open formats, enabling organisations to stay at the cutting edge.

Challenges with open-source AI in enterprise environments

- Hidden costs: While acquisition is free, production‑grade deployment requires skilled engineers and investment in infrastructure. Achieving low latency and high uptime demands dedicated hardware and expertise.

- Support and maintenance: Organisations must manage updates and security patches themselves, which can strain small or non‑technical teams.

- Partial openness: Many models provide open weights but keep training data proprietary, limiting full transparency.

What Is Proprietary AI?

Proprietary AI models are owned by vendors who control the codebase, training data and distribution. Access is typically provided through subscription licences or APIs. Examples include OpenAI’s GPT‑4o, Anthropic’s Claude 3, IBM Watson and Google’s Gemini models, along with hosted services such as Microsoft’s Azure OpenAI Service.

Vendors may release older model weights to foster innovation while keeping their most advanced models closed.

Examples and adoption of proprietary AI models

- OpenAI / ChatGPT: ChatGPT has become the most widely used AI tool globally, with over 400 million weekly active users as of February 2025. OpenAI’s enterprise tier has accelerated adoption in business contexts: according to the company, teams at more than 80 per cent of Fortune 500 companies adopted ChatGPT within nine months of its launch, as measured by accounts registered with corporate email domains. Early enterprise users include Block, Canva, Carlyle, PwC and Zapier.

- Microsoft AI solutions and Copilot: In a company blog post, Microsoft claims that more than 85 per cent of the Fortune 500 now use its AI solutions, and its 365 Copilot product is rapidly gaining traction. The same post cites IDC research showing that 66 per cent of CEOs report measurable benefits from generative AI initiatives. Microsoft’s integration of AI across Dynamics 365, Azure and Office suites reduces friction for enterprises already embedded in its ecosystem.

- Anthropic Claude and Google Gemini: Anthropic’s safety‑focused Claude models and Google’s Gemini series are accessed via APIs through cloud providers. Adoption data is less public, but these vendors boast enterprise clients in media, finance, healthcare and government sectors. Their appeal often stems from robust safety policies and deep integration with existing cloud stacks.

- IBM Watson and industry‑specific AI: IBM Watson remains a significant player in industries like insurance, healthcare and banking, where domain‑specific data and regulatory requirements drive demand. Proprietary vertical AI solutions, such as Harvey for legal research or Komatsu’s autonomous mining AI, also fall into this category.

Beyond ChatGPT and Microsoft, statistics on proprietary model adoption are harder to come by, but analyst firms point to rapid acceleration. Gartner forecasts that more than 80 per cent of enterprises will have deployed generative AI applications or used GenAI APIs by 2026, up from less than 5 per cent in 2023.

Surveys also indicate that 78 per cent of organisations already use AI in at least one business function. In large enterprises, adoption spans multiple departments via internal teams, cloud AI platforms and third‑party tools.

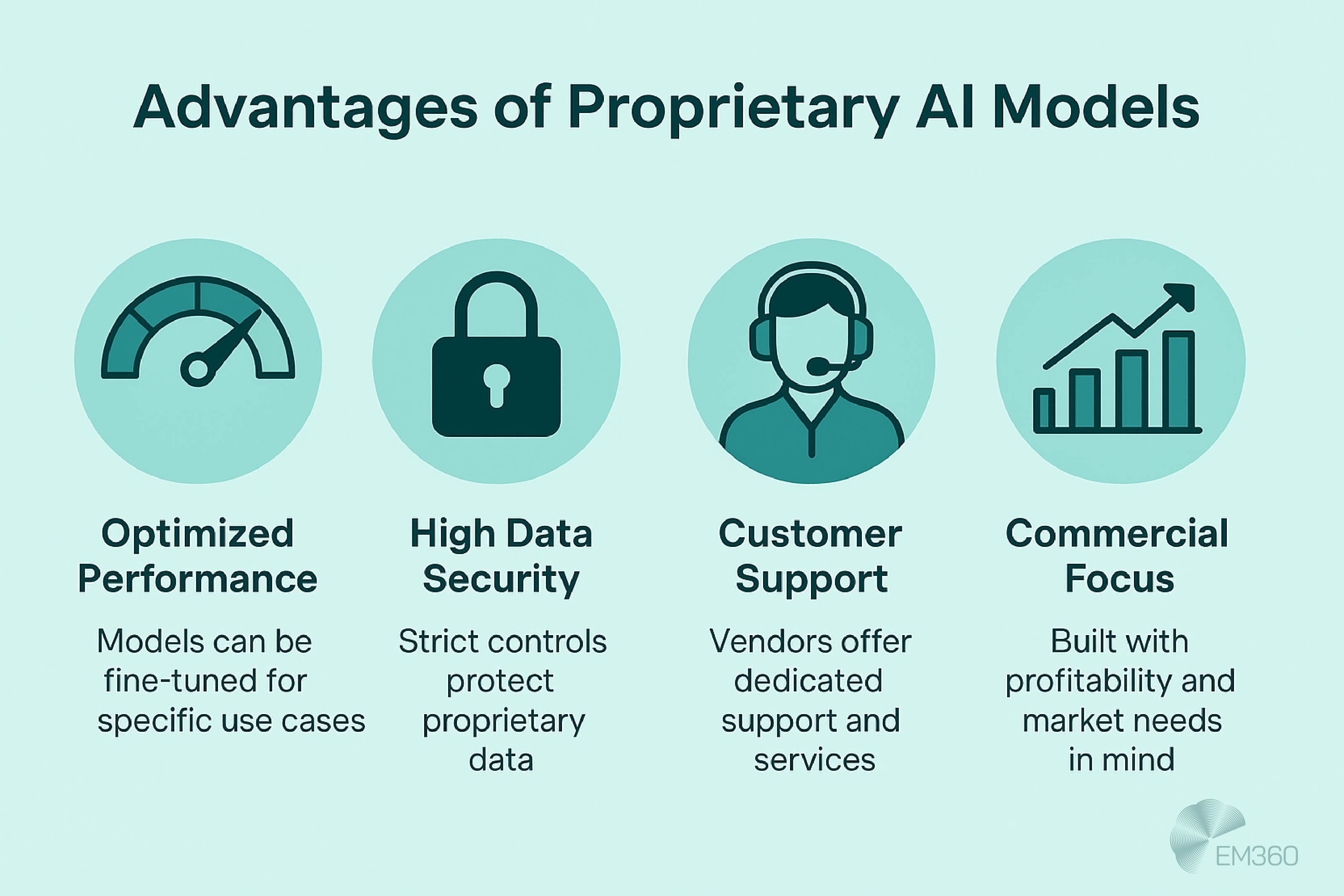

Advantages of proprietary AI for the enterprise

- Enterprise‑grade performance: Vendors operate massive infrastructure, ensuring fast response times and high reliability—vital for customer‑facing applications.

- Turnkey solutions: Proprietary models are plug‑and‑play. Fine‑tuning can be achieved via straightforward interfaces, reducing time‑to‑value and enabling teams without deep AI expertise to deploy quickly.

- Support and compliance: Vendors handle security hardening, regulatory certifications and upgrades. For industries where compliance is non‑negotiable, this can simplify procurement and reduce risk.

- Predictability: Clear service‑level agreements (SLAs) and performance guarantees reduce operational risk and provide consistent budgeting.

Challenges of proprietary AI at the enterprise level

- Licensing costs: Up‑front and recurring fees can be significant. Vendor lock‑in means switching providers may be costly or disruptive.

- Limited customisation: Closed models cannot be deeply modified; integration with unique workflows may be constrained.

- Data sovereignty concerns: Data is processed through vendor infrastructure; in regulated sectors where data cannot leave premises, proprietary models accessed via API are often off‑limits.

- Lower trust among developers: The Stack Overflow survey we mentioned before found that 37 per cent of participants dislike contributing to closed‑source AI and only 47 per cent trust proprietary AI for development work.

Key Differences Between Open‑Source And Proprietary AI

While both open-source and proprietary AI can deliver enterprise-grade results, the way they are built, maintained, and deployed shapes their strengths and limitations. Understanding these distinctions is essential for leaders looking to balance innovation, control, and risk.

Ownership, licensing and control

Open‑source licences such as Apache 2.0 and MIT grant users broad freedoms to use, modify and redistribute models. This autonomy prevents vendor lock‑in and encourages interoperability across platforms.

Proprietary licences restrict access; enterprises depend on the vendor for updates and future roadmap decisions. When evaluating solutions, leaders should examine whether they can maintain control of critical workflows or risk dependency on an external provider.

Cost and total cost of ownership

At first glance, open‑source AI appears cheaper because there are no licence fees. However, integration, infrastructure, ongoing maintenance and security can drive significant costs. Skilled machine‑learning engineers are required to fine‑tune models and manage operations.

Proprietary AI involves clear subscription or usage fees, but vendors shoulder the burden of scaling, patching and monitoring. The most cost‑effective path depends on an organisation’s existing capabilities and scale.

For instance, a small team without AI expertise may benefit from a turnkey solution despite higher licence fees; a large organisation with a mature engineering department might find open‑source more cost effective over time.
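To make the trade-off concrete, the sketch below compares cumulative spend over several years and reports the first year in which self-hosting becomes cheaper. The cost model is a hypothetical simplification: for open source, a one-off hardware outlay plus annual staffing and infrastructure; for the proprietary API, a flat subscription plus a per-request fee. The class and function names and all figures are illustrative assumptions, not benchmarks.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class OpenSourceCosts:
    """Hypothetical cost model for self-hosting an open-source model."""
    hardware_capex: float     # one-off spend on GPUs/servers
    annual_staff: float       # ML engineers who fine-tune, patch and operate the stack
    annual_infra_opex: float  # power, hosting and monitoring per year


@dataclass
class ProprietaryCosts:
    """Hypothetical cost model for a vendor API: flat subscription plus usage fees."""
    annual_subscription: float
    cost_per_1k_requests: float


def cumulative_open_source(c: OpenSourceCosts, years: int) -> float:
    """Total spend after `years` years of self-hosting."""
    return c.hardware_capex + years * (c.annual_staff + c.annual_infra_opex)


def cumulative_proprietary(c: ProprietaryCosts, years: int, requests_per_year: int) -> float:
    """Total spend after `years` years on the vendor API at a constant request volume."""
    usage_fees = requests_per_year / 1000 * c.cost_per_1k_requests
    return years * (c.annual_subscription + usage_fees)


def break_even_year(oss: OpenSourceCosts, prop: ProprietaryCosts,
                    requests_per_year: int, horizon: int = 10) -> Optional[int]:
    """First year in which self-hosting is cumulatively cheaper, or None within the horizon."""
    for year in range(1, horizon + 1):
        if cumulative_open_source(oss, year) < cumulative_proprietary(prop, year, requests_per_year):
            return year
    return None
```

With made-up inputs in a single arbitrary currency (hardware of 400,000, staff of 300,000 and infrastructure of 50,000 a year, against a 50,000 subscription plus 10 per 1,000 requests), self-hosting breaks even in year 3 at 50 million requests a year. At 5 million requests a year, it never breaks even within ten years. The exact numbers matter less than the shape of the comparison: request volume and in-house capability decide which option comes out ahead.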

Transparency, security and compliance

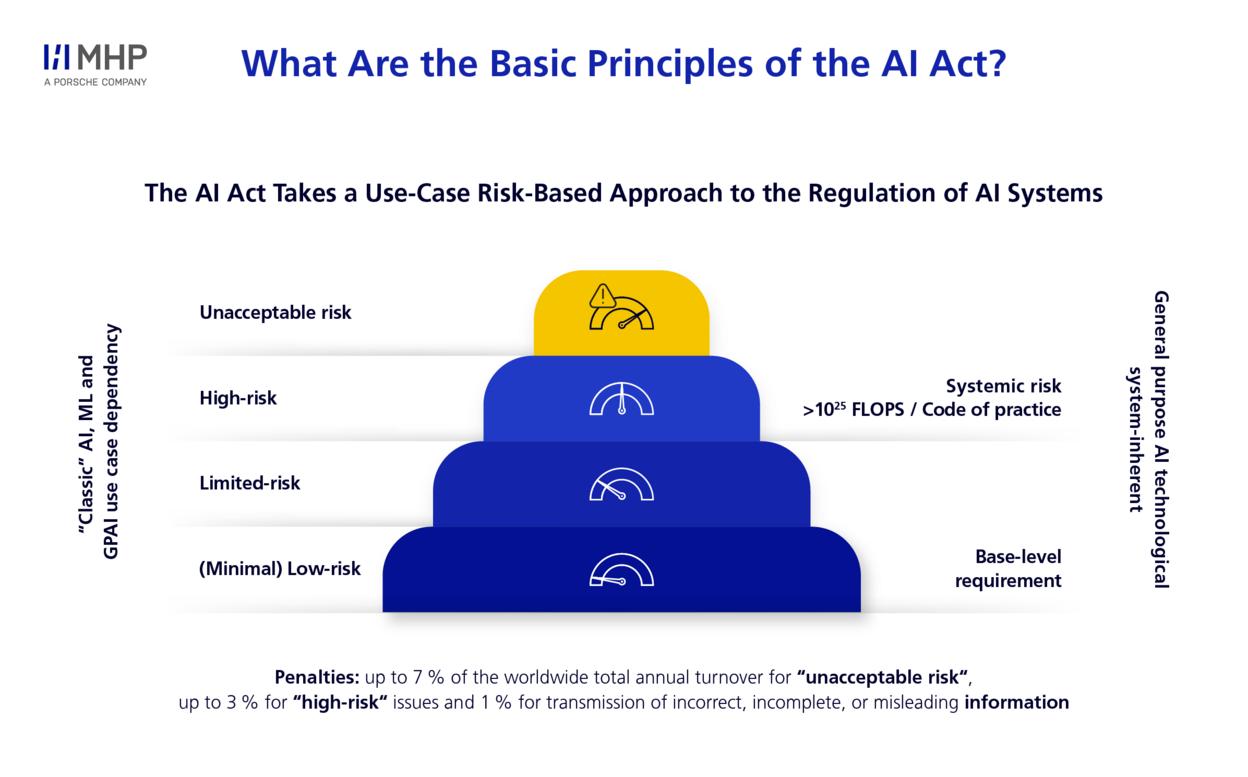

Open‑source models expose their inner workings, enabling thorough audits and community‑led vulnerability fixes. This transparency aids compliance with emerging regulations such as the EU AI Act. Nonetheless, the responsibility for security and compliance rests with the adopting organisation.

Proprietary vendors provide certifications (ISO 27001, SOC 2) and often integrate privacy‑preserving techniques, simplifying regulatory approval. But closed codebases can conceal biases or vulnerabilities, and organisations must trust vendors to disclose issues promptly.

Performance and reliability

The gulf between open‑source and proprietary AI performance is narrowing. Open‑source models like LLaMA‑3 and Mistral achieve benchmark scores comparable to GPT‑4 on many tasks. Yet proprietary vendors operate massive data centres that offer consistently low latency and high availability, which is essential for real‑time applications.

Enterprises looking for consumer‑grade responsiveness may prefer proprietary models unless they are willing to invest heavily in infrastructure and optimisation.

Customisation and flexibility

Open‑source models offer deep customisation. Enterprises can re‑engineer architectures, integrate domain‑specific data and deploy models in private environments. This is critical for specialised use cases such as predictive maintenance in manufacturing or custom language models for legal research.

Proprietary models are easier to configure but cannot be fundamentally altered. While some vendors provide fine‑tuning options, customisation is limited to adjusting pre‑defined parameters.

Data privacy and deployment options

Data sovereignty is a decisive factor. Organisations handling sensitive information (health records, financial transactions, classified data) often require on‑premises deployment. Open‑source AI allows models to run locally, ensuring data never leaves organisational control.

Proprietary models generally process data in the vendor’s environment; a few offer enterprise‑on‑prem or private cloud deployments, but these solutions command higher fees. In addition, BlackBerry research suggests that 75 per cent of businesses have plans to restrict or prohibit ChatGPT and similar tools, reflecting concerns about data leakage and compliance.

Ecosystems and lock‑in

Open ecosystems like Hugging Face and GitHub encourage cross‑pollination. Migrations between frameworks are feasible, and vendor independence protects against sudden cost hikes or discontinued services. Proprietary ecosystems integrate deeply with other products (e.g., Microsoft’s Azure AI with Office 365).

This integration streamlines workflows but increases dependence on a single provider and may limit future innovation.

Business Considerations & Use Cases

Selecting the right AI approach is as much about business context as it is about technology. From regulatory demands to market positioning, the factors driving adoption will shape where and how AI delivers value. Mapping these considerations to practical use cases helps ensure investments are both strategic and measurable.

Define strategic objectives

AI investments should align with business goals. Are you seeking rapid time‑to‑market? Is experimentation and innovation the priority? For early‑stage projects or organisations with tight budgets, open‑source tools provide a low‑risk way to explore AI without substantial investment.

For mission‑critical applications requiring guaranteed performance and compliance, proprietary solutions may be appropriate.

Evaluate internal capabilities

Skilled data scientists and engineers can unlock the potential of open‑source AI. Without such expertise, organisations risk delayed deployments and technical debt. Where talent is scarce, a managed proprietary solution can accelerate deployment while your team upskills.

Consider industry and regulatory context

Regulated sectors often favour on‑premises deployment, which makes open‑source a natural fit. A recent report by the U.S. National Telecommunications and Information Administration (NTIA) concluded that open foundation models confer broad benefits and that there is currently insufficient evidence to justify restricting open model weights.

The advocacy group Public Knowledge emphasises that open models support innovation, accountability and access. Nevertheless, some regions mandate that AI systems meet specific compliance standards, and partnering with a vendor may simplify certification.

Balance cost and innovation

Conduct a total cost of ownership analysis. Open‑source solutions may require capital expenditure on hardware and salaries, whereas proprietary solutions shift costs to operational budgets.

Weigh the flexibility to innovate against predictable spending. Nearly 80 per cent of organisations either have deployed or are piloting AI, signalling that early adoption is becoming a competitive necessity.

Illustrative use cases

- A healthcare provider fine‑tunes an open‑source language model on anonymised clinical notes to build a decision‑support tool. Data remains within the hospital, maintaining patient confidentiality and complying with data‑privacy laws.

- A multinational bank chooses a proprietary chatbot platform with built‑in regulatory compliance to handle customer queries across multiple jurisdictions. Rapid deployment and vendor support outweigh the lack of deep customisation.

- A retail company adopts a hybrid strategy: an open‑source model powers personalised marketing recommendations, while a proprietary fraud‑detection model screens transactions. This balances innovation and risk mitigation.

Emerging Trends & The Future of AI Models

The AI landscape is evolving at speed, with breakthroughs in model design, training efficiency, and governance reshaping what’s possible. Tracking these trends helps enterprise leaders anticipate shifts, adapt strategies, and stay ahead in a market where today’s advantage can quickly become tomorrow’s baseline.

Hybrid approaches

Organisations increasingly combine open‑source and proprietary models. For example, they might use an open‑source foundation model for core reasoning and layer proprietary APIs for industry‑specific capabilities or compliance features.

Hybrid strategies allow leaders to harness the innovation of the open‑source community while leveraging the reliability of vendor solutions.
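One common way to put a hybrid strategy into practice is a routing layer that keeps sensitive requests on a self-hosted open model and sends everything else to a vendor API. The sketch below shows that pattern under stated assumptions: the keyword-based sensitivity check is a deliberately crude stand-in for real data classification, and `local_open_model` and `vendor_api` are hypothetical stubs, not real clients.

```python
import re
from typing import Callable, Dict

# Hypothetical markers of sensitive data; a real system would use proper data classification.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),             # US Social Security number format
    re.compile(r"\bpatient\b", re.IGNORECASE),
    re.compile(r"\baccount number\b", re.IGNORECASE),
]


def is_sensitive(prompt: str) -> bool:
    """Return True if the prompt matches any sensitive-data pattern."""
    return any(pattern.search(prompt) for pattern in SENSITIVE_PATTERNS)


def local_open_model(prompt: str) -> str:
    # Hypothetical stub for a self-hosted open-weights model; data stays on-premises.
    return f"[local] {prompt[:30]}"


def vendor_api(prompt: str) -> str:
    # Hypothetical stub for a proprietary model reached over a vendor API.
    return f"[vendor] {prompt[:30]}"


def route(prompt: str, backends: Dict[str, Callable[[str], str]]) -> str:
    """Send sensitive prompts to the local backend and everything else to the vendor."""
    target = "local" if is_sensitive(prompt) else "vendor"
    return backends[target](prompt)


BACKENDS = {"local": local_open_model, "vendor": vendor_api}
```

Routing on data sensitivity is only one possible policy. The same structure works for routing on cost, latency or task type, which is where much of the practical flexibility of a hybrid approach comes from.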

Smaller, efficient models

GitHub’s 2024 Octoverse report highlights a growing appetite for smaller models with lower compute requirements.

Projects like ollama/ollama, the fastest‑growing open‑source AI repository by contributor count, enable developers to run language models locally on laptops. This shift opens the door to edge deployments and democratises AI experimentation.
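As an illustration of local execution, Ollama exposes a REST API on the local machine, by default at `http://localhost:11434`. The sketch below uses only Python's standard library to send a prompt to the `/api/generate` endpoint with streaming disabled, so both prompt and response stay on the device. It assumes Ollama is installed and running and that a model has already been pulled; the model name `llama3` is an assumption.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a locally running Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, Ollama replies with one JSON object whose "response" field holds the text.
    return body["response"]
```

For example, `generate("llama3", "In one sentence, what is an open-weights model?")` returns the model's reply as a string, with no call to an external service.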

Policy and ethics

Governments are grappling with how to regulate AI. The NTIA’s endorsement of open models suggests a recognition that openness fosters innovation and accountability. The EU AI Act emphasises transparency and risk management, potentially favouring open‑source solutions.

Ethical AI principles—fairness, accountability and transparency—resonate strongly with open frameworks.

Developer trust and preferences

The Stack Overflow survey found that most respondents prefer open‑source work over proprietary alternatives, citing higher trust and community engagement. Only 47 per cent of developers trust proprietary AI for development work. This preference suggests that the talent pool of tomorrow will expect openness and flexibility in AI tools.

Global dynamics

China’s strategy of releasing open‑source models to lower costs and gain market share, alongside initiatives in Europe and the U.S. to develop sovereign computing resources, suggests that geopolitical considerations will shape the availability and distribution of AI models. Proprietary vendors, meanwhile, are under pressure to demonstrate transparency and ethical safeguards.

Decision Framework For Enterprise Leaders

Choosing between open-source AI, proprietary models, or a hybrid approach is rarely straightforward. The right path depends on your organisation’s goals, capabilities, and appetite for risk. This framework distils the key considerations into a practical guide, helping enterprise leaders align AI adoption with strategy, compliance, and long-term value.

- Clarify your goals. Are you experimenting with AI or deploying critical systems? Innovation‑focused teams may start with open‑source models; operational systems may warrant proprietary SLAs.

- Assess data sensitivity. Can your data leave the premises? If not, open‑source or on‑prem solutions are essential.

- Review internal expertise. Do you have the talent and infrastructure to run and fine‑tune models? If yes, open‑source offers freedom; if not, proprietary may be quicker.

- Calculate total cost. Compare licensing fees against infrastructure, staffing and maintenance costs. Plan for long‑term scalability.

- Determine customisation needs. If your use case is niche or requires deep integration, open‑source may be better. If generic capabilities suffice, proprietary solutions may be adequate.

- Plan for flexibility. Avoid putting all your eggs in one basket. A hybrid approach can provide the best of both worlds and future‑proof your AI strategy.

- Pilot and iterate. Test solutions with limited data, measure performance, gather user feedback and scale only when satisfied.
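The checklist above can be turned into a rough first-pass aid. The sketch below is a hypothetical simplification: the `Assessment` fields, the equal weighting and the tie-breaking rule are assumptions for illustration. It maps a few yes/no answers to a starting point for a pilot and does not replace the judgement the framework calls for.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    data_must_stay_on_prem: bool     # Assess data sensitivity
    has_ml_team_and_infra: bool      # Review internal expertise
    needs_deep_customisation: bool   # Determine customisation needs
    mission_critical_slas: bool      # Clarify your goals
    wants_vendor_independence: bool  # Plan for flexibility


def recommend(a: Assessment) -> str:
    """Return 'open-source', 'proprietary' or 'hybrid' as a starting point for a pilot."""
    # Hard constraint: if data cannot leave the premises, a vendor-hosted API is ruled out.
    if a.data_must_stay_on_prem:
        return "open-source"  # or a vendor's on-prem offering, typically at a higher price
    # Count the answers pointing each way (bools sum as 0/1), weighting every factor equally.
    open_score = sum([a.has_ml_team_and_infra, a.needs_deep_customisation,
                      a.wants_vendor_independence])
    proprietary_score = sum([not a.has_ml_team_and_infra, a.mission_critical_slas])
    if open_score > proprietary_score:
        return "open-source"
    if proprietary_score > open_score:
        return "proprietary"
    # Mixed signals: blend both approaches and let pilots decide.
    return "hybrid"
```

A tie deliberately falls back to a hybrid recommendation, reflecting the article's view that the choice is a continuum rather than a binary.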

Final Thoughts: The Decision Is A Continuum, Not A Binary

The choice between open‑source AI and proprietary models is not a simple either‑or. Decisions lie along a continuum, from fully open to fully closed. Leaders must align model selection with strategic objectives, regulatory requirements, technical capabilities and risk appetite.

Open‑source AI offers unparalleled transparency, flexibility and community‑driven innovation, but demands investment in skills and infrastructure. Proprietary AI delivers ready‑made performance, support and compliance, yet can limit control and increase costs.

Hybrid models increasingly bridge the gap, combining the creativity of open communities with the robustness of vendor solutions. In an era where AI underpins competitive advantage, wise leaders will build an adaptive, evidence‑driven strategy.

By weighing the trade-offs and building flexibility into their strategy, enterprises can capture the strengths of both approaches—driving responsible, resilient, and innovative AI adoption. For more insights, explore the latest guidance from EM360Tech.

Comments ( 0 )