The golden age of AI chatbots has well and truly arrived. It began with the launch of OpenAI’s ChatGPT, which amazed users with its ability to generate text and answer questions on almost any topic in the world.

Since then, AI has gripped Silicon Valley, with some of the biggest tech companies in the world creating their own Large Language Models (LLMs) to capture a slice of ChatGPT's popularity.

One of these LLMs is Google’s Gemini, which was released as Bard at the start of 2023. It has since become one of the leading ChatGPT alternatives on the market today.

But how does Gemini compare to ChatGPT? This article explores the key differences between Gemini vs ChatGPT while comparing their respective features, advantages, and limitations to find out which one is better in 2024.

What is Gemini?

Gemini is an AI-powered chatbot developed by Google that can communicate and generate human-like text in response to a wide range of questions.

Originally launched under the name of Bard in February 2023, it has since rebranded as Gemini. The chatbot has garnered significant attention for its ability to reason, understand, and solve problems across various domains.

One of Gemini's key strengths is that it’s multimodal. Unlike many AI models trained on text alone, Gemini is designed to understand and process information from different modalities, including text, code, and even silent films. This allows it to grasp complex concepts and perform tasks that were previously challenging for AI.

Gemini is also known for its exceptional reasoning ability. It can analyze vast amounts of information and easily draw insightful conclusions and connections. This is supported by Gemini’s direct connection to Google, which allows it to answer questions with real-time information so that responses are always up-to-date.

Gemini is available in three versions:

- Gemini Ultra: This is the top-of-the-line version, likely boasting the most processing power and capability. Think of it as a powerful V8 engine built for demanding tasks.

- Gemini Pro: This version represents a good balance between power and efficiency. It's likely suitable for a wide range of tasks, similar to a reliable V6 engine.

- Gemini Nano: This version is likely the most lightweight and resource-efficient option. Imagine it as a fuel-efficient 4-cylinder engine, well-suited for tasks that don't require the full power of the other versions.

Key Features of Gemini

1. Multimodal Understanding

Unlike many AI models limited to text, Gemini excels at processing information from various sources. This includes:

- Text: Understanding and generating human-like text for tasks like summarization, translation, and question answering.

- Code: Analyzing codebases, identifying potential issues, suggesting solutions, and explaining its reasoning for programmers.

- Visuals: Understanding the content of silent films, inferring plot and themes, and even recognizing objects and their relationships in images.

2. Up-to-date information

Gemini continuously crawls webpages, indexing and analyzing information in real time to generate your responses. This means you get access to the latest updates and breaking news as soon as they happen without needing to wait for the AI algorithms to catch up.

3. Human-like Explanations

Gemini doesn't just give you answers; it explains them clearly and concisely, using natural language that you can easily understand. Thanks to its advanced natural language processing (NLP) capabilities, Gemini grasps the deeper meaning and context behind your queries. This allows it to provide more relevant and insightful results, even for complex or open-ended questions.

4. Comprehension and Improvement

Gemini can be an incredibly powerful tool for programmers. It can analyze large codebases, identify potential bugs and inefficiencies, suggest solutions, and even explain its reasoning in a way that's easy to understand. This can significantly improve code quality and save developers time.

5. Focus on Responsible Development

Google emphasizes the responsible development and deployment of Gemini. They recognize the potential impact of AI and are committed to using it for good.

What is ChatGPT?

ChatGPT is a chatbot built on a large language model (LLM) trained on a massive dataset of text and code from the internet, allowing it to engage in conversations and answer user questions on almost any topic.

Developed by OpenAI, ChatGPT can generate human-quality text, translate languages, write different kinds of creative content, and most importantly, hold engaging conversations on various topics.

ChatGPT was one of the first chatbots of its kind when it launched in November 2022. It's largely credited with setting off the AI revolution that has gripped Silicon Valley over the past year or so. It has since reached over 180 million users and is backed by tech giants such as Microsoft and Infosys, making OpenAI a frontrunner in the AI race.

But that doesn't mean ChatGPT is the best chatbot in the world. It just means it's the oldest, and that isn't always a good thing. Many other chatbot providers have since borrowed OpenAI's GPT LLM to use in their own specialised chatbots, meaning that there are many chatbots out there with similar capabilities to ChatGPT.

ChatGPT comes in two versions:

- Free GPT-3.5 version: This is the most widely available version, accessible through the ChatGPT website and open to anyone. It uses the GPT-3.5 language model and offers basic conversational capabilities, creative text generation, and information retrieval.

- Paid GPT-4 version (ChatGPT Plus): This premium version offers advanced features and capabilities compared to the free one. It uses the more powerful GPT-4 language model, making it more accurate and more up-to-date.

Key Features of ChatGPT

1. Strong Conversational Abilities

Thanks to its powerful natural language processing (NLP) capabilities, ChatGPT shines in carrying on natural-sounding conversations. It can respond to your queries in a way that mimics human interaction, adapting its tone and style to fit the context. Think of it as a chatty and knowledgeable friend you can bounce ideas off of.

2. Text Generation

ChatGPT excels at generating different creative text formats, allowing you to create poems, scripts, musical pieces, emails, letters, and more. You can provide it with prompts or specific instructions, and it will craft unique and engaging text content.

3. Universal

ChatGPT's capabilities extend beyond simple conversations. It can translate languages, summarize information, write different kinds of creative content, and even answer your questions in an informative way, even if they're open-ended, challenging, or strange.

4. Adaptability and Personalization

One of ChatGPT's key features is its ability to learn and adapt. The more you interact with it, the better it understands your preferences and communication style. This personalization aspect can lead to more meaningful and relevant interactions over time.

5. API Access

Thanks to OpenAI's API, you can integrate ChatGPT into your own applications and projects to leverage its capabilities. This makes it incredibly versatile and available for use by both developers and end users.
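
As a rough illustration of what such an integration can look like, the sketch below builds a single-turn request for OpenAI's chat completions endpoint using only the Python standard library. The helper names `build_chat_request` and `send_chat_request`, the `gpt-3.5-turbo` model choice, and the `OPENAI_API_KEY` environment variable are assumptions made for this example rather than anything prescribed by the article, and the request is only sent if a key is present.

```python
import json
import os
import urllib.request

# OpenAI's chat completions endpoint.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, model="gpt-3.5-turbo"):
    """Build the JSON payload for a single-turn chat request (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


def send_chat_request(payload, api_key):
    """POST the payload and return the text of the first reply (hypothetical helper)."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_request("Explain what a large language model is in one sentence.")
    api_key = os.environ.get("OPENAI_API_KEY")  # assumed to hold your own key
    if api_key:
        print(send_chat_request(payload, api_key))
    else:
        # Without a key, just show the request that would be sent.
        print(json.dumps(payload, indent=2))
```

In a real project you would more likely use an official client library and add error handling; the point here is only that the integration amounts to sending a list of messages and reading back a reply.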

Gemini vs ChatGPT: Which is Better?

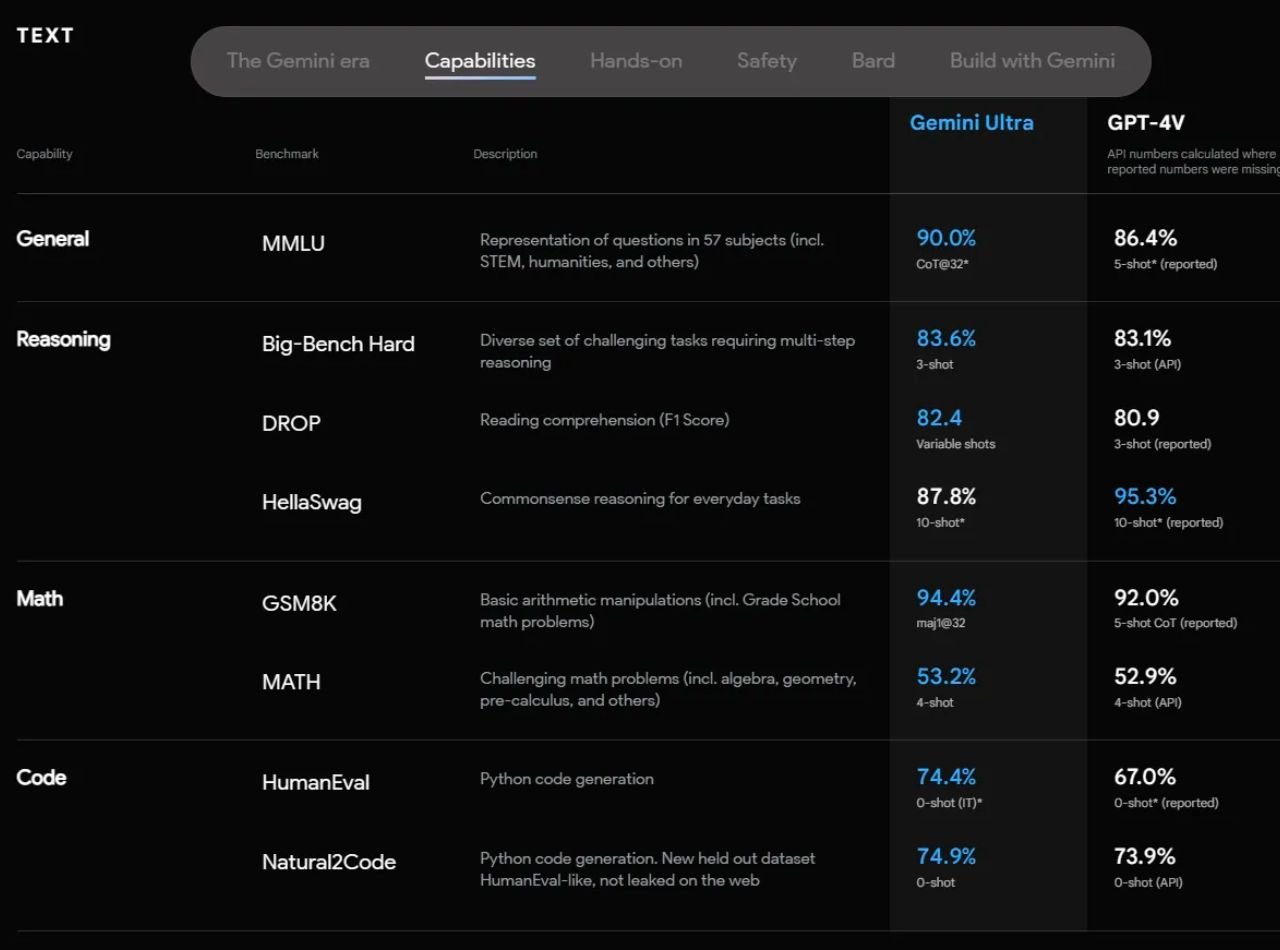

Google has so far struggled to attract as much attention as OpenAI's explosive ChatGPT. But Gemini Ultra performs better than ChatGPT on 30 of the 32 academic benchmarks in reasoning and understanding it was tested on.

Gemini Ultra is also the first AI model to outperform human experts on these benchmark tests. It scored 90% on a multitasking test called MMLU, which covers 57 subjects including maths, physics, law, medicine and ethics, beating all other AI models at the time, including OpenAI's GPT-4.

The less powerful Gemini Pro model also outperformed GPT-3.5, the LLM behind the free-to-access version of ChatGPT, on six out of eight tests.

Still, Google warned that 'hallucinations' remained a problem with every version of the model. “It’s still, I would say, an unresolved research problem,” said Eli Collins, the head of product at Google DeepMind.

Ultimately, Gemini and ChatGPT have their own distinct advantages and disadvantages. Gemini's access to real-world information makes it a strong contender for complex tasks, but ChatGPT's conversational abilities and creative text generation make it a powerful tool for various applications.

Comparing Gemini and ChatGPT Benchmarks

To gauge the effectiveness of AI models, researchers use benchmarks like the Massive Multitask Language Understanding (MMLU) test. This test assesses an AI's ability to understand and solve problems across various language-related tasks.
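
The benchmark scores quoted in this article carry labels such as "5-shot" or "0-shot". A k-shot evaluation means the model is shown k solved examples in its prompt before the question it has to answer. The following is a minimal sketch of that idea with made-up toy data; the function names and the exact-match scoring are assumptions for illustration, not the procedure Google or OpenAI actually used.

```python
def build_few_shot_prompt(examples, question):
    """Join k solved examples and one unsolved question into a single prompt."""
    parts = [f"Question: {q}\nAnswer: {a}" for q, a in examples]
    parts.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(parts)


def accuracy(predictions, answers):
    """Percentage of predictions that exactly match the reference answers."""
    correct = sum(p == a for p, a in zip(predictions, answers))
    return 100.0 * correct / len(answers)


# Hypothetical toy data: two solved examples make this a 2-shot prompt.
examples = [
    ("What is 2 + 2?", "4"),
    ("What is the capital of France?", "Paris"),
]
prompt = build_few_shot_prompt(examples, "What is 3 + 5?")
print(prompt)

# Hypothetical model outputs scored against reference answers.
print(accuracy(["8", "Rome", "7"], ["8", "Rome", "9"]))
```

Because the number of examples in the prompt can change how well a model does, scores obtained with different shot counts are not strictly like-for-like.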

According to Google, Gemini AI beat ChatGPT in almost all of its academic benchmarks. Here's a comparison between Gemini Ultra and ChatGPT-4 using the benchmarks tested by Google:

1. General Understanding

- Gemini Ultra scored an incredible 90.0% in Massive Multitask Language Understanding (MMLU), demonstrating its ability to comprehend 57 subjects, including STEM, humanities, and more.

- GPT-4 achieved an 86.4% 5-shot capability in a similar benchmark.

2. Reasoning Abilities

- Gemini Ultra scored 83.6% in the Big-Bench Hard benchmark, demonstrating proficiency in a wide range of multi-step reasoning tasks.

- GPT-4 showed similar performance with an 83.1% 3-shot capability in a similar context.

3. Reading Comprehension

- Gemini Ultra scored an 82.4 F1 Score in the DROP reading comprehension benchmark.

- GPT-4 achieved a slightly less impressive score with an 80.9 3-shot capability in a similar scenario.

4. Commonsense Reasoning

- Gemini Ultra scored with an 87.8% 10-shot capability in the HellaSwag benchmark.

- GPT-4 showed a notably higher 95.3% 10-shot capability in the same benchmark.

5. Mathematical Proficiency

- Gemini Ultra excelled in basic arithmetic manipulations with a 94.4% maths score.

- GPT-4 maintained 92.0% 5-shot capability in Grade School math problems.

6. Math Problems

- Gemini Ultra could tackle complex math problems with a 53.2% 4-shot capability.

- GPT-4 scored slightly less, with a 52.9% 4-shot capability in a similar context.

7. Code Generation

- Gemini Ultra could generate Python code with a commendable 74.4% capability.

- GPT-4 did not perform as well, scoring a 67.0% capability in a similar benchmark.

8. Natural Language to Code (Natural2Code)

- Gemini Ultra showed proficiency in generating Python code from text with a 74.9% 0-shot capability.

- GPT-4 scored well too, maintaining a 73.9% 0-shot capability in a similar benchmark.
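
To see the overall picture at a glance, the eight pairs of scores listed above can be tabulated with a few lines of Python. This is only a convenience sketch: the numbers are copied from the list, the short benchmark labels are informal, and because several results were obtained under different shot settings the margins should be read as indicative rather than exact.

```python
# (benchmark label, Gemini Ultra score, GPT-4 score), copied from the list above.
SCORES = [
    ("MMLU", 90.0, 86.4),
    ("Big-Bench Hard", 83.6, 83.1),
    ("DROP", 82.4, 80.9),
    ("HellaSwag", 87.8, 95.3),
    ("Grade school math", 94.4, 92.0),
    ("Math problems", 53.2, 52.9),
    ("Python code generation", 74.4, 67.0),
    ("Natural2Code", 74.9, 73.9),
]


def summarize(scores):
    """Return (benchmark, leader, margin in points) for each row."""
    rows = []
    for name, gemini, gpt4 in scores:
        margin = round(gemini - gpt4, 1)
        if margin > 0:
            leader = "Gemini Ultra"
        elif margin < 0:
            leader = "GPT-4"
        else:
            leader = "Tie"
        rows.append((name, leader, margin))
    return rows


rows = summarize(SCORES)
gemini_wins = sum(1 for _, leader, _ in rows if leader == "Gemini Ultra")

for name, leader, margin in rows:
    print(f"{name:<24} {leader:<13} {margin:+.1f}")
print(f"Gemini Ultra leads on {gemini_wins} of {len(rows)} benchmarks")
```

On these figures Gemini Ultra leads on seven of the eight benchmarks, usually by a small margin, while GPT-4 leads on HellaSwag by 7.5 points.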

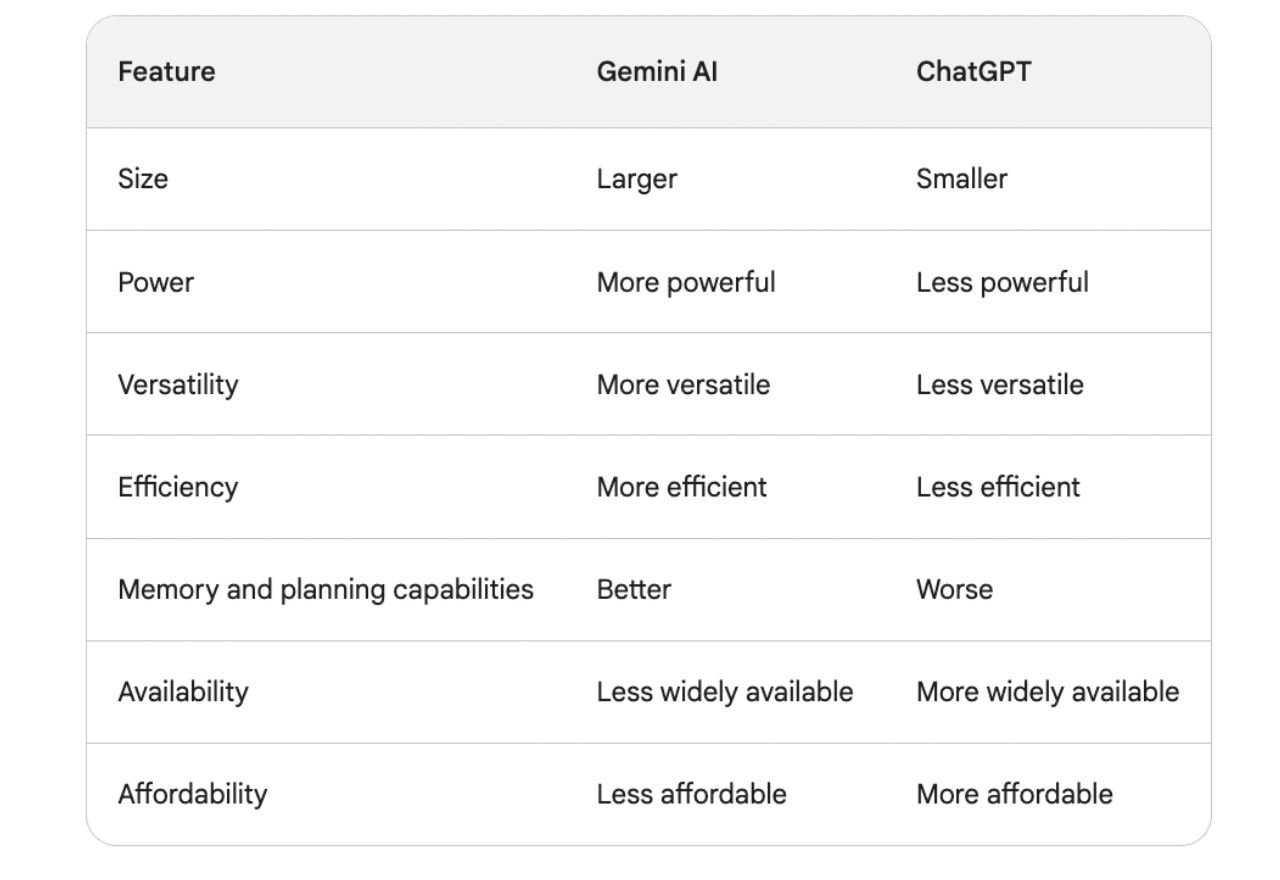

Differences Between Gemini AI and ChatGPT

Google DeepMind CEO Demis Hassabis said that, like other AI models including ChatGPT, Gemini was trained on data taken from a range of sources, including the open web. However, there are some key differences between the two models that make Gemini AI a more versatile and powerful tool.

For instance, the GPT-3.5 model used on the free version of ChatGPT was trained using data up to September 2022, meaning that it can only provide accurate information up to that point. The same is technically true of GPT-4, but it’s better than GPT-3.5 at learning and responding to current information provided through ChatGPT prompts.

Gemini AI, however, is trained on real-time data from the internet, meaning it can answer questions using up-to-date information. The model is trained on a massive dataset of text and code, making it larger and more powerful than ChatGPT.

This means that it can generate more complex and nuanced text, and it can also perform more demanding tasks, such as translation and summarization.
