Liquid neural networks (LNNs) take their cue from nature, specifically from the microscopic nematode Caenorhabditis elegans, a worm that somehow pulls off complex behaviours with just 302 neurons.

Engineers looked at this tiny nervous system and wondered: if a worm can adapt to its environment with minimal brainpower, could a machine do the same? Whereas a conventional neural network applies the same fixed computation to every input once it is trained, a liquid network changes its internal dynamics as new information arrives.

Picture a recipe that you adjust while cooking – you taste, add more spice, and alter the timing based on how the dish develops. LNNs work in a similar way; their “time constants” act like built‑in timers that stretch or shrink to match the rhythm of the data, allowing the model to keep pace as conditions change.

From Concept To Research Breakthrough

The path from idea to working system began with a simple observation: the world doesn’t present itself as isolated snapshots. We experience life as continuous streams of sights, sounds and sensations.

Ramin Hasani and Daniela Rus, researchers at MIT, wondered whether a neural network built on this premise could handle complex tasks without growing enormous. Taking cues from C. elegans, they built equations modelled on how the worm’s neurons communicate. The early results were striking.

In one experiment, a liquid network with only nineteen control neurons was able to steer a self‑driving car around a track, focusing on the road horizon and ignoring irrelevant scenery. That kind of efficiency suggested that it was possible to capture the essence of a task without drowning in parameters.

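Subsequent research produced closed‑form approximations of these equations, known as closed‑form continuous‑time (CfC) networks. Because they avoid numerical differential‑equation solvers, these models are much faster to train and run.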

How Liquid Neural Networks Work

At their core, liquid networks have an input layer, a dynamic core and an output layer. The dynamic core – the “liquid” – is what sets them apart. Its state evolves according to differential equations rather than through a single fixed input‑to‑output mapping. Imagine a pond with ripples; when a stone falls in, waves spread, interact and settle.

Similarly, signals enter the liquid layer, interact through time and feed back on one another. Each neuron has its own clock, so it can slow down or speed up its response depending on what it sees. This structure gives the network memory: the same input can have a different effect depending on what came before.
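
The “own clock” idea can be sketched in a few lines. The minimal pure‑Python example below assumes the liquid time‑constant (LTC) form from the original LTC paper, dx/dt = −(1/τ + f)·x + f·A, where τ is each neuron’s time constant, A a target level and f an input‑dependent gate; because f changes with the input, so does each neuron’s effective response speed. It integrates the equation with the fused semi‑implicit step proposed in that paper. The `LTCLayer` class, its self‑connection‑only wiring and every parameter value are hypothetical, hand‑picked for illustration rather than learned.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class LTCLayer:
    """Hypothetical toy LTC layer: scalar input, each neuron sees only its own state.

    Neuron i has its own time constant tau[i] (its 'clock'), a target level A[i]
    and an input-dependent gate f_i = sigmoid(w_in[i] * u + w_rec[i] * x_i + b[i]).
    Parameters are fixed by hand here; in a real model they would be learned.
    """

    def __init__(self, tau, A, w_in, w_rec, b):
        self.tau, self.A, self.w_in, self.w_rec, self.b = tau, A, w_in, w_rec, b
        self.x = [0.0] * len(tau)  # hidden state starts at rest

    def step(self, u: float, dt: float) -> list:
        new_x = []
        for i, xi in enumerate(self.x):
            f = sigmoid(self.w_in[i] * u + self.w_rec[i] * xi + self.b[i])
            # Fused semi-implicit step for dx/dt = -(1/tau + f) * x + f * A
            new_x.append((xi + dt * f * self.A[i]) / (1.0 + dt * (1.0 / self.tau[i] + f)))
        self.x = new_x
        return list(self.x)


def probe_after(history, probe=0.5, dt=0.1):
    """Feed a history of inputs, then return the state after one probe input."""
    layer = LTCLayer(tau=[0.1, 5.0], A=[1.0, 1.0], w_in=[2.0, 2.0],
                     w_rec=[0.5, 0.5], b=[-1.0, -1.0])
    for u in history:
        layer.step(u, dt)
    return layer.step(probe, dt)


after_quiet = probe_after([0.0] * 20)  # probe arrives after a quiet stretch
after_busy = probe_after([1.0] * 20)   # same probe after a busy stretch
print("fast neuron (tau=0.1):", after_quiet[0], after_busy[0])
print("slow neuron (tau=5.0):", after_quiet[1], after_busy[1])
```

Both runs end with the same probe input, yet the resulting states differ: the fast neuron has largely forgotten the preceding stretch, while the slow neuron still carries a clear trace of it. That is the memory effect described above, in miniature.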

Because the equations are explicit, researchers can often analyse them mathematically or even solve them directly, making the models more transparent than traditional recurrent networks. These “fewer but richer nodes” achieve high expressivity without resorting to massive parameter counts.
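
When the equations can be solved directly, no step‑by‑step solver is needed. The closed‑form continuous‑time (CfC) cell described by Hasani and colleagues computes the new state as a time‑dependent gated blend, x(t) = σ(−f·t)·g + (1 − σ(−f·t))·h, where f, g and h are learned functions of the current state and input and t is the time elapsed. The pure‑Python sketch below shows that formula for one scalar unit under simplifying assumptions: each head is a single hypothetical tanh or softplus unit rather than the shared neural backbone used in practice, and `cfc_update` and its parameter layout are invented for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z)); always positive."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def cfc_update(x: float, u: float, t: float, params: dict) -> float:
    """One CfC-style closed-form update for a single scalar unit (toy sketch).

    x: previous hidden state, u: current input, t: time elapsed since the last update.
    params maps each head name ("f", "g", "h") to a hypothetical (w_x, w_u, bias) triple.
    """
    def pre(name: str) -> float:
        w_x, w_u, bias = params[name]
        return w_x * x + w_u * u + bias

    f = softplus(pre("f"))    # positive rate (our assumption), so the gate shrinks as t grows
    g = math.tanh(pre("g"))   # weighted by the gate, so it matters most for short gaps
    h = math.tanh(pre("h"))   # dominates as the elapsed time grows
    gate = sigmoid(-f * t)    # 0.5 at t = 0, tending to 0 for large t
    return gate * g + (1.0 - gate) * h


# Hypothetical parameters: f grows with the input; g depends on the state, h is fixed.
params = {"f": (0.0, 1.0, 0.0), "g": (0.5, 0.0, 1.0), "h": (0.0, 0.0, -1.0)}

state = 0.0
# Irregularly spaced readings: (input value, seconds since the previous reading)
for u, gap in [(1.0, 0.1), (0.2, 2.5), (0.8, 0.4)]:
    state = cfc_update(state, u, gap, params)
    print(f"gap={gap:>4}s  state={state:+.4f}")
```

Because the elapsed time t enters the formula directly, irregularly spaced readings need no special handling: the loop simply passes the actual gap between samples.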

Why Liquid Neural Networks Matter For Enterprises

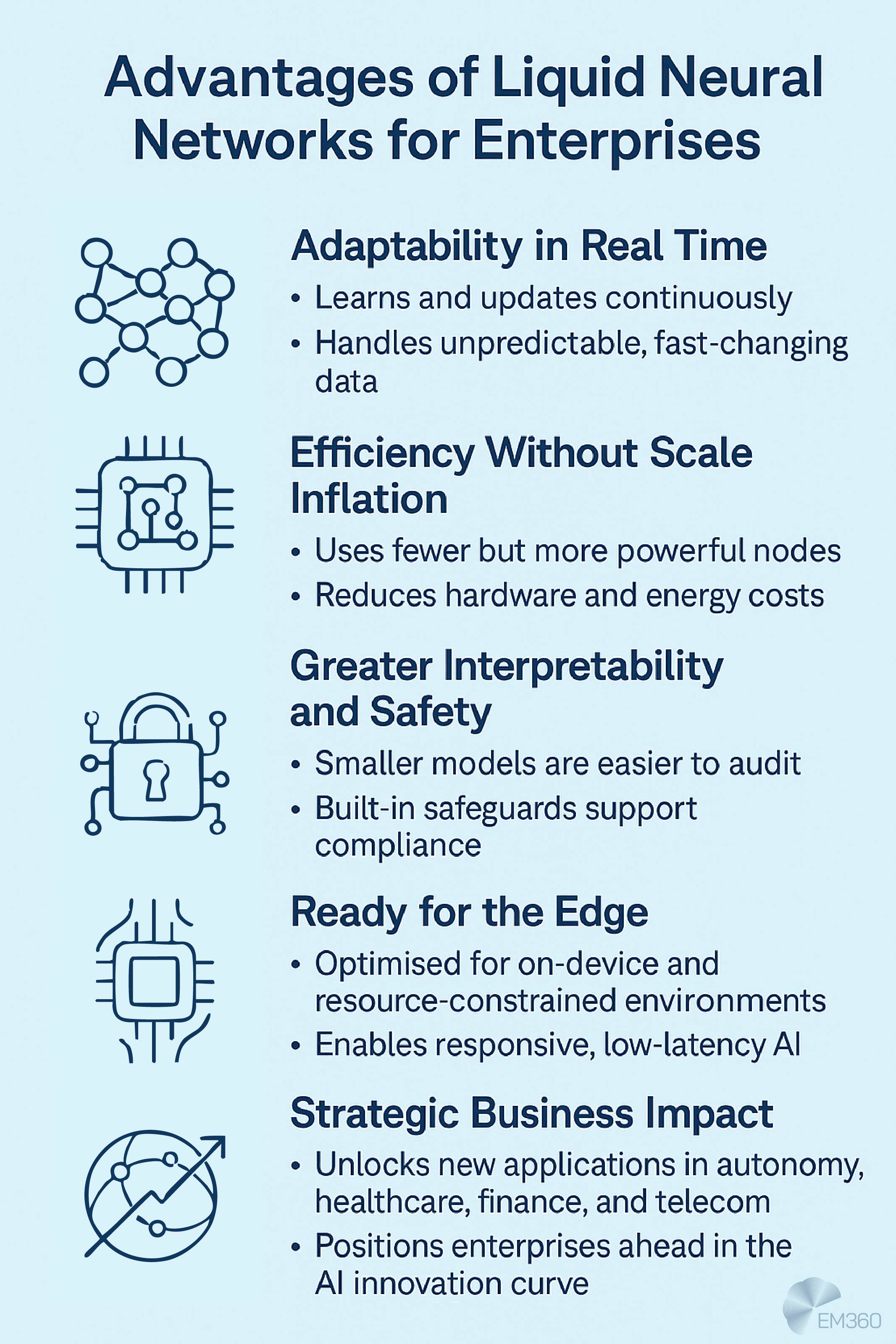

Adopting a new type of neural network is never about technology alone; it must deliver a clear business case. For enterprises, LNNs promise adaptability to real‑world variation, efficiency on limited hardware and a level of transparency that fits regulatory demands. In an age of streaming data and edge computing, those qualities are highly prized.

Adaptability in real‑time environments

Most enterprise data arrives as a stream rather than a snapshot: sensor readings, financial transactions, network packets. Traditional models trained offline tend to struggle when the world they learned no longer matches reality. Liquid networks are designed for change.

Because their equations update as new inputs arrive, they can adapt on the fly. In tests at MIT, the same liquid network guided a drone through forests in summer and winter, through shadows and glare, and still found its way.

It could even handle video footage that had been rotated and noise‑distorted, something that would trip up many other models. For enterprises facing shifting fraud patterns, fluctuating supply chains or seasonal customer behaviour, that ability to keep up without constant retraining could be a major advantage.

Efficiency without scale inflation

There is a widespread belief that bigger models are always better. Liquid networks challenge this assumption. By encoding complex dynamics in compact equations, they accomplish more with less.

When Ramin Hasani’s team replaced a deep network with a liquid network in a self‑driving project, they reduced the neuron count from tens of thousands to around ninety. Closed‑form versions of LNNs further improve speed and energy efficiency. This lean approach translates into lower power consumption and makes it possible to run sophisticated models on smartphones, drones and industrial sensors.

Enterprises interested in edge AI – processing data where it is produced rather than sending it to the cloud – can therefore deploy LNNs without the overhead of datacentre‑grade hardware.

Enhanced interpretability and safety

Regulators and customers are demanding more transparent and reliable AI systems. Liquid networks offer a path forward because their behaviour is governed by equations that can be inspected and understood. This clarity matters when an algorithm influences safety‑critical decisions.

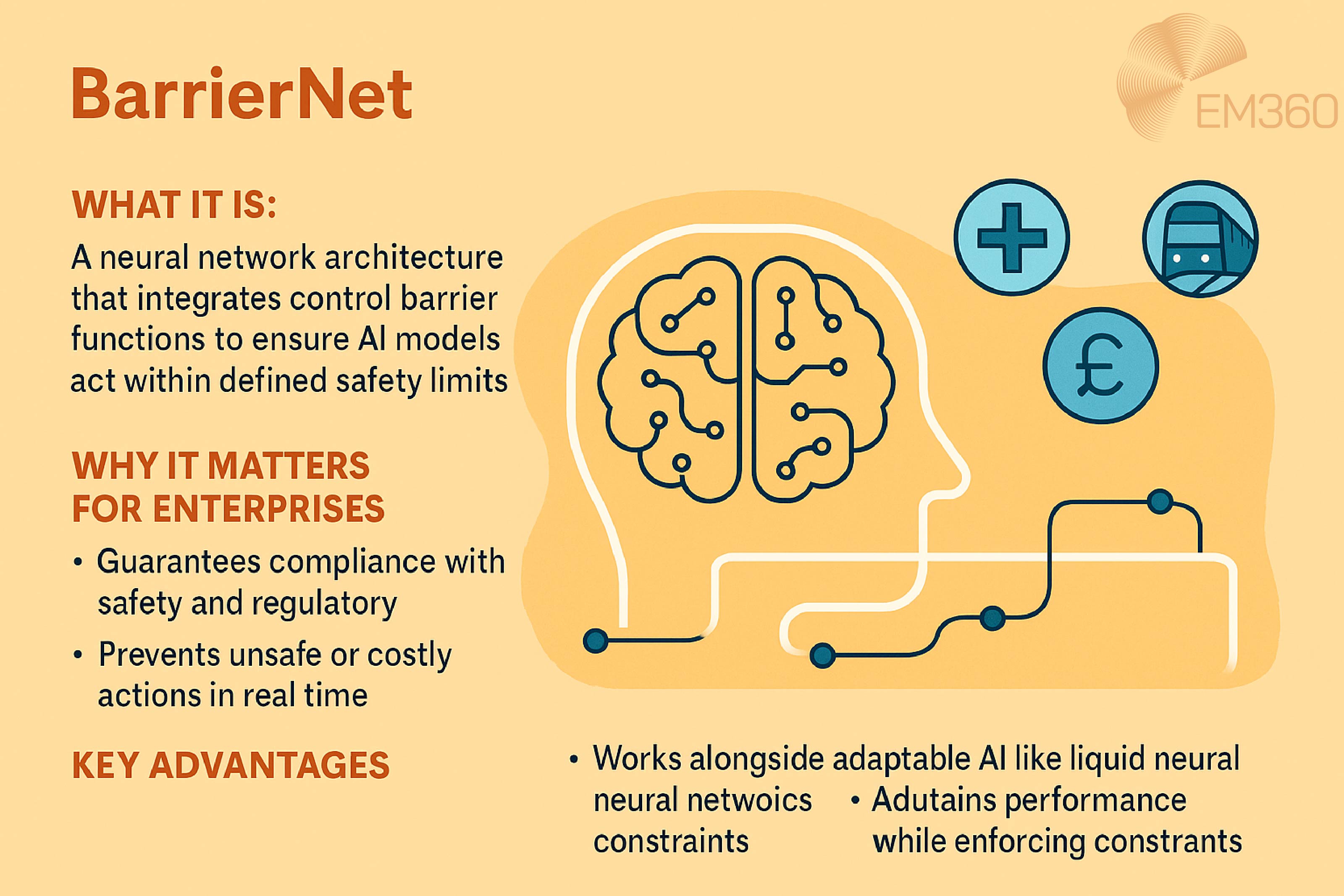

Researchers have gone a step further with BarrierNet, a method that wraps a neural controller in mathematical safety constraints while still allowing the model to adapt. In essence, it acts like a seatbelt for AI: the network can explore different behaviours, but the safety layer keeps it from crossing a defined boundary.
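
The seatbelt idea can be illustrated with a control barrier function (CBF), the mathematical tool BarrierNet builds on. BarrierNet itself embeds differentiable, trainable versions of these constraints inside the network; the sketch below is only a hand‑written CBF filter for a hypothetical one‑dimensional system whose position x must stay below a boundary x_max, with a constant command standing in for the learned controller. All names and numbers are illustrative.

```python
def safe_control(x: float, u_nominal: float, x_max: float, alpha: float) -> float:
    """Control barrier function (CBF) filter for the toy system dx/dt = u.

    Safe set: h(x) = x_max - x >= 0. Requiring dh/dt + alpha * h >= 0 gives
    -u + alpha * (x_max - x) >= 0, i.e. u <= alpha * (x_max - x).
    Taking the minimum is the one-dimensional solution of the usual
    'stay as close as possible to u_nominal' quadratic program.
    """
    return min(u_nominal, alpha * (x_max - x))


def simulate(steps: int = 200, dt: float = 0.01, x_max: float = 1.0, alpha: float = 5.0) -> list:
    """Euler-integrate the system under a hypothetical controller that always pushes forward."""
    x, trajectory = 0.0, []
    for _ in range(steps):
        u_nominal = 3.0  # stand-in for a learned controller's (possibly unsafe) command
        x += dt * safe_control(x, u_nominal, x_max, alpha)
        trajectory.append(x)
    return trajectory


path = simulate()
print(f"max position: {max(path):.5f} (boundary at 1.0)")
```

However hard the nominal controller pushes, the filtered command slows the system as it nears the boundary, so the position approaches 1.0 without crossing it. In this simple Euler simulation the guarantee also relies on dt·alpha ≤ 1, which holds here (0.01 × 5 = 0.05).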

For industries such as healthcare, aviation and autonomous driving, combining the adaptability of LNNs with formal safety guarantees can satisfy both innovation and compliance teams.

Latest Developments And Industry Momentum

What began as an academic curiosity is now moving into products and attracting serious investment. Understanding these developments helps technology leaders assess the maturity of liquid networks and plan pilot projects accordingly.

Research to product pipelines

The research team behind LNNs has spun out a company called Liquid AI, which is building Liquid Foundation Models (LFMs). The first generation of these models promised efficient, general‑purpose AI that runs on consumer‑level hardware.

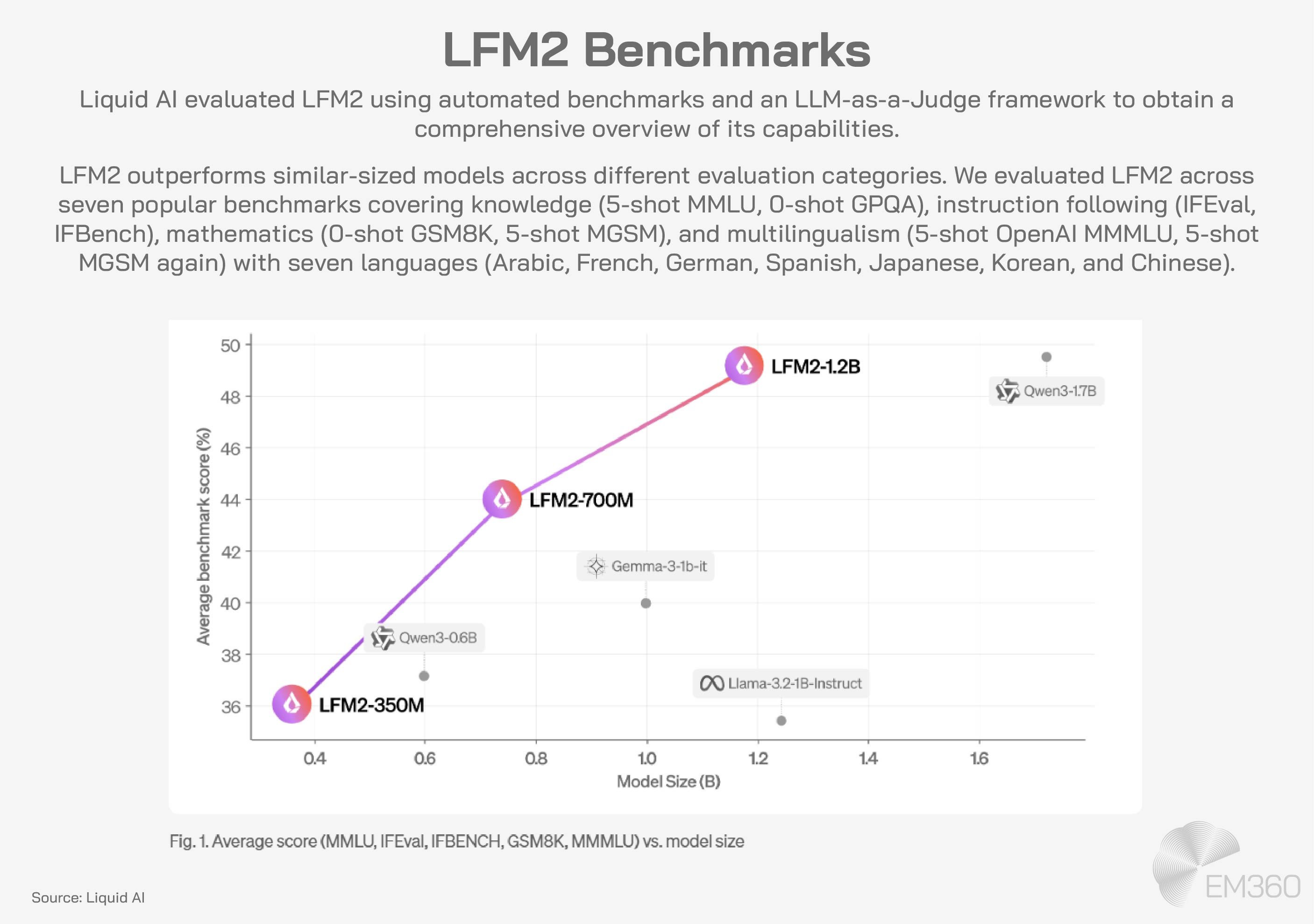

In July 2025, Liquid AI announced LFM2, a second‑generation model that reportedly delivers twice the decode and prefill speed of comparable models on CPUs and three times the training efficiency of its predecessor.

These models are designed to run across phones, laptops, vehicles and satellites, bringing language and vision capabilities to the edge. While vendor claims always need independent testing, the fact that a research idea has produced two commercial releases is noteworthy and signals momentum.

Capital and strategic partnerships

Investors and hardware partners have taken notice. In December 2024, Liquid AI raised $250 million in a funding round led by AMD. The partnership aims to optimise the models across AMD’s CPUs, GPUs and neural processing units, tailoring the algorithms to the underlying hardware.

The round reportedly valued the company at over $2 billion and underscores its goal of building general‑purpose AI systems powered by liquid networks. For enterprises, such investment signals that the technology is moving out of the lab and into the realm of commercial viability.

Sector‑specific trials and benchmarks

Outside of product announcements, researchers have tested liquid networks in various domains. MIT’s drone experiments showed that LNN‑powered agents could navigate forests and urban environments better than state‑of‑the‑art baselines when facing noise, occlusion and rotation.

Training in summer and deploying in winter posed no problem; the network transferred its learning across seasons and terrain. In the telecom sector, a 2025 study highlighted how LNNs address robustness and interpretability challenges in wireless networks and presented case studies of improved performance in resource allocation.

These examples show that the architecture’s benefits are not limited to robotics but extend to communication networks, predictive maintenance and other sequential data tasks.

Key Enterprise Applications Of LNNs

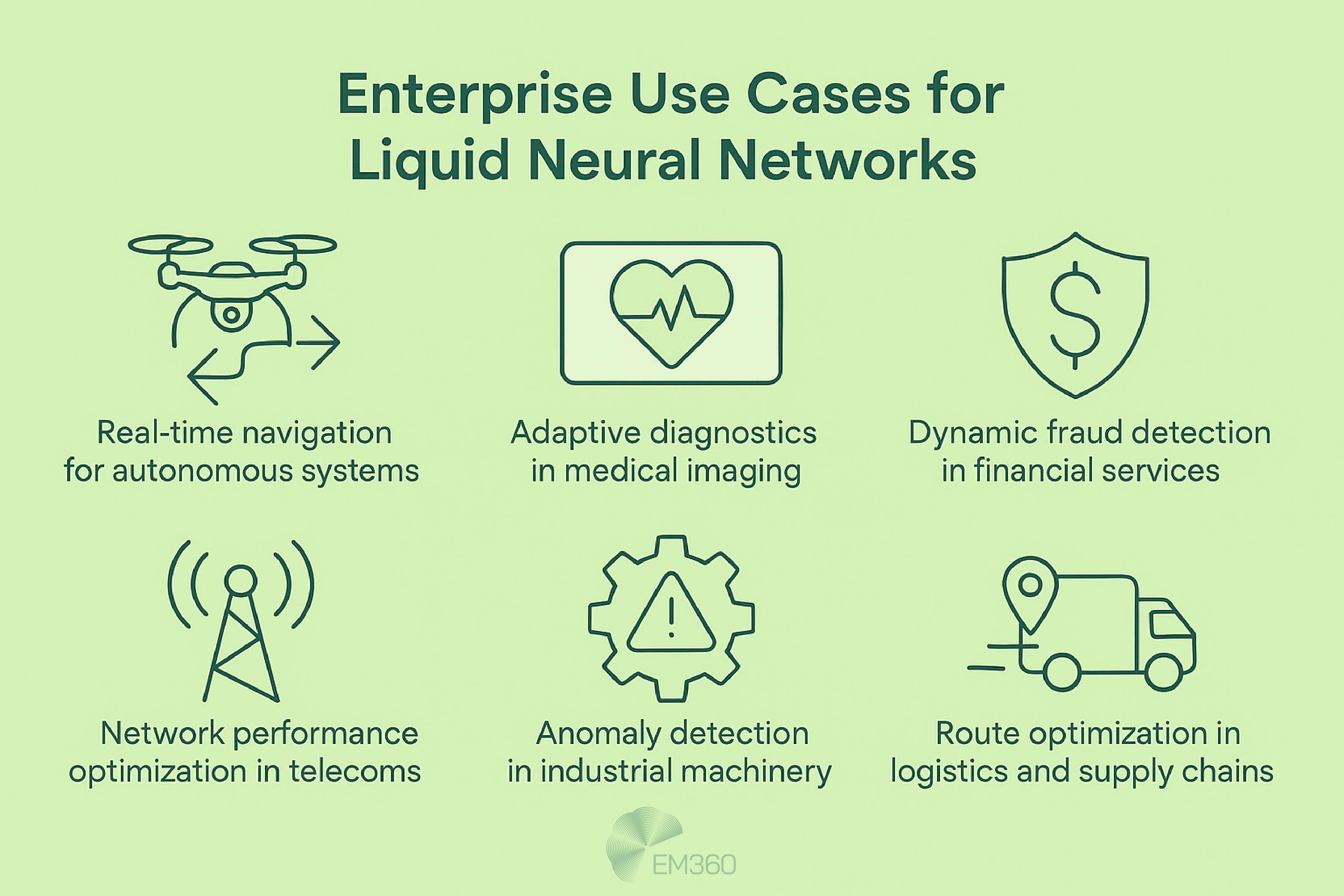

So where might liquid networks deliver immediate value? While the technology is still maturing, several application areas stand out.

Autonomy and robotics

Autonomous machines must make decisions in uncertain and changing physical environments. Liquid networks are a natural fit because they learn to focus on causal relationships – the factors that matter – and ignore irrelevant details.

In a self‑driving demonstration, a liquid network with nineteen control neurons steered a car by focusing on the road horizon rather than distracting elements like trees and bushes. Drone experiments showed similar adaptability across different seasons and lighting conditions.

These qualities are valuable for industrial robots that operate on busy factory floors, logistics robots that navigate dynamic warehouses and unmanned aerial vehicles used in search‑and‑rescue operations.

Healthcare and medical imaging

Healthcare data often arrives as streams: heartbeats, brain waves, glucose levels. Liquid networks’ ability to model continuous time makes them a promising tool for monitoring and diagnosing patients. The original liquid network paper suggested medical diagnosis as a prime application because the model can adapt as a patient’s condition changes.

Imagine an electrocardiogram monitoring system that adjusts its predictions as it observes a patient’s heart over time, alerting clinicians when patterns deviate from the norm. In imaging, LNNs could improve analysis of ultrasound or MRI sequences by recognising subtle changes across frames.

Though clinical trials are still needed, the combination of adaptability and interpretability aligns well with the sector’s stringent requirements.

Financial and telecom applications

Financial markets and telecommunications networks produce high‑frequency data that can change suddenly. Standard models often underperform when the underlying patterns shift. Liquid networks offer a way to keep up. In finance, they could track changing trading regimes, spot anomalies and adapt to new fraud patterns.

In telecom, they could optimise resource allocation, predict congestion and reduce latency. The 2025 telecom study noted that existing AI solutions struggle in dynamic wireless environments, whereas LNNs improved robustness and interpretability.

Because they run efficiently, these models can be deployed at the network edge, providing real‑time decision making without overloading central systems.

Challenges And Limitations

No technology is a silver bullet, and liquid networks have their own limitations. Being realistic about these issues helps enterprises plan effectively.

Data‑type constraints

Liquid networks shine on problems involving continuous or sequential data. They currently do not outperform specialised architectures on tasks like static image classification. This shortcoming stems from their reliance on differential equations that expect signals changing over time.
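
One way to see why static inputs play to a liquid network’s weaknesses is to look at what its equation does when the input never changes. Take the liquid time‑constant (LTC) equation from the original LTC paper, with hidden state x, a constant input I, per‑neuron time constant τ, learned bias vector A and a gate network f with parameters θ (assumed here to have no explicit dependence on time). Setting the derivative to zero gives the resting state:

$$
\frac{d\mathbf{x}}{dt} = -\left[\frac{1}{\tau} + f(\mathbf{x}, \mathbf{I}, \theta)\right] \odot \mathbf{x} + f(\mathbf{x}, \mathbf{I}, \theta) \odot A = 0
\;\Longrightarrow\;
\mathbf{x}^{*} = \frac{\tau\, f(\mathbf{x}^{*}, \mathbf{I}, \theta)}{1 + \tau\, f(\mathbf{x}^{*}, \mathbf{I}, \theta)} \odot A
$$

Here the fraction is taken element‑wise. With a single static image, the state simply relaxes towards a fixed point of this kind, so the input‑dependent timing that gives liquid networks their edge has little to work with. Treat this as an intuition rather than a formal result; when f depends strongly on x, the fixed point need not be unique.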

Researchers are exploring hybrid models that pair convolutional front‑ends with liquid cores, but these remain experimental. Moreover, while LNNs can adapt post‑training, they still need training data that captures a reasonable range of scenarios.

Enterprises should therefore apply them to time‑series problems rather than expecting them to replace all neural networks.

Training and implementation challenges

Building and deploying LNNs involves solving or approximating differential equations, which can be numerically delicate. Hyperparameters such as time constants and solver step sizes require careful tuning. Closed‑form models alleviate some of these issues, but the tooling ecosystem is not yet as mature as that for transformers and convolutional networks.
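
The step‑size sensitivity is easy to reproduce on a single, deliberately stiff LTC neuron (τ = 0.01). The sketch below compares plain explicit Euler with the fused semi‑implicit step proposed in the original LTC paper, x ← (x + Δt·f·A) / (1 + Δt·(1/τ + f)). To keep it short, the gate f depends only on a constant input; the function names and parameter values are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def euler_step(x, u, dt, tau, A):
    """Explicit Euler step for dx/dt = -(1/tau + f) * x + f * A."""
    f = sigmoid(u)
    return x + dt * (-(1.0 / tau + f) * x + f * A)


def fused_step(x, u, dt, tau, A):
    """Fused semi-implicit step: the decay term is treated implicitly."""
    f = sigmoid(u)
    return (x + dt * f * A) / (1.0 + dt * (1.0 / tau + f))


def run(step, dt, steps=50, tau=0.01, A=1.0, u=0.0):
    """Integrate from x = 0 with a constant input u."""
    x = 0.0
    for _ in range(steps):
        x = step(x, u, dt, tau, A)
    return x


equilibrium = 0.5 / (1.0 / 0.01 + 0.5)  # f = sigmoid(0) = 0.5, so x* = f*A / (1/tau + f)
print("Euler, dt=0.05: ", run(euler_step, 0.05))              # oscillates and blows up
print("Fused, dt=0.05: ", run(fused_step, 0.05))              # settles at the equilibrium
print("Euler, dt=0.001:", run(euler_step, 0.001, steps=500))  # stable, but 10x more steps
print("Equilibrium:    ", equilibrium)
```

Both stable runs settle on the same equilibrium, f·A / (1/τ + f); only the step rule and step size differ. Explicit Euler needs a far smaller step on stiff neurons, which is one reason step sizes must be tuned carefully and why solver‑free closed‑form variants are attractive.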

Training can take longer, especially when high‑order ODE solvers are used to ensure accuracy. Enterprises need to budget extra time for experimentation and be prepared to work with a nascent set of libraries and frameworks.

Future Outlook For Liquid Neural Networks

What comes next for liquid networks? Several trends suggest they could become a staple of the AI toolkit in years to come.

Integration with generative AI and edge computing

Generative AI has captured imaginations, but it often demands large models and cloud‑scale infrastructure. Liquid networks could provide a bridge to deploy generative capabilities on devices. Liquid AI’s LFM2 aims to combine the compositional power of generative models with the efficiency and adaptability of liquid networks.

Running such models locally promises low latency, data privacy and resilience when connectivity is limited. As companies look to embed intelligence into everyday products – from smartphones to autonomous vehicles – the marriage of LNN adaptability with generative synthesis could open new possibilities.

Path toward industry standards and safety frameworks

For AI systems to operate in high‑stakes domains, standards and safety frameworks are essential. The BarrierNet approach illustrates how liquid networks can be coupled with formal safety guarantees. Future research will likely expand these techniques and develop benchmarks for robustness, interpretability and environmental impact.

With regulators paying close attention to AI safety, the ability to prove properties about a model’s behaviour could accelerate adoption in sectors that would otherwise be cautious.

Final Thoughts: Adaptability Will Define the Next Wave of AI

Liquid neural networks add a new chapter to the story of artificial intelligence by drawing inspiration from natural systems and embracing continuous dynamics.

They promise machines that don’t just learn once and freeze but keep learning as the world changes. The technology’s strengths – adaptability to real-world variation, efficiency on resource-constrained hardware and a pathway to safety and interpretability – align closely with enterprise priorities.

At the same time, challenges remain: these networks excel on time-series data but are not universal solutions, and the tooling and expertise around them are still maturing. Commercial momentum, illustrated by new products and significant investment, suggests that the field is rapidly moving forward.

Enterprise leaders should therefore identify sequential data problems within their organisations, run small pilots and build internal know-how. By preparing now, they can be ready to harness adaptable AI when liquid networks reach their full potential. And by staying connected with expert insights from EM360Tech, they can ensure those strategies are informed by the latest market intelligence and real-world use cases.
