AI isn’t a side project anymore. It’s turning up inside customer support workflows, sales tooling, fraud detection, software delivery, HR screening, and analytics pipelines that executives rely on to make calls quickly. That’s the upside.

The downside is that AI introduces a different kind of organisational risk: decisions can become harder to explain, ownership can get messy fast, and small design choices can create outsized reputational and regulatory consequences.

ISO/IEC 42001 exists for that exact reality. It’s a way to put AI governance on rails, so responsible AI isn’t something you remember to do after the incident report lands. It becomes a system you run on purpose.

What Is ISO 42001?

ISO/IEC 42001:2023 is an international standard for an Artificial Intelligence Management System (AIMS). In plain terms, it sets requirements and provides guidance for how an organisation should establish, implement, maintain, and continually improve a management system for the responsible development, provision, or use of AI systems.

That wording matters. ISO 42001 is not a technical standard that tells you how to build a model, which algorithm to choose, or what “good accuracy” looks like. It’s a governance standard. It focuses on the organisational structures, policies, processes, and accountability mechanisms that help you manage AI consistently at scale.

It’s also positioned as a certifiable management system standard, which means it’s designed to be assessed against defined requirements. That matters for enterprises that need repeatable controls, auditable evidence, and a shared language across business units and suppliers.

Why ISO 42001 Exists

The pressure isn’t just “more AI”. It’s AI spreading across the organisation faster than governance can keep up.

In many enterprises, AI capabilities appear in three places at once:

- First, teams build or customise AI internally, usually to improve speed and decision-making.

- Second, vendors embed AI into platforms you already use, sometimes as default functionality.

- Third, business functions adopt AI tools directly, especially in marketing, customer success, analytics, and sales operations.

When those three streams collide, the risk is rarely “the model is wrong” in isolation. The bigger issue is governance friction:

- Who owns the decision to deploy an AI feature in a critical workflow?

- Who signs off on the risks to customers, employees, or partners?

- How do you monitor outcomes over time, especially when data, user behaviour, and business context change?

ISO 42001 exists because organisations need a management system that can answer those questions without reinventing the wheel for every new AI use case. It is framed as a systematic approach to managing AI within a recognised management system framework, covering topics such as ethics, accountability, transparency, and data privacy.
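To make the three governance questions above more concrete, here is a minimal sketch of an AI system register entry and a check that flags missing answers. ISO 42001 does not prescribe this data structure. The names `AISystemRecord`, `Stage`, and `governance_gaps`, the example supplier, and the 180-day review window are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum
from typing import List, Optional


class Stage(Enum):
    """Hypothetical lifecycle stages for an AI system."""
    DESIGN = "design"
    DEPLOYED = "deployed"
    RETIRED = "retired"


@dataclass
class AISystemRecord:
    """One illustrative register entry; every field name is an assumption."""
    name: str
    stage: Stage
    owner: Optional[str] = None          # who owns the decision to deploy
    risk_approver: Optional[str] = None  # who signed off on the risks
    supplier: Optional[str] = None       # set when the AI is embedded in a vendor platform
    last_review: Optional[date] = None   # most recent outcome-monitoring review


def governance_gaps(
    record: AISystemRecord,
    today: date,
    max_review_age_days: int = 180,  # assumed review cadence, not taken from the standard
) -> List[str]:
    """Return the governance questions this record cannot yet answer."""
    if record.stage is Stage.RETIRED:
        return []
    gaps: List[str] = []
    if not record.owner:
        gaps.append("no accountable owner")
    if not record.risk_approver:
        gaps.append("no risk sign-off")
    if record.stage is Stage.DEPLOYED:
        if record.last_review is None:
            gaps.append("never reviewed since deployment")
        elif today - record.last_review > timedelta(days=max_review_age_days):
            gaps.append("review overdue")
    return gaps


record = AISystemRecord(
    name="support-chat-assistant",
    stage=Stage.DEPLOYED,
    owner="Head of Customer Support",
    supplier="ExampleVendor",  # hypothetical third-party platform
    last_review=date(2024, 1, 10),
)
print(governance_gaps(record, today=date(2024, 9, 1)))
# ['no risk sign-off', 'review overdue']
```

The point of the sketch is the shape rather than the tooling: ownership, sign-off, and monitoring become fields that can be checked for every AI use case, whether it was built in-house, embedded by a vendor, or adopted directly by a business function.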

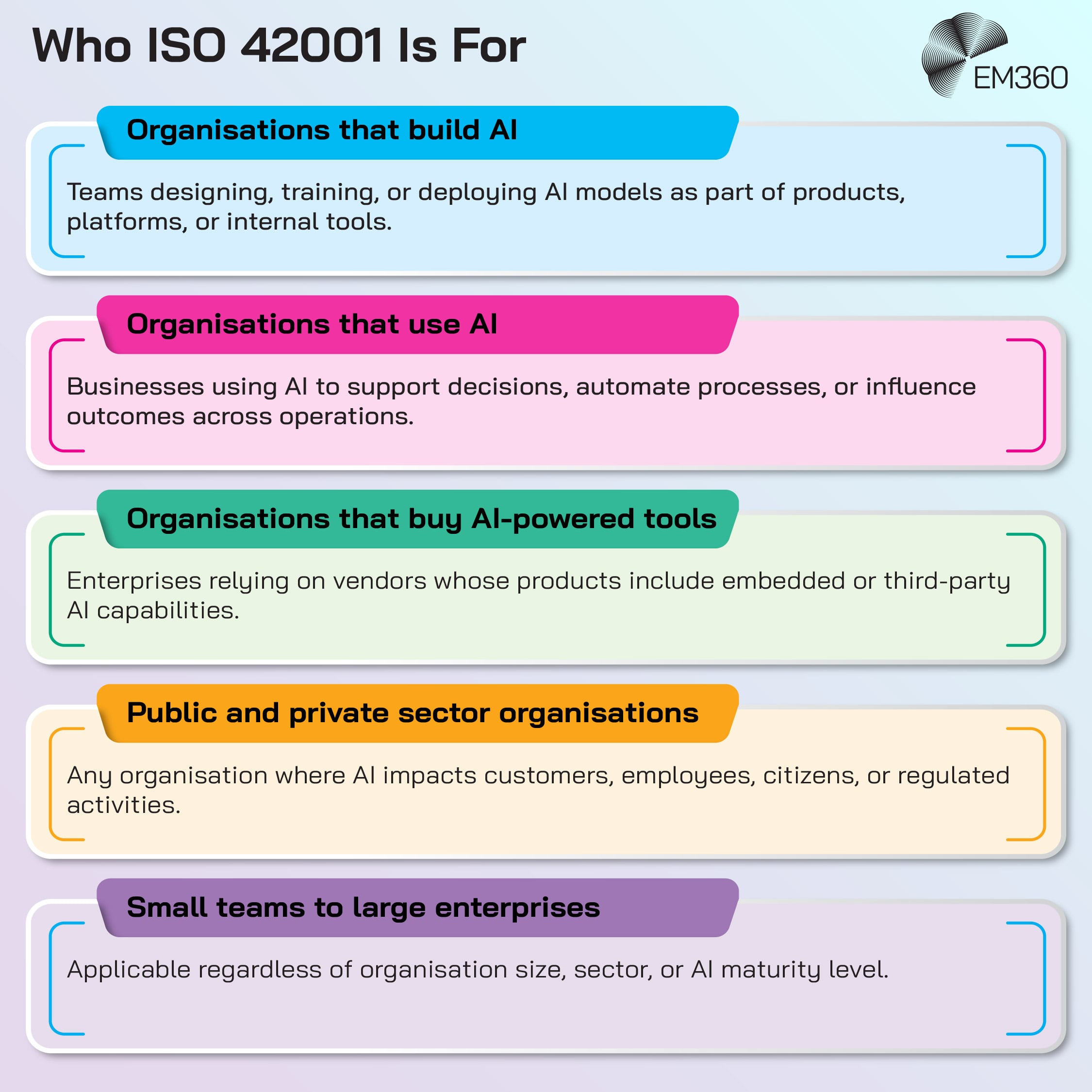

Who ISO 42001 Is For

ISO 42001 is intended for any organisation that develops, provides, or uses AI systems. That includes enterprises building AI products, organisations deploying AI internally, and businesses managing AI delivered through third-party platforms.

That breadth is deliberate. A lot of enterprise AI risk doesn’t come from a data science team training a model from scratch. It comes from decisions like:

- Turning on an AI feature in a customer-facing product without a clear accountability path.

- Using AI-generated outputs in regulated communications.

- Letting automated decision support shape outcomes in hiring, lending, healthcare, or security operations.

If your organisation is making decisions with AI, or letting AI influence decisions, you’re in the governance space ISO 42001 is built for.

What ISO 42001 Covers at a High Level

ISO 42001 is best understood as a set of management system requirements focused on how you govern AI across its lifecycle.

At a high level, it’s designed to help organisations create a structured approach that includes:

- Clear governance and leadership accountability for AI decisions.

- A risk-based approach to planning and controls, so you can identify and manage AI risk consistently.

- Lifecycle thinking, so AI systems are managed from design through deployment, monitoring, change management, and retirement.

- Oversight of third parties, because suppliers and embedded AI capabilities can introduce material risk even when you didn’t “build the AI”.

- Continual improvement, which is essential for AI because the risk profile can shift as inputs, users, and business contexts change.

If you’ve worked with management systems before, the shape will feel familiar. The difference is the focus. ISO 42001 is built around AI-specific governance needs such as transparency, accountability, and managing impacts that aren’t always visible at deployment time.

How ISO 42001 Fits With Existing ISO Standards

ISO 42001 follows a management system approach, which means it’s designed to integrate into the way enterprises already run governance for risk-heavy domains.

The most common comparison is ISO/IEC 27001 for information security management. The point isn’t that “AI governance equals security governance”. The point is that enterprises already know how to run a management system that is policy-led, risk-based, evidence-driven, and continually improved. ISO 42001 is built to be compatible with that mindset.

This is one reason ISO 42001 can be practical for large organisations. It gives you a framework that can align with existing audit cadences, governance committees, and risk processes, rather than creating a parallel world where AI governance lives in a separate silo.

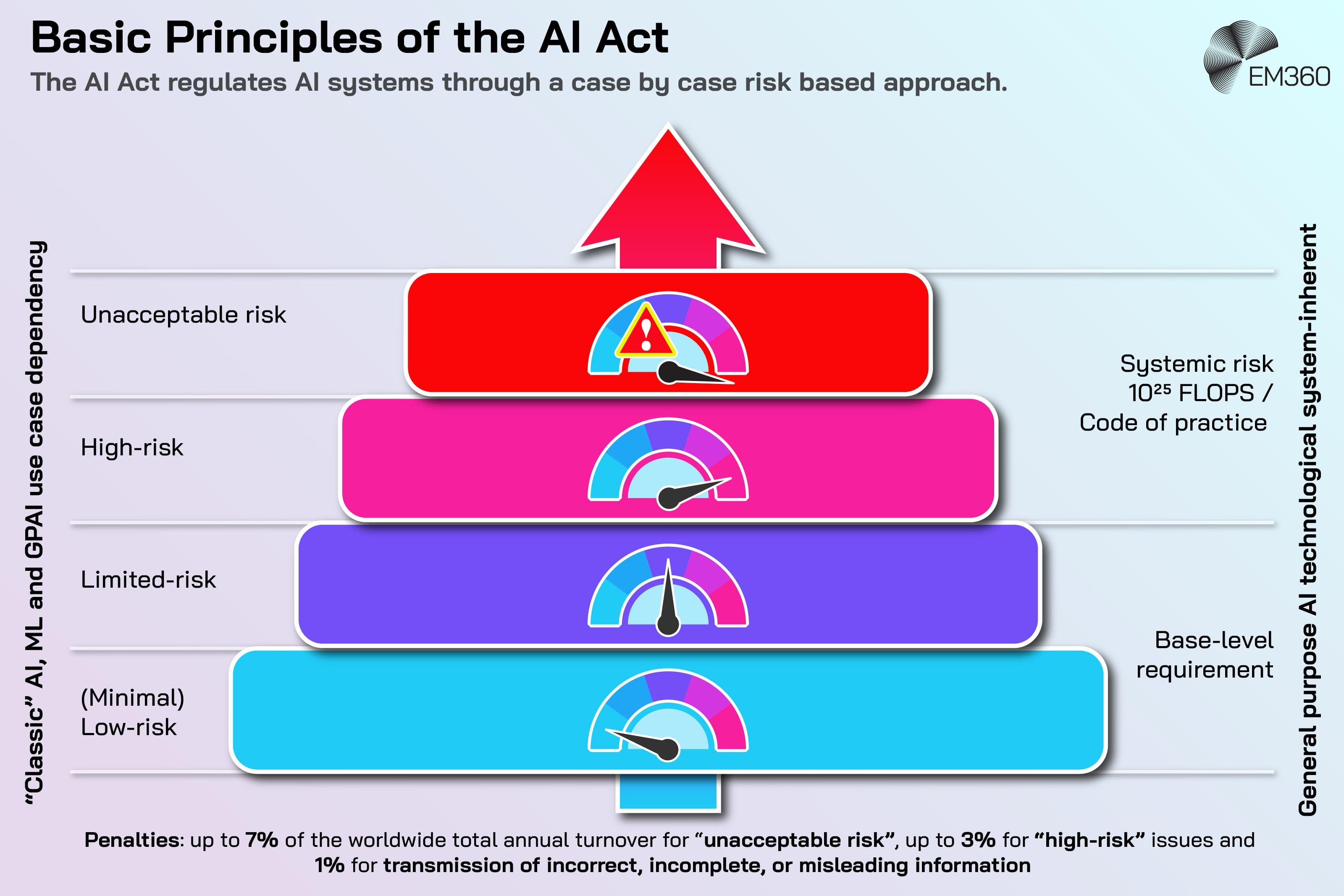

ISO 42001 and AI Regulation

ISO 42001 is voluntary. It isn’t a law, and adopting it doesn’t automatically make you compliant with any specific regulation.

What it can do is help organisations build the kind of governance posture regulators increasingly expect to see: documented processes, consistent risk management, clear accountability, and lifecycle oversight.

That expectation is visible in the EU AI Act’s approach to high-risk AI systems, which includes requirements for a risk management system that runs across the AI system’s lifecycle and is regularly reviewed and updated.

The key takeaway for enterprise leaders is this: regulation is pushing organisations toward demonstrable, repeatable AI governance. ISO 42001 is one way to create the operating discipline that makes that governance easier to run, measure, and evidence when questions land from regulators, customers, or your own board.

Why ISO 42001 Matters for Enterprise Leaders

If you’re leading AI adoption, ISO 42001 matters even if certification isn’t on your roadmap.

It gives you a clear way to think about AI risk management without reducing everything to technical controls. That’s important because enterprise AI risk is often organisational first:

- Decision-making can become harder to explain, which weakens trust internally and externally.

- Ownership can fragment across product, data, legal, security, and operations, which slows response when something goes wrong.

- AI capabilities can scale faster than oversight, which creates a gap between what the business is doing and what leadership can defend.

ISO 42001 is a signal that AI governance is becoming a leadership discipline. The organisations that treat it that way will move faster with less chaos, because they’ll have clearer boundaries, clearer accountability, and more consistent decision-making under pressure.

Final Thoughts: ISO 42001 Turns AI Governance Into a System, Not a Reaction

ISO 42001 exists because AI is now part of how enterprises operate, not just how they innovate. Once AI is embedded in day-to-day decisions, governance can’t be a series of one-off conversations and rushed sign-offs. It has to be an intentional management system that scales with the technology and the business.

The forward-looking reality is that AI governance maturity will become a differentiator. Not because it sounds responsible, but because it makes AI adoption sustainable when scrutiny rises, incidents happen, and stakeholders want answers fast.

If you’re tracking where AI standards and regulation are heading, EM360Tech keeps a close eye on the frameworks enterprises are adopting to make responsible AI workable at scale, so you can stay grounded as expectations shift.
