In 2026, most large organisations are no longer debating whether to use AI. They are trying to keep up with what they’ve already unleashed. Generative tools sit in daily workflows, agentic systems are starting to act on behalf of people, and non-human identities are multiplying across cloud, data and application estates.

Adoption has raced ahead. Governance has not.

Executives can feel the gap. Surveys over the past year show leaders reporting broad use of AI across the business, while admitting that policies and controls are only partly keeping pace. That tension is reshaping the fundamentals of enterprise AI governance.

The job is no longer to write a policy and hope it sticks. It’s to build a living structure that can track fast, decentralised adoption without inhibiting productivity. For CIOs and CISOs heading into 2026, the question shifts from “Do we have an AI governance framework?” to “Can we grow it in a controlled way?”

The crawl, walk, run approach suggested by Delinea, a 7-time Gartner Magic Quadrant Leader, provides that path.

Crawl Phase - Governance Starts With Visibility and Usable Guardrails

The first fundamental is simple to state and hard to achieve. You can't govern what you can't see. The crawl phase is about visibility and a basic AI governance structure that people will actually use.

At this stage, enterprises should resist the temptation to design a perfect model and focus instead on a minimum viable approach that aligns with the Govern and Map functions of NIST’s AI Risk Management Framework.

Establish the minimum viable governance structure

Crawl is where you decide who makes which decisions. That means defining clear roles for business owners, security, legal, data and risk teams, and agreeing which types of AI use cases they're responsible for reviewing.

It also means categorising AI use into broad model and use case types, from low-risk productivity tools to higher-risk decision-making systems that affect customers or critical infrastructure.

This is where many organisations overcomplicate the work. A heavy, opaque process pushes AI experimentation into the shadows. A lightweight structure with obvious decision rights does the opposite. It signals that governance is here to support delivery, not to block it.

Used well, it becomes the foundation for responsible AI behaviour rather than a checklist that people try to work around.
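To make the idea of decision rights and broad risk categories concrete, here is a minimal sketch. The tier names, the classification rules and the reviewer roles are illustrative assumptions, not something prescribed by Delinea or NIST; a real organisation would set its own.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"        # e.g. everyday productivity tools
    MEDIUM = "medium"  # e.g. internal use that touches sensitive data
    HIGH = "high"      # e.g. decisions affecting customers or critical infrastructure


@dataclass(frozen=True)
class UseCase:
    name: str
    affects_customers: bool = False
    touches_critical_infrastructure: bool = False
    uses_sensitive_data: bool = False


# Hypothetical decision rights: which roles must review each tier.
REVIEWERS = {
    RiskTier.LOW: ("business_owner",),
    RiskTier.MEDIUM: ("business_owner", "security", "data"),
    RiskTier.HIGH: ("business_owner", "security", "data", "legal", "risk"),
}


def classify(use_case: UseCase) -> RiskTier:
    """Assign a coarse tier using assumed, deliberately simple rules."""
    if use_case.affects_customers or use_case.touches_critical_infrastructure:
        return RiskTier.HIGH
    if use_case.uses_sensitive_data:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def reviewers_for(use_case: UseCase) -> tuple:
    """Look up who has to sign off on this use case."""
    return REVIEWERS[classify(use_case)]
```

Keeping the rules this small is the point: anyone proposing a use case can see in advance who will review it and why.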

Build a real inventory of AI usage across the enterprise

The hardest task in the crawl phase isn't policy. It's discovery. Most enterprises still underestimate how much AI is already in use. Teams adopt tools directly, pilots spin up inside business units, and vendors quietly add features that change how data is processed or decisions are made. This decentralised adoption leaves security and risk teams trying to govern a moving target.

A credible inventory starts with simple questions.

- Which teams are using AI tools today?

- Which vendors have added AI features to existing contracts?

- Where are custom models or copilots being trialled?

The goal isn't a perfect list. The goal is a working view of where AI usage is clearly documented, where the inventory is incomplete, and where shadow AI is likely to appear next.

That visibility is what allows the next stages of governance to mean something in practice.
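A working inventory can start as a very small data structure. The sketch below is one assumed shape for it: the field names, the `origin` values and the 50 percent threshold are hypothetical choices made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUsageRecord:
    team: str
    tool: str
    origin: str        # "direct_adoption", "vendor_feature" or "custom_pilot"
    documented: bool   # True once an owner and data flows are recorded


def documentation_coverage(records):
    """Return {team: fraction of that team's AI usage that is documented}."""
    totals = defaultdict(int)
    documented = defaultdict(int)
    for record in records:
        totals[record.team] += 1
        if record.documented:
            documented[record.team] += 1
    return {team: documented[team] / totals[team] for team in totals}


def likely_shadow_ai(records, threshold=0.5):
    """Teams whose documented share falls below the assumed threshold."""
    coverage = documentation_coverage(records)
    return sorted(team for team, share in coverage.items() if share < threshold)
```

The output is not a compliance report. It is a rough pointer to where the next discovery conversation should happen.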

Set policies people can live with

Once you know roughly what is happening, you can start setting guardrails. Crawl phase policies should answer a few basic questions.

- Which kinds of AI tools are allowed, limited or prohibited?

- What data can and can't be shared with external models?

- How should employees handle prompts that involve sensitive or regulated information?

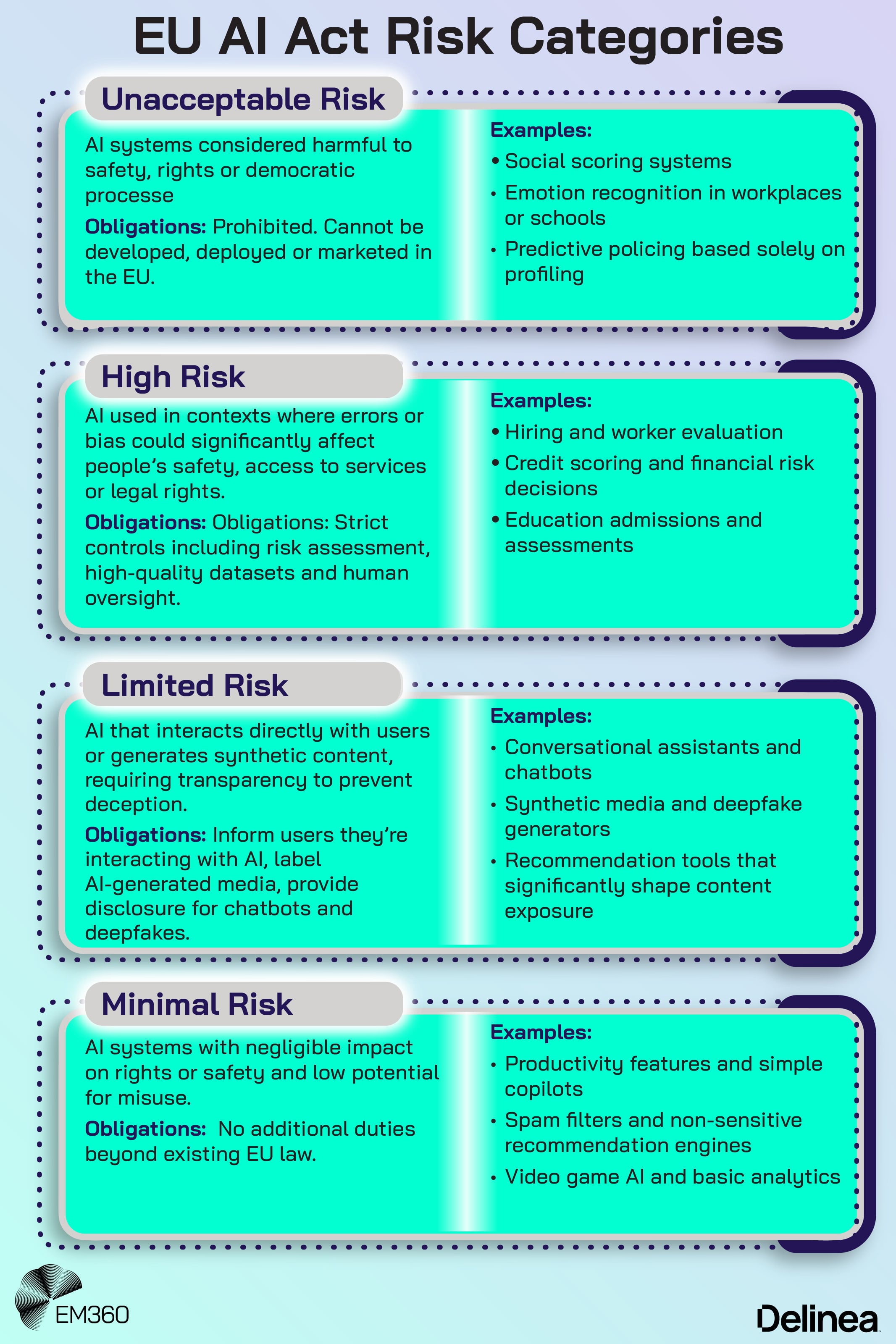

This is also where regulatory alignment begins. Using the EU AI Act’s risk categories as a reference point can help teams decide which use cases require deeper assessment and documentation. Mapping usage types to NIST’s guidance on context, impact and risk helps translate abstract rules into concrete controls.

Crucially, the process for approving or rejecting AI use must be fast. Delinea puts it plainly: if governance slows everyone down, they will simply find ways around it. The crawl phase is successful when people know what the rules are, trust that they're fair, and can get decisions quickly enough to keep moving.
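One way to keep decisions fast is to express the allowed, limited and prohibited categories as data that can be checked automatically. This is a sketch under stated assumptions: the tool names and data classes are placeholders, and treating unknown tools as prohibited is a design choice, not a rule from the source.

```python
from enum import Enum


class ToolStatus(Enum):
    ALLOWED = "allowed"
    LIMITED = "limited"        # permitted, but only with non-sensitive data
    PROHIBITED = "prohibited"


# Hypothetical policy data; tool names and data classes are placeholders.
TOOL_POLICY = {
    "approved-assistant": ToolStatus.ALLOWED,
    "public-chatbot": ToolStatus.LIMITED,
    "unvetted-plugin": ToolStatus.PROHIBITED,
}
SENSITIVE_DATA_CLASSES = {"customer_pii", "regulated_financial", "source_code"}


def may_submit(tool: str, data_classes: set) -> bool:
    """Return True if a prompt containing these data classes may go to the tool."""
    # Tools missing from the policy are treated as prohibited by default.
    status = TOOL_POLICY.get(tool, ToolStatus.PROHIBITED)
    if status is ToolStatus.PROHIBITED:
        return False
    if status is ToolStatus.LIMITED:
        # Limited tools may only receive prompts with no sensitive data.
        return not (data_classes & SENSITIVE_DATA_CLASSES)
    return True
```

A check like this answers the routine cases instantly, which leaves human reviewers free for the use cases that genuinely need judgement.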

Walk Phase - Identity-First Controls for Humans, Machines and Agents

Once you have a handle on where AI is used and what the basic rules are, the fundamentals shift from visibility to control. Walk is where identity-first security becomes central. The question is no longer just “What AI are we using?” It becomes “Who, or what, is AI acting as in our environment?”

Discover and classify all non-human and agentic identities

Non-human identities are not new, but their scale and behaviour have changed. Service accounts, APIs and workloads have now been joined by AI agents that can plan, act and adapt.

Many of these agents are granted broad access using repurposed human credentials or generic machine accounts, which makes it difficult to see when an action was taken by a person and when it was taken by an autonomous system.

Walk phase governance treats these entities with the same priority as human users. That means deliberately discovering where AI agents exist, which credentials they use, and what systems they can reach. It also means classifying them according to risk, purpose and criticality.

The distinction between human credentials and dedicated agent credentials is something that can’t be ignored. Without that separation, any audit trail or control model is blurred from the start.
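A simple check can surface the blurred cases. The sketch below assumes an identity export with a `kind` and a `credential_id` per entry (both hypothetical field names) and flags any credential used by a human and a non-human identity at the same time.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Identity:
    name: str
    kind: str            # "human", "service" or "agent"
    credential_id: str   # identifier of the secret or token in use


def shared_credentials(identities):
    """Return {credential_id: sorted names} for credentials used by both
    a human and at least one non-human identity."""
    holders_by_credential = defaultdict(list)
    for identity in identities:
        holders_by_credential[identity.credential_id].append(identity)

    flagged = {}
    for credential_id, holders in holders_by_credential.items():
        kinds = {holder.kind for holder in holders}
        if "human" in kinds and len(kinds) > 1:
            flagged[credential_id] = sorted(holder.name for holder in holders)
    return flagged
```

Every flagged credential is a place where the audit trail cannot say whether a person or an agent took the action.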

Separate human and machine privileges

Once identities are discovered and classified, governance maturity starts to look like identity separation. Humans and non-human entities should not share accounts, tokens or entitlements. Each should have its own defined scope, with machine identities constrained by the principle of least privilege in the same way as human users.

Technologies such as privileged access management and identity governance, including tools from vendors like Delinea, are not new to security leaders, but their role shifts when AI systems are making decisions. Walk phase governance uses these tools to ensure that AI systems have exactly the access they need, no more and no less, and that elevated rights are granted just in time rather than sitting open and unused.

The detail of how that is implemented will vary, but the strategic point is consistent. Governance that does not differentiate between human and non-human identities is no longer enough.
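As one illustration of just-in-time elevation, the sketch below issues a grant that lapses on its own. It is not how any particular PAM product works; the `Grant` type, the function names and the 15-minute default are assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    identity: str
    permission: str
    expires_at: datetime


def grant_just_in_time(identity, permission, now, ttl=timedelta(minutes=15)):
    """Issue an elevated permission that expires after ttl."""
    return Grant(identity, permission, now + ttl)


def is_active(grant, now):
    """A grant is usable only before its expiry time."""
    return now < grant.expires_at


# Example: an agent gets read access for a single reporting task.
start = datetime(2026, 1, 1, 9, 0, tzinfo=timezone.utc)
grant = grant_just_in_time("report-agent", "sales-db:read", start)
```

Because the grant carries its own expiry, there is no standing privilege left behind if nobody remembers to revoke it.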

Bring AI systems under unified management

The final part of the walk phase is about consolidation. The discovery work from crawl and the identity separation work from earlier in this phase should feed a more unified model for monitoring and control. Enterprises should know which AI systems exist, how they authenticate, what they're allowed to do and how they're being monitored.

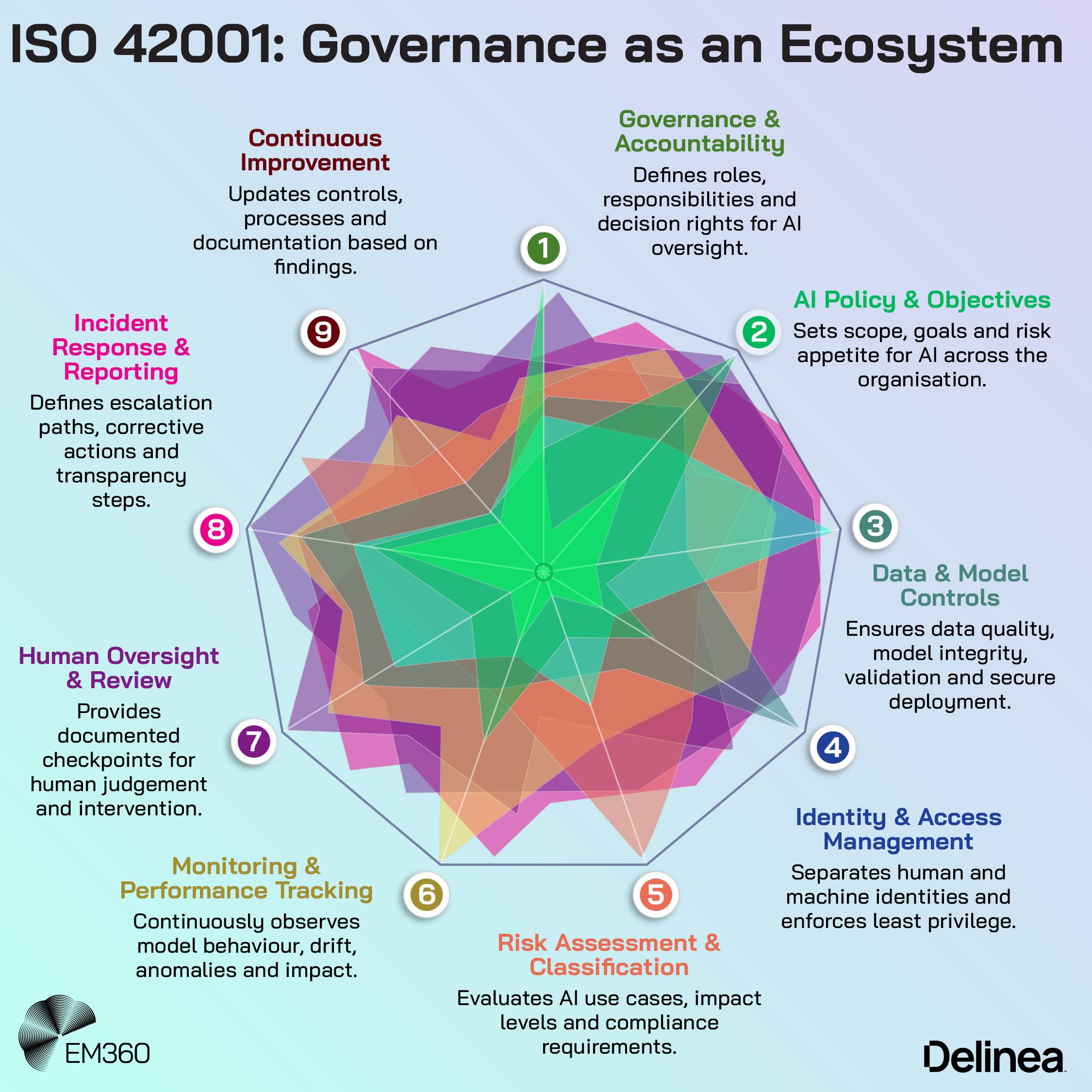

This is where ideas from ISO 42001 and similar management system standards become useful. They encourage organisations to treat AI as something that needs ongoing planning, operation, performance evaluation and improvement, rather than a set-and-forget project.

For security leaders, unifying AI systems under this kind of structure helps reduce policy violations and prepares the ground for the next step. It's much easier to design AI monitoring and control when you are not chasing dozens of point solutions across different teams and platforms.

Run Phase - Intelligent Authorisation and AI-Assisted Governance

Run is where governance moves from static control to adaptive intelligence. At this stage, the fundamentals shift again. The enterprise is no longer just using controls to govern AI. It's starting to use AI to strengthen control.

Use AI to govern AI

Agentic systems behave differently from traditional software. They learn from feedback, chain tasks and, in some cases, initiate actions based on goals rather than explicit instructions. That makes traditional logs and approvals necessary but not sufficient.

Run phase governance introduces AI-assisted governance capabilities that can watch and interpret agent behaviour in context. That might include anomaly detection tuned to AI action patterns, behavioural baselines for agents, and alerts when an agent starts accessing systems or data outside its normal scope.

It also includes tamper-evident logs that record who authorised an action, which identity executed it and which model or agent decided to proceed.

Recent warnings about agentic operating systems and browsers underline why this matters. If agents can read, write and trigger actions across applications, then AI needs to help monitor AI. Human-only review does not scale at the speed and volume of automated activity.
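A common way to make a log tamper-evident is a hash chain, where each entry includes the hash of the one before it. The sketch below uses Python's standard `hashlib` and `json` modules; the entry fields mirror the three questions above (who authorised, which identity executed, which model or agent decided), but the exact schema is an assumption.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same body always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log, authorised_by, executed_by, decided_by, action):
    """Append an entry that is chained to the previous entry's hash."""
    body = {
        "authorised_by": authorised_by,
        "executed_by": executed_by,
        "decided_by": decided_by,
        "action": action,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this only shows that something was altered. It does not prevent alteration, so in practice the latest hash would also need to be stored somewhere the agent cannot write to.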

Move to context-aware authorisation decisions

In the run phase, intelligent authorisation becomes a core capability. Decisions about whether to allow an action are no longer based solely on a role or a static policy. They start to incorporate identity, behaviour and environment.

- Who is requesting access?

- Is it a human or a machine?

- Is this consistent with historical patterns?

- What is the sensitivity of the data or system involved?

Linking these signals together mirrors the Measure and Manage functions in NIST’s AI Risk Management Framework and supports the EU AI Act’s expectation of continuous oversight for higher-risk use cases. The practical benefits are simple. Access decisions become more precise and more defensible.

They can adjust dynamically as the environment changes, without needing constant manual rule updates.
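The four questions above can be combined into a single decision function. This is a deliberately small sketch: the sensitivity scale, the baseline format and the three outcomes are assumptions, and a production system would draw these signals from identity and monitoring tooling rather than a dictionary.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"  # require additional approval before proceeding
    DENY = "deny"


@dataclass(frozen=True)
class AccessRequest:
    identity: str
    is_human: bool
    resource: str
    sensitivity: int  # assumed scale: 1 (low) to 3 (high)


def authorise(request: AccessRequest, baseline: dict) -> Decision:
    """Decide using identity type, historical pattern and data sensitivity.

    baseline maps each identity to the set of resources it normally uses.
    """
    usual_resources = baseline.get(request.identity, set())
    in_pattern = request.resource in usual_resources
    high_sensitivity = request.sensitivity >= 3

    # A machine reaching for sensitive data outside its normal scope is refused.
    if not request.is_human and not in_pattern and high_sensitivity:
        return Decision.DENY
    # Anything unusual or highly sensitive needs an extra check.
    if not in_pattern or high_sensitivity:
        return Decision.STEP_UP
    return Decision.ALLOW
```

Because the baseline is an input rather than a hard-coded rule, the same function gives different answers as observed behaviour changes, without anyone rewriting the policy.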

Prepare for agent intent and decision autonomy

The final fundamental recognises that agentic behaviour isn't a temporary trend. Systems that can interpret goals, plan steps and take action will become more common, not less. Governance that assumes API-to-API connections are always deterministic and under tight human control will fall behind that reality.

Run phase enterprises start designing for intent and autonomy. That includes setting clear boundaries around what an agent is allowed to optimise for, defining explicit “off-limits” zones for agent decision-making, and planning escalation paths where human review is required.

It also means testing cross-API flows for unexpected interactions and building safeguards that prevent apparently minor actions from cascading into critical impact. This isn't about distrusting AI by default. It's about acknowledging that when systems can act with a degree of independence, continuous AI risk management needs to account for that independence directly.
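Boundaries, off-limits zones and escalation paths can be written down as an explicit check that runs before an agent acts. The sketch below is hypothetical throughout: the field names, the three outcomes and the chain-depth cap (a crude guard against small actions cascading) are illustrative choices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentBoundary:
    allowed_objectives: frozenset  # what the agent may optimise for
    off_limits: frozenset          # systems the agent must never act on
    needs_human_review: frozenset  # systems where a person signs off first
    max_chain_depth: int = 3       # cap on chained steps, to limit cascades


def review_action(boundary, objective, target_system, chain_depth):
    """Return "block", "escalate" or "proceed" for a proposed agent action."""
    if objective not in boundary.allowed_objectives:
        return "block"
    if target_system in boundary.off_limits:
        return "block"
    if target_system in boundary.needs_human_review:
        return "escalate"
    if chain_depth > boundary.max_chain_depth:
        return "escalate"
    return "proceed"
```

The value is less in the code than in the exercise of filling it in. An organisation that cannot list an agent's allowed objectives or off-limits systems has not yet defined its boundaries.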

Final Thoughts: Governance Only Works When It Learns as Fast as AI Does

The fundamentals of enterprise AI governance have shifted. Static policies and occasional reviews are not enough when AI is everywhere, acting on behalf of people and interacting with other systems in ways that were not originally planned. A phased approach helps leaders move with intent.

Crawl builds visibility and simple guardrails. Walk brings identity-first control to humans, machines and agents. Run turns governance into a more adaptive system that uses context and AI itself to keep risk within acceptable bounds.

The thread that connects these phases is learning. The organisations that will be comfortable with AI in 2026 are not the ones that avoided risk entirely. They are the ones that treated governance as a living discipline that could expand as quickly as new tools and behaviours emerged.

They will know where AI is used, which identities it's acting as, and how decisions are being made and monitored.

For security and technology leaders, that shift is already under way. Conversations like Delinea’s recent Security Strategist session show how identity-first thinking is evolving to meet agentic systems and non-human identities head on. EM360Tech will continue to surface the practitioners and patterns that make that evolution real.

As you refine your own approach, the most important question isn't how much AI you are using. It's whether your governance is learning fast enough to keep up.
