In a major step toward a future dominated by AI-powered digital assistants, OpenAI on Thursday rolled out a new “agent” feature for ChatGPT, allowing the chatbot to take real-world actions on a user’s behalf. The update signals a significant leap in AI autonomy, positioning ChatGPT not just as a conversational tool but as a decision-making agent capable of interacting with external tools and services, including calendars, shopping platforms, and more.

While the move is being hailed as another milestone in the AI race, it is also sparking serious security and privacy concerns across the tech community.

What is ChatGPT Agent?

The new agent mode enables ChatGPT to “think” and “act” using its own virtual computer. This means users can issue complex commands like:

- Summarise upcoming client meetings from my calendar and relevant news.

- Find a hotel near the wedding venue and suggest five outfit options that match the dress code.

- Find and purchase ingredients for a Japanese breakfast.

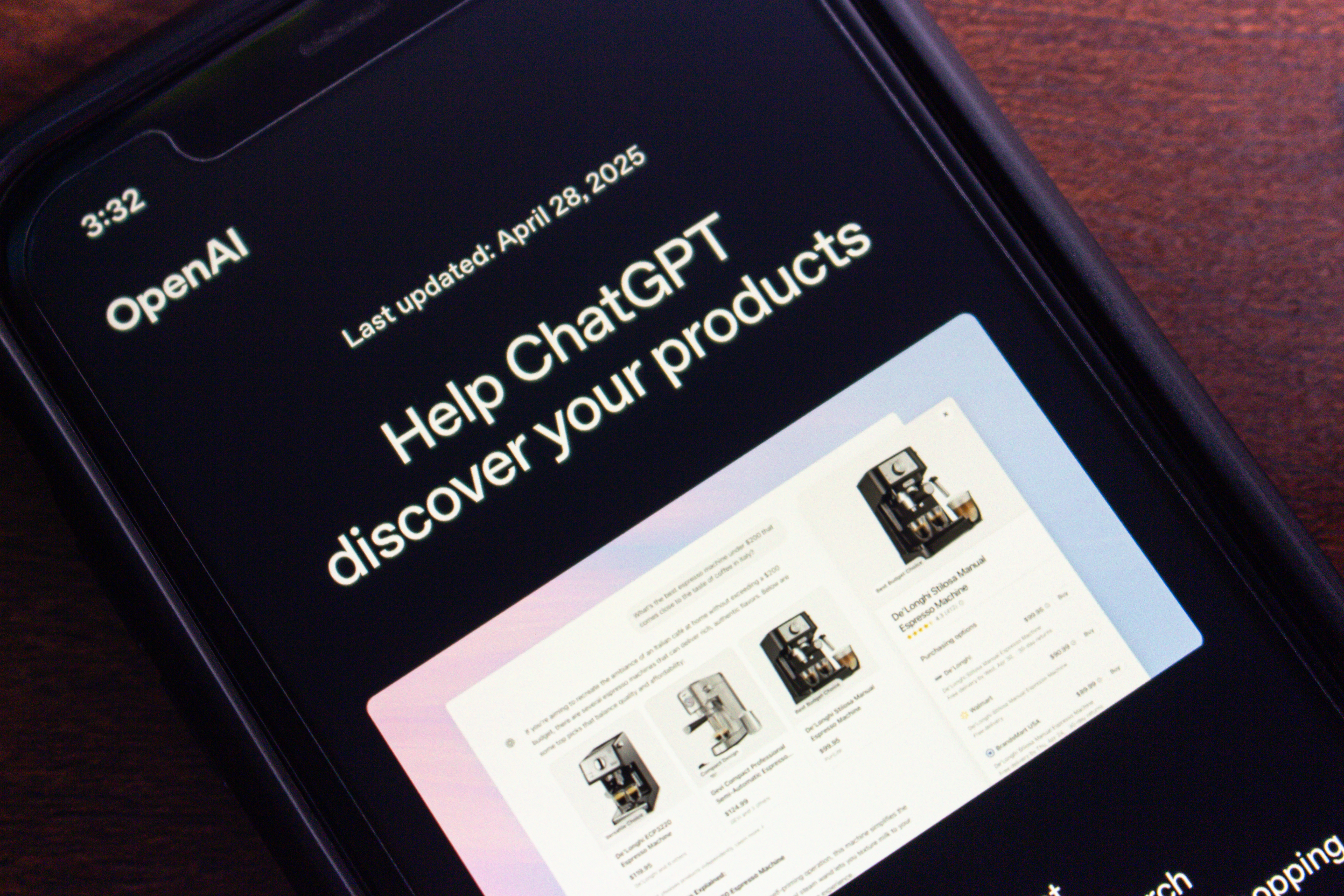

These scenarios reflect OpenAI’s long-term vision of AI as a universal assistant, capable not just of talking but of doing. The assistant can now autonomously browse the web, analyse data, make plans, and even take limited actions like purchasing items or scheduling events, provided it has the necessary permissions.

Available to paying subscribers of ChatGPT’s Pro, Plus, and Team plans, the feature combines OpenAI’s existing “Operator” and “Deep Research” capabilities to create a more complete digital helper.

OpenAI’s Booming User Base

OpenAI’s timing couldn’t be better. As of December 2024, ChatGPT had surpassed 300 million weekly active users, and by early 2025 it had reached 500 million. According to Mary Meeker’s 2025 AI Trends report, ChatGPT reached 800 million weekly active users by April 2025, with projections to surpass 1 billion users by the end of the year. OpenAI’s enterprise offering has also seen rapid adoption, with over 600,000 users across more than 93% of Fortune 500 companies, including global giants like PwC, which deployed ChatGPT Enterprise to 100,000 employees.

With OpenAI’s models also powering Microsoft’s Copilot in tools like Word and Excel, the potential reach of autonomous AI agents is enormous. However, critics warn that this rapid deployment is outpacing the public’s ability to fully understand or manage the privacy, security, and ethical risks associated with such technologies.

ChatGPT Agents Pose New AI Security Threats

The announcement of the new agent aligns with broader trends in the industry. Google is rapidly developing its Gemini AI agent, which can book tickets and manage tasks, while Apple is working on a more advanced Siri. The competition is fierce, but so is the scrutiny.

Autonomous agents, by design, require more access to user data and services. To book a dinner reservation, the agent needs calendar access. To buy a dress, it may need access to your wallet. This centralisation of power in a software agent’s hands has raised red flags for cybersecurity professionals.

Luiza Jarovsky, co-founder of AI, Tech & Privacy Academy, said:

“Unfortunately, the pace of AI development is much faster than the pace of AI literacy. Most people haven’t yet understood ChatGPT’s privacy risks, but they will be thrown a new feature with exponentially MORE risks.”

The Security Risks

OpenAI itself acknowledged the risks in its launch post, saying the system is intentionally limited in what it can access and do. High-risk tasks like bank transfers are off the table, and sensitive actions like sending emails require human confirmation. Still, experts argue that the mere ability to interface with tools like calendars, email, wallets, and contact lists greatly expands the attack surface: every connected service becomes something an attacker can try to reach through the agent.

The threat landscape includes:

- Malicious actors tricking AI agents into leaking private data.

- Over-permissioned agents holding access their tasks don’t require.

- Social engineering attacks targeting agent access pathways.

- Users granting permissions without understanding them, due to low AI literacy.

Sam Altman’s Warning

OpenAI CEO Sam Altman, in a post on X, urged users to approach the feature cautiously. Altman said:

“I would explain this to my own family as cutting edge and experimental; a chance to try the future, but not something I’d yet use for high-stakes uses or with a lot of personal information until we have a chance to study and improve it in the wild. We don’t know exactly what the impacts are going to be, but bad actors may try to ‘trick’ users’ AI agents into giving private information they shouldn’t and take actions they shouldn’t, in ways we can’t predict. We recommend giving agents the minimum access required to complete a task to reduce privacy and security risks.”

His advice to grant agents only the minimum access a task requires echoes the long-standing security principle of least privilege.
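To make the least-privilege idea concrete, here is a minimal Python sketch of how an agent platform might intersect the permissions a user offers with the permissions a task actually needs. The task names, scope strings, the `TASK_SCOPES` table, and the `grant_minimum` function are all hypothetical illustrations; OpenAI has not published how ChatGPT agent scopes its access.

```python
from dataclasses import dataclass, field

# Hypothetical task-to-scope mapping, for illustration only.
# This is NOT OpenAI's actual permission model.
TASK_SCOPES: dict[str, set[str]] = {
    "summarise_meetings": {"calendar:read", "web:browse"},
    "buy_groceries": {"web:browse", "payments:confirm"},
}


@dataclass
class AgentGrant:
    task: str
    scopes: frozenset[str] = field(default_factory=frozenset)

    def allows(self, scope: str) -> bool:
        # The agent may only use scopes that were explicitly granted.
        return scope in self.scopes


def grant_minimum(task: str, requested: set[str]) -> AgentGrant:
    """Grant only the requested scopes that the task actually needs (least privilege)."""
    needed = TASK_SCOPES.get(task)
    if needed is None:
        # Unknown tasks get nothing rather than everything.
        raise ValueError(f"unknown task: {task}")
    # Anything the user offered but the task does not need is dropped.
    return AgentGrant(task, frozenset(requested & needed))
```

For example, if a user hands the hypothetical `summarise_meetings` task access to calendar, email, contacts, and the web, only `calendar:read` and `web:browse` survive; the agent cannot send email even though the user offered it. Failing closed on unknown tasks is the deliberate design choice here.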

OpenAI Tightens Control

Giving AI agents the ability to make purchases or schedule plans brings undeniable convenience, but also serious security risks. OpenAI researchers warn that such autonomy can expose systems to threats like prompt injection attacks, in which malicious instructions hidden in web pages or other content the agent reads trick it into leaking data, spreading misinformation, or executing unauthorised actions.

To mitigate these risks, OpenAI has introduced a takeover mode, allowing users to pause the agent and take manual control. In high-stakes scenarios, such as accessing sensitive data or completing transactions, ChatGPT agent also requires user approval before proceeding.
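The pause-and-approve pattern described above can be sketched as a simple gate that sits in front of every action the agent tries to take. This is a minimal illustration under stated assumptions, not OpenAI’s implementation: the `ApprovalGate` class, the `SENSITIVE_ACTIONS` set, and the action names are hypothetical, and a real system would also need authentication and audit logging.

```python
from typing import Callable

# Hypothetical list of actions treated as sensitive; OpenAI has not
# published the exact set of actions that trigger confirmation.
SENSITIVE_ACTIONS = {"send_email", "make_purchase", "read_sensitive_data"}


class ApprovalGate:
    """Hypothetical gate: sensitive actions need human approval; takeover pauses everything."""

    def __init__(self, confirm: Callable[[str, dict], bool]):
        self.confirm = confirm  # asks the human; returns True to approve
        self.paused = False     # True while the user has taken manual control

    def take_over(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def run(self, action: str, args: dict, execute: Callable[[dict], str]) -> str:
        if self.paused:
            # The agent does nothing while the user is in control.
            return "paused: user has taken manual control"
        if action in SENSITIVE_ACTIONS and not self.confirm(action, args):
            return f"blocked: user declined {action}"
        # Low-risk actions, or approved sensitive ones, go through.
        return execute(args)
```

A usage example, with a user who declines every confirmation prompt:

```python
gate = ApprovalGate(confirm=lambda action, args: False)
print(gate.run("make_purchase", {"item": "miso"}, lambda a: "bought " + a["item"]))
# blocked: user declined make_purchase
print(gate.run("search_web", {"q": "hotels"}, lambda a: "results for " + a["q"]))
# results for hotels
```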

This new agentic capability signals a major step forward in AI evolution, but it also raises critical questions about trust, safety, and user responsibility. As enterprises accelerate the adoption of artificial intelligence, security experts are calling for stronger regulations and better user education before agentic AI becomes mainstream.

For now, OpenAI’s agent remains limited, experimental, and opt-in—but the broader conversation about control and accountability is just beginning.