In 2025, emerging technologies stopped behaving like optional innovation experiments and started acting like architecture. Not because every organisation suddenly wanted to be first, but because the underlying constraints shifted.

Timelines got firmer. Platforms got more deployable. Autonomy got more real. Connectivity stopped being a shrug. And the “who or what is allowed to do what” question expanded beyond people.

That is the thread running through these five moments. Each one moved an emerging capability closer to enterprise reality, while also raising the cost of treating it as a side project. The result is that 2026 planning looks less like a series of disconnected pilots and more like a portfolio of decisions about ownership, governance, resilience, and scale.

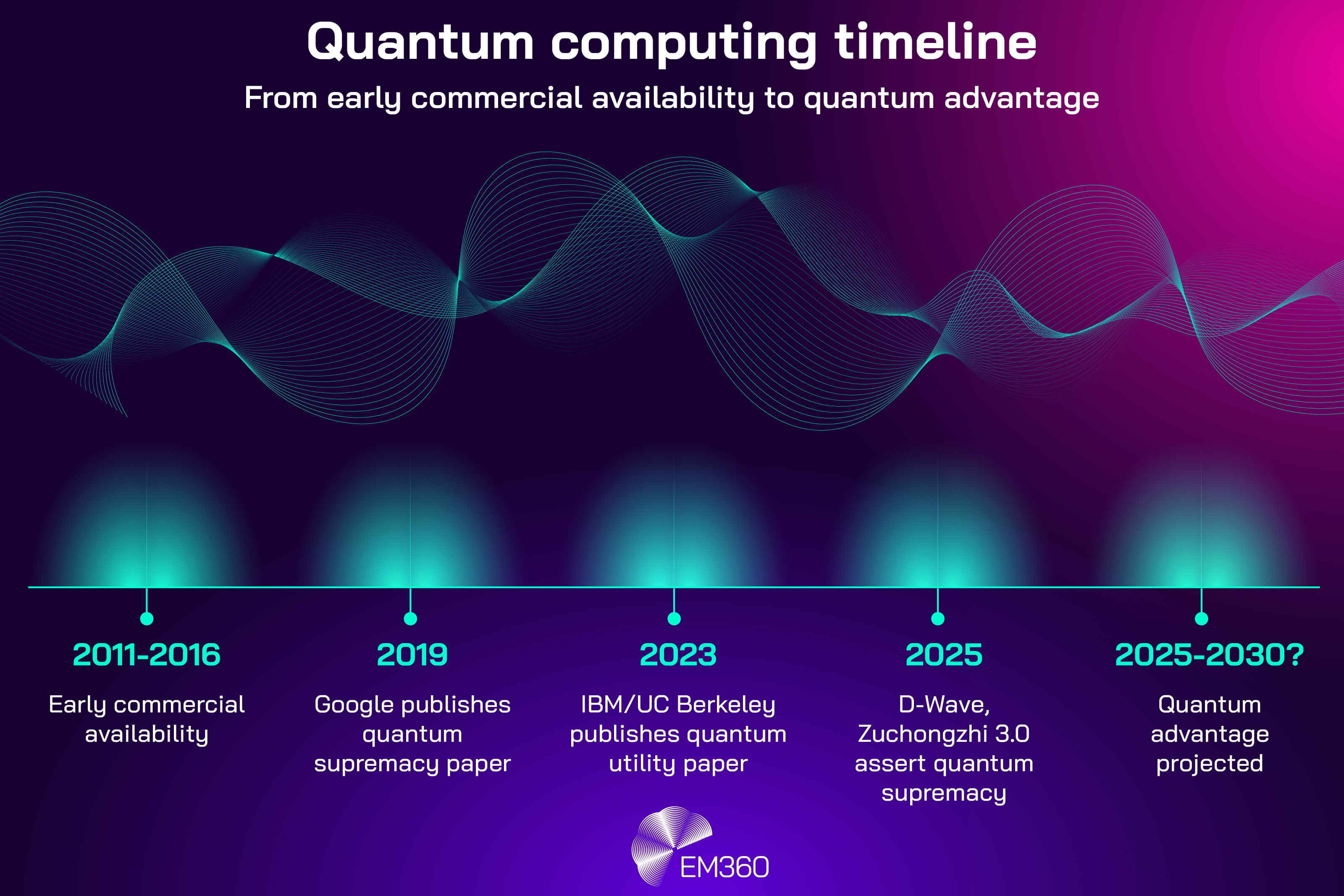

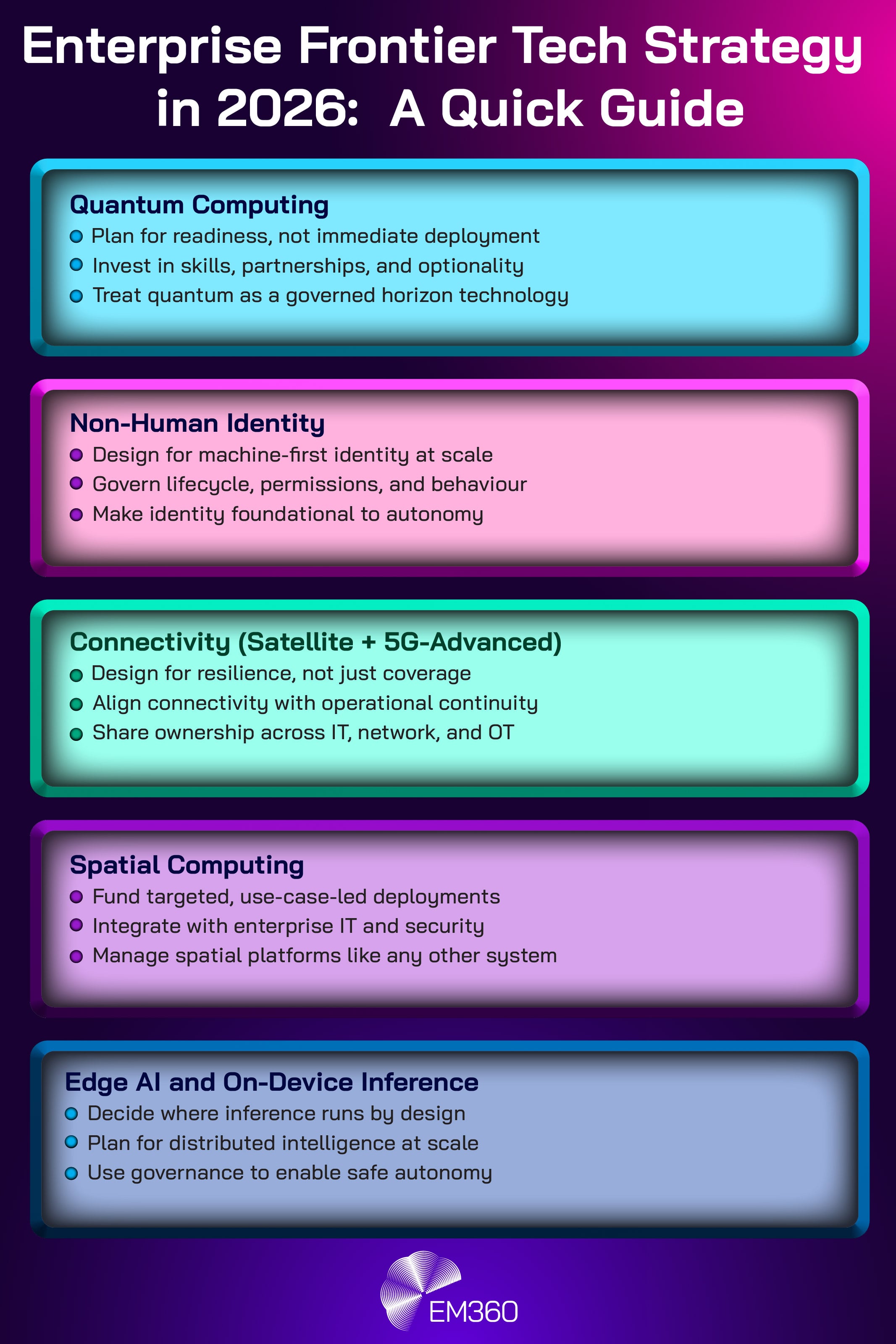

Quantum Computing Shifted From Research Curiosity to Enterprise Planning Horizon

Quantum has been “coming” for years. What changed in 2025 is that enterprises were given clearer signals about when and how quantum becomes relevant to planning. The technology is still immature for most production use cases, but the ecosystem is no longer speaking only in hypotheticals.

IBM is one example of that shift. The company’s published roadmap sets expectations around achieving near-term “quantum advantage” by the end of 2026 and delivering a large-scale fault-tolerant quantum computer by 2029. These are not guarantees of enterprise transformation, but they are specific enough to move quantum from curiosity into strategic horizon scanning.

At the same time, the broader ecosystem is pushing in the same direction. Hardware announcements and scaling approaches are becoming more concrete, including new architectures designed to support orders-of-magnitude increases in qubit counts over the coming years. The point is not whether any single vendor “wins”, but that the pace and clarity of roadmaps have improved.

How enterprises responded in the moment

Most enterprises did not respond by rushing into deployment. They responded by shifting quantum into structured exploration.

That typically looked like three moves. First, capability mapping: identifying which business problems even belong in the quantum conversation (optimisation, simulation, complex modelling). Second, partnership scanning: choosing where to experiment through cloud-accessible services and vendor ecosystems. Third, skills planning, because quantum readiness is as much about people and tooling as it is about hardware.

The practical enterprise reaction was not “buy quantum”. It was “make sure we are not starting from zero if this crosses a threshold faster than expected”.

Quantum planning in 2026 focuses on preparedness, not deployment

In 2026, the smart posture is optionality. Quantum computing belongs on a multi-year technology roadmap for certain sectors, but it does not belong on most near-term transformation scorecards.

That matters because enterprises have a bad habit of swinging between hype and dismissal. Quantum punishes both. Dismissal creates organisational lag in skills and partner readiness. Hype creates waste and disappointment. Preparedness splits the difference: small, deliberate investments that keep the organisation capable of moving when the economics and reliability justify it.

If you are looking for the shift in mindset, it is this: quantum is no longer a conversation about “whether it will matter”. It is a conversation about “what we need in place if it does”.

Non-Human Identity Became a Foundational Constraint for Emerging Tech

Identity used to mean people logging in. That assumption broke in 2025.

As AI agents, automation, APIs, cloud workloads, robots, and edge devices proliferated, non-human identity became the dominant identity category. Microsoft has been explicit about the trend, noting that non-human identities (NHIs) greatly outnumber human identities and are often highly privileged, with AI agents expected to accelerate growth further.

CyberArk’s 2025 research adds scale and risk to the same story, reporting that machine identities outnumber human identities by more than 80 to one and highlighting how many have sensitive or privileged access.

This is an emerging tech moment because autonomy depends on identities. If systems are going to act on behalf of the business, they need credentials, permissions, and authentication paths. That means identity stops being a subdomain and becomes a constraint that shapes everything else.

How enterprises responded in the moment

The first reaction was visibility panic, because most organisations were not built to answer basic questions at machine scale.

Where do these identities live? Who owns them? Which ones are dormant? Which ones are over-permissioned? Which ones are embedded in scripts nobody wants to touch? The uncomfortable realisation for many teams is that identity governance often trails behind automation, not because people are careless, but because the tooling and operating model were designed for humans.
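To make those questions concrete, here is a minimal sketch of the kind of audit teams ran, written in Python. It assumes an inventory already exists, and the `MachineIdentity` record, its field names and the 90-day dormancy threshold are all hypothetical choices for illustration, not any vendor's schema. It flags identities with no owner, identities that have gone dormant, and permissions that were granted but never exercised.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional, Set

# Hypothetical inventory record for one machine identity (service account,
# API key, workload identity, agent credential). Field names are illustrative.
@dataclass
class MachineIdentity:
    name: str
    owner: Optional[str]           # accountable team, or None if nobody claims it
    last_used: Optional[datetime]  # None if never observed authenticating
    granted: Set[str] = field(default_factory=set)  # permissions assigned
    used: Set[str] = field(default_factory=set)     # permissions actually exercised

def audit(identities, now, dormant_after=timedelta(days=90)):
    """Return {identity name: [findings]} for every identity with an issue."""
    findings = {}
    for ident in identities:
        issues = []
        if ident.owner is None:
            issues.append("no owner")
        if ident.last_used is None or now - ident.last_used > dormant_after:
            issues.append("dormant")
        unused = ident.granted - ident.used  # granted but never exercised
        if unused:
            issues.append(f"over-permissioned: {sorted(unused)}")
        if issues:
            findings[ident.name] = issues
    return findings

now = datetime(2025, 12, 1, tzinfo=timezone.utc)
inventory = [
    MachineIdentity("billing-sync", "finance-platform", now - timedelta(days=3),
                    {"db:read", "db:write"}, {"db:read", "db:write"}),
    MachineIdentity("legacy-etl", None, now - timedelta(days=400),
                    {"db:read", "db:admin"}, {"db:read"}),
]
print(audit(inventory, now))
# {'legacy-etl': ['no owner', 'dormant', "over-permissioned: ['db:admin']"]}
```

The hard part in practice is building the inventory, not running the checks; the sketch only shows why ownership and usage data have to be captured for machine identities at all.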

Enterprises responded with a mix of tactical clean-ups and early structural change. Secrets rotation, access reviews, and service account audits became urgent. At the same time, more organisations started treating machine identity management as a first-class programme rather than a patchwork of team-by-team practices.

Emerging tech strategies in 2026 start with identity, not features

In 2026, the “features” conversation is less useful than the “permission” conversation.

AI agents, robotics, and distributed systems all depend on trusted identities. Without them, autonomy becomes a risk multiplier: more actors, more access paths, more opportunities for misuse or compromise. That is why an identity-first architecture becomes the enabling layer for emerging tech strategies, not an optional security enhancement.

The practical implication is that emerging tech roadmaps start to include identity requirements upfront. That means shared responsibility for services and automations across platform engineering, security, cloud teams, and product owners. It also means lifecycle policies that treat machine identities like managed assets: issued, monitored, rotated, retired, and reviewed.

If a technology cannot be governed through strong lifecycle management and permission boundaries, it either stays in pilot mode for longer or becomes too dangerous to scale.
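One way to picture "managed asset" lifecycle policy is as a small state machine that refuses undefined transitions. The Python sketch below is illustrative only: the state names, the transition table, and the rule that an identity cannot be issued without an owner are assumptions chosen to mirror the issued, monitored, rotated, retired and reviewed stages above, with review modelled as a possible suspension.

```python
from enum import Enum

class State(Enum):
    ISSUED = "issued"
    ACTIVE = "active"        # in use and being monitored
    ROTATING = "rotating"    # new secret issued, old one still briefly valid
    SUSPENDED = "suspended"  # paused after a review flagged it
    RETIRED = "retired"      # credential revoked, identity closed

# Illustrative policy: any transition not listed here is rejected.
TRANSITIONS = {
    State.ISSUED: {State.ACTIVE, State.RETIRED},
    State.ACTIVE: {State.ROTATING, State.SUSPENDED, State.RETIRED},
    State.ROTATING: {State.ACTIVE, State.RETIRED},
    State.SUSPENDED: {State.ACTIVE, State.RETIRED},
    State.RETIRED: set(),  # terminal: a retired identity is never reused
}

class LifecycleError(Exception):
    pass

class ManagedIdentity:
    def __init__(self, name, owner):
        # Ownership is a precondition of issuance, not an afterthought.
        if not owner:
            raise LifecycleError(f"{name}: cannot issue an identity without an owner")
        self.name = name
        self.owner = owner
        self.state = State.ISSUED
        self.history = [State.ISSUED]  # audit trail of every state entered

    def transition(self, new_state):
        if new_state not in TRANSITIONS[self.state]:
            raise LifecycleError(
                f"{self.name}: {self.state.value} -> {new_state.value} not allowed")
        self.state = new_state
        self.history.append(new_state)

svc = ManagedIdentity("invoice-agent", owner="ap-automation")
for step in (State.ACTIVE, State.ROTATING, State.ACTIVE, State.RETIRED):
    svc.transition(step)
print([s.value for s in svc.history])
# ['issued', 'active', 'rotating', 'active', 'retired']
```

Making the retired state terminal is a deliberate choice in this sketch: reviving old credentials is exactly the kind of shortcut that leaves dormant, over-permissioned identities behind.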

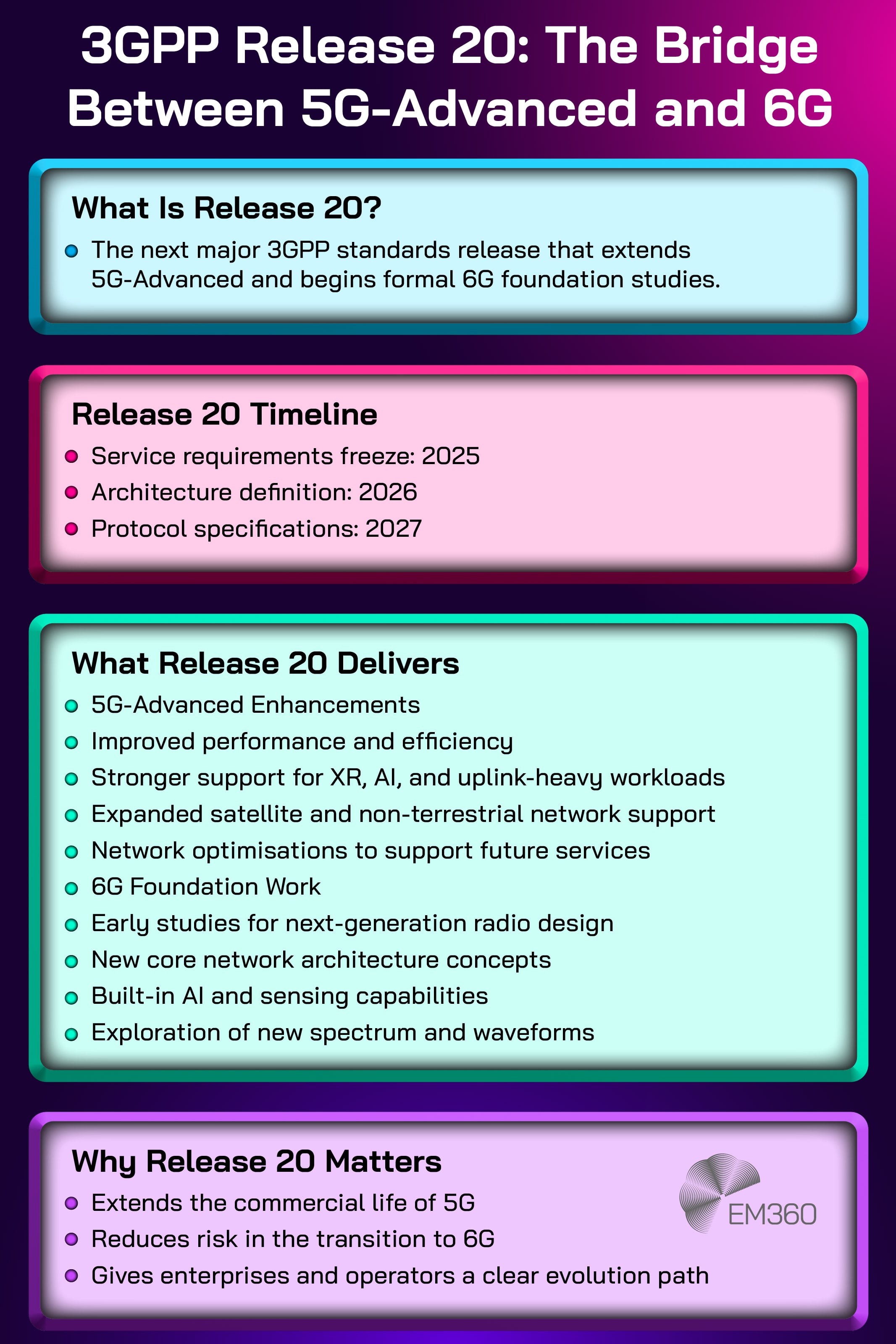

Satellite and 5G-Advanced Connectivity Turned “No Signal” Into an Architectural Choice

Two connectivity shifts crossed from roadmap into reality.

First, direct-to-cell satellite connectivity became more tangible as a commercial service model. T-Mobile positions its Starlink-powered "T-Satellite" service as covering texting and select satellite-ready apps, with the aim of reducing dead zones.

Second, 5G evolution moved into a more enterprise-relevant phase. 5G Americas published a 5G-Advanced overview describing how 3GPP Release 18 and beyond introduce new features and deployment considerations, including strategic implications for decision-makers. 3GPP itself frames Release 20 as bringing further improvements to the 5G-Advanced system.

The combined effect is simple: connectivity gaps are no longer purely geographic fate. They are increasingly something enterprises can design around.

How enterprises responded in the moment

Sectors that live outside the office felt this first. Logistics, utilities, mining, manufacturing, shipping, and field services do not need perfect connectivity everywhere, but they do need predictable connectivity where safety, uptime, and operational visibility depend on it.

Enterprises responded by rethinking assumptions. “No signal” shifted from being a passive risk to being a design decision. Network teams started looking at resilience options the way continuity teams look at failover.

Technology leaders began connecting connectivity planning to operational technology environments and edge computing ambitions, because an edge strategy is only as strong as the network it rides on.

Connectivity planning in 2026 becomes a resilience decision

In 2026, connectivity planning stops being an optimisation exercise and becomes a resilience choice. 5G-Advanced is not just a faster network story. It is a platform evolution story, one that affects how enterprises think about capacity, latency, industrial IoT support, and future applications.

The satellite piece adds a different kind of resilience: a plausible fallback path where terrestrial coverage is weak. T-Mobile’s planned expansion to satellite data for select apps reinforces the shift from emergency-only messaging toward limited but meaningful functionality.
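Treating "no signal" as a design decision usually means writing down which traffic may use which path. The Python sketch below shows one such policy under stated assumptions: the link names, traffic classes and latency figures are hypothetical placeholders, not measured values or any carrier's API. Links are tried in priority order, and the satellite path only carries the low-bandwidth classes it is allowed to.

```python
from dataclasses import dataclass

@dataclass
class Link:
    name: str
    up: bool
    latency_ms: float          # placeholder value for illustration
    allowed_classes: frozenset  # traffic classes this link may carry

def choose_link(links, traffic_class, max_latency_ms):
    """Return the name of the first healthy link, in priority order, that may
    carry this traffic class within its latency budget. Returns None if none
    fits, so the caller can queue or degrade instead of failing silently."""
    for link in links:
        if (link.up
                and traffic_class in link.allowed_classes
                and link.latency_ms <= max_latency_ms):
            return link.name
    return None

ALL = frozenset({"telemetry", "messaging", "video", "bulk-sync"})
links = [  # priority order: terrestrial first, satellite as the fallback
    Link("private-5g", up=False, latency_ms=15, allowed_classes=ALL),
    Link("public-lte", up=False, latency_ms=60, allowed_classes=ALL),
    Link("satellite-d2c", up=True, latency_ms=600,
         allowed_classes=frozenset({"messaging", "telemetry"})),
]
print(choose_link(links, "messaging", max_latency_ms=2000))  # satellite-d2c
print(choose_link(links, "video", max_latency_ms=200))       # None
```

Returning `None` rather than forcing traffic onto any available path keeps the degraded mode explicit, which is what makes it a continuity decision rather than an accident.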

The enterprise takeaway is that connectivity is becoming part of the business continuity conversation, not just the telecom bill.

Stronger organisations will treat connectivity as shared infrastructure across IT, network teams, and OT owners, with clearer decision rights. Network architecture will be discussed in the same breath as edge deployments, remote workforce strategy, and resilience planning. The goal is not novelty. It is continuity.

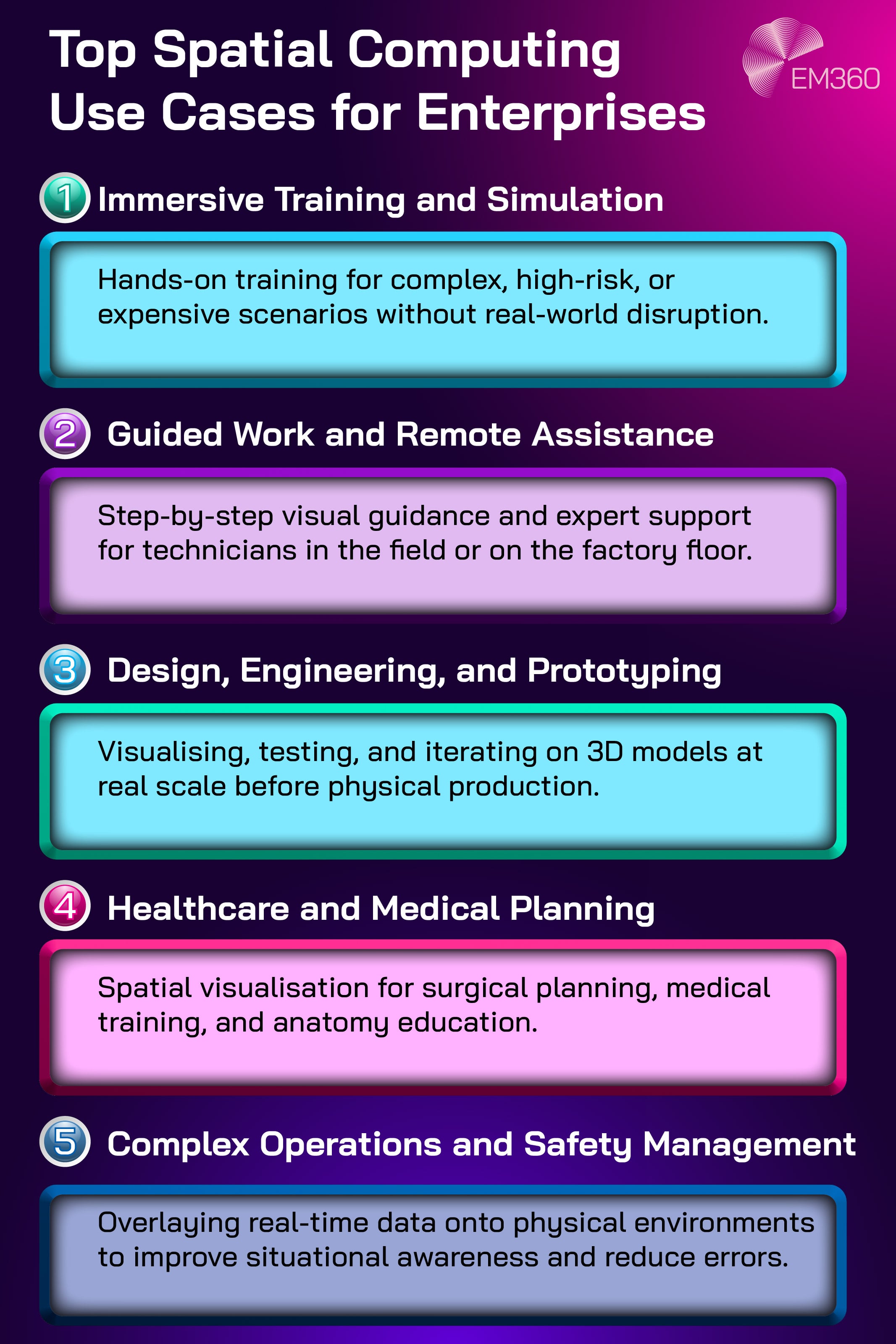

Spatial Computing Matured Into an Enterprise Platform Layer

Enterprises do not scale on hype. They scale on supportability.

Spatial computing took a meaningful step toward platform maturity in 2025 through developer tooling and enterprise-focused capabilities. Apple’s WWDC 2025 visionOS 26 session explicitly flags Enterprise APIs as part of the platform updates, alongside broader improvements for building spatial apps.

Apple also highlighted enterprise capability enhancements for spatial business apps in a dedicated session, signalling ongoing investment in the enterprise layer. The significance is not that every enterprise should rush to deploy headsets. It is that the platform is increasingly being built in a way that enterprises can actually manage.

How enterprises responded in the moment

The enterprise response was a shift from “interesting demo” to “is this deployable”.

In practice, that meant IT and security teams got involved earlier, asking about device management, identity integration, private distribution, and data handling. It also meant business leaders focused on narrow, high-value workflows rather than broad "future of work" narratives.

Spatial computing became easier to treat like a managed capability, which is the point at which enterprise interest becomes more serious.

Spatial computing investment in 2026 becomes use-case driven

In 2026, spatial computing investment becomes less about experimentation and more about measurable outcomes.

The strongest candidates are practical: training, guided work, specialist workflows, and situations where the environment is complex enough that a spatial interface reduces errors, speeds up onboarding, or improves safety. These are not vanity pilots. They are productivity bets.

That shift matters because it changes how spatial projects are funded and evaluated. The conversation moves from innovation budgets to operational value, which forces better scoping and better accountability.

The enterprises that scale spatial computing will treat it like any other platform layer: governed, integrated, and supported through lifecycle management. Spatial computing stops being a novelty and becomes a managed part of the technology stack only when it meets the same operational expectations as other enterprise tools.

Edge AI and On-Device Inference Became the Practical Path to Scale

The AI conversation shifted. Not away from models, but toward where those models run.

Industry analysis in 2025 increasingly framed the move into the “era of inference” as edge deployments expanded. CEVA’s 2025 Edge AI Technology Report describes edge AI evolving from niche to mainstream and highlights the value of localised intelligence across sectors such as manufacturing and healthcare.

GSMA Intelligence also leaned into the same theme, positioning “AI at the edge” and the strategic value of different inference options as a focus area in 2025 analysis.

This matters because edge inference changes the practical constraints of AI adoption: latency, reliability, privacy, bandwidth, and cost.

How enterprises responded in the moment

Enterprises began separating “where training happens” from “where inference runs”.

That change unlocks new deployment patterns. It also forces new questions. How do we secure devices running models? How do we update them? How do we monitor performance and drift? How do we manage fleets rather than a single central platform?

Physical automation accelerated the urgency of those questions. Robotics companies and manufacturers signalled stronger commercial intent in 2025, including plans to scale production and deploy humanoid robots in industrial contexts. The details vary by vendor, but the direction is clear: more autonomy in more places.

AI strategies in 2026 prioritise where inference runs

In 2026, “cloud-first” becomes less of a default and more of a choice.

Where inference runs shapes everything from uptime requirements to compliance exposure. It also shapes operational design. If your AI capability relies on constant connectivity and centralised processing, it will fail in environments where networks are unreliable or latency is intolerable. If it runs locally, you trade some central control for resilience and speed.
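That trade-off can be expressed as a per-request placement rule. The Python sketch below is a simplified illustration, not a reference design: it assumes the device knows its current round-trip time to a cloud endpoint and rough compute times for each path, and the function and field names are hypothetical. It prefers the cloud path when it fits the latency budget (keeping central control), falls back to an on-device model when it does not, and rejects the request when neither path can meet the budget.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Conditions:
    network_up: bool
    round_trip_ms: float  # current measured round trip to the cloud endpoint

def place_inference(budget_ms: float, cond: Conditions, cloud_compute_ms: float,
                    local_compute_ms: Optional[float] = None) -> str:
    """Return 'cloud', 'local' or 'reject' for one request.
    local_compute_ms is None when no on-device model is deployed."""
    # Prefer the central path when it can meet the budget.
    if cond.network_up and cond.round_trip_ms + cloud_compute_ms <= budget_ms:
        return "cloud"
    # Otherwise trade central control for resilience and speed.
    if local_compute_ms is not None and local_compute_ms <= budget_ms:
        return "local"
    return "reject"

print(place_inference(100, Conditions(True, 40), 30, 70))   # cloud
print(place_inference(50, Conditions(True, 40), 30, 45))    # local
print(place_inference(100, Conditions(False, 0), 30, None)) # reject
```

Some teams would invert the preference and default to local inference for privacy or cost reasons; the point of the sketch is that the choice becomes an explicit, testable policy rather than an implicit dependency on connectivity.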

The strategic shift is that AI adoption becomes a distribution problem, not just a model problem. In 2026, the winners treat edge AI as a fleet management reality. They plan for secure provisioning, monitoring, patching, model updates, and governance across thousands of devices and endpoints.

They build controls that assume failure and inconsistency will happen, and they design for recovery. That is also where governance becomes the enabler, not the brake. If enterprises can manage distributed intelligence responsibly, they can deploy autonomy where it actually creates value, instead of limiting it to controlled pilots.
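"Assume failure and design for recovery" is easiest to see in how model updates reach a fleet. The Python sketch below shows a generic staged rollout with a halt-and-rollback rule; the stage fractions, the `deploy` and `healthy` callbacks and the device names are all hypothetical, and a real fleet would plug in its own device management and monitoring hooks. Each stage updates a larger slice of the fleet, and if any device in the newest batch fails its health check, the rollout stops and reports every updated device for rollback.

```python
import random

def staged_rollout(devices, deploy, healthy, stages=(0.01, 0.1, 0.5, 1.0), seed=0):
    """Roll an update out to growing fractions of a fleet.
    deploy(device) installs the update; healthy(device) reports whether it is
    behaving within tolerance. Only the newest batch is checked at each stage."""
    order = list(devices)
    random.Random(seed).shuffle(order)  # avoid always canarying the same devices
    updated = []
    for fraction in stages:
        target = max(1, round(len(order) * fraction))
        batch = order[len(updated):target]
        for device in batch:
            deploy(device)
        updated.extend(batch)
        if not all(healthy(device) for device in batch):
            # Stop expanding and hand back everything that needs reverting.
            return {"status": "halted", "rollback": updated}
    return {"status": "complete", "rollback": []}

fleet = [f"cam-{i:03d}" for i in range(200)]
installed = set()
result = staged_rollout(fleet, deploy=installed.add, healthy=lambda d: True)
print(result["status"], len(installed))  # complete 200
```

With a health check such as `lambda d: d != "cam-042"`, the rollout halts at whichever stage first reaches that device, and the returned rollback list includes it along with every device updated before it.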

Final Thoughts: Emerging Tech Now Sets the Rules

The five moments that mattered most in 2025 did not just introduce new tools. They changed the rules enterprises operate under.

The common takeaway for 2026 is not “adopt more emerging tech”. It is “treat emerging tech like architecture”. Put ownership where it belongs. Build governance that scales. Make resilience a design choice. And stop funding pilots that cannot survive contact with operations.

The thought-provoking part is uncomfortable, but useful: the organisations that win in 2026 will not be the ones with the most pilots. They will be the ones that can turn a new capability into a managed, secure, and accountable part of how the business runs.

If you want to keep tracking how these shifts connect across AI, data, security, and emerging tech, EM360Tech will keep mapping the moments that matter, and the operational decisions they force.