Security teams no longer struggle to see what is wrong. Every registry scan, shift-left check, and runtime alert feeds into an ever-growing queue of container vulnerabilities. The real constraint is time. Human capacity to fix issues has not kept pace with how fast modern tools surface them.

Cloud native architectures widened that gap. Containers are short-lived, layered, and built on long chains of open source dependencies. A single vulnerable base image can seep into hundreds of services.

That tension is the real bottleneck. Detection is fast. Fixing at scale is slow. Root’s work in this space underscores how urgent this shift has become, especially for teams trying to modernise remediation without forcing risky upgrades or disruptive rebuilds.

Why Modern Container Environments Create an Unmanageable Remediation Gap

Containers scale fast. Remediation does not. As environments become more fragmented and short-lived, the volume of issues outpaces what teams can realistically fix through traditional processes.

The speed and scale problem

Traditional patch cycles assumed static servers and occasional maintenance windows. Once you move to containerised workloads, that model breaks. Images are built and deployed continuously across clusters that can host thousands of short-lived workloads. Each image carries its own dependencies and configuration.

For security teams, the surface area to patch is no longer a fixed estate. It is a moving landscape of images, layers, and versions that change daily. Manual remediation cannot realistically track and patch every variation without slowing delivery to a crawl.

Why detection outpaces remediation

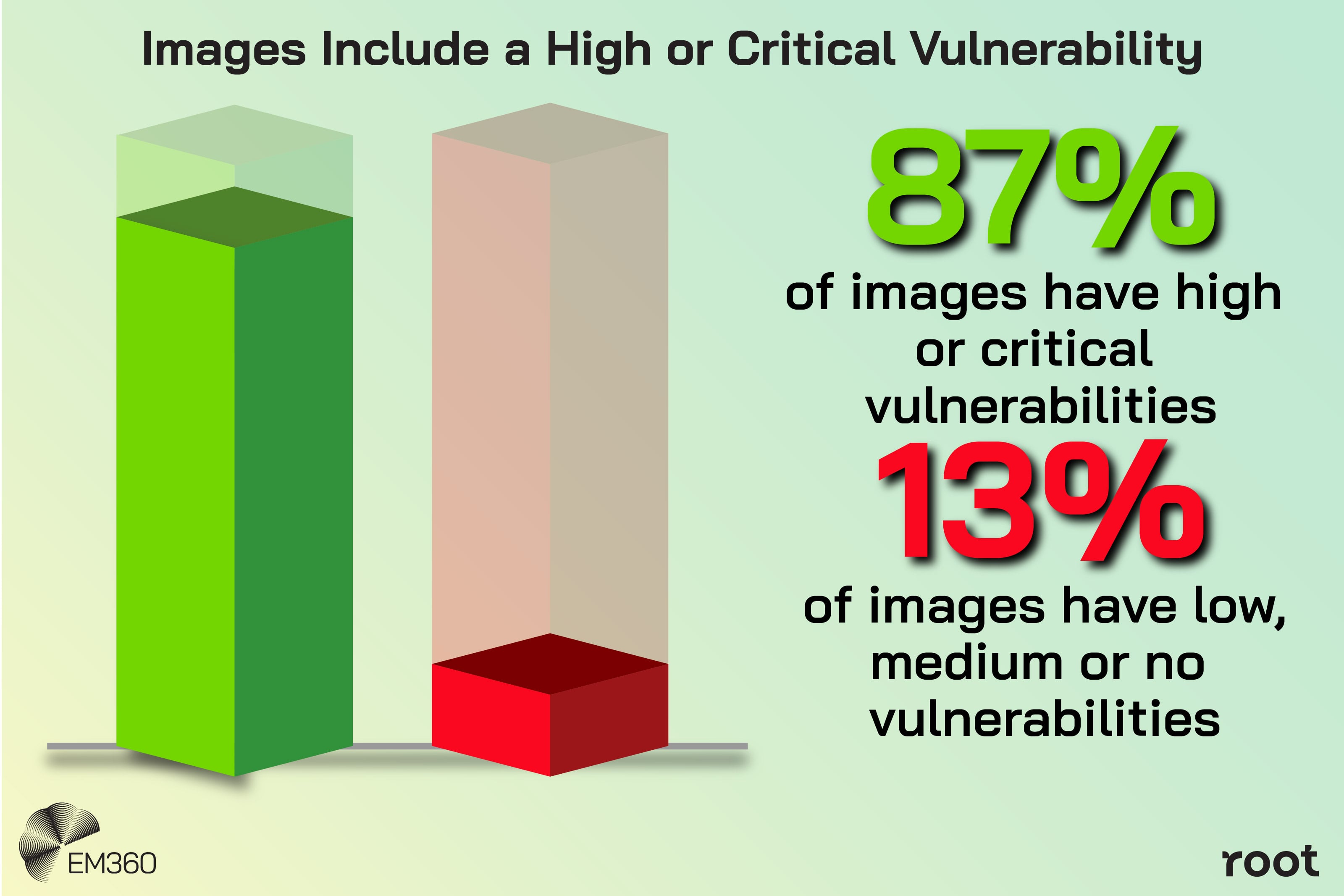

Tooling has advanced faster than process. Container scanners, software bills of materials, and registry checks now provide deep visibility into known vulnerabilities. A 2023 cloud native report from Sysdig, for example, found that the majority of container images they analysed contained high or critical vulnerabilities.

Root’s 2026 Shift Out Benchmark Report found that 33 per cent of engineering and platform teams have 25 per cent or more of production containers running unpatched high or critical CVEs older than 30 days, and 60 per cent have had multiple release delays due to security findings.

It also estimates that engineering teams spend 1.31 full-time equivalents (FTEs, where one FTE is a full-time employee’s working hours) per team per month on remediation, with 72 per cent of triage time lost to false positives. In other words, tools are discovering more risk than current processes and headcount can handle.

The strategic cost of slow fixes

When fixes lag, production environments accumulate known vulnerabilities that remain open for weeks or months. Compliance teams struggle to prove that critical issues are addressed within policy timelines. Audit findings shift from “we did not know” to “we knew and could not keep up”.

For engineering leaders, the impact shows up in release pipelines. Security gates block deployments more often. Developers wait on exception approvals or rework builds to accommodate patches. The organisation pays in slower releases, higher operational risk, and growing fatigue.

Why Risk-Based, Context-Aware Remediation Matters More Than Ever

When everything looks urgent, nothing gets fixed. Context is what separates real risk from background noise, helping teams focus on the vulnerabilities that genuinely matter.

Too many vulnerabilities, too little impact

Not every high-severity vulnerability is equally dangerous. Without context, though, everything looks urgent. The raw volume of findings far exceeds the capacity of most teams.

Runtime-focused research helps reframe the problem. The Sysdig study found that when you filter down to packages that actually load at runtime, the fraction of vulnerabilities that truly matter drops sharply. Many “critical” alerts never become reachable in production at all.

How context changes the remediation equation

Context is what turns a flat list of CVEs into a plan of action. Factors like runtime reachability, exploit availability, network exposure, data sensitivity, and business criticality all influence which vulnerabilities deserve attention first.
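
To make that concrete, here is a minimal sketch of how context could re-rank a flat list of findings. The weights, field names, and placeholder CVE identifiers are all assumptions made for illustration, not values taken from any scanner or report; a real risk model would need tuning against your own environment.

```python
from dataclasses import dataclass

# Hypothetical weights for each context factor; illustrative only.
WEIGHTS = {
    "reachable_at_runtime": 0.30,
    "exploit_available": 0.25,
    "internet_exposed": 0.20,
    "sensitive_data": 0.15,
    "business_critical": 0.10,
}


@dataclass
class Finding:
    cve_id: str
    cvss: float  # base severity, 0.0 to 10.0
    reachable_at_runtime: bool = False
    exploit_available: bool = False
    internet_exposed: bool = False
    sensitive_data: bool = False
    business_critical: bool = False


def context_score(finding: Finding) -> float:
    """Scale the base severity by the share of context weight that applies."""
    applicable = sum(w for name, w in WEIGHTS.items() if getattr(finding, name))
    return finding.cvss * applicable


def prioritise(findings):
    """Return findings ordered from highest to lowest context score."""
    return sorted(findings, key=context_score, reverse=True)


findings = [
    # Critical on paper, but none of the context factors apply.
    Finding("CVE-EXAMPLE-1", cvss=9.8),
    # Lower base severity, but reachable, exploitable, and exposed.
    Finding(
        "CVE-EXAMPLE-2",
        cvss=7.5,
        reachable_at_runtime=True,
        exploit_available=True,
        internet_exposed=True,
    ),
]

ranked = prioritise(findings)
print([f.cve_id for f in ranked])
```

In this toy data the lower-severity finding ranks first because it is reachable, exploitable, and exposed, while the nominally critical one has no context that makes it urgent.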

When organisations narrow their focus to vulnerabilities that are present in production, known to be exploitable, and associated with assets under active attack, the pool of “critical” issues shrinks dramatically. That smaller list is far more aligned with how attackers behave and how defenders need to respond.
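
The narrowing step can be sketched as a simple filter. The three flags below (`in_production`, `known_exploited`, `asset_under_attack`) are hypothetical field names standing in for whatever your scanner, threat intelligence, and asset inventory actually expose.

```python
def actionable(findings):
    """Keep only findings that meet all three criteria at once."""
    return [
        f
        for f in findings
        if f["in_production"] and f["known_exploited"] and f["asset_under_attack"]
    ]


# Illustrative data only: four findings that a scanner might all label "critical".
findings = [
    {"id": "finding-1", "in_production": True, "known_exploited": True, "asset_under_attack": True},
    {"id": "finding-2", "in_production": True, "known_exploited": True, "asset_under_attack": False},
    {"id": "finding-3", "in_production": False, "known_exploited": True, "asset_under_attack": True},
    {"id": "finding-4", "in_production": True, "known_exploited": False, "asset_under_attack": False},
]

shortlist = actionable(findings)
print(f"{len(shortlist)} of {len(findings)} findings need attention first")
```

A strict all-three filter like this is deliberately blunt. In practice teams may prefer to rank the remainder rather than discard it.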

Supply chain risk reshapes priorities

The software supply chain adds another layer. Open source ecosystems now see thousands of malicious packages published each month, and attackers are embedding payloads deep in dependency chains. A recent open source risk report from Mend highlighted both the scale and sophistication of these attacks.

An example is the Shai-Hulud 2.0 attack in November 2025, which compromised over 700 npm packages affecting projects with more than 132 million monthly downloads. It also exposed an uncomfortable reality: if your only remediation path is “upgrade to latest”, you may have no safe option when the supply chain itself has been poisoned.

Because “latest” is the thing that’s been compromised.

For container image security, that matters. Base images inherit hundreds of packages that pull in their own dependencies. A single compromised library can affect multiple services and teams.

So prioritisation now has to consider how a vulnerability enters your environment, where it spreads, and what it touches along the way, using SBOM data and supply chain visibility to guide decisions.
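
One way to picture that is as a walk over dependency data. The sketch below assumes a heavily simplified, hypothetical SBOM in which each component lists its direct dependencies; real SBOM formats carry far more detail, and the component names here are invented.

```python
from collections import deque

# Hypothetical, simplified SBOM data: component -> direct dependencies.
DEPENDS_ON = {
    "service-checkout": ["base-image-node"],
    "service-search": ["base-image-node"],
    "service-billing": ["base-image-python"],
    "base-image-node": ["lib-http", "lib-logging"],
    "base-image-python": ["lib-logging"],
    "lib-http": ["lib-parser"],
}


def blast_radius(compromised, depends_on):
    """Return every component that directly or transitively depends on `compromised`."""
    # Invert the edges so we can walk from a dependency up to its dependents.
    dependents = {}
    for component, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(component)

    # Breadth-first walk upwards from the compromised component.
    affected = set()
    queue = deque([compromised])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, ()):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected


affected = blast_radius("lib-parser", DEPENDS_ON)
print(sorted(affected))
```

Here a single low-level library reaches two services through one base image, which is the kind of spread that should lift a finding up the queue.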

What AI-Driven Remediation Actually Means for DevSecOps Teams

AI-driven remediation is not hype. It is a practical way to take the repetitive, time-heavy parts of fixing vulnerabilities off human hands so teams can move faster without cutting corners.

Moving beyond AI-assisted triage

Early uses of AI in security focused on noise reduction. Models grouped alerts, highlighted anomalies, or recommended which incidents to look at first. That helped with prioritisation, but it did not change how fixes were created and applied.

AI-driven remediation goes further. Instead of stopping at “what should we care about”, these systems begin to answer “what should we do about it”. That can include recommending specific configuration changes, suggesting patched versions of vulnerable components, or generating code-level diffs that address the issue.

Agentic AI and the rise of automated remediation

The next step is agentic AI. Rather than providing passive advice, agentic systems take on parts of the remediation workflow themselves. They monitor for new vulnerabilities, assess context, propose patches, and in some cases apply changes directly to container images or infrastructure definitions under defined guardrails.

Recent launches of agentic AI for containers show where the market is heading. Platforms now promise to fix vulnerabilities across applications, language runtimes, and base images while keeping changes compatible with the existing environment.

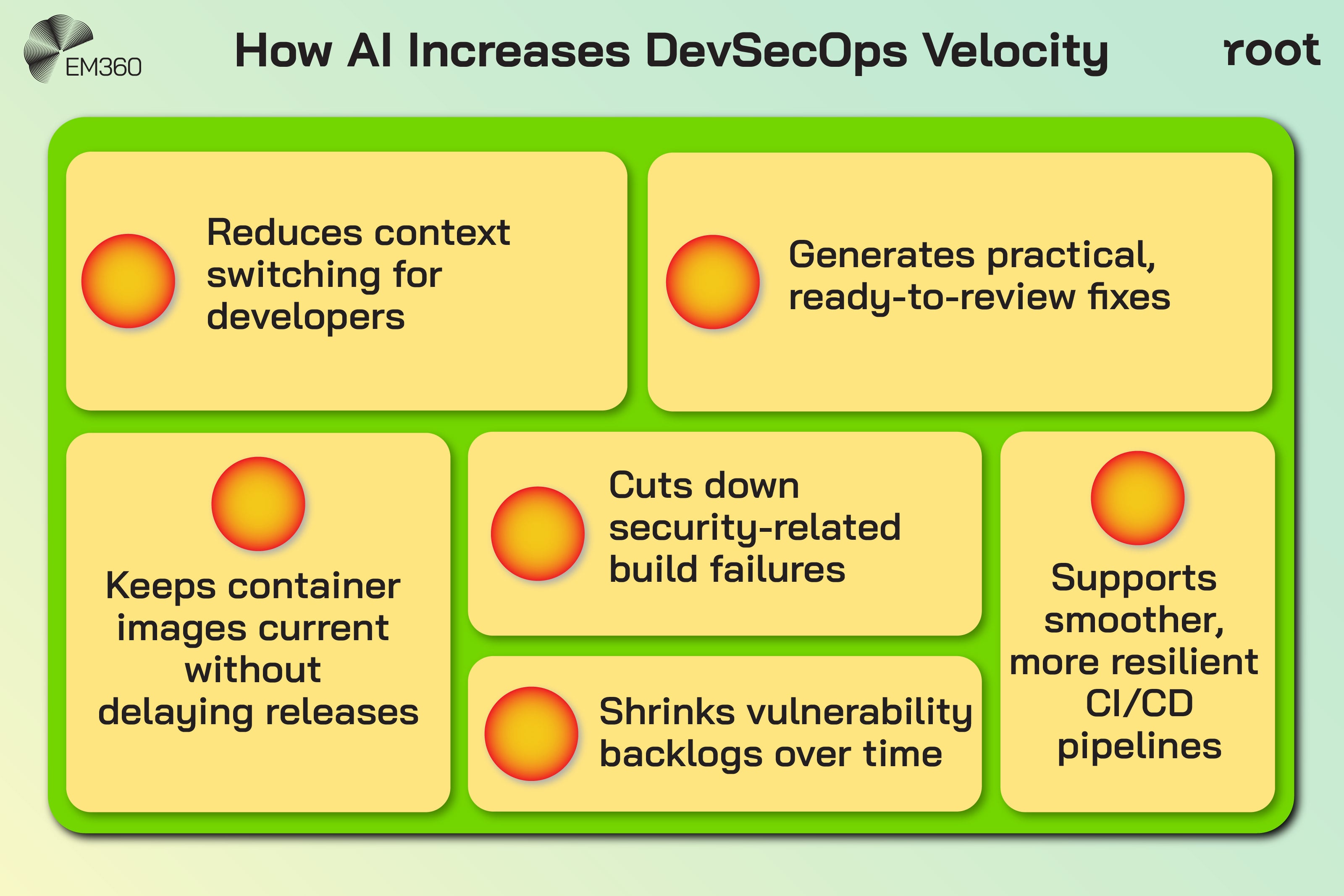

Why AI accelerates secure development lifecycles

When AI takes on the heavy lifting of spotting the right fixes and shaping them into something usable, teams get their breathing room back. Developers do not have to stop what they are doing to chase down every CVE or untangle long reports.

They review a smaller set of fixes that already make sense. Pipelines move with less friction. Security stops feeling like a blocker and starts feeling like part of the flow. The backlog stops piling up because remediation is no longer fighting for space with feature work. It runs alongside it without stealing the spotlight.

How Automation Rebalances Security, Development and Operations

Once remediation becomes consistent and fast, the pressure shifts. Developers reclaim time, security focuses on strategy, and operations see fewer last-minute surprises.

What changes for development teams

For developers, the difference shows up in the quiet moments. Less jumping between dashboards. Less scrambling to understand why a build has failed. AI-driven remediation gives them targeted changes they can approve with confidence, instead of long lists that eat into their focus. Vulnerability debt shrinks because fixes slot naturally into their workflow instead of derailing it.

What changes for security teams

Security teams shift from being the people who carry every patch on their shoulders to the people who set the rules for how fixes should happen. Their role becomes one of oversight, clarity, and quality. They decide what “good” looks like, which changes need human eyes, and how everything is tracked. It lets them spend more time on real security judgment and less time wrestling with queues.

What changes for operations and platform teams

Operations and platform teams feel the stability first. When remediation becomes consistent, there are fewer late-night patch rushes and fewer surprises waiting in production. Environments stay cleaner, drift drops, and patch levels stop being a guessing game. It becomes easier to explain what changed, when, and why, without digging through fragmented logs.

Practical Guidance for Organisations Adopting AI-Driven Remediation

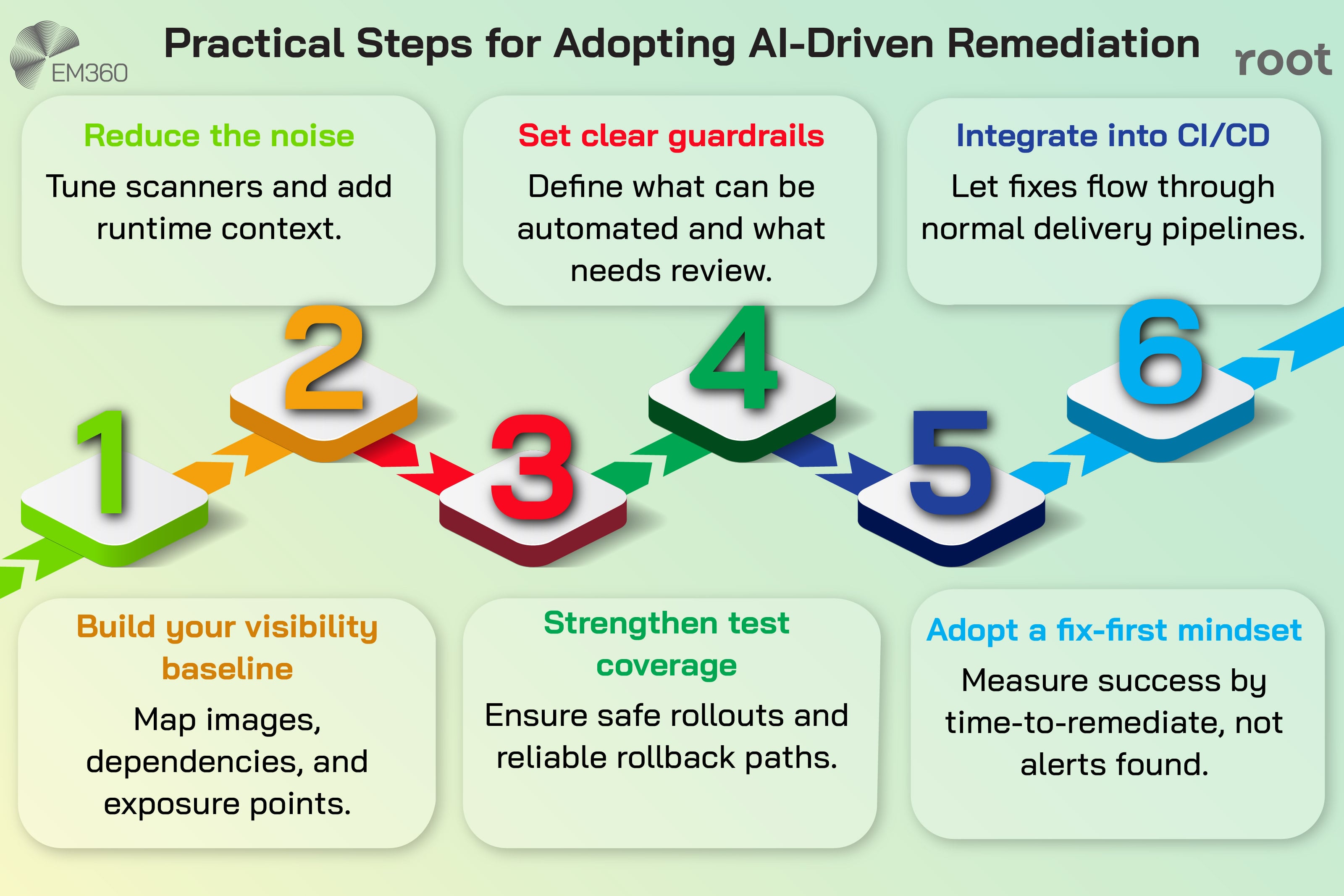

Successful adoption starts small. Reduce the noise, set clear guardrails, and let automated fixes slot naturally into existing delivery rhythms.

Start with visibility that reduces noise

AI-driven remediation works best when the signals feeding it are well curated. Before switching on automation, organisations need to tune their detection pipelines to reduce unnecessary noise and enrich findings with runtime and business context. Without that groundwork, even the smartest automation will struggle to make good decisions.

Build responsible guardrails into automated remediation

The power of automated patching needs to be balanced with disciplined controls. Human review, rollback mechanisms, robust test coverage, and clear change records are non-negotiable if AI is going to make changes to container images or infrastructure definitions.
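
As a rough illustration, those controls can be expressed as a gate that every proposed fix must pass before anything is applied. The `ProposedFix` fields and the three outcomes are assumptions made for this sketch, not the behaviour of any particular remediation platform.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProposedFix:
    target: str                      # image or infrastructure definition being changed
    tests_passed: bool               # did the test suite pass against the patched build?
    rollback_available: bool         # is there a known-good version to revert to?
    change_record_id: Optional[str]  # reference to the recorded change, if one exists
    touches_production: bool         # would this change reach production directly?


def decide(fix: ProposedFix) -> str:
    """Return 'reject', 'needs_human_review', or 'auto_apply' for a proposed fix."""
    # No fix proceeds without passing tests, a rollback path, and a change record.
    if not fix.tests_passed:
        return "reject"
    if not fix.rollback_available or not fix.change_record_id:
        return "reject"
    # Anything that reaches production gets human eyes first.
    if fix.touches_production:
        return "needs_human_review"
    return "auto_apply"


staging_fix = ProposedFix("example-image:staging", True, True, "CHG-EXAMPLE-1", False)
prod_fix = ProposedFix("example-image:prod", True, True, "CHG-EXAMPLE-2", True)
untracked_fix = ProposedFix("example-image:prod", True, True, None, True)

print(decide(staging_fix), decide(prod_fix), decide(untracked_fix))
```

The ordering matters: the hard requirements are checked before the review rule, so a fix with no change record is rejected outright rather than queued for a reviewer.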

Shift from ‘scan-and-alert’ to ‘fix-first’ culture

Technology alone will not change outcomes if the culture around it does not evolve. Organisations that get the most value from AI-driven remediation treat it as a way to remove friction from delivery, not as a new source of alerts. They measure success by reduced time-to-fix, fewer emergency patches, and improved compliance posture, rather than by the sheer volume of findings.
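
A fix-first metric can be as simple as the sketch below, which computes median time-to-fix and the share of fixes landed within a policy window. The dates are invented sample data, and the 30-day window is an assumption chosen to echo the threshold cited earlier, not a recommended policy.

```python
from datetime import date
from statistics import median

# Invented sample data: (date detected, date fixed) per vulnerability.
records = [
    (date(2025, 1, 1), date(2025, 1, 4)),
    (date(2025, 1, 5), date(2025, 1, 15)),
    (date(2025, 1, 10), date(2025, 2, 24)),
    (date(2025, 2, 1), date(2025, 2, 8)),
]


def days_to_fix(detected: date, fixed: date) -> int:
    """Whole days between detection and fix."""
    return (fixed - detected).days


def median_time_to_fix(records) -> float:
    """Median days-to-fix across all records."""
    return median(days_to_fix(d, f) for d, f in records)


def share_within_policy(records, policy_days: int) -> float:
    """Fraction of fixes that landed within the policy window."""
    within = sum(1 for d, f in records if days_to_fix(d, f) <= policy_days)
    return within / len(records)


print(median_time_to_fix(records))
print(share_within_policy(records, policy_days=30))
```

The median is used rather than the mean so that one long-running fix does not mask an otherwise healthy trend.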

Final Thoughts: Remediation Becomes Scalable When Context Guides Automation

The real barrier in container security has never been how fast we uncover problems. It has always been the strain of fixing them at the speed modern environments demand. Containers, microservices, and sprawling supply chains amplified that gap, turning remediation into a structural challenge rather than a process flaw.

Context and automation change the equation. Context separates signal from noise. AI-driven remediation turns the right signals into action. Together, they give organisations a realistic path to keeping container images current, teams unblocked, and risk under control.

For leaders shaping their next phase of DevSecOps maturity, this is the moment to rethink how remediation fits into delivery. Root is already working at the frontier of this shift, and EM360Tech’s Security Strategist series continues to highlight why these capabilities matter for the modern enterprise. Organisations that take this step now will set the pace for secure, sustainable software delivery in the cloud native era.
