For a while it felt like the AI boom could only move in one direction. Generative AI was on every keynote slide, every quarterly call, every dinner conversation. The story was simple: infinite growth, unstoppable disruption, a future where anything that could be automated would be.

The AI detractors were out there, sounding every alarm bell they could get their hands on, but they mostly went unnoticed, or were simply drowned out by the sheer number of AI supporters who were (and are) fully invested in the AI revolution. Lock, stock, barrel and shiny AI-generated, fully custom, pearl inlay grips.

But in the last little while we’ve started seeing different headlines. AI slowdown. Questions about an AI bubble. Stories about big AI investment plans sitting next to quieter pieces on energy use, thin margins and delayed projects. People who’d rushed to try the latest model admitting that they’re using it less than they thought they would.

Inside enterprises, AI adoption reports are starting to sound more cautious. Outside, the wider AI market suddenly looks less like a straight line up and more like something wobbling on its feet.

The signals behind this shift are real. Some are about money. Some are about trust. Some are about the simple reality that it’s easier to promise transformation than to deliver it.

None of them, on its own, tells you that an AI winter has arrived. Together, they do tell you something important about where the story of AI is right now, and why it no longer feels the way it did a year ago.

Why the Mood Around AI Has Shifted

You can feel the temperature change before you see the numbers. For a long time the story of AI was all peak and no valley. Every product launch felt like a revolution, every demo looked like magic, and anyone who questioned the pace was treated as if they simply didn't understand the future. The AI hype cycle felt stuck at permanent peak excitement.

Now the mood is different. The headlines aren't just about breakthrough models and jaw-dropping capabilities. They sit next to pieces on energy use, rising costs, legal fights and missed expectations.

One day your feed tells you AI will rewrite every industry. The next day you see deep cuts to AI teams, cautious guidance on earnings calls, or someone explaining why their big AI project is on hold. The result is a kind of whiplash. Public perception of AI is no longer a straight line of optimism. It's a flicker between hope, fatigue and doubt.

You can see it on the ground as well. Inside companies, leaders who raced to push pilots into every corner of the business are pausing to ask harder questions.

- Where’s the actual lift in revenue or productivity?

- What’s safe to automate?

- Which experiments are burning budget without changing anything material?

Outside those boardrooms, people who were promised instant transformation are noticing that their daily work looks mostly the same, only now with a few tools that sometimes help and sometimes get in the way. AI expectations are starting to meet real workflows, and the gap between the two is shaping how people feel.

There is also the human experience of the tools themselves. Many people tried a generative AI chatbot once or twice and felt impressed, then ran into answers that were wrong or strangely confident about nonsense. Some kept going and learned how to get real value out of it. Others quietly stepped back.

Developers who embraced AI coding assistants are finding that they save time in some places and create new review overhead in others. All of this feeds into AI sentiment, which is now more complicated than simple excitement or simple fear.

Underneath, the wider technology cycle is doing what it always does. New capabilities arrive, stories race ahead of reality, money follows the story, and only later do we find out what actually sticks. Mood shifts early, often before the data has had time to settle. People feel the friction in their own projects long before it shows up in neat charts.

They notice that something which was meant to feel effortless is suddenly starting to look like hard work. That's the space we are in right now. The story of AI has moved from pure promise to something more cautious, more mixed, and more honest. To understand why, you have to look at the specific signals people are pointing to when they say the AI boom is over.

The Signals Everyone Is Pointing To

When people say the AI boom is over, they aren't speaking in general terms. They are pointing at specific earnings calls, survey data, funding commitments and legal fights. They are quoting numbers that, at first glance, look like clear warning signs.

Plateauing AI server sales. High adoption with thin returns. Workers who try generative AI once and then drop it. Investors quietly selling out of the biggest AI names. Lawsuits and new rules landing faster than some teams can keep up.

Put together, these signals form the evidence file for the “AI winter” story.

Slower growth and lumpy AI infrastructure demand

The first cluster of evidence comes from AI infrastructure. For two years, it looked like anything related to AI servers or accelerators could only go up. Now the numbers are more uneven.

Hewlett Packard Enterprise is one of the most quoted examples. In its latest guidance, HPE reports weaker revenue tied directly to AI server sales being pushed back. Its finance chief describes AI server demand as “lumpy” and says customers are entering an “AI digestion phase,” stretching out deliveries instead of racing to add more capacity.

That translates into a mid-single-digit decline in AI server revenue for the recent quarter and a softer forecast for the next one. For critics, this looks like AI infrastructure demand starting to roll over rather than powering ahead forever.

Nvidia’s highly publicised “100 billion dollar” OpenAI arrangement is another favourite data point. On paper, it sounds like a done deal. In reality, it's still only a letter of intent for around 10 gigawatts of Nvidia systems, not a binding contract. In December, Nvidia’s CFO confirms that the deal isn't final and may never be completed on those terms.

That clarification gets read as a sign that even the most aggressive AI infrastructure plans are now under more pressure and scrutiny than the headlines suggest. At the same time, you have suppliers further up the chain reporting the opposite.

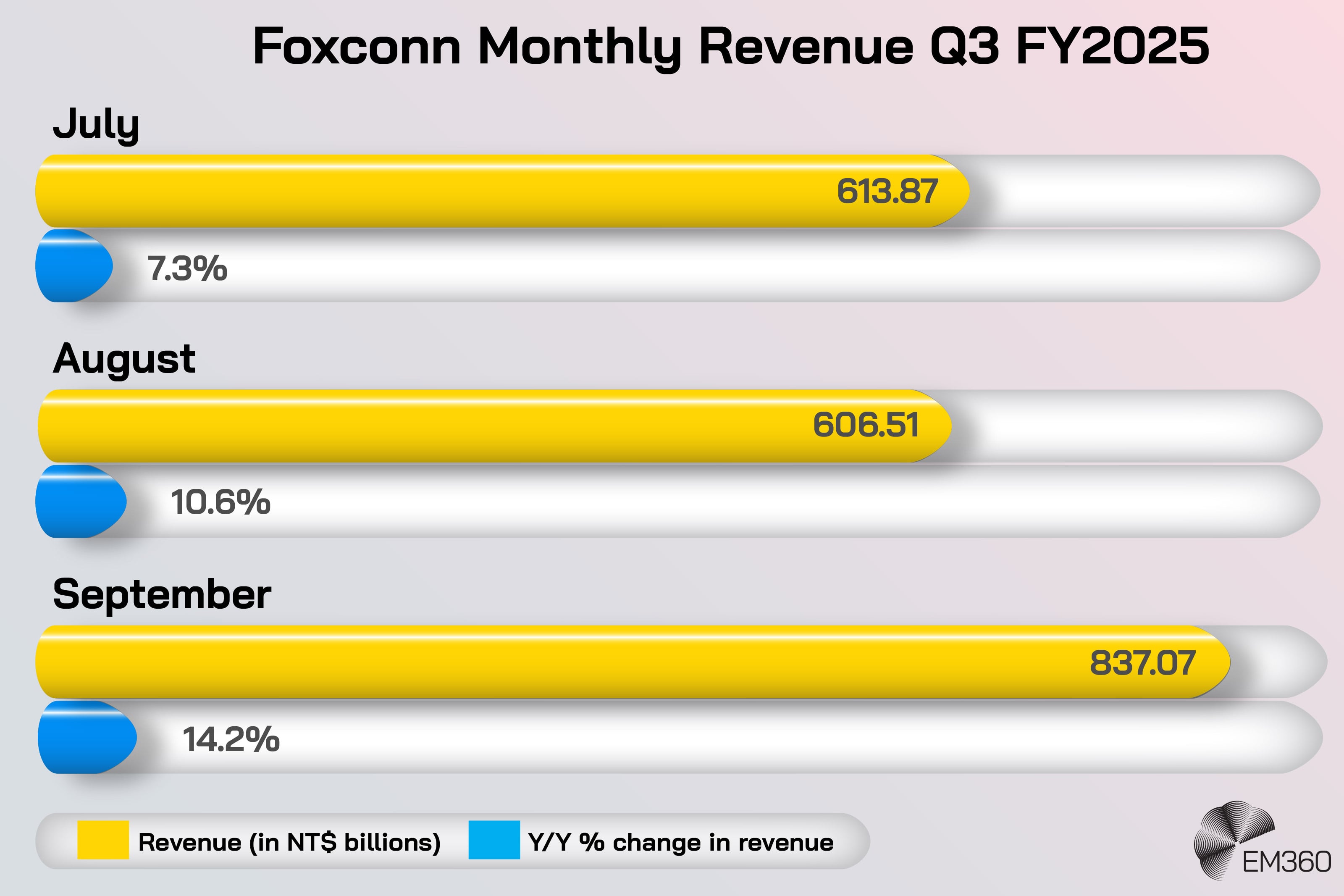

Foxconn, the world’s largest contract manufacturer, says its cloud and networking revenue has jumped by more than a quarter year on year, largely because of AI server demand. Its data centre business is now growing faster than its smartphone work, and the company is investing hundreds of millions of dollars into new factories to keep up.

This mix of cautious guidance from integrators like HPE, unfinished mega deals at the platform layer, and strong growth at manufacturers like Foxconn is the backdrop for the “AI infrastructure is cooling” argument. The story isn't that demand has vanished. It's that the easy, straight line up is gone, and the pattern now looks erratic enough to make people nervous.

The adoption–value gap inside organisations

The second set of signals lives inside companies. Here, the most cited numbers come from McKinsey and a cluster of executive surveys that track AI adoption and return on investment.

McKinsey’s 2025 State of AI survey finds that around 88 per cent of organisations have deployed AI in at least one business function, which makes it sound as if enterprise AI is already mainstream.

Look a little closer and the story changes. Most of those deployments are still stuck at pilot or limited scale. Only about one third of firms say they have scaled AI into multiple parts of the business. Roughly one per cent describe their deployment as truly “mature.” The majority have not rolled AI out across the organisation in a way that meaningfully changes how they operate.

The value side is even more stark. Across the same McKinsey data, only 39 per cent of companies report any material impact on earnings from AI so far, usually less than five per cent of total profit. That leaves over 60 per cent of firms who have invested in AI but seen no meaningful lift in enterprise-level profit at all.

A separate Deloitte-HKU C-Suite Survey adds that 45 per cent of executives say AI’s ROI has been “below expectations,” while just 10 per cent say it's exceeded them. This feeds into a wider narrative about wasted spend. A widely shared MIT-affiliated study, summarising 300 enterprise generative AI deployments, finds that about 95 per cent of pilots fail to deliver measurable profit-and-loss impact.

Only the top five per cent of projects show rapid revenue acceleration. The rest either stall at proof-of-concept or produce “little to no measurable impact on P&L.”

Those are the numbers people reach for when they say there is an adoption–value gap. On paper, almost everyone is “doing AI.” On the income statement, most still cannot point to returns that justify the level of noise, or the level of spend.
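The size of that gap is easier to see when the quoted figures are put side by side. The following is a minimal Python sketch that uses only the percentages cited in this section. The variable names are illustrative, and because the figures come from different surveys with different samples, the result is indicative rather than rigorous.

```python
# Percentages as quoted in this section (not independently verified here).
adoption_pct = 88          # McKinsey: AI deployed in at least one function
earnings_impact_pct = 39   # McKinsey: any material impact on earnings
pilots_no_pnl_pct = 95     # MIT-affiliated study: pilots with no measurable P&L impact

# Share of firms reporting no material earnings impact.
no_impact_pct = 100 - earnings_impact_pct

# Adoption-value gap: share "doing AI" minus share seeing an earnings impact.
adoption_value_gap = adoption_pct - earnings_impact_pct

# Share of pilots that do show measurable P&L impact.
pilots_with_pnl_pct = 100 - pilots_no_pnl_pct

print(f"No material earnings impact: {no_impact_pct}%")
print(f"Adoption-value gap: {adoption_value_gap} percentage points")
print(f"Pilots with P&L impact: {pilots_with_pnl_pct}%")
```

On these numbers, roughly half of all surveyed organisations sit in the space between having deployed AI and seeing it on the income statement.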

Trust, accuracy and workflow friction

The third cluster shows up in how people actually use AI tools day to day, and how much they trust them. Here, the data comes from workforce and developer surveys.

PwC’s Global Workforce Hopes and Fears 2025 survey is one of the most quoted sources. It finds that 54 per cent of employees used some form of AI at work over the past year, but only 14 per cent use generative AI every day. Daily use rises to about 19 per cent among office workers and drops to around 5 per cent among manual workers.

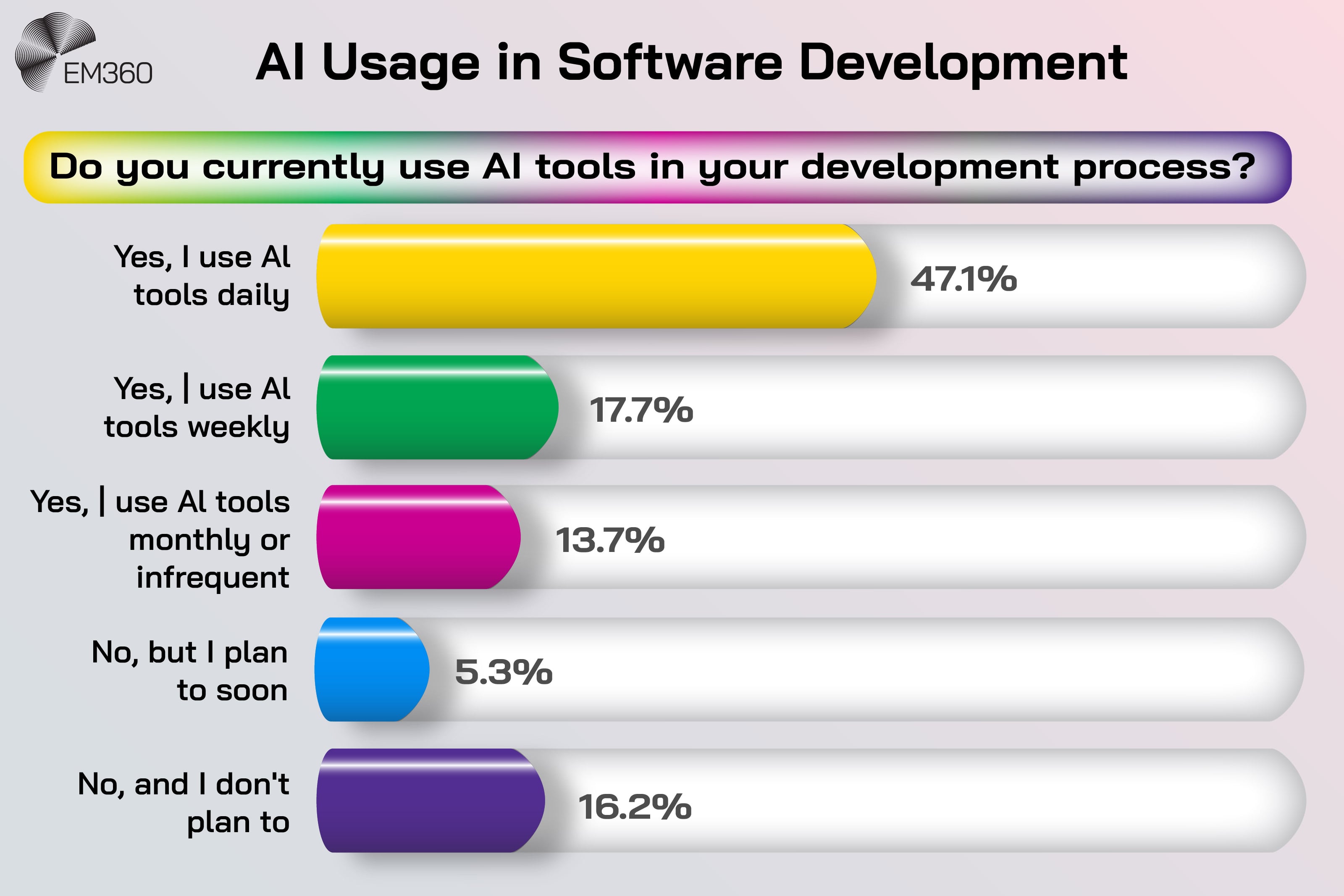

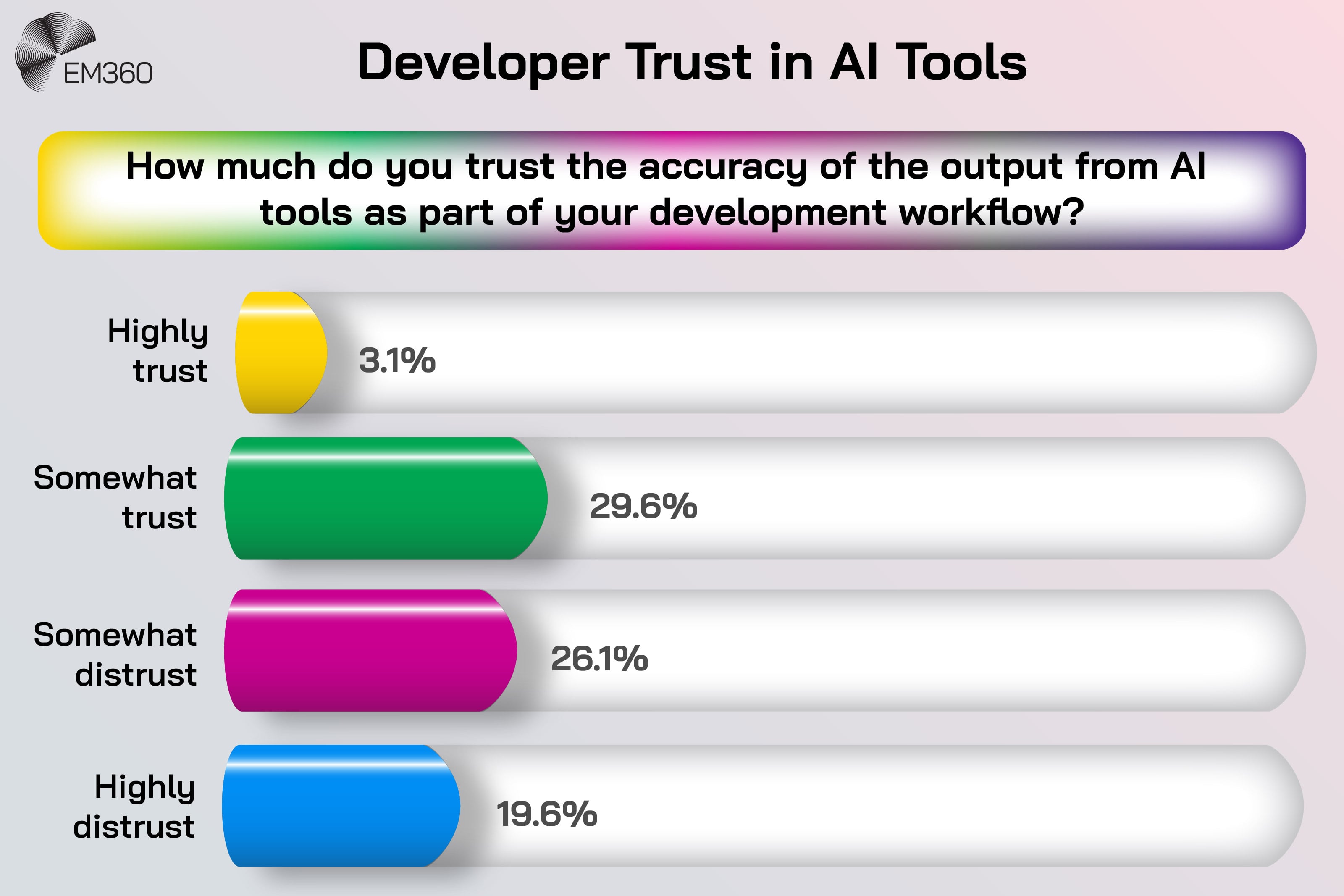

Among developers, adoption is much higher but trust is far shakier. The 2025 Stack Overflow Developer Survey reports that 84 per cent of respondents use or plan to use AI tools, and just over half of professional developers, around 51 per cent, now use them daily as part of their workflow.

At the same time, 19.6 per cent say they highly distrust the accuracy of AI outputs, while 32.7 per cent say they trust them, and only 3.1 per cent of developers say they “highly trust” AI’s answers. Many developers say AI often generates code that is “almost right but misses the mark,” which means they spend time checking and correcting it.

These numbers are used to argue that AI isn't yet the friction-free productivity engine it's often sold as. Daily usage is rising, but still limited outside specific roles. Trust is fragile, especially among the people who work closest to the systems. The lived experience is closer to “helpful but error-prone colleague” than flawless assistant.

That gap between hype and hands-on reality is another key part of the slowdown story.

Market signals and the “smart money” slowdown

The financial side of the evidence file is harder to ignore, partly because it comes with large, round numbers and big names attached.

On the language side, analysis by AlphaSense shows that mentions of an “AI bubble” in earnings calls and investor events have jumped 740 per cent in a single quarter, appearing in 42 calls between October and December 2025. Executives and analysts are no longer avoiding the phrase.

They are debating it openly, which feeds into the idea that fear is now part of the AI narrative at board level.
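A percentage jump that large says as much about the starting point as the end point. As a rough sketch, and assuming (this is an assumption, not something the source states) that the 740 per cent rise applies to the same call count that reached 42, the implied baseline can be backed out in a few lines of Python. The function name is illustrative.

```python
def baseline_from_increase(current: float, pct_increase: float) -> float:
    """Return the earlier value implied by a percentage increase.

    current = baseline * (1 + pct_increase / 100), solved for baseline.
    """
    return current / (1 + pct_increase / 100)


# Figures quoted above: 42 calls after a 740 per cent jump.
prior_calls = baseline_from_increase(current=42, pct_increase=740)
print(f"Implied calls in the prior quarter: {prior_calls:.1f}")
```

Under that assumption the earlier quarter had only about five such calls, so the headline percentage reflects a very small base as well as a real change in tone.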

On the market structure side, Reuters reports that the “Magnificent Seven” technology giants now account for around 36 per cent of the S&P 500’s total value. These same firms provide roughly three quarters of the index’s returns, about 80 per cent of its earnings growth and nearly 90 per cent of capital spending growth since the launch of ChatGPT.

That level of concentration is one reason critics say the AI market looks fragile. If AI sentiment turns, a small group of stocks could drag a much larger market with them.

Then there are the symbolic exits. Regulatory filings show that Peter Thiel’s hedge fund sold its entire Nvidia stake, roughly 537,000 shares worth around 100 million dollars at the time. SoftBank, another high-profile investor, has also sold a multi-billion-dollar Nvidia position to free up capital for other AI bets. These moves are used to tell a simple story: “smart money is leaving AI.”

Finally, research from Goldman Sachs and others adds a nuance that rarely makes it into social posts. Public AI-exposed companies are expensive, but many still have earnings that roughly support their valuations. The sharper bubble risk, they argue, sits in private AI markets, where start-up valuations often float far above fundamentals and depend on circular financing patterns.

That distinction doesn't stop the word “bubble” from spreading, but it shapes how seriously different investors treat the risk.

Legal, regulatory and societal headwinds

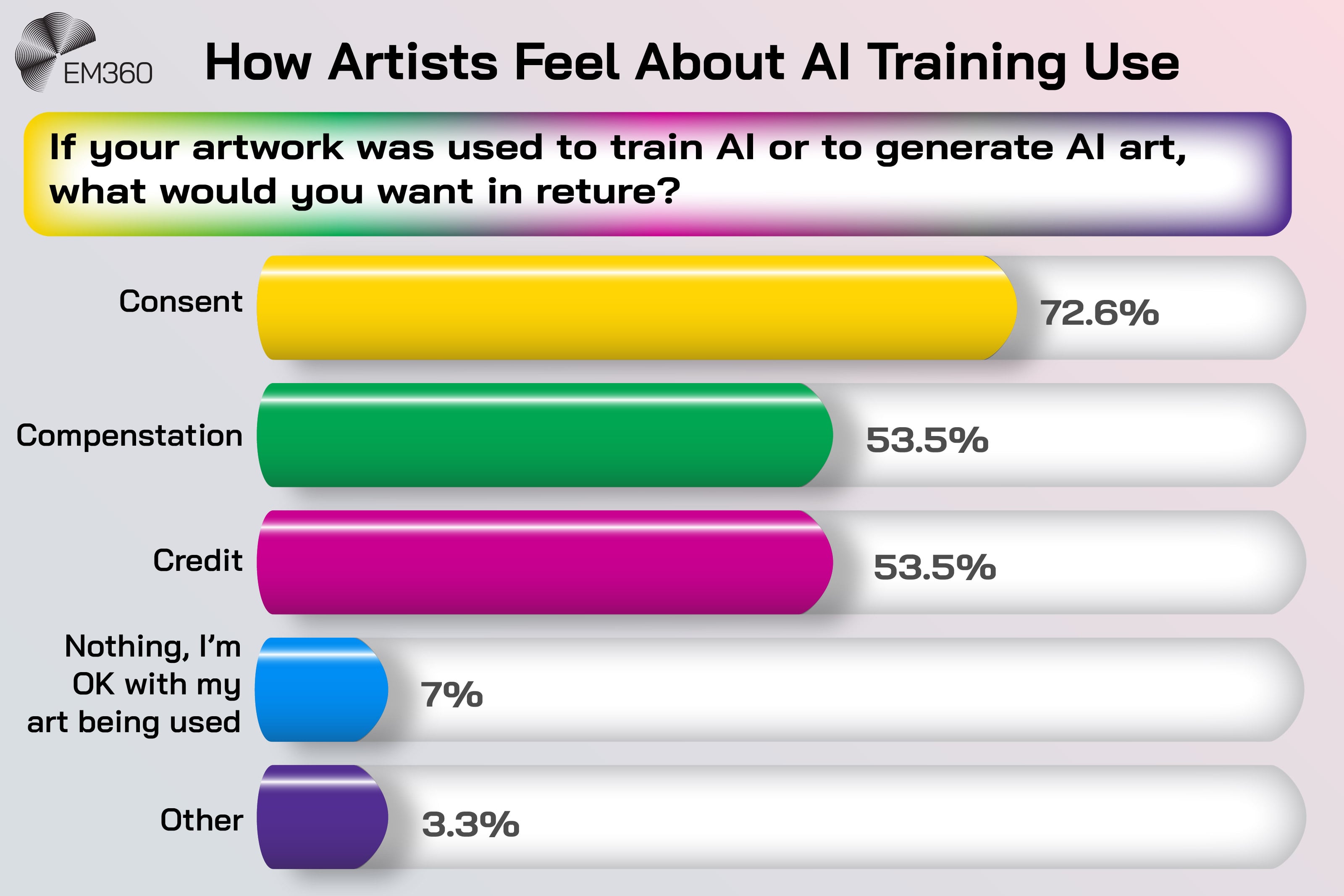

The last group of signals comes from outside the balance sheet. These are the legal, regulatory and societal pressures that make AI feel less like an open frontier and more like a contested space. On the legal front, a wave of copyright lawsuits is challenging how training data for large models is sourced and used.

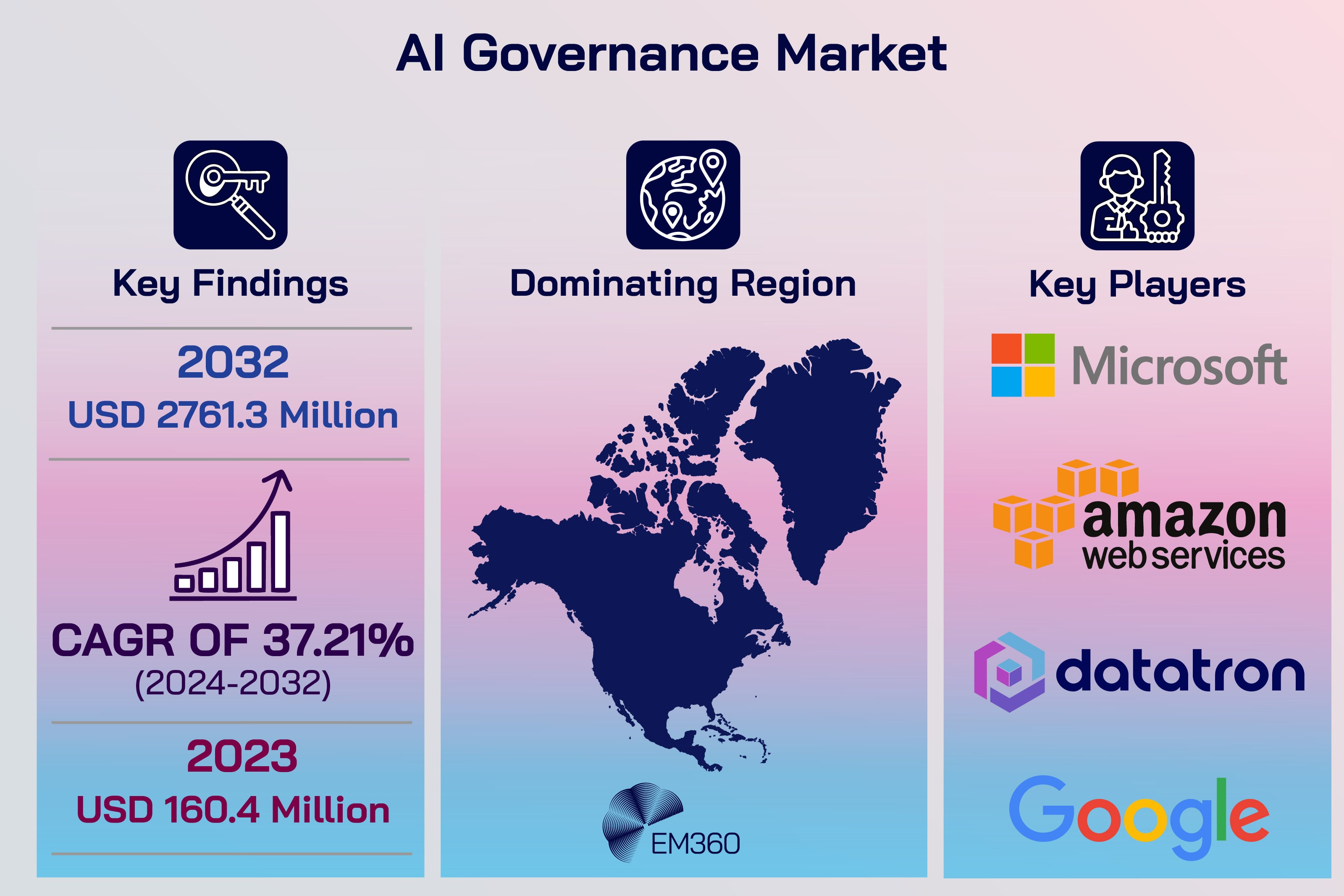

These cases focus on whether scraping public content for training counts as fair use, and what compensation, if any, rights holders should receive. At the same time, regulators in Europe are finalising the EU AI Act, which introduces risk-based rules for AI systems and stricter obligations in high-risk areas like recruitment, credit scoring and healthcare.

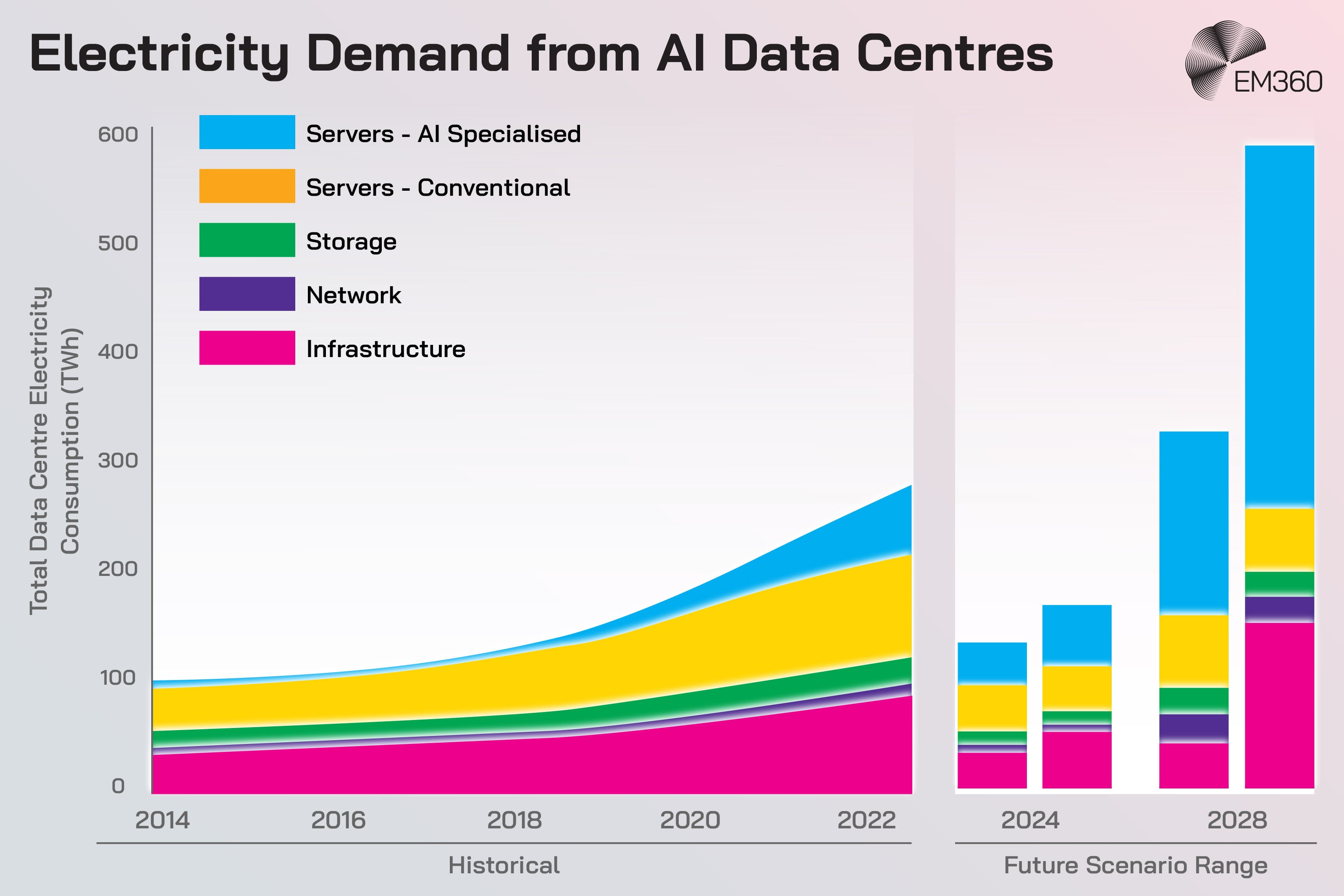

Other jurisdictions are working on their own frameworks for AI governance, AI compliance and AI safety. Behind the legal documents sit very physical concerns. Analysts and infrastructure experts are warning about the energy and water demands of large AI data centres, especially in regions where grids are already under pressure.

Some estimates suggest AI-driven workloads could become a significant share of future electricity demand if current growth continues, prompting local communities and policymakers to ask what is being traded away to support that growth. For many people, these questions land as a simple gut feeling.

If AI is so resource-hungry, and still so uneven in its benefits, is the trade-off worth it? When you add in the ongoing worry about misinformation, deepfakes and biased systems, it becomes easier to understand why public conversations about AI have shifted from uncritical excitement to something more cautious.

Taken together, these are the main signals people reach for when they claim that the AI boom is over. They are real numbers, real moves and real concerns. The harder question is what they actually show once you look beyond the headlines.

What These Signals Actually Tell Us

Once you strip away the drama, the same question keeps coming up. Are these signals proof that AI is running out of road, or are they what you would expect from any technology that grew too fast, too loudly, for too long?

The short version is that most of what people are pointing to looks less like collapse and more like a system trying to settle into something sustainable. The spending cools. The stories get less glossy. The hard work starts to show. That's uncomfortable, but it's also familiar.

This Is Not Collapse, It Is Market Normalisation

No industry gets to live on exponential curves forever. Early spikes in AI spend were never going to be the new normal. They were the catch-up phase, where everyone was racing not to be left behind.

What you're seeing now is market normalisation. After a rush of orders, customers pause to see what that capacity is actually doing for them. That's what HPE is describing when it talks about an AI digestion phase. Capex (capital expenditure) doesn't vanish. It changes shape.

Instead of "buy everything you can get", you start seeing more selective buying, more scrutiny, and more questions about payback periods. That's a normal step in the AI spending cycle, not a sign that the story is over.

This is what technology maturation usually looks like in real time. Early on, demand patterns are simple. Everyone wants the shiny new capability. After that, demand patterns get messy. Different sectors move at different speeds. Some overcommitted buyers pull back.

Others only start to lean in once the first wave has tested the ground. Infrastructure cycles also tend to lag behind narrative cycles. The story is often still in full hype mode while the people signing the cheques are already slowing down to reassess. Seen through that lens, the signals from HPE, Nvidia and Foxconn aren't contradicting one another.

They are three different points on the same curve as AI demand patterns move out of the fantasy stage and into something more like reality.

Adoption Is Not Failing, It Is Doing the Hard Part Now

The adoption numbers tell a similar story. On paper, almost everyone is using AI somewhere. In practice, very few organisations have turned that early use into deep, repeatable change. It's tempting to read that as failure. It's more accurate to recognise it as the difficult middle of AI scaling.

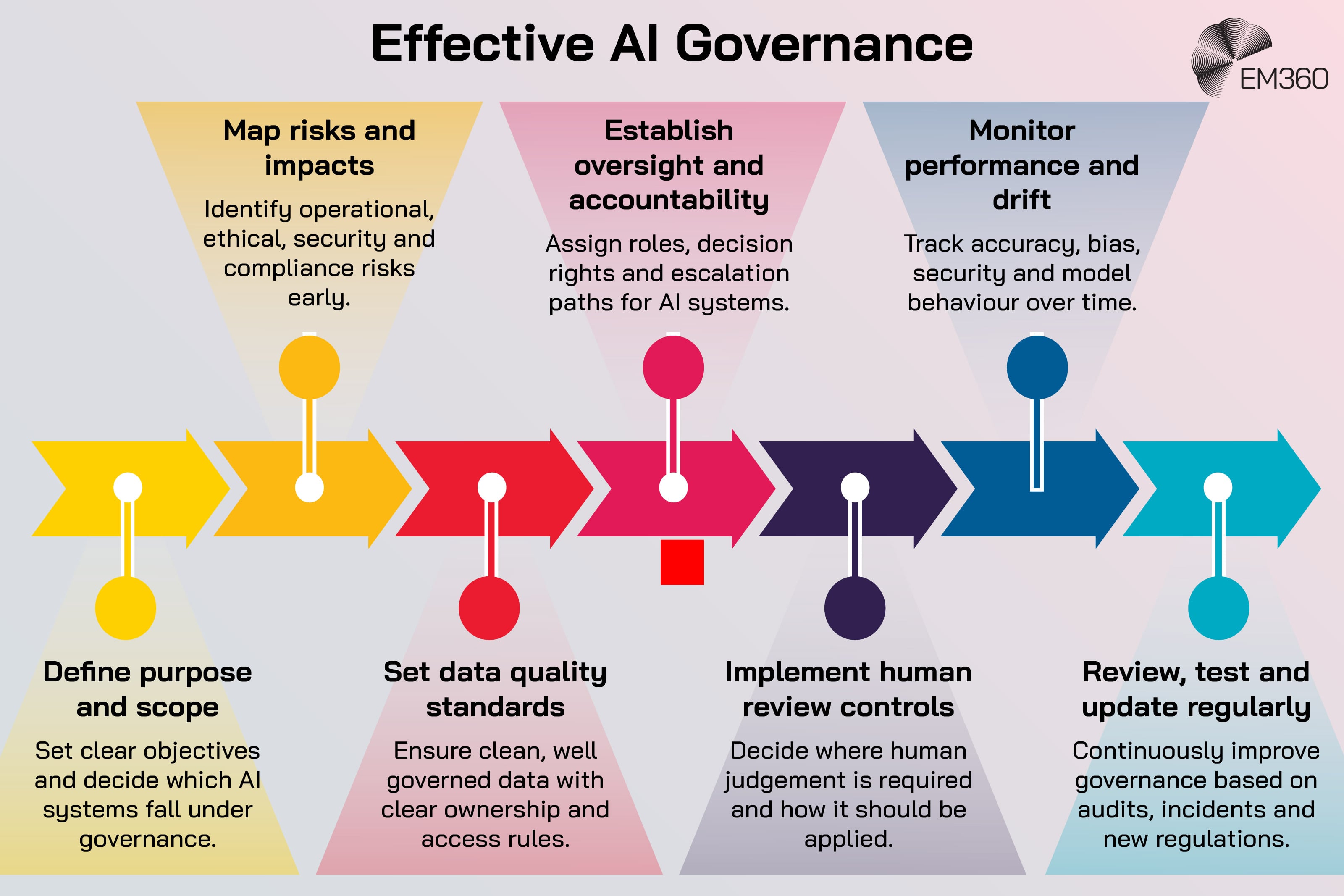

Experimenting is the easy part. You can spin up a pilot, plug a model into a narrow workflow and get an impressive demo without touching the rest of the organisation. Scaling is the work of years. It means cleaning up data, agreeing on policies, changing processes and sometimes changing incentives.

Data maturity, AI governance and basic enterprise readiness move much slower than slideware. When you look at it that way, the adoption–value gap looks less like a verdict on AI and more like a verdict on how long real AI transformation takes. Companies aren't walking away from AI.

They are trying to move from "this is interesting" to "this is reliable enough to bake into how we operate". That's a harder, slower story, and it doesn't fit neatly into a headline, but it's the one that actually matters.

Trust Problems Are Solvable Engineering Problems

The trust and accuracy issues are real. They also sit in a category that engineers are very familiar with. Systems are messy, then they get better.

Right now, model reliability is uneven. Large models can be astonishing on some tasks and strangely fragile on others. LLM accuracy isn't yet at the point where you can treat every answer as ground truth, which is why so many teams have wrapped them in human review and guardrails.

None of that is a surprise to the people building them. Hallucinations are an acknowledged behaviour of these systems, not a mysterious flaw, and there is active work on hallucination mitigation through better training data, retrieval-augmented generation and domain-specific models.

Trust builds when tools become predictable. If you know where a system is strong, where it's weak and how it will behave under pressure, you can make better decisions about how to use it. That's what quality work in AI looks like right now. It's not about chasing bigger models for their own sake. It's about steady, incremental gains in AI quality and AI refinement that make it safer to depend on.

In other words, the trust problem isn't a sign that AI doesn't work. It's a sign that the technology arrived in public before the usual smoothing and sanding had finished. The gap between expectation and behaviour is where most of the frustration lives.

Market Pullback Doesn’t Mean AI Is Done

Markets have their own logic. They don't move only on beliefs about technology. They also move on interest rates, risk appetite, regulation, and simple profit taking. You can see all of that at play in the AI-exposed part of the market right now. Yes, some investors are stepping back. Yes, you're seeing market correction language more often.

That doesn't automatically mean those investors no longer believe in AI. Funds lock in gains for many reasons. Some have mandates to rebalance when any single position grows too large. Others are rotating into earlier-stage bets where they see more upside. Those are standard forms of investment risk management, not a group vote that the AI sector's performance story is finished.

There is also the simple fact that extreme concentration invites a valuation reset. When a handful of companies carry a large share of an index and a large share of its future earnings story, any wobble in that group will echo loudly. That's part of the technology cycle. We have seen it before with other waves of tech. Prices run ahead, then they cool, and only later does it become clear which part of the drop was warranted and which part was fear.

Regulation Reflects Impact, Not Decline

The last set of signals is the easiest to misread. New rules and lawsuits feel like resistance. They can just as easily be a sign that a technology has crossed a threshold of importance.

When lawmakers invest time and political capital into AI policy, they aren't doing it because AI is irrelevant. They are doing it because these systems now shape credit decisions, hiring, policing, healthcare access and information flows. At that point, some form of AI risk management is inevitable.

The question isn't whether frameworks will arrive, but how well they will balance innovation with protection.

Good AI governance frameworks and a clear AI compliance landscape can actually support adoption over time. They set boundaries, provide shared language for risk, and make it easier for cautious organisations to move from "wait and see" to "we know what is allowed and what is not".

That doesn't make regulation easy or painless. It does mean that its presence is not, by itself, proof that AI is faltering. Often, it's proof that AI is now too embedded in critical systems to be left to informal norms.

Put simply, the signals people are using to call time on the AI boom are real, but they aren't all pointing in the direction that the loudest headlines suggest. Many of them look more like a system settling after a rush than a system breaking apart. The harder part is working out whether this feels like a hype crash, or whether it's the uncomfortable beginning of a maturity phase.

How to Understand the Difference Between a Hype Crash and a Maturity Phase

One useful way to think about what is happening with AI right now is to zoom out and look at the pattern. Not the headlines. The pattern. New general-purpose technologies almost never move in a straight line. They move through a familiar rhythm of excitement, disappointment and then slow, steady technology maturity.

First, something becomes technically possible. A handful of early adopters experiment with it. Then the story takes over. The story races far ahead of what the technology can actually do. This is the peak of the hype cycle, where every problem looks like it will be solved, every industry looks like it's about to be rewritten, and every objection gets waved away as a lack of vision. That's the phase AI has just lived through.

The next part of the AI lifecycle is less glamorous. Reality catches up. Limits appear. Costs become visible. The easy wins are already taken, and what is left is the long work of figuring out where the technology genuinely fits. It feels, from the inside, like a crash. From a distance, it looks more like innovation adoption doing what it always does.

Early promises get stress tested against real data, real workflows and real people. Some ideas don't survive that contact. The ones that do become quieter and more boring, but they also become much more useful.

That's usually where the real value starts to accumulate. The next phase is slower, but it's also more honest. Enterprises stop trying to sprinkle AI over everything and start building it into specific parts of the business where there is a clear reason for it to be there. Regulators stop arguing about abstract risks and start writing detailed rules.

Workers stop treating AI as a novelty and start treating it as one tool among many. The story cools at the same time as the foundations deepen. That's what a long-term trajectory looks like when a technology is moving from novelty towards infrastructure.

So when you see AI spending growth flatten, or hear that a big project is delayed, it can feel like proof that the whole thing is fading. It's often a sign that the work is shifting from fast, visible moves to slower, structural ones. Slowing down can be a form of deepening, not disappearing. For the people living through it, that shift shows up in very human ways, long before it shows up in neat graphs.

What This Moment Really Means for People

It's easy to talk about AI in terms of markets and models and forget that most of the impact lands on people first. Before it shows up on a balance sheet, it shows up as a feeling. Excitement. Pressure. Fear. Relief. Sometimes all at once.

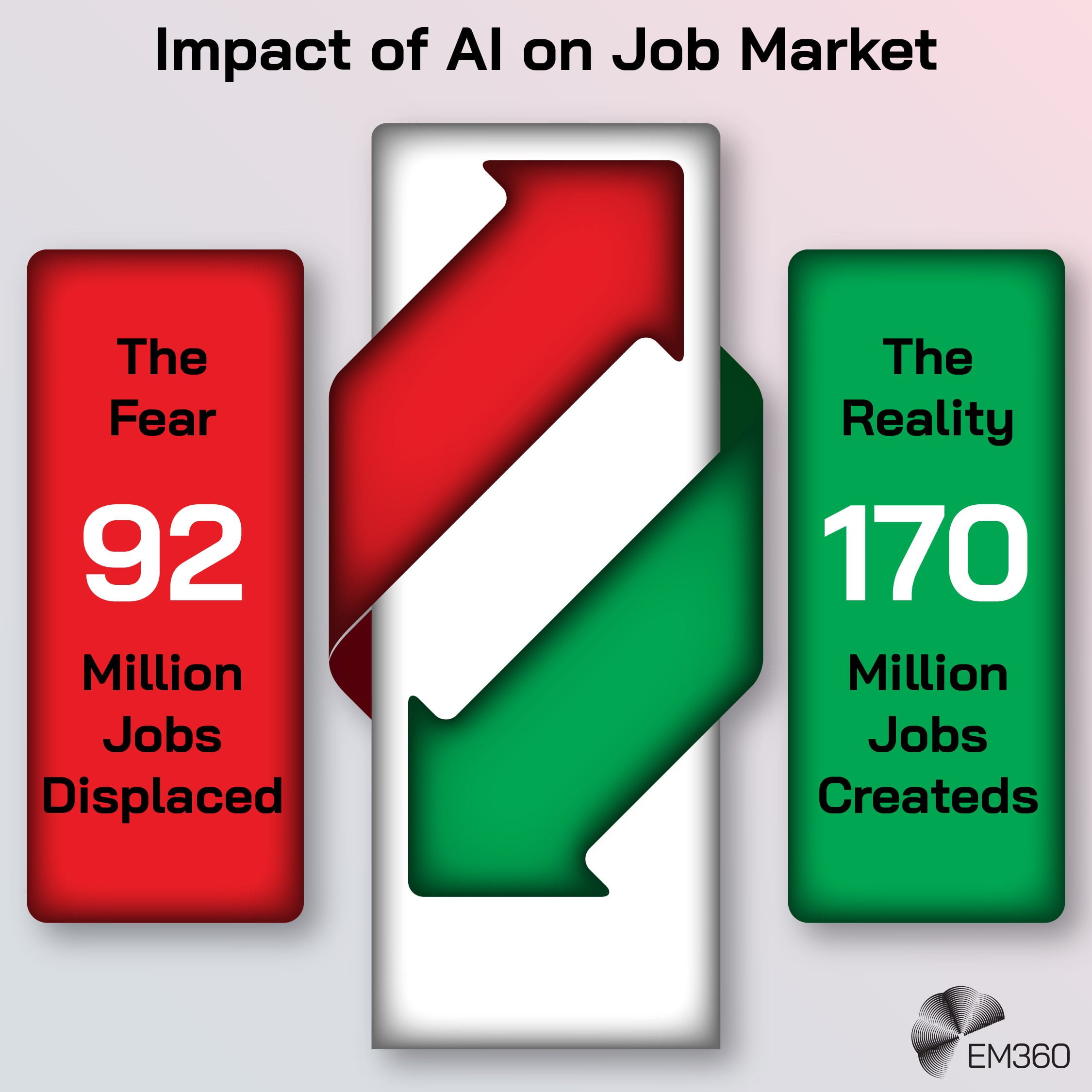

For workers, the story is still tangled. A lot of the headlines about AI’s impact on jobs have been loud and absolute. Everything will be automated. Nothing is safe. At the same time, many people are sitting at their desks trying to decide whether this new tool is actually helping them, or quietly trying to replace them.

They’re told that AI in the workplace will take away the boring parts of their jobs, but the boring parts are often where they feel most competent. When a chatbot writes a decent first draft faster than they can, it raises a very human question. If the machine can do that, what am I still here for?

Others are having the opposite experience. They are discovering that AI doesn't magically know their domain, their customers or their constraints. It needs to be guided. They are learning that the value of their work isn't only in the output, but in the judgment that shapes it.

For them, the rise of AI throws a different light on AI skills. The most important skills aren't just prompt tricks. They are the ability to decide when to use the tool, when to ignore it, and how to explain that choice.

For the people building AI, the mood is complicated in a different way. The last few years have been about pushing the boundaries of what is technically possible. Now, the reality of constraints is much clearer. Energy bills. Latency. Data access. Legal risk. The builders who stay in the field are the ones who can live with those constraints and still find ways to move forward.

They are discovering that a lot of the real work of AI is less about raw intelligence and more about fit. Where does this system make life meaningfully better for the person on the other side of the screen? Where does it just make things more fragile?

Leaders are also moving into a different posture. At the height of the hype, it was almost a reputational risk not to have an AI story. Now they're being asked what their experiments have actually delivered. They are being pushed to show where automation has freed people to do better work, and where it's simply shifted tasks around.

The conversation is moving from “what are you trying” to “what are you keeping”. That pivot from experimentation to accountability is one of the clearest signs that AI is maturing.

Outside the walls of any single company, communities are weighing the trade-offs. They are asking what heavy AI workloads mean for local power grids and water supplies. Parents and teachers are asking what constant access to text generators means for learning and attention.

Creators are asking what it means when their work can be scraped, remixed and reissued without consent. All of this feeds into public concern about AI. Not because people are anti-technology by default, but because they're trying to work out whether the benefits are being shared fairly, and who picks up the cost when things go wrong.

At the centre of all of this is human–AI interaction. Not as a design slogan, but as a daily reality. People are learning what it feels like to delegate part of their thinking to a system that doesn't experience context, exhaustion, shame or pride. They’re learning what it does to trust when a tool is usually right and occasionally wrong in ways that are hard to predict.

They’re learning what it does to confidence when some of their skills are amplified and others feel sidelined.

That's why the stakes feel so personal right now. AI isn't just another background system ticking away in a data centre. It's something that's starting to sit in meetings, inboxes, classrooms and creative spaces. The question isn't only what the technology can do next, but what kind of future people want to build around it.

Once you see that, the debate about whether the AI boom is over becomes less about charts and more about direction. The more useful question is where AI goes from here, and what it would look like for this next phase to be slower, more deliberate and genuinely worth it.

Where AI Goes From Here

If you set the noise aside for a moment and look at the pressure points, a few things stand out quite clearly. None of them give you a neat timeline for the future of AI. They do give you a sense of the shape that AI evolution is likely to take from here.

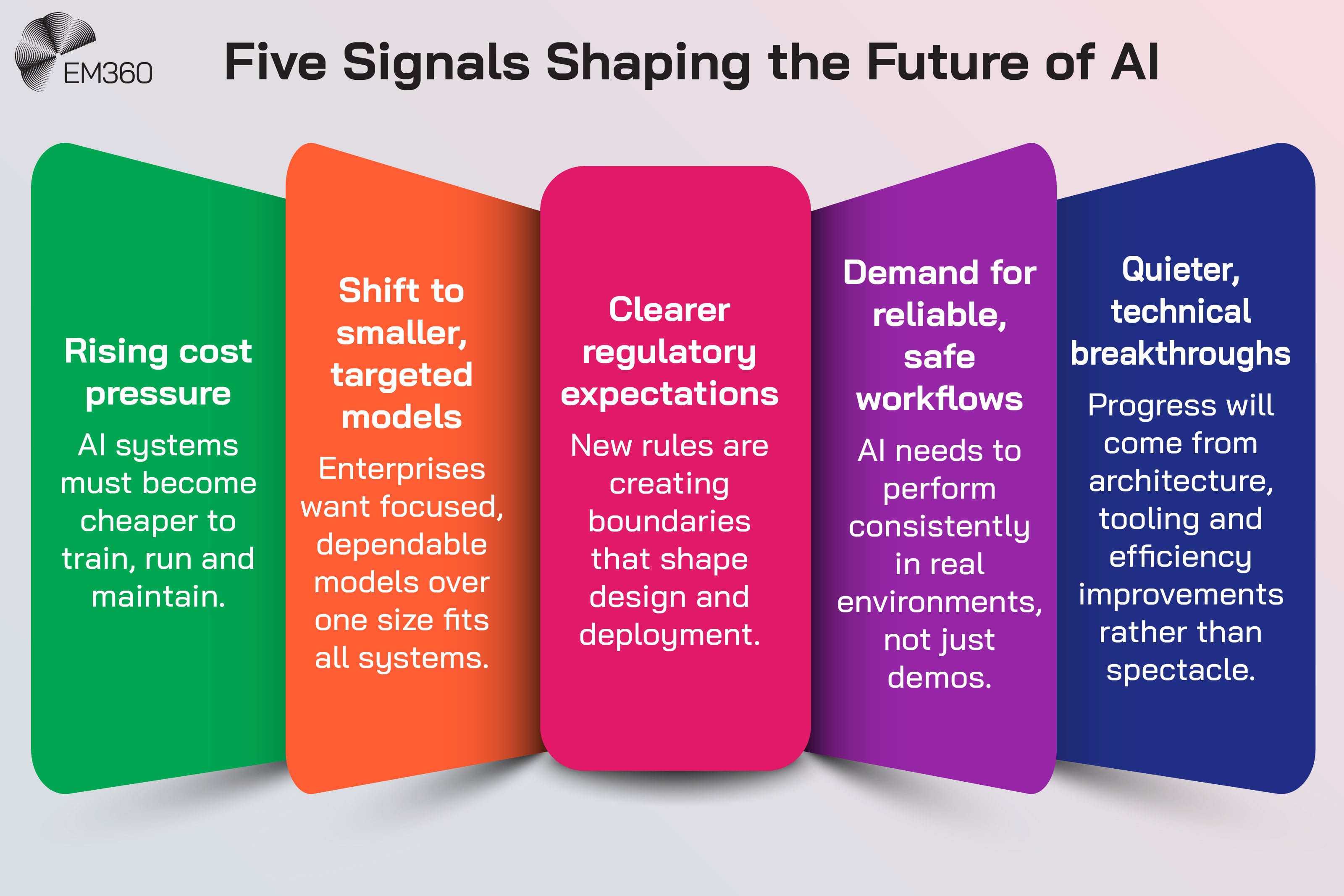

The first is cost. Training and running large models is expensive. Not just in terms of hardware, but in terms of energy, cooling, networking and people who know how to operate them safely. As those bills arrive, they start to quietly edit the AI roadmap.

You can already see a shift away from “make the biggest model possible” towards “make the smallest model that gets the job done”. That shows up in work on model compression, distillation and retrieval-based systems that keep the heavy lifting in one place and push lighter workloads to the edge.

Cost pressure isn't the end of ambition. It's the reason ambition has to show its working.

The second is focus. Enterprises are starting to realise that they don't need a single system that can do everything in theory. They need systems that can do specific, valuable things in practice, every day, without drama. That's pushing enterprise AI strategy towards narrower, more reliable setups.

A model that's tuned deeply on one domain, paired with clean data and clear guardrails, is often more useful than a general model that knows a little about everything and behaves unpredictably under stress. The story shifts from “how smart is this model” to “how often does this workflow succeed”.
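One way to make “how often does this workflow succeed” concrete is to log each run and count the ones that finished without anyone having to step in. The Python sketch below is a hypothetical illustration rather than a standard metric: the `WorkflowRun` record and its two fields are assumptions made for the example, and a real team would choose its own definition of success.

```python
from dataclasses import dataclass


@dataclass
class WorkflowRun:
    """One logged execution of an AI-assisted workflow (hypothetical schema)."""
    completed: bool         # the workflow finished without error
    needed_human_fix: bool  # a person had to correct the output


def success_rate(runs: list[WorkflowRun]) -> float:
    """Share of runs that completed and needed no human correction."""
    if not runs:
        return 0.0
    clean = sum(1 for r in runs if r.completed and not r.needed_human_fix)
    return clean / len(runs)


# Four illustrative runs: two clean, one corrected by a person, one failed.
runs = [
    WorkflowRun(completed=True, needed_human_fix=False),
    WorkflowRun(completed=True, needed_human_fix=True),
    WorkflowRun(completed=False, needed_human_fix=False),
    WorkflowRun(completed=True, needed_human_fix=False),
]
rate = success_rate(runs)
print(f"Workflow success rate: {rate:.0%}")
```

Counting corrected runs as failures is a deliberately strict choice here. It keeps the review overhead visible instead of letting it hide behind a high completion figure.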

The third is rules. Regulation is moving from headlines to implementation, and that will change how teams design and deploy AI. Clearer requirements around data protection, transparency and risk controls will create friction in the short term. Over time, they can create a safer and more predictable environment for adoption.

When organisations know what is required of them, they can plan for it. That's where AI innovation trends and AI governance meet. Some experiments will not survive that contact. Others will become stronger because they're built on foundations that can stand up to audit, not just to a demo.

The fourth is substance. The winners in this next phase will almost certainly be the people and teams who solve real problems, not the ones who promise the most dramatic disruption. That means tools that reduce handling time in a contact centre without destroying customer trust.

Models that help clinicians summarise records accurately without hiding uncertainty. Systems that help engineers understand and improve complex infrastructure rather than guess at it. In other words, solutions where AI is present, but the value is felt in the outcome, not in the branding.

The last piece is tone. The next meaningful breakthroughs are likely to look quieter from the outside. They will be architectural shifts that reduce latency and cost. Tooling that makes evaluation and monitoring less painful. Interfaces that make it easier for non-specialists to understand what a system is doing and why.

None of that will generate the same level of public excitement as a brand new chatbot, but it's exactly the kind of work that deepens the future of AI and extends its useful life. Big leaps forward often look, from the inside, like a series of small, careful steps.

Put together, this is what a more mature long-term trajectory for AI looks like. Less spectacle. More plumbing. Fewer promises. Better questions. The question for leaders, builders and everyday users isn't whether AI will vanish. It's how to live well with a technology that's gradually moving from headline act to background infrastructure.

Final Thoughts: The AI Boom Is Not Ending, It Is Growing Up

Step back from the panic, and the picture becomes simpler. The AI boom isn't crashing to a halt. It's shedding some of its illusions. The signals people are watching don’t point to an empty future. They point to a future where AI maturity matters more than AI novelty.

There’s no doubt that living through that shift is going to be uncomfortable. Some early bets won’t pay off. Progress is not linear, and technology evolution often looks chaotic at close range. We are watching a powerful technology learn to live in public. Mistakes are visible. Course corrections are visible. So is the learning that comes out of both.

For anyone trying to make decisions in this space, the most useful stance is calm curiosity. Pay attention to where AI is quietly making work better, not just where it is loudly promising change. Look for enterprise AI insight that connects numbers to lived experience, not just valuation charts.

Ask whether the systems on the table make people safer, more capable and more trusted, or whether they simply shift risk around. If you want help separating noise from signal, explore the latest expert analysis, podcasts and guides on EM360Tech and keep your AI strategy anchored in reality, not headlines.
