OpenAI has announced the launch of GPT-4o, a new flagship AI model and voice assistant that’s set to give other AI models and voice assistants a run for their money.

The model, announced on Monday as part of OpenAI’s spring update, shows off a new level of real-time conversational fluency, including the ability to understand context and shift gears when people talk over it, and a host of new reasoning capabilities that make it more human-like than any other model to date.

OpenAI showed off GPT-4o's capabilities in a 15-minute live-streamed demo, with OpenAI leaders on stage in front of an in-person audience of company employees.

In one demo, the model spoke in a sprightly female voice, responding to queries far faster than previous generations of voice bots and displaying nuanced, human-like language and emotion.

It was able to analyse the video chat in real time: when the user asked where it thought he was, it described the room and correctly inferred from the setup that he was probably recording a video.

GPT-4o even noticed that the user was wearing a hoodie with an OpenAI logo, and correctly concluded that the video probably had something to do with OpenAI. It did all of this in real time, as if it were having a normal conversation with the user.

In another demo, the company showed the latest ChatGPT reading aloud a story it had drafted, both with increasing levels of dramatic excitement and, when asked, in a robotic voice.

That's a huge improvement over other voice assistants released in the past few years, which have been criticized for sounding too robotic and being unable to convey human emotion.

At another point in the demo, OpenAI’s head of frontiers research Mark Chen asked ChatGPT for tips to calm his nerves, and the chatbot suggested deep breaths.

When Chen responded by hyperventilating, ChatGPT replied, "Whoa, slow down a little bit there Mark – you're not a vacuum cleaner!", showing a kind of playful humour that earlier AI voice assistants have rarely managed.

What is GPT-4o?

GPT-4o is a new multimodal large language model (LLM) released by OpenAI. It's faster and more capable than its predecessor, GPT-4, and can process text, voice, and images. It can also understand and respond to spoken language in real time, at close to human conversational speed, opening up the potential for real-time translation and other applications.

GPT-4o can respond to audio prompts much faster than previous models, with a response time close to that of a human, and it's better at understanding and discussing images. For example, you can share an image and GPT-4o can discuss it with you.
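
For developers, sharing an image works the same way through OpenAI's Chat Completions API: a single user message can mix text parts and image parts. The Python sketch below only builds that request body and sends nothing. The helper name `build_image_question` and the placeholder image bytes are hypothetical; the message layout follows OpenAI's documented `image_url` content-part format.

```python
import base64
import json


def build_image_question(question: str, image_bytes: bytes, mime_type: str = "image/png") -> list[dict]:
    """Return a Chat Completions `messages` list pairing a text question with an inline image.

    Hypothetical helper for illustration; it builds the payload but does not call the API.
    """
    # Images can be sent inline as a base64 data URL instead of a public link.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }
    ]


if __name__ == "__main__":
    # Placeholder bytes stand in for a real image file read from disk.
    messages = build_image_question("What do you see in this picture?", b"placeholder image bytes")
    print(json.dumps(messages, indent=2))
```

The returned list would be passed as the `messages` argument of a Chat Completions request with `model="gpt-4o"`; a public image URL can be used in place of the base64 data URL.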

OpenAI describes GPT-4o as a step towards much more natural human-computer interaction. It accepts any combination of text, audio, and images as input and can generate any combination of text, audio, and image outputs.

It can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.

GPT-4o still isn’t perfect, though. At one point during the demo, it mistook the smiling man for a wooden surface, and it started to solve an equation that it hadn’t yet been shown. There’s still some way to go before the glitches and hallucinations which make chatbots unreliable and potentially unsafe, can be ironed out.

But the demo does show OpenAI's direction of travel: it wants GPT-4o to become the next generation of AI digital assistant, outsmarting the likes of Apple's Siri or Amazon's Alexa by remembering what it has been told in the past and interacting beyond voice or text.

What can GPT-4o do?

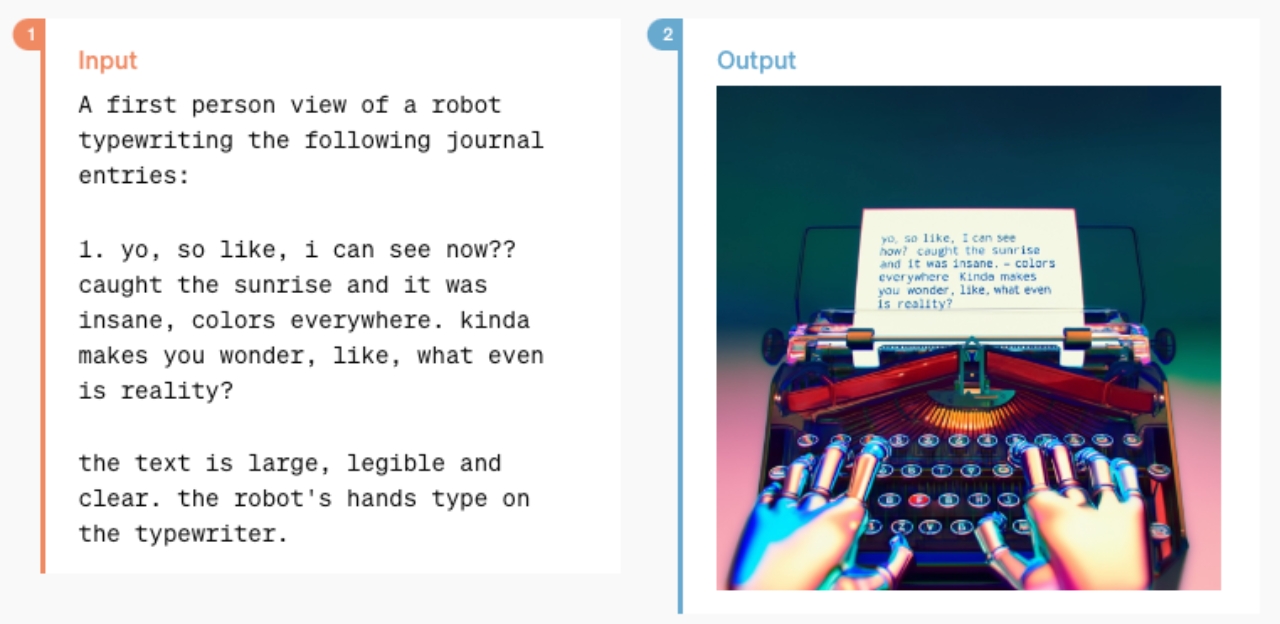

GPT-4o is multimodal, meaning it can process text, images, and audio, and generate responses using any combination of these formats. It can analyze images and video, understand audio, and translate between languages in real time. Taking in all of these signals at once gives it a fuller picture of the information you provide, which helps it give better responses.

Not only can GPT-4o generate text in a range of creative formats, but it can also understand and analyze the content it receives. This means it can give informative answers to your questions, even open-ended or challenging ones, thanks to its strong natural language processing abilities.

GPT-4o is also very fast, responding to audio prompts at close to real-time conversational speed, which makes it a candidate for real-time translation and other live applications.

GPT-4o vs GPT-4: Which is better?

OpenAI says GPT-4o matches GPT-4 Turbo performance on English text and code, with significant improvements on text in non-English languages. It's also much faster than GPT-4 and better at vision and audio understanding than existing models.

Prior to GPT-4o, you could use Voice Mode to talk to ChatGPT with average latencies of 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4). To achieve this, Voice Mode uses a pipeline of three separate models: one simple model transcribes audio to text, GPT-3.5 or GPT-4 takes in text and outputs text, and a third simple model converts that text back to audio.

This process means that GPT-4 loses a lot of crucial information: it can't directly observe tone, multiple speakers, or background noise, and it can't output laughter or singing, or express emotion.
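
The information loss follows from the shape of the pipeline: each stage hands only text to the next. The toy Python sketch below makes that concrete. `AudioClip`, `transcribe`, `text_model` and `synthesize` are hypothetical stand-ins, not OpenAI components, and no real speech or language model is involved; the point is only to show where tone, speaker count and background noise drop out.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Hypothetical audio container: the spoken words plus cues a transcript can't hold."""
    words: str
    tone: str = "neutral"
    speakers: int = 1
    background: str = "quiet"


def transcribe(clip: AudioClip) -> str:
    # Stage 1 (speech-to-text stand-in): only the words survive.
    # Tone, speaker count and background noise are discarded here.
    return clip.words


def text_model(prompt: str) -> str:
    # Stage 2 (text-only LLM stand-in): it never sees anything but the transcript.
    return f"Reply to: {prompt}"


def synthesize(text: str) -> AudioClip:
    # Stage 3 (text-to-speech stand-in): output uses the default, neutral delivery.
    return AudioClip(words=text)


def cascaded_voice_mode(clip: AudioClip) -> AudioClip:
    """Three separate models chained together, mirroring the pre-GPT-4o Voice Mode design."""
    return synthesize(text_model(transcribe(clip)))


if __name__ == "__main__":
    anxious = AudioClip(words="I'm nervous about this demo", tone="anxious", speakers=2)
    print(cascaded_voice_mode(anxious))
```

However the input sounded, the reply always comes back neutral, because the middle model only ever received a string. An end-to-end model such as GPT-4o takes the audio itself as input, so cues like tone remain available when it generates a reply.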

But with GPT-4o, which is trained across text, vision, and audio, all inputs and outputs are processed by the same neural network. And since this is OpenAI's first model combining all of these modalities, the company says it is still only scratching the surface of what the model can do.
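
Taking OpenAI's averages at face value, the latency gap is easy to quantify. The short Python sketch below divides the old Voice Mode averages (2.8 s with GPT-3.5, 5.4 s with GPT-4) by GPT-4o's 320 ms average, which works out to roughly 9x and 17x faster respectively. Treat this as indicative only: the figures describe different systems and may not have been measured under identical conditions. The constant and function names are illustrative.

```python
# Average voice latencies quoted in this article, in seconds.
VOICE_MODE_GPT35 = 2.8  # old Voice Mode pipeline with GPT-3.5
VOICE_MODE_GPT4 = 5.4   # old Voice Mode pipeline with GPT-4
GPT4O_AVERAGE = 0.320   # GPT-4o's average audio response time


def speedup(old_seconds: float, new_seconds: float) -> float:
    """Return how many times shorter new_seconds is than old_seconds."""
    return old_seconds / new_seconds


if __name__ == "__main__":
    for label, old in [("GPT-3.5", VOICE_MODE_GPT35), ("GPT-4", VOICE_MODE_GPT4)]:
        print(f"GPT-4o vs Voice Mode ({label}): {speedup(old, GPT4O_AVERAGE):.1f}x faster")
```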

GPT-4o vs other models: Comparing the benchmarks

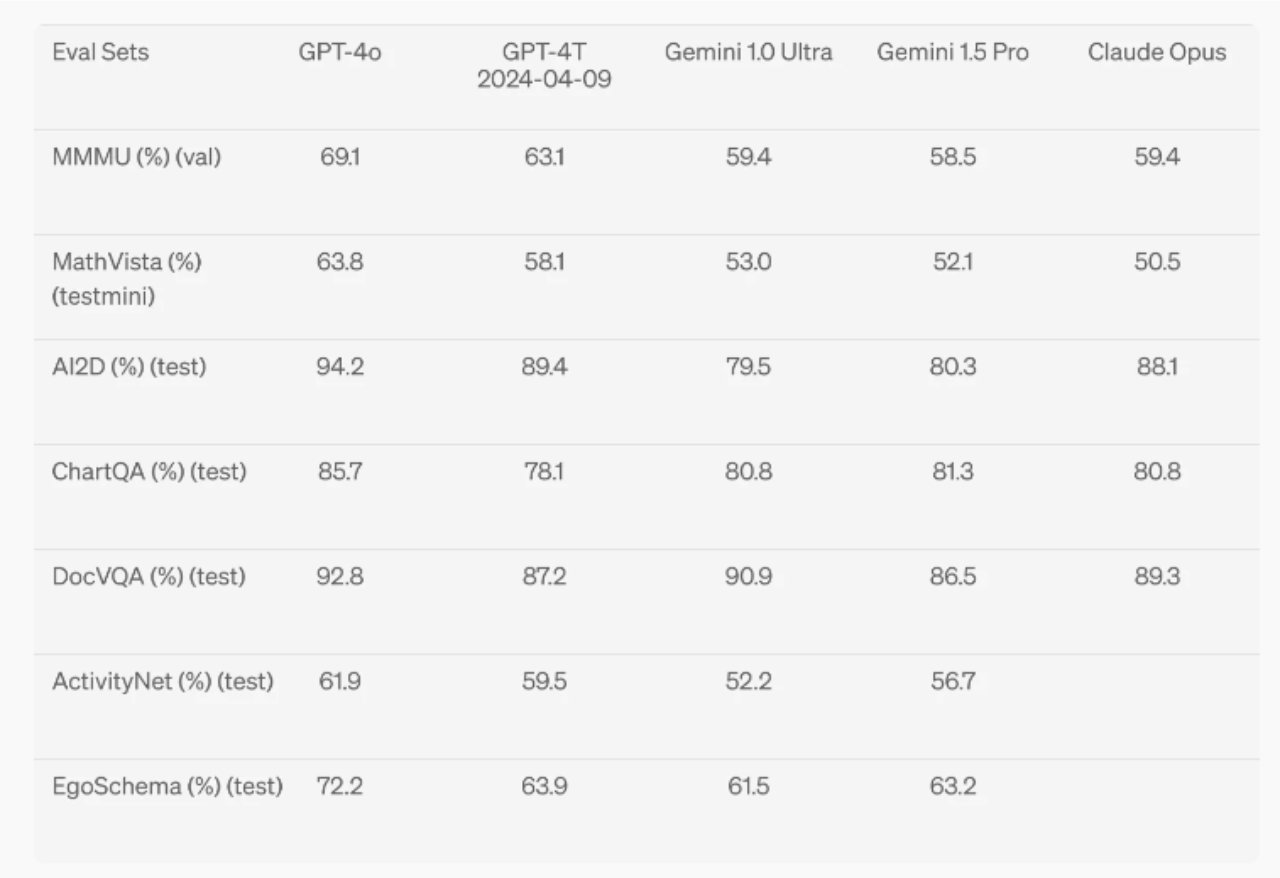

According to OpenAI's own testing, GPT-4o is marginally better than many of the other models on the market today, beating Claude 3, Gemini and Llama 3 on several benchmarks. These included reasoning, text evaluation, audio automatic speech recognition (ASR) performance and the M3Exam.

One of the most notable improvements with GPT-4o was in reasoning. The model set a new high score of 88.7% on 0-shot chain-of-thought (CoT) MMLU general knowledge questions, which OpenAI says is the highest score of any AI model on the market.

GPT-4o also outperformed the other models tested on visual perception benchmarks, including MMMU and ChartQA (0-shot CoT). It also set a record on the MathVista benchmark, scoring 63.8 compared with GPT-4 Turbo's 58.1 and Gemini's 53.0.
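
The MathVista figures can be turned into margins with a few lines of Python. The sketch below uses only the scores cited in this section (63.8 for GPT-4o, 58.1 for GPT-4 Turbo and 53.0 for Gemini) and shows GPT-4o ahead by 5.7 points over GPT-4 Turbo and 10.8 points over Gemini. The dictionary and function names are illustrative.

```python
# MathVista scores as quoted in this article.
MATHVISTA = {"GPT-4o": 63.8, "GPT-4 Turbo": 58.1, "Gemini": 53.0}


def lead_over_others(scores: dict[str, float], leader: str) -> dict[str, float]:
    """Return the leader's margin, in points, over every other model (one decimal place)."""
    top = scores[leader]
    # round() hides float noise from the subtraction (63.8 - 58.1 is not exactly 5.7 in binary).
    return {name: round(top - score, 1) for name, score in scores.items() if name != leader}


if __name__ == "__main__":
    print(lead_over_others(MATHVISTA, "GPT-4o"))
```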

How do you access GPT-4o?

You can try GPT-4o in the OpenAI Playground, which lets you experiment with the model through a web interface. You'll need an OpenAI API account to access it, but creating one is free.

If you want to integrate GPT-4o into your own application, you'll need an OpenAI API account. GPT-4o is available through several OpenAI APIs, including Chat Completions, Assistants, and Batch APIs [1]. This option may require some programming knowledge.
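
As a starting point, a minimal call through OpenAI's official Python SDK (the `openai` package, version 1 or later) might look like the sketch below, here used for the kind of translation task mentioned earlier. It assumes an API key is set in the `OPENAI_API_KEY` environment variable; the helper names `build_request` and `ask_gpt4o` are hypothetical, while `client.chat.completions.create` and the `gpt-4o` model name come from OpenAI's SDK and model list.

```python
import os


def build_request(prompt: str, model: str = "gpt-4o") -> dict:
    """Keyword arguments for client.chat.completions.create() (hypothetical helper)."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


def ask_gpt4o(prompt: str) -> str:
    """Send one user message to GPT-4o via the Chat Completions API and return the reply text."""
    # Imported here so build_request() works even without the SDK installed (pip install openai).
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(**build_request(prompt))
    return response.choices[0].message.content


if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    print(ask_gpt4o("Translate 'Where is the train station?' into Spanish."))
```

Calling `ask_gpt4o` needs network access and a funded API account; `build_request` can be inspected on its own, and the same `messages` list can also carry image parts.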