AI adoption is rising fast. Most DevOps teams have some form of automation in place. Observability platforms are richer than they’ve ever been, with more telemetry, more context, more “intelligence”.

And yet, if you talk to engineering leaders off the record, the story rarely sounds like relief.

They’re shipping. They’re keeping the lights on. They’ve got dashboards, alerts, runbooks, on-call rotations, and increasingly, AI-assisted workflows. But they don’t feel lighter. They don’t feel faster. The hard parts of operating software don’t feel meaningfully easier.

That’s what makes the New Relic 2026 AI Impact Report so telling. The data points toward reduced noise and faster resolution. But it also surfaces a quieter tension. Even as AI improves parts of the operational workflow, many teams still feel the drag of day-to-day work.

If AI is supposed to reduce operational work, why does it still feel like the weight hasn’t shifted?

The most useful way to answer that is to stop arguing about tools and start talking about engineering toil.

The Signal Hidden Inside the 2026 AI Impact Report

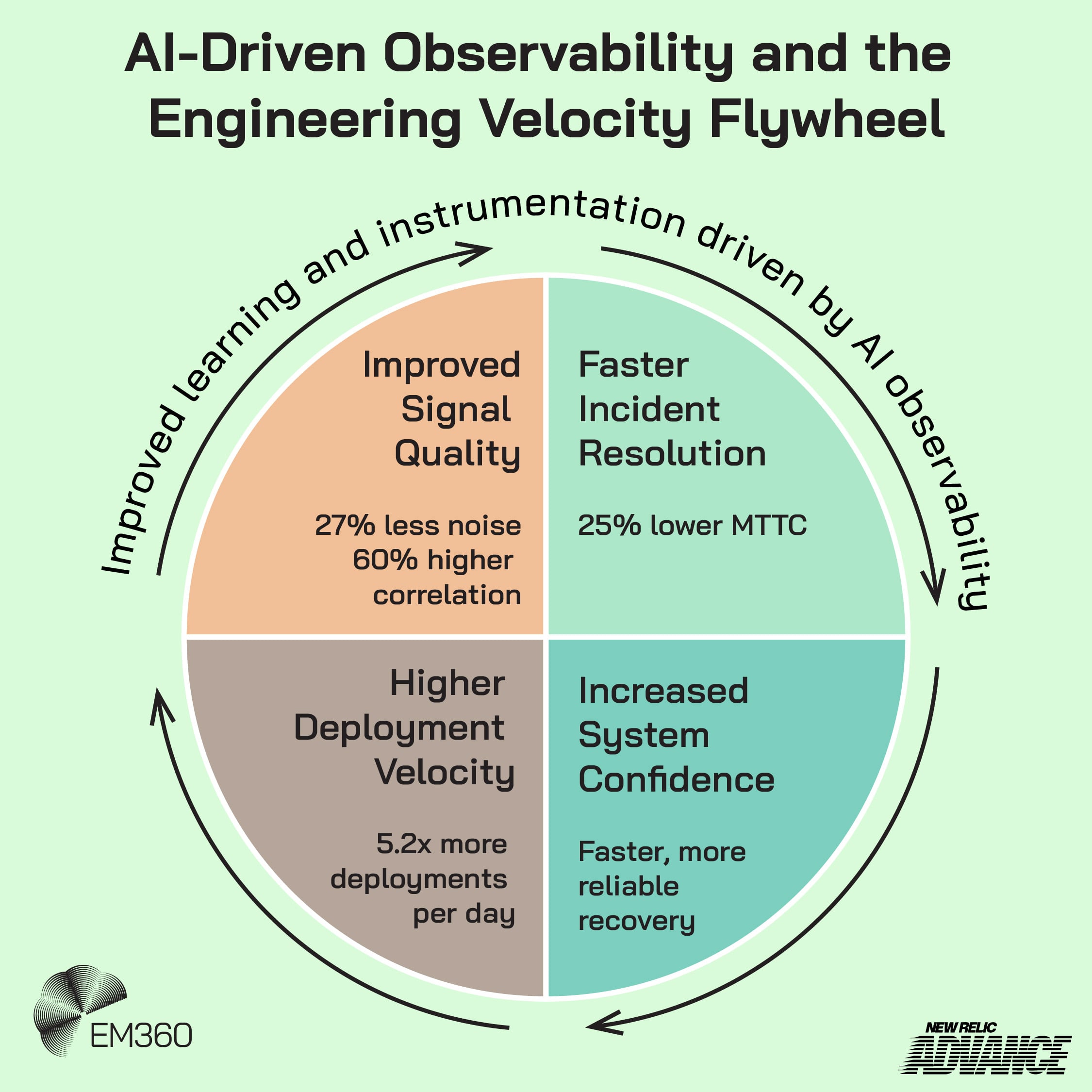

Most AI and observability narratives follow the same arc. More intelligence leads to less noise. Less noise leads to faster incident resolution. Faster resolution frees up time for better engineering.

The AI Impact Report points in that direction too. It sets up a story about teams regaining time and momentum as AI takes on more of the grunt work involved in modern operations. It’s an optimistic story, but it’s not naïve. It’s grounded in what teams want most: fewer distractions, faster answers, and more space to build.

The friction starts when you ask a practical question.

If AI can reduce operational noise and speed up incident work, why does engineering still feel so overloaded?

That’s not a gotcha. It’s the real test of impact. If the gains don’t show up in the day’s work, teams stop trusting the story. They keep the humans on the hook, they keep the processes manual, and AI becomes another layer in the stack rather than a release valve.

So before you debate tools or maturity models, it’s worth being precise about what you’re actually trying to remove.

What Engineering Toil Actually Means (And Why Precision Matters Now)

Toil isn’t just “work you don’t like”.

In Site Reliability Engineering (SRE) terms, toil is operational work that’s manual, repetitive, and largely automatable. It’s the kind of work that scales with the size of the system, not the sophistication of the team. The bigger and more complex the environment becomes, the more toil you accumulate unless you actively engineer it away.

That definition matters because it stops the conversation from sliding into vagueness.

If your team spends hours on production work that can’t be automated, that might still be a problem, but it isn’t necessarily toil. If you spend hours on tasks that should’ve been automated years ago, that’s closer to the point. If your best engineers are stuck in a loop of triage and admin because the system produces noise faster than the team can filter it, you’re living inside toil.

This is also where AI impact claims can go off the rails.

“AI saved time” only means something if it reduces toil rather than shifting essential work around. If AI reduces one type of manual effort but introduces new repetitive tasks to keep it accurate, supervised, and safe, then the net effect might be a reshuffle rather than a reduction.

That doesn’t mean AI isn’t valuable. It means the target has to be clear.
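
One way to keep that target honest is to count toil before and after an AI rollout, instead of counting only the hours saved on a single task. The sketch below is illustrative, not a method from the report. The `Task` fields mirror the SRE definition above (manual, repetitive, automatable), and every task name and hour figure is a made-up assumption.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """A recurring piece of operational work (hypothetical model)."""
    name: str
    hours_per_week: float
    manual: bool
    repetitive: bool
    automatable: bool

    @property
    def is_toil(self) -> bool:
        # Toil in the SRE sense: manual AND repetitive AND automatable.
        return self.manual and self.repetitive and self.automatable


def toil_hours(tasks: list[Task]) -> float:
    """Sum weekly hours for the tasks that qualify as toil."""
    return sum(t.hours_per_week for t in tasks if t.is_toil)


# Invented figures: a week of operational work before AI-assisted triage.
before = [
    Task("manual alert triage", 10.0, True, True, True),
    Task("incident coordination", 4.0, True, False, False),
]

# The same week afterwards: triage shrinks, but supervision work appears.
after = [
    Task("manual alert triage", 3.0, True, True, True),
    Task("verifying AI suggestions", 5.0, True, True, True),
    Task("investigating false positives", 2.0, True, True, True),
    Task("incident coordination", 4.0, True, False, False),
]

net_change = toil_hours(after) - toil_hours(before)
print(f"toil before: {toil_hours(before)}h, after: {toil_hours(after)}h, "
      f"net change: {net_change:+}h")
```

In this invented case, triage drops by seven hours a week, yet the net change in toil is zero because verification and false-positive work absorb the difference. That is the reshuffle rather than the reduction.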

Why AI Often Shifts Toil Instead of Eliminating It

AI can compress parts of operational work. It can group signals, summarise activity, suggest likely causes, and speed up the first few minutes of an investigation. Those are real wins.

But toil is stubborn because it’s rarely a single step. It’s a chain of steps, spread across people, tools, and teams. AI often improves the middle of the chain while leaving the edges untouched, and those edges are where the work piles up.

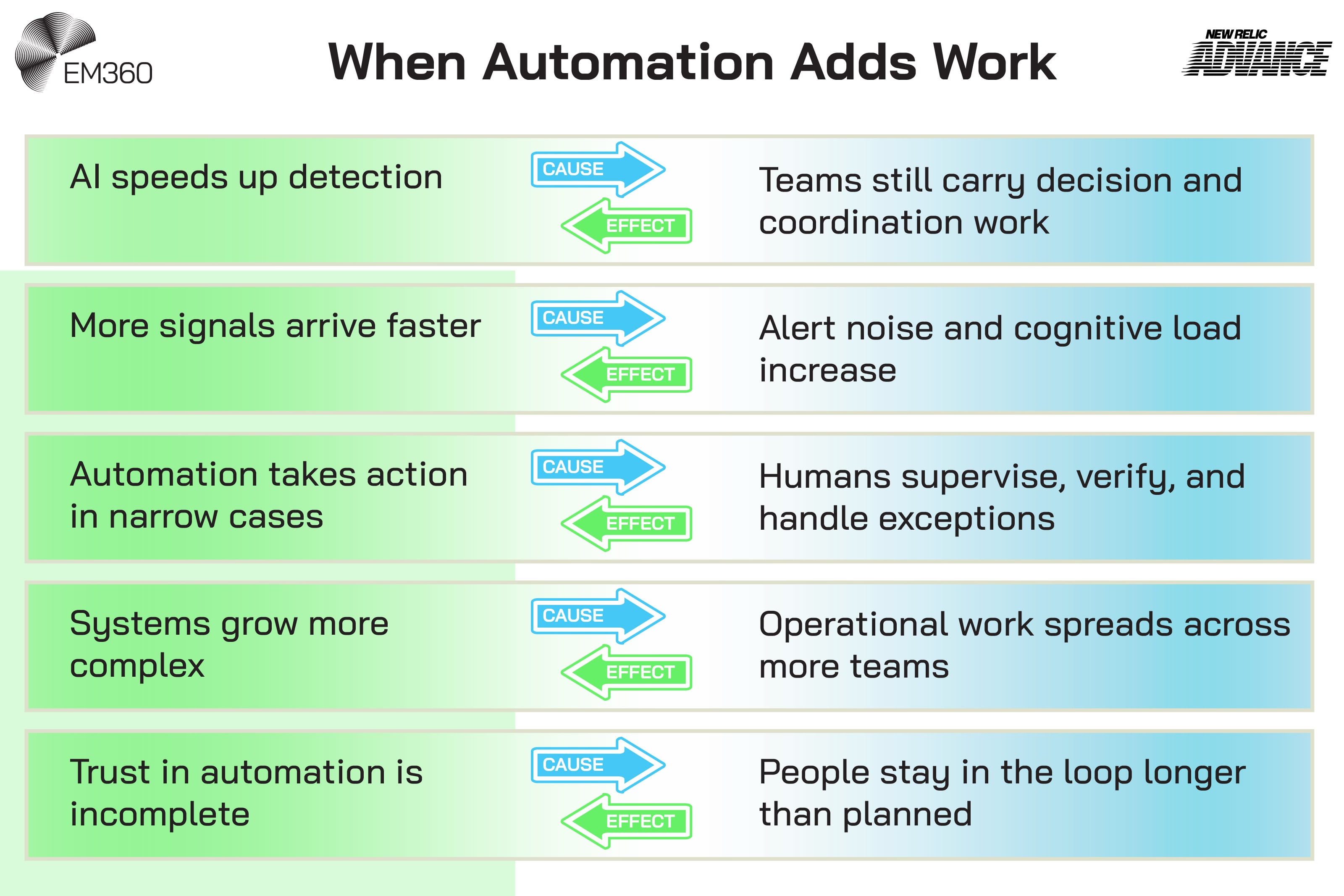

A few patterns show up repeatedly:

- AI speeds up detection, but not decision-making. It surfaces more context faster, but someone still has to decide what to believe and what to do next.

- AI makes triage quicker, but doesn’t necessarily improve coordination. It can narrow the problem, yet the team still has to chase ownership, align on risk, and manage the human side of incident work.

- AI can propose actions, but it doesn’t own outcomes. The moment something could impact customers or revenue, humans step back in, not because they’re resistant, but because accountability hasn’t moved.

So the work shifts. Instead of spending time finding the needle, teams spend time verifying the needle is real, deciding whether it’s safe to act, and cleaning up the mess when automation gets it wrong.

That’s still operational labour. It can still be repetitive. It can still scale painfully.

Noise moves faster than teams can adapt

Alert fatigue has never been just about too many alerts. It’s about too many moments that demand attention without delivering clarity.

AI can help reduce noise, but it can also accelerate it. If the system produces more signals, more correlations, more recommendations, and more “possible issues”, then the team’s workload can spike even as the platform feels smarter. The cognitive load goes up, not down.

This is where teams start doing what they always do under pressure. They route around the system. They mute alerts, rebuild manual checklists, and trust their instincts more than their tooling. That’s not irrational. It’s a survival response.

The result is predictable. You end up with better data in the platform and the same work on the team.

Automation introduces new kinds of work

Automation is rarely free. It moves the work to a different place.

The work might be setting boundaries. Tuning thresholds. Reviewing behaviour after changes. Investigating false positives. Handling edge cases. Building fallbacks. Explaining decisions to stakeholders. Proving that the automation is safe.

Those are all reasonable forms of operational work. They can also turn into toil if they become repetitive and constant.

This is one reason “agentic” language can trigger scepticism in engineering circles. Teams aren’t allergic to autonomy. They’re allergic to unpredictable autonomy. If you can’t explain why something happened, or confidently predict when it might happen again, you’re not reducing toil. You’re trading one class of manual work for another, and the new class often feels riskier.

That tension is shaping how vendors are approaching automation now. New Relic, for example, is positioning its agentic capabilities around intelligent reasoning, automated remediation within guardrails, and workflows that keep humans accountable rather than sidelined. The goal isn’t autonomy for its own sake. It’s automation that can act safely, explain its decisions, and integrate into the way teams already operate.

That distinction matters because toil doesn’t automatically lessen when systems act independently. It lessens only when teams trust those systems enough to let them share the load.

The Trust Gap the Report Data Can’t Close on Its Own

AI capability isn’t the main blocker anymore. Trust is.

Trust shows up in small behaviours first. Do engineers follow the recommendation, or do they open three dashboards to confirm it? Do they let the system group alerts, or do they keep triage manual? Do they allow automated remediation, or do they treat automation as a suggestion generator?

Those behaviours determine whether AI reduces toil or simply accelerates information flow.

Trust also has a cost. It has to be earned through consistent outcomes. It has to be supported through governance and clarity. And it has to be defended during high-pressure moments, because that’s when teams revert to what feels safest.

This is where the AI Impact Report becomes most useful. It points to genuine potential. But potential doesn’t change operations on its own. People change operations when they believe the system is reliable enough to carry weight.

If you want AI to reduce toil, you need something more than intelligence. You need a reason for teams to let go.

Where Intelligent Observability Changes the Trajectory

This is where intelligent observability becomes more than a slogan.

The promise isn’t just “more visibility”. Most teams already have visibility. They have dashboards, logs, traces, alerts, and metrics. They have enough data to drown a sprint planning session.

What they don’t have is consistent direction.

Intelligent observability helps when it reduces the amount of interpretation needed to make a good decision. It does that by unifying telemetry across infrastructure, applications, and increasingly AI workloads, by adding context to root cause analysis, and by connecting signals across the stack instead of isolating them in silos.

It points to likely causes rather than just dumping symptoms in a queue. In other words, it shortens the distance between “something’s wrong” and “here’s what’s actually happening”.

This is the shift New Relic is leaning into with its intelligent observability strategy. The focus isn’t just richer dashboards. It’s a unified data foundation that supports automated remediation, agentic workflows, and modern APM that connects technical performance to business impact.

That matters because interpretation is often the hidden source of toil.

If AI can reduce the manual effort involved in finding relationships between signals, tracking changes, and isolating what matters right now, then operational work becomes less repetitive and less draining. Not because incidents disappear, but because the path through them becomes clearer.

Root cause analysis is a good example. Everyone wants it faster. But what teams really want is the removal of the repetitive steps that delay it: hunting through unrelated alerts, bouncing between services, guessing what changed, and waiting for the right person to weigh in.

When observability reduces those steps and feeds directly into accountable automation, AI starts to feel like impact rather than novelty.

Why Engineering Toil Is Becoming a Leadership Question

Toil can’t be solved purely at the tool level because it’s often created at the operating model level.

Leaders decide how work is owned. How incidents are handled. What gets prioritised. What gets automated. What gets documented. What gets standardised. What gets measured.

AI can expose gaps faster. It can highlight where the system is noisy, where workflows are slow, and where teams are stuck in repeat loops. But it can’t redesign the organisation for you. This is where the conversation gets uncomfortable, and also where it becomes genuinely useful.

If you want to reduce toil, you often have to make decisions that don’t feel like “AI initiatives” at all:

- You might need platform teams that own shared operational patterns instead of leaving every team to reinvent them.

- You might need clearer definitions of “done” for operational tasks, so work doesn’t get reopened endlessly.

- You might need governance that gives teams permission to automate, along with guardrails that keep that automation safe.

- You might need to treat operational work as a product that can be improved, not as an endless tax that teams have to pay.

This is also why architecture starts to matter more than features. Leaders are looking for unified data foundations rather than disconnected tools.

They want to break down silos instead of adding another dashboard. They want platforms that are open and extensible, that reduce vendor lock-in rather than deepening it. They want to lower total cost of ownership without sacrificing visibility or control.

That’s the direction vendors such as New Relic are signalling as they consolidate observability, automation, and AI into more cohesive platforms. Not because consolidation sounds strategic, but because fragmented systems are one of the quiet drivers of engineering toil.

Toil persists when the system demands repeated human effort to stay stable. That’s as much about leadership decisions as it is about tooling.

Why These Questions Are Now Being Debated Live

The reason these conversations are accelerating is simple. AI is no longer a side project in engineering operations. It’s becoming part of the standard stack, and expectations are shifting from “assist” to “act”.

That shift sounds simple until you try to operationalise it, which is why New Relic Advance 2K26 focuses on what “act” looks like when automation has to be explainable, governed, and safe.

The event is structured around the practical realities we’ve just explored. Intelligent observability isn’t positioned as richer dashboards, but as a unified data foundation that supports automated root cause analysis and remediation workflows.

The agentic platform will be showcased in action, demonstrating how AI agents can resolve issues before they escalate while operating within clear guardrails.

Attendees will see product demos, hear from New Relic’s product leadership, and learn from enterprise customers applying these approaches in complex environments. The focus isn’t abstract AI capability. It’s how to implement accountable automation, reduce operational noise, and streamline engineering workflows in production systems.

The AI Impact Report highlights the opportunity. Advance shows what it looks like when that opportunity is operationalised.

Final Thoughts: AI Impact Depends on Whether Teams Trust the Systems Doing the Work

The AI Impact Report points to real momentum. AI can reduce noise, speed up resolution, and free up time. But toil won’t fall just because AI gets smarter.

Toil drops when teams trust their systems enough to change how they work. When observability delivers direction rather than more signals. When automation earns the right to act, not just recommend. And when leaders treat operational work as something that can be engineered down, not endured.

If you’re trying to make AI impact tangible in 2026, the most honest starting point is also the most practical one: follow the toil. It’ll tell you where the work really is, where trust breaks down, and what “meaningful automation” has to look like before it deserves the name.

For teams looking to close that gap, New Relic Advance 2K26 offers a chance to see how intelligent observability, automated root cause analysis, and agentic automation are being applied in production environments. Through live demos, product leadership insights, and enterprise perspectives, the focus shifts from potential to implementation.

EM360Tech will continue tracking how these shifts are unfolding across engineering, observability, and leadership, and how events like New Relic Advance 2K26 are shaping the next phase of operational AI.
