Agentic AI is everywhere right now. Executives want to automate decisions, teams are testing new workflows, and every vendor claims to have an agent on the way. Yet despite the enthusiasm, most organisations are not seeing real results.

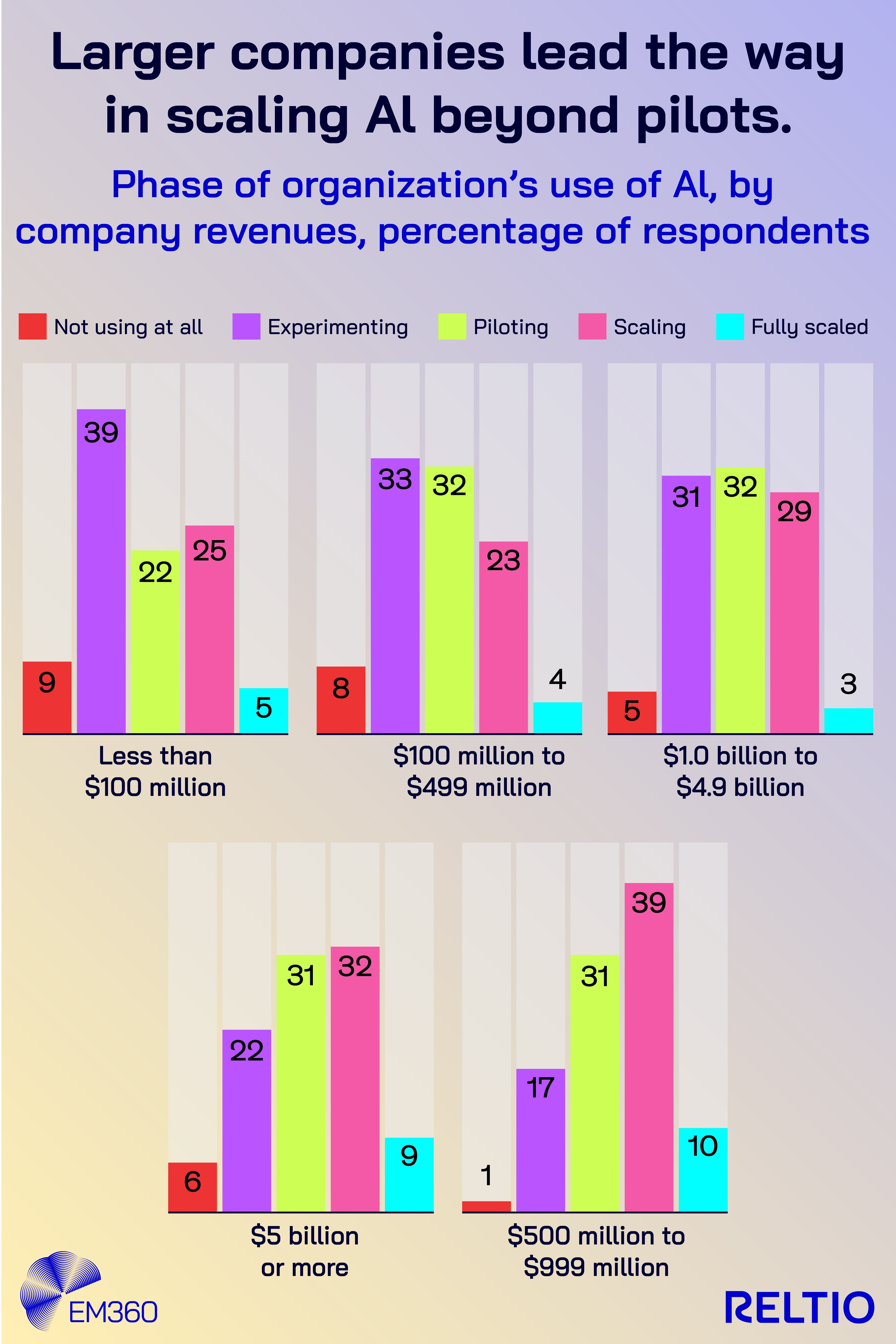

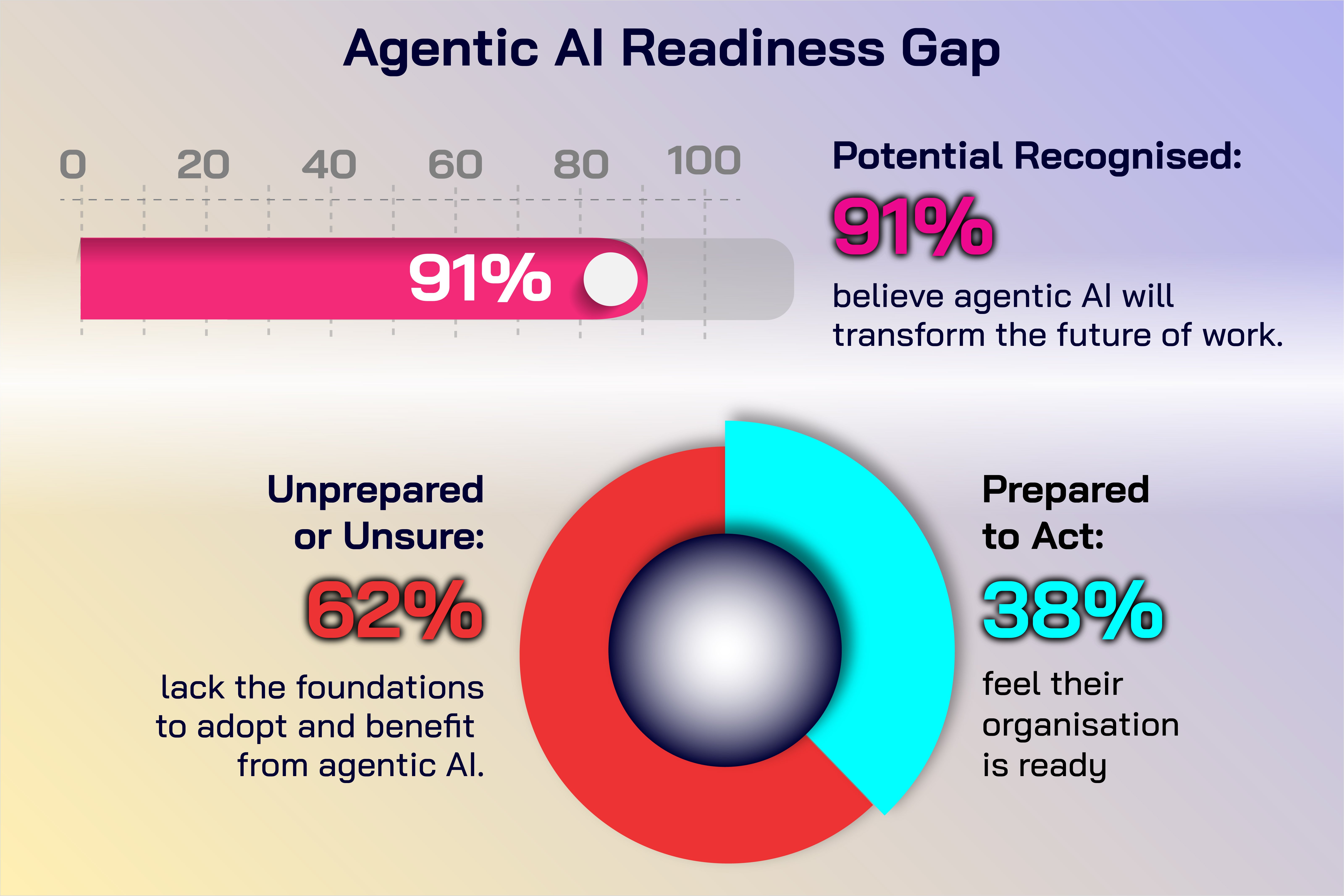

The data is blunt. McKinsey reports that only a small share of generative AI use cases reach meaningful scale. Gartner expects more than 40 per cent of agentic AI projects to be cancelled by 2027. Harvard Business Review Analytic Services found that although more than 90 per cent of leaders believe agentic AI will transform work, barely a third feel prepared to use it effectively.

The pattern is consistent. Small, highly controlled pilots succeed. Attempts to go broader slow down, stall, or quietly disappear. The issue is not the models, the agent frameworks, or the orchestration tools. The issue is context.

Agentic AI depends on a complete, current, and consistent picture of the business. Most enterprises cannot provide it. That gap is what turns promising demonstrations into fragile systems that cannot be trusted at scale.

This is where the real work begins.

What Do AI Agents Actually Need to Work?

Most conversations about agentic AI focus on the model. The real question is whether the agent can see enough of the business to make the right decision at the right moment. That depends entirely on context.

Agents depend on structured context, not just large context windows

Context windows sound impressive, but bigger inputs do not guarantee better decisions. Research shows that even advanced models struggle when the sequence becomes long or cluttered. They lose track of key details, misinterpret steps, or drift from the task.

For enterprise teams, that means raw volume is not the answer. Agents need structured, filtered context that highlights what matters and removes what does not. Without that structure, they behave unpredictably no matter how large the window is.
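
As a minimal sketch of what "structured, filtered context" could look like in practice, the snippet below ranks candidate context items by relevance and keeps only those that fit a fixed token budget. The `ContextItem` type, the relevance scores, and the token counts are all assumptions for illustration; a real system would get them from its own retriever and tokenizer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextItem:
    text: str
    relevance: float  # 0.0-1.0, assumed to come from an upstream retriever or scorer
    tokens: int       # assumed pre-computed token cost of including this item


def select_context(items, token_budget, min_relevance=0.5):
    """Keep the most relevant items that fit inside a fixed token budget."""
    # Drop anything below the relevance floor before ranking.
    candidates = [i for i in items if i.relevance >= min_relevance]
    # Highest relevance first; the sort is stable, so ties keep input order.
    candidates.sort(key=lambda i: i.relevance, reverse=True)

    selected, used = [], 0
    for item in candidates:
        # Skip items that would overflow the budget, but keep looking for smaller ones.
        if used + item.tokens <= token_budget:
            selected.append(item)
            used += item.tokens
    return selected


items = [
    ContextItem("Customer opened a billing dispute yesterday", 0.9, 40),
    ContextItem("Full export of last year's server logs", 0.2, 5000),
    ContextItem("Account owner changed last week", 0.8, 30),
    ContextItem("Marketing newsletter archive", 0.6, 200),
]
for item in select_context(items, token_budget=100):
    print(item.text)
```

With a budget of 100 tokens, only the billing dispute and the ownership change reach the agent. The log export is filtered out as irrelevant and the newsletter archive is left out because it does not fit.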

Enterprise context is about relationships, events and state

Enterprises do not run on isolated records. They run on relationships and events. A meaningful decision depends on understanding who is linked to whom, what has changed, and how those changes affect the next action.

For an agent, context includes lifecycle changes, ownership structures, recent interactions, and the signals hidden across systems. That interconnected view is what separates a sensible action from a risky one.

Real-time access and constraints determine safe autonomy

The final piece is time. Agents cannot operate safely if they work from outdated or incomplete data. Real autonomy depends on seeing current state, applying clear rules, and checking that conditions have not shifted halfway through a workflow.

Without real-time context and well-defined constraints, agents will make decisions that look reasonable on static data but fail under real operational pressure.
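
One common way to check that conditions have not shifted mid-workflow is to re-read the record just before acting and compare a version number. The sketch below assumes a hypothetical in-memory `store`, an `AccountState` record with a `version` counter, and an illustrative refund limit; none of these come from a specific product.

```python
from dataclasses import dataclass


@dataclass
class AccountState:
    version: int       # assumed to be incremented on every change to the record
    credit_hold: bool


class StaleContextError(Exception):
    """Raised when the record changed after the agent read it."""


def approve_refund(store, account_id, version_seen, amount, limit=500):
    """Act only if the record is unchanged and the constraints still hold."""
    current = store[account_id]  # re-read the current state right before acting
    if current.version != version_seen:
        raise StaleContextError(f"{account_id} changed since it was read")
    if current.credit_hold:
        return "rejected: account on credit hold"
    if amount > limit:
        return "escalated: amount exceeds autonomous limit"
    return "approved"


store = {"acct-1": AccountState(version=3, credit_hold=False)}
seen = store["acct-1"].version
print(approve_refund(store, "acct-1", seen, 120))

# Another system updates the account while the agent is still working.
store["acct-1"] = AccountState(version=4, credit_hold=True)
try:
    approve_refund(store, "acct-1", seen, 120)
except StaleContextError as err:
    print("re-plan needed:", err)
```

The agent's first decision goes through. The second attempt is stopped because the state it reasoned about no longer exists, which is safer than acting on a snapshot that only looked reasonable.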

Why Agentic AI Isn’t Working Yet

When you look at how agentic AI behaves outside controlled environments, the problem becomes clearer. The technology is not collapsing. The surrounding architecture is.

Pilots succeed but systems break at scale

A small, well-defined pilot can work because the team curates the data, controls the workflow, and shields the agent from the complexity of the wider enterprise. The trouble starts when you try to extend that success across domains.

McKinsey’s research shows that only a small portion of generative AI use cases ever scale beyond early trials. Agents need consistent context across systems, regions, and processes, and most enterprises simply cannot provide that level of alignment. The architecture bends under the weight.

Fragmented data kills accuracy and trust

Gartner’s expectation that more than 40 per cent of agentic AI projects will be cancelled by 2027 points to a common issue: context is scattered, duplicated, and inconsistent.

If an agent sees different versions of the same customer, or outdated information about a product or risk flag, its decisions become unreliable. Accuracy drops, explanations become difficult, and confidence in the system fades fast.
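
To make the "different versions of the same customer" problem concrete, here is a small sketch that surfaces fields on which source systems disagree. It assumes the records already share a `customer_id` and carry a `source` label, which is the easy case; matching records that lack a shared identifier is a harder problem and is not shown. The field names and sample data are invented for illustration.

```python
from collections import defaultdict


def find_conflicts(records, key="customer_id"):
    """Return {entity id: {field: set of differing values}} across source systems."""
    seen = defaultdict(lambda: defaultdict(set))
    for record in records:
        for field, value in record.items():
            # The key and the source label identify a record; they are not compared.
            if field not in (key, "source"):
                seen[record[key]][field].add(value)

    conflicts = {}
    for entity, fields in seen.items():
        differing = {f: vals for f, vals in fields.items() if len(vals) > 1}
        if differing:
            conflicts[entity] = differing
    return conflicts


records = [
    {"source": "crm", "customer_id": "C-100", "email": "a@example.com", "risk_flag": "low"},
    {"source": "billing", "customer_id": "C-100", "email": "a@example.com", "risk_flag": "high"},
    {"source": "crm", "customer_id": "C-200", "email": "b@example.com", "risk_flag": "low"},
]
print(find_conflicts(records))
```

Here customer C-100 has a risk flag of "low" in one system and "high" in another. An agent that reads only one of them has no way of knowing the other exists, which is why a report like this is better resolved upstream than inside each agent.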

Context windows collapse under real workloads

In production, inputs are messy. Tickets are long, logs are dense, and documentation varies across teams. Even strong models struggle to hold the full thread when they face long, complex sequences.

The result is context collapse. Agents skip steps, confuse entities, or misread the intent of a request. Those mistakes are tolerable in a chat interface, but not in workflows that touch money, customers, or compliance obligations.

Governance and observability are missing

Finally, most organisations lack the governance and visibility needed for autonomous systems. As more agents appear, the risks multiply. Actions are taken without clear logs, access grows faster than controls, and leaders cannot always trace how or why an agent made a decision.

Without tight guardrails and reliable observability, scaling agentic AI becomes a risk instead of an advantage.

Why Your Organisation Still Isn’t AI-Ready

Most organisations are not struggling with agentic AI because the technology is immature. They are struggling because the foundations needed to support autonomous systems were never built with autonomy in mind.

AI ambition is rising faster than data maturity

The HBR Analytic Services research highlights a clear contradiction. Most leaders expect agentic AI to reshape the future of work, yet only a small share feel genuinely prepared. Ambition is accelerating, but the underlying data maturity is not keeping pace.

That divide shows up quickly. Targets are set, pilots move ahead, and the practical work of strengthening data foundations is deferred. When results fall short, the technology gets blamed, even though the real issue is readiness.

Data teams are still solving yesterday’s problems

Many organisations are modernising data infrastructure one use case at a time. The AWS and MIT CDO Agenda findings show that teams often prioritise fixing data for specific analytics or AI projects instead of building durable foundations across the estate.

Agentic AI cannot thrive in that environment. Each new workflow ends up with its own version of entities, rules, and quality standards. That inconsistency makes it impossible for agents to operate safely across multiple domains.

Context management is not an established discipline yet

Context engineering is still handled as a series of local experiments rather than a coordinated capability. Teams build isolated retrieval setups for individual applications, but very few treat context management as an enterprise function.

Until that shifts, agentic AI will remain trapped in pilots. Without shared context, shared rules, and shared understanding, agents can only function inside narrow, heavily supported environments.

What Needs to Change Before Agentic AI Can Deliver Real Value

Agentic AI will not scale until enterprises treat context as a core operational capability. The fixes are straightforward, but they require discipline.

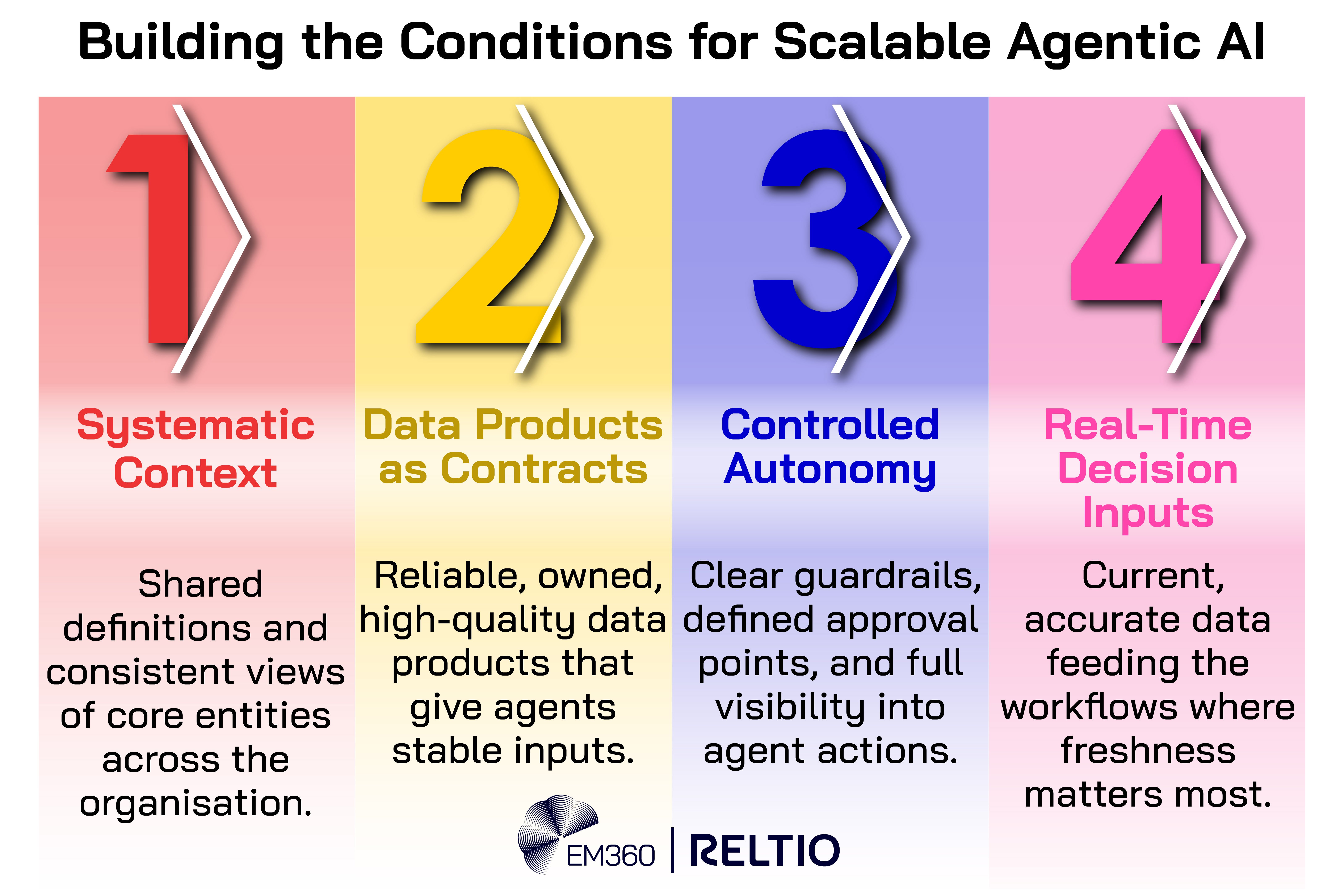

Build systematic context, not ad hoc pipelines

Most early implementations rely on custom integrations that only work for one workflow. A scalable model needs shared definitions for core entities, consistent ways to surface them, and a clear line between sources of record and downstream views. That gives every agent the same understanding of the business.

Providers like Reltio are increasingly focusing on this challenge, helping organisations create consistent, high-quality views of core data that agents can rely on.

Treat data products as the contract between agents and the business

Data products provide predictable, owned, high-quality data that behaves the same across teams. As more organisations adopt this model, agents can rely on stable inputs instead of pulling from fragile pipelines that change without warning.
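
One lightweight way to picture a data product as a contract is a declared schema that every record is checked against before an agent consumes it. The sketch below uses a plain dictionary of field names and types; `CUSTOMER_CONTRACT` and its fields are hypothetical, and real data products would typically also cover ownership, quality thresholds, and versioning.

```python
# Hypothetical contract: the fields and types a customer data product promises.
CUSTOMER_CONTRACT = {
    "customer_id": str,
    "status": str,
    "lifetime_value": float,
}


def contract_violations(record, contract=CUSTOMER_CONTRACT):
    """Return a list of human-readable contract violations; empty means valid."""
    problems = []
    for field, expected in contract.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            problems.append(
                f"{field}: expected {expected.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    return problems


good = {"customer_id": "C-1", "status": "active", "lifetime_value": 1250.0}
bad = {"customer_id": "C-2", "lifetime_value": "1250"}

print(contract_violations(good))
print(contract_violations(bad))
```

The point of the check is that a pipeline change which drops `status` or turns a number into a string is caught at the boundary, rather than showing up later as an unexplained agent decision.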

Harden autonomy with governance, guardrails and observability

Autonomy only works when boundaries are clear. Agents need defined limits, review points for sensitive actions, and full visibility into what they did and why. Without that, every new agent becomes a risk instead of an asset.
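
Defined limits, review points, and visibility can be sketched as a single authorisation step that every agent action passes through. In the example below, the `POLICY` table, the action names, and the in-memory `audit_log` are illustrative assumptions; a production setup would use a durable log and a proper policy engine.

```python
import json
from datetime import datetime, timezone

# Hypothetical policy: which actions an agent may take alone, and which need review.
POLICY = {
    "send_reminder": "allow",
    "issue_refund": "review",
}

audit_log = []  # in-memory stand-in for a durable, append-only log


def authorise(agent, action, reason):
    """Decide allow / review / deny for an action and record the decision."""
    decision = POLICY.get(action, "deny")  # anything not listed is denied
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "action": action,
        "reason": reason,
        "decision": decision,
    })
    return decision


print(authorise("billing-agent", "send_reminder", "invoice 30 days overdue"))
print(authorise("billing-agent", "issue_refund", "duplicate charge reported"))
print(authorise("billing-agent", "delete_account", "customer asked in chat"))
print(json.dumps(audit_log[-1], indent=2))
```

Two design choices carry most of the weight here: unknown actions are denied by default, and every decision is logged with the agent's stated reason, including the ones that were refused.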

Make real-time data a standard, not an exception

Agents cannot make good decisions on stale information. Real-time inputs do not have to cover everything. They just need to support the workflows where state changes quickly and accuracy matters. When that context is fresh, agents stop drifting and start delivering value.
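
The idea that freshness only needs to be tight where state changes quickly can be expressed as a per-workflow age limit. The workflow names and thresholds in `MAX_AGE` below are invented for illustration; the right values depend on each organisation's processes.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical freshness budgets: fast-moving workflows get tight limits.
MAX_AGE = {
    "fraud_check": timedelta(seconds=30),
    "quarterly_report": timedelta(days=1),
}


def is_fresh(workflow, last_updated, now=None):
    """True if the data is recent enough for the given workflow."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated <= MAX_AGE[workflow]


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
five_minutes_ago = now - timedelta(minutes=5)

print(is_fresh("fraud_check", five_minutes_ago, now))       # False
print(is_fresh("quarterly_report", five_minutes_ago, now))  # True
```

The same five-minute-old record is too stale for a fraud check but perfectly acceptable for a quarterly report, so the real-time investment can go where it matters.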

Final Thoughts: Context Is the Real Constraint on Agentic AI

Agentic AI is not failing because the models are limited. It is failing because the context they rely on is incomplete, inconsistent, or too slow to reflect what is happening right now. Enterprises want autonomous systems. Early pilots show the promise. But when organisations try to scale, they run into the same barrier every time: fragmented data, uneven governance, and context that cannot keep up with real operational change.

The organisations that move ahead will be the ones that treat context as a strategic foundation. They will unify their core entities, build shared data products, tighten their guardrails, and make freshness a default rather than a luxury. That work is less dramatic than an agent demo, but it is what turns autonomy from a possibility into something leaders can depend on.

If you are shaping your own AI strategy, Reltio’s perspective on trusted, real-time data adds useful clarity to a fast-moving landscape, and EM360Tech’s wider coverage of AI and data offers a grounded view of what it takes to get this right. Together, they provide a practical lens for anyone building the foundations for agentic AI that actually works.
