In the rapidly evolving world of AI, recurrent neural networks (RNNs) have emerged as one of the most powerful tools for processing and generating sequential data. If you’ve ever chatted with Apple’s digital assistant Siri or used Google Translate, you’ve likely already seen recurrent neural networks in action.

In this article, we’ll define recurrent neural networks (RNNs), explain how they work, and look at what they’re used for and how they’re likely to evolve.

What are recurrent neural networks (RNNs)?

Recurrent neural networks (RNNs) are a powerful type of artificial neural network specifically designed to handle sequential data.

This means they can process sequences in which elements depend on one another, such as text, speech, time series, or the frames of a video.

Unlike standard neural networks that process individual inputs independently, RNNs have an internal "memory" that allows them to learn and utilize context from previous inputs when processing new ones.

This lets them use what came earlier in a sequence to predict what comes next, such as the next word in a sentence or the next frame in a video.

How do recurrent neural networks work?

Unlike feedforward neural networks, which process each input independently, RNNs have a distinctive ability: memory.

Recurrent neural networks work by remembering information from past inputs and using it to predict what comes next. Variants such as LSTMs and GRUs let RNNs retain more information over longer sequences, and they have been widely used in natural language processing, forecasting, and beyond.

This memory allows RNNs to consider the context of the data they're processing. They achieve this by keeping a hidden state, a vector that is updated at every step of the sequence from the current input and the previous hidden state. When the network processes a new piece of data, this stored information influences its interpretation and prediction.
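The hidden-state update can be sketched in a few lines of Python. This is a minimal illustration that assumes the common Elman-style update h_t = tanh(W_xh·x_t + W_hh·h_{t-1} + b). The function names and the tiny weights are hypothetical. They were chosen only to show that the same weights are reused at every step and that the hidden state carries context forward.

```python
import math

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_step(x_t, h_prev, W_xh, W_hh, b):
    """One Elman-style RNN step: h_t = tanh(W_xh x_t + W_hh h_prev + b)."""
    a = matvec(W_xh, x_t)      # contribution of the current input
    r = matvec(W_hh, h_prev)   # contribution of the remembered context
    return [math.tanh(ai + ri + bi) for ai, ri, bi in zip(a, r, b)]

def rnn_forward(xs, W_xh, W_hh, b):
    """Run the RNN over a sequence and return the hidden state after each step."""
    h = [0.0] * len(b)  # the "memory" starts empty
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_xh, W_hh, b)  # same weights reused at every step
        states.append(h)
    return states

# Hypothetical toy weights: 1-dimensional input, 2-dimensional hidden state.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.8, 0.1], [0.2, 0.7]]
b = [0.0, 0.0]

# Both sequences end with the same input (1.0) but have different histories.
seq_a = [[0.0], [0.0], [1.0]]
seq_b = [[1.0], [1.0], [1.0]]
print(rnn_forward(seq_a, W_xh, W_hh, b)[-1])
print(rnn_forward(seq_b, W_xh, W_hh, b)[-1])
```

The two sequences end with the same input, yet the final hidden states differ. Each state summarizes a different history, and that summary is what lets the network use context.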

What are Long Short-Term Memory (LSTM) architectures and Gated Recurrent Units (GRUs)?

While RNNs boast impressive memories, they aren't perfect. The core challenge is carrying information from early time steps through to much later ones in a long sequence.

As the sequence gets longer, the gradients can become vanishingly small. Gradients are the signals that tell each weight how much it contributed to the error. During training they are propagated backward through every time step and multiplied by a factor at each step, so when those factors are smaller than one the product shrinks toward zero. This is known as the vanishing gradient problem: information from the distant past fades as it travels through the network, which makes it difficult to learn long-term dependencies.
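To see why the gradients shrink, consider a scalar RNN h_t = tanh(w·h_{t-1} + x_t). By the chain rule, the gradient of the final state with respect to the initial state is a product with one factor w·(1 - h_t^2) per step. The sketch below multiplies those factors out for sequences of different lengths. It assumes a hypothetical recurrent weight of 0.9 and a constant input of 0.5.

```python
import math

def gradient_through_time(w, inputs, h0=0.0):
    """Return d h_T / d h_0 for a scalar RNN h_t = tanh(w * h_{t-1} + x_t).

    By the chain rule this is the product of the per-step factors
    d h_t / d h_{t-1} = w * (1 - h_t**2).
    """
    h = h0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # with |w| < 1, each factor is below 1
    return grad

# Hypothetical settings: recurrent weight 0.9, constant input 0.5.
for steps in (5, 20, 50):
    g = gradient_through_time(0.9, [0.5] * steps)
    print(f"{steps:>2} steps: gradient = {g:.2e}")
```

The printed gradient falls by many orders of magnitude as the sequence grows. An error signal from step 50 therefore barely reaches the start of the sequence.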

Thankfully, advanced RNN architectures like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) incorporate mechanisms that control the flow of information within the hidden layer, allowing them to retain relevant information for longer durations.

Unlike basic recurrent neural networks (RNNs) that struggle with long-term memory, Long Short-Term Memory (LSTM) networks are equipped with special "gatekeepers."

These gates control information flow, allowing LSTMs to "forget" irrelevant details and selectively remember crucial information for longer periods. This enhanced memory superpower makes LSTMs ideal for tasks like machine translation, speech recognition, and time series forecasting, where understanding long-term dependencies is key.
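The gating described above can be sketched as a single LSTM step. The sketch uses the standard textbook equations: forget gate f, input gate i, candidate g, output gate o, cell state c_t = f·c_{t-1} + i·g, and hidden state h_t = o·tanh(c_t). For readability it assumes a hidden size of 1, and every weight is hypothetical. The demo feeds one signal followed by 20 empty steps. It compares a forget gate set to keep memory (about 0.99) with one set to discard it (about 0.01).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell (hidden size 1 keeps the arithmetic visible).

    p maps each gate name to a hypothetical (w_x, w_h, b) triple.
    """
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))  # how much of the old cell state to keep
    i = sigmoid(pre("input"))   # how much of the new candidate to write
    g = math.tanh(pre("cand"))  # candidate content
    o = sigmoid(pre("output"))  # how much of the cell state to expose
    c = f * c_prev + i * g      # updated long-term memory (cell state)
    h = o * math.tanh(c)        # updated hidden state / output
    return h, c

def final_cell_state(seq, p):
    """Run the cell over a sequence and return the last cell state."""
    h, c = 0.0, 0.0
    for x in seq:
        h, c = lstm_step(x, h, c, p)
    return c

remember = {
    "forget": (0.0, 0.0, 5.0),   # forget gate ~0.99: keep the cell state
    "input": (10.0, 0.0, -5.0),  # input gate opens only when x is 1
    "cand": (2.0, 0.0, 0.0),
    "output": (0.0, 0.0, 0.0),
}
forgetful = dict(remember, forget=(0.0, 0.0, -5.0))  # forget gate ~0.01

seq = [1.0] + [0.0] * 20  # one signal, then 20 steps of nothing
print(final_cell_state(seq, remember))   # most of the signal survives
print(final_cell_state(seq, forgetful))  # the signal is wiped out
```

In a trained LSTM these gate weights are learned rather than set by hand, so the network itself decides what to keep and what to forget.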

A Gated Recurrent Unit (GRU) is a streamlined gated architecture that acts as a built-in memory manager. Like an LSTM, it handles sequential data such as text or speech and can remember important information while forgetting less relevant inputs.
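A standard GRU cell uses an update gate z and a reset gate r. The reset gate controls how much of the previous state feeds into a candidate state. The update gate then blends the old state with that candidate. The sketch below is a scalar version with hypothetical weights. Conventions differ between papers and libraries on whether z or 1 - z multiplies the old state; this sketch uses (1 - z) for the old state.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, p):
    """One step of a scalar GRU cell. p maps gate names to hypothetical (w_x, w_h, b) triples."""
    w_x, w_h, b = p["update"]
    z = sigmoid(w_x * x + w_h * h_prev + b)  # update gate: how much to overwrite
    w_x, w_h, b = p["reset"]
    r = sigmoid(w_x * x + w_h * h_prev + b)  # reset gate: how much past feeds the candidate
    w_x, w_h, b = p["cand"]
    h_tilde = math.tanh(w_x * x + w_h * (r * h_prev) + b)  # candidate state
    # Blend old and new: z near 0 keeps the old state, z near 1 replaces it.
    return (1.0 - z) * h_prev + z * h_tilde

params = {
    "update": (10.0, 0.0, -5.0),  # update gate opens only when x is 1
    "reset": (0.0, 0.0, 0.0),
    "cand": (2.0, 0.0, 0.0),
}

h = 0.0
for x in [1.0] + [0.0] * 20:  # one signal, then 20 steps of nothing
    h = gru_step(x, h, params)
print(h)  # the state still holds most of the signal
```

A single gate value z decides both how much old memory to drop (1 - z) and how much new content to write (z). This is how a GRU covers the roles of an LSTM's forget and input gates with one gate.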

LSTM vs GRU: What's the Difference?

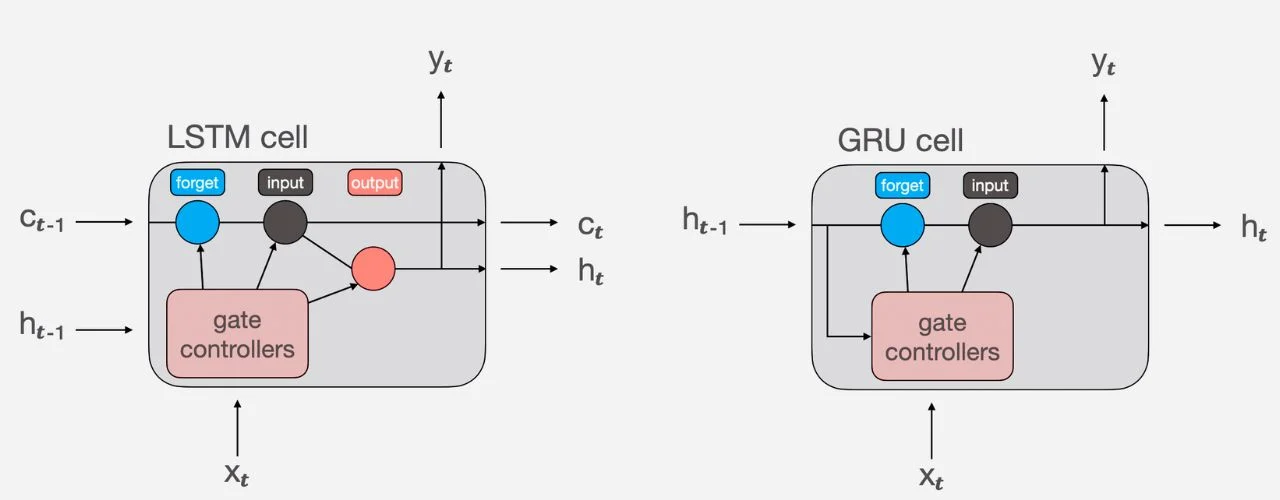

The difference between LSTMs and GRUs lies in their memory management methods. LSTMs utilize three specialized gates: forget, input, and output, allowing precise control over information flow and long-term recall. This makes them ideal for tasks like machine translation, where remembering distant information is crucial.

GRUs take a simpler approach. A single "update gate" combines the roles of the forget and input gates, and a "reset gate" controls how much of the past is used when computing new content. There is no separate output gate or cell state. With fewer gates and parameters, GRUs are usually faster and more computationally efficient than LSTMs. They may be less precise at remembering very long sequences, but GRUs often perform well on tasks with shorter sequences or limited computing resources, such as chatbots or text generation.
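The efficiency difference can be made concrete by counting parameters. An LSTM layer has four weight blocks (forget, input, and output gates plus the candidate). A GRU layer has three (update and reset gates plus the candidate). Each block has an input weight matrix, a recurrent weight matrix, and a bias vector. The sketch below assumes one bias vector per block. Some frameworks use two bias vectors per gate, which changes the totals slightly. The function names and layer sizes are hypothetical.

```python
def gated_rnn_params(n_in, n_hidden, n_blocks):
    """Parameters in a gated recurrent layer with one bias vector per block.

    Each block (a gate or the candidate) has an input weight matrix
    (n_hidden x n_in), a recurrent weight matrix (n_hidden x n_hidden)
    and a bias vector (n_hidden).
    """
    per_block = n_hidden * n_in + n_hidden * n_hidden + n_hidden
    return n_blocks * per_block

def lstm_params(n_in, n_hidden):
    return gated_rnn_params(n_in, n_hidden, 4)  # forget, input, output gates + candidate

def gru_params(n_in, n_hidden):
    return gated_rnn_params(n_in, n_hidden, 3)  # update, reset gates + candidate

n_in, n_hidden = 128, 256  # hypothetical layer sizes
print(lstm_params(n_in, n_hidden))  # 394240
print(gru_params(n_in, n_hidden))   # 295680
```

At any layer size, a GRU under this convention needs three quarters of the parameters of an equivalent LSTM. It also needs correspondingly fewer multiplications per time step.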

What are recurrent neural networks used for?

Recurrent neural networks are used for natural language processing, speech recognition, signal processing, and time series forecasting.

1. Natural language processing

Natural language processing makes human-computer interaction more natural and efficient. Applications include:

- Machine translation: Understanding the nuances of language and producing fluent, context-aware translations.

- Chatbots and virtual assistants: Engaging in natural conversations, remembering past interactions and adapting responses accordingly.

- Text summarization and generation: Condensing factual texts into summaries or generating creative content such as poems or stories.

2. Speech recognition

Speech recognition and synthesis help bridge the gap between spoken and written communication through speech-to-text and text-to-speech conversion. Applications include:

- Understanding spoken language: Accurately transcribing speech, even in noisy environments or with complex grammar.

- Text-to-speech conversion: Generating natural-sounding speech from text, enhancing accessibility and human-computer interaction.

- Voice assistants and smart speakers: Responding to your voice commands and questions, and adapting to your preferences over time.

3. Signal processing and time series forecasting

Signal processing and time series forecasting involve extracting patterns from sequences of data to predict future trends. Applications include:

- Stock market prediction: Analyzing historical trends and financial news to make informed predictions about future prices.

- Anomaly detection in sensor data: Identifying unusual patterns in sensor data, indicating potential equipment failures or security breaches.

- Weather forecasting: Analyzing weather patterns from various sources to predict future weather conditions.
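Forecasting applications like these often start from the same framing. The network reads a window of past values and is trained to predict the next one. Below is a minimal sketch of that data preparation, assuming one-step-ahead forecasting with a fixed window. The function name, window size, and temperature readings are hypothetical.

```python
def make_windows(series, window):
    """Split a series into (input window, next value) pairs for one-step-ahead forecasting."""
    if window < 1:
        raise ValueError("window must be at least 1")
    pairs = []
    for start in range(len(series) - window):
        inputs = series[start:start + window]  # the sequence the RNN reads
        target = series[start + window]        # the value it should predict next
        pairs.append((inputs, target))
    return pairs

# Hypothetical daily temperature readings.
temps = [12.1, 12.8, 13.5, 13.0, 14.2, 15.1]
for inputs, target in make_windows(temps, 3):
    print(inputs, "->", target)
```

Each window is fed to the RNN one value at a time, and the final hidden state is used to predict the target. The same pattern carries over to sensor readings or prices.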

The specific application of a recurrent neural network depends on the chosen architecture and training data. With further exploration and innovation, RNNs are likely to play a significant role in shaping the future of AI and its impact on our lives.

The Future of Recurrent Neural Networks

Recurrent neural networks (RNNs) have reshaped the landscape of sequential data processing through their ability to learn and leverage context. Even so, research into recurrent architectures is far from finished.

Newer recurrent architectures aim to be more efficient and more specialized, which could let RNNs tackle even more complex challenges in healthcare, finance, and beyond. We may see them adopted more widely for personalized experiences, natural human-computer interaction, and even automated decision-making systems.

In the future, recurrent neural networks could transform music creation with personalized soundtracks and interactive experiences. They could also change how we understand video content through automatic captioning, summarization, and anomaly detection. In medicine, researchers are exploring RNNs to improve diagnoses by analyzing medical data and predicting patient outcomes.


However, as the evolution of AI and recurrent neural networks continues to accelerate, ethical considerations must be addressed head-on. This is especially true as RNNs become more powerful and more deeply integrated into critical areas like healthcare, finance, and security.

Transparency and explainability of RNN decisions will be fundamental to building trust and ensuring their responsible development.
