Porcha Woodruff was eight months pregnant and getting her two children ready for school when six police officers knocked on her door. They were there to arrest her on charges of carjacking and robbery.

“Are you kidding, carjacking? Do you see that I am eight months pregnant?” the lawsuit Woodruff filed against Detroit police reads. She was detained and questioned for 11 hours and released on a $100,000 bond.

Upon release, she immediately reported to a hospital where she was treated for dehydration.

Woodruff later found out that she was the latest victim of a facial recognition misfire, after her image was incorrectly matched to a nearby carjacking. According to The Guardian, the victim chose Woodruff’s picture as the perpetrator of the robbery - though nowhere did the investigator’s report describe the woman in the video footage as heavily pregnant.

A month later the charges were dismissed due to insufficient evidence.

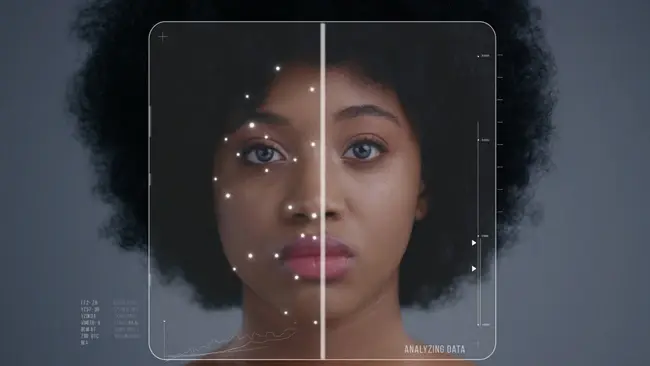

Facial recognition, law enforcement, and racist bias

Woodruff’s is the sixth known case of an arrest made due to false facial recognition by US law enforcement - and the third case in Detroit.

All six people who were falsely arrested are Black.

Privacy experts have warned for years about the violations and dangers that come with systems that claim to identify anyone by their face, not to mention their inability to properly identify people of colour.

Despite this, law enforcement agencies across the US continue to contract with facial recognition systems like Amazon’s Rekognition and Clearview AI.

Why do these mistakes happen? And where do we go from here?

So why do these mistakes happen? Well, much like any other AI-based system, facial recognition is only as good as the data it’s trained on. This data often reflects the implicit biases of those who build these data sets. As Amnesty International has noted, most of the images these systems are based on are of white faces.

In fact, according to a 2017 NIST study, the poorest accuracy rates come when identifying Black women aged 18 to 30. Moreover, false positive rates are highest for West and East African and East Asian people, and lowest for Eastern European individuals. The study concludes that the disparity between these groups is high, with a factor of 100 more false positives between countries.

In short, you are far less likely to be falsely identified for a crime if you’re white.

So where do we go from here? Well, some critics argue that even with perfect facial recognition technology, we wouldn’t be safer as a society.

Flawless facial recognition technology in common use could quickly create a boundless surveillance network reminiscent of George Orwell’s 1984, eradicating any privacy in public places.

In fact, many of these facial recognition systems have been banned in Europe and Asia, and barred from selling data to US companies.

And with over half (55%) of people wanting more restrictions on police use of facial recognition technology, public sentiment is clear.

As for Porcha Woodruff, she’s seeking financial damages from Detroit police.

“I don’t feel like anyone should have to go through something like this, being falsely accused,” Woodruff told the Washington Post. “We all look like someone.”
