At their core, language models are statistical tools designed to understand and generate human language. They work by assigning probabilities to sequences of words or characters. To put it simply, they excel at predicting what word or phrase is likely to come next in a given sentence or piece of text.
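To make "assigning probabilities to sequences" concrete, here is a minimal sketch of a bigram model, which estimates the next word purely from counts of word pairs. It is far simpler than a modern neural language model; the corpus and the helper names `train_bigram` and `next_word_probs` are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn the raw follow-up counts for `prev` into probabilities."""
    total = sum(counts[prev].values())
    if total == 0:
        return {}  # the word was never seen with a successor
    return {word: c / total for word, c in counts[prev].items()}

# Toy corpus (illustrative only).
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
counts = train_bigram(corpus)
probs = next_word_probs(counts, "the")
best = max(probs, key=probs.get)  # most likely word after "the"
```

In this toy corpus "cat" follows "the" more often than any other word, so it gets the highest probability. Real models condition on much longer contexts than a single previous word.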

The Technology Behind Language Models

Several key technologies power language models:

- Neural Networks: The backbone of most modern language models is a type of neural network called a Transformer. Transformers excel at processing sequential data (like language) and identifying long-range dependencies within a text.
- Deep Learning: Language models are trained with deep learning. Massive amounts of text are fed into the neural network, which automatically adjusts its internal parameters to model language better.
- Unsupervised Learning: Training primarily occurs through unsupervised learning (often called self-supervised learning in this context). The model isn't given hand-labelled "correct" outputs. Instead, the targets come from the text itself, such as the next word in a passage, so the model learns patterns and relationships within the language data.

How Language Models are Trained

The training process of language models centres around these core steps:

- Data Collection: Vast text datasets are gathered, ranging from books and articles to conversational records and code. This data provides the foundation for the model's learning.
- Preprocessing: The raw text data is cleaned and formatted into a form the model can process, for example through tokenization, which breaks text into words or other meaningful units.
- Training: The neural network is exposed to the preprocessed data. Its goal is typically to predict the next word in a sequence. Each prediction is compared with the word that actually follows, and the size of the error serves as feedback.
- Refinement: Over millions of iterations, the model's internal parameters are adjusted using gradients computed through a process called backpropagation. The goal is to continuously increase the probability the model assigns to correct word sequences.
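The steps above can be sketched end to end on a toy scale. The example below is an illustration under strong assumptions, not how production models are built: the "network" is a single table of scores (one row per current word), the tokenizer just splits on whitespace, and the gradient is written out by hand because with only one layer there is nothing to backpropagate through. All names (`tokenize`, `train`, the learning rate, the sample sentence) are invented for the sketch.

```python
import math

def tokenize(text):
    """Preprocessing: lowercase and split on whitespace (deliberately crude)."""
    return text.lower().split()

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train(text, epochs=200, lr=0.5):
    tokens = tokenize(text)
    vocab = sorted(set(tokens))
    index = {w: i for i, w in enumerate(vocab)}
    ids = [index[w] for w in tokens]
    pairs = list(zip(ids, ids[1:]))  # (current token, next token)
    size = len(vocab)
    # One row of scores per current token; all zeros means a uniform guess.
    weights = [[0.0] * size for _ in range(size)]
    losses = []
    for _ in range(epochs):
        total_loss = 0.0
        for cur, nxt in pairs:
            probs = softmax(weights[cur])
            total_loss += -math.log(probs[nxt])  # cross-entropy feedback
            for j in range(size):
                # Gradient of the loss with respect to each score in this row.
                grad = probs[j] - (1.0 if j == nxt else 0.0)
                weights[cur][j] -= lr * grad  # gradient-descent update
        losses.append(total_loss / len(pairs))
    return vocab, index, weights, losses

vocab, index, weights, losses = train("the cat sat on the mat and the cat slept")
probs_after_the = softmax(weights[index["the"]])
predicted = vocab[probs_after_the.index(max(probs_after_the))]
```

The average loss recorded in `losses` falls over the epochs, which is the "refinement" step in miniature: each update nudges probability toward the words that actually followed in the training text.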

The End Result

After extensive training, a language model becomes adept at two things: understanding natural language, including interpreting the structure, meaning, and intent within text, and generating new text that resembles the data it was trained on. The generated text can take various forms (e.g., translations, summaries, code, even stories).

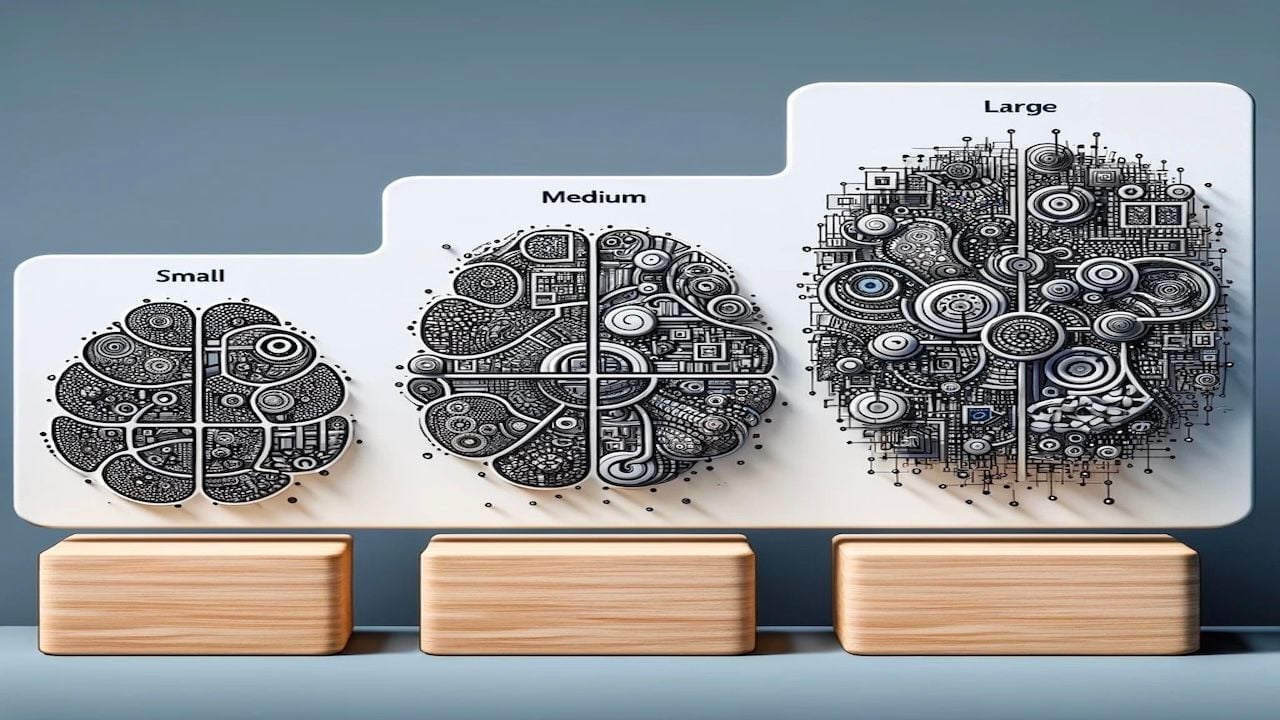

Types of Language Models

Language models come in various sizes and complexities, broadly categorized as:

Small Language Models:

- Relatively simple and computationally efficient.
- Limited vocabulary and understanding of complex sentence structures.
- Suitable for basic tasks like spell-checking, autocomplete on your phone, or basic chatbots.

Medium Language Models:

- Offer a balance between complexity and resource requirements.
- Capable of generating more coherent and diverse text.
- Can be used for tasks like email composition, content summaries, or more sophisticated chatbots.

Large Language Models (LLMs):

- Massively complex, trained on vast amounts of text data.
- Demonstrate an impressive understanding of language nuances, context, and reasoning.
- Enable applications like advanced translation and highly creative text generation across many formats, such as poems, code, scripts, musical pieces, emails, and letters.

How Language Models Are Used

The applications of language models across various sizes are plentiful:

- Text Generation: All sizes of language models help with generating text, ranging from simple sentence completion to lengthy articles or stories.
- Machine Translation: Language models translate between languages, with greater accuracy as model size increases.
- Question Answering: Models, especially LLMs, can locate and extract answers from large amounts of text.
- Text Summarization: Condensing longer documents into key points.
- Code Generation: Some models can help write and debug computer code.
- Conversational AI: Small and medium models often power simpler chatbots, while LLMs enable highly engaging and informative conversations.

Benefits of Each Size

- Small Models: Fast and efficient to run, even on devices with limited computational power (like smartphones). They are ideal for tasks where speed and low resource usage are prioritized.
- Medium Models: Provide a good balance between performance and complexity. They are well-suited for applications requiring more language understanding without the massive resource needs of LLMs.
- Large Language Models: Offer cutting-edge language capabilities. They produce highly human-like text and handle complex reasoning, but they can be slower and can struggle with factual accuracy, so they need careful oversight.

Combining Language Models

Language models of different sizes can be used in tandem, leading to some interesting hybrid approaches and creative outputs. Here's how they might be combined and what benefits this can bring:

Ways to Combine Language Models

- Ensemble Methods: Outputs from multiple language models (small, medium, or large) are combined strategically. This can improve accuracy and robustness by using the strengths of different models. For example, a small model might provide initial fast results, while a large model offers greater refinement.
- Knowledge Distillation: A large, powerful language model "teaches" a smaller model. This helps the smaller model gain some of the larger model's capabilities while remaining compact and efficient.
- Multi-Stage Pipelines: A small model might act as a filter or pre-processor for a larger model. For instance, a small model could handle basic classification tasks and only pass highly relevant inputs on to a larger model for more complex or creative generation.
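To give a feel for how a large model can "teach" a smaller one, here is a sketch of one common way to phrase a distillation objective: both models' raw scores are softened with a temperature, and the student is penalized by how far its distribution is from the teacher's (a KL divergence). The temperature value, the function names, and the example scores are assumptions chosen for illustration; real distillation setups vary and usually combine this with other loss terms.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with a temperature; higher temperature gives a flatter distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)  # teacher (target)
    q = softmax(student_logits, temperature)  # student (prediction)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Made-up scores over three candidate next words.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different word

loss_same = distillation_loss(teacher, teacher)
loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
```

A student that matches the teacher exactly gets a loss of zero, and the loss grows as the student's preferences drift away from the teacher's. Training the small model to reduce this value is what transfers some of the larger model's behaviour.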

Types of Output Using Hybrid Approaches

- Improved Efficiency and Accuracy: Blending small and large models can bring speed and resource efficiency without sacrificing quality. Tasks requiring accurate language processing but quick turnarounds are great candidates for this approach.
- Tailored Results: Specialized small models, focused on particular domains or tasks, can collaborate with a more general large model. This offers output that's both customized and highly sophisticated. Imagine a medical chatbot with a specialized small model for basic triage and a large model for detailed explanations.
- Hierarchical Text Generation: A small model could create a basic outline or structure for text. A larger model is then employed to add creativity, stylistic flair, and greater detail to the content.

Caveats

Combining language models of different sizes can be quite complex. Key considerations include:

- Compatibility: Not all models work together seamlessly. Careful selection and integration work might be needed to combine them effectively.
- Computational Cost: Even when efficiency is the goal, running multiple models concurrently still adds compute cost and can add latency.

Examples of Hybrid Models

Scenario 1: Ultra-Efficient News Briefing

Task: Produce a personalized daily news summary that's both concise and informative.

- Small Model: Scans vast amounts of news articles, rapidly identifying key topics and filtering out irrelevant content.
- Medium Model: Extracts the most pertinent facts and quotes from the relevant articles, forming a basic summary structure.
- Large Model: Adds nuanced language, contextualizes the summary, and tailors it to the user's specific interests (politics, tech, etc.).

Scenario 2: Real-Time Creative Writing Assistant

Task: Help a writer with world-building and character development during a story creation session.

- Small Model: Suggests basic plot outlines, genre tropes, or archetypes while the writer brainstorms.
- Medium Model: Fleshes out characters by providing background details, potential personality traits, or dialogue suggestions.
- Large Model: Helps ensure that the characters and plot maintain consistency as the story evolves, potentially highlighting contradictions or continuity issues.

Scenario 3: Multilingual Customer Service Triage

Task: Efficiently direct and assist customers of a multinational company with a wide array of needs across different languages.

- Multiple Small Models: Each specializes in a different language and provides initial support by classifying the customer's intent (technical issue, billing question, etc.).
- Medium Model: Routes the customer's message to the appropriate large model or human support team based on its category.
- Large Models: If needed, specialized large models are deployed for complex problems or specific languages, with translation between languages when required.
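The triage flow in Scenario 3 can be sketched as a small routing function. Everything here is a stand-in: the "small model" is plain keyword matching, the confidence formula and the 0.7 threshold are arbitrary, and the queue names are hypothetical. The point is only the shape of the pipeline, where cheap classification runs first and the expensive model is reserved for messages the cheap stage cannot place.

```python
from dataclasses import dataclass

@dataclass
class Intent:
    label: str
    confidence: float

# Hypothetical keyword lists standing in for a trained intent classifier.
KEYWORDS = {
    "billing": ("invoice", "refund", "charge"),
    "technical": ("error", "crash", "login"),
}

def small_model_classify(message):
    """Stand-in for a small per-language intent classifier."""
    words = message.lower().split()
    scores = {label: sum(kw in words for kw in kws) for label, kws in KEYWORDS.items()}
    label = max(scores, key=scores.get)
    hits = scores[label]
    if hits == 0:
        return Intent("unknown", 0.0)
    # Arbitrary confidence: more keyword hits means more confidence, capped at 1.
    return Intent(label, min(1.0, 0.5 + 0.25 * hits))

def route(message, threshold=0.7):
    """Routing stage: send confident cases to a queue, the rest to a large model."""
    intent = small_model_classify(message)
    if intent.confidence >= threshold:
        return f"{intent.label}_queue"
    return "large_model"

billing = route("I need a refund for this charge")
technical = route("The app shows an error")
escalated = route("Can you explain your data retention policy?")
```

With these stand-ins, the first two messages land in their category queues and the third, which matches no keywords, is escalated. In a real deployment the threshold would need tuning against measured misrouting and cost.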

Selecting the “Right” Model(s)

Selecting the right language model (or the decision to combine them) is crucial for businesses looking to harness the power of AI for their applications. Here's a breakdown of key considerations when building an LLM strategy:

Core Factors

Task Requirements:

- Complexity: Do you need basic text completion or high-level generation with deep reasoning? Small models can suffice for simple tasks, while complex demands often necessitate large models.
- Accuracy: How critical is factual correctness and consistent quality in the output? Highly sensitive applications (e.g., legal, financial) prioritize accuracy, often favoring medium or large models.
- Domain Specificity: Does your use case involve industry jargon or specific knowledge? Tailor-made smaller models, fine-tuned on specialized data, might be needed alongside or within a hybrid approach.

Resources:

- Computational Power: Large language models come with significant compute costs. Assess your infrastructure and budget before opting for massive models.
- Development Expertise: Do you have in-house AI/ML expertise for custom model training or management of hybrid systems? Outsourcing or pre-trained models might be more suitable if not.

Speed and Latency:

- Real-time Interactions: Low-latency applications (like chatbots) might favor smaller models for quick responses.
- Batch Processing: Tasks that don't need an immediate response (content summaries, research) can leverage larger models.

Security and Data Privacy:

- Sensitivity of Data: For highly confidential tasks, consider on-premise solutions or specialized models trained on private data to avoid external data exposure.

Considerations for Hybrid Approaches

- Efficiency vs. Capability Trade-off: Hybrid approaches offer efficiency advantages and customization. Assess if this balance is preferable to simply scaling to a larger pre-trained model.
- Cost-Effectiveness: While potentially more efficient, developing and maintaining a hybrid architecture involves complexities and potential overhead costs.
- Technical Feasibility: Integrating models of different sizes requires specialized skills to ensure seamless information flow and output consistency.

Additional Factors

- Ethical Implications: Language models can inadvertently reflect biases within training data. Consider strategies for reducing harmful outputs, especially in sensitive domains.
- Continuous Improvement: Language models are constantly evolving. Factor in plans for model updates, retraining, and monitoring.

Decision-Making Process

- Define Your Use Case: Clearly outline the problem you wish to solve and the expected characteristics of the desired outputs.
- Prioritize Key Considerations: Decide whether speed, accuracy, resource availability, or specialized needs matter most for your application.
- Evaluate Options: Explore pre-trained language models (off-the-shelf) across different sizes, and custom models if you have internal data and expertise. Consider whether a hybrid approach would be viable and advantageous.
- Test and Iterate: Experiment with potential language model solutions to get a real-world sense of performance and trade-offs.
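One lightweight way to work through the prioritize-and-evaluate steps is a weighted scoring table. The sketch below is only an aid to thinking: the criteria, the 1-5 ratings, and the weights are placeholder numbers, not measurements of any real model, and you would replace them with results from your own testing.

```python
def score_option(ratings, weights):
    """Weighted average of 1-5 ratings; higher is better."""
    total_weight = sum(weights.values())
    return sum(ratings[k] * w for k, w in weights.items()) / total_weight

def rank_options(options, weights):
    """Return option names ordered from best to worst weighted score."""
    return sorted(options, key=lambda name: score_option(options[name], weights), reverse=True)

# Placeholder ratings (5 = best on that criterion), for illustration only.
options = {
    "small": {"speed": 5, "accuracy": 2, "cost": 5},
    "medium": {"speed": 3, "accuracy": 3, "cost": 3},
    "large": {"speed": 2, "accuracy": 5, "cost": 1},
}

accuracy_first = {"speed": 2, "accuracy": 6, "cost": 2}
speed_first = {"speed": 6, "accuracy": 2, "cost": 2}

ranked_for_accuracy = rank_options(options, accuracy_first)
ranked_for_speed = rank_options(options, speed_first)
```

With these placeholder numbers, weighting accuracy most heavily puts the large option first, while weighting speed most heavily puts the small option first. The same candidates rank differently once your priorities change, which is why the priorities need to be settled before comparing models.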
