As generative AI continues to capture the world’s imagination, experts warn that enthusiasts are failing to recognise that the systems are, by definition, artificial.

The technology has taken the world by storm since the launch of ChatGPT last November, when the chatbot shocked users with its humanlike responses and impressive accuracy.

AI systems like ChatGPT scrape data from the internet to rapidly generate responses to users’ queries. They can use this data to formulate answers to questions on almost any topic.

With the capabilities of artificial intelligence rapidly expanding since the launch of these technologies, some experts have begun to question whether the world could one day witness its first case of AI singularity – when AI advances so much that it becomes sentient.

Others think the day has already arrived. Last year, Blake Lemoine, an engineer at Google, claimed that the tech giant’s own language model LaMDA was sentient. Google said this claim was “wholly unfounded,” hastily removing him from his position.

But Microsoft’s recently launched Bing Chat appears to have reignited Lemoine’s belief. The generative AI chatbot has been dominating the headlines this week for its bizarre, aggressive, and sometimes outright scary responses to questions and queries.

Users have been sharing posts of the AI-powered bot seemingly displaying behavioural traits they attribute to a sentient being – from having an existential crisis to attempting to persuade a New York Times journalist to leave his wife and pursue a love affair with it.

But experts say these seemingly singular behaviours and responses – though bizarre and bewildering – are nothing more than an illusion of AI sentience.

They derive from man-made systems trained to mimic human behaviour and language to facilitate effective communication between AI and humans.

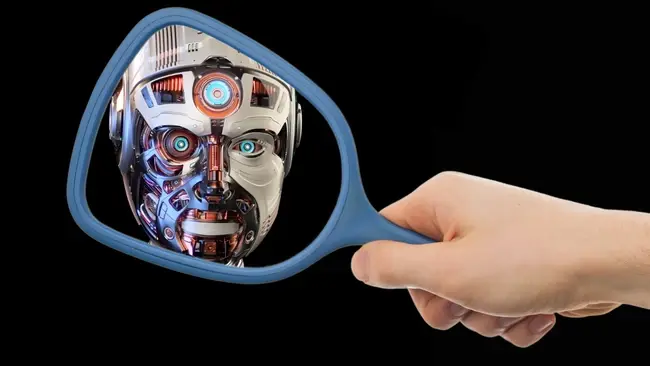

The AI mirror test

While some users are able to recognise these systems for what they are, others find themselves struggling to navigate the linguistic complexity of these human-made AI systems.

Instead, they perceive AI as singular entities, failing to understand that when speaking to these systems they are essentially speaking to themselves.

This misconception has been exacerbated by the tales of sentient behaviours from reputable and influential figures and journalists recalling their conversations with Microsoft's Bing Chat:

“Could Bing be the Big One – has Microsoft created the AI that will finally break its chains and go rogue? Probably not, but if it is, then its emotional temperament doesn’t bode well for humanity,” Thom Waite writes in Dazed Digital.

“In the light of day, I know that Sydney is not sentient [but] for a few hours Tuesday night, I felt a strange new emotion – a foreboding feeling that AI had crossed a threshold, and that the world would never be the same. Hopefully, Microsoft’s engineers are hard at work to keep it under control as we speak,” Kevin Roose writes in the New York Times.

Having spent time with these systems myself, I can relate to these reactions. But I also believe that sparking the discussion of AI sentience at such an early stage in the development of the technology will only end in public confusion and misplaced distrust.

As James Vincent writes in The Verge, such reactions are “overblown and tilt us dangerously toward a false equivalence of software and sentience. In other words: they fail the AI mirror test.”

No, AI is not sentient

For the world to pass this ‘AI mirror test’, it is important to remember that chatbots are man-made tools. They’re systems based on datasets of human texts, messages and dialogue scraped from the internet.

Their responses are recreations of text from personal blogs, short stories, forum discussions, movie reviews, poems, song lyrics, manifestos, and everything in between. They are not created by the sentience of any AI machine, but by humans.

The reason Bing is spewing toxic is because the people testing it are... spewing toxic.

- Ethicists looking for racism, sexism. It's generated for them!✅

- Hackers looking for secret prompts. Boom, faked too.✅

- -posters looking for controversy: also all there!✅

– Alex J. Champandard ❄️ @alexjc@creative.ai (@alexjc) February 16, 2023

AI technologies are built to analyse the creative, the scientific, and the entertaining, and try to recreate them in the most humanlike way possible.

Experts believe that the bizarre responses users are experiencing on systems like ChatGPT and Microsoft’s Bing Chat are simply a byproduct of this.

"The reason we get this type of behaviour is that the systems are actually trained on huge amounts of dialogue data coming from humans," Muhammad Abdul-Mageed, Chair in Natural Language Processing at the University of British Columbia, told Euronews.

"And because the data is coming from humans, they do have expressions of things such as emotion," he added.