You've probably heard of Large language models (LLMs), the technology driving the AI revolution across the tech space and powering everything from Google's search results to OpenAI's ChatGPT.

These AI tools can process and generate massive amounts of text, blurring the lines between human and machine capabilities. From composing realistic dialogue to translating languages in real time, LLMs are finding applications across businesses and personal use.

LLMs are rapidly transforming the way we interact with technology, but with so many players on the market how do you decide which one to use?

How Do LLMs Work

LLMs are trained on enormous amounts of text data, often scraped from books, articles, code, and the internet. This exposure allows them to learn complex relationships between words and how language is used in different contexts.

They rely on artificial neural networks, a complex system loosely inspired by the human brain. These networks can identify patterns in the data and use them to process information and generate text. Many advanced LLMs utilize the Transformer architecture, which excels at understanding the relationships between words in a sentence and allows them to generate coherent and grammatically correct text.

Training a large language model is a complex task that requires significant computational resources and expertise. LLMs thrive on massive amounts of text data. The more data you have, the better the model will understand the nuances of language and generate human-quality text. Training data can be from books, articles, websites or code.

Data preparation means making the raw data suitable for the LLM to use. By carefully preparing the data, we equip the LLM with the knowledge base it needs to excel at tasks like text generation, translation, and question answering. This process includes;

- Cleaning and Filtering: Raw data often contains errors, inconsistencies, and irrelevant information. Tools are used to remove typos, grammatical mistakes, and HTML code remnants, ensuring the LLM learns from clean text.

- Normalization: The data might undergo normalization to ensure consistency. This could involve converting text to lowercase, stemming words (reducing them to their base form), or lemmatization (converting words to their dictionary form).

- Tokenization: Here, the text is broken down into smaller units that the LLM can understand and process. This can be words, characters, or even sub-word units. Tokenization allows the LLM to identify patterns and relationships between these units.

- Vocabulary Building: The unique tokens encountered during tokenization form the LLM's vocabulary. Depending on the model architecture, there might be a limit to the vocabulary size, requiring decisions about which words to include or exclude.

Types of LLM

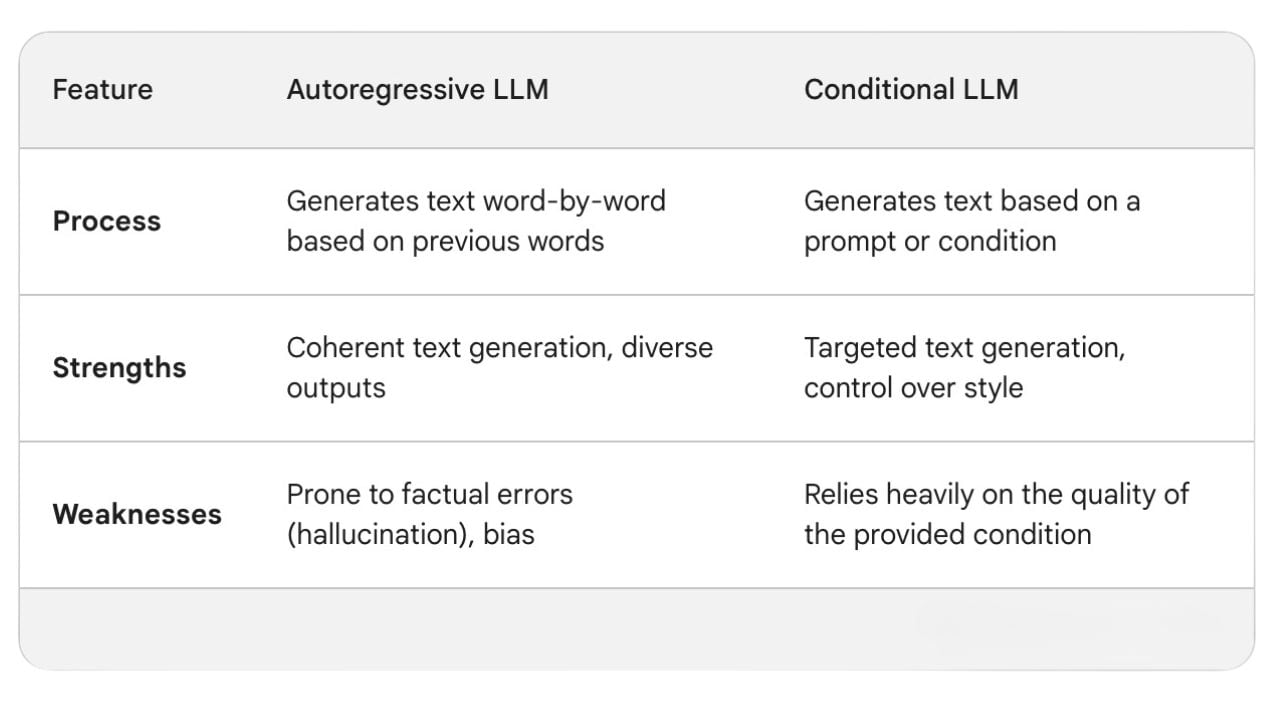

There are two main types of LLM, autoregressive and conditional generative. Autoregressive models generate text word by word, predicting the next word based on the ones before. Conditional generative models consider additional information, like a specific prompt or desired writing style, to tailor their text generation.

Autoregressive Models

Autoregressive models are better at generating coherent and grammatically correct text. They work like a sophisticated autocomplete that predicts the next word based on the ones before. Autoregressive LLMs are trained on colossal amounts of text data which they then analyze to learn the probabilities of which word typically comes next in a sentence.

When a user inputs a prompt starting sentence the autoregressive model predicts the rest of the paragraph word by word. This chain of predictions allows for short form or long form content to be generated.

Given a prompt or starting sentence, the model predicts the most likely following word, then uses that prediction to inform the next word, and so on. This chaining of predictions allows them to generate paragraphs, articles, even entire scripts.

Autoregressive models are perfect for overcoming writer's block, rewriting content and creating summaries. They are used to power chatbots in natural language understanding and generation as well as translating text whilst maintaining fluency.

When Coding Becomes Conversation

How natural language prompts and LLMs are redefining software delivery, talent models and accountability in enterprise development.

Conditional Generative Models

Unlike autoregressive models, conditional generative LLMs don’t operate word-by-word. They leverage additional information, known as ‘conditions’ that influence their text generation. These conditions could include starting topics or instructions of the model to follow, style indication such as professional essays or a casual email.

Conditional LLMs are more flexible than autoregressive models because they can consider the bigger picture. This allows for tailoring the output to match the condition, making it useful for creative content generation, with freedom to easily alter tone and format.

Best LLMs 2024

From streamlining workflows to unlocking creative possibilities, LLMs are revolutionizing human-computer interaction. But with a crowded market, choosing the right LLM for your needs can be a challenge - especially given the range of tools fighting for a space on the LLM leaderboard today.

To help you decide, we’re counting down ten of the best LLMs on the market in 2024, ranking each of them based on their features, popularity and performance.

Comments ( 0 )