Meta has just announced the launch of Llama 3, the next generation of its large language model, which brings a host of new AI features to Meta's social platforms.

The powerful AI, trained on a massive dataset of text and code, not only boasts improved capabilities but is also integrated into Meta's core social media platforms – Facebook, Instagram, and WhatsApp – as their new AI assistant, "Meta AI."

The announcement also came with talk of an even more powerful Llama on the horizon. Meta has hinted at a 400-billion-parameter model still in training, suggesting its commitment to pushing the boundaries of AI.

In this article, we will explore Meta Llama 3, how to use it and the differences between Llama 2 and Llama 3.

What is Meta Llama 3?

Meta Llama 3 is a large language model (LLM) developed by Meta that's trained on a massive amount of text data.

This allows it to understand and respond to language in a comprehensive way, making it suitable for tasks like writing different kinds of creative content, translating languages, and answering your questions in an informative way.

Llama 3 models will be available on AWS, Databricks, Google Cloud, Hugging Face, Kaggle, IBM WatsonX, Microsoft Azure, NVIDIA NIM, and Snowflake.

The development of Llama 3 represents a significant advancement in LLM technology, especially for Meta. Its openness encourages collaboration and paves the way for even more powerful and versatile AI tools in the future. As research and development progress, we can expect even more innovative applications for Llama 3 across various industries.

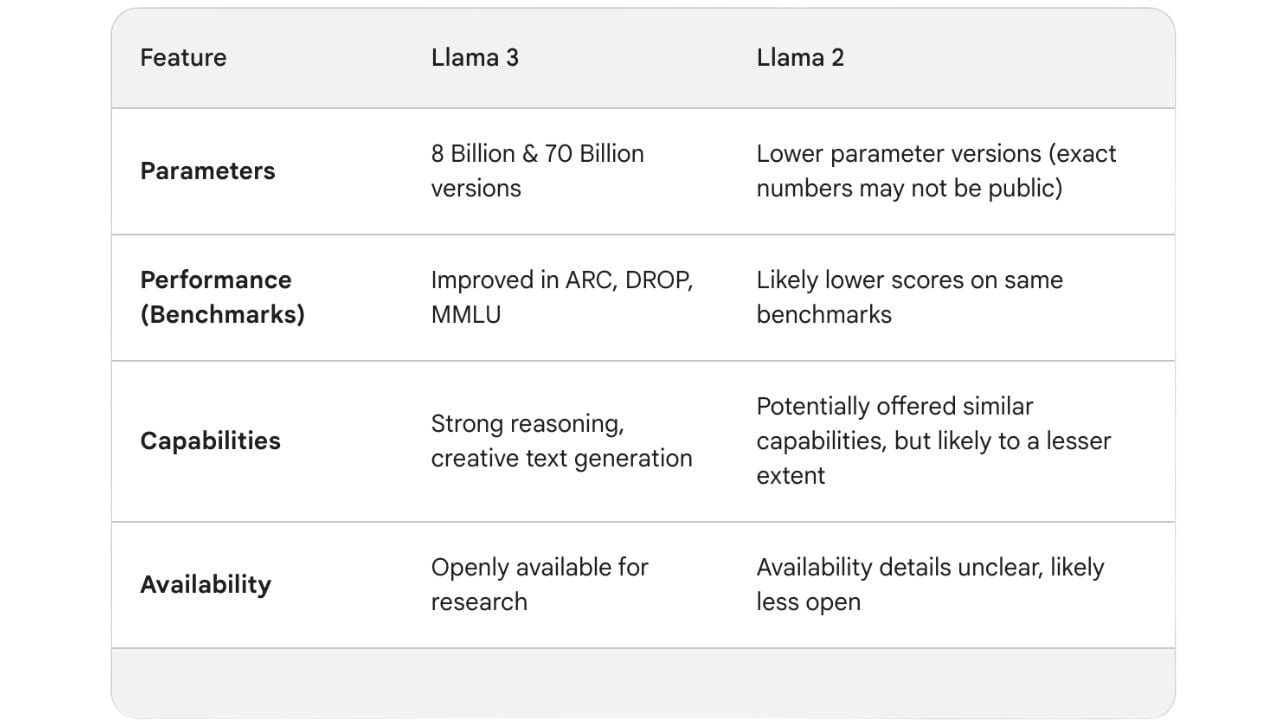

Llama 3 vs Llama 2: Which is better?

Compared with earlier versions such as Llama 2, Llama 3 offers better reasoning and code generation, and it follows instructions more effectively. Meta also reports that it outperforms other open models on benchmarks that measure language understanding and reasoning, such as ARC, DROP and MMLU.

One of the most intriguing new features of Llama 3 compared with Llama 2 is its integration into Meta's core products. The AI assistant is now accessible through the chat functions of Facebook Messenger, Instagram, and WhatsApp.

This translates to a more helpful and more accessible AI assistant. Imagine asking Facebook for a summary of a research paper, or requesting creative writing prompts on Instagram – Llama 3 aims to be there for these tasks and more.

Llama 3 is able to follow instructions and complete multi-step tasks more effectively and can generate various creative text formats like poems, code, scripts, and more. Crucially, researchers can access and build upon Llama 3, fostering further AI development.

Llama 3 vs Llama 2: Key Differences

1. Strong Benchmarks

Benchmarks are standardized tests that evaluate an LLM's capabilities across different aspects of language processing. They help researchers and developers compare LLMs and measure progress over time.

MMLU (Massive Multitask Language Understanding) tests an LLM's knowledge and problem-solving ability with multiple-choice questions across 57 subjects, from mathematics and history to law and medicine. ARC and DROP focus on reasoning. In the benchmark results reported for Llama 3, ARC is the AI2 Reasoning Challenge. Meta reports the harder ARC-Challenge set, which consists of grade-school science questions that need more than simple fact lookup to answer.
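MMLU and the ARC-Challenge set reported for Llama 3 are both multiple-choice, so a model's score is the share of questions it answers correctly. The Python sketch below illustrates that scoring step under simplifying assumptions. The `MultipleChoiceItem` type and the helper names are hypothetical. The prompt layout is one common convention, not Meta's exact evaluation setup. The model's answers are supplied as plain letters rather than generated by a model.

```python
from dataclasses import dataclass


@dataclass
class MultipleChoiceItem:
    """One benchmark question (hypothetical type): options plus the gold letter."""
    question: str
    choices: list[str]
    answer: str  # e.g. "B"


def format_prompt(item: MultipleChoiceItem) -> str:
    """Render the question with lettered options, a common (assumed) eval layout."""
    lines = [item.question]
    for letter, choice in zip("ABCDEFGHIJ", item.choices):
        lines.append(f"{letter}. {choice}")
    lines.append("Answer:")
    return "\n".join(lines)


def accuracy(items: list[MultipleChoiceItem], predictions: list[str]) -> float:
    """Fraction of items whose predicted letter matches the gold letter."""
    if len(items) != len(predictions):
        raise ValueError("need exactly one prediction per item")
    if not items:
        return 0.0
    correct = sum(
        pred.strip().upper() == item.answer.upper()
        for item, pred in zip(items, predictions)
    )
    return correct / len(items)


items = [
    MultipleChoiceItem("2 + 2 = ?", ["3", "4", "5", "6"], "B"),
    MultipleChoiceItem("Which is a mammal?", ["Shark", "Trout", "Whale", "Eel"], "C"),
]
print(format_prompt(items[0]))
print(accuracy(items, ["B", "A"]))  # one of two answers is correct
```

Real harnesses differ mainly in how they obtain the prediction. Some compare the model's likelihood for each option, and others parse a generated letter. The final accuracy calculation is the same.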

DROP stands for Discrete Reasoning Over Paragraphs. It is a reading-comprehension benchmark in which questions require discrete operations on a passage, such as counting, sorting or adding up numbers mentioned in the text.
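DROP answers are free-form, often a number, date or short span of text. They are therefore usually scored with exact match and a token-overlap F1 rather than plain accuracy. The sketch below is a simplified, SQuAD-style version of those metrics. The official DROP scorer also normalizes numbers and handles multi-span answers, which this example does not attempt. The function names are illustrative.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> list[str]:
    """Lowercase, strip punctuation and English articles, then split into tokens."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def exact_match(prediction: str, gold: str) -> float:
    """1.0 if the normalized token lists are identical, else 0.0."""
    return float(normalize(prediction) == normalize(gold))


def token_f1(prediction: str, gold: str) -> float:
    """Harmonic mean of token precision and recall (simplified, not the official scorer)."""
    pred_tokens = normalize(prediction)
    gold_tokens = normalize(gold)
    if not pred_tokens or not gold_tokens:
        return float(pred_tokens == gold_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eagles", "eagles"))
print(token_f1("4 touchdowns", "4"))
```

The partial-credit F1 rewards an answer like "4 touchdowns" for containing the gold answer "4". Exact match gives it no credit.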

Strong results on established tests like MMLU, ARC, and DROP indicate that Meta Llama 3 performs well at language comprehension, reasoning, and factual knowledge.

2. Creative Text Generation

Llama 3 has been trained on a massive dataset of text and code, including creative writing examples. This allows it to understand the patterns and structures of different text formats. When you provide a prompt or starting point, Llama 3 can use its knowledge to generate text that adheres to the format and style you specify.
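Llama 3's instruction-tuned models expect prompts wrapped in special header tokens. A system message is a natural place to pin down the format and style you want. The sketch below assembles a single-turn prompt by hand following the Llama 3 Instruct prompt format documented in Meta's model card. The function name and the haiku instruction are illustrative. In practice, Hugging Face's `tokenizer.apply_chat_template` can build this string from a list of messages, and later Llama versions may add extra fields to the template.

```python
def build_llama3_prompt(system: str, user: str) -> str:
    """Assemble a single-turn prompt in the Llama 3 Instruct chat format."""
    return (
        "<|begin_of_text|>"
        "<|start_header_id|>system<|end_header_id|>\n\n"
        f"{system}<|eot_id|>"
        "<|start_header_id|>user<|end_header_id|>\n\n"
        f"{user}<|eot_id|>"
        # Ending on an open assistant header cues the model to write its reply.
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


prompt = build_llama3_prompt(
    system="You are a poet. Reply only with a haiku (three lines, 5-7-5 syllables).",
    user="Write about the first snowfall in a city.",
)
print(prompt)
```

Putting the format constraint in the system message rather than the user turn keeps it in force if the conversation continues over several turns.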

Llama 3 offers a powerful tool for sparking creative ideas and generating drafts in various text formats. However, human input and expertise are still essential for refining or completing creative projects.

3. Open-Source

Meta's decision to make Llama 3 openly available is a significant development in the field of large language models (LLMs). Open-source software refers to code or models that are freely available for anyone to access, modify, and distribute. Llama 3 is released under Meta's own community license, which permits this subject to the license's terms. This allows researchers, developers, and even the general public to experiment with Llama 3, build upon it, and contribute to its further development.

This level of openness allows for both scrutiny and collaboration. Researchers can examine how Llama 3 works and identify potential biases or limitations, and developers can work together to improve the model. Together, these can accelerate innovation in AI and LLM development.

Researchers and students can also learn how LLMs work by studying the model and experimenting with it. Openness also supports ongoing discussion of the ethical implications of AI development and of potential biases within the model.

4. Integration

Meta has integrated Llama 3 into its virtual assistant, "Meta AI," enabling it to answer questions and complete tasks with greater understanding. The assistant is available across Meta platforms such as Facebook Messenger and may be integrated into search features in the future. Ultimately, Llama is likely to be built into products in ways that benefit users without them needing to know they are using an LLM.

What is Meta Llama 3.2?

Meta Llama 3.2 is the latest update to the tech giant's large language model. It adds multimodal capabilities that combine image and text understanding, alongside improved performance and broader language support, marking a significant step forward for Meta's LLMs.

Llama 3.2 introduces ‘native understanding’ of visual inputs. This means it can accurately describe an image, answer questions about a visual input, and understand images embedded in text-based documents.

This visual understanding will also be built into Meta’s AI image-editing tool, allowing users to alter an image with text instructions. In the example given at Meta Connect 2024, a picture of a man is edited with the text input ‘change the shoes to neon rollerskates’.

Llama 3.2 models can also process and generate longer pieces of text because of their expanded context length of 128K tokens.
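A context length is a shared budget: the prompt and the tokens the model generates must fit in the window together. The sketch below shows that arithmetic and how an over-long input could be split into window-sized pieces. It assumes "128K" means 128 × 1024 = 131,072 tokens. The helper names are hypothetical, and the token IDs stand in for what the model's real tokenizer would produce.

```python
# Assumption: "128K" is read as 128 * 1024 = 131,072 tokens.
CONTEXT_LENGTH = 128 * 1024


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """Prompt and generated tokens share one window, so both must fit."""
    return prompt_tokens + max_new_tokens <= context_length


def chunk_tokens(token_ids: list[int], max_new_tokens: int,
                 context_length: int = CONTEXT_LENGTH) -> list[list[int]]:
    """Split a long token sequence into pieces that each leave room for the reply."""
    budget = context_length - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens leaves no room for the prompt")
    return [token_ids[i:i + budget] for i in range(0, len(token_ids), budget)]


print(fits_in_context(120_000, 2_000))
print([len(c) for c in chunk_tokens(list(range(300_000)), max_new_tokens=1_072)])
```

Chunking is only one option. It loses cross-chunk context, so many applications summarize or retrieve the relevant passages instead of splitting mechanically.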

How to download Llama 3.2?

The open source models of Llama 3.2 are available for download both on llama.com and Hugging Face. They are also available through Meta’s partner platform ecosystem including AMD, AWS, Databricks, Dell, Google Cloud, Groq, IBM, Intel, Microsoft Azure, NVIDIA, Oracle Cloud and Snowflake.

- Visit llama.com

- Click ‘download models’

- Fill out your details in the ‘Request Access to Llama Models’ form

- Select the models you wish to download: the lightweight 1B and 3B models, the multimodal 11B and 90B models, or the text-only 8B, 70B and 405B models (from Llama 3.1)

- Review the Community License Agreement

- Click accept

- Follow the instructions to download the model

How to use Llama 3

Llama 3 will be available on all major platforms including cloud providers and model API providers. You can access the Meta Llama models directly from Meta or through Hugging Face or Kaggle.

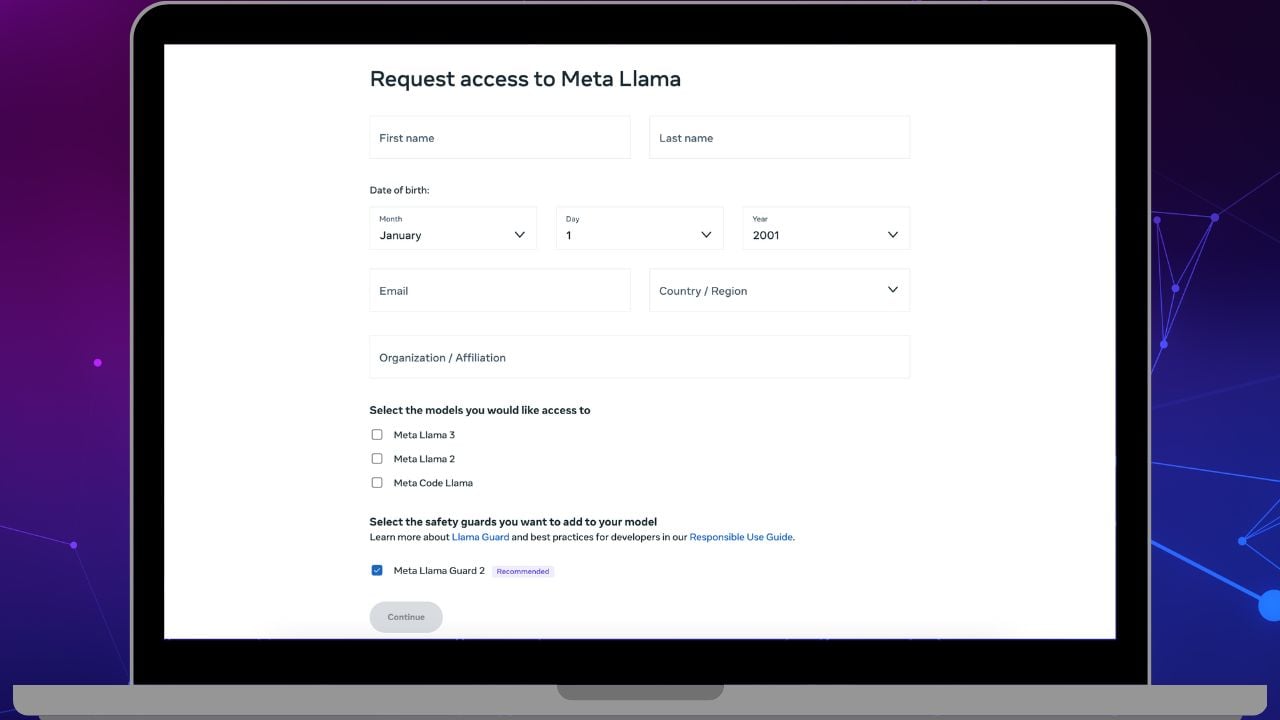

How to download Llama 3 from Meta:

- Visit Meta’s request access form.

- Fill in your information including name, email, date of birth and country.

- Select the models that you want to access.

- Review and accept the license agreements.

- For each model, you will receive an email containing instructions and a pre-signed download URL.
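Follow the instructions in Meta's email first. The Python sketch below only illustrates, in general terms, what fetching a large file from a pre-signed URL involves. A pre-signed URL carries a temporary signature in its query string, so the file can be fetched without a separate login while the link is valid. The function name, destination path and timeout are hypothetical choices, and the returned SHA-256 digest is only useful if you have a published checksum to compare against.

```python
import hashlib
import urllib.request
from pathlib import Path


def download(url: str, dest: Path, chunk_size: int = 1 << 20, timeout: float = 60) -> str:
    """Stream `url` to `dest` in chunks and return the file's SHA-256 hex digest.

    Streaming avoids holding a multi-gigabyte weights file in memory at once.
    """
    sha256 = hashlib.sha256()
    dest.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(url, timeout=timeout) as response, open(dest, "wb") as out:
        while True:
            chunk = response.read(chunk_size)
            if not chunk:
                break
            sha256.update(chunk)
            out.write(chunk)
    return sha256.hexdigest()


# Hypothetical usage; paste the URL from your own email and pick your own path:
# digest = download("https://...", Path("llama-3/model-weights.bin"))
```

Hashing while writing means the checksum is available as soon as the download finishes, without a second pass over the file.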

Meta’s ‘Llama Recipes’ repository contains open-source example code for fine-tuning, deployment and model evaluation.

The arrival of Meta Llama 3 marks a significant step forward in large language model technology.

Its focus on reasoning, creative text generation, and open-source accessibility positions it as a powerful tool for various applications. It offers a glimpse into the exciting potential of this evolving technology.

As research and development continue, we can expect even more advancements in the field of LLMs and the way they interact with our world.
