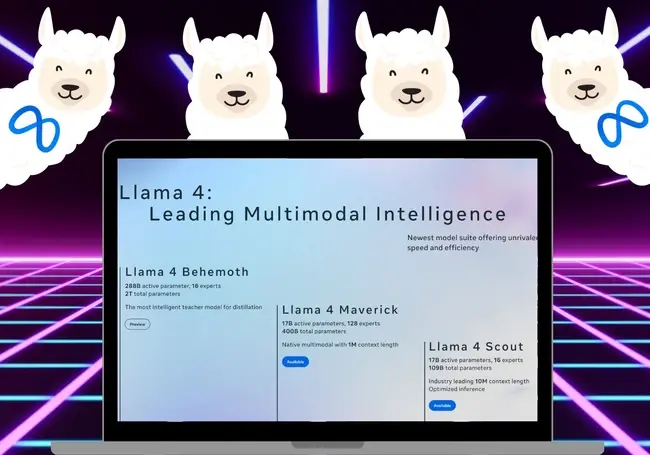

Meta has just released Llama 4, their next-generation large language model family. The initial release makes the highly efficient Llama 4 Scout and the powerful Llama 4 Maverick fully available.

The models boast native multimodality and innovative architectures that are set to power new AI features within Meta's social platforms.

Meta has also previewed what comes next, sharing details on the ongoing training of the much larger Llama 4 Behemoth.

In this article, we will explore Meta Llama 4 and go into depth on Llama 4 Scout, Llama 4 Maverick and Llama 4 Behemoth. We’ll also show you how to download and use the available Llama 4 models.

What is Meta Llama 4?

Meta Llama 4 is the latest generation of large language models from Meta. The goal of this new iteration is to allow people to build more personalised multimodal experiences.

This means applications built with Llama 4 will be able to adapt and respond to individual users in a more nuanced way. They can take into account not only the text a user provides but also other context, such as images.

Personalised and Multimodal

Llama 4 uses early fusion to integrate text and vision tokens into a unified model backbone. Meta describes early fusion as a major step forward because it lets the model be jointly pre-trained on large amounts of unlabeled text, image and video data.

In a personalised application, this could mean understanding user preferences, remembering past interactions that involved both text and images, and generating text responses that take that visual context into account.

Llama 4 is designed from its foundational architecture to understand both modalities together. Rather than encoding images separately and attaching them to a text-only model at a later stage, it processes image and text tokens in the same sequence with the same layers. As a result, visual features can be related to textual concepts from the very first stages of processing.
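Here is a toy illustration of the idea, under heavy simplifying assumptions. In an early-fusion design, image patches are projected into the same embedding space as text tokens, and the combined sequence is then fed to one shared transformer backbone. The sketch below only builds that fused input sequence. The embedding table, projection weights and dimensions are hypothetical toy values, not Llama 4's actual vision encoder or tokenizer.

```python
# Toy sizes and weights below are hypothetical, chosen only for illustration.
EMBED_DIM = 3

# Hypothetical text embedding table: token id -> vector in the shared space
TEXT_EMBEDDINGS = {
    0: [1.0, 0.0, 0.0],
    1: [0.0, 1.0, 0.0],
    2: [0.0, 0.0, 1.0],
}

# Hypothetical linear projection: a flattened 2-value image patch -> EMBED_DIM values
PATCH_PROJECTION = [
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 1.0],
]


def embed_text(token_ids):
    """Look up an embedding vector for each text token id."""
    return [TEXT_EMBEDDINGS[t] for t in token_ids]


def embed_patches(patches):
    """Project each flattened image patch into the same space as the text embeddings."""
    return [
        [sum(p[i] * PATCH_PROJECTION[i][j] for i in range(len(p))) for j in range(EMBED_DIM)]
        for p in patches
    ]


def early_fusion_input(prefix_ids, patches, suffix_ids):
    """Build ONE sequence mixing text and image embeddings for a single shared backbone."""
    return embed_text(prefix_ids) + embed_patches(patches) + embed_text(suffix_ids)


sequence = early_fusion_input([0, 1], [[0.5, 2.0]], [2])
print(len(sequence))  # 4 positions: two text tokens, one image patch, one text token
print(sequence[2])    # [0.5, 2.0, 2.5]
```

Text and image positions live in one sequence, so every attention layer of the backbone can relate a text token directly to an image patch. That shared processing from the first layer is the property early fusion is meant to provide.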

The model's ability to understand text and images together allows for more context-aware, personalised outputs.

Developers will be able to use this multimodality and greater personalisation to build solutions across a range of sectors. Educational content could be tailored to a student's learning style, more accessible interfaces could be built, interactive entertainment could be reimagined and online shopping could become far more personalised.

Mixture-of-Experts Architecture

The new Llama 4 models are Meta’s first models that use a mixture of experts (MoE) architecture.

This means the model is made up of many smaller, specialised "expert" networks, each of which tends to handle particular kinds of input.

A "gating network" acts as a router. For each incoming token it scores the experts and activates only the few most relevant ones. This "sparse activation" means only a fraction of the model's total parameters is used for any given token. That keeps inference efficient and allows the model to scale to larger sizes and handle more complex tasks.
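The following is a minimal sketch of sparse top-k routing, assuming a simple linear gate and toy experts. It is a generic illustration of the MoE idea, not Meta's implementation. Llama 4's actual router, expert sizes and number of experts per token are not modelled here, and all names (`moe_layer`, the toy experts and gate weights) are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x, experts, gate_weights, top_k=1):
    """Route one token vector x through only the top_k experts picked by a linear gate."""
    # Gate score for each expert: dot product of the token with that expert's gate vector
    logits = [sum(xi * wi for xi, wi in zip(x, w)) for w in gate_weights]
    # Indices of the top_k highest-scoring experts
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Softmax over the chosen scores only, so the mixing weights sum to 1
    mix = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        y = experts[i](x)  # only chosen experts are ever evaluated (sparse activation)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen


# Three hypothetical toy "experts", each a different function of the token vector
experts = [
    lambda v: [2 * a for a in v],
    lambda v: [a + 1 for a in v],
    lambda v: [-a for a in v],
]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]

out, chosen = moe_layer([3.0, 1.0], experts, gate_weights, top_k=1)
print(chosen, out)  # [0] [6.0, 2.0]
```

With `top_k=1` only expert 0 is evaluated, and the other experts' code never runs for this token. That skipped work is where the compute savings of sparse activation come from. Raising `top_k` trades more compute for a blend of several experts' outputs.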

New MetaP Training Technique

For Llama 4, Meta developed a new training technique it calls ‘MetaP’. It allows Meta to reliably set critical model hyper-parameters, such as per-layer learning rates and initialisation scales.

Hyper-parameters are the key settings, chosen before training starts, that control how a machine learning model learns during training. Here, the model in question is a large language model like Llama 4.

According to Meta, hyper-parameters chosen with MetaP transfer well across different batch sizes, model widths, depths and numbers of training tokens. Settings found on smaller runs can therefore be reused at larger scale. This makes training larger and more complex Llama 4 models less computationally expensive and more predictable.
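Meta has not published how MetaP works in detail. To make the idea of per-layer hyper-parameters that transfer across model sizes concrete, the sketch below swaps in a different, publicly documented technique: a simplified muP-style (Maximal Update Parametrization) rule for hidden layers trained with Adam. It is not MetaP. The function name and the proxy-model values are hypothetical.

```python
import math


def scale_hidden_layer(base_lr, base_init_std, base_width, width):
    """muP-style transfer for one hidden weight matrix trained with Adam (not MetaP).

    Relative to a narrow proxy model of width base_width, the learning rate
    shrinks in proportion to 1/width and the initialisation standard
    deviation in proportion to 1/sqrt(width).
    """
    ratio = base_width / width
    return {"lr": base_lr * ratio, "init_std": base_init_std * math.sqrt(ratio)}


# Hypothetical values tuned once on a small proxy model of width 256
proxy = {"base_lr": 1e-2, "base_init_std": 0.02, "base_width": 256}

for width in (256, 1024, 4096):
    print(width, scale_hidden_layer(width=width, **proxy))
```

With a rule of this kind, `base_lr` and `base_init_std` only have to be tuned once on a cheap proxy model. Wider models then inherit their per-layer settings by formula rather than through another expensive search.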

Meta also pre-trained Llama 4 on 200 languages, giving open-source fine-tuning efforts a broad multilingual foundation.

What is Llama 4 Scout?

Meta’s Llama 4 Scout is a powerful yet efficient multimodal large language model.

Llama 4 Scout stands out for its exceptionally long context window, for which Meta cites support for up to 10 million tokens. This makes it well suited to tasks that require understanding and processing vast amounts of information across different modalities.

Meta states that the 17 billion active parameter model has 16 experts and is the ‘best multimodal model in the world in its class [..] is more powerful than all previous generation Llama models, while fitting in a single NVIDIA H100 GPU.’
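Why does a model with only 17 billion active parameters need careful fitting onto one GPU? In a Mixture-of-Experts model, the weights of all experts must be held in memory, even though only some of them run for each token. The back-of-the-envelope sketch below makes three assumptions:

- Scout has 109 billion total parameters. This is the figure from Meta's model card; it is not stated in this article.
- The GPU is an 80 GB H100.
- Only weight memory is counted, ignoring the KV cache and activations.

```python
H100_MEMORY_GB = 80  # an 80 GB NVIDIA H100

# Assumption: Llama 4 Scout's TOTAL parameter count (all 16 experts), per Meta's model card.
SCOUT_TOTAL_PARAMS = 109e9


def weight_memory_gb(total_params, bits_per_param):
    """Memory for the weights alone, in decimal gigabytes (ignores KV cache and activations)."""
    return total_params * bits_per_param / 8 / 1e9


for label, bits in [("bf16", 16), ("int8", 8), ("int4", 4)]:
    gb = weight_memory_gb(SCOUT_TOTAL_PARAMS, bits)
    print(f"{label}: {gb:.1f} GB of weights, fits on one H100: {gb < H100_MEMORY_GB}")
```

Under these assumptions the weights alone come to about 218 GB in bf16 and 109 GB in int8. At 4 bits per parameter they drop to about 54.5 GB, which is consistent with Meta tying the single-H100 claim to Int4 quantization.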

What is Llama 4 Maverick?

Llama 4 Maverick is Meta's more powerful, general-purpose multimodal model. Like Scout, it has 17 billion active parameters, but it spreads its knowledge across 128 experts. It is designed to understand both text and images and to generate text across multiple languages.

This combination of quality and versatility makes it a strong contender for sophisticated AI assistants and applications that need to bridge language barriers and understand visual context.

Meta says Llama 4 Maverick achieves results comparable to DeepSeek-V3 on reasoning and coding ‘at less than half the active parameters’.
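As a quick check of the ‘less than half’ claim, the ratio below rests on two figures. One is Maverick's 17 billion active parameters, as stated by Meta. The other is DeepSeek-V3's reported 37 billion activated parameters per token, which comes from DeepSeek's technical report rather than from this article:

```latex
\frac{17 \times 10^{9}\ \text{(Llama 4 Maverick, active)}}{37 \times 10^{9}\ \text{(DeepSeek-V3, active)}} \approx 0.46 < 0.5
```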

What is Llama 4 Behemoth?

Llama 4 Behemoth is the largest and most powerful model in the Llama 4 family. It serves as Meta's "teacher model", used to improve the smaller Llama 4 models through distillation.
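Meta has not detailed its distillation recipe in this article. As a rough illustration of how a teacher model can improve smaller models, the sketch below uses the classic temperature-softened knowledge-distillation objective (Hinton et al.). The student is penalised by the KL divergence between the teacher's and the student's next-token distributions. This is a generic, textbook loss, not Meta's exact method, and the logits are made-up values.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled, numerically stable softmax."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    Multiplied by temperature**2 so gradient magnitudes stay comparable
    when the temperature changes.
    """
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]  # hypothetical next-token logits from a large teacher
student = [2.0, 1.5, 0.2]  # hypothetical logits from a smaller student
print(distillation_loss(student, teacher))  # positive: the student disagrees with the teacher
print(distillation_loss(teacher, teacher))  # 0.0 when the student matches the teacher
```

During training this term is typically combined with the ordinary next-token loss on ground-truth data. The student then learns both from the labels and from the teacher's softer view of which alternatives are plausible.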

Llama 4 Behemoth is currently in training and has not been released publicly. It is described as a very large model, with nearly two trillion total parameters and 288 billion active parameters. This makes it significantly larger than the currently released Llama 4 Scout and Llama 4 Maverick models.
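From these figures we can estimate the share of Behemoth's parameters that are active for any one token. The estimate takes "nearly two trillion" as 2 × 10^12, so the true fraction is slightly higher:

```latex
\frac{\text{active parameters}}{\text{total parameters}} \approx \frac{288 \times 10^{9}}{2 \times 10^{12}} = 0.144
```

In other words, only about one parameter in seven takes part in processing each token.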

Meta claims that Llama 4 Behemoth is already outperforming existing top models on STEM benchmarks.

Like the other Llama 4 models, Behemoth uses a Mixture-of-Experts (MoE) architecture, but at a much larger scale, with far more total and active parameters.

How To Download Llama 4?

Llama 4 Scout and Llama 4 Maverick are available now, whilst Llama 4 Behemoth is still in training and has only been previewed.

To use Llama 4 Scout or Llama 4 Maverick:

- Visit llama.com

- Click ‘download models’

- Fill out your details in the ‘Request Access to Llama Models’ form

- Select the model you wish to download.

- Review the Community License Agreement

- Click accept

- Follow the instructions to download the model
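The weights are also distributed through Hugging Face, where access is likewise gated behind the Llama 4 license. Below is a minimal sketch using the `huggingface_hub` library's `snapshot_download`. It assumes three things:

- Your access request has been approved.
- An access token is stored in the `HF_TOKEN` environment variable, or you are logged in via the Hugging Face CLI.
- The repository IDs below are still valid. They are current at the time of writing to our knowledge, so check the model cards before relying on them.

The `download` helper and `REPO_IDS` mapping are hypothetical names.

```python
import os

# Repository IDs on Hugging Face at the time of writing (assumption: verify on the model cards).
REPO_IDS = {
    "scout": "meta-llama/Llama-4-Scout-17B-16E-Instruct",
    "maverick": "meta-llama/Llama-4-Maverick-17B-128E-Instruct",
}


def download(model, local_dir):
    """Download a Llama 4 checkpoint once your gated-access request is approved."""
    # Imported here so this module loads even where huggingface_hub is not installed
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=REPO_IDS[model],
        local_dir=local_dir,
        token=os.environ.get("HF_TOKEN"),  # read-access token; None falls back to a saved login
    )


# Example (needs approved access, network and plenty of disk space):
# path = download("scout", "./llama-4-scout")
# print("Model files saved to", path)
```

The call is left commented out because a full checkpoint runs to hundreds of gigabytes in 16-bit precision. The files land in `local_dir`.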

How To Use Llama 4?

Llama 4 will be available on all major platforms including cloud providers and model API providers. You can access the Meta Llama models directly from Meta or through Hugging Face.
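Below is a minimal sketch of running Llama 4 Scout with the Hugging Face `transformers` library, following the pattern on Meta's Hugging Face model card as we understand it. It assumes the following:

- A `transformers` release with Llama 4 support (4.51 or newer), with `torch` and `accelerate` installed.
- Approved access to the gated repository.
- Enough GPU memory. In bfloat16 the full model needs more than a single GPU.

The image URL is a placeholder, and `build_messages` and `generate` are hypothetical helper names.

```python
# Assumption: the gated Hugging Face repository ID at the time of writing.
MODEL_ID = "meta-llama/Llama-4-Scout-17B-16E-Instruct"


def build_messages(prompt, image_url=None):
    """Build a single-turn chat request, optionally with one image."""
    content = []
    if image_url is not None:
        content.append({"type": "image", "url": image_url})
    content.append({"type": "text", "text": prompt})
    return [{"role": "user", "content": content}]


def generate(messages, max_new_tokens=256):
    """Load Llama 4 Scout and generate a text reply to the chat messages."""
    # Heavy imports are deferred so build_messages works without torch/transformers installed
    import torch
    from transformers import AutoProcessor, Llama4ForConditionalGeneration

    processor = AutoProcessor.from_pretrained(MODEL_ID)
    model = Llama4ForConditionalGeneration.from_pretrained(
        MODEL_ID,
        device_map="auto",  # spread layers across available GPUs (needs accelerate)
        torch_dtype=torch.bfloat16,
    )
    inputs = processor.apply_chat_template(
        messages,
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, dropping the echoed prompt
    new_tokens = outputs[:, inputs["input_ids"].shape[-1]:]
    return processor.batch_decode(new_tokens, skip_special_tokens=True)[0]


messages = build_messages(
    "Describe this image in two sentences.",
    image_url="https://example.com/photo.jpg",  # hypothetical placeholder URL
)
print(messages)
# reply = generate(messages)  # needs GPUs, approved model access and a large download
```

`build_messages` is kept separate so the request format can be inspected without loading the model. The heavy imports are deferred into `generate` for the same reason.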

To download Llama 4 from Meta:

- Visit Meta’s request access form.

- Fill in your information including name, email, date of birth and country.

- Select the models that you want to access.

- Review and accept the license agreements.

- For each model, you will receive an email that contains instructions and a pre-signed URL to download.

Meta’s ‘Llama Recipes’ repository provides open-source code for fine-tuning, deployment and model evaluation.
