If you know anything about machine learning, you’ll know how important reinforcement learning (RL) is. The technique has emerged as a powerful tool in AI, helping many of the machines we use today become faster and more efficient.

But what exactly is reinforcement learning, and why is it so important?

This article explains what reinforcement learning is and why it matters, and gives real-world examples of its algorithms in action.

What is reinforcement learning (RL)?

Reinforcement learning (RL) is a type of machine learning where an agent learns by interacting with its environment. It’s based on the concept of behavioral conditioning, in which an agent learns to associate certain actions with positive or negative rewards.

The goal of RL is to find an optimal policy that maximizes the cumulative reward over time. The agent tries to maximize a reward signal by taking actions that lead to positive rewards and avoiding actions that lead to negative rewards.
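"Cumulative reward" is commonly formalized as the discounted return, G_t = r_{t+1} + γ·r_{t+2} + γ²·r_{t+3} + …, where a discount factor γ between 0 and 1 makes rewards that arrive sooner count for more. The discount factor is an assumption of this sketch; the article does not say how rewards are combined over time.

```python
def discounted_return(rewards, gamma=0.9):
    """Discounted sum: rewards[0] + gamma*rewards[1] + gamma**2*rewards[2] + ...

    gamma (the discount factor) is an illustrative assumption, not a fixed constant.
    """
    total = 0.0
    # Work backwards using the recursion G_t = r_{t+1} + gamma * G_{t+1}
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# The same reward is worth less the later it arrives.
print(discounted_return([1, 0, 0]))  # 1.0
print(discounted_return([0, 0, 1]))  # ≈ 0.81
```

With γ close to 1 the agent weighs distant rewards almost as heavily as immediate ones; with γ near 0 it becomes short-sighted.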

Through these interactions, and by observing how the environment responds, the agent learns the optimal behaviour, much as children explore the world around them and learn which actions help them achieve a goal.

Because reinforcement learning maximizes the expected cumulative reward over time rather than the immediate payoff, it is especially useful in fields like robotics, where robots learn to navigate, manipulate objects, and process information as quickly and effectively as possible.

How does reinforcement learning (RL) work?

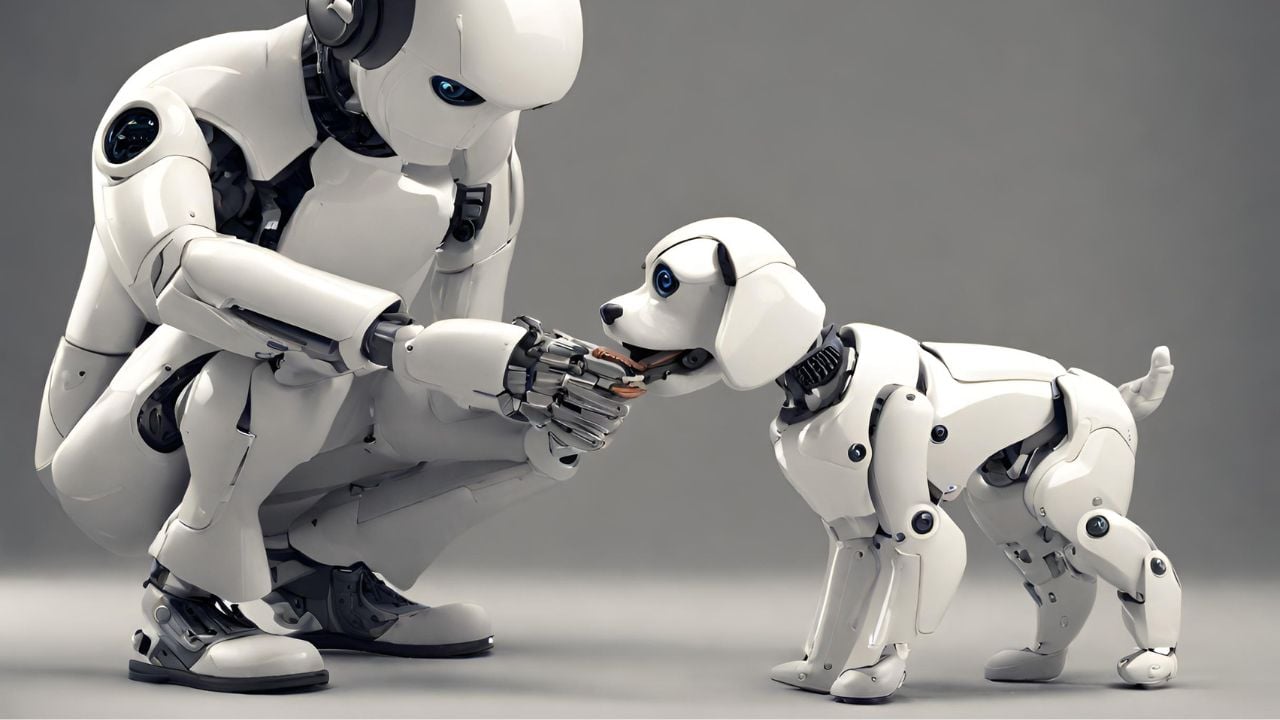

Think of reinforcement learning as training a puppy. You reward good behaviours and discourage unwanted ones, gradually shaping the puppy into behaving how you want it to. RL works in much the same way, with algorithms shaping an agent's behaviour by rewarding successful actions and discouraging unsuccessful ones.

During the reinforcement learning process, the agent interacts with an environment, which can be physical or simulated. The environment then provides the agent with sensory inputs, such as observations or measurements, and responds to the agent's actions according to the rules of the environment.

Like the puppy, the agent is the decision-making entity. It receives sensory inputs from the environment, processes them, and selects an action to execute, with the goal of maximizing its cumulative reward over time. It learns through trial and error: as rewards come in, it updates its policy (its rule for choosing an action in each state) and its value function (its estimate of how much future reward a state or action will lead to) to make better decisions.

The RL process can be summarised in a series of steps:

- The agent observes the current state of the environment.

- Based on this observation, the agent selects an action.

- The environment transitions to a new state, and the agent receives a reward.

- The agent updates its knowledge based on the received reward and refines its strategy for future actions.
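These four steps map directly onto a loop. The sketch below assumes a hypothetical toy environment (`CorridorEnv`, a five-cell corridor with a reward at the right end) and a deliberately naive agent that acts at random and only records the average reward it has seen for each state-action pair. The class and method names are illustrative, not taken from any particular library; the algorithms in the next section replace `act` and `update` with something smarter.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: positions 0..length-1, goal at the right end."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (left) or +1 (right); walls clamp the position
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


class RandomAgent:
    """Hypothetical agent: random actions, running average reward per (state, action)."""

    def __init__(self, actions=(-1, 1)):
        self.actions = actions
        self.avg_reward = {}
        self.counts = {}

    def act(self, state):
        return random.choice(self.actions)

    def update(self, state, action, reward):
        key = (state, action)
        self.counts[key] = self.counts.get(key, 0) + 1
        old = self.avg_reward.get(key, 0.0)
        self.avg_reward[key] = old + (reward - old) / self.counts[key]


def run_episode(env, agent, max_steps=100):
    state = env.reset()                              # 1. observe the current state
    total_reward = 0.0
    for _ in range(max_steps):
        action = agent.act(state)                    # 2. select an action
        next_state, reward, done = env.step(action)  # 3. new state and a reward
        agent.update(state, action, reward)          # 4. update knowledge
        total_reward += reward
        state = next_state
        if done:
            break
    return total_reward


random.seed(0)
print(run_episode(CorridorEnv(), RandomAgent()))
```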

Examples of RL Algorithms

Reinforcement learning (RL) has a wide range of algorithms, each with its strengths and weaknesses. Here are some of the most commonly used RL algorithms and how they work:

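- Q-learning. Q-learning is a model-free RL algorithm that estimates the value of taking a specific action in a given state. It iteratively updates a Q-value table, which stores the expected cumulative reward for each state-action pair, using the best available action in the next state as its target; this makes it an off-policy method. In its basic tabular form, Q-learning needs discrete states and actions. Large state spaces can be handled by approximating the table with a function (deep Q-networks use a neural network), while continuous action spaces usually call for other methods, such as policy gradients.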

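- SARSA. SARSA (State-Action-Reward-State-Action) is another model-free RL algorithm that also learns Q-values. The difference lies in the update target: Q-learning assumes the best action will be taken in the next state, whereas SARSA uses the action the agent actually takes there, so it learns the value of the policy it is following (an on-policy method). Because that policy includes exploratory moves, SARSA tends to learn more cautious behaviour than Q-learning when exploration is risky.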

- Policy gradient methods. Policy gradient methods are a class of RL algorithms that directly optimize the policy function, which maps states to actions. They use gradient-based optimization techniques to update the policy parameters in the direction of increasing expected rewards. Policy gradient methods are particularly well-suited for continuous action spaces.

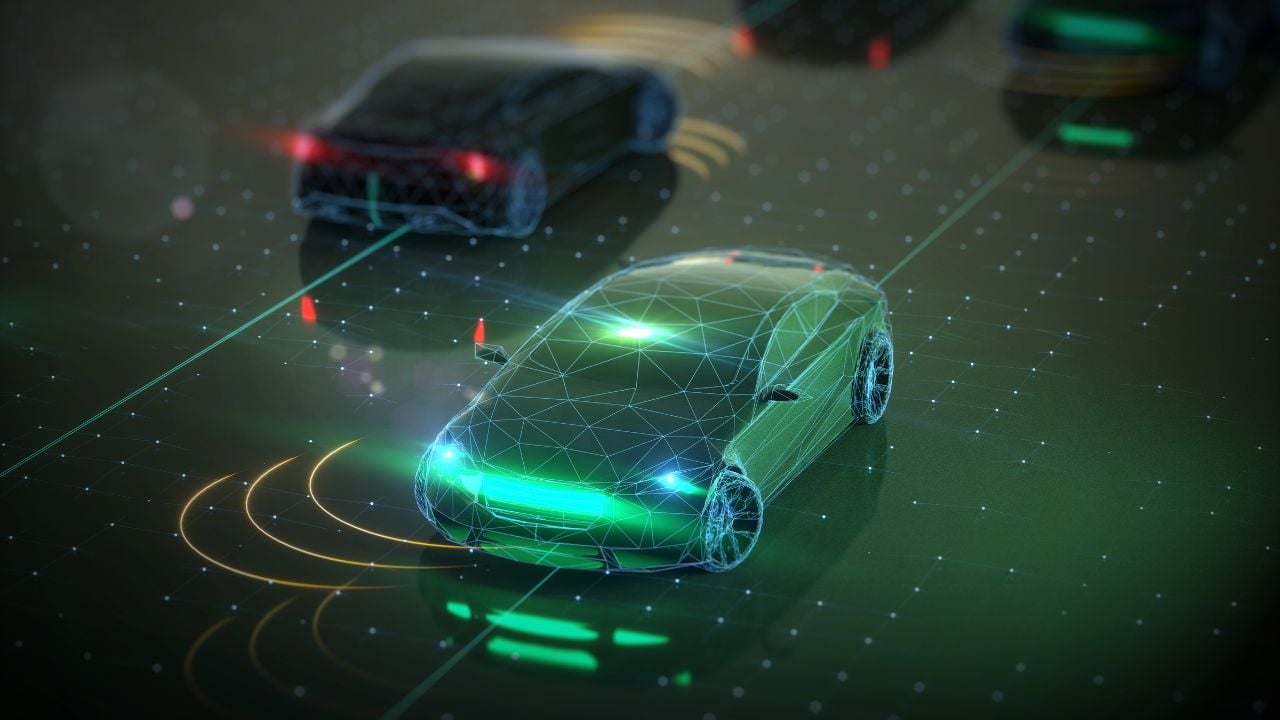

- Deep reinforcement learning (DRL). DRL combines RL with deep learning techniques, using neural networks to represent the policy, value function, or other components of the RL algorithm. DRL has been particularly successful in complex domains like game playing (for example, Atari video games and the board game Go) and is being actively researched for robotics and self-driving cars.

- Actor-critic algorithms. Actor-critic algorithms are a type of RL algorithm that separates the policy learning from the value function estimation. The actor is responsible for selecting actions, while the critic evaluates the value of the current state and the policy's performance. Actor-critic algorithms are often used in DRL settings.
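The difference between Q-learning and SARSA is easiest to see side by side. This is a minimal tabular sketch on a hypothetical five-cell corridor task (made up for illustration, not a standard benchmark). The ε-greedy exploration helper and all hyperparameters (`alpha`, `gamma`, `epsilon`, episode counts) are assumptions. The two update functions differ only in their target: Q-learning bootstraps from the best next action, SARSA from the next action the agent actually picked.

```python
import random
from collections import defaultdict

ACTIONS = (-1, 1)  # move left or right


def corridor_step(state, action, length=5):
    """Hypothetical toy task: walk along a corridor; reward 1 for reaching the right end."""
    next_state = min(max(state + action, 0), length - 1)
    done = next_state == length - 1
    return next_state, (1.0 if done else 0.0), done


def epsilon_greedy(Q, state, epsilon):
    """Explore with probability epsilon, otherwise exploit (ties broken at random)."""
    if random.random() < epsilon:
        return random.choice(ACTIONS)
    best = max(Q[(state, a)] for a in ACTIONS)
    return random.choice([a for a in ACTIONS if Q[(state, a)] == best])


def q_learning_update(Q, s, a, r, s_next, done, alpha, gamma):
    # Off-policy target: value of the best next action, whatever the agent does next
    target = r if done else r + gamma * max(Q[(s_next, b)] for b in ACTIONS)
    Q[(s, a)] += alpha * (target - Q[(s, a)])


def sarsa_update(Q, s, a, r, s_next, a_next, done, alpha, gamma):
    # On-policy target: value of the next action the agent actually chose
    target = r if done else r + gamma * Q[(s_next, a_next)]
    Q[(s, a)] += alpha * (target - Q[(s, a)])


def train(method, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    random.seed(seed)
    Q = defaultdict(float)  # Q-table: (state, action) -> estimated return, initially 0
    for _ in range(episodes):
        s = 0
        a = epsilon_greedy(Q, s, epsilon)
        for _ in range(100):  # cap the episode length
            s_next, r, done = corridor_step(s, a)
            if method == "sarsa":
                a_next = epsilon_greedy(Q, s_next, epsilon)
                sarsa_update(Q, s, a, r, s_next, a_next, done, alpha, gamma)
            else:
                q_learning_update(Q, s, a, r, s_next, done, alpha, gamma)
                a_next = epsilon_greedy(Q, s_next, epsilon)
            s, a = s_next, a_next
            if done:
                break
    return Q


Q = train("q_learning")
# Greedy action in each non-terminal state after training
print([max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(4)])
```

On this deterministic toy task both methods should learn to head right. The difference matters in tasks where exploratory moves are costly, because SARSA's estimates account for the occasional random step while Q-learning's do not.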

These are just a few examples of RL algorithms available today. The choice of algorithm depends on the specific problem, the characteristics of the environment, and the desired trade-off between exploration and exploitation.
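The policy-gradient and actor-critic ideas from the list above can be combined in a very small example. The sketch below is a one-step actor-critic on a hypothetical two-armed bandit (a single-state problem invented for illustration, with made-up payout probabilities). The actor is a softmax policy over two action preferences, updated with a policy-gradient step; the critic keeps a single value estimate, and its prediction error tells the actor whether an action turned out better or worse than expected. Learning rates, step count and payouts are arbitrary assumptions.

```python
import math
import random


def softmax(prefs):
    """Turn action preferences into probabilities (shifted by the max for numerical stability)."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def pull(arm):
    """Hypothetical two-armed bandit: arm 0 pays 1 with prob 0.2, arm 1 with prob 0.8."""
    return 1.0 if random.random() < (0.2, 0.8)[arm] else 0.0


def actor_critic(steps=2000, actor_lr=0.1, critic_lr=0.05, seed=0):
    random.seed(seed)
    prefs = [0.0, 0.0]  # actor: softmax policy parameters
    value = 0.0         # critic: estimated expected reward of the (single) state
    for _ in range(steps):
        probs = softmax(prefs)
        arm = random.choices([0, 1], weights=probs)[0]  # sample an action from the policy
        reward = pull(arm)
        # TD error; each episode ends after one step, so there is no gamma * V(next_state) term
        td_error = reward - value
        value += critic_lr * td_error  # critic moves its estimate toward the observed reward
        for k in range(2):
            # Gradient of log pi(arm) with respect to prefs[k] for a softmax policy
            grad_log_pi = (1.0 if k == arm else 0.0) - probs[k]
            prefs[k] += actor_lr * td_error * grad_log_pi  # policy-gradient step scaled by the critic
    return softmax(prefs), value


probs, value = actor_critic()
print(probs, value)
```

Sampling from the softmax policy is also how this agent explores: the weaker arm keeps a small probability of being tried, which is one way of handling the exploration-exploitation trade-off. In a multi-state task the critic would estimate V(s) for each state and the error would become r + γV(s′) − V(s).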

Why is reinforcement learning important?

The importance of reinforcement learning stems from its unique ability to learn from experience, adapt to changing environments, and make real-time decisions that maximize long-term rewards.

RL algorithms are particularly well-suited for tackling complex problems that involve uncertainty and delayed rewards. Unlike supervised learning techniques, which rely on labelled examples, RL agents learn through trial and error, making them suitable for situations where labelled data is scarce or impossible to obtain.

Applications of reinforcement learning

RL's ability to adapt to changing environments is crucial for several real-world applications. In dynamic environments like robotics and self-driving cars, RL agents can continuously learn and adjust their behaviour based on the latest information, potentially making them more robust and effective than fixed rule-based systems.

RL's ability to handle delayed rewards also makes it suitable for long-term planning and optimization. By considering the cumulative effects of actions over time, RL agents can make decisions that maximize long-term goals, even when the immediate consequences of an action are not apparent.

Some of the key applications of Reinforcement Learning include:

- Robotics: RL plays a crucial role in advancing robotics, enabling robots to perform complex tasks in unstructured environments. RL algorithms can train robots to navigate, manipulate objects, interact with humans, and perform autonomous tasks in dynamic settings. For instance, RL-powered robots can be used in manufacturing, warehouse operations, and search and rescue missions.

- Self-Driving Cars: RL is also an active area of research for self-driving cars. RL algorithms can train self-driving cars to make real-time decisions while navigating roads, handling traffic situations, and adapting to unexpected conditions. RL-powered self-driving cars have the potential to revolutionize transportation, enhancing safety, efficiency, and accessibility.

- Resource Management: RL is increasingly being applied to optimize resource allocation in various scenarios. RL algorithms can be used to manage energy distribution, network traffic, and supply chains effectively. For instance, RL can optimize power grid operations, minimize network congestion, and improve logistics planning.

- Healthcare: RL has promising applications in personalized medicine, treatment optimization, and drug discovery. RL algorithms can learn from patient data to suggest personalized treatment plans. Additionally, RL can help design drug candidates, using simulations of molecular interactions or predictions of drug efficacy as its reward signal.

- Finance: RL is gaining traction in the financial sector for investment decision-making, risk management, and algorithmic trading. RL algorithms can analyze market data, identify trading opportunities, and make informed decisions that maximize returns while minimizing risks. RL-powered trading strategies have the potential to enhance portfolio management and financial forecasting.

Benefits of reinforcement learning

Reinforcement learning (RL) offers several key benefits that have made it an increasingly popular and powerful approach to machine learning.

Its ability to learn from experience, adapt to changing environments, and make real-time decisions that maximize long-term rewards makes it a valuable asset for solving complex problems.

Here are some of the main benefits of reinforcement learning:

- Adaptability to Uncertainty: RL excels in dynamic and unpredictable environments where traditional machine learning techniques struggle. By learning through trial and error, RL agents can adapt to changing conditions and make decisions that maximize their long-term rewards, even when the environment is not fully known or predictable.

- Balancing Exploration and Exploitation: RL algorithms balance exploration (trying new actions to discover better strategies) and exploitation (taking the best-known actions to collect reward). Striking this balance is crucial for finding optimal solutions in complex environments, especially when rewards are delayed or the environment is constantly changing.

- Handling Delayed Rewards: RL can handle situations where the immediate consequences of an action may not be apparent, and the full reward is only realized after a sequence of actions or over an extended period. This capability is essential for long-term planning and optimization in problems like resource management and investment planning.

- Real-Time Decision-Making: Once trained, RL policies can make decisions in real time as the environment evolves. This capability is critical for applications like robotics and self-driving cars, where immediate responses are necessary to navigate complex environments and avoid hazards.

- Learning from Interactions: RL can learn from direct interactions with the environment, without the need for labeled data or pre-programmed rules. This makes RL suitable for tasks where traditional data-driven approaches are impractical, such as training robots to perform complex tasks or optimizing systems that operate in dynamic environments.

- Potential for General AI: Some researchers see RL as a promising route toward artificial general intelligence (AGI), the ability to solve a wide range of problems without task-specific programming. RL's ability to learn from experience, adapt to new situations, and make decisions under uncertainty is what makes it attractive for this goal.

Challenges and Considerations

Despite its numerous benefits, reinforcement learning (RL) faces several challenges and considerations that need to be addressed for its successful application. These challenges stem from the complexity of real-world environments, the need for efficient learning algorithms, and the ethical implications of autonomous decision-making.

RL algorithms often need a large amount of data and experience to learn effectively, which can be costly and time-consuming to obtain. This is particularly challenging in situations where interactions with the environment are expensive or difficult to replicate, such as real-world robotics or self-driving cars.

RL algorithms can also struggle when the consequences of an action are delayed or the full reward is only realized after a long sequence of actions. This makes it difficult to work out which actions deserve credit for a reward (the credit assignment problem), which slows learning.

RL agents also often fail to generalize their learned policies from one task or environment to another. Transfer learning techniques can help bridge this gap, allowing agents to leverage knowledge from previous experiences in new situations.

Final Thoughts

Reinforcement learning is a powerful and versatile tool for tackling complex problems in a range of domains. Its ability to learn from experience, adapt to changing environments, and make real-time decisions that maximize long-term rewards makes it a valuable asset for solving problems in robotics, game playing, self-driving cars, resource management, healthcare, and finance.

While RL faces challenges with sample efficiency, exploration-exploitation trade-offs, delayed rewards, generalization, and ethical considerations, its potential benefits far outweigh these challenges.

As RL research continues to advance, we can expect to see even more innovative and impactful applications emerge, transforming the way we interact with the world around us.
