If you’ve been following the rise and explosion of AI in recent years, you've probably heard of machine learning (ML).

ML models are the brains behind large language models (LLMs) like OpenAI's ChatGPT, allowing computers to automatically learn from data and past experiences to identify patterns and make predictions with minimal human intervention.

They’re also fundamental to crucial applications across various industries, from recommendation systems like those used by Netflix and Amazon to fraud detection in financial institutions and large corporations.

But what even is machine learning, and why has it become so central to many of the technologies we use today?

This article tells you everything you need to know about machine learning (ML), including what it is, how it works and its algorithms, along with examples of ML models in action.

What is Machine Learning (ML)?

Machine Learning (ML) is a branch of AI that focuses on enabling computers to learn and make decisions or predictions based on data, without being explicitly programmed for specific tasks. Instead of relying on static instructions, ML systems use algorithms and statistical models to analyse data, identify patterns, and improve their performance over time.

Machine learning models are trained on large datasets of labelled examples, allowing them to identify patterns and make predictions. These models adapt and evolve as they encounter new information, making them capable of handling tasks that range from image recognition and natural language processing to predictive analytics and recommendation systems.

This has made them a crucial component of many modern technologies, powering applications like facial recognition, natural language processing, and customised recommendations.

How does machine learning work?

Machine learning algorithms process large amounts of data and identify patterns or relationships that can be applied to future tasks or predictions. This learning process involves several steps: collecting data, choosing an algorithm, training the model, testing it, and deploying it to make real-world decisions or predictions.

First, data is collected and prepared. The data can be structured data (like spreadsheets with rows and columns) or unstructured data (such as images, text, or audio), so needs to be cleaned and formatted to ensure accuracy, as noisy or irrelevant data can negatively affect the model's performance. Once the data is ready, the ML algorithm is selected based on the type of task, whether it's classification, regression, clustering, or another problem type.

The next step is training the model. This involves feding the algorithm with data and so that it learns to recognize patterns or relationships between the input data and the desired output. After training, the model is tested on new, unseen data to evaluate its performance.

This helps ensure that the model can generalize and make accurate predictions on data it hasn't encountered before to prevent issues like overfitting or underfitting. Once validated, the model can be deployed in real-world applications, where it continues to learn from new data, improving its predictions or decisions over time.

Machine learning models continuously evolve, refining themselves as more data becomes available. However, ensuring the model remains accurate and free from bias and issues like requires constant monitoring, fine-tuning, and updates to prevent data drift and maintain reliability in changing environments.

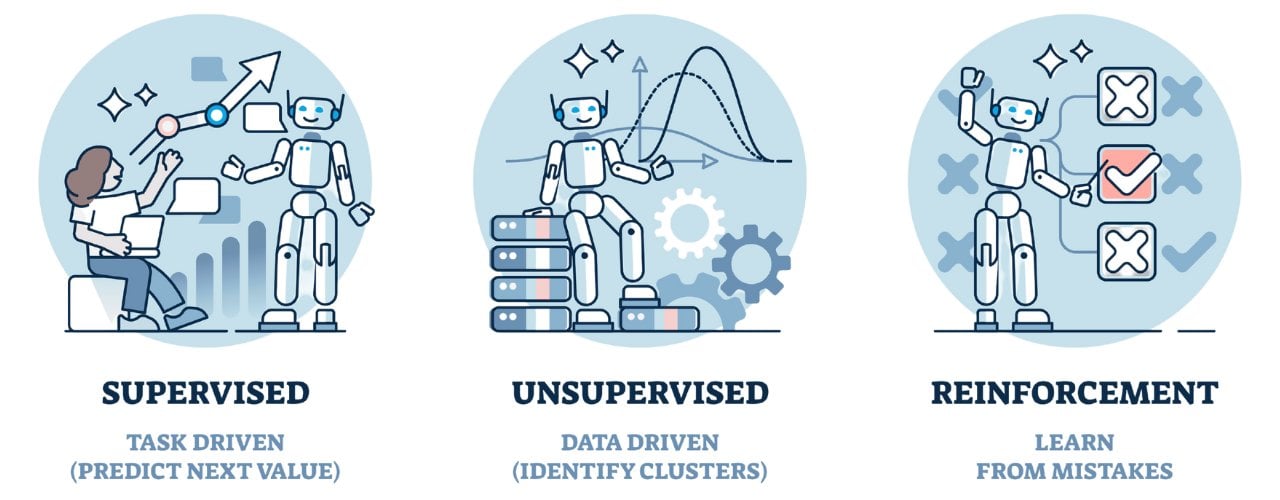

Types of Machine Learning

1. Supervised Learning

Supervised learning is the most common type of machine learning. In this approach, the model is trained on a labelled dataset, where each input is paired with the correct output (also called the target or label). The goal is for the model to learn the relationship between inputs and outputs so it can predict outcomes for new, unseen data.

For instance, in a task like an image classification, the model is trained on a dataset of images where each image is labelled with a category (e.g., "cat" or "dog").

During training, the model tries to minimize the difference between its predictions and the actual labels by adjusting its internal parameters. Common supervised learning tasks include classification (categorizing data) and regression (predicting continuous values).

2. Unsupervised Learning

Unlike supervised learning, unsupervised learning works with unlabeled data. The model is not provided with explicit outputs to guide its learning. Instead, the algorithm tries to find hidden patterns or structures within the data. A common application of unsupervised learning is clustering, where the model groups similar data points together based on their characteristics. Unsupervised learning is valuable when working with large datasets that lack labels, but the insights generated are often exploratory rather than predictive.

3. Reinforcement Learning

Reinforcement learning is a different approach where an agent learns by interacting with an environment and receiving feedback in the form of rewards or penalties. This learning paradigm is often likened to trial and error. The agent makes decisions, observes the outcomes, and adjusts its actions to maximize long-term rewards.

Reinforcement learning is commonly used in scenarios where there is a need for sequential decision-making, such as game playing, robotics, and autonomous systems.

For example in training an autonomous vehicle, the system is rewarded for staying on the road and penalized for deviating from it. Over time, the model learns the optimal strategy or policy for navigating the environment effectively.

Reinforcement learning can be complex due to the dynamic nature of environments and the need to balance short-term actions with long-term goals.

Each type of machine learning has its own strengths and applications, and the choice of which to use depends on the nature of the problem, the data available, and the desired outcome. Some advanced models even combine multiple types of learning to achieve better performance.

Examples of Machine learning (ML) Algorithms

ML algorithms are the mathematical frameworks that enable computers to learn from data and make decisions or predictions. There are many types of ML algorithms, each suited to specific tasks such as classification, regression, clustering, or dimensionality reduction. Here are some of the most widely used ML algorithms:

1. Linear Regression

Linear regression is one of the simplest and most commonly used algorithms for regression tasks, where the goal is to predict a continuous output variable. In linear regression, the algorithm models the relationship between the input features (independent variables) and the output (dependent variable) as a linear equation. It assumes that the change in the output variable is a linear function of the input variables. This algorithm is ideal for tasks like predicting housing prices based on features like square footage or location. Though it's simple and interpretable, linear regression may struggle with complex, non-linear relationships between variables.

2. Logistic Regression

Despite its name, logistic regression is a classification algorithm rather than a regression one. It is used to predict the probability of a binary outcome, making it suitable for tasks like spam detection or medical diagnosis (e.g., determining whether a tumor is malignant or benign). The algorithm applies a logistic function to the input data, which constrains the output to fall between 0 and 1. Logistic regression is often chosen for its simplicity and efficiency, especially in cases where the relationship between variables is straightforward.

3. Decision Trees

Decision trees are versatile and interpretable algorithms that can be used for both classification and regression tasks. They work by recursively splitting the data into subsets based on the value of input features, creating a tree-like structure where each node represents a decision based on a feature, and each branch represents the outcome of that decision.

Decision trees are easy to understand and can handle both categorical and numerical data, but they can become overly complex and prone to overfitting if not properly controlled. Techniques like pruning or combining decision trees into ensemble methods (such as random forests) can help mitigate these issues.

4. Random Forest

Random forest is an ensemble learning algorithm that builds multiple decision trees and combines their results to make more accurate and stable predictions. In this approach, each tree in the forest is trained on a random subset of the data and features, and the final prediction is typically based on the majority vote (for classification) or the average (for regression) across all trees. Random forests are highly effective at reducing overfitting, increasing accuracy, and handling large datasets with complex relationships. They are widely used for tasks such as fraud detection, recommendation systems, and image classification.

5. Support Vector Machines (SVM)

Support Vector Machines (SVM) are powerful algorithms used primarily for classification tasks, though they can also handle regression. SVM works by finding the optimal hyperplane that best separates different classes in the data. The algorithm aims to maximize the margin between the hyperplane and the nearest data points from each class (known as support vectors).

SVM is particularly useful in cases where the data is not linearly separable, as it can use kernel functions to transform the data into a higher-dimensional space. It’s effective in high-dimensional spaces and in situations where the number of features exceeds the number of data points.

6. K-Nearest Neighbors (KNN)

K-Nearest Neighbors (KNN) is a simple, non-parametric algorithm used for classification and regression tasks. The basic idea is that for any new data point, the algorithm looks at the 'k' nearest data points in the training set and assigns the most common label (for classification) or the average value (for regression).

KNN does not build a model during training; instead, it makes decisions based on the entire dataset, making it a type of lazy learning algorithm. It’s easy to implement and effective for small datasets, but its performance can degrade with large datasets due to high computational costs and sensitivity to noisy data.

7. K-Means Clustering

K-Means is a widely used unsupervised learning algorithm for clustering tasks. It works by partitioning a dataset into 'k' clusters, where each data point belongs to the cluster with the nearest mean. The algorithm iteratively adjusts the cluster centroids until the optimal grouping is achieved. K-Means is commonly applied in market segmentation, image compression, and pattern recognition. Although it is fast and scalable, it requires pre-defining the number of clusters and may struggle with complex, non-spherical data distributions.

8. Neural Networks

Neural networks, particularly deep neural networks, are inspired by the structure and function of the human brain. They consist of layers of interconnected nodes (neurons), where each node processes input and passes it to the next layer.

Neural networks are especially powerful for tasks involving high-dimensional and complex data, such as image recognition, natural language processing, and autonomous driving. Deep learning, a subset of neural networks, uses multiple layers to automatically extract increasingly abstract features from raw data. Neural networks require large amounts of data and computational power, but they have revolutionized fields like AI and machine learning by achieving state-of-the-art performance in many areas.

What’s the difference between AI and ML?

The main difference between AI and ML lies in their scope and focus. AI is the overarching concept of machines capable of intelligent behaviour, while ML is a specific approach used to achieve this intelligence by learning from data. All machine learning is a form of AI, but not all AI involves machine learning.

Some AI systems may rely on hard-coded rules or logic, whereas ML models autonomously improve their performance based on the data they process.

Read more: Deep Learning vs Machine Learning: What’s the Difference?

In practice, machine learning is often a key driver behind the AI applications we see today, especially in areas like natural language processing, image recognition, and autonomous systems. However, AI goes beyond just machine learning, aiming to create intelligent systems that can work autonomously in diverse environments.

Examples of machine learning in the real world

1. Recommendation Systems

One of the most prominent uses of machine learning is in recommendation systems, which are employed by companies like Netflix, Amazon, and Spotify to personalize content for users. ML algorithms analyze user behaviour, such as viewing history, search queries, or purchase patterns, to suggest products, movies, or music that align with individual preferences.

For example, Netflix uses machine learning to recommend shows or films based on a user’s past viewing habits, while Amazon’s recommendation engine suggests products based on previous purchases or searches.

2. Fraud Detection

In the financial sector, machine learning plays a crucial role in fraud detection. Banks and financial institutions use ML models to identify suspicious transactions and flag potentially fraudulent activity. These models analyze historical transaction data to detect anomalies or unusual patterns that could indicate fraud, such as transactions made from different locations in a short period or unusually large withdrawals.

ML algorithms are constantly updated with new data, making them highly adaptive to evolving fraud techniques. This technology helps reduce the risks associated with online banking, credit card usage, and digital payments, ensuring greater security for both consumers and businesses.

3. Healthcare Diagnostics

In healthcare, machine learning is revolutionizing diagnostics by improving the accuracy and speed of medical imaging analysis. For example, ML algorithms can be trained to detect abnormalities in X-rays, MRIs, or CT scans, such as identifying tumours or early signs of diseases like cancer or pneumonia. These models can often analyze images faster than human radiologists, providing second opinions and reducing diagnostic errors.

4. Autonomous Vehicles

Self-driving cars are one of the most cutting-edge applications of machine learning. Autonomous vehicles use ML algorithms to process data from sensors, cameras, and lidar systems in real time to navigate roads, recognize objects (like other vehicles, pedestrians, or traffic signals), and make driving decisions. Companies like Tesla, Waymo, and Uber rely on deep learning—a subset of machine learning—to enable cars to "learn" from driving data and improve their performance on the road.

5. Natural Language Processing (NLP)

Natural Language Processing (NLP), which is powered by machine learning, is used in virtual assistants like Amazon’s Alexa, Google Assistant, and Apple’s Siri. These systems can understand and respond to voice commands, translating spoken language into actions like playing music, setting reminders, or answering questions. For example, Google Translate uses ML to provide real-time translation across numerous languages, while businesses deploy chatbots to handle customer inquiries.

Comments ( 0 )