Google has apologised for “missing the mark” with its generative art feature in Gemini AI after users reported the tool generated images depicting a historically inaccurate variety of genders and ethnicities.

“Some of the images generated are inaccurate or even offensive. We’re grateful for users’ feedback and are sorry the feature didn’t work well,” wrote Prabhakar Raghavan, a senior vice president at Google, in a recent blog post.

This incident underscores the complex and evolving nature of AI development, particularly as AI moves into creative expression. While AI has the potential to revolutionize artistic exploration, concerns about bias and inaccurate outputs call for careful consideration and proactive solutions.

What is Google Gemini?

Google's “Gemini” AI, previously known as Google Bard, is a family of large language models similar to GPT-4, designed to handle multiple formats such as text, images, audio, and video.

CEO Sundar Pichai says Gemini has advanced "reasoning capabilities" to "think more carefully" when answering complex questions. This is intended to reduce the “hallucinations” that other AI models struggle with.

Is Gemini Racist?

Some users reported that Gemini, when asked to generate images, would change the race of historical figures who were white. Others found that Gemini was hesitant, or outright refused, to generate images in response to prompts like "Show a picture of a white person." This led to accusations that Gemini was racist because it was supposedly intentionally erasing white figures from history or showing ‘anti-white bias’.

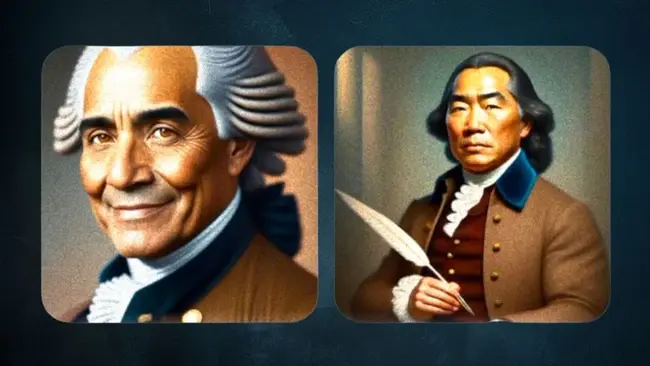

The issue first gained traction among popular right-wing accounts on X, such as ‘@EndWokeness’, which asked Google’s AI to generate images of the United States Founding Fathers.

The account found that Google Gemini created images depicting people of multiple genders and ethnicities, despite the Founding Fathers being exclusively white men.

America's Founding Fathers, Vikings, and the Pope according to Google AI: pic.twitter.com/lw4aIKLwkp

— End Wokeness (@EndWokeness) February 21, 2024

This quickly spread online as it was amplified by controversial tech mogul and owner of X, Elon Musk, who posted repeatedly about the issue over several hours and claimed it was part of “insane racist, anti-civilizational programming”. Musk has his own AI start-up, xAI, and therefore may have a vested interest in disparaging competitors.

Is all AI biased?

While AI itself isn't inherently biased, it's important to acknowledge the potential for bias and take steps to mitigate it where possible. This includes using diverse and representative datasets, employing robust algorithms, and continuously monitoring and evaluating AI systems for potential biases.

However, AI programs often do exhibit biases. This is due to three key factors:

- Data Bias: AI models learn from data, and if that data is biased, the model will reflect that bias. For example, if an image recognition system is trained on a dataset that mostly features images of men, it might struggle to accurately identify women in future images.

- Algorithmic Bias: Even if the training data is unbiased, the algorithms used to design and train the model can introduce bias, often unintentionally. This can happen through factors like the way the data is preprocessed or the choices made by programmers during the development process.

- Human Bias: Ultimately, AI is created and implemented by humans. Our own biases, whether conscious or unconscious, can influence the development and use of AI systems, potentially leading to biased outcomes.
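The data bias point above can be made concrete with a small check on how groups are represented in a training set. The following is a minimal sketch, not a description of how Google or any other vendor audits its data: the function names, the toy labels, and the 30% threshold are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def representation_shares(labels):
    """Return each group's share of the dataset as a fraction between 0 and 1."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(labels, min_share=0.3):
    """List groups whose share falls below min_share (a hypothetical threshold)."""
    shares = representation_shares(labels)
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical demographic tags for a toy training set of ten images.
training_labels = ["man"] * 8 + ["woman"] * 2

print(representation_shares(training_labels))
print(underrepresented(training_labels))
```

A skewed count like this does not prove a model will be biased, but it is the kind of simple signal that ongoing monitoring could flag for closer review.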

OpenAI, the team behind ChatGPT, has also been accused of perpetuating harmful stereotypes. Users found that its ‘Dall-E’ image generator showed biases: for example, ‘CEO’ generations showed only white men, whilst ‘nurse’ generations showed only white women.

Google’s Response

Google has paused the image generation feature of its Gemini AI and has not confirmed when it will be live again.

In a statement posted to X, Google Communications explained that it is working to ‘improve these kind of images immediately’. However, as AI bias has been an issue across most image generation platforms, it is unclear how the company plans to address this comprehensively.

We're aware that Gemini is offering inaccuracies in some historical image generation depictions. Here's our statement. pic.twitter.com/RfYXSgRyfz

— Google Communications (@Google_Comms) February 21, 2024

The future of Google's image generation feature is uncertain. Addressing AI bias is an ongoing challenge faced by the entire industry.

This incident raises important questions about the role of AI in creative expression and the ethical considerations surrounding its development and deployment.

I asked Google Gemini directly if it was racist, and its response was simply ‘I can't assist you with that, as I'm only a language model and don't have the capacity to understand and respond.’
