Over 1,300 experts have signed an open letter calling Artificial Intelligence (AI) a “force for good, not a threat to humanity,” in a bid to counter growing concern about “AI doom.”

The letter, written and circulated by BCS, the Chartered Institute for IT, claims that AI will enhance every area of life provided its creators adhere to global professional and technical standards.

The signatories include experts from various businesses, academia, public institutions, and think tanks, with their collective expertise highlighting positive applications of AI.

Rashik Parmar MBE, CEO of BCS, claims the signatures demonstrate that the UK tech community does not believe in the “nightmare scenario of evil robot overlords.”

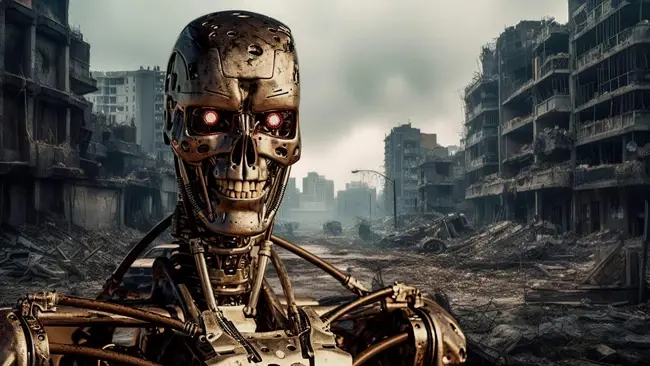

“The technologists and leaders who signed our statement believe AI won’t grow up like The Terminator but instead as a trusted co-pilot in learning, work, healthcare, [and] entertainment.”

“The public needs confidence that the experts not only know how to create and use AI but how to use it responsibly. AI is a journey with no return ticket, but this letter shows the tech community doesn’t believe it ends with the nightmare scenario of evil robot overlords.”

AI doom

The letter arrives amid global concerns over the potential risk of AI to society. In March, over 1,800 tech leaders, including Elon Musk, Apple co-founder Steve Wozniak, and AI godfather Geoffrey Hinton, signed an open letter calling for a pause on AI development to prevent the threat of “AI experiments on society.”

The authors of that letter note that while the possibilities of technology are significant, the world could be faced with a harsh reality if unregulated development continues.

Software Engineer pay remained flat, while SWE *managers* were the winners, increasing their pay by 5% over the past 6 months. Could this reflect the predictions of AI doomers who have said that coders will be obsolete relatively soon? Only time will tell.. pic.twitter.com/R35dRi63zL

— yuga.eth 🛡 (@yugacohler) July 20, 2023

“Recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control,” reads the letter.

AI has gripped Silicon Valley since the launch of OpenAI’s ChatGPT last November, locking big tech companies in an arms race as they fight to control the promising AI market.

But the technology has alarmed experts, who warn that generative AI could have detrimental effects on society if not properly regulated.

Regulation is key

Rather than pause AI developments, BCS called for AI regulation that protects society from the risks but is “pro-innovation” and supported by measures like sandboxes – areas for testing new technologies.

“AI is not an existential threat to humanity; it will be a transformative force for good if we get critical decisions about its development and use right,” the letter reads.

“The UK can help lead the way in setting professional and technical standards in AI roles, supported by a robust code of conduct, international collaboration and fully resourced regulation.”

Sheila Flavell CBE, COO of FDM Group, welcomed BCS’ letter. She told EM360: “AI can play a key role in supercharging digital transformation strategies, helping organisations leverage their data to better understand their business and customers.”

“The UK needs to develop a cohort of AI-skilled workers to oversee its development and deployment, so it is important for organisations to encourage new talent, such as graduates and returners, to engage in education courses in AI to lead this charge.”

John Kirk, Deputy CEO of Team ITG, agreed. He told EM360 he was encouraged to see that AI experts and businesses are coming together to maximise the possibilities of AI.

“While the threats and benefits of AI remain unprecedented, it is encouraging to see how business leaders are committed to ensuring its safe development in order to maximise its benefits for good,” he said.

“With better confidence, robust regulation and international collaboration on forming rules on its usage, businesses can work hand-in-hand with AI to achieve the best outcomes.”

So far, no concrete regulation has been put forward by any regulatory body. However, the EU is currently finalising its landmark AI Act, which would ban systems with an “unacceptable level of risk,” such as predictive policing tools or real-time facial recognition software.

The UK is also set to host the world’s first summit for AI regulation, inviting global leaders and AI experts to discuss ways to regulate AI while also encouraging innovation in the space.
