Clearview AI has successfully appealed against a £7.5 million fine from the Information Commissioner's Office (ICO), after a court found that the UK privacy watchdog lacked jurisdiction in the case.

The facial recognition company, which the ICO accused of illegally collecting images of millions of British citizens without their knowledge, won its appeal after the court found that the firm had not used this data unlawfully in the UK.

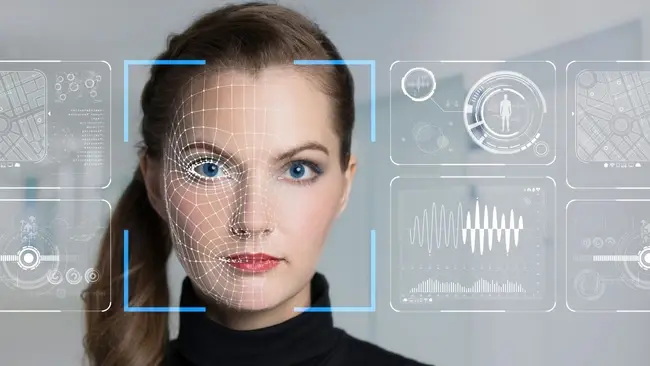

Clearview AI's database holds more than 30 billion images scraped from the internet, and its clients can search this database of faces for matches. US police have used the service in up to a million searches to help solve a range of crimes, including murders.

Clearview previously had commercial customers as well, but since settling a 2020 lawsuit brought by US civil liberties campaigners, it only accepts clients who carry out criminal law enforcement or national security operations.

It also has no UK or EU clients, a fact that proved crucial in its appeal against the ICO's fine: its data was used only by law enforcement bodies outside the UK.

The court consequently found that, although Clearview did carry out data processing related to monitoring the behaviour of people in the UK, the ICO "did not have jurisdiction" to take enforcement action or issue a fine.

In response to the ruling, the ICO said it would “take stock” of the judgement and consider its next steps carefully. It noted that the judgement would not prevent it from taking action against other firms.

“It is important to note that this judgement does not remove the ICO's ability to act against companies based internationally who process data of people in the UK, particularly businesses scraping data of people in the UK and instead covers a specific exemption around foreign law enforcement,” it said in a statement.

Facial recognition and the law

Experts and privacy advocates have long warned of the potential risks of facial recognition technology.

The British civil liberties and privacy campaign group Big Brother Watch has previously described the technology as an “epidemic”, warning that it is not only intrusive but also ineffective.

Meanwhile, a recent report by the Guardian found that facial recognition had led to the false arrest of six Black people across the US, suggesting that the technology may be racially biased, possibly because of a lack of data on certain minority groups.


According to a 2017 NIST study, the poorest accuracy rates come when identifying Black women aged 18 to 30. Moreover, false positive rates are highest among West and East African and East Asian people, and lowest among Eastern European individuals.

The study concludes that, with false positive rates differing by as much as a factor of 100 between countries, the disparity between these groups is large. In other words, you are far less likely to be falsely identified as a suspect in a crime if you're white.
